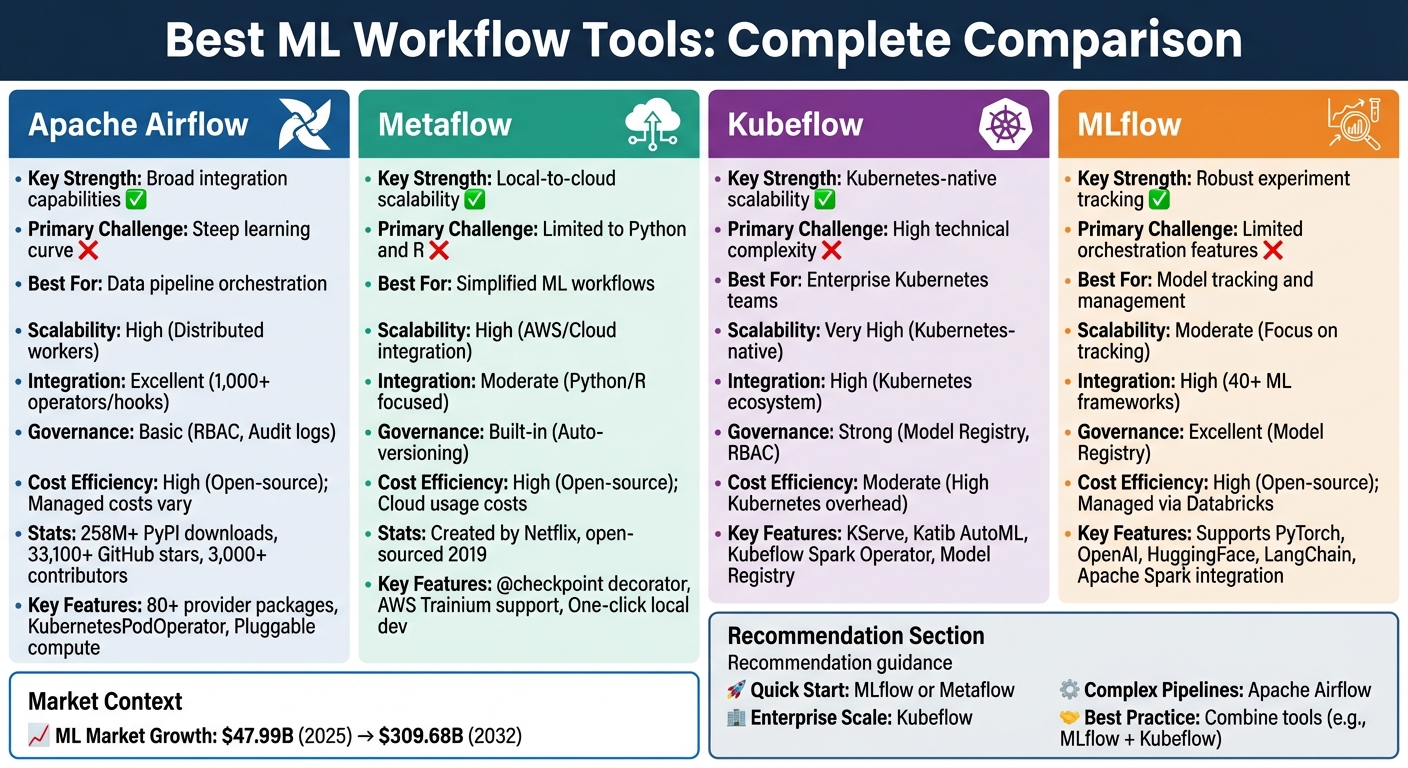

Machine learning workflows can be complex, often bogged down by dependency management and experiment tracking. Specialized tools simplify this process, increasing automation, efficiency, and reproducibility. Apache Airflow, Metaflow, Kubeflow, and MLflow are four standout options, each addressing different stages of the ML lifecycle. Here's what you need to know:

| Tool | Key Strength | Primary Challenge | Best For |

|---|---|---|---|

| Apache Airflow | Broad integration capabilities | Steep learning curve | Data pipeline orchestration |

| Metaflow | Local-to-cloud scalability | Limited to Python and R | Simplified ML workflows |

| Kubeflow | Kubernetes-native scalability | High technical complexity | Enterprise Kubernetes teams |

| MLflow | Robust experiment tracking | Limited orchestration features | Model tracking and management |

Each tool serves a specific need, and combining them can unlock even greater efficiency. For example, pairing MLflow with Kubeflow enables seamless model tracking and orchestration. Start with the tool that aligns with your current workflow, then expand as your needs grow.

Machine Learning Workflow Tools Comparison: Apache Airflow vs Metaflow vs Kubeflow vs MLflow

Apache Airflow has become the go-to choice for orchestrating data pipelines across various platforms. As an Apache Software Foundation project, it boasts impressive stats: over 258 million PyPI downloads, more than 33,100 GitHub stars, and contributions from over 3,000 developers. Its Python-native framework enables data scientists to seamlessly turn existing machine learning scripts into orchestrated workflows using simple tools like the @task decorator. This approach minimizes the need for extensive code changes while boosting automation and reproducibility.

One of Airflow's standout features is its provider packages - a collection of over 80 modules that simplify connections to third-party services. These packages include pre-built operators, hooks, and sensors, making it easy to integrate with major platforms like AWS, GCP, and Azure. For machine learning workflows, Airflow connects with tools like MLflow, SageMaker, and Azure ML. It also supports LLMOps and retrieval-augmented generation (RAG) pipelines through integrations with vector databases such as Weaviate, Pinecone, Qdrant, and PgVector. Features like the KubernetesPodOperator and @task.external_python_operator allow tasks to run in isolated environments, adding flexibility.

"Apache Airflow sits at the heart of the modern MLOps stack. Because it is tool agnostic, Airflow can orchestrate all actions in any MLOps tool that has an API." - Astronomer Docs

This extensive integration framework underscores Airflow's ability to adapt to diverse workflows.

Airflow’s modular design ensures it can handle workloads of any size. It uses message queues to manage an unlimited number of workers, making it scalable from a single laptop to large distributed systems. Its pluggable compute feature lets teams offload resource-heavy tasks to external clusters like Kubernetes, Spark, Databricks, or cloud GPU instances. The KubernetesExecutor further enhances scalability by dynamically allocating resources, spinning up compute pods as needed. This ensures organizations only pay for what they use, keeping resource management efficient.

While Airflow’s open-source nature eliminates licensing fees, its reliance on Docker and Kubernetes can lead to higher setup and maintenance costs. Managing dependencies and navigating its steep learning curve are often cited as challenges. Noah Ford, Senior Data Scientist, remarked:

"Airflow starts and stays hard, making it demotivating to get started."

Managed services like Astronomer, which offers a 14-day trial and $20 in free credits, can help reduce infrastructure burdens. Additionally, consolidating multiple tools into a single orchestration layer can streamline operations and lower overall costs by eliminating the need for separate systems.

Metaflow, originally created by Netflix and open-sourced in 2019, is a framework designed to simplify the lives of data scientists. It stands out by allowing users to build workflows locally on their laptops and seamlessly scale them to the cloud without the need for code adjustments. This ease of use has translated into tangible results - CNN's data science team, for instance, managed to test twice as many models in the first quarter of 2021 compared to the entire previous year after adopting Metaflow [1]. Its streamlined workflow design makes it a strong choice for scaling in high-demand environments.

When it comes to handling complex models, Metaflow truly shines. It supports cloud bursting across platforms like AWS (EKS, Batch), Azure (AKS), and Google Cloud (GKE), allowing precise resource allocation for each workflow step through simple decorators. For those working with large language models, it even offers native support for AWS Trainium hardware. The @checkpoint decorator ensures progress is saved during lengthy jobs, preventing the frustration of starting over after failures. Once workflows are ready for production, Metaflow can export them to robust orchestrators like AWS Step Functions or Kubeflow, which are capable of managing millions of runs.

Metaflow also excels in its ability to integrate with a wide range of tools and libraries. It’s designed to work seamlessly with any Python-based machine learning library, including PyTorch, HuggingFace, and XGBoost. For data management, it connects natively to AWS S3, Azure Blob Storage, and Google Cloud Storage. It supports both Python and R, catering to a broad range of users. Additionally, the integration with the uv tool ensures quick dependency resolution, whether working locally or in the cloud - an essential feature when scaling across multiple instances. Realtor.com’s engineering team leveraged these capabilities to significantly reduce the time it took to transition models from research to production, cutting months off their timeline [2].

Metaflow ensures every workflow, experiment, and artifact is versioned automatically, making reproducibility a built-in feature. It also integrates smoothly with existing enterprise security and governance frameworks, offering dedicated APIs for managing secrets. This provides complete visibility and compliance for machine learning workflows, aligning with enterprise-grade requirements.

As an open-source tool, Metaflow eliminates licensing fees, making it an economical choice for teams of all sizes. Its one-click local development environment reduces the time spent on infrastructure setup, while the ability to test workflows locally before deploying to the cloud helps avoid unnecessary expenses. With granular resource allocation, you only pay for the hardware needed at each step, avoiding the waste that comes with over-provisioning. Additionally, its in-browser Sandbox environment allows users to experiment with cloud features without immediately committing infrastructure resources. These cost-conscious features make Metaflow an attractive option for building efficient, production-ready machine learning workflows.

Kubeflow is a platform built specifically for machine learning (ML) workflows, designed to work seamlessly with Kubernetes. Unlike general-purpose orchestrators, it offers tools tailored to tasks like hyperparameter tuning and model serving. Its Kubernetes foundation ensures flexibility, allowing it to run on Google Cloud, AWS, Azure, or even on-premises setups. This portability makes it ideal for teams operating across diverse environments. With its focus on ML-specific needs, Kubeflow delivers scalability and integration suited for complex workflows, as outlined below.

Kubeflow takes advantage of Kubernetes' ability to scale efficiently, making it well-suited for large-scale ML workflows. Each step in a pipeline runs as an independent, containerized task, enabling automatic parallel execution through a directed acyclic graph (DAG). The platform’s Trainer component supports distributed training across frameworks like PyTorch, HuggingFace, DeepSpeed, JAX, and XGBoost. For inference, KServe handles both generative and predictive AI models with scalable performance. Users can specify CPU, GPU, and memory requirements for tasks, while node selectors route intensive training jobs to GPU-equipped nodes and assign lighter tasks to cost-effective CPU-only instances. Additionally, Kubeflow’s caching feature prevents redundant executions when inputs remain unchanged, saving both time and computational resources.

Kubeflow’s modular design integrates tools for every phase of the ML lifecycle. For development, Kubeflow Notebooks offer web-based Jupyter environments running directly in Kubernetes Pods. Katib facilitates AutoML and hyperparameter tuning, using early stopping to halt underperforming trials. Data processing is streamlined with the Kubeflow Spark Operator, which runs Spark applications as native Kubernetes workloads. For notebook users, the Kale tool simplifies converting Jupyter notebooks into Kubeflow Pipelines without requiring manual adjustments. The Model Registry serves as a central repository for managing model versions and metadata, bridging experimentation and deployment. All these components are accessible through the Kubeflow Central Dashboard, which provides a unified interface for managing the ecosystem. With built-in governance tools, Kubeflow ensures clear model tracking and consistent performance across workflows.

Kubeflow offers robust tracking and visualization of pipeline definitions, runs, experiments, and ML artifacts, ensuring a clear lineage from raw data to deployed models. The Model Registry acts as a central hub for model versions and metadata, maintaining consistency across iterations. Workflows are compiled into platform-neutral IR YAML files, enabling seamless movement between Kubernetes environments without requiring major adjustments. This consistency supports smooth transitions across development, staging, and production environments.

As an open-source platform, Kubeflow eliminates licensing costs, leaving only the expense of the underlying Kubernetes infrastructure. Its caching feature reduces compute costs by avoiding re-execution of data processing or training steps when inputs remain unchanged. Katib’s early stopping capability further saves resources by ending poorly performing hyperparameter tuning trials early. For teams with simpler needs, Kubeflow Pipelines can be installed as a standalone application, reducing the resource load on the cluster. Additionally, Kubeflow’s ability to run multiple workflow components simultaneously ensures optimal resource utilization, minimizing idle time and maximizing efficiency.

MLflow is an open-source platform designed to streamline the machine learning (ML) lifecycle, covering everything from tracking experiments to packaging and deploying models. With seamless GitHub integration and compatibility with over 40 frameworks - including PyTorch, OpenAI, HuggingFace, and LangChain - it has become a go-to solution for ML teams. Licensed under Apache-2.0, MLflow is available for self-hosting or as a managed service through Databricks. Below, we explore its scalability, integration capabilities, governance features, and cost advantages, which complement the tools discussed earlier.

MLflow's Tracking Server ensures all parameters, metrics, and artifacts from distributed runs are captured, maintaining a clear data lineage. Thanks to its native integration with Apache Spark, the platform handles large-scale datasets and distributed training effortlessly, making it ideal for teams managing significant data workloads. For production, Mosaic AI Model Serving supports real-time predictions with features like zero-downtime updates and traffic splitting to compare models (e.g., "Champion" vs. "Challenger"). Additionally, batch and streaming inference pipelines offer cost-effective solutions for high-throughput scenarios where ultra-low latency isn't required. With model aliases in Unity Catalog, pipelines can dynamically load the latest validated model version without any code modifications.

MLflow excels in bringing scalability together with extensive integration options. It supports traditional ML, deep learning, and generative AI workflows. The platform is tailored for large language model (LLM) providers such as OpenAI, Anthropic, Gemini, and AWS Bedrock, and integrates with orchestration tools like LangChain, LlamaIndex, DSPy, AutoGen, and CrewAI. On November 4, 2025, MLflow added OpenTelemetry support, enabling seamless integration with enterprise monitoring tools. Its AI Gateway provides a centralized interface for managing interactions across various LLM providers, simplifying operations across cloud platforms. Further enhancing its observability, MLflow introduced support for every TypeScript LLM stack on December 23, 2025, underscoring its alignment with modern AI workflows.

MLflow's Model Registry offers centralized tracking of model versions, lineage, and transitions from development to production. For every experiment, the platform logs code versions, parameters, metrics, and artifacts, ensuring reproducibility across teams and environments. For generative AI applications, MLflow includes tools to trace and evaluate LLM workflows, providing greater visibility into complex systems. Models are packaged in a standardized format that ensures consistent behavior across deployment environments, while dependency graphs automatically document the features and functions required for inference.

MLflow's open-source nature eliminates licensing fees, leaving infrastructure as the primary cost for self-hosted setups. Teams can opt for full control with self-hosting or reduce operational demands by choosing managed hosting, which even includes a free tier. The platform's batch and streaming inference capabilities offer affordable alternatives to real-time serving for high-throughput tasks. By centralizing experiment tracking and model management, MLflow minimizes redundant efforts, helping teams avoid repeating experiments or losing track of model versions - saving both time and computational resources in the process.

When it comes to workflow tools for managing ML pipelines, each option brings its own strengths and trade-offs. Here's a closer look at how some popular tools stack up:

Apache Airflow stands out for its ability to connect a wide range of systems using its extensive library of operators and hooks. This makes it a go-to choice for complex data engineering pipelines that support ML models. However, it lacks built-in ML-specific features like model tracking or a model registry, which can be a drawback. Additionally, users often find its learning curve to be steep. While its distributed worker architecture offers excellent scalability, managing the infrastructure can become intricate.

Metaflow focuses on simplicity, automatically handling experiment and data versioning, so teams don’t have to worry about infrastructure management. It integrates smoothly with AWS storage and compute services, allowing data scientists to concentrate on Python development. The downside? Its integration capabilities are more limited, primarily catering to Python and R workflows.

Kubeflow is built for scalability, leveraging its Kubernetes-native design and the support of the Kubernetes community. It offers tools for the entire AI lifecycle, such as KServe for model serving and a Model Registry for version control. However, the platform demands significant Kubernetes expertise, which can be a challenge for teams without specialized engineering skills. Additionally, the infrastructure overhead is often higher.

MLflow excels in managing the ML lifecycle, offering top-tier experiment tracking and compatibility with over 40 frameworks, including PyTorch and TensorFlow. Its Model Registry and packaging standards ensure reproducibility across environments. That said, while it’s fantastic for tracking, MLflow doesn’t focus as much on orchestrating complex pipelines, often requiring pairing with another tool like Airflow or Kubeflow for advanced data movement.

| Tool | Scalability | Integration Capabilities | Governance Features | Cost Efficiency |

|---|---|---|---|---|

| Apache Airflow | High (Distributed workers) | Excellent (1,000+ operators/hooks) | Basic (RBAC, Audit logs) | High (Open-source); Managed costs vary |

| Metaflow | High (AWS/Cloud integration) | Moderate (Python/R focused) | Built-in (Auto-versioning) | High (Open-source); Cloud usage costs |

| Kubeflow | Very High (Kubernetes-native) | High (Kubernetes ecosystem) | Strong (Model Registry, RBAC) | Moderate (High Kubernetes overhead) |

| MLflow | Moderate (Focus on tracking) | High (40+ ML frameworks) | Excellent (Model Registry) | High (Open-source); Managed via Databricks |

Ultimately, choosing the right tool depends on your specific ML pipeline needs and priorities.

Selecting the most suitable machine learning workflow tool depends largely on your team's expertise and the resources at hand. If your focus is on quick development without heavy engineering, Metaflow offers an efficient way to transition from local experimentation to cloud deployment. For teams prioritizing budget-conscious solutions and effective experiment tracking, MLflow stands out as a reliable, open-source option. Its flexibility across frameworks and robust versioning features make it a go-to choice for managing models.

For organizations already utilizing Kubernetes, Kubeflow provides native scalability and portability, making it a strong contender for enterprise-level deployments. However, its complexity and steep learning curve may pose challenges for smaller teams with limited engineering capabilities. Despite this, Kubeflow's production readiness has been proven in numerous large-scale deployments.

On the orchestration side, Apache Airflow remains a versatile and mature tool for integrating various systems. While its complexity often requires dedicated maintenance, many production teams find value in combining tools rather than relying on just one. For instance, pairing MLflow for experiment tracking with Kubeflow for orchestration is a popular strategy, enabling workflows that capitalize on the strengths of each tool. This multi-tool approach ensures flexibility and efficiency, especially as machine learning workflows grow more complex.

With the machine learning market expected to soar from $47.99 billion in 2025 to $309.68 billion by 2032, choosing tools that integrate well and scale with your needs is critical. For teams with limited resources, starting with MLflow or Metaflow can minimize costs while still providing essential features like tracking and versioning. As your requirements grow, more advanced orchestration tools can be added without disrupting your existing setup, allowing for a seamless evolution of your workflow.

Apache Airflow and Kubeflow serve different purposes and cater to distinct needs, particularly when it comes to managing workflows and machine learning pipelines.

Apache Airflow is a Python-based platform designed for orchestrating, scheduling, and monitoring workflows. It shines in handling a wide range of automation tasks, including data pipelines, with its scalable architecture and user-friendly interface. While Airflow isn't specifically tailored for machine learning, its versatility allows it to integrate seamlessly into existing infrastructures and support ML-related operations alongside other automation needs.

Kubeflow, by contrast, is built specifically for machine learning workflows within Kubernetes environments. It offers a modular ecosystem designed to support the entire AI lifecycle, from pipeline orchestration to model training and deployment. With its focus on portability, scalability, and integration with cloud-native technologies, Kubeflow is particularly well-suited for managing end-to-end ML pipelines in containerized environments.

In essence, Airflow is a flexible orchestration tool that addresses a broad spectrum of tasks, while Kubeflow is purpose-engineered for machine learning workflows, providing specialized tools for every stage of the ML lifecycle within Kubernetes setups.

Metaflow simplifies the journey of taking machine learning workflows from a personal computer to the cloud by offering a cohesive and easy-to-use framework. It empowers data scientists to build and test workflows on their local machines, then transition to cloud platforms seamlessly, without needing to overhaul their code.

The platform makes it straightforward to allocate resources like CPUs, memory, and GPUs for handling larger datasets or enabling parallel processing. It integrates effortlessly with leading cloud providers, including AWS, Azure, and Google Cloud, allowing for a smooth shift from local development to production environments. Whether running locally, on-premises, or in the cloud, Metaflow ensures workflows are both scalable and reliable, reducing complexity while increasing efficiency.

Teams often combine MLflow and Kubeflow to harness their complementary features and create a more streamlined machine learning workflow. MLflow specializes in tracking experiments, managing model versions, and overseeing deployment stages, ensuring models remain reproducible and well-documented. On the other hand, Kubeflow offers a scalable, Kubernetes-native platform designed for orchestrating and managing machine learning pipelines, covering everything from training to serving and monitoring.

Integrating these tools allows teams to simplify the transition from experimentation to production. MLflow’s strengths in tracking and model management align perfectly with Kubeflow’s robust infrastructure, providing enhanced automation, scalability, and operational efficiency. This combination is especially suited for teams seeking a flexible, end-to-end solution for managing the entire machine learning lifecycle.