Prompt engineering platforms are transforming how businesses design and scale AI workflows. These tools simplify access to multiple AI models like GPT-4, Claude, and Llama, enabling U.S. enterprises to cut costs by up to 98% and boost productivity by 10×. However, selecting the right platform is critical, as 95% of generative AI pilots fail to reach production due to inefficiencies.

Here’s a quick look at three leading platforms. Each supports the entire AI workflow, from prompt design to deployment, with distinct strengths in model comparison, cost optimization, and governance. Your choice depends on your team’s size, technical expertise, and compliance needs.

| Platform | Key Features | Pricing | Best For |
|---|---|---|---|
| Prompts.ai | Access to 35+ models, cost-saving TOKN Pooling, SOC 2 compliance | Free (Pay-As-You-Go) to $129/member/month | Enterprises needing unified tools |
| Platform B | Model Abstraction layer, cost analysis, compliance tools | Custom pricing | Teams avoiding vendor lock-in |
| Vellum | Collaborative tools, versioning, private cloud security | Custom pricing | Cross-functional teams building production workflows |

Takeaway: Choose a platform that aligns with your business goals, whether it’s reducing costs, improving governance, or scaling AI workflows.

Comparison of Top 3 Prompt Engineering Platforms for AI Workflows

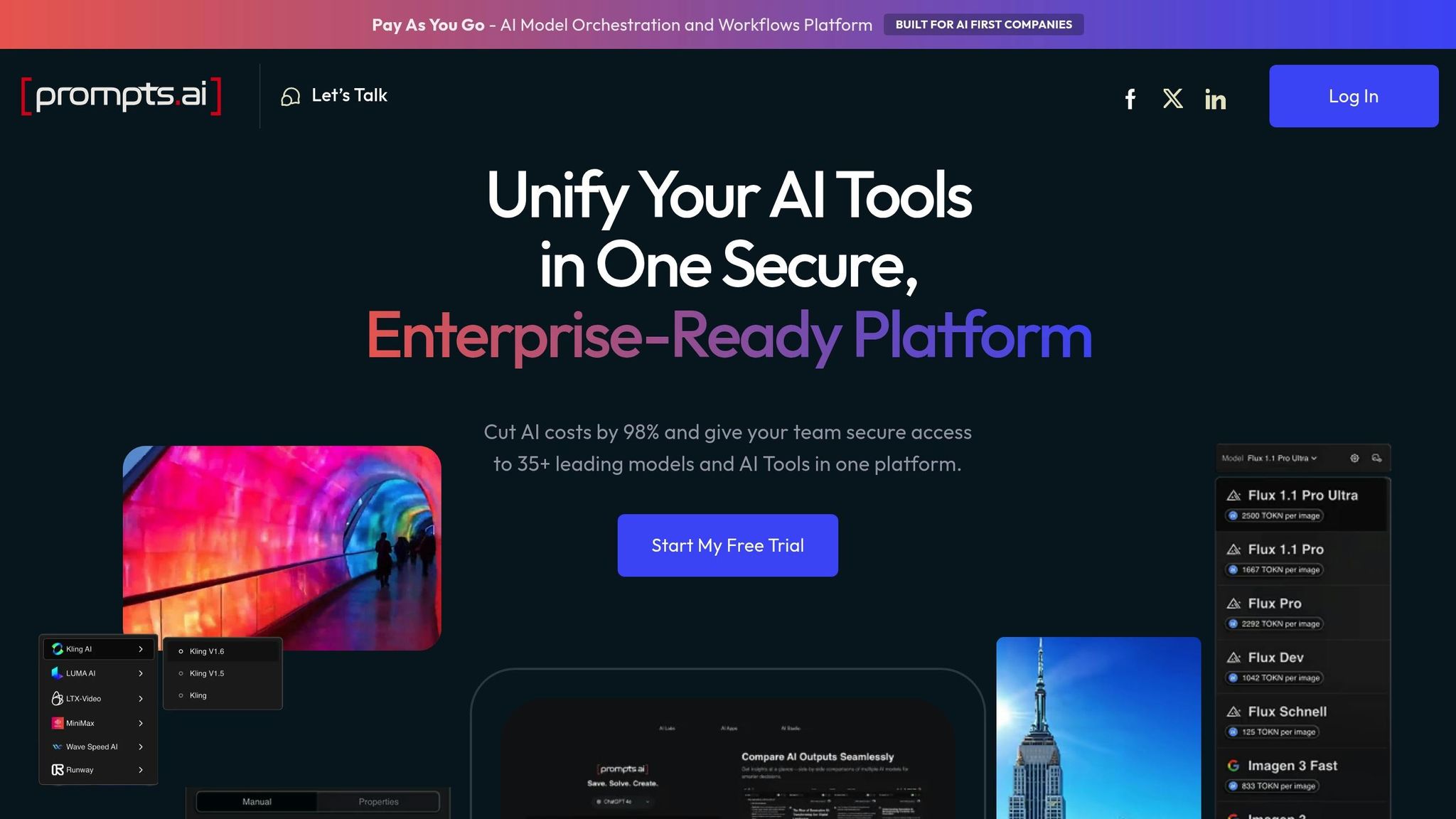

Prompts.ai is an all-in-one AI orchestration platform designed to simplify access to over 35 leading language models, including GPT-5, Claude, LLaMA, and Gemini. Developed by Steven P. Simmons, the platform addresses a major challenge for U.S. enterprises: managing multiple subscriptions and governance systems. By consolidating tools into a single, secure interface, it eliminates unnecessary complexity and streamlines the enterprise AI lifecycle, delivering benefits like interoperability, cost efficiency, compliance, and scalability.

Prompts.ai’s side-by-side comparison tool lets teams run identical prompts across different models, making it easier to identify the best large language model (LLM) for a specific task. For instance, architect Ar. June Chow rated the platform 4.8 out of 5.0 for its effectiveness on intricate architectural projects. The platform also supports workflows that span multiple providers and connects with tools like Slack, Gmail, and Trello to automate enterprise processes. Beyond model selection, Prompts.ai improves efficiency by optimizing resource usage through its cost management features.
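To make the idea of a side-by-side comparison concrete, here is a minimal Python sketch of what such a tool does conceptually: send one prompt to several models and record each output with its latency. It is not the Prompts.ai API; `compare_models`, `ComparisonResult`, and the stub models are hypothetical stand-ins for real provider SDK calls.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical signature: a "model" is any function that maps a prompt to text.
ModelFn = Callable[[str], str]


@dataclass
class ComparisonResult:
    model: str
    output: str
    latency_s: float


def compare_models(prompt: str, models: Dict[str, ModelFn]) -> List[ComparisonResult]:
    """Send the identical prompt to every model and record output and latency."""
    results = []
    for name, call in models.items():
        start = time.perf_counter()
        output = call(prompt)
        elapsed = time.perf_counter() - start
        results.append(ComparisonResult(name, output, elapsed))
    # Fastest first, so the results read like a side-by-side leaderboard.
    return sorted(results, key=lambda r: r.latency_s)


if __name__ == "__main__":
    # Stub models standing in for real provider SDK calls.
    stubs = {
        "model-a": lambda p: p.upper(),
        "model-b": lambda p: p[::-1],
    }
    for r in compare_models("summarize this brief", stubs):
        print(f"{r.model:8} {r.latency_s * 1000:.3f} ms  {r.output}")
```

In practice, teams would also score output quality, not just latency, before choosing a model for production.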

Prompts.ai can cut AI costs by up to 98% through its TOKN Pooling feature, which shares token credits across users. According to Steven Simmons, the platform’s workflows and LoRAs have transformed tasks like rendering and proposal creation, reducing timelines from weeks to a single day. Pricing ranges from a $0 Pay-As-You-Go plan to a Business Elite tier at $129 per member per month, which includes 1,000,000 TOKN credits and storage pooling. This cost-saving approach is paired with governance features that keep operations secure and efficient.
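The article doesn’t describe how TOKN Pooling works internally, so the sketch below only illustrates the general idea of a shared credit pool: several members draw from one balance instead of each holding a separate, partly unused allotment. `CreditPool` and its methods are hypothetical, and the 1,000,000-credit starting balance simply mirrors the Business Elite figure above.

```python
import threading
from typing import Dict


class CreditPool:
    """Hypothetical shared credit pool: one balance drawn down by many members.

    This illustrates the general idea of pooling only; it is not how
    Prompts.ai implements TOKN Pooling.
    """

    def __init__(self, credits: int) -> None:
        self._balance = credits
        self._usage: Dict[str, int] = {}
        self._lock = threading.Lock()  # members may spend concurrently

    def spend(self, member: str, amount: int) -> bool:
        """Deduct credits for a member; refuse if the pool cannot cover it."""
        if amount <= 0:
            raise ValueError("amount must be positive")
        with self._lock:
            if amount > self._balance:
                return False
            self._balance -= amount
            self._usage[member] = self._usage.get(member, 0) + amount
            return True

    @property
    def balance(self) -> int:
        return self._balance

    def usage(self) -> Dict[str, int]:
        """Per-member consumption, useful for spotting who drains the pool."""
        return dict(self._usage)


pool = CreditPool(1_000_000)
pool.spend("alice", 250_000)
pool.spend("bob", 700_000)
print(pool.balance)                  # 50000
print(pool.spend("carol", 60_000))   # False: the pool is nearly exhausted
```

Because one balance covers the whole team, a heavy user can consume credits a light user would otherwise leave idle; the trade-off is that someone has to watch the shared balance.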

On June 19, 2025, Prompts.ai took a major step toward security and compliance by initiating SOC 2 Type II audits and adopting HIPAA and GDPR best practices via Vanta’s continuous control system. The platform also offers a real-time Trust Center for tracking policies. For organizations managing sensitive data, Business-tier plans provide centralized governance tools, enabling full visibility and auditability of all AI interactions. These measures ensure that enterprises can scale their AI initiatives with confidence while maintaining strict compliance standards.

Prompts.ai is built to grow with your business. On higher-tier plans, the platform supports unlimited workspaces and collaborators; the Problem Solver plan, priced at $99 per month, accommodates up to 99 collaborators. Mohamed Sakr, Founder of The AI Business, praised the platform for automating tasks across sales, marketing, and operations. This scalability helps U.S. businesses overcome operational challenges and move smoothly from pilot projects to full-scale AI deployment.

Platform B takes a different architectural approach to model orchestration than Prompts.ai. It uses a Model Abstraction layer that supports over 85 models from leading providers. This layer separates orchestration logic from the models themselves, so U.S. enterprises can switch LLM vendors without overhauling their workflows. The vendor-neutral design minimizes reliance on any single provider’s ecosystem.
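An abstraction layer like this is essentially the adapter pattern. The Python sketch below, which assumes nothing about Platform B’s actual internals, shows the idea: workflow code depends only on a small `ChatModel` interface, and each vendor gets its own adapter. The adapter classes and `Workflow` are hypothetical; real adapters would wrap each vendor’s SDK.

```python
from typing import Dict, Protocol


class ChatModel(Protocol):
    """The only interface the orchestration logic is allowed to see."""

    def complete(self, prompt: str) -> str: ...


class VendorAAdapter:
    """Hypothetical adapter; a real one would call vendor A's SDK here."""

    def complete(self, prompt: str) -> str:
        return f"[vendor-a] {prompt}"


class VendorBAdapter:
    """Hypothetical adapter for a second vendor with a different SDK."""

    def complete(self, prompt: str) -> str:
        return f"[vendor-b] {prompt}"


class Workflow:
    """Orchestration logic that depends on ChatModel, never on a vendor SDK."""

    def __init__(self, registry: Dict[str, ChatModel], active: str) -> None:
        self.registry = registry
        self.active = active

    def run(self, ticket: str) -> str:
        prompt = f"Classify this support ticket: {ticket}"
        return self.registry[self.active].complete(prompt)


registry: Dict[str, ChatModel] = {"a": VendorAAdapter(), "b": VendorBAdapter()}
flow = Workflow(registry, active="a")
print(flow.run("refund request"))
flow.active = "b"  # switching vendors is a config change, not a rewrite
print(flow.run("refund request"))
```

The workflow’s prompt and logic stay untouched when the active vendor changes, which is the property that reduces lock-in.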

With tools like the Side-by-Side Prompt Editor and Playground, Platform B lets users directly compare outputs, latency, and performance across models, whether open-source or proprietary. This helps teams pinpoint the most efficient and budget-friendly model for a given task before committing to production. Thanks to the abstraction layer, switching models takes minimal effort, making it easier to adopt new or evolving LLM options.

Platform B includes an evaluation framework for assessing Total Cost of Ownership (TCO), helping teams avoid setups that become too costly as usage grows. Its comparison tools benchmark API costs, latency, and performance under real-world conditions, so users can make informed decisions. These features make Platform B a practical choice for managing costs in dynamic AI operations.
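TCO comparisons usually start with simple token arithmetic. The sketch below assumes per-million-token pricing for input and output and uses made-up prices, not any vendor’s published rates; `ModelPricing`, `monthly_cost`, and `tco` are hypothetical helpers. It shows how a per-request difference of fractions of a cent turns into a large absolute gap as volume grows.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPricing:
    """Prices in dollars per 1M tokens (an assumed pricing model)."""
    input_per_m: float
    output_per_m: float


def monthly_cost(p: ModelPricing, requests: int, in_tokens: int, out_tokens: int) -> float:
    """API spend for a month of traffic with a fixed average request shape."""
    total_in = requests * in_tokens
    total_out = requests * out_tokens
    return (total_in * p.input_per_m + total_out * p.output_per_m) / 1_000_000


def tco(api_cost: float, platform_fee: float, ops_hours: float, hourly_rate: float) -> float:
    """Simplified TCO: API spend + platform fee + engineering time to operate it."""
    return api_cost + platform_fee + ops_hours * hourly_rate


if __name__ == "__main__":
    premium = ModelPricing(input_per_m=3.00, output_per_m=15.00)  # made-up prices
    budget = ModelPricing(input_per_m=0.50, output_per_m=1.50)    # made-up prices
    for volume in (100_000, 1_000_000):
        a = monthly_cost(premium, volume, in_tokens=1_000, out_tokens=300)
        b = monthly_cost(budget, volume, in_tokens=1_000, out_tokens=300)
        print(f"{volume:>9,} req/mo  premium ${a:,.2f}  budget ${b:,.2f}")
    # Hypothetical platform fee and operating effort layered on top of API spend.
    print(tco(monthly_cost(premium, 100_000, 1_000, 300), 500.0, 10, 100.0))
```

With these illustrative prices, 100,000 requests a month cost $750.00 on the premium model and $95.00 on the budget one; at 1,000,000 requests the gap widens to $7,500.00 versus $950.00, which is the kind of scaling effect a TCO review is meant to surface.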

For organizations in regulated industries, Platform B offers enterprise-level security with SOC 2 compliance and HIPAA support. Key security measures include Role-Based Access Control (RBAC), Single Sign-On (SSO), and secrets management to protect sensitive data. Additionally, the platform provides detailed audit logs, version control, and approval workflows, giving compliance teams the tools they need to oversee and monitor AI deployments effectively.
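The combination of RBAC and audit logging can be sketched in a few lines. The roles, permission strings, and helpers below (`ROLE_PERMISSIONS`, `AuditLog`, `authorize`) are hypothetical and do not reflect Platform B’s actual permission model; the point is that every access decision, including denials, lands in the log that compliance teams review.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Set

# Hypothetical role-to-permission mapping; real platforms define their own.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "viewer": {"prompt:read"},
    "editor": {"prompt:read", "prompt:edit"},
    "approver": {"prompt:read", "prompt:approve", "prompt:deploy"},
}


@dataclass
class AuditLog:
    entries: List[dict] = field(default_factory=list)

    def record(self, user: str, action: str, allowed: bool) -> None:
        """Append a timestamped (UTC) record of one access decision."""
        self.entries.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "allowed": allowed,
        })


def authorize(user: str, role: str, action: str, log: AuditLog) -> bool:
    """Check the role's permissions and log every decision, allowed or denied."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    log.record(user, action, allowed)
    return allowed


log = AuditLog()
authorize("dana", "editor", "prompt:edit", log)    # allowed
authorize("dana", "editor", "prompt:deploy", log)  # denied: editors cannot deploy
print([(e["action"], e["allowed"]) for e in log.entries])
```

Separating "edit" from "deploy" is what makes an approval workflow enforceable: an editor can change a prompt, but only an approver can push it to production.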

Vellum (Platform C) is designed to bring flexibility and teamwork to prompt engineering teams. Its Prompt Playground provides a model-neutral space where users can refine prompts simultaneously across multiple models. This feature supports both closed-source and open-source providers, including models from OpenAI and Anthropic, enabling teams to compare outputs and pinpoint the best fit for their specific workflows.

The Prompt Builder empowers both technical and non-technical users to iterate in real time. Teams can compare outputs, measure latency, and assess performance side by side, significantly cutting down on development time and labor expenses.

Vellum prioritizes security and compliance with SOC 2 certification and HIPAA support. It offers private cloud deployments to keep data isolated and secure, even for sensitive enterprise AI workflows. The platform also integrates human and automated evaluation pipelines to maintain high standards of quality and compliance throughout development. Additionally, its template and versioning system helps teams store, organize, and track prompt changes, creating an audit trail essential for overseeing AI deployments. These features support smooth scaling while meeting governance standards.
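A template versioning system with an audit trail can be approximated by an append-only history. In this Python sketch, which is an assumption about the general technique rather than Vellum’s implementation, each commit records the author and a content hash, unchanged text does not create a new version, and `difflib` shows exactly what changed between versions. `PromptTemplate` and `PromptVersion` are hypothetical names.

```python
import difflib
import hashlib
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PromptVersion:
    number: int
    text: str
    author: str
    digest: str  # short content hash, so identical text can be detected


class PromptTemplate:
    """Hypothetical append-only version history for one prompt template."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.history: List[PromptVersion] = []

    def commit(self, text: str, author: str) -> PromptVersion:
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()[:12]
        if self.history and self.history[-1].digest == digest:
            return self.history[-1]  # no change, no new version
        version = PromptVersion(len(self.history) + 1, text, author, digest)
        self.history.append(version)
        return version

    def diff(self, old: int, new: int) -> str:
        """Unified diff between two version numbers (1-based)."""
        a = self.history[old - 1].text.splitlines()
        b = self.history[new - 1].text.splitlines()
        return "\n".join(difflib.unified_diff(a, b, f"v{old}", f"v{new}", lineterm=""))


if __name__ == "__main__":
    t = PromptTemplate("support-triage")
    t.commit("Classify the ticket.", author="priya")
    t.commit("Classify the ticket.", author="priya")  # unchanged: still v1
    t.commit("Classify the ticket.\nAnswer in one word.", author="sam")
    print(len(t.history))
    print(t.diff(1, 2))
```

Because versions are never overwritten, the history itself doubles as the audit trail: who changed what, and when it changed.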

Vellum’s design promotes seamless collaboration across teams. Product managers, engineers, and subject matter experts can work together on prompt development without being hindered by technical challenges. This approach removes workflow bottlenecks and enables scalable operations across a variety of use cases.

When comparing platforms, it's clear that each offers distinct advantages and challenges. Here's a concise summary of their core strengths and limitations, highlighting the key factors to consider based on project requirements.

Prompts.ai provides access to over 35 top-tier large language models, ensuring enterprise-level security with SOC 2 certification and offering the potential to cut AI-related costs by as much as 98%. However, managing TOKN credits demands careful oversight, and users on lower-tier plans might encounter waitlists.

Vellum shines with its built-in evaluation and versioning tools, significantly reducing development time. That said, its specialized focus results in fewer general SaaS connectors, and new users may face a steeper learning curve during initial implementation.

| Platform | Key Strengths | Primary Limitations |
|---|---|---|
| Prompts.ai | Access to 35+ models; SOC 2-certified security; up to 98% cost savings | Requires careful TOKN credit management; potential waitlists on lower plans |
| Vellum | Built-in evaluation and versioning; production-ready workflows | Limited SaaS connectors; steeper onboarding curve |

Ultimately, the best choice depends on your project's technical needs and team capacity. Larger teams with technical expertise can benefit from platforms offering deeper customization, while smaller teams might prioritize user-friendly tools with pre-built integrations.

Choosing the right prompt engineering platform depends on your team’s technical expertise, regulatory requirements, and scalability needs. Prompts.ai stands out by offering access to over 35 models while maintaining rigorous security and compliance standards. Impressively, it can reduce AI costs by up to 98%, making it a game-changer for U.S. enterprises in sectors like healthcare, finance, and government, where operational efficiency and adherence to regulations are paramount.

For teams where product managers and engineers collaborate on production workflows, Vellum AI provides a practical solution. Its visual builder bridges the gap between technical and non-technical stakeholders, a vital feature considering that 95% of generative AI pilots fail to transition into production.

Each platform has its strengths, and your choice should align with your specific technical and compliance needs. Platform B’s model abstraction layer offers vendor-neutral flexibility, helping enterprises avoid vendor lock-in. Meanwhile, Vellum AI’s evaluation and versioning tools streamline development timelines. Prompts.ai, on the other hand, delivers extensive model access paired with enterprise-grade governance, making it ideal for organizations looking to consolidate their AI operations.

Prompt engineering has shifted from being an experimental endeavor to a critical function for modern enterprises. Whether you’re consolidating disparate tools, scaling from pilot projects to full-scale production, or strengthening your AI systems for enterprise use, your platform selection should reflect your organization’s current and future needs.

Start by auditing your existing AI tools, identifying any gaps in security or cost transparency, and then align those insights with the unique advantages each platform offers.

When selecting a prompt engineering platform for AI workflows in the United States, focus on features that drive efficiency, ensure security, and support scalability. Choose a platform that accommodates a variety of AI models, so you can experiment and refine prompts without juggling multiple accounts. Clear cost management is another critical factor: real-time expense tracking and flexible pricing options help you stay within budget.

Security should be a top priority, so look for platforms offering enterprise-grade protections, such as SOC 2 compliance, to safeguard sensitive data. Collaboration tools, like version control and shared libraries, make teamwork smoother and more efficient. To further enhance usability, seek platforms with an intuitive interface, detailed analytics, and deployment options that fit your needs, whether in the cloud or on-premises. By focusing on these features, you’ll find a platform that simplifies prompt creation and delivers real results for your AI projects.

Prompt engineering platforms have the potential to slash AI costs by up to 98%, thanks to their ability to simplify operations and boost efficiency. By bringing various AI models together into one secure, centralized system, these platforms minimize redundant API calls and fine-tune how prompts are routed.

They also offer tools for real-time cost tracking and allow businesses to compare models side by side. This helps organizations pinpoint the most economical solutions for their needs. With these features, resources are used more effectively, reducing waste and delivering greater value.
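The two savings levers described above, avoiding redundant API calls and routing prompts carefully, can be illustrated with a small router. This sketch assumes a cache keyed on the prompt text and a hypothetical per-model complexity ceiling; real platforms may route on very different signals, and the prices are placeholders. `Route` and `Router` are illustrative names, not any platform’s API.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Route:
    name: str
    cost_per_call: float  # illustrative flat price, not real vendor pricing
    max_complexity: int   # hypothetical capability ceiling for this model
    call: Callable[[str], str]


class Router:
    """Cache repeated prompts, then send the rest to the cheapest capable model."""

    def __init__(self, routes: List[Route]) -> None:
        self.routes = sorted(routes, key=lambda r: r.cost_per_call)
        self.cache: Dict[str, str] = {}
        self.spend = 0.0

    def ask(self, prompt: str, complexity: int) -> str:
        key = hashlib.sha256(f"{complexity}:{prompt}".encode()).hexdigest()
        if key in self.cache:
            return self.cache[key]  # redundant call avoided: zero cost
        for route in self.routes:  # cheapest first
            if route.max_complexity >= complexity:
                self.spend += route.cost_per_call
                answer = route.call(prompt)
                self.cache[key] = answer
                return answer
        raise ValueError(f"no model can handle complexity {complexity}")


router = Router([
    Route("large", 0.02, 10, lambda p: "large: " + p),
    Route("small", 0.001, 3, lambda p: "small: " + p),
])
router.ask("Tag this email", 2)           # routed to the small model
router.ask("Tag this email", 2)           # cache hit, no charge
router.ask("Draft a contract clause", 8)  # needs the large model
print(round(router.spend, 4))             # 0.021
```

Sorting routes by price means the first model that can handle a request is also the cheapest one that can; a cache hit costs nothing, which is where repeated prompts stop adding to the bill.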

Prompts.ai prioritizes enterprise-grade compliance to deliver secure and dependable AI workflows. It has initiated SOC 2 Type II audits and follows HIPAA and GDPR best practices, providing strong safeguards for data protection and privacy.

Highlighted features include secure data handling, automated compliance checks, and comprehensive audit trails, ensuring your AI operations are consistently compliant and reliable.