AI workflow tools are transforming enterprise automation by addressing inefficiencies in traditional systems. These platforms streamline processes, reduce costs, and ensure compliance, making them indispensable for modern businesses. Here's what you need to know:

| Aspect | Prompts.ai | Other Solutions |
|---|---|---|
| Cost Efficiency | Pay-as-you-go with TOKN credits | Activity-based pricing, higher costs |
| Model Integration | 35+ models in one platform | Separate connectors for each model |
| Compliance Features | Built-in audit trails and permissions | Requires extra configuration |
| Deployment Speed | Minutes with pre-built templates | Slower due to manual setups |
| Scalability | Simple, automated resource management | Resource-heavy scaling |

Prompts.ai offers a streamlined, secure, and flexible approach to managing enterprise AI workflows, ensuring businesses stay ahead in an evolving landscape.

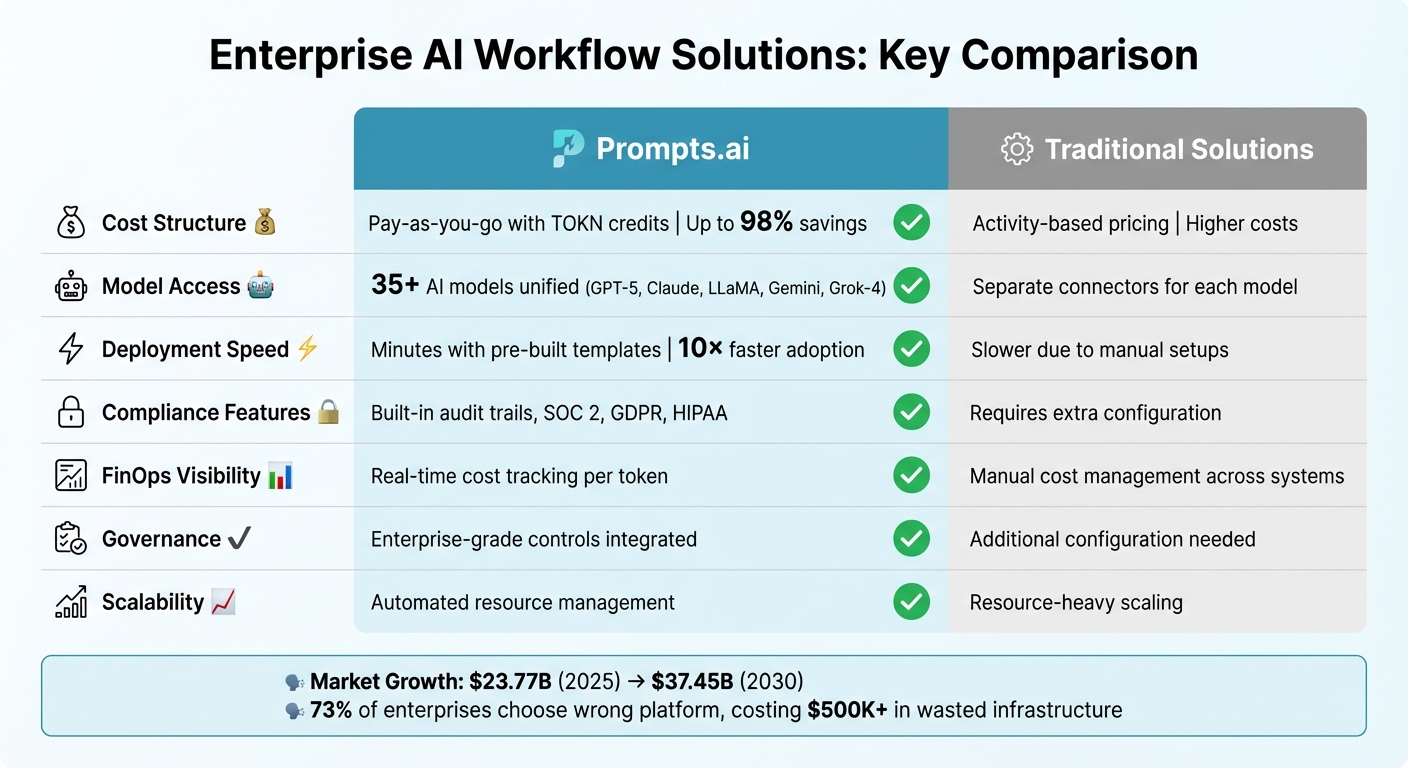

Prompts.ai vs Traditional AI Workflow Solutions: Feature Comparison

Prompts.ai tackles enterprise challenges by bringing together over 35 leading AI models on a single platform, including large language models such as GPT-5, Claude, LLaMA, Gemini, and Grok-4, along with the Flux Pro image-generation model. This unified system eliminates tool sprawl and streamlines operations with one dashboard for all major AI providers.

With its flexible multi-model setup, the platform lets you assign each task to the best-suited model without being locked into a single vendor. For instance, you can route chatbot conversations to Claude, delegate data extraction to LLaMA, and assign more complex tasks to GPT-5, all within one workflow. You can also run the same prompt across multiple models side by side and choose the most effective one without rewriting integration code.
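As a rough illustration of task-based routing and side-by-side comparison, here is a minimal Python sketch. The routing table, the model name strings, and the `call_model` adapter are hypothetical placeholders, not part of any Prompts.ai API; a real platform would supply its own provider adapters.

```python
from typing import Callable, Dict, List

# Hypothetical routing table: task type -> model identifier.
# These strings are placeholders, not real Prompts.ai model IDs.
ROUTES: Dict[str, str] = {
    "chatbot": "claude",
    "extraction": "llama",
    "complex_reasoning": "gpt-5",
}
DEFAULT_MODEL = "gpt-5"  # assumed fallback for task types with no explicit route


def route(task_type: str) -> str:
    """Return the model assigned to a task type, or the default."""
    return ROUTES.get(task_type, DEFAULT_MODEL)


def compare_models(
    prompt: str,
    models: List[str],
    call_model: Callable[[str, str], str],
) -> Dict[str, str]:
    """Send one prompt to several models and collect each response.

    `call_model(model, prompt)` is an assumed adapter supplied by the caller,
    so this comparison code does not change when models are added.
    """
    return {model: call_model(model, prompt) for model in models}


if __name__ == "__main__":
    # Stub adapter so the sketch runs without contacting any provider.
    def fake_call(model: str, prompt: str) -> str:
        return f"[{model}] {prompt}"

    print(route("extraction"))  # llama
    print(compare_models("Summarize Q3 results", ["claude", "llama"], fake_call))
```

Because every model sits behind the same adapter signature, adding a model to the comparison means adding a name to the list rather than writing new integration code.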

This approach ensures both flexibility and efficiency in managing tasks and resources.

Prompts.ai uses a pay-as-you-go TOKN credit system paired with a FinOps layer that monitors token usage in real time. According to Prompts.ai, consolidating vendor contracts and choosing models based on clear cost-per-token data can deliver savings of up to 98%. Automated budget controls also help prevent overspending by setting department-level limits and notifying managers as spending approaches those limits.
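Department-level limits with early warnings can be modeled as a small ledger. This is a minimal sketch, assuming credits are tracked as plain numbers and a single alert fires when spend crosses 80% of the limit; the class name, the threshold, and the blocking behavior are illustrative assumptions, not how TOKN credits or the Prompts.ai FinOps layer actually work.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DepartmentBudget:
    """Hypothetical per-department credit budget with an early-warning alert."""

    limit: float               # maximum credits the department may spend
    alert_ratio: float = 0.8   # assumed: warn once spend reaches 80% of the limit
    spent: float = 0.0
    alerts: List[str] = field(default_factory=list)

    def charge(self, credits: float) -> bool:
        """Record spend if it fits within the limit; return False to block it."""
        if self.spent + credits > self.limit:
            self.alerts.append(
                f"blocked: {credits} credits would exceed the {self.limit} limit"
            )
            return False
        before = self.spent
        self.spent += credits
        threshold = self.limit * self.alert_ratio
        # Notify only on the charge that crosses the threshold, not on every later one.
        if before < threshold <= self.spent:
            self.alerts.append(f"warning: {self.spent:.0f}/{self.limit:.0f} credits used")
        return True


if __name__ == "__main__":
    marketing = DepartmentBudget(limit=1000)
    for amount in (500, 350, 200):
        print(amount, marketing.charge(amount))
    print(marketing.alerts)
```

With a 1,000-credit limit, charges of 500 and then 350 both succeed and the second one records a warning at 850 credits; a further charge of 200 is blocked because it would push spend past the limit.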

For enterprises subject to SOC 2, GDPR, or HIPAA requirements, the platform offers enterprise-level governance with role-based permissions and comprehensive audit trails for every AI interaction. Proprietary data stays secure, as the system orchestrates models without transferring sensitive information to external providers. Compliance officers get centralized oversight, with detailed records showing which models accessed specific data, when decisions were made, and who approved exceptions, which meets regulatory expectations for documentation.
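Role-based permissions and an audit trail often meet at a single checkpoint: every request is authorized against the caller's role and logged whether it succeeds or not. The sketch below assumes an in-memory log and a hypothetical role table; it illustrates the pattern, not the platform's actual permission model, and a production system would persist entries to durable, access-controlled storage.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Dict, List, Set

# Hypothetical role -> permitted actions; real platforms define their own roles.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "analyst": {"run_workflow"},
    "compliance_officer": {"run_workflow", "read_audit_log"},
    "admin": {"run_workflow", "read_audit_log", "approve_exception"},
}


@dataclass(frozen=True)
class AuditEntry:
    timestamp: str  # UTC, ISO 8601
    user: str
    role: str
    action: str
    model: str
    allowed: bool


class AuditedGateway:
    """Checks role permissions and records every attempt, allowed or denied."""

    def __init__(self) -> None:
        self.log: List[AuditEntry] = []  # append-only in this sketch

    def authorize(self, user: str, role: str, action: str, model: str) -> bool:
        # Unknown roles get an empty permission set, so they are denied.
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        self.log.append(
            AuditEntry(
                timestamp=datetime.now(timezone.utc).isoformat(),
                user=user,
                role=role,
                action=action,
                model=model,
                allowed=allowed,
            )
        )
        return allowed


if __name__ == "__main__":
    gateway = AuditedGateway()
    gateway.authorize("ana", "analyst", "run_workflow", "claude")
    gateway.authorize("ana", "analyst", "approve_exception", "claude")
    for entry in gateway.log:
        print(entry)
```

Logging denied attempts alongside allowed ones is what lets a compliance reviewer answer "who tried to do what" and not only "what happened".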

This focus on security and governance ensures enterprises can maintain compliance without compromising functionality.

The platform’s architecture is designed to grow with your business, from pilot projects to full-scale production, without major infrastructure changes. Adding models, users, or departments is quick and straightforward, thanks to automated provisioning and resource management. Teams can draw on pre-built workflows from the community library and take part in the Prompt Engineer Certification program to establish and share best practices. Prompts.ai says this combination of technical adaptability and knowledge-sharing lets enterprises scale AI adoption 10× faster than traditional methods.

Large organizations often turn to specialized integration platforms to connect various AI models with their existing systems. These platforms aim to bridge the gap between advanced AI tools and the intricate infrastructure common in enterprise environments.

Integration platforms come equipped with extensive libraries of connectors, making it easier to link AI models with widely used business systems. For companies relying on legacy systems or on-premises databases, some platforms deploy on-prem agents. These agents establish secure connections using private IPs, ensuring local data accessibility while avoiding exposure to the public internet. This level of integration lays the groundwork for secure and efficient operations across the enterprise.
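One safeguard an on-prem agent might apply is refusing to connect to anything that resolves to a public address. This minimal sketch uses Python's standard `ipaddress` and `socket` modules; the function name and the all-or-nothing policy are assumptions for illustration, not a description of any particular vendor's agent.

```python
import ipaddress
import socket


def is_private_endpoint(host: str) -> bool:
    """Return True only if every address `host` resolves to is private.

    Hypothetical guard: an agent would refuse to connect when this is False.
    """
    try:
        infos = socket.getaddrinfo(host, None)
    except socket.gaierror:
        return False  # unresolvable hosts are rejected, not trusted
    # info[4][0] is the address string; drop any IPv6 zone suffix such as "%eth0".
    addresses = {info[4][0].split("%")[0] for info in infos}
    return bool(addresses) and all(
        ipaddress.ip_address(addr).is_private for addr in addresses
    )


if __name__ == "__main__":
    for host in ("10.0.0.5", "192.168.1.20", "8.8.8.8"):
        print(host, is_private_endpoint(host))
```

Checking every resolved address, rather than just the first, avoids trusting a hostname that maps to a mix of internal and public IPs.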

Keeping AI costs in check at scale requires careful planning. These platforms help by assigning each task to the most suitable model and optimizing workflows: lightweight models handle simpler tasks such as routing or classification, while more complex tasks go to premium models. Batching API calls reduces expenses further.
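Tiered model selection and request batching combine naturally: group pending requests by the model they need, then split each group into fixed-size batches. In this sketch the tier names, the set of lightweight tasks, and the batch size are hypothetical; real batch limits depend on each provider's API.

```python
from collections import defaultdict
from itertools import islice
from typing import Dict, Iterable, Iterator, List, Tuple

# Assumed: which tasks count as "lightweight"; model names are placeholders.
LIGHTWEIGHT_TASKS = {"routing", "classification"}


def pick_tier(task: str) -> str:
    """Send simple tasks to a cheaper model and everything else to a premium one."""
    return "small-model" if task in LIGHTWEIGHT_TASKS else "premium-model"


def batched(items: Iterable[str], size: int) -> Iterator[List[str]]:
    """Yield lists of at most `size` items (Python 3.12+ has itertools.batched)."""
    it = iter(items)
    while chunk := list(islice(it, size)):
        yield chunk


def plan_batches(
    requests: List[Tuple[str, str]], size: int
) -> Dict[str, List[List[str]]]:
    """Group (task, payload) pairs by model tier, then split each group into batches."""
    by_model: Dict[str, List[str]] = defaultdict(list)
    for task, payload in requests:
        by_model[pick_tier(task)].append(payload)
    return {model: list(batched(payloads, size)) for model, payloads in by_model.items()}


if __name__ == "__main__":
    pending = [
        ("classification", "ticket-1"),
        ("classification", "ticket-2"),
        ("classification", "ticket-3"),
        ("summarize", "report-1"),
    ]
    print(plan_batches(pending, size=2))
```

With a batch size of 2, the three classification requests become two calls to the small model instead of three separate calls, and only the summarization request reaches the premium model.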

To meet regulatory standards, these platforms incorporate strong governance frameworks. They ensure data sovereignty by preventing enterprise data from being used for third-party model training. For organizations with strict data requirements, self-hosted deployment options keep all workflows and sensitive data within their infrastructure. This approach not only safeguards proprietary information but also maintains compliance with data regulations. Furthermore, the platforms' scalable architecture ensures that even as workflows grow more complex, operational integrity remains intact.

These solutions are built to handle the demands of enterprise workflows, even when they involve long pauses or interruptions. Workflows can pause for days, awaiting human approvals, and then pick up seamlessly - even after server restarts. This resilience is critical for managing multi-step processes and complex approval chains, especially in environments where zero data loss is non-negotiable.
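Surviving a multi-day pause or a server restart usually comes down to checkpointing workflow state to durable storage after every step. The sketch below assumes a three-step workflow, a JSON file as the store, and an `approve` function standing in for the human approver; all names are hypothetical, and a production engine would typically use a database or durable queue rather than a single file.

```python
import json
import os
import tempfile
from pathlib import Path
from typing import Any, Dict

# Hypothetical three-step workflow with a human approval gate in the middle.
STEPS = ["extract", "await_approval", "publish"]


def load_state(path: Path) -> Dict[str, Any]:
    """Read the last checkpoint, or start fresh if none exists."""
    if path.exists():
        return json.loads(path.read_text())
    return {"next_step": 0, "approved": False}


def save_state(path: Path, state: Dict[str, Any]) -> None:
    """Write to a temp file, then replace, so readers never see a half-written checkpoint."""
    fd, tmp = tempfile.mkstemp(dir=path.parent)
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    os.replace(tmp, path)


def run(path: Path) -> str:
    """Advance as far as possible; safe to call again after a restart."""
    state = load_state(path)
    while state["next_step"] < len(STEPS):
        step = STEPS[state["next_step"]]
        if step == "await_approval" and not state["approved"]:
            save_state(path, state)
            return "paused"  # the process can exit here; progress is on disk
        # A real step would do its work here before being marked complete.
        state["next_step"] += 1
        save_state(path, state)  # checkpoint after every completed step
    return "done"


def approve(path: Path) -> None:
    """Stand-in for a human approver, possibly days later in another process."""
    state = load_state(path)
    state["approved"] = True
    save_state(path, state)


if __name__ == "__main__":
    checkpoint = Path(tempfile.mkdtemp()) / "workflow.json"
    print(run(checkpoint))  # paused
    approve(checkpoint)
    print(run(checkpoint))  # done
```

Because `run` always starts from the saved checkpoint, calling it from a fresh process after `approve` resumes at the approval step instead of repeating earlier work.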

Here’s a side-by-side comparison of the trade-offs between Prompts.ai and traditional integration methods, based on the capabilities discussed earlier:

| Aspect | Prompts.ai | Conventional Integration Methods |
|---|---|---|
| Cost Structure | Operates on a pay-as-you-go model with TOKN credits, avoiding recurring fees and lowering expenses. | Uses activity-based or execution-based pricing, which can become expensive with heavy AI usage. |
| Model Access | Offers unified access to 35+ leading LLMs, such as GPT-5, Claude, LLaMA, Gemini, and Grok-4, removing the need for individual connectors. | Requires separate connectors for each AI model, adding complexity to integration efforts. |
| FinOps Visibility | Delivers real-time cost tracking and built-in optimization for every token used. | Lacks automated, real-time spend tracking, leaving teams to manually manage costs across different systems. |
| Deployment Speed | Facilitates deployment of secure, compliant workflows in minutes using pre-built prompt templates and streamlined processes. | Involves significant setup time for connectors and custom integrations, delaying implementation. |
| Governance | Integrates enterprise-grade controls, complete with audit trails and compliance features, into every workflow. | Provides governance tools that often require extra configuration to meet compliance needs. |
| Learning Curve | Includes a Prompt Engineer Certification program and community support to ease onboarding and skill development. | Requires managing multiple endpoints and integrations, which can be more challenging to learn. |
| Scalability | Built to scale effortlessly, allowing organizations to expand models, users, and teams without infrastructure headaches. | Supports scaling but demands additional resources to maintain performance and manage complexity. |

For organizations prioritizing unified access to multiple AI models, transparent cost management, and streamlined workflows, Prompts.ai offers a simpler, more efficient solution. Traditional methods, on the other hand, may suit teams that prefer manual configuration and are prepared to handle the complexity of separate integrations.

Enterprise AI workflows in 2026 require seamless access to models, clear cost oversight, and strong governance to remain effective.

Prompts.ai offers a straightforward solution by providing access to over 35 leading AI models through a single pay-as-you-go platform, with no recurring fees. With real-time FinOps tracking and built-in compliance tools, organizations can deploy secure workflows in minutes. These capabilities position Prompts.ai as a critical tool for enterprises aiming to stay ahead in a rapidly evolving landscape.

The projected growth of the workflow automation market - from $23.77 billion in 2025 to $37.45 billion by 2030 - emphasizes the importance of choosing a platform that scales efficiently without adding infrastructure complexity [1].
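For scale, the two market figures cited above imply a compound annual growth rate of roughly 9.5% over the five years from 2025 to 2030. This is simple arithmetic on the cited numbers, not an additional forecast:

$$
\text{CAGR} = \left(\frac{37.45}{23.77}\right)^{1/5} - 1 \approx (1.5755)^{0.2} - 1 \approx 0.095
$$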

Enterprises should carefully evaluate their needs, whether that means integrating with Microsoft 365, managing services across departments, or running high-security, air-gapped operations. Transparent spending, detailed compliance audit trails, and the ability to switch models easily are essential considerations. As Domo highlights:

> AI only delivers when embedded in real business workflows. Models and insights must translate into automated actions.

Choosing an interoperable AI workflow platform enables reliable execution, supports human-in-the-loop processes, and ensures the flexibility to work with any model - helping protect your AI investments. Alarmingly, around 73% of enterprises select the wrong AI orchestration platform, leading to wasted infrastructure costs of over $500,000 [2].

To determine the most suitable model for a workflow task, begin by evaluating the task's complexity, the type of data involved, and specific requirements. For straightforward, clearly defined tasks, traditional workflows or smaller models might suffice. However, more intricate tasks often require advanced AI models. It's also essential to weigh factors such as cost, security, governance, and how well the model integrates with existing systems. Platforms that provide access to multiple models can streamline this process, allowing you to balance performance and cost by aligning the right model with each task.
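The trade-off described above (enough capability for the task, acceptable data handling, lowest cost) can be expressed as a filter followed by a minimum. This is a sketch under stated assumptions: the catalog entries, capability ratings, and prices are invented placeholders, and treating "self-hostable" as the only security criterion is a deliberate simplification.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ModelOption:
    name: str                  # placeholder identifier
    capability: int            # assumed 1-5 rating of task complexity it handles well
    cost_per_1k_tokens: float  # illustrative number, not real vendor pricing
    self_hostable: bool        # can run inside the enterprise's own infrastructure


# Invented catalog for illustration only.
CATALOG = [
    ModelOption("small-hosted", capability=2, cost_per_1k_tokens=0.1, self_hostable=False),
    ModelOption("mid-selfhosted", capability=3, cost_per_1k_tokens=0.5, self_hostable=True),
    ModelOption("large-hosted", capability=5, cost_per_1k_tokens=3.0, self_hostable=False),
]


def choose_model(
    options: List[ModelOption], complexity: int, sensitive_data: bool
) -> Optional[ModelOption]:
    """Pick the cheapest model that is capable enough and meets data constraints."""
    eligible = [
        m
        for m in options
        if m.capability >= complexity and (m.self_hostable or not sensitive_data)
    ]
    # None signals that no model in the catalog satisfies these constraints.
    return min(eligible, key=lambda m: m.cost_per_1k_tokens, default=None)


if __name__ == "__main__":
    print(choose_model(CATALOG, complexity=2, sensitive_data=False).name)  # small-hosted
    print(choose_model(CATALOG, complexity=2, sensitive_data=True).name)   # mid-selfhosted
    print(choose_model(CATALOG, complexity=4, sensitive_data=True))        # None
```

Returning `None` instead of silently falling back to an ineligible model keeps the constraint violation visible, so someone has to decide how to handle it.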

Sensitive enterprise data is protected by encryption at rest and in transit, role-based access controls (RBAC), and single sign-on (SSO). Continuous monitoring, auditing, and centralized policy management reinforce these measures by flagging irregularities and maintaining compliance. Many platforms align with key standards such as GDPR, CCPA, and HIPAA, offering features like data residency options and independent security assessments to keep defenses strong while supporting AI-powered operations.

To manage and control AI-related expenses, prioritize clear visibility, predictive tools, and a structured budgeting approach. Start by tracking infrastructure, licensing, and operational costs in detail. Use predictive analytics on historical data to forecast future expenses and allocate budgets more strategically. Adopt cost governance tools to gain real-time insight into spending and set limits that prevent overruns, and review spending regularly with finance teams to stay on track and refine your strategy.