Managing AI models is complex, but the right platform can streamline deployment, reduce costs, and strengthen security. From integrating multiple LLMs to automating workflows, here are five platforms to consider:

Quick Comparison

| Platform | Key Strengths | Starting Price | Best For |
|---|---|---|---|
| Prompts.ai | Unified LLM access, cost tracking, governance | $0/month (Personal Plan) | Teams centralizing AI workflows |
| Weights & Biases | Experiment tracking, debugging, scalability | Free tier available | Developers optimizing LLMs |
| Hugging Face | Open-source models, automation, affordability | $9/month (Pro Plan) | Open-source enthusiasts |
| DataRobot | Full automation, compliance, monitoring | Custom enterprise pricing | Regulated industries |
| Google Vertex AI | Enterprise-grade AI tools, security features | Pay-per-use | Large-scale cloud environments |

What’s next? Identify your team’s priorities - whether it’s cost control, scaling, or compliance - and explore free trials or demos to find the best fit.

AI Model Workflow Software Comparison: Features, Pricing, and Best Use Cases

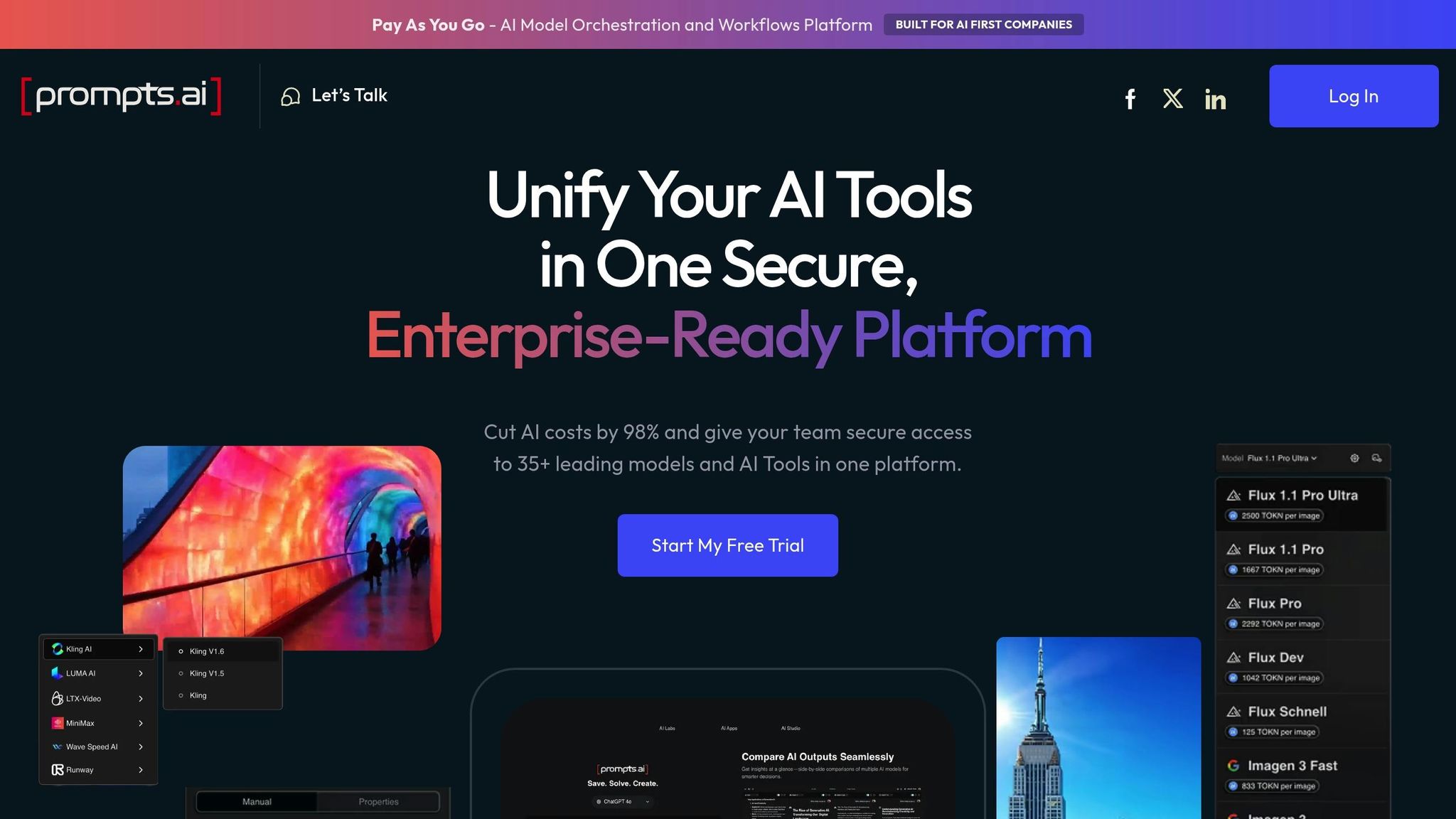

Prompts.ai is a platform designed to simplify AI orchestration by bringing together over 35 leading large language models (LLMs) - including GPT-5, Claude, LLaMA, Gemini, and Grok-4 - into a single, secure interface. By eliminating the need for separate subscriptions and logins across multiple providers, it streamlines access to these models through one centralized dashboard. This minimizes the hassle of managing multiple tools and reduces the complexity of switching between them.

Prompts.ai integrates LLMs directly into workflows, enabling tasks like summarization, classification, or content generation without requiring custom API coding. Its model-agnostic design allows seamless switching between models - such as using Claude for one task and GPT-5 for another - without disrupting workflows. Teams can compare performance across models side-by-side, select the best option, and deploy it quickly in production environments. This flexibility simplifies workflow automation and centralizes AI operations.
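To make the model-agnostic idea concrete, here is a minimal sketch of a router that maps tasks to interchangeable backends. It is not Prompts.ai's API: `ModelRouter`, `fake_claude`, and `fake_gpt` are hypothetical names, and the backends are stubs standing in for real provider calls.

```python
from typing import Callable, Dict

# Hypothetical stand-ins for real provider clients. Each takes a prompt and
# returns text; in practice these would wrap vendor SDK calls.
def fake_claude(prompt: str) -> str:
    return f"[claude] {prompt}"

def fake_gpt(prompt: str) -> str:
    return f"[gpt] {prompt}"

class ModelRouter:
    """Maps task names to interchangeable model backends."""

    def __init__(self) -> None:
        self._backends: Dict[str, Callable[[str], str]] = {}
        self._routes: Dict[str, str] = {}

    def register(self, name: str, backend: Callable[[str], str]) -> None:
        self._backends[name] = backend

    def route(self, task: str, model: str) -> None:
        if model not in self._backends:
            raise KeyError(f"unknown model: {model}")
        self._routes[task] = model

    def run(self, task: str, prompt: str) -> str:
        return self._backends[self._routes[task]](prompt)

router = ModelRouter()
router.register("claude", fake_claude)
router.register("gpt", fake_gpt)
router.route("summarize", "claude")
router.route("classify", "gpt")

summary = router.run("summarize", "Q3 report")
router.route("summarize", "gpt")  # swap the model; the calling code is unchanged
summary_after_swap = router.run("summarize", "Q3 report")
```

Because callers only name the task, changing which model handles it is a one-line routing change rather than a rewrite of the workflow.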

The platform connects AI models to tools like Slack, Google Sheets, and CRM systems, consolidating automation logic in one place. This reduces maintenance efforts and makes it easier to monitor how data moves through each stage of a process.

Prompts.ai offers real-time token usage tracking, helping teams identify inefficiencies, reduce latency, and align costs with business outcomes. Its pricing structure uses a pay-as-you-go TOKN credit system starting at $0, with business plans ranging from $99 to $129 per member per month. This approach can cut AI software expenses by as much as 98% compared to managing multiple standalone subscriptions. The platform is designed to scale effortlessly, supporting everything from small tests to enterprise-level systems without requiring major reconfigurations.
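As a rough illustration of what token-level cost tracking involves, the sketch below accumulates token counts per model and converts them to spend. The model names and per-1,000-token prices are hypothetical, and `UsageTracker` is an illustrative class, not part of any platform's SDK.

```python
from collections import defaultdict
from dataclasses import dataclass, field

# Hypothetical prices in USD per 1,000 tokens; real rates vary by provider.
PRICE_PER_1K = {"model-a": 0.50, "model-b": 2.00}

@dataclass
class UsageTracker:
    """Accumulates token counts per model and converts them to cost."""
    tokens: dict = field(default_factory=lambda: defaultdict(int))

    def record(self, model: str, prompt_tokens: int, completion_tokens: int) -> None:
        self.tokens[model] += prompt_tokens + completion_tokens

    def cost(self, model: str) -> float:
        return self.tokens[model] / 1000 * PRICE_PER_1K[model]

    def total_cost(self) -> float:
        return sum(self.cost(m) for m in self.tokens)

tracker = UsageTracker()
tracker.record("model-a", 1200, 800)  # 2,000 tokens
tracker.record("model-b", 300, 200)   # 500 tokens
tracker.record("model-a", 500, 500)   # 1,000 more tokens
```

Breaking spend down per model this way is what makes it possible to spot which workflow step is driving the bill.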

The platform includes built-in governance tools and audit trails to log every AI interaction, ensuring compliance with organizational policies. Teams can manage permissions, enforce usage rules, and protect sensitive data. Prompts.ai also offers a Prompt Engineer Certification program and provides access to a community library of expert-designed workflows. These resources help teams establish best practices and maintain consistency as they expand their AI capabilities.

Weights & Biases offers a robust platform for tracking, analyzing, and improving AI model workflows, with a focus on tools tailored for large language models (LLMs). Leading companies like OpenAI and Cohere use it to streamline model training and evaluation processes.

The platform's W&B Weave tool is specifically designed for LLM applications, enabling developers to trace inputs and outputs, debug agent steps, and monitor document retrieval in retrieval-augmented generation (RAG) systems. The Prompts feature helps identify chain breakpoints, making it easier to pinpoint failures compared to traditional cloud logs. As Adam McCabe, Head of Data, explains:

> What I really like about Prompts is that when I get an error, I can see which step in the chain broke and why. Trying to get this out of the output of a cloud provider is such a pain.
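The kind of step-level visibility described here can be approximated with a small tracing decorator. This is a plain-Python sketch of the idea, not the Weave or Prompts API; `traced`, `TRACE`, and the two chain steps are hypothetical.

```python
import functools
from typing import Any, Callable, Dict, List

TRACE: List[Dict[str, Any]] = []  # in-memory trace log for this sketch

def traced(step_name: str) -> Callable:
    """Record inputs, output, and any error for one step of a chain."""
    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            entry: Dict[str, Any] = {"step": step_name, "args": args, "kwargs": kwargs}
            TRACE.append(entry)
            try:
                entry["output"] = fn(*args, **kwargs)
                return entry["output"]
            except Exception as exc:
                entry["error"] = repr(exc)
                raise
        return wrapper
    return decorator

@traced("retrieve")
def retrieve(query: str) -> List[str]:
    return [f"doc about {query}"]

@traced("generate")
def generate(docs: List[str]) -> str:
    if not docs:
        raise ValueError("no documents retrieved")
    return f"answer based on {len(docs)} document(s)"

def failed_step(trace: List[Dict[str, Any]]) -> Any:
    """Return the name of the first step that raised, or None."""
    return next((e["step"] for e in trace if "error" in e), None)

generate(retrieve("pricing"))  # both steps succeed
try:
    generate([])               # simulate an empty retrieval result
except ValueError:
    pass
```

Calling `failed_step(TRACE)` then names the step that broke, and the matching entry holds the inputs that triggered the error.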

W&B seamlessly integrates with popular LLM frameworks such as LangChain, LlamaIndex, Hugging Face, DSPy, and TorchTune. It also supports fine-tuning models from providers like OpenAI and Cohere, utilizing W&B Sweeps to automate hyperparameter optimization. With W&B Artifacts, version control becomes effortless, tracking components like prompt templates, datasets, and model checkpoints to ensure reproducibility at every stage of development. These integrations create a streamlined and efficient workflow for LLM development.

The platform extends its LLM capabilities with tools for workflow automation. W&B Launch simplifies job orchestration across clusters, while Automations enable event-triggered pipeline actions. It also integrates with orchestration tools like Kubeflow Pipelines, Metaflow, and Dagster, centralizing experiment tracking and metric logging. Real-time monitoring links model performance to GPU usage, helping teams identify inefficiencies and optimize resource allocation. Mike Maloney, Co-founder and CDO at Neuralift, highlights its benefits:

> I love Weave for a number of reasons. The fact that I could just add a library to our code and all of a sudden I've got a whole bunch of information about the GenAI portion of our product... All those things that I'm watching for the performance of our AI, I can now report on quickly and easily with Weave.

These tools empower teams to deploy and manage AI models more effectively, saving time and resources.

W&B Weave includes built-in cost tracking for LLM usage, allowing teams to monitor expenses during development. The platform offers three deployment options to match organizational needs.

Additionally, W&B Training provides serverless reinforcement learning with managed GPU infrastructure that scales automatically for tasks requiring multiple interactions. This eliminates the need for manual provisioning and maintenance, making it easier to scale AI operations while keeping costs in check.

The platform is certified under ISO/IEC 27001:2022, 27017:2015, and 27018:2019, and complies with SOC 2, HIPAA, and NIST 800-53 standards. Audit logs track all user activity for compliance monitoring, while Prometheus monitoring tracks system health. Enterprise users can implement custom local embeddings and data access controls to safeguard sensitive information during development. The Model Registry acts as a centralized hub for managing model versions and lineage, which smooths handoffs between development and DevOps teams. These features help organizations maintain high security and compliance standards as they scale their AI workflows.

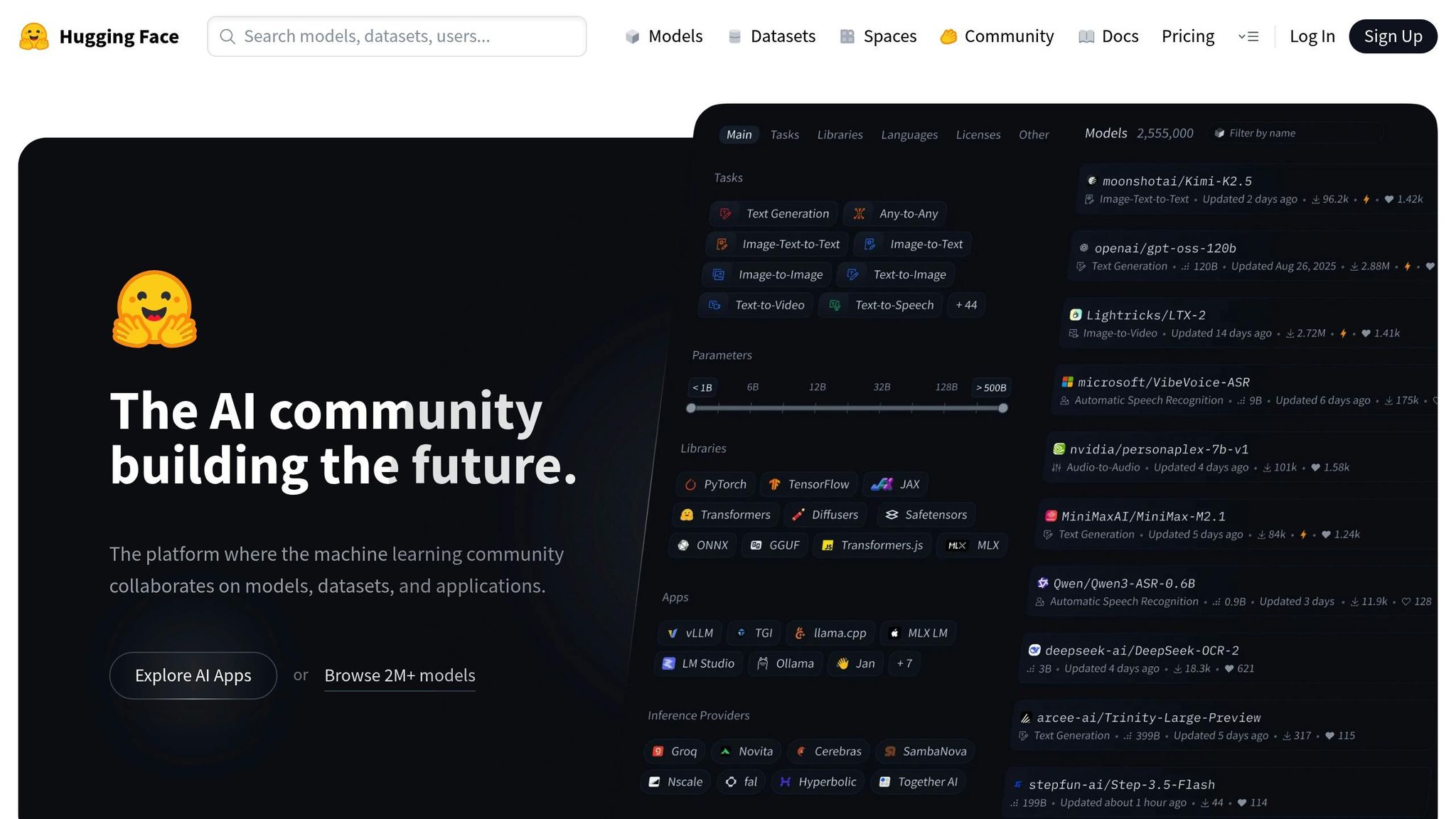

Hugging Face acts as a central platform for AI workflows, offering access to over 200,000 public models, datasets, and Spaces. Its Transformers library simplifies working with advanced LLMs, while the PEFT library allows for parameter-efficient fine-tuning, cutting down on computational demands. Developers can connect to models through more than 10 inference partners using just one API token, with zero markup pricing that aligns with direct provider rates.

Hugging Face integrates effortlessly with popular orchestration frameworks like CrewAI, LangChain, Haystack, LlamaIndex, and smolagents, enabling the creation of complex AI agent workflows. For teams prioritizing privacy or cutting costs, the platform supports local model execution via integrations with tools like Llama.cpp, Ollama, Jan, and LM Studio, completely bypassing API provider fees. Additionally, the ModelHubMixin class allows developers to connect custom ML frameworks, offering standardized methods for repository management, commits, and versioning. These features streamline automation and make deployment more efficient.

Hugging Face enhances productivity with Hugging Face Jobs, a system designed to automate repetitive tasks such as model training, batch inference, and synthetic data generation. These workflows run on Hugging Face’s infrastructure and can be scheduled using predefined intervals or custom CRON expressions. Event-driven triggers via webhooks allow Jobs to launch automatically when specific repository changes occur, creating a CI/CD-like environment for updating models. The platform also supports UV scripts, enabling Python scripts with inline dependencies to execute seamlessly without the need for elaborate setups.

Hugging Face operates on a pay-as-you-go model, charging per second of compute time for CPUs, GPUs, and TPUs. The Inference Providers API includes a :cheapest suffix, which automatically selects the most cost-effective infrastructure partner for a given model. Enterprise Plus plans offer extensive scalability, supporting up to 500TB of base storage and 100,000 API requests within a 5-minute window. Team and Enterprise plans also come with $2,000 in Hub credits or 5% of the Annual Contract Value for inference and compute jobs, making deployment more budget-friendly while enhancing automation capabilities.
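The selection behind a cheapest-provider policy can be pictured with a short sketch. It does not call the Inference Providers API: the provider names, prices, and model ID are hypothetical, and `cheapest_provider` and `with_policy` are illustrative helpers that only show the selection rule and how a routing suffix attaches to a model ID.

```python
from typing import Dict

# Hypothetical providers and prices (USD per million tokens) for one model.
PROVIDER_PRICES: Dict[str, float] = {
    "provider-a": 0.90,
    "provider-b": 0.60,
    "provider-c": 0.75,
}

def cheapest_provider(prices: Dict[str, float]) -> str:
    """Return the lowest-priced provider; ties go to the name that sorts first."""
    if not prices:
        raise ValueError("no providers available")
    return min(sorted(prices), key=prices.__getitem__)

def with_policy(model_id: str, policy: str = "cheapest") -> str:
    """Append a routing suffix to a model ID, e.g. 'org/model:cheapest'."""
    return f"{model_id}:{policy}"

choice = cheapest_provider(PROVIDER_PRICES)
routed_id = with_policy("example-org/example-model")
```

In the hosted service the price lookup happens server-side; the client only needs to send the suffixed model ID.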

Hugging Face ensures secure and compliant operations with advanced governance tools. Enterprise plans include OAuth token exchange, fine-grained access controls, and two-factor authentication. The platform uses Model Cards to provide metadata for discoverability, reproducibility, and detailed documentation, including licensing and citations. Enterprise Plus customers benefit from SCIM provisioning for automated user management, as well as network access controls and audit logs for tracking activity. Additionally, the Safetensors library offers a secure way to store and distribute neural network weights, mitigating risks associated with older formats.

DataRobot simplifies AI workflows by offering a fully automated solution that covers everything from data analysis to deployment. Its Leaderboard feature ranks models by accuracy, automatically preparing the best-performing one for production. With one-click deployments, users can create API endpoints and set up monitoring without needing manual input. Tom Thomas, Vice President of Data Strategy, Analytics & Business Intelligence at FordDirect, highlighted the platform's efficiency:

> DataRobot helps us deploy AI solutions to market in half the time we used to do it before and easily manage the entire AI journey.

DataRobot takes a flexible approach to LLMs by supporting both open-source and proprietary models. Its provider-agnostic design ensures compatibility with various agentic frameworks, allowing users to build and test workflows in a dedicated playground. Custom tools handle tasks like data retrieval and external API communication. The Bolt-on Governance API centralizes monitoring to reduce risks in generative AI applications, while the ToolClient class from the datarobot-genai package processes tool calls through globally deployed tools, boosting performance. This integration creates a seamless foundation for automating workflows.

Leveraging its LLM integration, DataRobot automates tasks like feature engineering and blueprint selection while dynamically managing resources across cloud and on-premise systems. It also continuously tests models, replacing underperformers in real time. The platform's AI Accelerators offer predefined templates tailored to specific use cases, speeding up development through modular components. Users can switch between the NextGen UI and programmatic methods, such as the REST API or Python client packages, to suit their workflow needs.

DataRobot includes serverless, autoscaling environments to reduce infrastructure costs and offers real-time monitoring of compute expenses. By integrating NVIDIA GPUs, it accelerates both training and inference processes. It also supports flexible deployment options, including on-premise, Virtual Private Cloud, or SaaS environments. With a 4.7/5 rating on Gartner Peer Insights and 90% of users recommending it, the platform has earned strong validation for its scalable solutions.

The platform simplifies compliance testing against major regulations and frameworks, including the EU AI Act, NIST AI RMF, NYC Local Law 144, and SR 11-7 for financial services. It tracks every model version with detailed artifacts and changelogs, while real-time safeguards prevent issues like PII leakage, prompt injections, and hallucinations. Tim Reed, Head of Data Science & Analytics at NZ Post, shared:

> DataRobot gives us reassurance that we are accessing generative AI through a secure environment.

The Model Registry acts as a version-controlled repository for test results and metadata, while Role-Based Access Control ensures that only authorized users can make production changes. These features provide a secure and well-governed environment for AI deployment.
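Role-based access control paired with an audit trail can be sketched in a few lines. This is a generic illustration, not DataRobot's implementation: the roles, permissions, user names, and the `authorize` helper are all hypothetical.

```python
from datetime import datetime, timezone
from typing import Dict, List, Set

# Hypothetical roles and permissions for illustration.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "viewer": {"read_model"},
    "data_scientist": {"read_model", "register_model"},
    "ml_admin": {"read_model", "register_model", "deploy_to_production"},
}

AUDIT_LOG: List[Dict[str, str]] = []

def authorize(user: str, role: str, action: str) -> bool:
    """Check a permission and record the attempt, whether allowed or denied."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "action": action,
        "result": "allowed" if allowed else "denied",
    })
    return allowed

can_deploy_admin = authorize("dana", "ml_admin", "deploy_to_production")
can_deploy_viewer = authorize("sam", "viewer", "deploy_to_production")
```

Logging denied attempts alongside allowed ones is the part auditors usually care about, since it shows the control was actually enforced.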

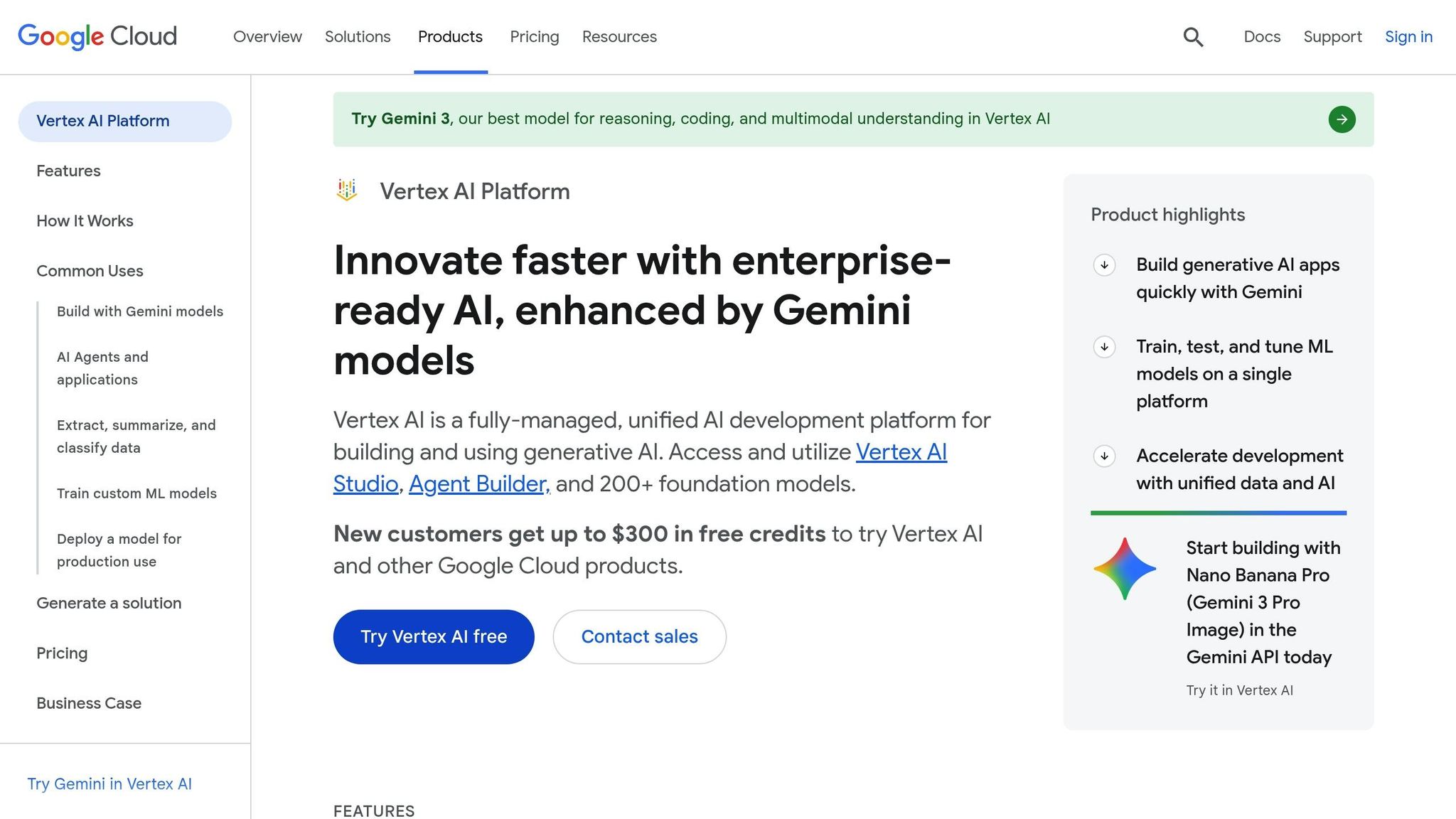

Google Vertex AI consolidates access to over 200 foundation models through its Model Garden, including Google's own Gemini 3, Imagen, and Veo; third-party models such as Anthropic's Claude; and open-source options such as Llama 3.2. This centralized approach simplifies the management of multiple model providers. Recognized as a Leader in the 2025 IDC MarketScape for Worldwide GenAI Life-Cycle Foundation Model Software, Vertex AI is a trusted solution for enterprise AI workflows, enabling teams to adapt large language models (LLMs) to meet shifting business demands.

Vertex AI Studio provides a low-code interface for crafting and testing prompts across text, image, video, and code applications. It supports Supervised Fine-Tuning (SFT) and Parameter-Efficient Fine-Tuning (PEFT), allowing businesses to tailor models like Gemini to their specific needs. With Grounding and RAG (Retrieval-Augmented Generation), LLMs can access real-time data from Google Search or enterprise databases, reducing inaccuracies in production. GA Telesis highlighted the platform's impact with this statement:

> The accuracy of Google Cloud's generative AI solution and practicality of the Vertex AI Platform gives us the confidence we needed to implement this technology into the heart of our business and achieve our long-term goal of a zero-minute response time.

The Vertex AI Agent Builder helps create enterprise-grade agents that are deeply connected to organizational data, while Model Armor provides protection against threats like prompt injection and data exfiltration during production.

Vertex AI enhances automation by simplifying the management of complex machine learning workflows. Vertex AI Pipelines organizes tasks into serverless, containerized processes using a Directed Acyclic Graph (DAG). Developers can use the Kubeflow Pipelines (KFP) SDK for general workflows or TensorFlow Extended (TFX) to handle large-scale structured data. Prebuilt Cloud Pipeline Components make it easy to integrate services like AutoML into workflows. Additionally, Vertex ML Metadata tracks ML artifact lineage and parameters, ensuring auditability and reproducibility throughout the model lifecycle. Developers can test locally with DockerRunner before deploying remotely, reducing the risk of errors.
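To show what organizing a pipeline as a DAG means in practice, the sketch below orders steps by their dependencies using Python's standard-library `graphlib`. It does not use the KFP SDK or Vertex AI Pipelines; the step names and the `run_pipeline` helper are hypothetical, and each step only records its name where a real one would launch a container.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set

# Hypothetical pipeline: each step maps to the set of steps it depends on.
DEPENDENCIES: Dict[str, Set[str]] = {
    "ingest": set(),
    "validate": {"ingest"},
    "train": {"validate"},
    "evaluate": {"train"},
    "deploy": {"evaluate"},
}

executed: List[str] = []

def make_step(name: str) -> Callable[[], None]:
    def step() -> None:
        executed.append(name)  # a real step would launch a container here
    return step

STEPS = {name: make_step(name) for name in DEPENDENCIES}

def run_pipeline(deps: Dict[str, Set[str]]) -> List[str]:
    """Run each step after all of its dependencies; a cycle raises CycleError."""
    order = list(TopologicalSorter(deps).static_order())
    for name in order:
        STEPS[name]()
    return order

order = run_pipeline(DEPENDENCIES)
```

The acyclic requirement is what guarantees a valid execution order exists, which is why pipeline tools reject graphs with circular dependencies.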

Vertex AI offers flexible pricing, charging for training and predictions in 30-second increments, with no minimum usage time. Custom training can cut costs in two ways: Spot VMs use spare Google Cloud capacity at reduced rates, and model co-hosting shares resources across endpoints. High-utilization teams can opt for Vertex AI training clusters, which provide dedicated accelerator capacity for consistent performance on large workloads. Pipeline executions start at $0.03 per run, while generative AI services are priced as low as $0.0001 per 1,000 characters. The Agent Engine includes a monthly free tier, offering 180,000 vCPU-seconds (50 hours) and 360,000 GiB-seconds (100 hours), ensuring businesses are only billed for active runtime.
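The arithmetic behind these figures is easy to check with a short sketch. It assumes that billing in 30-second increments means rounding each runtime up to the next increment, which the text does not state explicitly, and the hourly rate passed to `job_cost` is a hypothetical placeholder rather than a published price.

```python
import math

INCREMENT_SECONDS = 30  # billing granularity stated above

def billable_seconds(runtime_seconds: float) -> int:
    """Round a runtime up to the next 30-second increment (assumed rule)."""
    if runtime_seconds <= 0:
        return 0
    return math.ceil(runtime_seconds / INCREMENT_SECONDS) * INCREMENT_SECONDS

def job_cost(runtime_seconds: float, hourly_rate_usd: float) -> float:
    """Cost of one job at a hypothetical hourly rate."""
    return billable_seconds(runtime_seconds) / 3600 * hourly_rate_usd

def seconds_to_hours(seconds: int) -> float:
    return seconds / 3600

free_vcpu_hours = seconds_to_hours(180_000)  # free-tier vCPU-seconds
free_gib_hours = seconds_to_hours(360_000)   # free-tier GiB-seconds
```

Under that rounding assumption a 95-second job is billed as 120 seconds, and the free-tier conversions confirm the 50-hour and 100-hour figures quoted above.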

Vertex AI emphasizes security and accountability with features like IAM-based Agent Identity and granular permissions, replacing default service accounts with dedicated roles such as Vertex AI User. Vertex AI Model Monitoring identifies training-serving skew and inference drift, ensuring models perform as expected and reducing financial waste. The Model Registry centralizes model version management and deployment, while Vertex ML Metadata enables detailed lineage tracking for compliance and reproducibility. AutoML training jobs incur no charges for failures unrelated to user cancellation, offering cost protection during experimentation. These governance tools ensure that enterprise AI workflows remain secure, efficient, and easy to manage.

The table below provides a clear side-by-side view of key features across leading AI workflow platforms. Whether your priorities are model access, budget, scalability, or compliance, this comparison will help you choose the best solution for your needs.

| Platform | LLM Support | Pricing Model | Scalability | Compliance & Governance |
|---|---|---|---|---|
| Prompts.ai | 35+ models (GPT-5, Claude, LLaMA, Gemini, Grok-4, Flux Pro, Kling) via a unified interface | Pay-as-you-go TOKN credits; plans from $0/month (Personal) to $129/member/month (Elite); no fees for unused capacity | Scales from solo projects to enterprise-level deployments | Real-time FinOps cost controls, audit trails, role-based access control, and secure data handling |
| Weights & Biases | Model-agnostic tracking compatible with any LLM or framework | Free tier available; team and enterprise plans with custom pricing | Scales from individual experiments to thousands of concurrent runs; available as cloud-hosted or self-hosted options | Centralized governance dashboards, RBAC, audit logs, and options for VPC or on-premise deployment to meet data residency needs |
| Hugging Face | 100,000+ open-source models plus proprietary models via Inference Endpoints | Free public repositories; Pro plan at $9/month; Enterprise Hub with custom pricing | Autoscaling inference endpoints and horizontal scaling for training and deployment | SOC 2 Type II certified, GDPR compliant, with SSO, fine-grained access controls, and private model hosting |
| DataRobot | Supports major LLMs and custom models | Usage-based and subscription pricing; custom enterprise plans | Elastic compute resources that scale across cloud, on-premise, or hybrid environments | FedRAMP authorized, HIPAA compliant, ISO 27001 certified, with model monitoring, bias detection, and explainability tools |
| Google Vertex AI | 200+ foundation models available via Model Garden | Pay-per-use pricing based on consumption, with a free tier for select services | Supports spot VMs, model co-hosting, dedicated training clusters, and serverless pipelines | IAM-based agent identity, granular permissions, model monitoring for drift detection, model registry for version control, and ML metadata for lineage tracking |

This breakdown highlights how each platform supports AI workflows from development to deployment. For those prioritizing cost control and unified model access, Prompts.ai stands out with its real-time spend tracking and straightforward pricing. Weights & Biases shines in experiment tracking, while Hugging Face fosters an extensive open-source ecosystem. DataRobot is ideal for teams with strict compliance needs, and Google Vertex AI offers seamless integration into enterprise cloud environments.

Selecting the right software for workflow automation, cost control, and compliance isn't about chasing the latest features - it’s about addressing your team’s specific needs. With 75% of global knowledge workers now using generative AI[1], the urgency to adopt is undeniable. However, hasty decisions can lead to tool sprawl, unexpected expenses, and management headaches.

The platforms discussed earlier cater to various challenges. If controlling costs and accessing multiple models seamlessly is your focus, look for solutions with real-time spend tracking and clear pricing structures. For teams struggling with integration, prioritize platforms that work well with your existing tools. And when compliance and security are critical, features like SOC 2 certification, role-based access control, and detailed audit trails are essential for smooth AI governance and deployment.

Start by auditing your current workflows to identify bottlenecks, then test potential solutions through free trials or demos. Modern AI workflows are designed to analyze data and make decisions autonomously. As Nicole Replogle, Staff Writer at Zapier, aptly says:

> AI automation tools are the connective tissue between AI models and the systems where work happens.

Choose platforms that align with your goals, whether you're managing small-scale projects or enterprise-level operations. Evaluate each option based on key factors: productivity (can non-technical users easily adopt it?), cost predictability (does pricing scale fairly?), and regulatory compliance (does it meet your industry’s standards?). The right platform will depend on your organization’s current position and future aspirations.

To pick the best AI workflow platform, start by assessing your team's technical expertise, the complexity of your workflows, your budget, and your specific operational needs. If your team has varying skill levels, look for platforms that emphasize collaboration and include strong automation tools. For example, Prompts.ai provides centralized access to large language models, cost management tools, and governance features, making it a secure and scalable option. Focus on platforms that meet your integration requirements, ensure robust security, and fit within your budget to streamline your AI processes effectively.

To manage LLM costs effectively, keep an eye on token usage, monitor API requests, and refine your prompts for efficiency. Leverage real-time cost tracking tools to stay updated on expenditures and routinely review infrastructure expenses to uncover potential savings. These practices ensure better control over your budget while enhancing overall cost management.

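In regulated industries, maintaining robust governance is essential. Critical features include compliance management, audit trails, data security, and cost control. Platforms like Prompts.ai simplify these tasks by offering built-in governance tools, audit trails that log every AI interaction, and permission controls that enforce usage rules.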

With centralized governance, organizations can efficiently track models, reduce risks, and streamline audits. This approach not only ensures compliance but also supports operational accountability, making it easier to navigate complex regulatory landscapes.