AI orchestration is now a must-have for scaling AI projects, with the market valued at $11.47 billion in 2025. Yet, 95% of generative AI pilots fail to reach production, largely due to fragmented tools, compliance risks, and debugging challenges. This article breaks down the top 10 platforms helping businesses overcome these hurdles, offering solutions for secure, efficient, and scalable AI workflows.

| Platform | Key Strengths | Pricing Model | Ideal Users |
|---|---|---|---|
| Prompts.ai | 35+ LLMs, cost tracking | Pay-as-you-go TOKN credits | Enterprises, creative teams |
| Vellum AI | No-code + developer tools | Free tier; $25+/month | IT, product teams |
| n8n | Self-hosting, open-source | Free; $20+/month | Developers, technical teams |
| Zapier | User-friendly, 8,000+ integrations | $19.99+/month | Non-technical professionals |
| Workato | Enterprise-grade compliance | Usage-based | Large enterprises |
| Gumloop | Flexible workflows, AI assistant | $37+/month | Teams of all sizes |
| Lindy AI | No-code for repetitive tasks | $49.99+/month | Small teams, founders |
| Stack AI | Strong governance, compliance | Custom pricing | Regulated industries |
| Pipedream | Serverless, custom coding | Free; $29+/month | Developers, SaaS builders |
| Dify | Open-source, dual deployment | Free; $59+/month | Mixed teams, enterprises |

These platforms address key pain points like tool sprawl, compliance gaps, and debugging inefficiencies. Whether you're scaling AI agents or automating workflows, this guide helps you choose the right solution for your needs.

AI Workflow Platform Comparison: Top 10 Solutions for 2025

Prompts.ai brings together over 35 top-tier large language models, including GPT-5, Claude, and LLaMA, into a single, secure platform. Created by Emmy Award-winning Creative Director Steven P. Simmons, it simplifies AI workflows by eliminating tool sprawl and enforcing governance, and the company says it can reduce AI costs by up to 98%. The platform is aimed specifically at organizations struggling to manage multiple tools and rising costs.

Prompts.ai is tailored for Fortune 500 companies, creative agencies, and research labs looking to expand their AI capabilities without compromising on security or budget. Whether it’s a solo knowledge worker or an enterprise team with hundreds of users, the platform provides a streamlined solution to meet regulatory and operational demands.

Prompts.ai bills usage through a pay-as-you-go TOKN credit system alongside tiered plans. Personal plans start at $0/month for initial exploration and scale to $29/month for creators. Team plans begin at $99/month, while business plans range from $99 to $129 per member/month, catering to general staff, specialized knowledge workers, and creative leaders. The credit model is meant to keep spending aligned with actual usage.

Prompts.ai goes beyond cost savings by offering tools to optimize workflows. Features include side-by-side model comparisons and real-time FinOps cost tracking, helping teams identify the most effective model for specific tasks while monitoring token usage. The platform also provides curated "Time Savers" - pre-built prompt workflows contributed by a global network of certified prompt engineers. These workflows transform experimentation into repeatable, compliant processes. Additionally, the Prompt Engineer Certification program establishes best practices and develops in-house experts to drive AI adoption across teams.
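To make real-time cost tracking concrete, here is a minimal Python sketch of per-model spend accounting. It is not Prompts.ai's API: the model names and per-1,000-token prices are hypothetical placeholders, and real rates vary by provider and change often. Running the same prompt through two models and comparing the recorded cost is the essence of a side-by-side cost comparison.

```python
from collections import defaultdict
from dataclasses import dataclass, field

# Hypothetical USD prices per 1,000 tokens; substitute your providers' current rates.
PRICE_PER_1K = {
    "model-a": {"input": 0.005, "output": 0.015},
    "model-b": {"input": 0.0005, "output": 0.0015},
}


@dataclass
class CostTracker:
    """Accumulates token usage and spend per model (illustrative, not a vendor API)."""

    spend: defaultdict = field(default_factory=lambda: defaultdict(float))
    tokens: defaultdict = field(default_factory=lambda: defaultdict(int))

    def record(self, model: str, input_tokens: int, output_tokens: int) -> float:
        """Log one call and return its cost in USD."""
        rates = PRICE_PER_1K[model]
        cost = (input_tokens * rates["input"] + output_tokens * rates["output"]) / 1000
        self.spend[model] += cost
        self.tokens[model] += input_tokens + output_tokens
        return cost

    def cheapest(self) -> str:
        """Model with the lowest accumulated spend (meaningful only for identical workloads)."""
        return min(self.spend, key=self.spend.get)


tracker = CostTracker()
tracker.record("model-a", input_tokens=1200, output_tokens=400)
tracker.record("model-b", input_tokens=1200, output_tokens=400)
print(dict(tracker.spend), tracker.cheapest())
```

Cost alone is only half of a model comparison; in practice these numbers would be paired with a quality score for each model's output.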

Prompts.ai secures the platform with enterprise-grade encryption, SSO, granular role-based access controls, and detailed audit logs that record all platform activity, addressing compliance needs in regulated industries. Built-in approval workflows and safeguards are designed to meet the stringent requirements of sectors such as finance and healthcare.
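Role-based permissions and audit trails usually work together: every authorization decision, allowed or denied, is written to a log. The Python sketch below illustrates that general pattern; the role names and permission strings are hypothetical, and a production system would persist the log to tamper-evident storage rather than an in-memory list.

```python
from datetime import datetime, timezone

# Hypothetical roles and permissions; each platform defines its own.
ROLE_PERMISSIONS = {
    "viewer": {"workflow:read"},
    "editor": {"workflow:read", "workflow:edit"},
    "admin": {"workflow:read", "workflow:edit", "workflow:approve"},
}

audit_log: list = []  # append-only in this sketch; persist it in a real system


def authorize(user: str, role: str, action: str) -> bool:
    """Check a role's permission and record the decision, allowed or not."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append(
        {
            "ts": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "allowed": allowed,
        }
    )
    return allowed


if authorize("ana", "editor", "workflow:approve"):
    print("approved")
else:
    print("denied; attempt recorded in audit log")
```

Logging denied attempts as well as successful ones is what makes the trail useful to auditors: it shows who tried to do what, not just what happened.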

Vellum AI bridges the gap between pilot projects and production by providing a unified platform that combines the ease of no-code tools with the flexibility of developer-level customization. This setup helps tackle fragmented tools and regulatory challenges. Product managers can build proofs of concept in under a week, while engineers refine workflows in Python or TypeScript for long-term reliability. Below is an overview of Vellum AI's audience, pricing, workflow features, and security protocols.

Vellum AI is designed for operations leaders, IT/security teams, product managers, and data/AI professionals aiming to scale AI projects from concept to production. For example, RelyHealth successfully deployed hundreds of custom healthcare agents in 2026, meeting strict industry standards for speed and accuracy.

"We accelerated our 9-month timeline by 2x and achieved bulletproof accuracy with our virtual assistant. Vellum has been instrumental in making our data actionable and reliable." – Max Bryan, VP of Technology and Design

The platform offers a free tier for testing, making it accessible for initial exploration. Paid plans start at $25/month, catering to growing teams. For larger deployments requiring advanced oversight and hosting options - like private VPC or air-gapped on-premises setups - custom enterprise pricing is available.

Vellum’s Agent Builder simplifies workflow creation using natural language prompts, removing the need for manual setup. The visual IDE supports complex operations like branching, recursion, looping, and parallel execution. Custom code nodes allow Python or TypeScript execution directly within workflows, with automatic schema inference for inputs and outputs. Built-in fault-tolerance features include automated retries, fallback options, and early-fail logic to handle third-party issues. Additionally, the platform integrates evaluation tools for offline and online testing, regression analysis, and model comparisons before final deployment.
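Retries, fallbacks, and early-fail logic are general resilience patterns, so they can be illustrated without Vellum's SDK. The Python sketch below is not Vellum code: the provider callables and the `NonRetryableError` class are hypothetical. Transient errors are retried with exponential backoff before the next provider is tried, while errors that retrying cannot fix fail immediately.

```python
import time
from typing import Callable, Optional, Sequence


class NonRetryableError(Exception):
    """Hypothetical marker for errors that should fail fast, such as invalid input."""


def call_with_fallback(
    providers: Sequence[Callable[[str], str]],
    prompt: str,
    retries: int = 2,
    backoff_s: float = 0.5,
) -> str:
    """Try each provider in order, retrying transient failures before falling back."""
    last_error: Optional[Exception] = None
    for provider in providers:
        for attempt in range(retries + 1):
            try:
                return provider(prompt)
            except NonRetryableError:
                raise  # early fail: neither retrying nor falling back will help
            except Exception as exc:  # treat anything else as transient
                last_error = exc
                if attempt < retries:
                    time.sleep(backoff_s * 2**attempt)  # exponential backoff
    raise RuntimeError("all providers failed") from last_error
```

For example, `call_with_fallback([primary_model, backup_model], prompt)` (both names hypothetical) would make up to three attempts against `primary_model` before sending the prompt to `backup_model`.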

Vellum AI prioritizes compliance with SOC 2 Type 2 and HIPAA standards. It employs AES-256 GCM encryption, role-based access control, SSO/SAML, SCIM provisioning, and immutable audit logs to ensure secure operations. The platform supports deployment across public cloud, private VPC, and on-premises environments, making it ideal for industries with strict regulatory requirements.

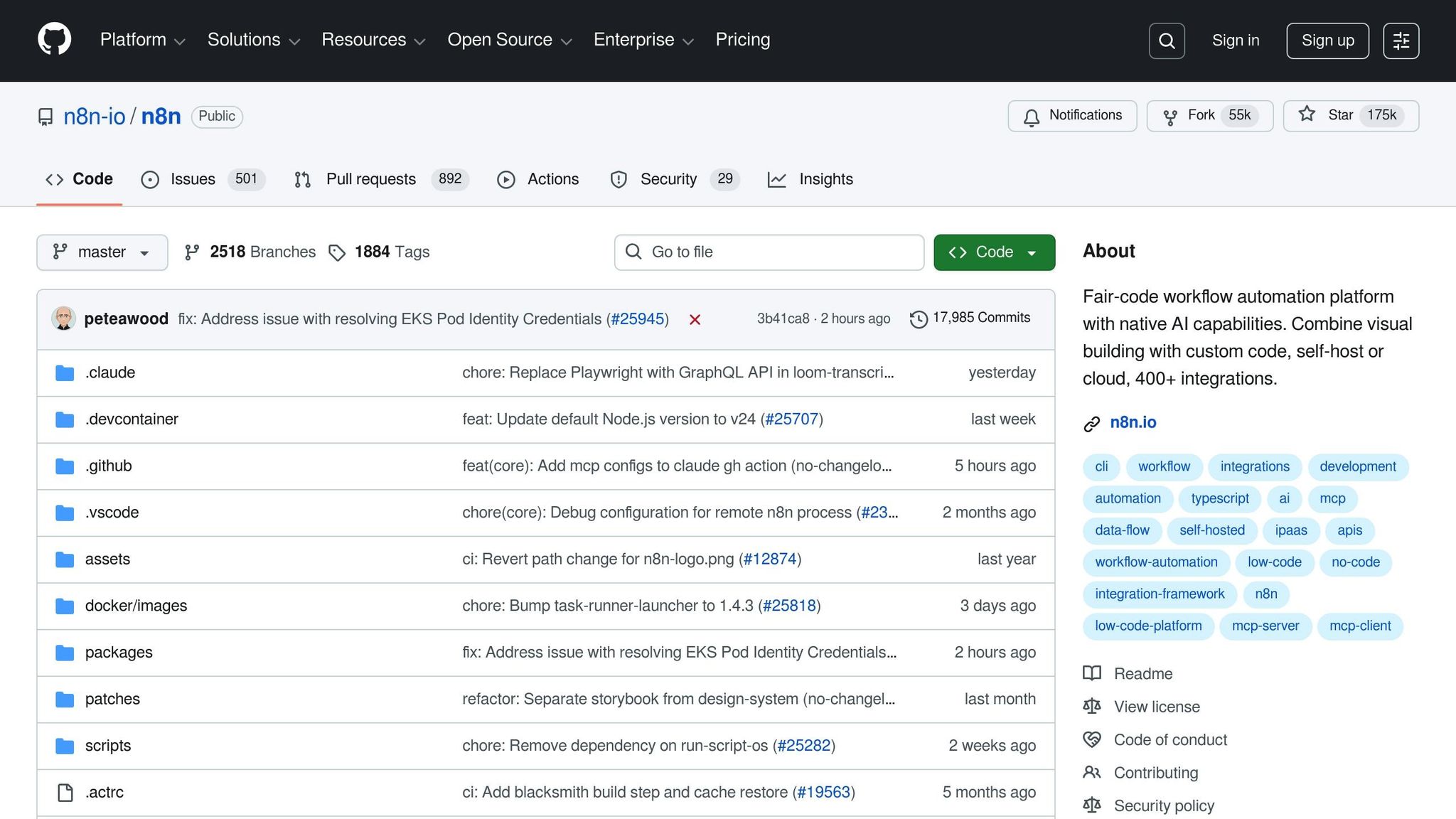

n8n stands out in AI workflow orchestration for its self-hosting capabilities. Released under a fair-code (source-available) license, it combines visual workflow building with advanced technical control. With over 174,000 stars on GitHub, it has become one of the most popular source-available automation tools.

n8n is tailored for developers, IT professionals, and technical teams who need more flexibility than typical no-code platforms offer. It's particularly effective for organizations handling sensitive data or requiring intricate integrations. For instance, Vodafone revamped its threat intelligence processes in 2024 using n8n, achieving £2.2 million in operational cost savings. Similarly, Dennis Zahrt from Delivery Hero saved 200 hours per month with a single n8n workflow.

The Community Edition of n8n is free for self-hosting. For those who prefer a managed solution, cloud plans start at $20/month (billed annually), which includes 2,500 workflow executions and unlimited steps. Unlike some platforms, n8n charges per workflow execution rather than per step or credit. Enterprise pricing is available for organizations requiring advanced features like SSO, custom roles, and log streaming.
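The difference between per-execution and per-step billing is easiest to see with numbers. The Python sketch below counts billable units under each model for two hypothetical workflows; the run and step counts are invented for illustration, while the 2,500-execution allowance comes from the entry cloud plan described above.

```python
from dataclasses import dataclass


@dataclass
class Workflow:
    name: str
    runs_per_month: int
    steps_per_run: int


def billable_units(workflows: list, model: str) -> int:
    """Count billable units under per-execution or per-step billing."""
    if model == "per_execution":
        return sum(w.runs_per_month for w in workflows)
    if model == "per_step":
        return sum(w.runs_per_month * w.steps_per_run for w in workflows)
    raise ValueError(f"unknown billing model: {model}")


# Hypothetical workload: a 12-step enrichment flow and a 40-step nightly sync.
workload = [
    Workflow("lead-enrichment", runs_per_month=1000, steps_per_run=12),
    Workflow("nightly-sync", runs_per_month=30, steps_per_run=40),
]
INCLUDED_EXECUTIONS = 2500  # allowance on the entry cloud plan described above

print("per execution:", billable_units(workload, "per_execution"))  # -> per execution: 1030
print("per step:", billable_units(workload, "per_step"))  # -> per step: 13200
print("within allowance:", billable_units(workload, "per_execution") <= INCLUDED_EXECUTIONS)
```

Under per-execution billing this workload fits well inside the allowance, while a per-step meter would count more than twelve times as many units for the same work.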

n8n offers over 400 pre-configured integrations and more than 1,700 starter templates. It also supports the Model Context Protocol (MCP) for standardized AI agent communication and integrates with LangChain for modular AI application development. When pre-built options fall short, the HTTP Request node connects to any API and can import cURL commands, while the Code node runs custom JavaScript or Python, including npm packages or Python libraries.

Luka Pilic, Marketplace Tech Lead at StepStone, highlighted n8n's efficiency:

"It takes me 2 hours max to connect up APIs and transform the data we need. You can't do this that fast in code."

His team completed two weeks' worth of integration work in just two hours, connecting data sources 25x faster.

n8n features native AI nodes and integrates deeply with LangChain, enabling users to build multi-step agents, chains, and Retrieval-Augmented Generation (RAG) workflows with vector stores and embedding nodes. It supports a wide range of LLM providers, including OpenAI, Anthropic, DeepSeek, Mistral, and local models through Ollama. The platform also incorporates an AI Evaluations framework, allowing users to test workflows with real data before deployment, tracking metrics like accuracy and execution speed. With the ability to handle up to 220 workflow executions per second, n8n is well-suited for high-volume operations.
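Evaluating a workflow before deployment comes down to running it over labelled examples and recording quality and speed. The Python sketch below shows that loop in generic form; it is not n8n's AI Evaluations API, and the toy keyword classifier is a hypothetical stand-in for a real LLM workflow.

```python
import time
from statistics import mean
from typing import Callable, List, Tuple


def evaluate(workflow: Callable[[str], str], cases: List[Tuple[str, str]]) -> dict:
    """Run a workflow over (input, expected) pairs; report accuracy and mean latency."""
    correct, latencies = 0, []
    for text, expected in cases:
        start = time.perf_counter()
        output = workflow(text)
        latencies.append(time.perf_counter() - start)
        correct += int(output.strip().lower() == expected.lower())
    return {"accuracy": correct / len(cases), "mean_latency_s": mean(latencies)}


def toy_classifier(text: str) -> str:
    """Stand-in for an LLM-backed workflow: flags refund requests by keyword."""
    return "refund" if "money back" in text.lower() else "other"


cases = [
    ("I want my money back", "refund"),
    ("Where is my order?", "other"),
    ("Money back, please", "refund"),
    ("Cancel and refund me", "refund"),  # the keyword rule misses this one
]
report = evaluate(toy_classifier, cases)
print(report)
```

The missed fourth case is the kind of failure such a test run is meant to surface before real traffic does.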

n8n is SOC 2 audited and offers robust enterprise-grade security features. These include SSO (SAML, LDAP), Role-Based Access Control (RBAC), and secret management through AWS Secrets Manager, Azure Key Vault, and HashiCorp Vault. The platform also supports Git-based version control, allowing for isolated environments for development, staging, and production. Human-in-the-loop nodes provide manual review at critical workflow points, while audit logging and external log streaming to tools like Datadog ensure comprehensive tracking of usage and changes. With 25% of Fortune 500 companies using n8n for critical AI workflows, its security and governance capabilities meet the demands of high-stakes applications.

Zapier has carved out a niche in the AI workflow space by blending traditional automation with cutting-edge AI tools, all designed for users without technical expertise. With over 8,000 app integrations and more than 350 million AI tasks completed, Zapier supports 3.4 million companies globally. Its ecosystem includes Zaps (automated workflows), Tables (data storage), Forms (data collection), and Canvas (visual planning), creating a comprehensive solution for automation.

Zapier appeals primarily to business operations teams, marketers, and non-technical professionals who need streamlined automation without coding. It’s particularly well-suited for mid-sized to enterprise organizations managing high-volume tasks. For instance, in 2024, a three-person IT team at Remote.com, led by Marcus Saito, automated over 11 million tasks using Zapier, cutting IT ticket resolution time by 28% and saving $500,000 in hiring costs. Similarly, Jacob Sirrs from Vendasta implemented an AI-driven lead enrichment system with Zapier, recovering $1 million in potential revenue and reclaiming 282 workdays annually from manual processes.

Zapier’s pricing is based on tasks, with only successful actions counting toward monthly limits. The Free plan offers 100 tasks per month with unlimited Zaps, Tables, and Forms. The Professional plan starts at $19.99/month (billed annually), providing 750+ tasks, multi-step Zaps, and AI fields in Tables. Team plans, beginning at $69/month (billed annually), include 2,000+ tasks, support for 25 users, SAML SSO, and shared app connections. For larger organizations, Enterprise pricing is tailored, offering unlimited users, VPC Peering, and advanced governance tools. A pay-as-you-go option is available for excess usage at 1.25x the base task rate, offering flexibility for scaling workflows.
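Because only successful actions count toward the monthly limit, estimating a bill means filtering run results before applying the overage rate. The Python sketch below uses the Professional plan figures quoted above; the assumption that the base task rate equals the plan price divided by its included tasks is ours, not Zapier's published formula, so check the actual overage rate for your tier.

```python
PLAN_PRICE = 19.99  # Professional plan, USD/month billed annually (from the text)
INCLUDED_TASKS = 750  # tasks included in that tier (from the text)
OVERAGE_MULTIPLIER = 1.25  # pay-as-you-go surcharge on the base task rate


def monthly_cost(action_results: list) -> float:
    """Estimate a month's bill from per-action statuses ("success", "error", ...)."""
    tasks = sum(1 for status in action_results if status == "success")
    base_rate = PLAN_PRICE / INCLUDED_TASKS  # assumption: pro-rata base rate
    overage = max(0, tasks - INCLUDED_TASKS)
    return round(PLAN_PRICE + overage * base_rate * OVERAGE_MULTIPLIER, 2)


# 1,000 successful actions and 50 failed ones: only the successes are billed.
print(monthly_cost(["success"] * 1000 + ["error"] * 50))  # -> 28.32
```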

With over 8,000 app integrations and access to 30,000 app actions, Zapier claims the largest automation ecosystem in the market. Zapier MCP, its Model Context Protocol server, acts as a translator that lets external AI platforms such as ChatGPT, Claude, and Cursor connect securely to Zapier’s ecosystem. AI by Zapier provides direct access to leading large language models within workflows, eliminating the need for separate API keys. For sensitive processes, human-in-the-loop features such as Slack approvals let teams review AI-generated outputs before they are finalized.
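A human-in-the-loop step is essentially a gate between an AI-generated draft and the action that publishes it. The Python sketch below models that gate with two hypothetical callbacks: `ask_reviewer` stands in for a Slack or email approval prompt, and `send` for the downstream action. Real platforms pause the run asynchronously until a reviewer responds; the synchronous callback simply keeps the sketch self-contained.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class PendingAction:
    description: str
    payload: dict
    approved: Optional[bool] = None  # None until a reviewer decides


def run_with_approval(
    draft: str,
    ask_reviewer: Callable[[PendingAction], bool],
    send: Callable[[dict], str],
) -> str:
    """Hold an AI-drafted message until a human approves it; never send on rejection."""
    action = PendingAction("Send AI-drafted reply", {"body": draft})
    action.approved = ask_reviewer(action)
    if not action.approved:
        return "rejected: nothing sent"
    return send(action.payload)
```

The key design choice is that the side effect (`send`) is unreachable unless approval is explicitly granted, so a missing or negative response defaults to doing nothing.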

Zapier’s AI offerings include Zapier Agents, which use natural language to independently browse the web, access live company data, and perform actions across connected apps. Copilot helps users create workflows using natural language, while AI troubleshooting explains errors in plain terms. Teams can design custom features without coding using Custom Actions. Additionally, Zapier Tables includes AI fields to auto-populate or enrich records based on prompts. For example, Okta’s Senior Manager Korey Marciniak built a Slack-based escalation bot in 2025, reducing case escalation times from 10 minutes to seconds and automating 13% of all Okta Workforce Identity escalations.

Zapier is SOC 2 Type II and SOC 3 compliant and supports GDPR and CCPA compliance. Enterprise customers are automatically excluded from AI model training, with opt-out options available for others. Data is encrypted using TLS 1.2 in transit and AES-256 at rest. IT teams can disable third-party AI integrations or restrict usage to approved apps via Application Controls. Real-time audit logs and execution logs provide full visibility into user actions and automation changes. Trusted by 87% of the Forbes Cloud 100 companies, Zapier’s security infrastructure, hosted on AWS with VPC Peering, is designed for managing sensitive AI workflows.

Workato is an enterprise-level integration and automation platform tailored for AI-driven workflows. It’s trusted by 50% of Fortune 500 companies and boasts over 1,200 pre-built connectors with compatibility for 10,000+ apps and data sources. In 2025, it was recognized as a Leader in the Gartner® Magic Quadrant™ for Integration Platform as a Service (iPaaS) for the seventh year in a row. Additionally, Workato was the only Gartner Peer Insights Customers' Choice winner for iPaaS, with an impressive 100% customer recommendation rate based on its functionality and pricing. These accolades highlight its appeal to organizations aiming to scale operations efficiently.

Workato is designed for both IT professionals and business teams, including HR, sales, marketing, and operations. Its low-code/no-code interface empowers users without coding expertise to create workflows, making automation accessible across departments. Companies like Atlassian and ThredUp have seen transformative results, such as 40% faster project completion, 53% lower total cost of ownership, and development speeds increasing by 5 to 6 times.

Workato’s pricing is usage-based, combining a base platform fee with variable charges depending on volume.

Pricing details are typically customized through total cost of ownership (TCO) comparisons. The platform holds a 4.9/5 rating on Gartner Peer Insights, reflecting its strong product capabilities and sales support.

Workato’s Enterprise MCP framework transforms APIs into actionable business processes, enabling seamless AI integration without requiring a rebuild. With the Skills Builder, users can combine APIs, recipes, and MCP servers to create governed tools tailored to their needs. Its decoupled, serverless runtime ensures high concurrency and 99.99% uptime, making it a reliable choice for critical operations. Over the past year, Workato introduced 775+ updates, reinforcing its commitment to an AI-first architecture.
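An uptime figure is easier to judge as a downtime budget. The short Python helper below converts an availability target into the maximum downtime it allows; it is plain arithmetic and says nothing about how any vendor measures or credits its SLA.

```python
def downtime_budget_minutes(availability: float, days: float = 365.0) -> float:
    """Maximum downtime (in minutes) allowed by an availability target over `days`."""
    return (1 - availability) * days * 24 * 60


for target in (0.999, 0.9999):
    yearly = downtime_budget_minutes(target)
    monthly = downtime_budget_minutes(target, days=30)
    print(f"{target:.2%}: {yearly:.1f} min/year, {monthly:.1f} min per 30 days")
```

At 99.99%, the budget is roughly 52.6 minutes per year, or about 4.3 minutes in a 30-day month.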

Workato offers a suite of AI tools designed to streamline workflows:

These features have enabled users to achieve up to 6x faster development speeds and 53% lower operating costs compared to older systems.

Workato prioritizes security and governance to ensure smooth AI integration:

These robust measures ensure both security and operational transparency, making Workato a dependable choice for enterprises.

Gumloop is an automation platform built with AI at its core, having processed over 400 million tasks to date. Trusted by teams at Shopify, Instacart, and Webflow, it simplifies workflows while eliminating the need for technical expertise. The platform distinguishes between "Agents" (AI-powered assistants) and "Workflows" (automated processes triggered by events, schedules, or bulk actions), offering users a flexible approach to automation.

Gumloop caters to a wide range of users, from solo creators and freelancers to large-scale enterprise teams in operations, marketing, and engineering. Its Gummie AI assistant makes automation accessible by allowing users to describe tasks in plain language, which are then translated into fully functional workflows.

Fidji Simo, CEO of Instacart, shared: "Gumloop has enabled every team, technical or not, to adopt AI and automate workflows, significantly boosting our efficiency."

Bryant Chou, Co-Founder of Webflow, remarked: "Gumloop wins time back across an org. It puts the tools into the hands of people who understand a task and lets them completely automate it away."

Gumloop uses a credit-based pricing system with four clear tiers:

These pricing options make it easy for users to scale their automation needs while benefiting from Gumloop's integration features.

Gumloop employs the Model Context Protocol (MCP) to standardize how AI systems interact with external APIs, ensuring smooth and reliable tool integrations. The platform offers 130+ pre-built native nodes for popular apps like Salesforce, Slack, Gmail, and Airtable, with 70+ additional integrations in development. Users can also describe specific tasks (e.g., "Retrieve open opportunities worth more than $15,000"), and Gumloop generates custom Python-based nodes to handle the logic. For added flexibility, the platform supports BYOK (Bring Your Own Key) and provides REST APIs, SDKs, and webhooks.
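Under the hood, MCP messages are JSON-RPC 2.0, and a tool invocation uses the `tools/call` method with the tool's `name` and `arguments`. The Python sketch below builds such a request and extracts text from a response. The tool name `search_opportunities` and its arguments are hypothetical (echoing the example above), and the transport (stdio or HTTP) and initialization handshake are omitted.

```python
import json
from itertools import count

_request_ids = count(1)


def tools_call_request(tool: str, arguments: dict) -> str:
    """Serialize an MCP tools/call request as a JSON-RPC 2.0 message."""
    return json.dumps(
        {
            "jsonrpc": "2.0",
            "id": next(_request_ids),
            "method": "tools/call",
            "params": {"name": tool, "arguments": arguments},
        }
    )


def text_from_response(raw: str) -> str:
    """Join the text content blocks of a tools/call response, surfacing errors."""
    msg = json.loads(raw)
    if "error" in msg:  # protocol-level JSON-RPC error
        raise RuntimeError(msg["error"]["message"])
    result = msg["result"]
    if result.get("isError"):  # the tool ran but reported a failure
        raise RuntimeError("tool reported an error")
    return "\n".join(c["text"] for c in result["content"] if c["type"] == "text")


request = tools_call_request(
    "search_opportunities", {"status": "open", "min_amount": 15000}
)
print(request)
```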

Gumloop's automation capabilities are enhanced by its advanced AI features. The AI Router automatically determines the best next step based on input data, while the Gummie AI assistant uses a "Prompt to Create" approach, allowing users to design automations through simple conversational input rather than manual setup. These capabilities extend to tasks like image and video analysis, generating custom integration code, and adapting workflows in real time based on data patterns.
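An AI router can be thought of as a classification step followed by a dispatch table. In the Python sketch below, `classify` is a keyword-based stand-in for the LLM call a product like Gumloop would make; the route labels and handlers are hypothetical.

```python
from typing import Callable, Dict


def classify(record: dict) -> str:
    """Stand-in for an LLM that labels the input; keyword rules keep the sketch offline."""
    text = record.get("message", "").lower()
    if any(word in text for word in ("invoice", "refund", "charge")):
        return "billing"
    if any(word in text for word in ("error", "bug", "crash")):
        return "support"
    return "general"


# Hypothetical downstream steps, one per label.
ROUTES: Dict[str, Callable[[dict], str]] = {
    "billing": lambda r: f"billing queue <- {r['id']}",
    "support": lambda r: f"support ticket <- {r['id']}",
    "general": lambda r: f"shared inbox <- {r['id']}",
}


def route(record: dict) -> str:
    """Pick the next step from the input data, then run it."""
    return ROUTES[classify(record)](record)


print(route({"id": 7, "message": "The app crashes on login"}))
```

Swapping the keyword rules for a model call changes only `classify`; the dispatch table stays the same, which keeps routing decisions easy to test.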

Security and compliance are at the forefront of Gumloop's design. The platform is SOC 2 Type II and GDPR compliant, offering enterprise-grade features like Virtual Private Cloud (VPC) deployment. Administrators can enforce AI model governance with allow/deny lists to control which providers (e.g., OpenAI, Anthropic, Google) or models are available across the organization. The Incognito Mode ensures sensitive data is wiped in real time and never stored on Gumloop servers. Additional features include:

With these measures, Gumloop ensures secure, compliant, and efficient automation for all users.

Lindy AI introduces autonomous AI agents that serve as digital teammates, handling execution-heavy tasks without requiring any coding skills. With over 400,000 professionals relying on it, the platform caters to founders, operators, and lean business teams. CEO Flo Crivello describes Lindy as "an AI agent builder for small teams that need to run lean", emphasizing its role in creating efficient, automated workflows.

Lindy AI focuses on non-technical business teams in areas like sales, support, recruiting, and operations. Its primary users include founders and operations leads who often deal with repetitive administrative tasks such as managing inboxes, scheduling meetings, updating CRMs, and following up with customers. The platform’s no-code visual builder, which combines natural language inputs and drag-and-drop tools, makes it easy for these teams to design and implement workflows without technical expertise.

Lindy AI uses a credit-based pricing system with several tiers to accommodate different needs:

For voice interactions, AI calling is charged at 20 credits per minute for calls within the U.S., while AI phone numbers are available for $10 per number per month.

Lindy AI supports 7,000+ integrations with over 1,600 apps, including native compatibility with popular tools like Slack, Gmail, Notion, HubSpot, and Salesforce. Additionally, the platform extends its reach with 2,500+ integrations via Pipedream and can extract data from 4,000+ sources using specialized web scrapers. A key feature, Autopilot, allows agents to operate virtual cloud-based computers, interacting with web interfaces just like a human would. This capability helps overcome the limitations of standard API integrations, offering a more flexible and powerful approach to task automation.

Lindy AI’s workflow tools are designed to maximize efficiency. The Agent Swarms feature enables a single agent to replicate itself and handle large-scale tasks, like sending 1,000 personalized emails simultaneously. It also supports multi-agent collaboration, allowing specialized agents to work together on complex projects. Agents maintain persistent memory, enabling them to make informed, context-aware decisions based on previous interactions. For tasks requiring oversight, the human-in-the-loop feature allows workflows to pause for approvals via Slack or email before executing critical actions. Additionally, the platform’s AI phone agents can communicate in over 30 languages, making it versatile for global operations.
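The fan-out idea behind a feature like Agent Swarms can be sketched with a thread pool: one task definition applied to many inputs concurrently. This is not Lindy's implementation; `personalize` is a hypothetical stand-in for an agent's LLM call, and `max_workers` caps concurrency so that a real system would stay within provider rate limits.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def personalize(contact: Dict[str, str]) -> str:
    """Stand-in for one agent copy drafting a personalized email."""
    return f"Hi {contact['name']}, thanks for your interest in {contact['product']}."


def fan_out(contacts: List[Dict[str, str]], max_workers: int = 20) -> List[str]:
    """Apply the same task to every contact in parallel, preserving input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(personalize, contacts))


contacts = [{"name": f"Customer {i}", "product": "the Pro plan"} for i in range(1000)]
drafts = fan_out(contacts)
print(len(drafts), drafts[0])
```

Threads fit here because the real per-contact work (an HTTP call to an LLM API) is I/O-bound; `pool.map` also returns results in input order, which keeps each draft matched to its recipient.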

Lindy AI ensures enterprise-level security and compliance through measures such as SOC 2 Type II certification and adherence to HIPAA, PIPEDA, and GDPR standards. Data is protected with AES-256 encryption at rest and TLS 1.2+ during transit, alongside encrypted backups and robust key management. Access controls include Role-Based Access Control (RBAC), Multi-Factor Authentication (MFA), and automated account deactivation. Hosted on the Google Cloud Platform (GCP), Lindy employs multi-zone redundancy and automated patching to ensure reliability. Additional features like audit trails and error pattern analysis provide transparency and strengthen governance.

Stack AI stands out as an enterprise-grade platform designed for building and deploying AI agents and workflows, with a strong focus on security and governance. It caters to AI-driven organizations in regulated industries like financial services, insurance, healthcare, and government. Guillermo Rauch, CEO of Vercel, highlights its accessibility and scalability:

"StackAI makes the promise of AI agents real. For everyone, at scale, with a simple point-and-click interface."

The platform is tailored for both technical and non-technical teams working in regulated sectors. Its no-code visual builder enables business users to create workflows, while Python nodes and API integrations provide developers with the tools they need. Companies managing document-heavy tasks, such as grant analysis or competitive research, find the platform particularly useful. In 2025, Brian Hayt, Director of BI at Noblereach, shared that Stack AI reduced the time needed to generate fully cited reports from one week to just five minutes[1].

Stack AI offers flexible pricing to meet diverse needs. Individuals and small teams can start with a free plan, while larger organizations benefit from usage-based SaaS pricing that scales with consumption. For enterprises with specific requirements - such as private cloud or on-premise deployments, dedicated support, or advanced governance - custom pricing is available. This model is especially suited for sectors like defense and finance, where data sovereignty is critical.

The platform integrates with over 100 enterprise applications, including SAP, Salesforce, SharePoint (NTLM), and Snowflake. It supports a range of AI models, such as OpenAI, Anthropic, Google, Meta, xAI, Mistral, and local LLMs via custom endpoints, giving teams flexibility in their AI choices. Support for the Model Context Protocol (MCP) expands integration capabilities, enabling workflows to interact with third-party tools. Workflows can be deployed as REST APIs, chatbots, or web forms, or integrated directly into platforms like Slack, Microsoft Teams, and WhatsApp.

Stack AI excels in orchestrating complex workflows. Its visual engine features a 2D drag-and-drop canvas, enabling users to design intricate AI logic with parallel processing, AI-driven routing, and multi-agent orchestration - all without coding. The platform’s "Knowledge Base" nodes simplify document indexing, chunking, and retrieval with built-in citations, while OCR capabilities extract structured data from PDFs and forms. Batch processing handles large-scale document pipelines efficiently. Doug Williams, Gen AI Lead at MIT Martin Trust Center, used Stack AI to develop AI assistants for students in just weeks, without requiring advanced coding skills.
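Knowledge-base nodes typically split documents into overlapping chunks, track where each chunk came from, and return the best matches with a citation. The Python sketch below shows those mechanics under simplifying assumptions: it ranks chunks by shared terms as a stand-in for the embedding similarity production systems use, and the document names are hypothetical.

```python
import re
from collections import Counter
from typing import List, Tuple


def chunk(doc_id: str, text: str, size: int = 40, overlap: int = 10) -> List[dict]:
    """Split text into overlapping word windows, recording offsets for citations."""
    words = text.split()
    step = size - overlap
    if step <= 0:
        raise ValueError("overlap must be smaller than size")
    if not words:
        return []
    return [
        {"doc": doc_id, "start_word": start, "text": " ".join(words[start : start + size])}
        for start in range(0, max(len(words) - overlap, 1), step)
    ]


def terms(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, chunks: List[dict], k: int = 2) -> List[Tuple[str, str]]:
    """Rank chunks by shared-term count and return (citation, text) pairs."""
    q = terms(query)
    scored = [(sum((q & terms(c["text"])).values()), c) for c in chunks]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [(f"[{c['doc']} @ word {c['start_word']}]", c["text"]) for _, c in scored[:k]]


kb = chunk("grants.txt", "Grant deadlines fall in March each year") + chunk(
    "office.txt", "Parking rules and badge access for visitors"
)
print(retrieve("When are grant deadlines?", kb, k=1))
```

The overlap means a sentence that straddles a chunk boundary still appears whole in at least one chunk, and the stored word offset is what lets an answer cite its source.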

Security and governance are central to Stack AI’s design. Its 8-layer model includes RBAC, workspace controls, project locking, SSO, global admin policies, connection permissions, production analytics, and MFA. The platform meets SOC 2 Type II, GDPR, and HIPAA standards, ensuring compliance. Real-time PII detection and redaction safeguard sensitive data, while automatic audit logs track every input, output, and token usage. For organizations prioritizing data security, Stack AI supports on-premise or private infrastructure LLMs, ensuring sensitive data remains within internal systems. Karissa Ho, Growth at Stack AI, emphasizes:

"Governance shouldn't slow you down. With StackAI, platform and security teams get eight layers of control - from SSO and RBAC to version diffs, analytics, and exportable logs - while builders keep shipping."

This robust security framework reinforces Stack AI’s ability to simplify AI workflows in highly regulated industries.
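Real-time PII redaction means scanning text before it reaches a model or a log and replacing sensitive spans with placeholders. The Python sketch below does this with a few regular expressions; it is not Stack AI's detector, the patterns are illustrative and US-centric, and production systems generally combine far broader rules with model-based entity recognition.

```python
import re
from typing import Dict, Tuple

# Illustrative patterns only; real coverage needs names, addresses, and locale-specific IDs.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text: str) -> Tuple[str, Dict[str, int]]:
    """Replace matches with typed placeholders and count what was removed."""
    counts: Dict[str, int] = {}
    for label, pattern in PII_PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        if n:
            counts[label] = n
    return text, counts


print(redact("Contact jane.doe@example.com or 555-123-4567; SSN 123-45-6789."))
```

Returning the counts alongside the cleaned text gives the audit log something to record without storing the sensitive values themselves.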

[1] StackAI Customer Testimonials, 2026

Pipedream is a serverless automation platform tailored for developers who need code-level control over AI workflows. Unlike platforms that rely solely on visual builders, it combines pre-built actions with the ability to write custom code in Node.js, Python, Go, or Bash. This makes it a go-to choice for technical teams managing complex integrations. Trusted by over 1,000,000 developers, including those at startups and Fortune 500 companies, Pipedream supports data pipelines that handle over 10 million events per second.

Pipedream is designed for developers and technical teams who need more flexibility than no-code platforms can offer. It’s especially suited for teams building AI agents or SaaS applications that require integration with thousands of APIs. Daniel Lang, CEO & Co-Founder of Mangomint, shared their experience with Pipedream:

"We needed a solution that would make building this easy, and allow the team to quickly test and iterate on the integration while validating that it met the customer's needs."

The platform's Pipedream Connect toolkit allows developers to embed integrations directly into their products. It simplifies tasks like authentication and token refresh for up to 100 external users on the Connect plan.

Pipedream operates on a credit-based system, where one credit equals 30 seconds of compute time with 256MB of RAM. Plans also include AI token quotas for powering LLM-based workflows. Here’s a breakdown of the pricing:

This structure ensures flexibility for users ranging from hobbyists to enterprise teams.
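Using the credit definition above (one credit per 30 seconds of compute at 256MB), a rough estimator is straightforward. The Python sketch below assumes two billing rules that should be confirmed against Pipedream's current pricing documentation: each run is rounded up to the next 30-second increment, and credits scale linearly with memory in 256MB steps. The workload figures are hypothetical.

```python
import math
from typing import Iterable, Tuple

SECONDS_PER_CREDIT = 30
BASE_MEMORY_MB = 256


def credits_for_run(duration_s: float, memory_mb: int = BASE_MEMORY_MB) -> int:
    """Credits for one execution, assuming round-up billing and linear memory scaling."""
    time_units = max(1, math.ceil(duration_s / SECONDS_PER_CREDIT))
    memory_multiplier = math.ceil(memory_mb / BASE_MEMORY_MB)
    return time_units * memory_multiplier


def monthly_credits(runs: Iterable[Tuple[float, int]]) -> int:
    """Sum credits over (duration_s, memory_mb) pairs."""
    return sum(credits_for_run(d, m) for d, m in runs)


# Hypothetical month: 100 quick webhook runs and 10 heavier LLM post-processing runs.
runs = [(2.0, 256)] * 100 + [(45.0, 1024)] * 10
print(monthly_credits(runs))  # -> 180
```

Under these assumptions, the ten heavier runs cost 80 credits, nearly as much as the hundred light ones, which is why memory settings deserve a look when optimizing spend.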

Pipedream supports one-click OAuth and key-based authentication for over 3,000 APIs, alongside a registry of more than 10,000 pre-built triggers and actions. Its MCP server, built on the Model Context Protocol, enables AI agents like Claude or Cursor to interact with Pipedream's documentation and tools using natural language. Workflows run serverlessly with up to 10GB of RAM and a 750-second execution limit. For developers seeking more customization, the source-available component registry on GitHub allows modification of boilerplate code or the creation of new integrations.

Pipedream excels in serverless AI orchestration, offering advanced flow control features such as if/else branching, switch branching, parallel execution, and delays. Built-in key-value and file stores help maintain state across multi-step workflows, while a real-time event inspector, GitHub sync for version control, and environment variable management streamline complex automation tasks.

Pipedream complies with SOC 2 Type II, HIPAA, and GDPR standards and encrypts data at rest and in transit (via HTTPS). Security features include Single Sign-On (SSO), two-factor authentication, and role-based access control (RBAC). For enterprise users, the Business tier offers HIPAA support, VPC peering, and uptime SLAs. Since December 2025, Pipedream has operated under Workday, focusing on building a robust AI agent development stack with enterprise-level security measures.

Dify is an open-source platform designed to combine visual orchestration with the flexibility of coding, catering to both developers and non-technical teams. With over 129,800 GitHub stars and more than 1 million deployed applications, it has proven to be a reliable tool for creating production-ready AI agents and workflows. The platform offers two deployment options: self-hosted for organizations wanting full control over their data and a managed cloud service for teams seeking quicker implementation. This dual approach reflects the balance between ease of use and advanced control.

Dify aims to serve both "citizen developers" and technical teams, making AI agent development more accessible. Ricoh, under the leadership of Yoshiaki Umezu, Division General Manager, utilized Dify's no-code interface to speed up internal AI adoption. They developed an Enterprise Q&A Bot for over 19,000 employees across 20+ departments, saving an estimated 300 man-hours monthly. Umezu highlighted:

"What makes Dify stand out is its ability to democratize AI agent development. By combining powerful AI/ML capabilities on a no-code platform, its rapid deployment and intuitive interface make it highly accessible even for beginners."

The platform has also been adopted by enterprises like Volvo Cars. Ewen Wang, Head of AI & Data APAC, praised its ability to quickly validate complex AI projects. This focus on accessibility and efficiency aligns with the broader goal of simplifying AI workflows while maintaining security and scalability.

Dify’s pricing structure is designed to suit a variety of users, from small-scale projects to enterprise applications. Key tiers include:

Annual billing provides a 17% discount for Professional and Team plans. Additionally, the Community Edition is available for free deployment via Docker or Kubernetes, ensuring complete data sovereignty for organizations.

Dify supports the Model Context Protocol (MCP), enabling integration with a wide range of MCP servers for seamless workflow connectivity. The platform offers two workflow types:

Its Domain Specific Language (DSL) allows workflows to be exported and imported across workspaces, ensuring consistency and avoiding vendor lock-in.

Dify provides a drag-and-drop interface for building complex workflows without requiring extensive prompt engineering. Specialized nodes include:

Triggers enhance workflows by enabling proactive services, such as:

Knowledge Retrieval nodes support multimodal RAG pipelines, enabling LLMs to pull data from internal knowledge bases. The platform also includes robust testing tools and detailed logs for troubleshooting.

Dify’s workspace-first design ensures complete resource isolation between organizations. Role-Based Access Control (RBAC) offers five roles:

For industries like healthcare and finance, the self-hosting option ensures full data control. Observability features track usage and provide detailed logs, helping administrators monitor activity and troubleshoot issues. The platform’s RAG capabilities allow private documents to be uploaded to local knowledge bases, ensuring LLMs access only authorized internal data. This focus on compliance and resource isolation addresses the governance issues highlighted earlier.

When choosing an AI workflow platform in 2026, three factors stand out: pricing model, observability, and deployment flexibility. The AI orchestration market was valued at $11.47 billion in 2025 and is growing by 23% annually, yet 95% of generative AI pilots still fail to reach production. A major hurdle is that many platforms lack the debugging tools needed to turn a prototype into a production-ready system. The paragraphs below take each factor in turn, starting with pricing.

Cost management is a significant challenge, especially when scaling workflows for production. Activity-based pricing, which bills every task or step a workflow performs, can become costly in high-frequency environments, whereas execution-based pricing, which bills each complete run once, often suits complex, multi-step workflows better. Platforms like UiPath cater to RPA teams with enterprise-level pricing, while AI-native platforms offer more affordable entry points for smaller teams. Meanwhile, platforms such as Domo use custom pricing to support extensive data connector libraries, which suits large-scale business intelligence needs. The takeaway? A platform's pricing model can either accelerate or stall the journey from pilot to production, depending on how well it aligns with an organization’s budget and scaling needs.
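The difference between the two pricing models is easiest to see with a little arithmetic. The sketch below uses made-up prices, not any vendor's actual rates, and assumes that activity-based plans bill each step in a run while execution-based plans bill each run once.

```python
def activity_based_cost(runs: int, steps_per_run: int, price_per_step: float) -> float:
    """Every step of every run is billed."""
    return runs * steps_per_run * price_per_step

def execution_based_cost(runs: int, price_per_execution: float) -> float:
    """Each run is billed once, however many steps it contains."""
    return runs * price_per_execution

def break_even_steps(price_per_step: float, price_per_execution: float) -> float:
    """Steps per run at which both models cost the same."""
    return price_per_execution / price_per_step

def cheaper_model(runs: int, steps_per_run: int,
                  price_per_step: float, price_per_execution: float) -> str:
    """Name the cheaper model for a given workload (hypothetical prices)."""
    activity = activity_based_cost(runs, steps_per_run, price_per_step)
    execution = execution_based_cost(runs, price_per_execution)
    if activity < execution:
        return "activity-based"
    if execution < activity:
        return "execution-based"
    return "either"
```

With hypothetical rates of $0.002 per step and $0.01 per execution, the break-even point is about five steps per run: a 3-step workflow is cheaper on activity-based billing, while a 12-step workflow is cheaper on execution-based billing, regardless of how many runs per month it makes.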

Observability is another critical differentiator, separating platforms that are production-ready from those that remain stuck at the prototype stage. According to Digital Applied, workflow debugging tools are indispensable for diagnosing why agents fail. Some platforms integrate with third-party debugging tools, while others offer built-in capabilities to identify failures. This functionality is especially crucial as only 2% of organizations have successfully deployed AI agents at scale.

Deployment flexibility is equally important, particularly for organizations with strict data sovereignty or compliance requirements. Self-hosting options are attractive for companies needing full control over data, while VPC and on-premise deployments are better suited for industries with heavy compliance demands. However, there’s often a trade-off: platforms offering quick setup may lack the customization needed for more complex, AI-driven workflows.

Selecting the right AI workflow platform in 2026 hinges on aligning your team’s technical skills, budget, and production goals. With the AI orchestration market valued at $11.47 billion in 2025, there’s no shortage of options, but starting simple is crucial. Begin with single-agent workflows and track key metrics like latency, cost per execution, and error rates. Scaling to multi-agent systems brings higher API costs and harder debugging, so it’s essential to establish measurable success early on.
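The three metrics named above can be computed from a simple log of runs. This is a minimal sketch under stated assumptions: the `Run` record and its fields are hypothetical, latency is summarized as a nearest-rank 95th percentile, and cost per execution is a plain average.

```python
import math
from dataclasses import dataclass

@dataclass
class Run:
    latency_s: float   # wall-clock time for one workflow execution
    cost_usd: float    # API spend attributed to that execution
    succeeded: bool

def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile (pct in 0-100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

def summarize(runs: list[Run]) -> dict[str, float]:
    """Latency, cost per execution, and error rate for a batch of runs."""
    if not runs:
        raise ValueError("no runs to summarize")
    n = len(runs)
    return {
        "p95_latency_s": percentile([r.latency_s for r in runs], 95),
        "cost_per_execution_usd": sum(r.cost_usd for r in runs) / n,
        "error_rate": sum(not r.succeeded for r in runs) / n,  # booleans sum as 0/1
    }
```

Tracking a tail percentile rather than the mean keeps a few slow agent runs from hiding behind many fast ones.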

Once the basics are in place, financial considerations take center stage. Pricing models can make or break scalability. Usage-based pricing is suitable for infrequent automations but tends to strain budgets in high-frequency scenarios. Execution-based pricing often proves more sustainable for teams running complex, iterative workflows. For those operating with limited resources, platforms offering self-hosting options provide greater control over long-term expenses.

Observability is what separates production-ready platforms from prototypes. With only 5% of enterprise AI pilots making it to production, tools for debugging agent failures are critical. Platforms with time-travel debugging, which lets teams rewind a run to a saved intermediate state and replay it, or with integrations for tools like LangSmith help teams pinpoint and resolve workflow issues. As Digital Applied puts it:

"An orchestration platform is significantly less effective without a 'Debugger for AI Thoughts.'"
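Time-travel debugging rests on one idea: snapshot the workflow state before every step so a failed run can be resumed from just before the failure instead of from scratch. The sketch below is a simplified, hypothetical illustration of that idea, not how any particular platform or LangSmith implements it; the step functions are made up for the example.

```python
import copy
from typing import Callable

# A step takes the workflow state and returns a new state.
Step = Callable[[dict], dict]

def run_with_checkpoints(steps: list[tuple[str, Step]], state: dict,
                         checkpoints: list, start: int = 0) -> dict:
    """Run steps[start:], saving a deep copy of the state before each step."""
    for i in range(start, len(steps)):
        name, step = steps[i]
        del checkpoints[i:]  # discard checkpoints from the old timeline
        checkpoints.append((name, copy.deepcopy(state)))
        state = step(state)
    return state

def replay_from(steps: list[tuple[str, Step]], checkpoints: list, index: int) -> dict:
    """Rewind to the state saved before step `index` and re-run from there."""
    _, saved_state = checkpoints[index]
    return run_with_checkpoints(steps, copy.deepcopy(saved_state), checkpoints, start=index)

# Hypothetical workflow steps for illustration.
def fetch_order(state: dict) -> dict:
    return {**state, "order": {"id": 7, "total": "19.90"}}

def parse_total_buggy(state: dict) -> dict:
    return {**state, "total": float(state["order"]["amount"])}  # bug: wrong key

def parse_total_fixed(state: dict) -> dict:
    return {**state, "total": float(state["order"]["total"])}

def add_tax(state: dict) -> dict:
    return {**state, "with_tax": round(state["total"] * 1.2, 2)}

if __name__ == "__main__":
    steps = [("fetch", fetch_order), ("parse", parse_total_buggy), ("tax", add_tax)]
    checkpoints: list = []
    try:
        run_with_checkpoints(steps, {}, checkpoints)
    except KeyError:
        failed_step, state_before = checkpoints[-1]
        print(f"failed at {failed_step!r} with state {state_before}")
    steps[1] = ("parse", parse_total_fixed)  # patch only the broken step
    print(replay_from(steps, checkpoints, 1))  # fetch_order is not re-run
```

The last checkpoint always holds the exact input to the failing step, which is what lets a developer inspect it, patch the step, and replay without repeating earlier (possibly expensive) LLM calls.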

Technical flexibility is another key factor. Teams with strong technical expertise should prioritize platforms that allow custom code, self-hosting, and framework-agnostic architectures. Meanwhile, non-technical teams benefit from drag-and-drop interfaces and pre-built templates that simplify automation without requiring developer input. For industries like finance or healthcare, where compliance is non-negotiable, deployment options such as VPC and on-premise hosting are essential.

With the AI orchestration market growing at 23% annually, the demand is clear. However, success lies in matching platform capabilities with your team’s actual needs, rather than chasing the flashiest features. The best platform is the one your team will use consistently, making usability and alignment with organizational goals the ultimate factors for long-term success.

Most AI pilots stumble not because the technology itself is flawed, but because it fails to integrate with existing workflows and operational processes. Research reveals that 71% of AI tools are abandoned within six months, primarily because they disrupt work rather than streamline it. Manual data entry, extra logins, and data trapped in silos discourage users and stall adoption. To scale AI solutions in practice, design integrated workflows that fit naturally into how teams already operate.

To choose a pricing model that supports scalable AI workflows, focus on flexibility, cost control, and the ability to handle changing demand. A pay-as-you-go approach works well because you pay only for the resources you actually use; pairing it with real-time cost monitoring keeps expenses predictable. Also look for enterprise-level features such as governance and security controls. Together, these let you scale AI operations responsibly without letting costs spiral out of control.
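Real-time cost monitoring can be as simple as checking each call's estimated cost against a budget before it runs. The helper below is a hypothetical sketch, not a feature of any specific platform; the class names, the 80% alert threshold, and the idea of pre-authorizing estimated costs are all assumptions made for illustration.

```python
class BudgetExceeded(RuntimeError):
    """Raised when a call would push spend past the budget."""

class CostMonitor:
    """Tracks spend against a fixed budget and records an alert at a threshold."""

    def __init__(self, budget_usd: float, alert_fraction: float = 0.8):
        self.budget_usd = budget_usd
        self.alert_threshold = alert_fraction * budget_usd
        self.spent = 0.0
        self.alerts: list[str] = []

    def authorize(self, workflow: str, estimated_cost_usd: float) -> None:
        """Reject a call that would exceed the budget; otherwise record its cost."""
        if self.spent + estimated_cost_usd > self.budget_usd:
            raise BudgetExceeded(
                f"{workflow}: ${estimated_cost_usd:.2f} would exceed "
                f"the ${self.budget_usd:.2f} budget (${self.spent:.2f} spent)"
            )
        was_below = self.spent < self.alert_threshold
        self.spent += estimated_cost_usd
        # Alert once, on the call that first crosses the threshold.
        if was_below and self.spent >= self.alert_threshold:
            self.alerts.append(f"{workflow} pushed spend to {self.spent / self.budget_usd:.0%} of budget")
```

For example, with a $100 budget, calls costing $50 and then $30 trigger a single alert at 80%, and a further $30 call is rejected before it runs.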

An AI workflow platform must prioritize robust security measures to safeguard sensitive information. This starts with encrypting data both at rest and in transit. Role-based access control (RBAC) limits access to authorized personnel, while real-time monitoring and audit trails help detect and deter breaches and unauthorized access.

To meet compliance requirements, the platform should align with regulations such as GDPR and HIPAA and with frameworks such as SOC 2. Features like automated compliance checks, secure data handling, and detailed audit logs play a critical role in maintaining regulatory adherence. An independent SOC 2 Type II audit report further demonstrates that a platform’s security controls operate effectively over time.
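RBAC and audit trails work best together: every access decision is both enforced and recorded. Here is a minimal sketch of that pattern, assuming hypothetical role names and permission strings (they are not the roles of any platform in this article), with unknown users denied by default.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical roles mapped to the permissions they grant.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "viewer": {"workflow:read"},
    "editor": {"workflow:read", "workflow:edit"},
    "admin": {"workflow:read", "workflow:edit", "workflow:deploy", "secrets:read"},
}

@dataclass
class AccessController:
    user_roles: dict[str, str]
    audit_log: list[dict] = field(default_factory=list)

    def check(self, user: str, permission: str) -> bool:
        """Allow only if the user's role grants the permission; log every decision."""
        role = self.user_roles.get(user)  # None for unknown users
        allowed = permission in ROLE_PERMISSIONS.get(role, set())
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "permission": permission,
            "allowed": allowed,
        })
        return allowed
```

Logging denials as well as grants matters for audits: repeated denied attempts are often the first visible sign of misuse.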