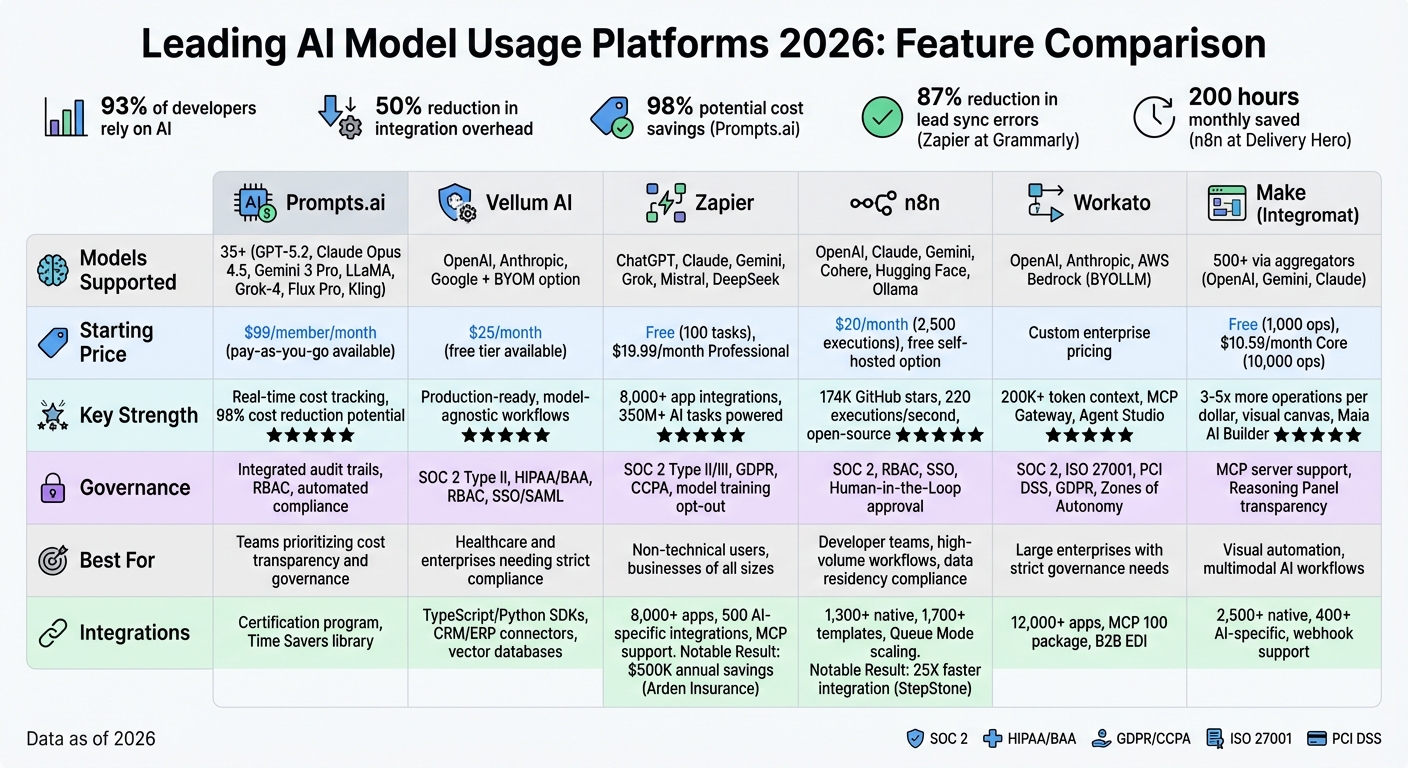

AI tools are now essential, but managing multiple platforms is a challenge. 93% of developers rely on AI, yet juggling logins, APIs, and subscriptions wastes time and increases costs. AI model usage platforms solve this by unifying access to top models like GPT-5.2, Claude Opus 4.5, and Gemini 3 Pro into one interface. They simplify workflows, cut integration overhead by 50%, and provide pay-as-you-go pricing with clear cost tracking.

Here’s a breakdown of six platforms transforming AI workflows in 2026: Prompts.ai, Vellum AI, Zapier, n8n, Workato, and Make.

These platforms cater to various needs, from startups to enterprises. Whether you prioritize cost transparency, governance, or automation, there’s a platform to streamline your operations in 2026.

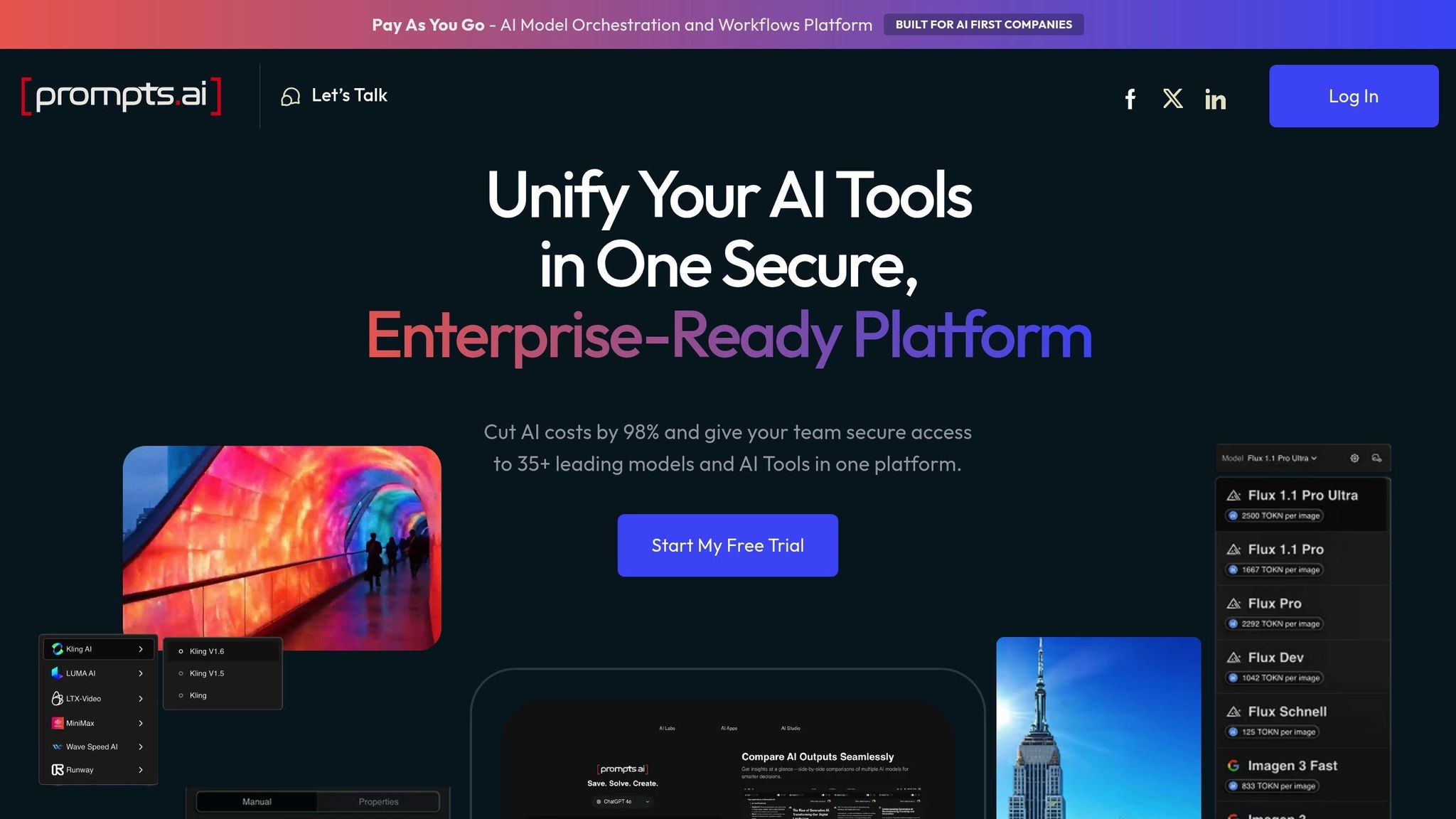

Prompts.ai brings together over 35 top-tier AI models - including GPT-5.2, Claude Opus 4.5, Gemini 3 Pro, LLaMA, Grok-4, Flux Pro, and Kling - into one unified platform. Because there is no need to juggle multiple logins and billing systems, teams can access all of these resources through a single, streamlined interface.

Prompts.ai supports both commercial and open-source AI models, so users can tap into the latest advancements. For example, reasoning models like OpenAI's o1 and o3 use chain-of-thought processing to tackle complex problems. Teams can switch between models in real time, testing GPT-5.2 for one task and Claude Opus 4.5 for another. This flexibility reduces vendor lock-in and lets organizations select the best model for each task.

Governance is built into the platform, ensuring both control and compliance. Features like integrated audit trails, role-based access, and automated compliance enforcement allow organizations to track and manage model usage effectively. These tools turn scattered AI experiments into structured, scalable workflows that align with data security policies and organizational standards.

Prompts.ai includes a FinOps layer that tracks token usage across all supported models, linking costs directly to business outcomes. Pay-as-you-go TOKN credits carry no monthly fee for exploration, and business plans start at $99 per member per month. Real-time dashboards help teams monitor spending and reduce software expenses by up to 98% compared with managing separate subscriptions.
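To make per-model cost attribution concrete, here is a minimal Python sketch of a token-cost ledger. It is not Prompts.ai's implementation: the model names, the `PRICE_PER_1K` table, and the team labels are hypothetical, and real per-token rates vary by provider.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical USD prices per 1,000 tokens; real rates differ by provider and change often.
PRICE_PER_1K = {
    "model-a": {"input": 0.003, "output": 0.015},
    "model-b": {"input": 0.0005, "output": 0.0015},
}


@dataclass
class UsageEvent:
    team: str
    model: str
    input_tokens: int
    output_tokens: int


def cost_of(event: UsageEvent) -> float:
    """Convert one call's token counts into dollars using the price table."""
    price = PRICE_PER_1K[event.model]
    return (event.input_tokens / 1000) * price["input"] + (
        event.output_tokens / 1000
    ) * price["output"]


def spend_by_team(events: list) -> dict:
    """Sum spend per team so costs can be tied to the unit that incurred them."""
    totals = defaultdict(float)
    for event in events:
        totals[event.team] += cost_of(event)
    return dict(totals)


events = [
    UsageEvent("marketing", "model-a", input_tokens=2000, output_tokens=1000),
    UsageEvent("support", "model-b", input_tokens=10000, output_tokens=2000),
]
print(spend_by_team(events))
```

Keeping the price table separate from the usage events means a rate change only touches one dictionary, while historical events stay as raw token counts.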

The platform also offers a certification program and a shared library of expert workflows known as "Time Savers." These pre-built prompts enable teams to quickly tackle tasks like content creation or data analysis without starting from scratch. This collaborative space helps organizations establish best practices and train internal AI leaders, making it easier for non-technical teams to adopt and integrate AI solutions into their workflows efficiently.

Vellum AI is a production-ready platform designed for building AI agents and workflows. It supports foundation models from OpenAI, Anthropic, and Google, while also offering a BYOM (Bring Your Own Model) option for private or hybrid deployments. Its model-agnostic design allows teams to assign different steps of a workflow to different models, providing flexibility and avoiding vendor lock-in. Below, we’ll explore its model support, governance features, cost transparency, and integrations.
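A model-agnostic workflow can be sketched as a list of steps, each bound to its own model. The sketch below is an illustration, not Vellum's SDK: `fast_model` and `strong_model` are hypothetical stubs standing in for real provider calls.

```python
from typing import Callable

# Any callable that maps a prompt to text counts as a "model" here; in practice each
# would wrap a provider SDK. These stubs are hypothetical placeholders.
Model = Callable[[str], str]


def fast_model(prompt: str) -> str:
    return f"[fast] {prompt}"


def strong_model(prompt: str) -> str:
    return f"[strong] {prompt}"


# Each step names the model it runs on, so swapping a model is a one-line config change.
WORKFLOW = [
    ("classify", fast_model),
    ("draft_reply", strong_model),
]


def run_workflow(text: str, steps: list) -> str:
    """Feed each step's output into the next step, using that step's assigned model."""
    result = text
    for _name, model in steps:
        result = model(result)
    return result


print(run_workflow("hello", WORKFLOW))  # [strong] [fast] hello
```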

Vellum’s orchestration layer routes each workflow step to its assigned model, delivering structured outputs and supporting function calls. Built-in tools like evaluations, side-by-side comparisons, and prompt versioning with instant rollbacks make it easy to prototype without disrupting production. For example, RelyHealth has used Vellum to deploy hundreds of custom healthcare agents, meeting the high reliability standards the healthcare industry demands.
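Prompt versioning with rollbacks comes down to keeping every published version and moving a "live" pointer. The `PromptRegistry` class below is a hypothetical sketch of that idea, not Vellum's API.

```python
from typing import Optional


class PromptRegistry:
    """Stores every published prompt version and tracks which one production uses."""

    def __init__(self) -> None:
        self.versions: list = []
        self.live_index: Optional[int] = None

    def publish(self, template: str) -> int:
        """Store a new version, make it live, and return its 1-based version number."""
        self.versions.append(template)
        self.live_index = len(self.versions) - 1
        return len(self.versions)

    def rollback(self, version: int) -> None:
        """Point production back at an earlier version without deleting later ones."""
        if not 1 <= version <= len(self.versions):
            raise ValueError(f"unknown version {version}")
        self.live_index = version - 1

    def live(self) -> str:
        if self.live_index is None:
            raise LookupError("no version published yet")
        return self.versions[self.live_index]


registry = PromptRegistry()
registry.publish("Summarize: {text}")
registry.publish("Summarize in three bullets: {text}")
registry.rollback(1)
print(registry.live())  # Summarize: {text}
```

Because rollback only moves the pointer, the newer version stays available for comparison or a later re-release.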

The platform adheres to SOC 2 Type II compliance and supports HIPAA through Business Associate Agreements (BAA). It includes robust security measures like Role-Based Access Control (RBAC), Single Sign-On (SSO/SAML), and SCIM for automated user provisioning. Data is encrypted, and secure communication protocols are in place to ensure compliance. Enterprise customers can customize data retention policies, with options to automatically delete monitoring data after 30, 60, 90, or 365 days. Additional features like immutable audit logs, end-to-end traces, and environment separation between staging and production ensure accountability and operational governance. Approval workflows further enhance control before agents go live.
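Retention windows like these amount to a time-based filter over stored records. This sketch assumes monitoring records are dicts with a timezone-aware `created_at` field (a hypothetical schema) and only accepts the 30, 60, 90, and 365-day options listed above.

```python
from datetime import datetime, timedelta, timezone

# Retention options described for Vellum's enterprise customers.
ALLOWED_RETENTION_DAYS = {30, 60, 90, 365}


def purge_expired(records: list, retention_days: int, now: datetime) -> list:
    """Return only the records newer than the retention cutoff."""
    if retention_days not in ALLOWED_RETENTION_DAYS:
        raise ValueError(f"unsupported retention window: {retention_days} days")
    cutoff = now - timedelta(days=retention_days)
    return [r for r in records if r["created_at"] >= cutoff]


now = datetime(2026, 3, 1, tzinfo=timezone.utc)
records = [
    {"id": "trace-1", "created_at": now - timedelta(days=10)},
    {"id": "trace-2", "created_at": now - timedelta(days=45)},
]
kept = purge_expired(records, retention_days=30, now=now)
print([r["id"] for r in kept])  # ['trace-1']
```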

Vellum provides detailed cost tracking with per-run visibility, budget caps, and usage alerts. Token optimization tools help teams manage expenses across various models. Pricing includes a free tier for prototyping, paid plans starting at $25 per month, and custom enterprise pricing for larger deployments requiring advanced features and governance.
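Budget caps and usage alerts can be modeled as a running total checked before each run. The `Budget` class below is a hypothetical sketch; the 50% and 80% alert thresholds are assumptions, and a real system would send notifications rather than append to a list.

```python
from dataclasses import dataclass, field


@dataclass
class Budget:
    cap_usd: float
    alert_fractions: tuple = (0.5, 0.8)  # assumed alert thresholds
    spent_usd: float = 0.0
    alerts_sent: list = field(default_factory=list)

    def record_run(self, run_cost_usd: float) -> bool:
        """Record a run's cost; return False (block the run) if it would exceed the cap."""
        if self.spent_usd + run_cost_usd > self.cap_usd:
            return False
        self.spent_usd += run_cost_usd
        for fraction in self.alert_fractions:
            if self.spent_usd >= fraction * self.cap_usd and fraction not in self.alerts_sent:
                # A real system would notify by email or chat here.
                self.alerts_sent.append(fraction)
        return True


budget = Budget(cap_usd=100.0)
print(budget.record_run(40.0), budget.alerts_sent)  # True []
print(budget.record_run(45.0), budget.alerts_sent)  # True [0.5, 0.8]
print(budget.record_run(20.0), budget.spent_usd)    # False 85.0
```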

The platform supports TypeScript and Python SDKs, REST APIs, and webhooks for custom development. Native connectors integrate seamlessly with CRM, ERP, and ITSM systems, as well as data warehouses. It also supports vector databases for Retrieval-Augmented Generation (RAG) workflows. After deployment, Vellum provides code snippets that allow teams to call workflows directly in production, eliminating the need to store prompts in local codebases. Deployment options include cloud, private VPC, on-premises environments, and even air-gapped setups for maximum security.

Zapier connects over 8,000 apps and supports more than 30,000 actions, making it a go-to platform for businesses of all sizes. By late 2025, it had powered over 350 million AI tasks for 3.4 million companies. Its appeal lies in making AI automation simple for non-technical users while still meeting the demands of large enterprises. A unified dashboard lets users manage a variety of AI tools in one place.

Zapier integrates seamlessly with leading LLMs like ChatGPT, Claude, and Gemini, as well as Grok by xAI, Mistral AI, and DeepSeek. It also supports specialized tools like AssemblyAI and Deepgram for speech-to-text tasks, Hume AI for emotion-based applications, and Pinecone for vector database management. The built-in AI by Zapier tool provides direct access to these models within workflows, eliminating the hassle of managing API keys. A standout feature is the Model Context Protocol (MCP), which allows external AI tools like ChatGPT, Claude, and Cursor to interact with Zapier’s extensive app library.

In 2025, Marcus Saito highlighted how Zapier’s automation saved his team 2,200 workdays each month and resolved 28% of IT tickets, showcasing the platform’s efficiency.

Zapier ensures compliance with SOC 2 Type II and SOC 3 standards, as well as GDPR and CCPA regulations. Enterprise users benefit from features like a model training opt-out option, which protects company data from being used to train third-party AI models. Administrative tools include centralized access management, detailed permission settings, audit trails, and single sign-on via SAML 2.0.

For example, Megan Tsang, Grammarly’s Marketing Operations Manager, implemented Zapier workflows in September 2025, cutting lead sync errors by 87% and saving the Support Operations team six hours daily.

Zapier uses a task-based billing model, charging only for successfully completed workflow actions. Users are notified when approaching plan limits, and a pay-as-you-go option is available at 1.25× the base cost. Tools like Zapier Tables, Forms, Filter, Formatter, Paths, and Looping do not consume tasks, while MCP tool calls count as two tasks. Pricing starts with a Free tier ($0/month for 100 tasks), followed by Professional ($19.99/month billed annually), Team ($69/month), and custom Enterprise plans.
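The billing rules above can be expressed as a small counting function. This sketch is based only on the rules described in this section: the step-type labels and the per-task base price passed to `overage_cost` are hypothetical, and it assumes the 1.25× pay-as-you-go rate applies only to tasks beyond the plan allowance.

```python
# Step types that, per the description above, do not consume tasks.
FREE_STEP_TYPES = {"tables", "forms", "filter", "formatter", "paths", "looping"}
PAYG_MULTIPLIER = 1.25  # pay-as-you-go rate relative to base cost


def tasks_for_run(steps: list) -> int:
    """Count billable tasks for one run; each step is a (step_type, succeeded) pair."""
    total = 0
    for step_type, succeeded in steps:
        if not succeeded or step_type in FREE_STEP_TYPES:
            continue  # failed steps and built-in utility steps are not billed
        total += 2 if step_type == "mcp_tool_call" else 1
    return total


def overage_cost(tasks_used: int, plan_tasks: int, base_cost_per_task: float) -> float:
    """Assumed cost of tasks beyond the plan allowance at the pay-as-you-go rate."""
    extra = max(0, tasks_used - plan_tasks)
    return extra * base_cost_per_task * PAYG_MULTIPLIER


run = [("filter", True), ("action", True), ("mcp_tool_call", True), ("action", False)]
print(tasks_for_run(run))  # 3
```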

In 2025, Tyler Diogo, Operations Manager at Arden Insurance Services, reported automating over 34,000 work hours using Zapier, resulting in $500,000 in annual overhead savings.

Zapier’s ecosystem includes over 8,000 apps and nearly 500 AI-specific integrations. It supports three workflow types: standard "Zaps" for straightforward automation, "Agents" for tasks requiring decision-making, and "Chatbots" for customer interactions. AI steps can draw context from sources like Google Drive, Notion, and Confluence.

Ben Leone, CTO of Contractor Appointments, credited Zapier with a $300,000 annual revenue boost from automating 80-90% of lead handling. Additionally, the Workflow API allows developers to embed Zapier’s integrations directly into their own products.

Next, we’ll look at another platform pushing the boundaries of AI automation.

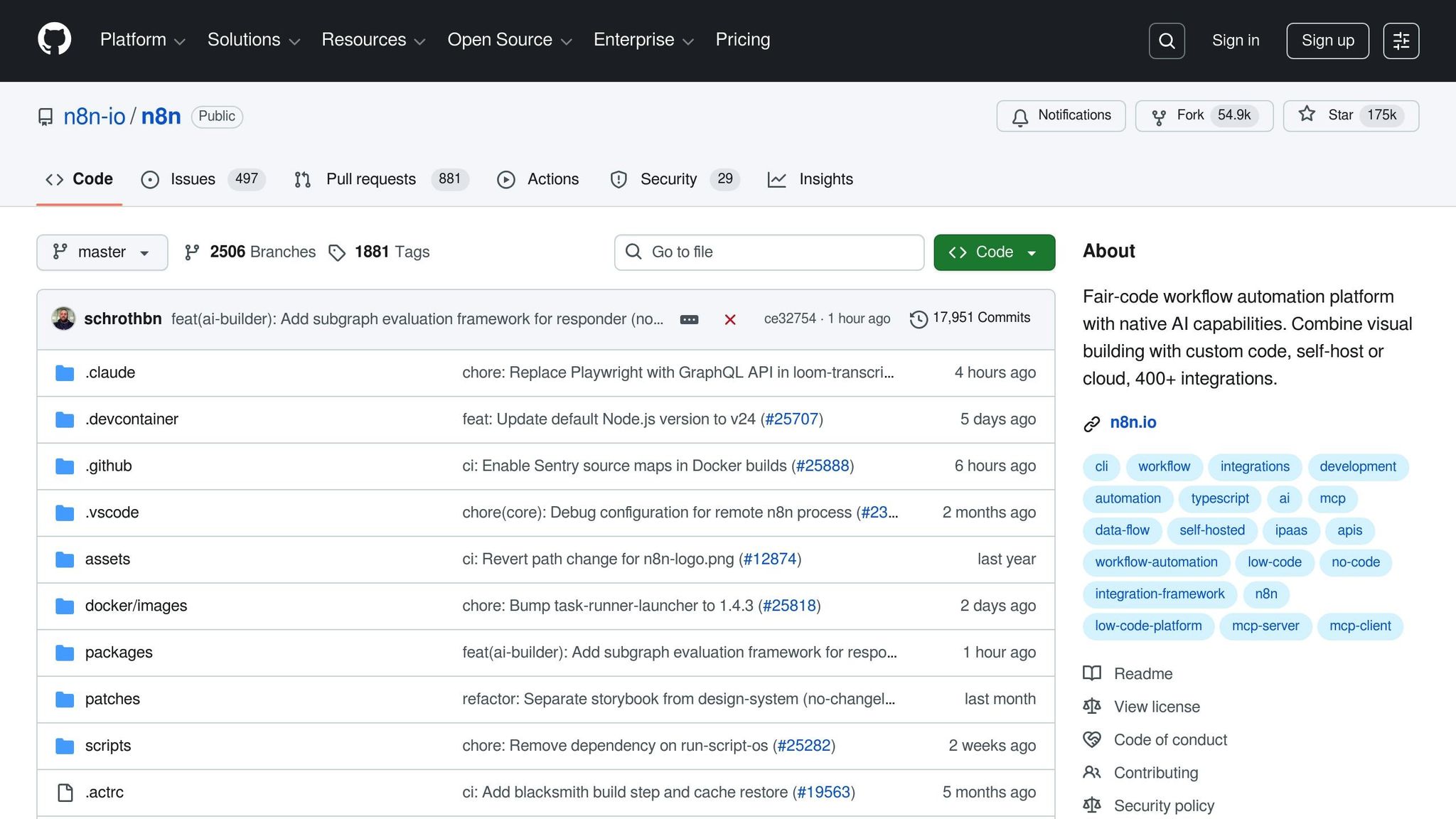

n8n stands out as a developer-first automation platform, offering technical teams complete control over their AI workflows. With over 174,000 stars on GitHub, it has become one of the most popular open-source automation tools. The platform supports up to 220 workflow executions per second on a single instance, making it ideal for businesses managing high-volume AI tasks. Its self-hosting option is particularly valuable for industries like healthcare and finance, where strict data residency compliance is non-negotiable.

n8n integrates with major LLM providers such as OpenAI, Anthropic (Claude), Google Gemini, Cohere, and Hugging Face. Its AI nodes are built on LangChain, enabling modular AI applications with nodes for vector stores like Pinecone, Milvus, Supabase, Qdrant, and Weaviate. The platform also supports multi-agent orchestration through the Model Context Protocol (MCP), allowing a Manager Agent to delegate specialized tasks to Worker Agents (e.g., Research or Coder). For those looking to run local models, n8n supports Ollama. Developers can also execute native code in JavaScript or Python, leveraging libraries like Pandas or NumPy directly within workflows.

Luka Pilic, Marketplace Tech Lead at StepStone, shared: "We've sped up our integration of marketplace data sources by 25X. It takes me 2 hours max to connect up APIs and transform the data we need. You can't do this that fast in code."

n8n is SOC 2 audited and offers robust security features, including Role-Based Access Control (RBAC), audit logs, and SSO via SAML/LDAP. It integrates with external secret managers such as AWS Secrets Manager, HashiCorp Vault, and Google Cloud Secret Manager, so API keys don't need to be hard-coded. A standout feature is Human-in-the-Loop (HITL), which lets workflows pause for human approval through platforms like Slack or custom forms before the AI takes its final action. This provides oversight and governance for autonomous agents.
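The HITL pattern is essentially a gate between an AI's proposed action and its execution. In this hypothetical sketch, `approve` stands in for whatever channel collects the human decision (a Slack message or a form in n8n's case); it is not n8n's API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class PendingAction:
    description: str
    execute: Callable[[], str]  # the side effect the AI wants to perform


def run_with_approval(action: PendingAction, approve: Callable[[str], bool]) -> str:
    """Hold the final action until a human decision arrives, then execute or reject it."""
    if approve(action.description):
        return action.execute()
    return f"rejected: {action.description}"


refund = PendingAction("refund order #1042", execute=lambda: "refund issued")
print(run_with_approval(refund, approve=lambda desc: False))  # rejected: refund order #1042
```

Keeping the side effect inside `execute` guarantees nothing happens until the approval callback returns.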

Dennis Zahrt, Director of Global IT Service Delivery at Delivery Hero, highlighted: "We have seen drastic efficiency improvements since we started using n8n for user management. It's incredibly powerful, but also simple to use." His team saved 200 hours monthly by implementing a single ITOps workflow for user management.

n8n employs a per-execution billing model, where a single execution can include unlimited steps and process thousands of items in a loop for the same price. Pricing starts at $20/month (billed annually) for 2,500 executions on the Starter plan, $50/month for 10,000 executions on the Pro plan, and $800/month for 40,000 executions on the Business plan. Enterprise plans are available with custom pricing. Users receive weekly usage emails and alerts when they reach 80% of their quota. For high-volume businesses, self-hosting the free Community Edition can reduce operational costs by 70–90%, replacing variable subscriptions with fixed infrastructure expenses.
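Because n8n counts whole executions rather than individual steps, estimating a plan is simple arithmetic. The sketch below uses the plan quotas quoted above; the workload figures and the 30-day month are assumptions, and `step_based_units` only shows what the same workload would total under a hypothetical per-step billing scheme.

```python
from typing import Optional

# Monthly execution quotas quoted above.
PLAN_QUOTAS = {"Starter": 2_500, "Pro": 10_000, "Business": 40_000}


def monthly_executions(runs_per_day: int, days: int = 30) -> int:
    """Each workflow run is one execution, however many steps or looped items it has."""
    return runs_per_day * days


def smallest_plan(executions: int) -> Optional[str]:
    """Return the lowest-quota plan covering the workload, or None (Enterprise/self-host)."""
    for name, quota in sorted(PLAN_QUOTAS.items(), key=lambda kv: kv[1]):
        if executions <= quota:
            return name
    return None


def quota_alert(used: int, quota: int) -> bool:
    """True once usage reaches 80% of the quota (integer math avoids float rounding)."""
    return used * 5 >= quota * 4


def step_based_units(runs: int, steps: int, items: int) -> int:
    """Units the same workload would count under a hypothetical per-step, per-item scheme."""
    return runs * steps * items


executions = monthly_executions(runs_per_day=100)       # 3,000 executions
print(smallest_plan(executions))                         # Pro
print(step_based_units(executions, steps=5, items=200))  # 3000000
```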

With over 1,300 native integrations and a library of 1,700+ starter templates, n8n simplifies project launches. It also lets users connect to any API by pasting cURL requests. The platform supports three workflow types: standard automations, AI Agents for decision-making, and Wait Nodes for human approval. For high-throughput environments, the Queue Mode uses Redis to distribute workflow executions across multiple workers, enabling horizontal scaling. Git-based version control ensures smooth transitions from staging to production and allows rollbacks if needed, especially when AI agents behave unpredictably.
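Queue Mode follows a classic producer/worker pattern. As an in-process stand-in for Redis, this sketch uses Python's `queue.Queue` with worker threads; doubling each job ID is a placeholder for actually running a workflow, and none of this is n8n's code.

```python
import queue
import threading


def run_queue_mode(execution_ids: list, num_workers: int) -> dict:
    """Distribute executions across workers through a shared FIFO queue."""
    jobs = queue.Queue()
    results = {}
    lock = threading.Lock()

    def worker() -> None:
        while True:
            job = jobs.get()
            if job is None:  # sentinel: no more work for this worker
                break
            outcome = job * 2  # placeholder for actually running the workflow
            with lock:
                results[job] = outcome

    workers = [threading.Thread(target=worker) for _ in range(num_workers)]
    for w in workers:
        w.start()
    for execution_id in execution_ids:
        jobs.put(execution_id)
    for _ in workers:
        jobs.put(None)  # one stop signal per worker, queued after all real jobs
    for w in workers:
        w.join()
    return results


print(run_queue_mode(list(range(6)), num_workers=3))
```

Adding capacity means starting more workers against the same queue, which is the horizontal-scaling idea described above.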

Workato is an automation platform designed for enterprises, offering a balance of strict control and AI-driven innovation. Its "Bring Your Own LLM" (BYOLLM) approach allows businesses to integrate pre-approved models like OpenAI, Anthropic, and AWS Bedrock directly into its Agent Studio environment. Through the MCP Gateway, Workato ensures secure interactions between AI applications and business systems. Every interaction is authenticated and authorized before execution, providing a reliable framework for AI operations.

Workato supports top-tier AI models and offers an extensive 200,000+ token context window for models such as Claude 3.5 Sonnet. Its Agent Studio enables users to create "Genies" - AI agents capable of handling complex, multi-step tasks. These agents leverage a centralized Agent Knowledge Base to support Retrieval-Augmented Generation (RAG). The MCP 100 package includes over 100 customizable servers, enabling seamless connections to tools like Jira, Slack, Salesforce, and Google Directory in just minutes. Beyond AI workflows, Workato also manages high-volume data operations for platforms like Snowflake and Databricks, and supports B2B integrations through modern EDI, covering over 12,000 apps.

Workato integrates strong governance features to ensure secure and efficient AI workflow management. It employs Verified User Access, requiring agents to operate under specific end-user OAuth credentials, which limits access to authorized data only. To manage risk, the platform uses "Zones of Autonomy" (Green, Yellow, Red, Black), categorizing workflows by risk level. For instance, Red zones demand pre-approval for critical operations, while Black zones entirely restrict agent activity. Every action is logged, creating detailed audit trails that support compliance. The platform also holds SOC 2 Type II, ISO 27001, and PCI DSS certifications, supports GDPR compliance, and enforces data residency requirements across regions such as the US, EMEA, and APAC.
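The zone model maps naturally onto a small policy function with an audit log. This sketch follows the rules stated above for Red and Black zones; treating Green as fully autonomous and Yellow as "run and notify" is an assumption, as are the action names.

```python
from enum import Enum


class Zone(Enum):
    GREEN = "green"
    YELLOW = "yellow"
    RED = "red"
    BLACK = "black"


AUDIT_LOG = []  # every decision is recorded, whatever the outcome


def decide(action: str, zone: Zone, pre_approved: bool = False) -> str:
    """Map an agent action to an outcome according to its zone of autonomy."""
    if zone is Zone.BLACK:
        outcome = "blocked"  # agents may never act here
    elif zone is Zone.RED and not pre_approved:
        outcome = "needs_approval"  # critical operations require sign-off first
    elif zone is Zone.YELLOW:
        outcome = "run_and_notify"  # assumption: act, but alert an owner
    else:
        outcome = "run"  # Green, or Red with pre-approval
    AUDIT_LOG.append((action, zone.value, outcome))
    return outcome


print(decide("delete customer records", Zone.RED))  # needs_approval
print(decide("delete customer records", Zone.RED, pre_approved=True))  # run
```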

Nicole Ike from Workato's Product Hub remarked: "Workato Enterprise MCP is the foundation for the agentic era, providing the context, trust, and accuracy that production AI deployments require."

Workato follows a usage-based pricing model, combining a fixed platform fee with scalable usage fees. It offers four editions - Standard, Business, Enterprise, and Workato One - each tailored to different levels of functionality. A unified "common billing unit" applies across all features, allowing users to pay only for what they use and allocate resources as needed. Rate limits for AI connector actions in direct customer workspaces are set at 60 requests per minute, which keeps throughput predictable.
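A limit of 60 requests per minute can be respected client-side with a sliding-window limiter, so a workflow backs off before the platform rejects calls. This is a generic sketch, not Workato's implementation; callers pass a timestamp such as `time.monotonic()` so the logic stays easy to test.

```python
from collections import deque


class SlidingWindowLimiter:
    """Allow at most `limit` calls in any rolling `window_s`-second window."""

    def __init__(self, limit: int = 60, window_s: float = 60.0) -> None:
        self.limit = limit
        self.window_s = window_s
        self.calls = deque()  # timestamps of accepted calls, oldest first

    def allow(self, now: float) -> bool:
        # Forget calls that have slid out of the window.
        while self.calls and now - self.calls[0] >= self.window_s:
            self.calls.popleft()
        if len(self.calls) < self.limit:
            self.calls.append(now)
            return True
        return False


limiter = SlidingWindowLimiter()  # 60 requests per minute
decisions = [limiter.allow(now=0.5 * i) for i in range(61)]
print(decisions.count(True), decisions[-1])  # 60 False
print(limiter.allow(now=60.0))  # True: the call made at t=0 has expired
```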

Make underwent a significant transformation in 2026, moving from basic automation to what’s now called Agentic Automation. This leap allows AI agents on the platform to reason and adapt while processing multimodal inputs like images, audio, and complex PDFs. Its visual drag-and-drop canvas simplifies even the most intricate branching logic with advanced tools like "If-Else" and "Merge", eliminating the need for tedious workarounds. With access to over 2,500 native integrations and support for 500+ AI models via aggregators like CometAPI, Make has become a cost-efficient solution for managing sophisticated AI workflows.

Make aligns with the needs of modern AI workflows by integrating top-tier AI models. It supports major players like OpenAI, Google Gemini, and Anthropic Claude through native modules while also acting as a Model Context Protocol (MCP) server. This feature allows external models, including custom GPTs, to securely leverage Make scenarios for executing real-world tasks. The platform’s Maia AI Builder takes natural language prompts and seamlessly converts them into fully functional workflows, making it easier for users to create complex automations. A new Reasoning Panel introduced in 2026 brings added transparency, showing users the logic behind AI-driven actions and decisions. Additionally, Make’s advanced data tools - such as iterators, aggregators, and array functions - enable bulk data processing without requiring custom code.

Make’s credit-based pricing system, launched in 2026, ensures users get maximum value for their operations. Standard actions cost 1 credit, while more resource-intensive AI tasks consume additional credits. This system delivers 3–5x more operations per dollar compared to traditional pricing models. The Core plan starts at $10.59 per month, offering 10,000 operations with unlimited scenarios. For those just starting, the Free tier provides 1,000 operations monthly. However, scheduled triggers come with a "polling tax", where each data check uses credits - even if no new data is found. For example, a scenario running every minute consumes 43,200 credits monthly just for trigger checks. Using native AI modules without personal API keys adds a convenience fee, and complex prompts can sometimes use hundreds of credits per execution. To offer flexibility, unused credits on paid plans roll over for one month, accommodating seasonal variations in workload.
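The 43,200 figure comes from one trigger check per minute over a 30-day month (60 × 24 × 30). The helper below reproduces that arithmetic; it assumes each check costs one credit and that a month has 30 days.

```python
MINUTES_PER_DAY = 24 * 60


def polling_credits_per_month(
    interval_minutes: int, days: int = 30, credits_per_check: int = 1
) -> int:
    """Credits spent on trigger checks alone, whether or not new data arrives."""
    checks = (days * MINUTES_PER_DAY) // interval_minutes
    return checks * credits_per_check


print(polling_credits_per_month(1))   # 43200
print(polling_credits_per_month(15))  # 2880
```

At a one-minute interval, trigger checks alone exceed the Core plan's 10,000-operation allowance several times over; a 15-minute interval brings them down to 2,880, and a webhook trigger avoids the idle cost entirely.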

The platform’s extensive integration library, paired with its credit-based pricing, ensures efficient and comprehensive workflows. Make offers over 3,000 pre-built app connections, including more than 400 AI-specific integrations with tools like OpenAI, Anthropic Claude, Google Vertex AI, and Mistral AI. Its MCP support enables seamless two-way communication, allowing external AI models to trigger Make scenarios and vice versa. The Scenario Run Replay feature, introduced in 2026, streamlines debugging by letting developers re-run failed executions using the original trigger data. Additionally, Make Grid visualizes interconnected automations, helping organizations pinpoint bottlenecks and latency issues across their scenarios. Unlike polling triggers, webhooks execute in real time without consuming idle credits, making them a more resource-efficient option.

AI Model Usage Platform Comparison 2026: Features, Pricing, and Integrations

Prompts.ai brings together over 35 AI models within a single, secure platform, eliminating the complexity of managing multiple tools. It offers real-time cost tracking, enabling users to cut AI-related expenses by as much as 98%, while its strong governance features ensure compliance and protect data.

As mentioned earlier, Prompts.ai stands out in areas such as model versatility, transparent cost management, and strict governance. However, when choosing the right solution, organizations should weigh factors like automation preferences, integration capabilities, and deployment requirements. Think about whether your main focus is broad model access, precise cost oversight, or straightforward implementation to find the best fit for your needs.

Selecting the right AI platform in 2026 hinges on your organization's priorities. Prompts.ai brings together over 35 models with transparent token-based pricing and enterprise-grade governance. Teams adopting unified platforms often see dramatic cost savings while retaining complete oversight of every token used.

Prompts.ai also ensures that workflows integrate effortlessly with your existing tech stack. For organizations prioritizing workflow automation, its streamlined integrations and secure orchestration meet the needs of enterprises with strict data sovereignty requirements.

The AI landscape has shifted from simple chatbots to fully integrated, high-performance systems - a transformation that Prompts.ai is designed to support. As Jorick van Weelie from Datanorth.ai puts it, "The differentiator is no longer 'who uses AI,' but who has successfully orchestrated these tools into a cohesive, high-performance stack." This underscores the importance of aligning your platform choice with your team's technical expertise, budget, and compliance goals.

Start by auditing your workflows to pinpoint repetitive, high-volume tasks that AI can manage. Avoid using expensive reasoning models for basic queries to conserve resources. Instead, match the right tool to the task, whether your focus is broad model access, cost efficiency, or automation.

Prompts.ai stands out with its model versatility, clear pricing, and seamless integration capabilities. Organizations that thrive in 2026 will be those that transition from experimentation to governed, repeatable AI workflows that drive measurable results.

To select the most suitable AI model in 2026, start by identifying your specific needs, whether it's for tasks like writing, coding, or automating processes. For tasks with minimal risk, prioritize models that are quicker and more budget-friendly. For critical or high-risk applications, choose more reliable models and incorporate human oversight when necessary. Evaluate models based on benchmark scores, cost, and performance. Establish clear evaluation standards, such as accuracy and consistency, to ensure the model aligns seamlessly with your workflow requirements.
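One way to apply these criteria is to set a minimum accuracy bar per risk tier, pick the cheapest model that clears it, and flag high-risk tasks for human review. The candidate names, scores, prices, and thresholds below are hypothetical; in practice the accuracy numbers would come from your own evaluation set.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    accuracy: float  # share of your evaluation set answered correctly (0 to 1)
    cost_per_1k_tokens: float


# Hypothetical minimum accuracy per risk tier.
MIN_ACCURACY = {"low": 0.80, "high": 0.95}


def choose_model(candidates: list, risk: str) -> tuple:
    """Pick the cheapest model that clears the tier's bar; flag high-risk work for review."""
    eligible = [c for c in candidates if c.accuracy >= MIN_ACCURACY[risk]]
    if not eligible:
        raise ValueError(f"no candidate meets the {risk}-risk accuracy bar")
    best = min(eligible, key=lambda c: c.cost_per_1k_tokens)
    needs_human_review = risk == "high"
    return best.name, needs_human_review


catalog = [
    Candidate("small-fast", accuracy=0.85, cost_per_1k_tokens=0.0005),
    Candidate("large-general", accuracy=0.93, cost_per_1k_tokens=0.01),
    Candidate("reasoning", accuracy=0.97, cost_per_1k_tokens=0.06),
]
print(choose_model(catalog, "low"))   # ('small-fast', False)
print(choose_model(catalog, "high"))  # ('reasoning', True)
```

This keeps expensive reasoning models reserved for tasks whose risk tier actually requires them.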

In 2026, strong governance controls play a critical role in ensuring compliance, managing risks, and maintaining transparency. Essential practices include tracking model usage in real time, managing costs effectively, and automating compliance processes. Platforms such as Prompts.ai provide centralized oversight, help meet regulatory requirements, and prepare organizations for audits. Notable features include multi-LLM orchestration, SOC 2 Type II compliance, and cost tracking. These capabilities keep AI workflows aligned with organizational policies and adaptable to changing regulations, all while minimizing the need for manual intervention.

To keep AI expenses under control, consider strategies such as prompt caching, model routing, and infrastructure optimization, all of which can lead to substantial savings. Leveraging platforms like Prompts.ai, which offer pay-as-you-go orchestration and workflow management, can simplify cost management even further. Additionally, selecting budget-friendly models, fine-tuning performance across the AI stack, and closely monitoring pricing can help strike the right balance between performance and scalability while avoiding extra costs.
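As an illustration of caching, the `ResponseCache` sketch below memoizes exact-match responses at the application layer, keyed on a SHA-256 hash of the model name and prompt. This is a simpler technique than provider-side prompt caching (which reuses a repeated prompt prefix and is configured through each provider's API), and `call_model` is a hypothetical stand-in for a real client.

```python
import hashlib
from typing import Callable


class ResponseCache:
    """Exact-match cache: identical (model, prompt) pairs reuse the stored response."""

    def __init__(self, call_model: Callable[[str, str], str]) -> None:
        self.call_model = call_model
        self.store = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def key(model: str, prompt: str) -> str:
        # A separator byte keeps ("ab", "c") and ("a", "bc") from colliding.
        return hashlib.sha256(f"{model}\x00{prompt}".encode("utf-8")).hexdigest()

    def complete(self, model: str, prompt: str) -> str:
        k = self.key(model, prompt)
        if k in self.store:
            self.hits += 1
            return self.store[k]
        self.misses += 1
        response = self.call_model(model, prompt)
        self.store[k] = response
        return response


cache = ResponseCache(call_model=lambda model, prompt: f"{model}: {prompt.upper()}")
cache.complete("model-a", "summarize Q3 churn")
cache.complete("model-a", "summarize Q3 churn")  # served from cache, no second model call
print(cache.hits, cache.misses)  # 1 1
```

Exact-match caching only pays off for repeated identical requests, such as recurring report prompts; it does nothing for prompts that differ by even one character.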