AI orchestration platforms simplify the management of diverse workflows, models, and tools at scale. They help businesses cut costs, automate processes, and maintain governance. Without them, teams face challenges such as tool sprawl, unpredictable spending, and data risks. This guide covers 7 leading platforms to help you find the best fit for your needs.

Each platform has distinct strengths. To choose the right one, assess your team's technical skills, compliance needs, and budget. Testing platforms with sample workflows can help identify the best fit.

Prompts.ai is a platform built for enterprise-grade AI orchestration. It brings together more than 35 leading AI models in a single secure, streamlined interface: large language models such as GPT-5, Claude, Llama, Gemini, and Grok-4, along with generative media models such as Flux Pro and Kling. Centralized access removes the hassle of managing multiple subscriptions, logins, and billing systems. Businesses can consolidate their AI tools while keeping full oversight and control.

The platform emphasizes cost transparency, governance, and automation. Its real-time FinOps controls track every token used across all models and tie spending directly to measurable business outcomes. According to Prompts.ai, this approach lets businesses optimize their AI usage and cut software spending by up to 98%.
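The sketch below makes per-token cost attribution concrete. It shows one way a FinOps layer might turn token counts into spend per project. It is not Prompts.ai's implementation: the model names, the per-1,000-token prices, and the `UsageEvent` structure are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens; real rates differ by provider and model.
PRICE_PER_1K_TOKENS = {"model-a": 0.010, "model-b": 0.002}


@dataclass
class UsageEvent:
    project: str  # business unit or project the call is charged to
    model: str    # hypothetical model identifier
    tokens: int   # prompt + completion tokens consumed


def cost_of(event: UsageEvent) -> float:
    """Convert a token count into a dollar cost using the price table."""
    return event.tokens / 1000 * PRICE_PER_1K_TOKENS[event.model]


def spend_by_project(events: list[UsageEvent]) -> dict[str, float]:
    """Aggregate spend per project so costs can be tied to business outcomes."""
    totals: dict[str, float] = defaultdict(float)
    for event in events:
        totals[event.project] += cost_of(event)
    return {project: round(total, 4) for project, total in totals.items()}


events = [
    UsageEvent("support-bot", "model-a", 12_000),
    UsageEvent("support-bot", "model-b", 50_000),
    UsageEvent("marketing", "model-a", 3_000),
]
print(spend_by_project(events))  # {'support-bot': 0.22, 'marketing': 0.03}
```

Grouping by project rather than by model is the design choice that lets spend be compared against each project's results.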

Beyond cost savings, Prompts.ai helps standardize AI experimentation, turning it into a repeatable, compliant process. Its governance features enforce policies, maintain complete audit trails, and secure sensitive data. These capabilities are essential in industries such as healthcare and finance.

Let's look at how Prompts.ai delivers these capabilities through its cloud-native architecture.

Prompts.ai runs as a cloud-based SaaS platform that handles updates and hardware automatically. Users access its suite of AI models through a web interface, while the platform takes care of hosting, versioning, and performance optimization.

"An Emmy-winning creative director used to spend weeks rendering in 3D Studio and a month writing business proposals. With Prompts.ai's LoRAs and workflows, he now completes renders and proposals in a single day. No more waiting, no more stress over hardware upgrades."

- Steven Simmons, CEO & Founder

For organizations that prioritize security and data residency, Prompts.ai ensures that all workflows run in a secure environment. It enforces robust access policies, monitors usage, and generates compliance reports. Businesses can take advantage of cloud scalability without compromising their governance or security standards.

This deployment model is designed to scale effortlessly, which makes it suitable for organizations of any size.

Prompts.ai's architecture is built to support growth without adding operational overhead. Organizations can add models, users, and teams instantly, and higher-tier plans offer unlimited workspaces and unlimited collaborators. Features such as TOKN Pooling and Storage Pooling further improve resource management.

The Problem Solver plan costs $99 per month ($89 per month when billed annually). It includes 500,000 TOKN credits, unlimited workspaces, 99 collaborators, and 10 GB of cloud storage. For larger organizations, the Business AI Tools plans offer per-member pricing with pooled resources.
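The annual-billing discount is easy to quantify. The short calculation below uses the plan prices quoted above. It assumes a 12-month commitment and ignores taxes and any credit overages.

```python
# Problem Solver plan prices quoted above, in USD per month.
MONTHLY_BILLING = 99
ANNUAL_BILLING = 89
MONTHS = 12


def yearly_cost(price_per_month: int, months: int = MONTHS) -> int:
    """Total paid over `months` at a flat monthly rate."""
    return price_per_month * months


monthly_total = yearly_cost(MONTHLY_BILLING)  # 1188
annual_total = yearly_cost(ANNUAL_BILLING)    # 1068
savings = monthly_total - annual_total        # 120
savings_pct = round(savings / monthly_total * 100, 1)

print(f"Billed monthly: ${monthly_total}, billed annually: ${annual_total}, "
      f"savings: ${savings} ({savings_pct}%)")
```

At these prices, annual billing saves $120 a year, or about 10.1%.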

"I spent years juggling high-end productions and tight deadlines. As an award-winning visual AI director, I now use Prompts.ai to prototype ideas, refine visuals, and direct scenes with speed and precision, turning ambitious concepts into stunning realities faster than ever."

- Johannes Vorillon, AI Director

The platform's pay-as-you-go TOKN credit system turns fixed costs into flexible, usage-based spending that tracks actual needs.
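Pooled, usage-based credits can be pictured as one shared balance that every team member draws down. The sketch below is a hypothetical model of that idea. The `CreditPool` class, its method names, and the credit amounts are assumptions, and the sketch does not reflect how Prompts.ai actually meters TOKN credits.

```python
class CreditPool:
    """A shared credit balance drawn down by several team members.

    Hypothetical model of pooled, pay-as-you-go credits; not the Prompts.ai API.
    """

    def __init__(self, balance: int):
        self.balance = balance
        self.usage_by_member: dict[str, int] = {}

    def charge(self, member: str, credits: int) -> None:
        """Deduct credits for one request and attribute them to a member."""
        if credits < 0:
            raise ValueError("credits must be non-negative")
        if credits > self.balance:
            # Refuse the charge rather than letting the pool go negative.
            raise RuntimeError(
                f"insufficient credits: {self.balance} left, {credits} requested"
            )
        self.balance -= credits
        self.usage_by_member[member] = self.usage_by_member.get(member, 0) + credits


pool = CreditPool(500_000)
pool.charge("alice", 120_000)
pool.charge("bob", 80_000)
print(pool.balance, pool.usage_by_member)  # 300000 {'alice': 120000, 'bob': 80000}
```

Per-member attribution keeps one shared pool from hiding who is actually consuming credits.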

Prompts.ai tackles tool sprawl by unifying more than 35 AI models and tools in a single interface. Teams can compare model performance side by side and pick the best tool for each task without switching platforms. Its orchestration layer automatically routes requests between models based on criteria such as cost, performance, or compliance. This makes it easier to build workflows that combine several models.
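Rule-based routing of this kind can be sketched as a filter-then-minimize step. First, discard models that fail the quality or compliance constraints. Then pick the cheapest model that remains. The model names, prices, quality scores, and region tags below are hypothetical, and real routing layers may also weigh latency and other criteria.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str
    cost_per_1k_tokens: float  # USD (hypothetical)
    quality_score: float       # 0..1 from internal benchmarks (hypothetical)
    regions: frozenset[str]    # regions where the provider processes data


def route(models: list[ModelProfile], min_quality: float, required_region: str) -> ModelProfile:
    """Pick the cheapest model that meets the quality bar and data-residency rule."""
    eligible = [
        m for m in models
        if m.quality_score >= min_quality and required_region in m.regions
    ]
    if not eligible:
        raise LookupError("no model satisfies the routing constraints")
    return min(eligible, key=lambda m: m.cost_per_1k_tokens)


CATALOG = [
    ModelProfile("large-model", 0.030, 0.95, frozenset({"us", "eu"})),
    ModelProfile("mid-model", 0.008, 0.85, frozenset({"us"})),
    ModelProfile("small-model", 0.001, 0.70, frozenset({"us", "eu"})),
]

print(route(CATALOG, min_quality=0.8, required_region="us").name)  # mid-model
print(route(CATALOG, min_quality=0.8, required_region="eu").name)  # large-model
```

The compliance constraint is applied as a hard filter rather than a weighted score, so cost can never override a residency rule.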

For businesses with existing technology stacks, Prompts.ai acts as a central hub that connects smoothly to various AI providers. It handles authentication, rate limiting, and error handling across all models. Development teams no longer have to maintain integration code and can focus on building AI-driven features.
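Rate-limit handling in a hub like this often comes down to retrying with exponential backoff. The minimal sketch below assumes a hypothetical provider client that raises `RateLimitError` when throttled. The `sleep` parameter is injectable, so the retry schedule can be tested without actually waiting.

```python
import random
import time


class RateLimitError(Exception):
    """Raised by a (hypothetical) provider client when it is throttled."""


def call_with_backoff(call, max_attempts=5, base_delay=0.5, sleep=time.sleep):
    """Retry `call` on RateLimitError with exponential backoff and jitter."""
    for attempt in range(max_attempts):
        try:
            return call()
        except RateLimitError:
            if attempt == max_attempts - 1:
                raise  # give up after the final attempt
            # Delay doubles each attempt (0.5 s, 1 s, 2 s, ...) plus random jitter
            # so many clients do not retry in lockstep.
            delay = base_delay * (2 ** attempt) + random.uniform(0, base_delay)
            sleep(delay)
```

Only rate-limit errors are retried. Other exceptions propagate immediately, because retrying a malformed request would just fail again.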

Prompts.ai builds governance into every workflow, which addresses the compliance needs of regulated industries. It keeps detailed audit trails that record which models were used, by whom, for what purpose, and at what cost. Administrators can set model permissions, enforce spending limits, and require approvals for sensitive tasks. These controls ensure transparency and compliance with data protection laws and internal policies.
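Permissions, spending limits, and audit trails can be combined into a single authorization step. This illustrative sketch assumes per-user model allow-lists and monthly caps as its data structures. It is not the Prompts.ai governance API, and it leaves out approval workflows for brevity.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class GovernancePolicy:
    allowed_models: dict[str, set[str]]  # user -> models they may call (hypothetical)
    spend_limit_usd: dict[str, float]    # user -> monthly spending cap (hypothetical)
    spent_usd: dict[str, float] = field(default_factory=dict)
    audit_log: list[dict] = field(default_factory=list)

    def authorize(self, user: str, model: str, purpose: str, est_cost: float) -> bool:
        """Allow a request only if the model is permitted and the cap is not exceeded."""
        permitted = model in self.allowed_models.get(user, set())
        spent = self.spent_usd.get(user, 0.0)
        within_budget = spent + est_cost <= self.spend_limit_usd.get(user, 0.0)
        allowed = permitted and within_budget
        if allowed:
            self.spent_usd[user] = spent + est_cost
        # Every request, allowed or denied, is logged: who, which model, why, how much.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "model": model,
            "purpose": purpose,
            "cost_usd": est_cost,
            "allowed": allowed,
        })
        return allowed


policy = GovernancePolicy(allowed_models={"ana": {"model-a"}}, spend_limit_usd={"ana": 10.0})
print(policy.authorize("ana", "model-a", "summarize contract", 4.0))  # True
print(policy.authorize("ana", "model-b", "draft email", 1.0))         # False
```

Logging denied requests alongside allowed ones is deliberate: refused attempts are often exactly what auditors want to see.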

A centralized governance dashboard gives real-time insight into all AI activity, so policy violations or unusual spending patterns can be spotted before they escalate.

Data security is a cornerstone of Prompts.ai's design. Sensitive information processed through its workflows stays under the organization's control, with encryption, access policies, and data-handling rules applied automatically. Real-time FinOps controls let finance teams set budgets and receive alerts as spending approaches thresholds. They can also generate detailed cost reports tied to specific business units or projects. These controls reinforce the platform's focus on centralized management and financial accountability.
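Threshold alerts are easiest to reason about when each alert fires only once, at the moment spending crosses it. The minimal sketch below assumes hypothetical thresholds of 50%, 80%, and 100% of a project budget.

```python
def newly_crossed(budget: float, before: float, after: float,
                  thresholds=(0.5, 0.8, 1.0)) -> list[float]:
    """Return the thresholds (fractions of budget) crossed by the latest charge only."""
    return [t for t in sorted(thresholds) if before < budget * t <= after]


class ProjectBudget:
    """Tracks spend for one project and records one alert per crossed threshold."""

    def __init__(self, name: str, budget_usd: float, thresholds=(0.5, 0.8, 1.0)):
        self.name = name
        self.budget = budget_usd
        self.thresholds = thresholds
        self.spent = 0.0
        self.alerts: list[str] = []

    def record(self, cost_usd: float) -> None:
        before = self.spent
        self.spent += cost_usd
        for t in newly_crossed(self.budget, before, self.spent, self.thresholds):
            self.alerts.append(f"{self.name}: {t:.0%} of budget used")


marketing = ProjectBudget("marketing", 1_000)
marketing.record(400)  # 40% used: no alert yet
marketing.record(450)  # 85% used: crosses both 50% and 80%
print(marketing.alerts)
```

Comparing spend before and after each charge means a single large charge that jumps past several thresholds still produces every alert.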

Apache Airflow offers a developer-focused way to manage AI workflows. It is a solid alternative to cloud-first platforms such as Prompts.ai.

This open-source tool orchestrates AI workflows by defining, scheduling, and monitoring tasks in Python. It is particularly well suited to operations such as machine learning training, AI deployments, and retrieval-augmented generation (RAG) pipelines.

At the heart of Airflow are directed acyclic graphs (DAGs), which describe the order of tasks and the dependencies between them. This structure appeals to teams that prioritize precision, control, and reproducibility in their workflows.
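Airflow itself declares dependencies between operators inside a `DAG` object, for example with `task_a >> task_b`. The standard-library sketch below shows only the underlying idea. Given each task's upstream dependencies, a topological sort produces a valid execution order and groups tasks that could run in parallel. The task names describe a hypothetical ML and RAG pipeline.

```python
from graphlib import CycleError, TopologicalSorter

# Each task maps to the set of upstream tasks it depends on (hypothetical pipeline).
pipeline = {
    "ingest": set(),
    "validate_data": {"ingest"},
    "build_features": {"validate_data"},
    "embed_documents": {"validate_data"},
    "train_model": {"build_features"},
    "build_index": {"embed_documents"},
    "deploy": {"train_model", "build_index"},
}


def execution_batches(dag: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into batches; tasks in the same batch have no mutual dependencies."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # raises CycleError if the graph is not acyclic
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        sorter.done(*ready)
    return batches


for batch in execution_batches(pipeline):
    print(batch)
```

The "acyclic" requirement is what makes an ordering possible at all: a graph where two tasks wait on each other is rejected with `CycleError` before anything runs.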

Apache Airflow has built a strong reputation, earning a 4.5/5 rating among AI orchestration platforms in 2025. It can be extended through Python libraries and custom plugins, which makes it possible to build tailored, enterprise-grade automation.

Airflow supports a variety of deployment setups and runs in both cloud-based and on-premises environments. Because it is open source, it is a cost-effective option for startups and highly skilled teams.

Airflow's architecture can scale from small projects to enterprise-scale operations. Its horizontal scaling is robust, but large-scale deployments often require specialized expertise to implement.

Thanks to its support for custom plugins and Python libraries, Airflow integrates with a wide range of tools. This adaptability makes it a strong choice for building complex AI pipelines, offering the control and flexibility that advanced orchestration requires. These capabilities make Airflow a serious contender against the other orchestration solutions covered below.

Prefect moves beyond purely developer-centric tooling toward a cloud-native solution that simplifies workflow management. Designed for flexibility and ease of use, it improves observability for teams running complex machine learning workflows. By reducing infrastructure overhead, Prefect lets organizations focus on refining their AI pipelines instead of troubleshooting technical issues.

Prefect's cloud-native setup lets teams run their AI and ML workflows on managed cloud infrastructure. This removes the need for self-hosted setups, so teams can build and optimize workflows without managing servers.

Prefect's architecture is designed to scale with your needs, from small experiments to enterprise-scale operations. It handles growing data volumes and workflow complexity, which makes it a reliable option for teams expanding their AI capabilities as demand increases. This scalability makes Prefect an effective choice for modern AI workflow orchestration.

Kubeflow provides a Kubernetes-native solution for orchestrating machine learning workflows, which makes it a natural choice for organizations that already rely on Kubernetes infrastructure. As an open-source platform, it simplifies the management of ML pipelines within the Kubernetes ecosystem and is widely recognized for its tight Kubernetes integration. Let's look at how Kubeflow's deployment model and features use Kubernetes to optimize resource management and scalability.

Kubeflow is designed to run natively on Kubernetes, providing container orchestration, scaling, and efficient resource management. It can be deployed in hybrid environments, multicloud setups, and on-premises infrastructure, so businesses can run their ML workloads wherever suits them best. Whether it is deployed through manifests or its CLI, Kubeflow plugs directly into existing Kubernetes clusters, letting teams build on their current Kubernetes expertise. Data scientists and ML engineers can therefore focus on building and refining pipelines rather than on infrastructure issues.

Because it is built on Kubernetes, Kubeflow's performance scales with the organization's needs. It supports everything from small experiments to large-scale enterprise model training. Features such as distributed training and model serving keep ML workflows portable and let them scale efficiently as demand grows.

Kubeflow's strengths go beyond operations: it is highly compatible with the most common ML frameworks. It supports TensorFlow, PyTorch, XGBoost, and custom ML frameworks. Its extensible architecture also allows custom operators, plugins, and integrations with various cloud services and storage solutions.

For example, a large enterprise running several machine learning projects across different frameworks can use Kubeflow to streamline its workflows. Data scientists can design pipelines that preprocess data, train models on distributed GPU pods, validate the results, and deploy the best-performing models to serving endpoints. Throughout this process, Kubeflow handles resource allocation, versioning, and scaling in the background. It can even automate retraining when new data becomes available, so teams can concentrate on model development.
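The validate-then-deploy step and the retraining trigger in this example come down to two small decisions. The sketch below models them in plain Python rather than with the Kubeflow Pipelines SDK. The accuracy figures, the 0.01 minimum gain, and the 20% data-growth trigger are hypothetical policy choices.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Candidate:
    name: str
    val_accuracy: float  # score on a held-out validation set


def select_for_deployment(candidates: list[Candidate], current_prod_accuracy: float,
                          min_gain: float = 0.01) -> Optional[Candidate]:
    """Return the best candidate only if it beats production by at least `min_gain`."""
    best = max(candidates, key=lambda c: c.val_accuracy)
    if best.val_accuracy >= current_prod_accuracy + min_gain:
        return best
    return None  # keep the current production model


def should_retrain(new_rows: int, rows_at_last_training: int,
                   growth_trigger: float = 0.2) -> bool:
    """Retrain once new data reaches a fraction of the last training set (hypothetical rule)."""
    return new_rows >= growth_trigger * rows_at_last_training


candidates = [Candidate("xgboost-v3", 0.91), Candidate("pytorch-v7", 0.93)]
winner = select_for_deployment(candidates, current_prod_accuracy=0.90)
print(winner.name if winner else "keep production model")  # pytorch-v7
print(should_retrain(25_000, 100_000))                     # True
```

Requiring a minimum gain over production avoids redeploying for improvements that are within validation noise.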

Kubeflow also centralizes model lifecycle management (training, deployment, monitoring, and more) in a single environment. Its tight integration with the wider Kubernetes ecosystem lets teams keep their preferred tools while maintaining consistent orchestration across all ML operations. These features make Kubeflow a powerful solution for managing scalable, consistent AI workflows.

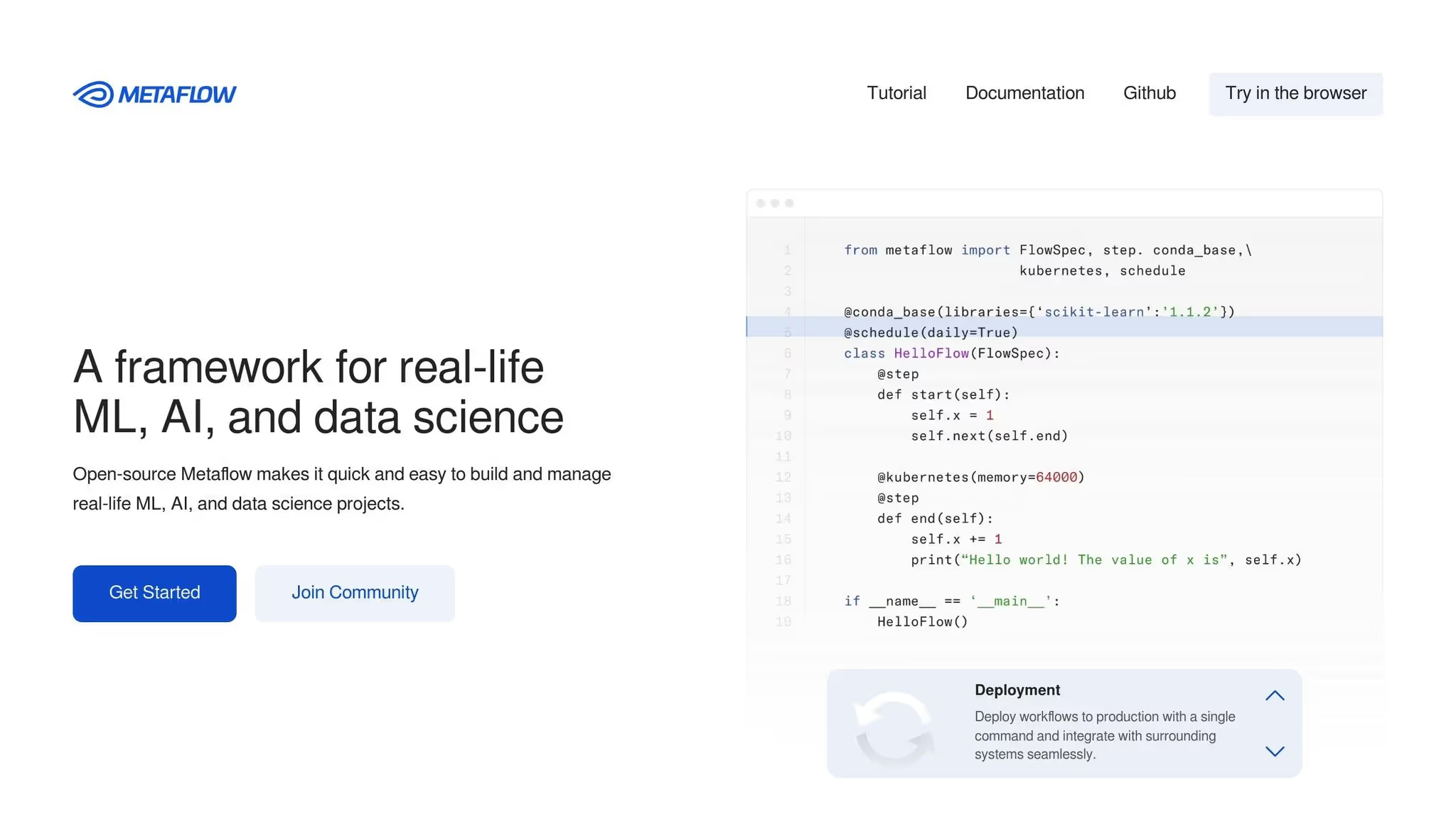

Metaflow was originally built by Netflix to tackle its own machine learning challenges, and it is designed with an emphasis on usability and practical scalability. It simplifies workflow deployment by handling the underlying complexity, ensuring a smooth transition from experimentation to real-world production.

Metaflow takes a cloud-integrated approach that makes working in cloud environments straightforward. Users can develop workflows on their local machines and move them to the cloud without reconfiguring anything, which makes the path from prototype to production hassle-free.

With its cloud integration and versioning features, Metaflow scales efficiently to handle large datasets and growing compute requirements.
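One common way to version artifacts is content addressing. Each artifact's ID is a hash of its contents, so unchanged data is stored once and every run records exactly which versions it used. This minimal in-memory sketch shows that general idea and is not Metaflow's datastore. It serializes artifacts as JSON for simplicity, and the class and method names are hypothetical.

```python
import hashlib
import json


class ArtifactStore:
    """In-memory, content-addressed store: identical artifacts share one version ID."""

    def __init__(self):
        self._blobs: dict[str, bytes] = {}
        self.runs: dict[str, dict[str, str]] = {}  # run_id -> {artifact name: version ID}

    def save(self, run_id: str, name: str, value) -> str:
        """Store a JSON-serializable value and record its version under this run."""
        payload = json.dumps(value, sort_keys=True).encode("utf-8")
        version = hashlib.sha256(payload).hexdigest()[:12]
        self._blobs.setdefault(version, payload)  # unchanged data is stored only once
        self.runs.setdefault(run_id, {})[name] = version
        return version

    def load(self, run_id: str, name: str):
        """Return the exact artifact version a given run produced."""
        return json.loads(self._blobs[self.runs[run_id][name]])


store = ArtifactStore()
v1 = store.save("run-1", "params", {"lr": 0.01, "epochs": 5})
v2 = store.save("run-2", "params", {"epochs": 5, "lr": 0.01})  # same content, new run
v3 = store.save("run-3", "params", {"lr": 0.02, "epochs": 5})
print(v1 == v2, v1 == v3)  # True False
```

Sorting keys before hashing makes the version ID depend on content, not on dictionary insertion order.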

Metaflow works smoothly with widely used data science tools, standard Python libraries, and machine learning frameworks, with no extra adapters required. It also connects to the major cloud providers, so teams can use native services for storage, compute, and specialized capabilities. This production-ready design lets businesses fit Metaflow workflows into their broader data pipelines. Together, these traits make Metaflow a key tool for unified AI orchestration in scalable, production-ready workflows.

Dagster focuses on maintaining high data quality by building in thorough checks and detailed workflow monitoring.

With its advanced type system and orchestration features, Dagster provides a reliable foundation for scaling workflows efficiently.

Dagster also includes built-in tools for validation, observability, and metadata management, which keep data quality consistent across AI systems.
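Data-quality checks of this kind can be written as small functions that run after an asset is produced and return pass/fail results with details. The sketch below uses only the standard library. The `cleaned_transactions` asset, its fields, and the three checks are hypothetical examples, not Dagster's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CheckResult:
    name: str
    passed: bool
    detail: str = ""


def cleaned_transactions(raw: list[dict]) -> list[dict]:
    """Hypothetical asset: drop rows with missing amounts and coerce amounts to float."""
    return [{**row, "amount": float(row["amount"])}
            for row in raw if row.get("amount") is not None]


def check_transactions(rows: list[dict]) -> list[CheckResult]:
    """Data-quality checks run after the asset is materialized."""
    ids = [r["id"] for r in rows]
    negatives = [r["id"] for r in rows if r["amount"] < 0]
    return [
        CheckResult("non_empty", len(rows) > 0),
        CheckResult("unique_ids", len(ids) == len(set(ids))),
        CheckResult("no_negative_amounts", not negatives,
                    f"negative ids: {negatives}" if negatives else ""),
    ]


raw = [{"id": 1, "amount": "10.5"}, {"id": 2, "amount": None}, {"id": 3, "amount": -4}]
for result in check_transactions(cleaned_transactions(raw)):
    print(result)
```

Returning structured results instead of raising on the first failure lets a run report every problem at once.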

IBM watsonx Orchestrate is designed to bring enterprise-grade AI automation to complex workflows that span multiple departments. It integrates large language models (LLMs), APIs, and enterprise applications to handle tasks securely at scale. This makes it especially useful in industries that demand strict governance, auditing, and access control.

IBM watsonx Orchestrate offers a range of deployment options to meet the needs of highly regulated industries. Businesses can choose hybrid cloud, fully cloud-based, or on-premises setups to meet their specific security and transparency requirements [6,9]. This flexibility lets a business keep sensitive data on premises while using cloud resources for scalability, or run entirely in the cloud. Its seamless connectivity with IBM Watson services also enhances cognitive automation capabilities and makes it adaptable to diverse IT environments.

The platform's integration capabilities are another strength. IBM watsonx Orchestrate ships with prebuilt connectors for systems such as ERP, CRM, and HR, and integrates easily with major cloud providers such as AWS and Azure [8,9]. Through visual connectors and APIs, it links back-end systems, cloud services, and data sources across an organization. This enables smooth workflow automation across departments such as customer service, finance, and HR.

A major financial institution implemented watsonx Orchestrate to streamline customer support and back-office tasks. Employees now use natural-language commands to launch workflows such as processing loan applications or handling service requests. The platform embeds governance policies into these operations to ensure compliance. The result was faster processing times, fewer manual errors, and higher customer satisfaction.

For organizations subject to rigorous compliance requirements, IBM watsonx Orchestrate provides built-in governance features. It embeds governance policies directly into workflows, enforces strict access controls, and offers comprehensive audit capabilities [8,9]. These features let the platform meet the high security and transparency standards required in sectors such as financial services, healthcare, and government. With these safeguards in place, businesses can confidently scale their AI-driven automation without compromising regulatory requirements.

Each AI orchestration platform has its own strengths and challenges. Organizations should therefore match their choice to their specific workflows, technical needs, and compliance requirements.

Here is how some of the most popular platforms compare:

Prompts.ai brings order to the management of multiple AI tools with a unified interface and real-time FinOps tracking, which can cut software spending by up to 98%. The pay-as-you-go TOKN credit system ensures teams pay only for what they use. Features such as the Prompt Engineer Certification program and "Time Savers" help teams of every skill level adopt the platform quickly. However, organizations heavily invested in open-source tools, or that need extensive custom code integrations, may need to plan carefully to fit Prompts.ai into their existing setup.

Apache Airflow offers unmatched control and a robust ecosystem, but its complexity can be a barrier. Setting up, maintaining, and scaling Airflow requires considerable expertise, which is hard for small teams without dedicated DevOps resources. The steep learning curve often stretches deployment timelines from weeks to months.

Prefect addresses some of Airflow's challenges with a modern architecture and a gentler learning curve. Its hybrid execution model lets teams develop workflows locally and move smoothly to cloud-based orchestration for production. Features such as dynamic workflow generation and better error handling make pipelines more resilient. However, Prefect's smaller ecosystem means fewer prebuilt connectors, which can lead to more frequent custom integration work.
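Dynamic workflow generation means the number of tasks is decided at runtime from the input. Better error handling means one failing task does not abort the rest. In Prefect this pattern is usually expressed with task mapping. The sketch below shows the same idea with the standard library's thread pool, using a hypothetical `process_file` task.

```python
from concurrent.futures import ThreadPoolExecutor


def process_file(path: str) -> int:
    """Hypothetical task: fails on inputs it cannot parse."""
    if path.endswith(".bad"):
        raise ValueError(f"cannot parse {path}")
    return len(path)  # stand-in for real work


def run_dynamic(paths: list[str], max_workers: int = 4):
    """Create one task per input discovered at runtime, isolating failures per task."""
    results, failures = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {path: pool.submit(process_file, path) for path in paths}
        for path, future in futures.items():
            try:
                results[path] = future.result()
            except ValueError as exc:
                failures[path] = str(exc)  # record the error, keep the other tasks
    return results, failures


results, failures = run_dynamic(["a.csv", "bb.csv", "c.bad"])
print(results)   # {'a.csv': 5, 'bb.csv': 6}
print(failures)  # {'c.bad': 'cannot parse c.bad'}
```

Collecting failures per input, rather than stopping at the first exception, is what lets the rest of the run finish and the failed inputs be retried on their own.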

Kubeflow is ideal for machine learning teams already using Kubernetes. It supports the entire ML lifecycle, from data preparation to model deployment, and enables distributed training across multiple GPUs without requiring data scientists to be infrastructure experts. That said, operating Kubeflow itself demands Kubernetes expertise, which can be difficult for small teams or teams new to container orchestration.

Metaflow aims to boost data scientist productivity by abstracting away infrastructure complexity, so researchers can focus on experiments. Its smooth transition from local to cloud execution and its built-in versioning for data, code, and models speed up iteration cycles. However, its opinionated design offers less flexibility. Its AWS-centric approach may also not suit organizations committed to other cloud providers or to multicloud strategies.

Dagster takes a software-engineering approach to data pipelines. Its asset-based model treats data as a first-class citizen, defining dependencies explicitly and encouraging reuse. Features such as strong typing help catch errors early, which reduces debugging time. However, adopting Dagster requires teams to embrace a new mental model, which can be daunting for teams without established software engineering practices.
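An asset-based model can be sketched as a registry of functions whose parameter names declare their upstream assets. The assets are materialized in dependency order, with a type check on each result. This is loosely modeled on how Dagster assets name their inputs. The `asset` decorator, `materialize_all`, and the two example assets below are hypothetical, standard-library-only stand-ins.

```python
import inspect
from graphlib import TopologicalSorter

ASSETS = {}  # asset name -> function (hypothetical registry)


def asset(fn):
    """Register a function as an asset; its parameter names name its upstream assets."""
    ASSETS[fn.__name__] = fn
    return fn


def materialize_all() -> dict:
    """Compute every asset in dependency order, checking each return type."""
    deps = {name: set(inspect.signature(fn).parameters) for name, fn in ASSETS.items()}
    values = {}
    for name in TopologicalSorter(deps).static_order():
        fn = ASSETS[name]
        value = fn(**{dep: values[dep] for dep in deps[name]})
        expected = inspect.signature(fn).return_annotation
        # Fail fast if an asset returns the wrong type (a simple form of strong typing).
        if expected is not inspect.Signature.empty and not isinstance(value, expected):
            raise TypeError(
                f"{name} returned {type(value).__name__}, expected {expected.__name__}"
            )
        values[name] = value
    return values


@asset
def raw_orders() -> list:
    return [{"id": 1, "total": 40.0}, {"id": 2, "total": 60.0}]


@asset
def order_revenue(raw_orders: list) -> float:
    return sum(order["total"] for order in raw_orders)


print(materialize_all()["order_revenue"])  # 100.0
```

Because dependencies come from parameter names, the graph cannot drift out of sync with the code that actually uses each input.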

IBM watsonx Orchestrate serves industries with strict security and compliance needs, offering robust governance and enterprise integrations. Its flexible deployment options (hybrid cloud, on-premises, or fully cloud-based) make it a sound choice for sectors such as finance, healthcare, and government. Non-technical users can trigger workflows through natural-language interfaces. However, the platform's high licensing costs may deter small organizations or those just starting out with AI.

Choosing the right platform depends on your team's technical expertise, existing infrastructure, compliance needs, and budget. Engineering-heavy teams that prefer open source often lean toward Airflow or Prefect. ML teams already on Kubernetes benefit from Kubeflow's ML-focused features. Businesses juggling multiple AI models find Prompts.ai's unified approach appealing, while highly regulated industries favor IBM watsonx Orchestrate for its governance and security.

To make the best choice, consider piloting two or three platforms with real workflows. Evaluate not only technical features but also how quickly your team can adopt the tool, the time to value, and the long-term maintenance effort. A platform that looks ideal on paper can reveal unexpected challenges in practice.
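A simple way to compare pilots is a weighted scorecard over the criteria above. The weights, the 0 to 10 scores, and the platform names below are hypothetical placeholders to be replaced with your own pilot results.

```python
# Hypothetical weights for the evaluation criteria; they must sum to 1.
WEIGHTS = {
    "technical_fit": 0.40,
    "adoption_speed": 0.25,
    "time_to_value": 0.20,
    "maintenance": 0.15,  # higher score = less maintenance effort
}
if abs(sum(WEIGHTS.values()) - 1.0) > 1e-9:
    raise ValueError("weights must sum to 1")


def weighted_score(scores: dict[str, float]) -> float:
    """Combine 0-10 criterion scores into one weighted score."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return round(sum(WEIGHTS[c] * scores[c] for c in WEIGHTS), 2)


pilots = {
    "platform_a": {"technical_fit": 9, "adoption_speed": 5, "time_to_value": 6, "maintenance": 4},
    "platform_b": {"technical_fit": 7, "adoption_speed": 8, "time_to_value": 8, "maintenance": 7},
}
ranking = sorted(pilots, key=lambda p: weighted_score(pilots[p]), reverse=True)
print([(p, weighted_score(pilots[p])) for p in ranking])
```

In this made-up example, the platform with the best technical fit still ranks second once adoption speed and maintenance are weighed in.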

Choosing the right AI orchestration platform comes down to matching your specific needs with each solution's strengths. The best fit will depend on factors such as your technical expertise, compliance requirements, and budget constraints.

For engineering teams with strong DevOps skills and a preference for open-source tools, Apache Airflow or Prefect can fit neatly into existing workflows. Be prepared, however, for the setup and ongoing maintenance these platforms require. If your team already runs Kubernetes infrastructure, Kubeflow offers comprehensive support for the entire machine learning lifecycle. Data scientists who want rapid experimentation with minimal infrastructure management may find Metaflow an ideal choice, especially in AWS environments.

Businesses juggling multiple AI tools can benefit from Prompts.ai, which brings more than 35 models together in a unified ecosystem. Its pay-as-you-go TOKN credit system removes the need for separate per-tool subscriptions, ties costs directly to usage, and can potentially cut AI spending by up to 98%. Features such as the Prompt Engineer Certification program and the "Time Savers" library help teams with varying levels of expertise get up to speed quickly. However, organizations that rely heavily on custom open-source integrations should evaluate how well Prompts.ai fits their existing infrastructure.

For teams building data pipelines, Dagster offers robust, asset-based workflows that appeal to software engineers. Keep in mind that Dagster's distinctive approach may take extra time to get used to. IBM watsonx Orchestrate, for its part, targets sectors such as finance, healthcare, and government, where strict governance and hybrid deployment options justify its higher price.

Ultimately, the key is to match your workflows to the platform that supports them best. Testing two or three platforms with real workflows can reveal a lot about team productivity, time to value, and total cost of ownership over a 12- to 24-month period. Assess how well each platform integrates with your current tools and whether the learning curve is manageable for your team. Also check whether the overall costs, including hidden infrastructure and maintenance expenses, fit your budget.
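Hidden costs are easier to spot when total cost of ownership is written out as setup cost plus recurring costs over the evaluation window. The minimal sketch below shows how the calculation works. Every figure in it is a hypothetical assumption chosen for illustration, not a benchmark for any platform discussed here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CostProfile:
    setup: int              # one-time setup and migration cost (USD)
    license_per_month: int  # subscription or license fees (USD)
    infra_per_month: int    # compute, storage, networking (USD)
    maintenance_hours: int  # engineering hours per month spent on upkeep
    hourly_rate: int        # loaded cost of one engineering hour (USD)

    def tco(self, months: int) -> int:
        """Setup cost plus all recurring costs over `months`."""
        monthly = (self.license_per_month + self.infra_per_month
                   + self.maintenance_hours * self.hourly_rate)
        return self.setup + monthly * months


# Hypothetical profiles: a managed service versus a self-hosted open-source stack.
managed = CostProfile(setup=2_000, license_per_month=500, infra_per_month=0,
                      maintenance_hours=5, hourly_rate=100)
self_hosted = CostProfile(setup=15_000, license_per_month=0, infra_per_month=800,
                          maintenance_hours=30, hourly_rate=100)

for months in (12, 24):
    print(months, managed.tco(months), self_hosted.tco(months))
```

Pricing engineering hours explicitly is the point of the model. A "free" open-source license can still carry the larger bill once maintenance time is counted.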

The right platform is not the one with the longest feature list. It is the one that removes obstacles, boosts productivity, and grows alongside your AI initiatives.

Prompts.ai simplifies the management of multiple AI models by combining access to more than 35 large language models in a single platform. Users can compare models easily and keep centralized control. They no longer have to juggle separate tools, and their workflows become more organized.

With Prompts.ai, users get smoother operations, lower costs, and instant visibility into model performance and spending. These capabilities help businesses and developers refine their AI strategies and expand their capabilities more efficiently.

When choosing an AI orchestration platform for an organization with strict compliance and governance requirements, focus on platforms with strong security measures. Look for features such as role-based access control and encryption. Also look for compliance credentials such as a SOC 2 attestation or support for GDPR and HIPAA requirements. These elements are essential for data protection and regulatory compliance.
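Role-based access control maps users to roles and roles to permissions, so access is granted by role rather than per individual. The minimal sketch below uses hypothetical role and permission names.

```python
from enum import Enum


class Permission(Enum):
    RUN_WORKFLOW = "run_workflow"
    VIEW_AUDIT_LOG = "view_audit_log"
    MANAGE_MODELS = "manage_models"
    EDIT_BUDGETS = "edit_budgets"


# Hypothetical role definitions; "admin" receives every permission.
ROLE_PERMISSIONS: dict[str, set[Permission]] = {
    "analyst": {Permission.RUN_WORKFLOW},
    "auditor": {Permission.VIEW_AUDIT_LOG},
    "admin": set(Permission),
}


def is_allowed(user_roles: set[str], needed: Permission) -> bool:
    """A user is allowed if any of their roles grants the permission; unknown roles grant nothing."""
    return any(needed in ROLE_PERMISSIONS.get(role, set()) for role in user_roles)


print(is_allowed({"analyst"}, Permission.VIEW_AUDIT_LOG))             # False
print(is_allowed({"analyst", "auditor"}, Permission.VIEW_AUDIT_LOG))  # True
```

Unknown roles default to no permissions, so a typo in a role name fails closed rather than open.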

The platform should also provide detailed monitoring and auditing capabilities, so you can track performance and verify adherence to regulatory standards. Platforms that offer data residency options and private networking can further strengthen security and control over sensitive information.

To maintain governance, prioritize platforms with built-in approval workflows and tools for enforcing policies on model usage and data privacy. Features that let you monitor AI outputs for problems such as bias or unsafe content are also essential for meeting compliance and ethics guidelines.
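A built-in approval workflow can be modeled as a gate that blocks sensitive tasks until enough distinct reviewers sign off. The sketch assumes a hypothetical two-approver rule and forbids self-approval. Real platforms define their own policies.

```python
from dataclasses import dataclass, field


@dataclass
class ApprovalRequest:
    task: str
    requested_by: str
    sensitive: bool
    approvals: set[str] = field(default_factory=set)
    required_approvals: int = 2  # hypothetical policy

    def approve(self, approver: str) -> None:
        """Record one reviewer's sign-off; a set ignores duplicate approvals."""
        if approver == self.requested_by:
            raise PermissionError("requesters cannot approve their own tasks")
        self.approvals.add(approver)

    def can_run(self) -> bool:
        # Non-sensitive tasks run immediately; sensitive ones need enough distinct approvers.
        return not self.sensitive or len(self.approvals) >= self.required_approvals


request = ApprovalRequest("export customer data", requested_by="lea", sensitive=True)
request.approve("marc")
print(request.can_run())  # False: one approval so far
request.approve("nora")
print(request.can_run())  # True
```

Storing approvers in a set means the same reviewer approving twice still counts once.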

Prompts.ai uses a pay-as-you-go pricing structure: you buy TOKN credits and pay only for what you use. This keeps spending under your control without locking you into unnecessary extra costs.

Along with access to more than 35 large language models, Prompts.ai includes a FinOps layer that provides real-time insight into usage, spending, and return on investment. Teams can track their spending closely and adjust costs efficiently, which makes it a scalable, cost-effective way to manage AI workflows.