AI governance tools are essential for managing complex workflows, ensuring compliance, and controlling costs in organizations using artificial intelligence. This article highlights six leading platforms designed to simplify AI orchestration while addressing governance, security, and scalability challenges: Prompts.ai, IBM watsonx Orchestrate, Kubiya AI, Apache Airflow, Kubeflow, and Prefect.

Each tool addresses specific organizational needs, from managing LLMs to automating machine learning pipelines. Below is a comparison to help you choose the right fit for your team.

Choose a platform that aligns with your technical expertise, compliance requirements, and workflow complexity. For LLM-heavy operations, Prompts.ai simplifies orchestration and governance, while tools like Kubeflow or Apache Airflow cater to data engineering and machine learning needs.

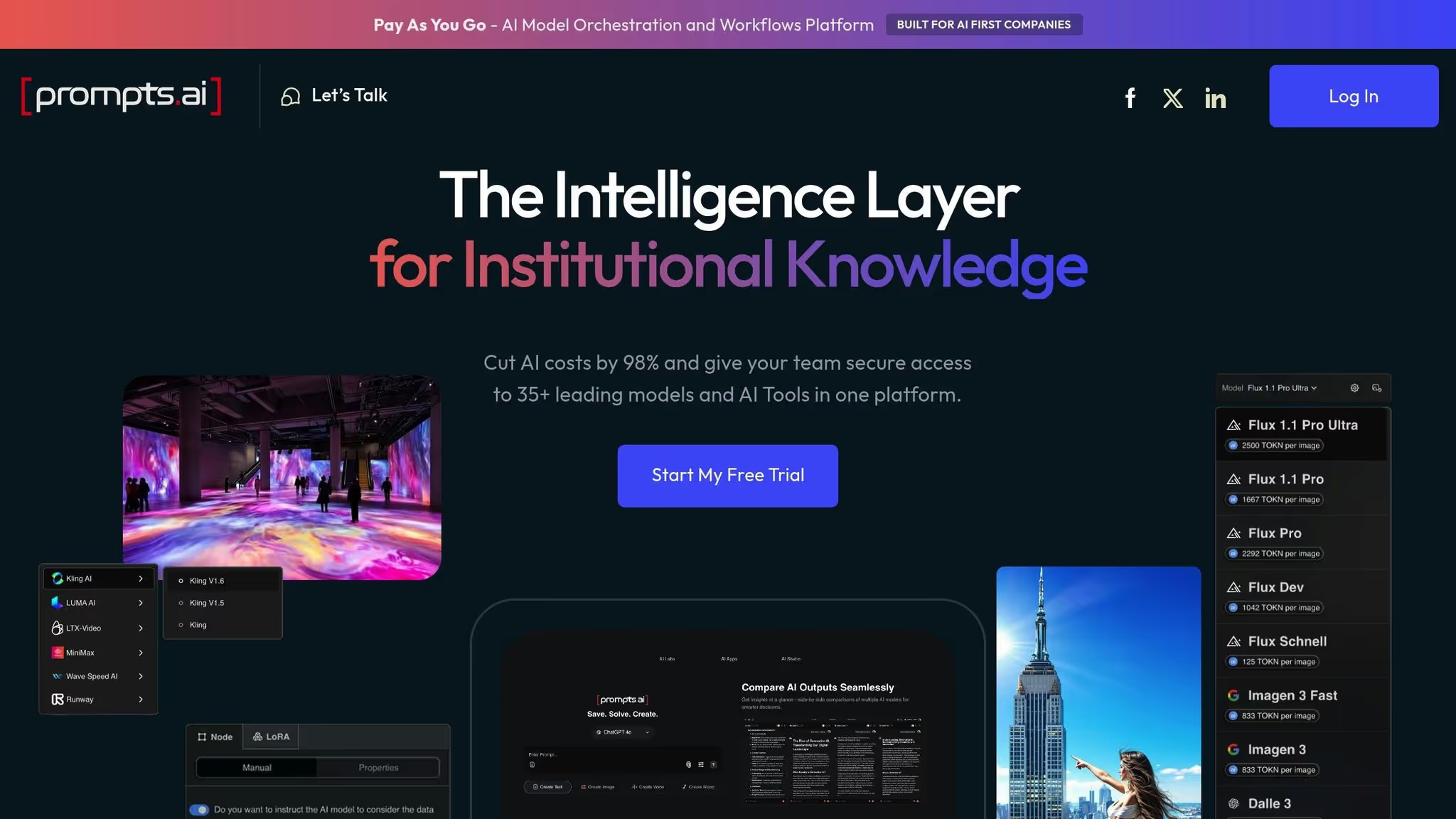

Prompts.ai brings together access to over 35 AI models - including GPT-5, Claude, LLaMA, Gemini, Grok-4, Flux Pro, and Kling - into a single enterprise-ready platform. By consolidating these tools, it eliminates the chaos of managing multiple systems, reducing compliance risks and hidden costs. This unified approach turns scattered AI experiments into streamlined, scalable processes, all supported by built-in governance controls that document every interaction.

Prompts.ai provides comprehensive oversight and accountability for all AI activities. It creates detailed logs for compliance teams to review and enforces governance at scale through automated policy controls. These controls help prevent unauthorized access to models and protect against data-sharing violations. Administrators can set and enforce rules across teams, while the platform’s continuous compliance monitoring flags potential issues before they escalate into regulatory problems.

The platform also automates AI workflows, transforming one-off tasks into structured, repeatable processes. This ensures departments across the organization follow the same security protocols and usage guidelines. Every subscription plan includes features for compliance monitoring and governance, making these essential tools accessible to organizations of any size.

Prompts.ai adheres to strict industry standards, including SOC 2 Type II, HIPAA, and GDPR, with continuous monitoring through Vanta to maintain these benchmarks. The company initiated its SOC 2 Type II audit process on June 19, 2025, reflecting its dedication to robust security and compliance practices. Users can access detailed information on policies, controls, and certifications by visiting the Trust Center at https://trust.prompts.ai/.

The platform’s security framework ensures sensitive data stays within the organization’s control during AI operations. Role-based access controls restrict access to specific models and workflows, while detailed audit logs provide a clear record of all actions for accountability.
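The pairing of role-based access and audit logging described above can be sketched in a few lines of Python. This is a minimal illustration, not Prompts.ai's API: the role names, model names, `AuditLog`, and `check_access` are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-model permissions; not taken from any real platform.
ROLE_PERMISSIONS = {
    "analyst": {"model-small"},
    "engineer": {"model-small", "model-large"},
}


@dataclass
class AuditLog:
    """Append-only record of every access decision."""

    entries: list = field(default_factory=list)

    def record(self, user: str, role: str, model: str, allowed: bool) -> None:
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "model": model,
            "allowed": allowed,
        })


def check_access(user: str, role: str, model: str, log: AuditLog) -> bool:
    """Return True if the role may use the model; log the decision either way."""
    allowed = model in ROLE_PERMISSIONS.get(role, set())
    log.record(user, role, model, allowed)
    return allowed


log = AuditLog()
granted = check_access("ana", "analyst", "model-small", log)
denied = check_access("ana", "analyst", "model-large", log)
```

Logging denied requests as well as granted ones is a deliberate choice here, since a compliance review usually needs to see attempted access, not only successful access.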

Offered as a cloud-based SaaS solution, Prompts.ai is accessible from any web browser, eliminating the need for software installations. This design supports seamless use across desktops, tablets, and mobile devices, making it ideal for distributed and remote teams while maintaining security and governance standards.

Organizations can easily scale their operations by adding models, users, and teams through flexible subscription tiers. Individual users can choose between the $0 Pay As You Go or $29 Creator plans, while businesses can opt for Core, Pro, or Elite plans, which include unlimited workspaces and collaborators.

Prompts.ai simplifies AI management by connecting enterprise users to a unified ecosystem of models through a single interface. This eliminates the hassle of juggling multiple subscriptions and billing systems. Teams can switch between models based on their needs and compare performance side-by-side, all while adhering to consistent governance policies.

Real-time FinOps cost controls track every token used across models and users, giving finance teams a clear view of AI spending and its alignment with business goals. By replacing fragmented billing systems with an integrated approach, Prompts.ai makes it easier for organizations to manage costs while scaling their AI capabilities.
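To make token-level cost tracking concrete, here is a rough sketch of how spend might be attributed per team and model. The prices, event fields, and the `attribute_costs` helper are assumptions made for illustration; they do not reflect Prompts.ai's actual billing data or interfaces.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1,000 tokens; real rates vary by provider.
PRICE_PER_1K_TOKENS = {"model-a": 0.50, "model-b": 2.00}


def attribute_costs(usage_events):
    """Sum spend per (team, model) from an iterable of usage events.

    Each event is a dict with 'team', 'model', and 'tokens' keys.
    """
    totals = defaultdict(float)
    for event in usage_events:
        rate = PRICE_PER_1K_TOKENS[event["model"]]
        totals[(event["team"], event["model"])] += event["tokens"] / 1000 * rate
    return dict(totals)


events = [
    {"team": "support", "model": "model-a", "tokens": 4000},
    {"team": "support", "model": "model-b", "tokens": 1000},
    {"team": "research", "model": "model-b", "tokens": 2500},
    {"team": "support", "model": "model-a", "tokens": 2000},
]
costs = attribute_costs(events)
```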

The platform’s architecture, combined with its TOKN credit system, supports seamless growth. It allows organizations to integrate new models and scale operations effortlessly, adapting to actual usage demands.

IBM watsonx Orchestrate provides a powerful AI automation solution tailored for businesses operating under strict regulatory requirements. By combining large language models (LLMs), APIs, and enterprise applications, the platform enables secure, scalable task completion while maintaining compliance. Its design emphasizes both security and transparency, making it a dependable choice for industries where these qualities are essential.

Governance is at the core of IBM watsonx Orchestrate. The platform includes role-based access controls, allowing administrators to manage permissions effectively and ensure accountability throughout the system. Organizations can also define workflow-specific rules, helping to create structured, transparent processes driven by AI.

Built to meet enterprise compliance standards, IBM watsonx Orchestrate is ideal for businesses in regulated industries. Its focus on security ensures that automated tasks align with strict regulatory guidelines.

The platform’s seamless integration of AI tools supports expanding automation efforts without compromising compliance. As organizations grow, tasks can be executed securely and efficiently, ensuring smooth scaling of operations.

Kubiya AI simplifies DevOps and IT operations through conversational interfaces. By automating workflows and managing infrastructure with natural language commands, the platform reduces complexity and shortens the learning curve for users.

Kubiya AI ensures accountability with detailed audit logs that track all conversational actions. This level of transparency provides distributed teams with the documentation needed for compliance reviews and operational clarity.

The platform also enforces strict policies for critical operations. Sensitive changes require human approval, with teams able to set up workflows to manage these approvals. Its permission system integrates seamlessly with existing identity management tools, maintaining consistent access controls across the organization.

These governance measures work hand-in-hand with Kubiya AI’s robust security framework.

Security is a core element of Kubiya AI’s design. The platform employs encryption both in transit and at rest, safeguarding sensitive data throughout orchestration workflows. For organizations in regulated industries, Kubiya AI helps meet compliance standards by automating enforcement, minimizing the risk of human error in critical processes.

The platform’s context-aware security system adjusts based on the sensitivity of each action. High-risk tasks trigger additional verification, while routine operations proceed smoothly with minimal interruptions. This adaptive approach balances security with operational efficiency.
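The idea of requiring extra verification only for high-risk actions can be sketched as a simple gate. This is an illustrative outline, not Kubiya AI's implementation: the action names, the `Risk` levels, and the `execute` function are hypothetical.

```python
from enum import Enum
from typing import Optional


class Risk(Enum):
    LOW = 1
    HIGH = 2


# Hypothetical action names and risk levels, chosen for illustration only.
ACTION_RISK = {
    "restart_staging_service": Risk.LOW,
    "delete_production_database": Risk.HIGH,
}


def execute(action: str, approved_by: Optional[str] = None) -> str:
    """Run low-risk actions directly; require a named approver for high-risk ones."""
    # Unknown actions are treated as high risk so the gate fails closed.
    risk = ACTION_RISK.get(action, Risk.HIGH)
    if risk is Risk.HIGH and approved_by is None:
        return "pending_approval"
    return "executed"
```

Treating unrecognized actions as high risk is one reasonable default: a newly added operation then waits for approval until someone classifies it.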

Kubiya AI provides flexible deployment models to meet diverse organizational needs. Companies can choose cloud-hosted deployments for quick implementation or on-premises installations to meet data sovereignty requirements. A hybrid model is also available, enabling businesses to keep sensitive workloads on their own infrastructure while utilizing cloud resources for less critical tasks.

The platform integrates effortlessly with leading DevOps tools using REST APIs, webhooks, and direct connections. Teams can coordinate workflows across multiple systems without the need to write custom code, relying on natural language commands to streamline operations.

For specialized needs, Kubiya AI supports custom integrations. Its development framework allows organizations to build new connections while upholding the same governance standards applied to native tools.

This seamless integration capability is matched by the platform’s ability to scale effectively.

Kubiya AI’s distributed architecture supports horizontal scaling, ensuring it can handle increased workflows without sacrificing performance. The system dynamically adjusts resource allocation to maintain optimal operation during peak usage.

With centralized management, teams can oversee development, staging, and production environments under unified governance policies. This setup simplifies oversight while maintaining the necessary isolation for safe testing and deployment, ensuring smooth and efficient operations at every stage.

Apache Airflow is an open-source tool designed for creating, scheduling, and monitoring workflows programmatically. Initially developed by Airbnb in 2014, it has grown into a popular solution for managing complex data pipelines and AI workflows across organizations of various sizes.

The platform uses Directed Acyclic Graphs (DAGs) to define workflows as code, offering clear visibility into task dependencies. This code-centric approach enables data engineers and AI teams to use standard Git practices for version control, simplifying collaboration and tracking changes.

Apache Airflow’s DAG-based architecture supports detailed governance capabilities. Every workflow run generates logs that document task statuses, execution times, and error messages, creating an audit trail for teams to review and troubleshoot.

The platform also offers Role-Based Access Control (RBAC), allowing administrators to assign specific permissions to users and teams. This ensures only authorized personnel can create, modify, or execute workflows, safeguarding sensitive AI operations. Integration with LDAP and OAuth systems ensures alignment with existing organizational security frameworks.

Airflow automatically enforces task execution order. Under the default trigger rule, if a critical governance check fails, its downstream tasks do not run until the failure is resolved and the check is rerun successfully. This safeguard prevents incomplete or non-compliant workflows from advancing into production environments.
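The ordering and failure behavior can be illustrated without Airflow itself. The sketch below uses Python's standard-library `graphlib` to run tasks in dependency order and to hold back anything downstream of a failed check. The task names and the `run_dag` helper are hypothetical, and the status strings only loosely mirror Airflow's task states.

```python
from graphlib import TopologicalSorter


def run_dag(dependencies, tasks):
    """Run tasks in dependency order and skip anything downstream of a failure.

    dependencies maps each task name to the set of tasks it depends on.
    tasks maps each task name to a zero-argument callable.
    """
    status = {}
    for name in TopologicalSorter(dependencies).static_order():
        upstream = dependencies.get(name, set())
        if any(status[u] != "success" for u in upstream):
            status[name] = "upstream_failed"
            continue
        try:
            tasks[name]()
            status[name] = "success"
        except Exception:
            status[name] = "failed"
    return status


def compliance_check():
    raise ValueError("dataset is missing a required consent flag")


deps = {
    "extract": set(),
    "compliance_check": {"extract"},
    "train_model": {"compliance_check"},
    "publish_report": {"train_model"},
}
tasks = {
    "extract": lambda: None,
    "compliance_check": compliance_check,
    "train_model": lambda: None,
    "publish_report": lambda: None,
}
result = run_dag(deps, tasks)
```

In this run, `extract` succeeds, `compliance_check` fails, and neither `train_model` nor `publish_report` executes.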

Security is a core focus in Apache Airflow, particularly when handling sensitive credentials and data. The platform integrates with tools like HashiCorp Vault, AWS Secrets Manager, and Google Cloud Secret Manager via its secrets backend. This prevents sensitive information, such as API keys and database passwords, from being exposed in plain text. Additionally, Airflow supports encrypted connections to external systems, protecting data during transfers between workflow components - an essential feature for organizations in regulated industries.

The logging system can be customized to exclude sensitive details from audit trails, striking a balance between operational transparency and data security. Teams can decide what gets logged and what remains private, ensuring compliance with privacy standards while maintaining visibility.
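One common way to keep secrets out of log output is a logging filter that masks sensitive values before they are written. The sketch below uses only Python's standard `logging` module and is not Airflow's own masking mechanism; the regular expression, logger name, and class names are assumptions for illustration.

```python
import logging
import re

# Assumed pattern: values that follow "api_key=" or "password=" in a message.
SECRET_PATTERN = re.compile(r"(api_key|password)=\S+")


class RedactSecrets(logging.Filter):
    """Mask secret values in a record before any handler formats it."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.msg = SECRET_PATTERN.sub(r"\1=***", record.getMessage())
        record.args = ()  # the message is already fully formatted
        return True  # keep the record, just with masked content


class ListHandler(logging.Handler):
    """Collect formatted messages in memory so the result can be inspected."""

    def __init__(self):
        super().__init__()
        self.messages = []

    def emit(self, record: logging.LogRecord) -> None:
        self.messages.append(self.format(record))


logger = logging.getLogger("pipeline_redaction_demo")
logger.setLevel(logging.INFO)
logger.propagate = False
handler = ListHandler()
logger.addHandler(handler)
logger.addFilter(RedactSecrets())

logger.info("connecting with api_key=%s to %s", "sk-12345", "warehouse")
```

After the call, `handler.messages` holds the masked line, with the key value replaced by `***` while the rest of the message stays readable.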

Apache Airflow offers flexible deployment options, making it a versatile tool for orchestrating AI workflows. Teams can deploy Airflow on local servers, in cloud environments like AWS, Google Cloud, or Azure, or through managed services that handle infrastructure upkeep. This adaptability allows organizations to meet their specific data residency and operational needs.

For containerized setups, Airflow integrates with Kubernetes through the KubernetesExecutor, which launches an isolated pod for each task so that resources can be allocated and scaled per task. For distributed environments, the CeleryExecutor runs tasks in parallel across multiple worker nodes to support high-throughput workloads.

Apache Airflow features an extensive library of operators and hooks, enabling seamless connections to a wide range of external systems without the need for custom code. Teams can orchestrate workflows involving databases, cloud storage, machine learning platforms, and business intelligence tools using these pre-built components.

The platform’s provider packages simplify integration with popular services, enabling workflows that handle tasks like compliance reporting, model training, and notifications - all within a single system. For scenarios requiring unique integrations, Airflow’s Python-based framework allows for the creation of custom operators that adhere to the same governance standards as native ones.

Apache Airflow is designed to scale horizontally by adding worker nodes to meet growing workflow demands. Its scheduler can be configured for high availability, ensuring multiple instances run simultaneously to eliminate single points of failure.

The platform uses a metadata database to store workflow states and execution histories. As workflow volumes increase, organizations can optimize this database to maintain fast query times, even with millions of logged task executions.

Airflow also includes resource pools, which limit concurrent task execution to prevent any single workflow from monopolizing system resources. This ensures fair resource allocation across multiple AI projects, maintaining stability even during periods of heavy usage.
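The effect of a pool, a fixed number of slots shared by competing tasks, can be sketched with a semaphore. This is a simplified stand-in written with Python's `threading` module, not Airflow's pool implementation; the `Pool` class and its `peak` counter are hypothetical.

```python
import threading
import time


class Pool:
    """Cap how many tasks may hold a slot at once, similar in spirit to a resource pool."""

    def __init__(self, slots: int):
        self._semaphore = threading.BoundedSemaphore(slots)
        self._lock = threading.Lock()
        self._running = 0
        self.peak = 0  # highest number of tasks observed running together

    def run(self, task):
        with self._semaphore:  # blocks until a slot is free
            with self._lock:
                self._running += 1
                self.peak = max(self.peak, self._running)
            try:
                return task()
            finally:
                with self._lock:
                    self._running -= 1


pool = Pool(slots=2)
threads = [
    threading.Thread(target=pool.run, args=(lambda: time.sleep(0.05),))
    for _ in range(6)
]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

Six tasks are submitted, but `pool.peak` never exceeds the two configured slots; the remaining tasks wait their turn.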

Launched by Google in 2017, Kubeflow is an open-source toolkit designed to simplify the deployment, monitoring, and management of machine learning pipelines on Kubernetes.

This platform provides a centralized space for data scientists and ML engineers to create end-to-end workflows - from preparing data and training models to deployment and ongoing monitoring. Built on Kubernetes, Kubeflow benefits from its robust container orchestration features, making it ideal for handling complex, distributed AI tasks.

Kubeflow offers strong governance tools, focusing on pipeline versioning and experiment tracking. It logs every pipeline run, capturing model parameters, datasets, and performance metrics, creating a detailed audit trail essential for compliance and troubleshooting.

The Kubeflow Pipelines component allows teams to define workflows as reusable, versioned artifacts. Each pipeline run is meticulously documented, recording inputs, outputs, and intermediate results. This ensures experiments can be reproduced and decisions traced back to specific workflow versions - an invaluable feature for industries with strict regulations, such as healthcare and finance.

Additionally, Kubeflow includes metadata management through its ML Metadata (MLMD) component. This tracks the lineage of datasets, models, and deployments, enabling teams to identify the root cause of issues when a model behaves unexpectedly. By examining metadata, it becomes easier to pinpoint the training data or pipeline version responsible for anomalies.
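Lineage tracing of this kind amounts to walking a graph from a deployed model back to its inputs. The sketch below shows the idea with a plain dictionary; it does not use the ML Metadata library, and the artifact names and the `trace_upstream` helper are hypothetical.

```python
# Hypothetical lineage records: each artifact lists the artifacts it was derived from.
LINEAGE = {
    "deployment:v3": ["model:v3"],
    "model:v3": ["dataset:2024-06", "pipeline:v12"],
    "dataset:2024-06": ["raw:events"],
    "pipeline:v12": [],
    "raw:events": [],
}


def trace_upstream(artifact, lineage):
    """Return every ancestor of an artifact, nearest first (breadth-first)."""
    seen, queue, order = set(), list(lineage.get(artifact, [])), []
    while queue:
        current = queue.pop(0)
        if current in seen:
            continue
        seen.add(current)
        order.append(current)
        queue.extend(lineage.get(current, []))
    return order


ancestors = trace_upstream("deployment:v3", LINEAGE)
```

Starting from `deployment:v3`, the walk surfaces the model version first, then the dataset and pipeline version that produced it, and finally the raw source data.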

These governance tools provide a solid foundation for implementing advanced security and compliance measures.

Kubeflow leverages Kubernetes' built-in security features to protect AI workflows. It supports namespace isolation, which separates projects or teams into distinct environments, each with its own access controls. This ensures sensitive data and workflows remain secure from unauthorized access.

Role-Based Access Control (RBAC) lets administrators assign permissions based on roles, ensuring that team members can only perform actions appropriate to their responsibilities. For example, junior staff can run experiments but cannot deploy models to production. Integration with enterprise identity providers through standard protocols such as OAuth and OIDC ensures seamless authentication within existing systems.

To safeguard data, Kubeflow facilitates encrypted communication between components and integrates with secrets management systems to handle sensitive credentials. Teams working with confidential data can configure pipelines to operate in secure environments that meet data residency requirements, ensuring compliance with local regulations.

Kubeflow is compatible with any Kubernetes cluster, whether on-premises or on cloud platforms like AWS, GCP, or Azure. This flexibility allows organizations to choose deployment options based on their specific needs for compliance, cost, or performance.

The platform provides distribution packages tailored to various cloud providers, streamlining the setup process. For example, teams using Google Cloud can rely on AI Platform Pipelines, a managed Kubeflow service that reduces infrastructure management. Meanwhile, organizations with Kubernetes expertise can deploy Kubeflow on self-managed clusters, giving them full control over configurations and resources.

Kubeflow's modular design means teams can install only the components they require. A small team might focus on notebook servers and pipelines, while a larger enterprise could implement the full stack, including model serving, hyperparameter tuning, and distributed training.

This modularity ensures Kubeflow integrates smoothly with a wide range of machine learning tools.

Kubeflow works seamlessly with popular frameworks like TensorFlow, PyTorch, and XGBoost, allowing teams to use their preferred tools without disruption.

The KFServing component (now called KServe) standardizes model serving across frameworks. Whether models are trained in TensorFlow or scikit-learn, teams can deploy them using consistent APIs, simplifying the transition from experimentation to production.

Thanks to its component-based architecture, Kubeflow supports workflows that combine various tools. For instance, data preprocessing steps written in Python can easily connect with model training tasks that run on specialized hardware. This flexibility enables teams to build workflows tailored to their specific needs.

Kubeflow harnesses Kubernetes' horizontal scaling to handle large datasets or models efficiently. When the underlying cluster has autoscaling enabled, nodes are provisioned as needed, so resources are used effectively.

The platform's distributed training operators manage jobs across multiple GPUs or machines. For TensorFlow models, the TFJob operator oversees parameter server setup and worker distribution. Similarly, PyTorch users can rely on the PyTorchJob operator for distributed training.

To maintain fairness in resource usage, Kubeflow enforces resource quotas and limits. Teams can allocate CPU, memory, and GPU resources for different pipeline components, ensuring no single workflow monopolizes cluster resources. This is particularly valuable in shared environments where multiple teams compete for computational power.

Launched in 2018, Prefect is a platform designed to orchestrate workflows, enabling teams to build, run, and manage data pipelines with ease. Unlike older tools that impose rigid structures, Prefect allows workflows to be written as Python code, giving developers the flexibility to design pipelines tailored to their unique needs.

The platform simplifies the process of creating, testing, and debugging workflows. Teams can develop pipelines locally using familiar Python tools and then deploy them to production with minimal adjustments. This seamless transition reduces friction between development and deployment, helping organizations iterate faster on their data and AI workflows.

Prefect offers detailed observability, capturing logs, task states, runtime metrics, and audit trails for every workflow run. This transparency provides insights into task execution, timing, and the data processed - essential for meeting data governance standards.

The flow versioning feature automatically tracks changes to workflows. Each update is logged with metadata, including who made the change and when, making it easy to trace modifications or revert to earlier versions if needed. This history fosters accountability within teams.

Built-in task retries and failure handling allow teams to set retry policies for individual tasks and capture detailed error data when something goes wrong. Additionally, parameter tracking records inputs and outputs for each workflow run, which is crucial for reproducing results and diagnosing anomalies in AI models.
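A retry policy with captured error details can be approximated with a small decorator. The sketch below is written in plain Python and is not Prefect's implementation; the `retry` decorator, its parameters, and the `flaky_fetch` task are hypothetical.

```python
import functools
import time


def retry(max_attempts: int = 3, delay_seconds: float = 0.0):
    """Re-run a function on failure, recording each error before trying again."""

    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            wrapper.errors = []
            for attempt in range(1, max_attempts + 1):
                try:
                    return func(*args, **kwargs)
                except Exception as exc:
                    wrapper.errors.append(f"attempt {attempt}: {exc}")
                    if attempt == max_attempts:
                        raise
                    time.sleep(delay_seconds)

        wrapper.errors = []
        return wrapper

    return decorator


calls = {"count": 0}


@retry(max_attempts=3)
def flaky_fetch():
    """Fail twice, then succeed, to mimic a transient outage."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("service unavailable")
    return "ok"


outcome = flaky_fetch()
```

The task succeeds on its third attempt, and `flaky_fetch.errors` keeps the two earlier failure messages for later diagnosis.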

Prefect strengthens its governance capabilities with robust security features. Role-based access control lets administrators manage permissions, ensuring sensitive workflows remain accessible only to authorized users. This granular control helps organizations comply with internal and external security requirements.

The platform integrates secrets management, allowing teams to store sensitive information like API keys and database credentials securely. These secrets are accessed at runtime and never exposed in logs or version control systems, ensuring data security.

For organizations handling sensitive data, Prefect supports hybrid deployment models. This setup enables data to stay within an organization’s infrastructure while leveraging cloud-based orchestration. This is particularly beneficial for industries like healthcare, finance, and government, where data residency is a top priority.

Audit logging tracks administrative actions, such as user logins and permission changes, ensuring a clear record of all activities. These logs can be exported to external systems for centralized monitoring, helping security teams maintain oversight.

Prefect offers flexible deployment options to suit various organizational needs. The Prefect Cloud solution provides a fully managed service that handles infrastructure, monitoring, and scaling, freeing teams to focus on workflow development without worrying about backend management.

For teams that prefer more control, self-hosted deployment is available. Organizations can run Prefect on their own infrastructure, whether that’s Kubernetes clusters, virtual machines, or on-premises data centers. This option ensures complete control over data, network configurations, and resources.

A hybrid execution model combines the benefits of cloud orchestration with local workflow execution. Tasks are processed within the organization’s secure environment while leveraging the cloud for orchestration. This approach balances security with convenience, making it ideal for sensitive workflows.

Prefect also supports containerized environments, allowing teams to package workflows in Docker containers. This ensures workflows perform consistently across development, testing, and production environments, solving the common “it works on my machine” problem.

Prefect connects seamlessly with a variety of tools and frameworks. Its task library supports databases like PostgreSQL and MongoDB, cloud storage options like AWS S3 and Google Cloud Storage, and processing frameworks such as Apache Spark. This simplifies integration without requiring extensive custom code.

The platform’s Python-first approach makes it compatible with popular machine learning libraries like TensorFlow, PyTorch, scikit-learn, and Hugging Face Transformers. Teams can handle model training, evaluation, and deployment directly within their workflows.

Through API integrations, workflows can interact with external services via HTTP requests. For instance, teams can trigger workflows with webhooks, send notifications to Slack, or update project management tools as tasks are completed. Prefect’s event-driven orchestration allows workflows to respond to triggers like file uploads or database changes, enabling real-time data processing pipelines.

Prefect is designed to handle growing demands with ease. By adding worker nodes, the platform scales horizontally to manage large datasets or resource-intensive AI models without bottlenecks.

Task concurrency controls let teams define how many tasks can run simultaneously, ensuring downstream systems aren’t overwhelmed. Additionally, dynamic workflow generation creates tasks at runtime based on input data, making it easy to scale pipelines without manual adjustments.

To boost efficiency, Prefect employs caching mechanisms that store results from expensive computations. If a task is rerun with the same inputs, the platform retrieves the cached result instead of recomputing, saving time and resources - especially in workflows with repetitive preprocessing or feature engineering steps.
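Input-based caching generally works by deriving a key from a task's name and inputs, then reusing the stored result when that key has been seen before. The following is a minimal in-memory sketch of that idea, not Prefect's caching API; `cache_key`, `run_cached`, and `build_features` are hypothetical names.

```python
import hashlib
import json

_cache = {}
compute_calls = {"count": 0}


def cache_key(task_name: str, inputs: dict) -> str:
    """Build a stable key from the task name and its JSON-serializable inputs."""
    payload = json.dumps({"task": task_name, "inputs": inputs}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def run_cached(task_name: str, func, **inputs):
    """Return a stored result when the same task ran before with the same inputs."""
    key = cache_key(task_name, inputs)
    if key not in _cache:
        _cache[key] = func(**inputs)
    return _cache[key]


def build_features(rows: int, scale: float) -> float:
    """Stand-in for an expensive preprocessing step."""
    compute_calls["count"] += 1
    return rows * scale


first = run_cached("build_features", build_features, rows=100, scale=0.5)
second = run_cached("build_features", build_features, rows=100, scale=0.5)
third = run_cached("build_features", build_features, rows=200, scale=0.5)
```

The second call reuses the first result without recomputing, while the third call has different inputs and therefore runs the function again.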

Selecting the right orchestration platform depends on factors like your team's technical expertise, governance requirements, and the complexity of your workflows. Below is a comparison of key platforms, highlighting their strengths and considerations.

Prompts.ai is ideal for organizations looking to simplify AI tool management while maintaining strict governance. It offers a unified interface for over 35 top language models, including GPT‑5, Claude, LLaMA, and Gemini, which streamlines managing multiple models securely. Its pay‑as‑you‑go TOKN credit system can reduce AI costs by up to 98%. Additional resources like the Prompt Engineer Certification program and the community-driven "Time Savers" library help users adopt best practices quickly. However, for teams focused on traditional data pipelines, this platform might feel more tailored to large language model workflows.

IBM watsonx Orchestrate excels in providing enterprise-level security and compliance, making it a strong choice for organizations with rigorous governance needs. Its integration within IBM's broader AI ecosystem supports secure connectivity and automation. However, the platform's steep learning curve and enterprise-focused pricing may pose challenges for smaller teams or those new to AI governance.

Kubiya AI takes a conversational approach, enabling teams to manage workflows using natural language commands. This lowers the technical barrier for non-developers. That said, its governance capabilities may need further development to meet stricter compliance requirements.

Apache Airflow is favored by teams with Python expertise who want complete control over their workflows. Its open-source design eliminates licensing costs, and a vibrant community offers a wealth of integrations. However, users must handle infrastructure, scaling, and security on their own, with governance often requiring custom development.

Kubeflow is a strong fit for organizations running AI workloads on Kubernetes. It supports the entire machine learning lifecycle, from data preparation to distributed training, but requires in-depth knowledge of container orchestration. Its governance features are more focused on tracking experiments and model metadata rather than comprehensive compliance.

Prefect offers a developer-friendly platform with Python-based workflows and hybrid execution models, making it easy to transition from development to production. While it works well for general data pipelines, teams may need to build custom solutions for AI-specific governance, such as tracking prompt versions or monitoring model drift.

Cost models vary significantly across platforms. Prompts.ai uses a pay‑as‑you‑go system, aligning costs with usage and avoiding wasted resources. Open-source platforms like Apache Airflow and Kubeflow have no licensing fees but require investments in infrastructure and skilled personnel. Enterprise solutions such as IBM watsonx Orchestrate typically involve annual contracts that bundle support and compliance features.

Security measures differ across platforms. Enterprise solutions often come with built-in role-based access control, secrets management, and detailed audit logs. Open-source options like Apache Airflow and Kubeflow require teams to implement these safeguards independently. Prefect provides solid baseline security, but teams in regulated industries may need to enhance these features.

Scalability also varies. Prompts.ai is designed to handle high volumes of LLM calls without requiring custom scaling logic. Kubeflow excels at scaling compute-heavy training jobs across nodes, while Apache Airflow and Prefect allow horizontal scaling by adding worker nodes, though manual configuration is needed.

Integration ecosystems play a significant role as well. Apache Airflow benefits from a vast library of community-built connectors, while Prompts.ai focuses on deep integrations with leading LLM providers and enterprise systems. Kubeflow integrates seamlessly with popular ML frameworks. Whichever platform you choose, align your technology stack with the platform’s native capabilities to minimize custom development.

Transitioning from experimental to production AI systems often reveals a governance gap. Traditional orchestrators focus on task execution and data lineage but lack features like prompt versioning, model output comparisons, or AI-specific compliance controls. Prompts.ai addresses these needs by treating prompts as first-class entities, incorporating features like version tracking, performance comparisons, and cost attribution. General-purpose orchestrators require teams to build these capabilities in-house.

Support and community resources are critical. Open-source platforms have broad community support, though formal assistance often requires paid contracts. Prompts.ai provides hands-on onboarding and enterprise training to speed up adoption, while IBM offers extensive documentation and dedicated support. Deployment flexibility also varies: Prefect and Prompts.ai accommodate specific data residency and infrastructure needs, whereas Kubeflow requires a Kubernetes environment.

Choosing the right platform depends on whether your focus is general data workflows or managing AI models. Teams working on traditional ETL processes with occasional machine learning components may find Apache Airflow or Prefect sufficient. However, organizations deploying AI across multiple departments can benefit from a specialized solution like Prompts.ai, which consolidates model access, cost management, and compliance into a single platform. This comparison highlights the importance of governance, cost efficiency, and scalability in orchestrating AI workflows.

The analysis above showcases the distinct advantages each platform offers, emphasizing the importance of choosing an AI governance tool that aligns with your organization's specific needs, capabilities, and long-term AI objectives. Each platform reviewed targets a unique aspect of the orchestration challenge, from managing traditional data pipelines to handling specialized large language models.

For organizations juggling multiple large language models, Prompts.ai stands out by offering unified model access, robust governance enforcement, and cost control through its pay-as-you-go TOKN system. Its integrated FinOps layer and prompt versioning address governance gaps often seen in general-purpose orchestrators.

Enterprises deeply integrated into IBM's ecosystem and requiring enterprise-level security with comprehensive compliance support will find IBM watsonx Orchestrate a solid choice. However, teams should be prepared for a steeper learning curve and higher initial investment. Meanwhile, organizations with Python-savvy engineering teams that value complete control over workflow logic may lean toward Apache Airflow, understanding the trade-offs of managing infrastructure and building custom governance solutions.

For those running AI workloads on Kubernetes infrastructure, Kubeflow offers seamless integration and full lifecycle support for machine learning. However, leveraging its capabilities effectively requires expertise in container orchestration. Prefect provides a balanced option for data teams seeking user-friendly workflows and hybrid deployment options, though custom development may be needed to address AI-specific governance requirements.

Lastly, Kubiya AI simplifies technical barriers with its conversational interface, though its governance capabilities should be carefully assessed for compliance-heavy use cases.

Ultimately, the right platform is the one that matches your organization's technical expertise and strategic priorities. While general-purpose orchestrators may suffice for traditional ETL processes, core AI tasks - such as prompt engineering, model evaluation, and cost management - are better supported by specialized platforms. Addressing the governance gap between experimental and production AI systems from the outset can save significant time and resources. Choose a solution that balances the agility of experimentation with the rigor of production-grade governance to set the stage for long-term AI success.

Prompts.ai adheres to top-tier compliance standards to safeguard your data and maintain secure operations. It aligns with established frameworks such as SOC 2 Type II, HIPAA, and GDPR, meeting stringent security and compliance benchmarks.

To reinforce these efforts, Prompts.ai collaborates with Vanta for ongoing monitoring of security controls and initiated its SOC 2 Type II audit process on June 19, 2025. These steps ensure your AI workflows are handled with clarity, reliability, and strong protections.

When choosing an AI governance tool to manage workflow orchestration, there are several key aspects to keep in mind to ensure it aligns with your organization's objectives. Begin by clearly identifying your goals and the specific workflows you need to oversee. This clarity will guide you in selecting a tool tailored to your requirements.

Focus on platforms that offer scalability, compliance features, and transparency to effectively manage the complexities of AI systems. Tools with automated workflow capabilities and strong monitoring features are particularly valuable, as they can help you streamline operations while ensuring everything runs smoothly and efficiently.

Lastly, evaluate the tool's ability to integrate effortlessly with your current systems and its approach to secure data management. These elements are essential for maintaining operational continuity and achieving long-term success.

The TOKN credit system on Prompts.ai streamlines managing AI costs by acting as a universal currency for a wide range of AI services. Each TOKN represents the computing power required for tasks such as content creation, model training, and other complex AI operations.

This approach ensures clear and flexible resource allocation, helping users manage their budgets effectively while maintaining predictable expenses. It’s built to make handling AI workflows straightforward and reliable for organizations.