The generative AI market is transforming industries: it grew from $191 million in 2022 to $25.6 billion in 2024, and projections put it at $1.3 trillion by 2032. Businesses are turning to AI for content creation, customer support, and software development, with 44% of U.S. companies adopting AI tools in 2024, up from just 5% in 2023. Key players like NVIDIA, Microsoft, AWS, and Google dominate the market, while startups like Prompts.ai and Hugging Face offer unique solutions.

| Company | Key Features | Challenges |
|---|---|---|
| Prompts.ai | Unified access to 35+ models, cost transparency | Newer player, relies on third-party models |
| OpenAI | GPT models, multimodal capabilities | Higher costs for large-scale use |
| Google | Gemini models, Workspace integration | Fragmented offerings, complex pricing |
| Anthropic | AI safety, enterprise-grade security | Fewer models, higher costs |
| Hugging Face | Open-source, vast model repository | Requires in-house expertise |

This dynamic market offers solutions for enterprises, developers, and investors, with options tailored to diverse needs like cost savings, security, and customization.

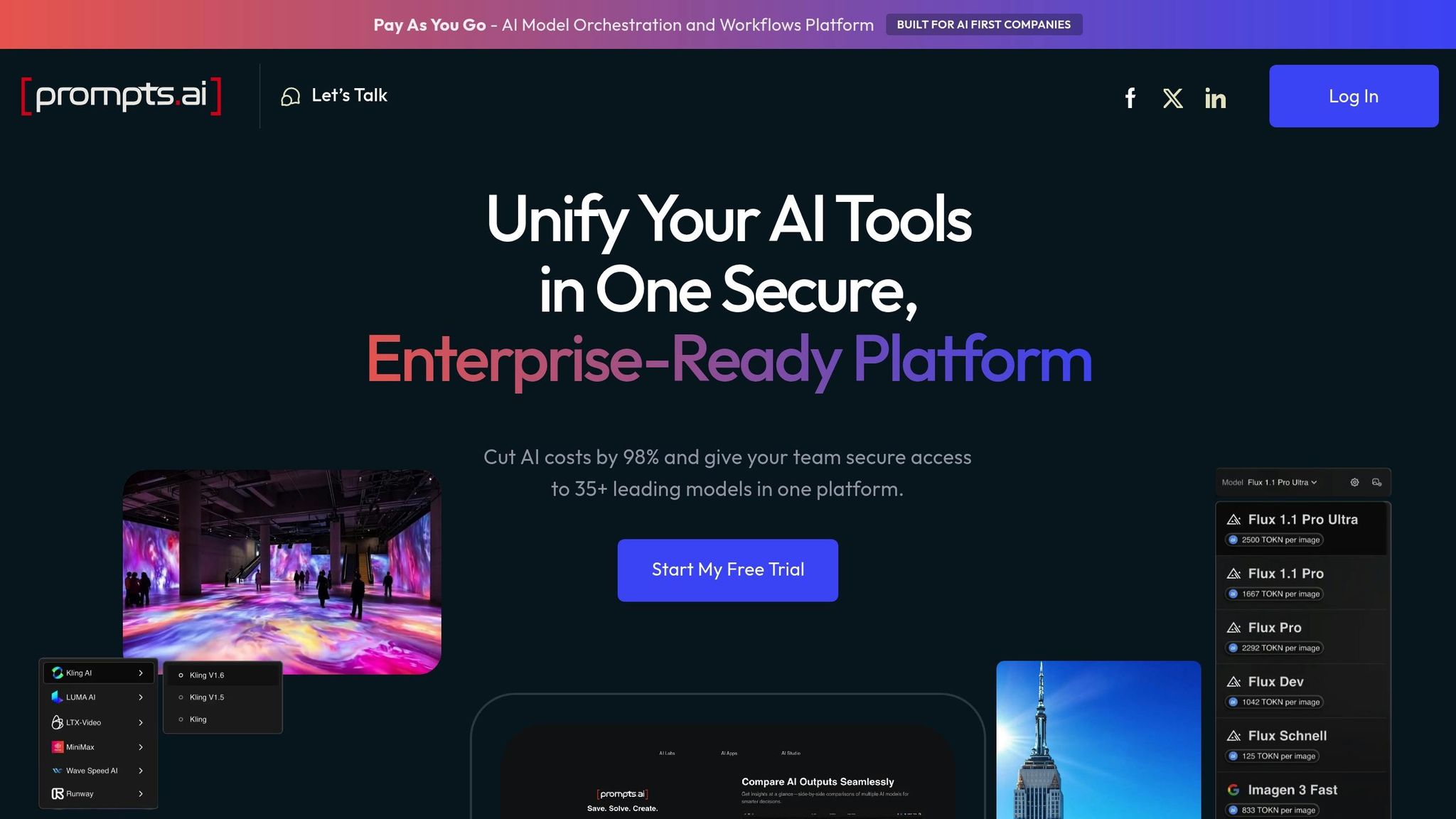

Prompts.ai is an enterprise-grade platform designed to streamline AI operations by integrating over 35 large language models into a single, secure interface. By consolidating models like GPT-5, Claude, LLaMA, and Gemini, it eliminates the inefficiencies of managing multiple tools, offering businesses a unified solution to control costs, enhance security, and improve performance.

Prompts.ai stands out for the breadth of models it exposes. Unlike platforms that lock users into one ecosystem, it places popular options like GPT, Gemini, Claude, and LLaMA side by side, each suited to different tasks and industries.

This variety ensures that businesses can match the right tool to the right job. For instance, a marketing team might lean on Claude for crafting creative content, while a data science team could rely on LLaMA for technical problem-solving. Instead of juggling multiple subscriptions and interfaces, teams can use Prompts.ai’s centralized dashboard to compare models side by side and pick the best fit for their specific needs.

The platform goes beyond simple AI experimentation by enabling scalable and consistent workflows. With features like built-in prompt management, version control, and collaborative editing, Prompts.ai transforms one-off tests into repeatable processes that meet compliance standards.

This capability allows organizations to automate multi-step tasks, schedule recurring AI activities, and ensure consistent results across teams. Whether it’s a single user or an entire department, Prompts.ai makes it easier to integrate AI into everyday operations without sacrificing accuracy or efficiency.
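To make the idea of prompt management with version control concrete, here is a minimal sketch of a versioned prompt store. It is not Prompts.ai's implementation or API; the `PromptRegistry` and `PromptVersion` names, the in-memory storage, and the template format are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Optional


@dataclass(frozen=True)
class PromptVersion:
    """One immutable revision of a named prompt (hypothetical structure)."""
    version: int
    template: str
    author: str
    created_at: datetime


@dataclass
class PromptRegistry:
    """In-memory store that keeps every saved revision of each prompt."""
    history: Dict[str, List[PromptVersion]] = field(default_factory=dict)

    def save(self, name: str, template: str, author: str) -> PromptVersion:
        # Each save appends a new revision instead of overwriting the old one.
        versions = self.history.setdefault(name, [])
        entry = PromptVersion(
            version=len(versions) + 1,
            template=template,
            author=author,
            created_at=datetime.now(timezone.utc),
        )
        versions.append(entry)
        return entry

    def get(self, name: str, version: Optional[int] = None) -> PromptVersion:
        # Return the latest revision unless a specific one is requested.
        versions = self.history[name]
        if version is None:
            return versions[-1]
        if not 1 <= version <= len(versions):
            raise KeyError(f"{name!r} has no version {version}")
        return versions[version - 1]

    def render(self, name: str, version: Optional[int] = None, **values: str) -> str:
        # Fill the template placeholders with the supplied values.
        return self.get(name, version).template.format(**values)


registry = PromptRegistry()
registry.save("summary", "Summarize: {text}", author="alice")
registry.save("summary", "Summarize in {n} bullets: {text}", author="bob")
print(registry.render("summary", n="3", text="quarterly report"))
print(registry.render("summary", version=1, text="quarterly report"))
```

Because old revisions are never overwritten, a team can pin a workflow to a known version while others keep iterating on the latest one.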

Prompts.ai tackles one of the biggest challenges in enterprise AI: unpredictable costs. Its FinOps-driven TOKN credits system provides detailed insights into AI spending, tracking usage across all models and teams down to the token level. This level of visibility helps organizations avoid surprise bills and manage budgets effectively.

The company says its usage-based pricing model can reduce AI software expenses by up to 98% by replacing recurring subscriptions with pay-per-use credits. Real-time dashboards further simplify cost management, showing exactly how much is being spent on each project, team, or model. This allows finance teams to allocate resources more strategically, focusing on measurable outcomes rather than guesswork.
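Token-level cost tracking of this kind boils down to simple arithmetic: tokens used, multiplied by a per-token price, grouped by whatever dimension finance cares about. The sketch below illustrates that idea only. The model names, prices, and the `UsageRecord` structure are hypothetical and do not reflect TOKN credits or any provider's real rates.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable

# Hypothetical prices in USD per 1,000 tokens; real rates vary by provider.
PRICE_PER_1K_TOKENS = {"model-a": 0.010, "model-b": 0.002}


@dataclass(frozen=True)
class UsageRecord:
    team: str
    model: str
    tokens: int


def cost_of(record: UsageRecord) -> float:
    """Cost of one usage record in USD."""
    return record.tokens / 1000 * PRICE_PER_1K_TOKENS[record.model]


def spend_by(records: Iterable[UsageRecord], key: str) -> Dict[str, float]:
    """Total spend grouped by a record attribute such as 'team' or 'model'."""
    totals: Dict[str, float] = defaultdict(float)
    for record in records:
        totals[getattr(record, key)] += cost_of(record)
    return dict(totals)


records = [
    UsageRecord("marketing", "model-a", 120_000),
    UsageRecord("marketing", "model-b", 400_000),
    UsageRecord("data-science", "model-b", 900_000),
]
print(spend_by(records, "team"))
print(spend_by(records, "model"))
```

The same ledger answers both "which team is spending the most?" and "which model is costing the most?" without any extra bookkeeping.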

Prompts.ai is built to handle the demands of large organizations, offering features like single sign-on, role-based access controls, and detailed audit trails. These tools ensure that even high-volume workloads remain secure and compliant - an essential requirement for Fortune 500 companies handling sensitive data.
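Role-based access control and audit trails are generic patterns rather than anything specific to one vendor. As a rough sketch, assuming made-up role names and permissions, a permission check that records every decision might look like this:

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List

# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS = {
    "admin": {"run_prompt", "edit_prompt", "view_costs"},
    "editor": {"run_prompt", "edit_prompt"},
    "viewer": {"run_prompt"},
}


@dataclass
class AccessController:
    """Checks permissions by role and logs every decision."""
    user_roles: Dict[str, str]
    audit_log: List[dict] = field(default_factory=list)

    def check(self, user: str, action: str) -> bool:
        role = self.user_roles.get(user)  # None for unknown users
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        # Record both granted and denied attempts so reviewers see everything.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "allowed": allowed,
        })
        return allowed


controller = AccessController({"alice": "admin", "bob": "viewer"})
print(controller.check("alice", "view_costs"))   # admin may view costs
print(controller.check("bob", "edit_prompt"))    # viewer may not edit
print(len(controller.audit_log))                 # both attempts were logged
```

Logging denials as well as approvals is the design choice that makes the trail useful for compliance reviews.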

The platform also supports flexible deployment options, including on-premises and private cloud setups, giving organizations full control over their data. To help businesses scale their AI efforts, Prompts.ai offers tailored training programs and hands-on onboarding, enabling teams to develop in-house expertise rather than relying on external consultants. Dedicated community initiatives further strengthen internal capabilities.

Prompts.ai isn’t just a technology provider - it’s a hub for fostering collaboration and knowledge sharing. Through its Prompt Engineer Certification program, the platform helps organizations build a network of internal AI champions who can lead adoption efforts.

This emphasis on community sets Prompts.ai apart. Users can participate in training sessions, share workflows, and access a growing library of proven AI applications. By connecting professionals across industries, Prompts.ai creates an ecosystem where best practices and insights are continuously exchanged, driving collective progress in AI implementation.

OpenAI has been at the forefront of generative AI, reshaping how businesses and individuals interact with technology. Its flagship models have become staples across various industries, thanks to their user-friendly interfaces and powerful capabilities. Like Prompts.ai, OpenAI focuses on delivering scalable, purpose-driven models that make AI integration more accessible and effective.

At the heart of OpenAI's offerings is the GPT series, known for advancing reasoning, creativity, and adaptability with each new version. The GPT-4 lineup, for instance, includes a variant capable of processing multimodal inputs, pushing the boundaries of what AI can achieve. Another standout is DALL-E 3, which highlights OpenAI's dedication to creative applications by generating detailed, high-quality images from text prompts. A unique feature of ChatGPT, when paired with DALL-E 3, is the ability to refine image outputs through conversational adjustments, offering users a seamless blend of text and visual creativity.

To address the demands of large organizations, OpenAI introduced ChatGPT Enterprise. This service grants access to advanced models while prioritizing security and administrative control. OpenAI's API infrastructure enables large-scale operations, with major companies like Microsoft embedding GPT models into their widely used tools. Additionally, the Azure OpenAI Service provides enterprise-level deployment options, including private cloud setups and custom fine-tuning, solidifying OpenAI's position as a leader in enterprise AI solutions.

OpenAI employs a token-based pricing system, charging users based on their consumption. To help manage expenses, the platform includes tools for monitoring usage, setting spending limits, and receiving alerts. Enterprises can also benefit from volume discounts and tailored pricing arrangements, making it easier to align costs with business needs.
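The combination of token-based pricing, spending limits, and alerts can be illustrated with a small budget guard. This is a sketch under stated assumptions, not OpenAI's billing API: the `BudgetMonitor` class, the price per 1,000 tokens, and the 80% alert threshold are all hypothetical.

```python
class BudgetMonitor:
    """Tracks spend against a monthly limit (hypothetical helper)."""

    def __init__(self, monthly_limit_usd: float, alert_ratio: float = 0.8):
        self.limit = monthly_limit_usd
        self.alert_ratio = alert_ratio
        self.spent = 0.0

    def record(self, tokens: int, price_per_1k_usd: float) -> str:
        """Register a request and return 'ok', 'alert', or 'blocked'."""
        cost = tokens / 1000 * price_per_1k_usd
        if self.spent + cost > self.limit:
            # Refuse the request instead of exceeding the hard limit.
            return "blocked"
        self.spent += cost
        if self.spent >= self.alert_ratio * self.limit:
            return "alert"
        return "ok"


monitor = BudgetMonitor(monthly_limit_usd=10.0)
print(monitor.record(500_000, 0.01))  # about $5.00 spent so far
print(monitor.record(350_000, 0.01))  # about $8.50, past the 80% threshold
print(monitor.record(200_000, 0.01))  # would exceed $10.00, so it is refused
```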

OpenAI actively supports its developer community by offering comprehensive documentation, practical code examples, and a wealth of educational resources. The company engages with developers through regular events and maintains vibrant community forums where users exchange tips and address challenges together. Beyond this, OpenAI promotes learning and experimentation through partnerships with universities and coding bootcamps, as well as an API credits program designed for students and researchers. These initiatives aim to inspire innovation and broaden access to AI tools.

Google has established itself as a powerhouse in generative AI by combining cutting-edge research with immense computational resources. Its solutions cater to both creative and enterprise needs, seamlessly integrating into its existing product ecosystem. Developers gain access to a suite of tools designed to build and customize AI applications, supported by advancements in model variety, scalable infrastructure, streamlined workflows, and clear pricing structures.

Google’s generative AI lineup includes the Gemini family of models, which are designed for advanced text generation. Beyond text, the company offers Imagen for producing high-quality images and MusicLM for generating audio content. Its earlier PaLM 2 model powered the Bard chatbot, since rebranded as Gemini, and enhanced features across Google Workspace, showcasing its versatility across different applications.

Google Cloud’s Vertex AI platform serves as the backbone for enterprise-level generative AI projects. It provides managed infrastructure for training, deploying, and scaling AI models, all while ensuring robust security and compliance. Businesses can utilize pre-trained models via APIs or fine-tune them with their own data. With auto-scaling built in, the platform adjusts resources based on demand, and Google’s global cloud network ensures fast, reliable access across multiple regions.

Google’s AI Platform Pipelines simplify the creation of machine learning workflows that incorporate generative AI. From data preprocessing and model training to evaluation and deployment, the platform offers tools like visual pipeline builders and code configurations to streamline the process. Integration with Google Workspace further enhances productivity by embedding AI-powered tools into everyday tasks, such as drafting documents or designing presentations.

Google adopts a usage-based pricing model for its generative AI services, offering clarity through detailed billing dashboards. These dashboards break down costs by model, usage, and time period. Additionally, discounts and committed-use contracts are available to help businesses optimize expenses for consistent workloads.

Beyond its technical offerings, Google fosters a thriving developer community. It provides extensive documentation, tutorials, and educational resources to guide users, whether they’re integrating APIs or fine-tuning models. The company also shares research papers and case studies to demonstrate practical applications of generative AI. To encourage innovation, Google hosts hackathons and developer conferences, creating opportunities for collaboration and real-world problem-solving.

Anthropic has carved a niche by emphasizing AI safety, accountability, and enterprise-grade solutions. With a focus on secure and scalable AI, the company aligns its advancements with the needs of industries requiring dependable and ethical AI for critical operations. The Claude platform, Anthropic's flagship offering, is particularly notable for its sophisticated reasoning capabilities and ability to handle extensive context, making it a strong choice for organizations managing complex workflows.

At the heart of Anthropic's offerings is the Claude family of models, with the Claude 3.7 Sonnet model leading the way. This model combines quick, responsive reasoning with the ability to perform deep, analytical tasks. Its hybrid reasoning approach ensures flexibility, while its support for one of the largest context windows available makes it ideal for processing lengthy documents. This design reflects Anthropic's commitment to integrating AI seamlessly into existing business operations.

The Claude platform is built to excel in automating workflows through its robust API integration. Businesses can embed Claude into their products to streamline tasks such as large-scale text processing and advanced question answering. The platform's hybrid reasoning framework is particularly effective in addressing both real-time operational needs and more intricate analytical challenges, all within a unified system. This approach simplifies enterprise adoption and enhances productivity.

Anthropic has demonstrated its ability to scale for enterprise needs through key partnerships. In November 2024, AWS invested $4 billion in Anthropic and became its primary training partner, with Anthropic using AWS Trainium and Inferentia chips to develop and deploy future models. Google followed with a $1 billion investment in January 2025 and offers Claude through its Vertex AI platform. This integration enhances fine-tuning capabilities for foundational models, further solidifying Anthropic's position as a leader in enterprise AI.

Beyond its technical achievements, Anthropic supports innovation by fostering a developer-friendly ecosystem. Through its API-driven initiatives, developers and enterprises can create custom applications powered by Claude's advanced conversational AI. Additionally, Anthropic's dedication to AI safety research makes it a trusted choice for industries and government sectors that prioritize secure and ethical AI solutions. This dual emphasis on performance and safety underscores Anthropic's role in shaping industry benchmarks.

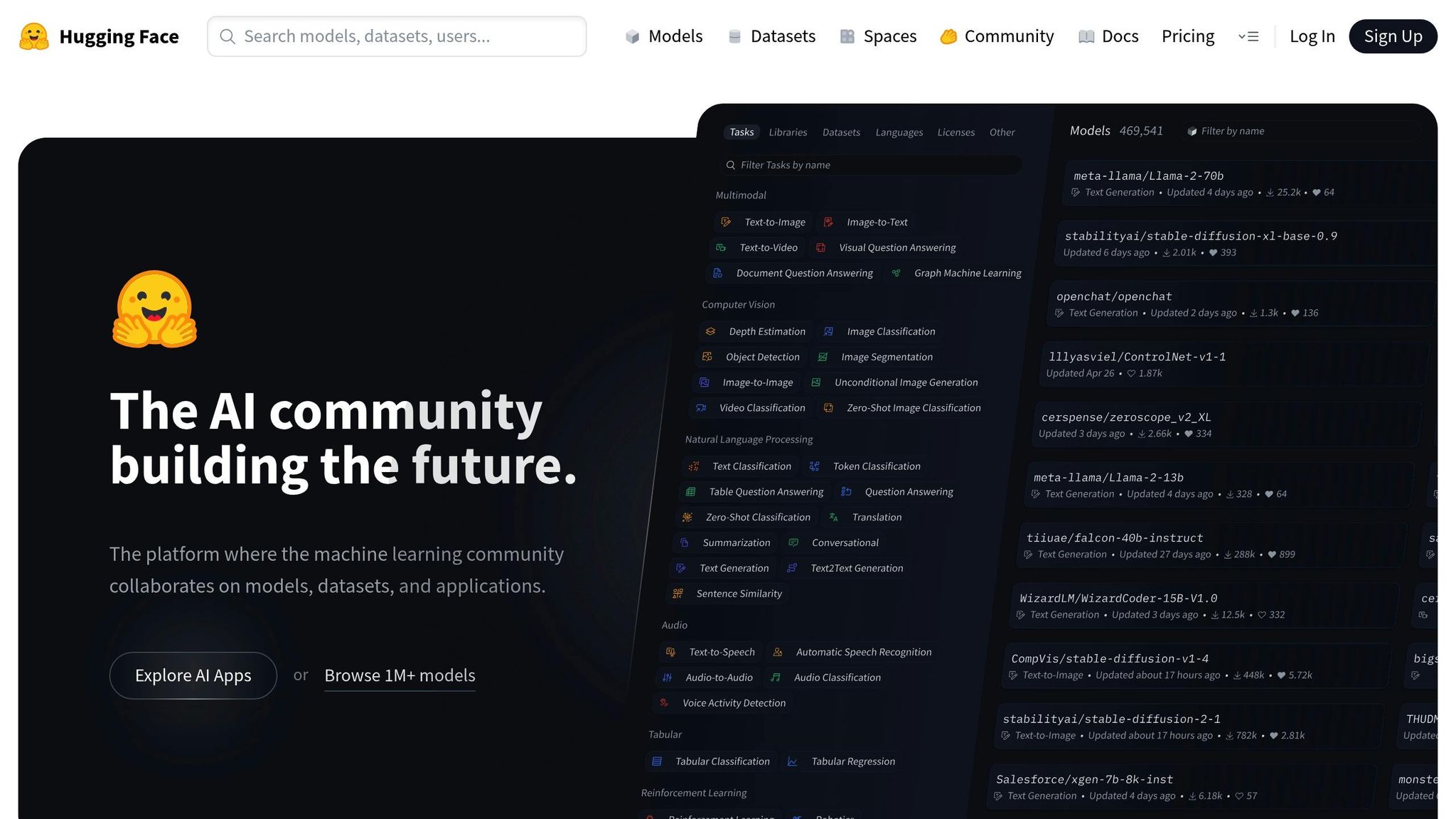

Hugging Face has transformed how developers interact with generative AI. Established in 2016 and based in Brooklyn, New York, the company has created an open, GitHub-style platform tailored for AI development. With $395.2 million in funding and a valuation of $4.5 billion as of August 2023, Hugging Face stands out as a strong alternative to more restricted AI ecosystems. This robust financial backing and innovative approach fuel its wide-ranging model offerings.

The platform boasts an impressive collection of over 2.1 million models and 450,000+ datasets, making it one of the most comprehensive repositories available. Among its highlights is BLOOM, an open-source model capable of supporting 46 human languages and 13 programming languages. This commitment to multilingual support underscores Hugging Face’s dedication to inclusivity and broad accessibility. Its vast repository caters to a wide spectrum of AI applications, providing tools for everything from natural language processing to computer vision.

Hugging Face equips developers with tools to seamlessly integrate AI models into applications. These tools lower the barriers to experimentation and enable rapid deployment of cutting-edge generative AI technologies, making it easier for teams to innovate and iterate.

At its core, Hugging Face champions an open-source philosophy, fostering collaboration and knowledge sharing. Developers can customize and refine models to suit their unique needs. A prime example of this ethos came in 2023, when Hugging Face teamed up with ServiceNow Research to release StarCoder, a free code-generating model designed to rival offerings from major tech players. This initiative highlights the platform’s focus on empowering developers and driving open innovation.

Each generative AI company offers a unique set of strengths and faces its own challenges in a fast-changing market. Understanding these trade-offs can help organizations choose the platform that aligns best with their goals.

| Company | Advantages | Disadvantages |
|---|---|---|
| Prompts.ai | • Unified platform with access to 35+ top models (e.g., GPT-5, Claude, Gemini) | • Newer player in the market compared to established tech giants |
| | • Potential to cut AI software costs by up to 98% | • Dependence on third-party models for core functionality |
| | • Enterprise-grade security with built-in governance tools | • Requires adjustment when transitioning from single-model workflows |
| | • Pay-as-you-go TOKN credits, eliminating recurring fees | |
| | • Strong community support via the Prompt Engineer Certification program | |
| OpenAI | • Market leader with popular products like ChatGPT | • Higher costs for large-scale enterprise use |
| | • Advanced models such as GPT-4 and DALL-E | • API rate limits during peak usage |
| | • Comprehensive APIs supporting a robust developer ecosystem | • Data privacy concerns for sensitive information |
| | • Regular updates showcasing cutting-edge advancements | • Limited customization for specialized use cases |
| Google | • Extensive infrastructure and unparalleled computing power | • Offerings spread across multiple product lines, leading to fragmentation |
| | • Smooth integration with Google Workspace and Cloud services | • Slower adoption in enterprise settings compared to consumer-facing products |
| | • Deep research capabilities, strengthened by the DeepMind acquisition | • Privacy concerns tied to data collection practices |
| | • Free access to tools like Bard for basic use | • Complex pricing structures |
| Anthropic | • Focus on safety-first principles with Constitutional AI methodologies | • Fewer model options compared to larger competitors |
| | • Transparent research and a strong commitment to responsible AI | • Premium safety features come with higher costs |
| | • High-quality outputs designed to minimize harmful content | • Smaller ecosystem of third-party integrations |
| | • Strong emphasis on enterprise security and compliance | • Limited market validation as a newer entrant |
| Hugging Face | • Large repository of community-contributed models and datasets | • Quality can vary across community-generated models |
| | • Open-source approach allowing for extensive customization | • Requires in-house expertise to manage technical complexity |
| | • Support for multiple languages, as seen with the BLOOM model | • Less enterprise support compared to fully commercial platforms |
| | • Developer-friendly tools that encourage community-driven innovation | • High resource demands for hosting and running large-scale models |

These comparisons underline the key factors enterprises should evaluate when selecting a generative AI platform. Cost is often a deciding factor. For instance, Prompts.ai’s consolidated platform and transparent pricing can significantly lower expenses, while others like OpenAI may carry higher costs for enterprise-scale operations.

Security and compliance are equally critical. Many enterprise customers require features like audit trails, data residency controls, and governance frameworks. Platforms integrating these elements can justify their premium pricing, while open-source options may demand additional internal resources to ensure security.

Another point of consideration is the balance between community-driven innovation and commercial support. Open platforms like Hugging Face provide access to cutting-edge research but often require in-house expertise to manage. In contrast, commercial solutions deliver structured support and detailed documentation but may limit the flexibility to customize.

Finally, integration complexity plays a pivotal role. Some providers excel as standalone solutions, while others prioritize seamless integration into existing enterprise systems. The right choice depends on how well a platform aligns with an organization’s strategic priorities and technical ecosystem.
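One way to turn the four factors above (cost, security, support, and integration) into a comparable number is a weighted decision matrix. The sketch below is illustrative only: the weights, the 1-to-5 scores, and the anonymous "Platform A" and "Platform B" candidates are placeholders that each organization would replace with its own assessments.

```python
from typing import Dict, List

# Hypothetical weights; adjust them to reflect your own priorities.
WEIGHTS = {"cost": 0.3, "security": 0.3, "support": 0.2, "integration": 0.2}


def weighted_score(scores: Dict[str, float], weights: Dict[str, float] = WEIGHTS) -> float:
    """Weighted average of per-criterion scores (for example on a 1-5 scale)."""
    if set(scores) != set(weights):
        raise ValueError("scores and weights must cover the same criteria")
    total_weight = sum(weights.values())
    return sum(scores[name] * weight for name, weight in weights.items()) / total_weight


def rank(candidates: Dict[str, Dict[str, float]]) -> List[str]:
    """Candidate names ordered from highest to lowest weighted score."""
    return sorted(candidates, key=lambda name: weighted_score(candidates[name]), reverse=True)


# Placeholder scores, not an assessment of any real vendor.
candidates = {
    "Platform A": {"cost": 4, "security": 3, "support": 2, "integration": 3},
    "Platform B": {"cost": 2, "security": 5, "support": 4, "integration": 4},
}
for name in rank(candidates):
    print(name, round(weighted_score(candidates[name]), 2))
```

Changing the weights is the interesting part: a startup that puts most of the weight on cost can end up with a different ranking from a regulated enterprise that prioritizes security.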

The generative AI landscape is evolving with key players addressing diverse organizational demands. OpenAI maintains its edge through widespread adoption and developer-friendly APIs, while Google is making waves with its ambitious $75 billion AI investment aimed at strengthening infrastructure and enterprise solutions by 2025. Anthropic has carved out a niche in safety-focused AI, bolstered by AWS's substantial $4 billion investment in November 2024.

For enterprises seeking streamlined AI management, Prompts.ai offers a standout solution. By uniting over 35 leading models on a single platform, it provides enterprise-grade governance, real-time FinOps controls, and integrated compliance tools, enabling organizations to significantly reduce AI costs.

Developers have an array of options tailored to their needs. OpenAI’s robust API ecosystem and consistent updates make it an excellent choice for teams building AI-powered applications. Google’s Vertex AI shines with its ability to fine-tune proprietary models while seamlessly integrating with Google Cloud infrastructure. Meanwhile, Hugging Face caters to those prioritizing open-source flexibility, offering access to community-driven models and datasets, though it requires substantial in-house expertise.

Startups, often balancing cost and capability, face unique challenges. Hugging Face’s open-source approach democratizes AI development but demands more internal resources. In contrast, Prompts.ai simplifies workflows with its pay-as-you-go TOKN credits, eliminating recurring fees while granting access to premium models and advanced features - delivering high-end capabilities without the typical high costs.

As the generative AI market continues its rapid growth, investments and new opportunities abound. The emergence of autonomous AI is reshaping business functions across industries.

For organizations, the choice of platform should align with strategic goals, addressing security, integration, and scalability needs. Platforms offering strong governance and access to multiple models, as demonstrated by solutions like Prompts.ai, deliver a seamless and integrated experience that can often outperform reliance on a single provider’s ecosystem.

The generative AI market is expanding at an extraordinary pace, driven by its widespread adoption across various industries and its capacity to reshape workflows and content creation. Companies are tapping into generative AI to improve efficiency, spark new ideas, and address shifting industry needs.

Several elements are fueling this growth: rapid advancements in AI technologies, increased funding for AI research, and a growing appetite for tools that merge creativity with automation. These developments are establishing generative AI as a game-changer in fields such as healthcare, entertainment, marketing, and beyond.
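To put the pace of growth in perspective, the market figures quoted at the top of this article ($25.6 billion in 2024 and a projected $1.3 trillion by 2032) can be converted into an implied compound annual growth rate. The short calculation below uses the standard CAGR formula; the `cagr` helper name is just a convenience for this example.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures as quoted in this article: $25.6B (2024) to $1.3T (2032).
implied = cagr(25.6e9, 1.3e12, 2032 - 2024)
print(f"Implied annual growth: {implied:.1%}")
```

Under those figures the market would need to grow by roughly 63% every year for eight years, which shows how aggressive the 2032 projection is.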

Prompts.ai provides businesses with access to more than 35 large language models, allowing them to directly compare options and select the best fit for their specific needs. This all-in-one platform streamlines prompt management, enhances the quality of outputs, and gives teams complete control over their workflows.

With real-time insights into usage, costs, and ROI, organizations can make informed, data-driven decisions to cut expenses and boost efficiency. By bringing tools and insights together in a single, unified space, Prompts.ai makes it simple for enterprises to fine-tune their AI-powered processes and achieve better results.

When choosing a generative AI platform, businesses need to focus on a few key factors. Security should be at the top of the list to safeguard sensitive data and ensure compliance with relevant regulations. Next, assess the platform’s compatibility with your current tools and workflows. This helps avoid unnecessary disruptions and keeps operations running smoothly. Finally, pay close attention to cost transparency - opt for platforms with clear pricing models to steer clear of hidden fees and ensure the solution aligns with your budget.