AI orchestration platforms are essential for managing complex workflows involving multiple models, diverse data sources, and intricate processes. They help businesses scale AI operations, reduce costs, and ensure compliance with regulations, especially in sectors like finance and healthcare. Below is a quick overview of the top tools covered:

| Platform | Best For | Key Feature | Limitation | Pricing Model |

|---|---|---|---|---|

| Prompts.ai | Enterprise AI workflows | 35+ LLMs, cost transparency | Complex for small teams | Pay-as-you-go TOKN |

| Domo | Business intelligence | Real-time analytics, dashboards | Limited AI-specific features | Subscription-based |

| Apache Airflow | Complex data pipelines | Community plugins, scalability | Steep learning curve | Open-source |

| Kubiya AI | DevOps and infrastructure | Multi-agent, cloud integration | DevOps-focused | Subscription-based |

| IBM watsonx Orchestrate | Large enterprises | Compliance, integration | High complexity | Enterprise licensing |

| n8n | Small teams, visual workflows | Open-source, low-cost | Limited scalability | Freemium |

| Dagster | Data engineering | Asset-centric, lineage tracking | Newer ecosystem | Open-source |

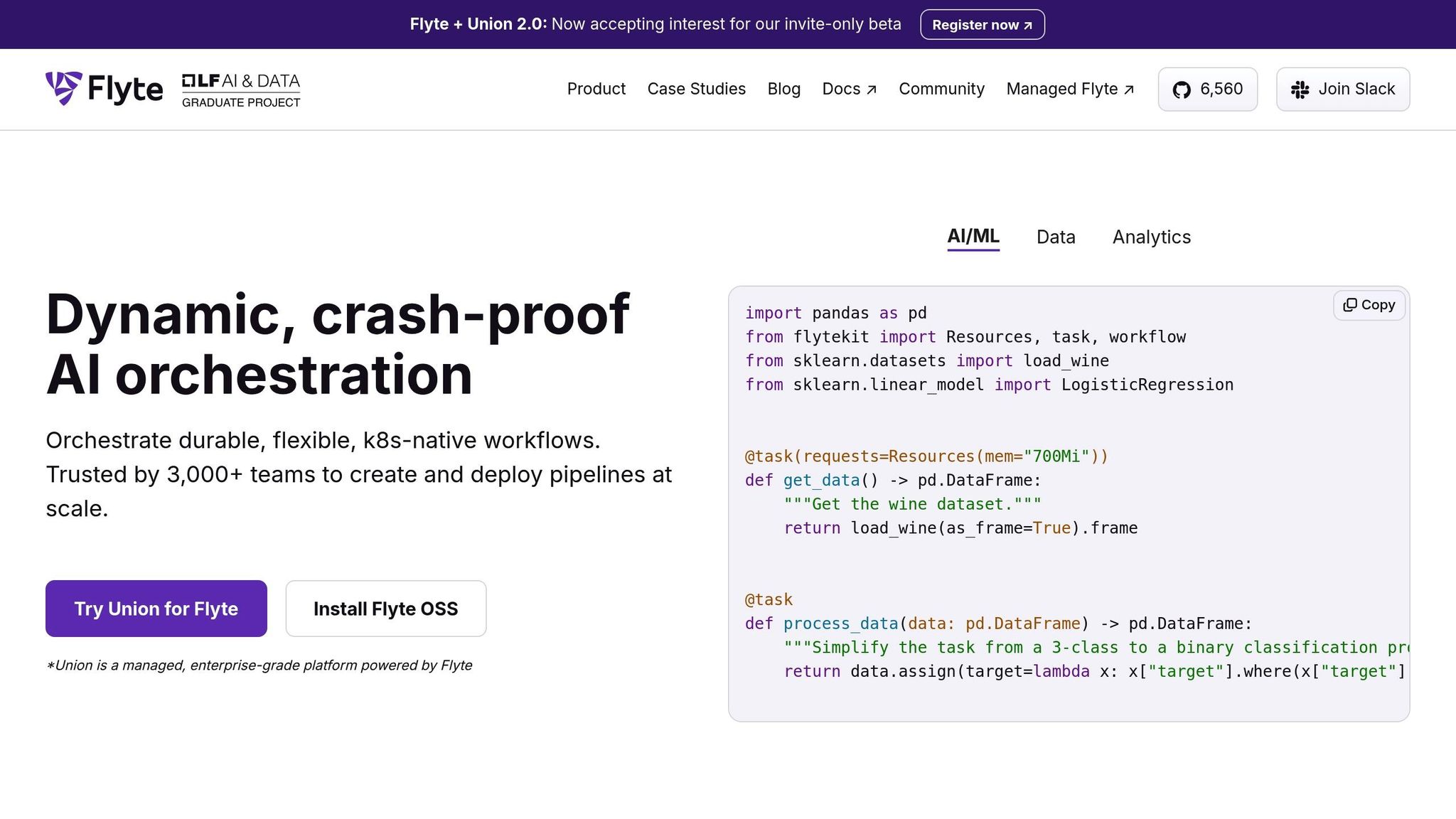

| Flyte | Scalable ML workflows | Kubernetes-native, reproducible | Infrastructure complexity | Open-source |

| Kedro | Data science workflows | Modular pipelines, Python-based | Requires Python expertise | Open-source |

These platforms address different needs, from enterprise-grade governance to open-source flexibility. Choose based on your team's size, technical expertise, and workflow complexity.

Prompts.ai is an AI orchestration platform that brings together over 35 advanced AI models under one roof. Designed to simplify prompt engineering and LLM orchestration, it’s particularly useful for organizations aiming to streamline their AI-driven workflows.

By offering a centralized solution, Prompts.ai tackles a common challenge for businesses - managing a sprawling collection of AI tools. Instead of juggling multiple subscriptions and interfaces, teams can access models like GPT-4, Claude, LLaMA, and Gemini through a single, intuitive dashboard. This consolidation can cut AI costs by as much as 98% while replacing fragmented tools with a cohesive system. Below, we explore the platform’s standout features.

Prompts.ai excels at bridging various AI models through a single interface. With over 35 models integrated, users can effortlessly compare LLMs side-by-side and switch between them based on workflow needs. The platform removes technical barriers, enabling seamless multi-model deployments. For instance, teams can use one model for generating content and another for refining it, all within a unified process.
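
To make the generate-then-refine idea concrete, here is a minimal sketch in plain Python. It does not use any Prompts.ai API; `draft_model`, `refine_model`, and `run_chain` are hypothetical stand-ins for real model clients and only show how one model's output can feed the next stage.

```python
from typing import Callable, List

# Hypothetical stand-in type for a model client: takes text, returns text.
ModelFn = Callable[[str], str]

def draft_model(prompt: str) -> str:
    # Placeholder for a "generation" model call.
    return f"Draft about {prompt}"

def refine_model(text: str) -> str:
    # Placeholder for a "refinement" model call.
    return text.strip().rstrip(".") + " (refined)."

def run_chain(prompt: str, stages: List[ModelFn]) -> str:
    """Pass the output of each stage to the next one, in order."""
    result = prompt
    for stage in stages:
        result = stage(result)
    return result
```

Swapping a model then means replacing one entry in the `stages` list rather than rewriting the workflow.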

The platform is built to handle the growing demands of enterprise AI. Business plans include unlimited workspaces and collaborators, so organizations can add teams and projects without running into seat limits. Usage-based pricing replaces fixed costs with on-demand spending, which keeps costs manageable as usage grows. Prompts.ai also automates workflows, turning one-off tasks into repeatable, AI-driven processes.

Prompts.ai prioritizes governance, offering features that meet the strict compliance standards of industries like finance and healthcare. The platform adheres to frameworks such as SOC 2 Type II, HIPAA, and GDPR, ensuring data security and privacy. Through its dedicated Trust Center (https://trust.prompts.ai/), users can monitor security in real-time, track compliance policies, and maintain detailed audit trails. Role-based access control ensures users only access resources relevant to their roles, simplifying compliance reporting for regulated sectors.

One of Prompts.ai’s most valuable features is its detailed cost management system. Organizations can track spending by workflow, department, or individual user through comprehensive analytics and dashboards. Its TOKN credit system ensures predictable, usage-based pricing, while features like TOKN pooling and storage pooling optimize resource distribution across teams. These tools also provide insights into model performance and cost efficiency, helping organizations make smarter financial decisions.
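
As a rough illustration of tracking spend by workflow, department, or user, the sketch below aggregates usage records along any of those dimensions. The `UsageRecord` fields and the `credits` unit are assumptions made for the example, not the platform's actual data model.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable

@dataclass(frozen=True)
class UsageRecord:
    workflow: str
    department: str
    user: str
    credits: float  # hypothetical unit standing in for TOKN credits

def spend_by(records: Iterable[UsageRecord], dimension: str) -> Dict[str, float]:
    """Sum credits grouped by 'workflow', 'department', or 'user'."""
    totals: Dict[str, float] = defaultdict(float)
    for record in records:
        totals[getattr(record, dimension)] += record.credits
    return dict(totals)
```

The same records can back several dashboards, since the grouping dimension is just a parameter.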

Prompts.ai is built with enterprise-grade security measures to safeguard sensitive workflows. Data is encrypted both in transit and at rest, and secure API authentication aligns with enterprise security requirements. The platform supports single sign-on (SSO) and OAuth integration, making it easy to integrate with existing systems. Continuous control monitoring, powered by Vanta, enhances security, and the SOC 2 Type II audit process, active as of June 19, 2025, underscores its commitment to protection. Additional safeguards like network segmentation and vulnerability scanning add extra layers of security for critical AI operations.

Domo stands out as a cloud-based business intelligence platform designed to simplify AI workflows while offering advanced tools for data visualization and workflow management. By uniting real-time analytics and automation in one platform, Domo transforms how organizations handle data-driven AI processes, providing a centralized hub where teams can visualize, analyze, and streamline even the most complex workflows.

One of Domo's key strengths is its ability to unify data from multiple sources into seamless AI workflows. With integration capabilities spanning over 1,000 data connectors, the platform allows organizations to pull real-time data from databases, cloud services, and third-party applications. This is critical for workflows that rely on continuous data streams and immediate processing. Teams can monitor AI model performance, assess data quality, and quickly address bottlenecks, ensuring workflows remain efficient and adaptable to changing conditions.

Domo simplifies the visualization of complex AI workflow data through its intuitive dashboard features. With drag-and-drop tools, users can create custom visualizations to track AI model outputs, resource usage, and performance metrics. These dashboards are designed to be accessible to both technical and non-technical stakeholders, enabling better decision-making across teams. By presenting data in a clear and actionable format, Domo makes it easier to identify areas for improvement and resolve issues quickly.

Built on a cloud-native architecture, Domo is well-suited for enterprise-scale AI operations. It can manage large data volumes while maintaining high performance, making it ideal for organizations running multiple AI models simultaneously. Collaboration tools are integrated directly into the platform, allowing teams to share workflows, annotations, and insights across departments. Role-based permissions add an extra layer of security, ensuring sensitive workflows are protected while still enabling effective teamwork.

Domo also helps organizations manage costs and optimize resources. By tracking resource consumption and workflow efficiency, the platform identifies cost-heavy processes and suggests ways to streamline operations. Its ability to consolidate data sources reduces the need for additional analytics tools, potentially lowering infrastructure expenses tied to AI operations.

Up next, we’ll explore how Apache Airflow brings an open-source approach to managing AI workflows, offering yet another perspective on orchestration tools.

Apache Airflow has become a go-to open-source platform for orchestrating complex workflows. It enables organizations to design, schedule, and monitor data pipelines with precision. Its Directed Acyclic Graph (DAG) structure ensures tasks are executed in a specific sequence, covering everything from data preprocessing and model training to deployment and monitoring. Being open-source, it offers teams the flexibility to tailor orchestration processes while maintaining complete transparency into operations.
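
The core idea behind a DAG can be shown without Airflow itself. The sketch below uses Python's standard `graphlib` module to derive a valid execution order from task dependencies and to reject cyclic graphs. The task names are hypothetical, and this is not Airflow's API, only the ordering principle it relies on.

```python
from graphlib import CycleError, TopologicalSorter

# Each key maps to the set of tasks it depends on (hypothetical task names).
pipeline = {
    "preprocess": set(),
    "train": {"preprocess"},
    "evaluate": {"train"},
    "deploy": {"evaluate"},
}

def execution_order(dag):
    """Return tasks in an order that respects every dependency."""
    return list(TopologicalSorter(dag).static_order())

def is_acyclic(dag):
    """A workflow graph is only schedulable if it has no cycles."""
    try:
        execution_order(dag)
    except CycleError:
        return False
    return True
```

Because the graph is acyclic, every task has a well-defined point at which all of its upstream work is complete.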

Airflow excels at connecting various AI frameworks through its built-in operators and hooks. It supports integration with widely used machine learning tools like TensorFlow, PyTorch, and scikit-learn, as well as cloud platforms such as AWS, Google Cloud, and Microsoft Azure.

This versatility is especially valuable for organizations managing hybrid AI environments. Airflow simplifies workflows that involve moving data between on-premises systems and cloud services, initiating model training across different platforms, and coordinating inference pipelines using multiple technologies. For example, the KubernetesPodOperator makes it possible to run containerized tasks on Kubernetes clusters while keeping centralized control through Airflow.

The platform’s XCom feature facilitates data sharing between tasks, allowing seamless transfer of model artifacts, performance metrics, and configuration details across tools and frameworks.

Apache Airflow scales from small single-machine setups to large distributed systems managing thousands of tasks. The CeleryExecutor supports horizontal scaling by distributing tasks across multiple worker nodes, while the KubernetesExecutor launches each task in its own Kubernetes pod, so resources are requested per task and released when the task finishes.

For resource-intensive tasks, Airflow’s compatibility with container orchestration platforms is a game-changer. Workflows can scale GPU-enabled workers for model training while handling data preprocessing and post-processing with standard CPU workers.

Its ability to execute tasks in parallel is another major advantage. This feature is particularly useful for running A/B tests or conducting hyperparameter optimization experiments, where multiple models need to be processed simultaneously.
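
A small sketch of the parallel-experiment pattern, using only the standard library: several candidate settings are scored concurrently and the best one is kept. The `score` function is a toy objective standing in for a real train-and-evaluate run, and nothing here is Airflow-specific.

```python
from concurrent.futures import ThreadPoolExecutor

def score(learning_rate: float) -> float:
    # Toy objective that peaks at 0.1; a real task would train and evaluate a model.
    return -(learning_rate - 0.1) ** 2

def best_learning_rate(candidates):
    """Score all candidates in parallel and return the best-scoring one."""
    with ThreadPoolExecutor(max_workers=4) as pool:
        scores = list(pool.map(score, candidates))  # map preserves input order
    best_index = max(range(len(candidates)), key=scores.__getitem__)
    return candidates[best_index]
```

In an orchestrator, each call to `score` would typically be its own task, with a final task that compares the results.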

Airflow provides robust tools for governance, including detailed audit logging and Role-Based Access Control (RBAC). These features help track execution details and enforce strict permissions, ensuring sensitive workflows and data are handled securely.
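
The combination of role-based permissions and audit logging can be sketched in a few lines. The role names and permission sets below are hypothetical and do not mirror Airflow's built-in roles. The point is that every authorization decision, allowed or denied, leaves a record.

```python
from datetime import datetime, timezone

# Hypothetical roles and the actions each one may perform.
ROLE_PERMISSIONS = {
    "viewer": {"read"},
    "operator": {"read", "trigger"},
    "admin": {"read", "trigger", "edit"},
}

audit_log = []

def authorize(user: str, role: str, action: str) -> bool:
    """Check a permission and record the decision in the audit log."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "action": action,
        "allowed": allowed,
    })
    return allowed
```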

For organizations in regulated sectors, Airflow’s comprehensive logging of task outputs, error messages, and resource usage creates a reliable documentation trail, supporting compliance with industry standards.

Security is a core focus for Apache Airflow. It encrypts stored connection passwords and variables with a Fernet key and supports SSL/TLS for external connections. The platform integrates with enterprise authentication systems like LDAP, OAuth, and SAML, enabling organizations to use their existing identity management solutions.

Airflow also includes a secure connection management system for storing credentials and API keys required to access external services. These credentials can be managed via environment variables or external tools like HashiCorp Vault and AWS Secrets Manager. Additionally, running Airflow in containerized environments with restricted network access ensures sensitive data and proprietary models remain secure.

Next, we’ll dive into how Kubiya AI uses a conversational approach to streamline AI workflow orchestration.

Kubiya AI brings autonomous operational AI to life by executing complex tasks with built-in safeguards and contextual business logic. It enhances orchestration by combining autonomous decision-making with seamless integration into cloud and DevOps workflows.

Kubiya AI uses a modular, multi-agent framework to deploy specialized agents tailored for tools like Terraform, Kubernetes, GitHub, and CI/CD pipelines. This setup allows smooth coordination of intricate workflows while integrating effortlessly with leading cloud providers and DevOps platforms.

These agents tap into real-time infrastructure data, APIs, logs, and cloud resources, enabling decisions based on the full system's state rather than isolated data points. This holistic visibility ensures greater reliability and precision when orchestrating AI workflows across various environments and technologies.

The platform also guarantees deterministic execution, meaning workflows consistently produce the same results when given identical inputs. This predictability is essential for safe automation, especially in sensitive infrastructure and DevOps settings.

Kubiya AI is built to scale effortlessly across teams and projects. Its Kubernetes-based architecture supports enterprise-grade deployments, meeting the demands of production environments.

The platform enables multi-environment operations across Kubernetes clusters and cloud providers like AWS, Azure, Google Cloud, and DigitalOcean, covering everything from development to production. Organizations can choose to run Kubiya on its serverless infrastructure or deploy it on their own systems, offering flexibility for varying operational needs. This dual deployment option allows teams to start small and expand as workflows grow more complex.

Even as systems scale, Kubiya's design ensures consistent performance by adhering to engineering principles that prevent slowdowns under heavier workloads.

Kubiya AI integrates guardrails, context, and real business logic directly into its operations, ensuring that AI-driven decisions align with organizational policies and compliance standards. This built-in framework simplifies the process of tracking decision-making and demonstrating adherence to regulatory requirements.

Kubiya AI is well-suited for AIOps use cases focused on infrastructure cost optimization. It provides intelligent resource scaling without relying on predefined thresholds, automates resource cleanup, and supports context-aware workload placement. These features help organizations efficiently manage their AI infrastructure spending.

The platform also enables rightsizing and policy-driven cost governance, ensuring resources are used effectively while maintaining budget control. These cost-saving measures work hand in hand with Kubiya AI's robust security framework, which is detailed below.

Security is a cornerstone of Kubiya AI's architecture. The platform adopts a security-first design to address common vulnerabilities often found in traditional AI agent systems. It includes self-healing integrations that quickly recover from security issues, minimizing downtime and risk.

With built-in security controls, Kubiya ensures consistent protection across development, staging, and production environments. Sensitive data and proprietary models remain secure, thanks to deterministic execution that reduces unpredictable behaviors and potential vulnerabilities.

Next, we’ll take a closer look at how IBM watsonx Orchestrate leverages enterprise-grade AI to optimize business workflows.

IBM watsonx Orchestrate simplifies AI workflow automation by uniting different AI models, maintaining strong security measures, and scaling operations to meet the demands of large enterprises. Built on IBM's enterprise-focused AI foundation, this platform centralizes the management of AI workflows by automating model integration, ensuring compliance with enterprise standards, and optimizing resources for scalable deployments. It tackles the challenges U.S. enterprises face when moving from isolated AI experiments to fully operational, production-level systems.

IBM watsonx Orchestrate stands out for its ability to connect various AI models and business applications through extensive integration options. It seamlessly integrates with popular enterprise tools like Salesforce, ServiceNow, and Microsoft Office 365, alongside IBM's proprietary AI models and third-party machine learning frameworks. This connectivity allows businesses to create unified workflows across multiple systems without needing extensive custom development.

The platform’s skill-based design enables users to combine pre-built automation tools with custom AI models, building workflows capable of managing even the most complex business processes. Teams can coordinate data flows between different AI models, trigger actions based on model outputs, and ensure consistency across diverse technology ecosystems.

Designed for large-scale operations, watsonx Orchestrate supports thousands of users and workflows simultaneously. Its cloud-native infrastructure adjusts resource allocation automatically based on demand, maintaining reliable performance even during peak usage. The platform is built to handle intricate, multi-step workflows that involve numerous AI models and business systems, all without compromising speed or efficiency.

Organizations can roll out watsonx Orchestrate across multiple departments or business units while maintaining centralized governance. This ensures consistent policies and procedures are followed. With its ability to process extensive data volumes and coordinate numerous AI models at once, the platform is well-equipped for enterprise-wide AI initiatives.

IBM watsonx Orchestrate includes robust governance tools tailored for industries with strict regulations. Features like detailed audit trails, role-based access controls, and policy enforcement mechanisms help organizations comply with regulations such as SOX, GDPR, and other industry-specific standards.

The platform’s built-in governance workflows ensure that AI models and automated processes align with organizational policies. Comprehensive logging capabilities provide the documentation needed for regulatory reporting. Additionally, its integration with IBM’s broader governance framework offers enhanced oversight for sensitive AI operations.

Security is a core element of watsonx Orchestrate, with enterprise-grade encryption safeguarding data both in transit and at rest. The platform integrates with existing identity management systems like LDAP, SAML, and OAuth, allowing organizations to maintain their established security protocols.

IBM employs a zero-trust security model, ensuring that every interaction between AI models, data sources, and business applications is both authenticated and authorized. Regular security assessments and compliance certifications provide added assurance for organizations managing sensitive data through AI workflows.

watsonx Orchestrate includes tools to help organizations manage their AI-related expenses effectively. The platform offers detailed analytics on resource usage, workflow performance, and model efficiency, enabling data-driven decisions about resource allocation.

By automating routine tasks and enhancing workflow efficiency, the platform helps reduce operational costs while boosting productivity. Its usage-based pricing model ensures cost-effectiveness, allowing organizations to scale their AI initiatives while paying only for the resources they use.

Next, n8n takes a different approach to workflow automation with its open-source flexibility.

n8n offers an open-source platform tailored for AI workflow automation, giving organizations the ability to maintain complete control over their infrastructure and data. This visual workflow tool empowers teams to design intricate AI systems through an intuitive interface while retaining flexibility in deployment. Unlike many enterprise-oriented platforms, n8n allows deployment on-premises or in any cloud environment, making it a compelling choice for organizations with unique security needs or tight budgets. Let’s explore how n8n facilitates seamless AI model integration and supports diverse applications.

One of n8n's standout features is its ability to connect a wide range of AI models and services, thanks to its library of over 400 pre-built integrations. The platform supports connections to leading services like OpenAI, Hugging Face, Google Cloud AI, and AWS Machine Learning, while also enabling custom API integrations for proprietary models. This ensures compatibility with established industry standards for interoperability.

n8n's visual workflow builder makes it easy to link multiple AI models into a single workflow. Users can combine tools for natural language processing, computer vision, and predictive analytics, routing data between models, transforming outputs, and triggering actions - all without needing to write complex integration code.

Additionally, n8n bridges AI with traditional business tools like Slack, Google Sheets, Salesforce, and hundreds of other applications. This integration capability enables seamless automation across an organization’s technology ecosystem, from data gathering to actionable outcomes.

n8n is built for scalability, using a queue-based system to process tasks asynchronously and distribute workloads efficiently. Teams can scale horizontally by adding more worker nodes, ensuring the platform meets the needs of organizations both large and small.
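
The queue-and-workers pattern described here can be illustrated with Python's standard library. This is a conceptual sketch, not n8n's implementation: jobs go onto a shared queue, a configurable number of workers pull from it, and adding workers increases throughput without changing the jobs themselves.

```python
import queue
import threading

def run_workers(jobs, handler, worker_count=3):
    """Process jobs from a shared queue with several worker threads."""
    tasks = queue.Queue()
    results = {}
    lock = threading.Lock()

    def worker():
        while True:
            try:
                job_id, payload = tasks.get_nowait()
            except queue.Empty:
                return  # queue drained, worker exits
            outcome = handler(payload)
            with lock:
                results[job_id] = outcome

    # Enqueue everything before starting workers so an empty queue means "done".
    for item in enumerate(jobs):
        tasks.put(item)
    threads = [threading.Thread(target=worker) for _ in range(worker_count)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return [results[i] for i in range(len(jobs))]
```

Results are keyed by job index, so the output order matches the input order regardless of which worker finished first.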

The platform supports webhook-based triggers capable of handling thousands of simultaneous requests, enabling real-time AI applications such as chatbots, content moderation, and automated decision-making. Its lightweight design ensures workflows use minimal resources, keeping operations cost-efficient even for complex AI tasks.

For advanced scalability, n8n integrates seamlessly with Kubernetes, allowing workflows to scale automatically based on demand. This ensures that as AI workflows grow, resources are used efficiently, maintaining consistent performance even during peak activity.

n8n’s open-source framework eliminates expensive licensing fees, providing a budget-friendly solution for organizations. Teams can run unlimited workflows and handle unlimited executions without worrying about per-transaction charges, offering predictable and manageable costs.

For organizations opting for self-hosted deployments, n8n enables workflows to run on existing infrastructure, removing the need for recurring cloud service fees. This setup can lead to substantial savings, particularly for high-volume operations compared to pay-as-you-go pricing models.

For those using n8n’s cloud services, pricing starts at $20 per month for small teams, with clear and straightforward tiers based on workflow executions. The absence of hidden fees or complicated pricing structures simplifies budget planning as AI initiatives expand.

Security is a top priority for n8n. The platform ensures end-to-end encryption for all workflow communications and supports deployment in air-gapped environments, catering to organizations with stringent security demands. For self-hosted deployments, sensitive data remains entirely within the organization’s infrastructure.

n8n includes strict access controls, allowing administrators to assign permissions based on user roles. Detailed audit logs track workflow changes and executions, offering a transparent record for security oversight.

To further secure external connections, n8n supports OAuth 2.0, API key authentication, and custom authentication methods, ensuring safe integration with AI services and data sources. Its modular design also allows organizations to implement additional security measures without sacrificing functionality.

Motion serves as an AI workflow orchestration tool, but its available documentation falls short in providing clear, detailed information about its primary features. Specifics about task management, model compatibility, scalability, cost clarity, and security measures remain vague or unverified. To gain a complete understanding, organizations are encouraged to review the vendor's official resources or reach out directly to their representatives. It’s also wise to cross-check this information with other platforms for a well-rounded comparison.

Dagster is a data orchestration platform designed to streamline AI workflows by treating models, datasets, and transformations as core assets. This approach ensures data quality, traceability, and efficient management of AI pipelines.

The platform excels in managing complex data workflows, making it a go-to solution for AI teams handling intricate processes like model training, validation, and deployment. Below, we’ll explore how Dagster’s features - ranging from interoperability to governance - make it a standout choice for orchestrating AI pipelines.

Dagster’s asset framework enables seamless integration of AI models, datasets, and tools, regardless of the underlying technology stack. It works effortlessly with popular frameworks like TensorFlow, PyTorch, and scikit-learn, while also supporting traditional data workflows, such as Apache Spark jobs and Kubernetes-based model serving.

The platform’s resource system allows teams to configure various execution environments, making it possible to run traditional data tasks alongside modern AI workloads within a unified framework. This flexibility ensures that all components of your workflow, from preprocessing to deployment, remain interconnected.

To prevent integration issues, Dagster’s type system validates data as it moves between components. This ensures compatibility, even when connecting models that use different frameworks or expect varying data formats.

Dagster scales from multi-process execution on a single machine to distributed execution, using either a Celery-based executor or Kubernetes for containerized, parallel processing.

For machine learning projects that involve massive datasets, Dagster’s partitioning system enables incremental data processing. This is particularly useful for handling historical data during model training or batch inference. The platform can automatically split tasks across time-based or custom partitions, ensuring efficient processing.

When models or data requirements change, Dagster’s backfill functionality allows teams to reprocess historical data while maintaining consistency. This capability is especially valuable for large-scale AI projects that require both precision and adaptability.
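
Time-based partitioning and backfills reduce to two simple operations: enumerating partition keys and working out which ones still need processing. The sketch below shows that logic in plain Python. The function names are illustrative and are not Dagster's API.

```python
from datetime import date, timedelta

def daily_partitions(start: date, end: date):
    """Return ISO date keys from start (inclusive) to end (exclusive)."""
    days = (end - start).days
    return [(start + timedelta(days=i)).isoformat() for i in range(days)]

def pending_partitions(all_keys, materialized):
    """Partitions that have not been processed yet, in order."""
    return [key for key in all_keys if key not in materialized]
```

A backfill is then a run over a chosen list of keys, for example every partition after a model or schema change.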

Dagster ensures full traceability and auditability through its comprehensive lineage tracking. Teams can easily trace data transformations and model dependencies, which is critical for compliance in regulated industries.
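
Lineage tracking is, at its simplest, a graph traversal over asset dependencies. The following sketch, with hypothetical asset names and a plain dictionary rather than Dagster's own metadata, collects everything a given asset depends on, directly or indirectly.

```python
def upstream_assets(asset, dependencies):
    """Return every asset that the given asset depends on, at any depth."""
    seen = set()
    stack = [asset]
    while stack:
        current = stack.pop()
        for parent in dependencies.get(current, ()):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen
```

Answering "which raw inputs fed this report?" becomes a single call, which is the kind of question auditors tend to ask.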

The platform’s asset materialization system logs detailed execution records, including metadata on data quality checks, model performance metrics, and resource usage. This robust audit trail ensures transparency and supports compliance requirements.

Automated data quality checks are built directly into Dagster pipelines, allowing teams to validate input data before it’s used for model training or inference. These checks provide a permanent record of data quality, further supporting governance needs.

Dagster’s open-source core platform is available without licensing fees, making it accessible to organizations of all sizes. For those seeking additional features, Dagster Cloud offers managed hosting with transparent, usage-based pricing that scales with actual compute and storage needs. This pricing model eliminates the unpredictability often associated with traditional enterprise software costs.

The platform also includes resource optimization tools to help manage AI infrastructure expenses. Features like efficient resource allocation and automatic cleanup of temporary assets ensure that organizations can control costs during model training and evaluation.

Dagster prioritizes security with robust measures to protect sensitive data and models. Role-based access controls allow organizations to restrict access based on user permissions, adhering to the principle of least privilege.

For secure management of credentials and keys, Dagster integrates with systems like HashiCorp Vault and AWS Secrets Manager. This ensures that sensitive information, such as API keys and database credentials, remains protected throughout the pipeline.

Additionally, Dagster’s execution isolation keeps workloads separate, reducing the risk of security breaches and ensuring that sensitive model parameters are not exposed across projects or teams.

Flyte is an open-source, cloud-native platform designed to orchestrate workflows for machine learning and data processing pipelines. It focuses on delivering reproducibility, scalability, and reliability at scale.

Flyte stands out as a robust open-source alternative, offering seamless integration with popular machine learning frameworks. Using the Flytekit SDK, developers can define workflows in Python that incorporate tools like TensorFlow, PyTorch, XGBoost, and scikit-learn. Its containerized execution model ensures compatibility across environments, while its type system flags data mismatches early, reducing development errors and improving workflow efficiency.
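
The idea of catching data mismatches before a workflow runs can be sketched with ordinary Python type hints. This is not Flytekit code. `check_connection` is a hypothetical helper that compares one function's declared return type with the parameter type of the next, using a deliberately simple exact-match rule.

```python
from typing import get_type_hints

def load_rows(path: str) -> list:
    return [1.0, 2.0, 3.0]

def mean(values: list) -> float:
    return sum(values) / len(values)

def describe(label: str) -> str:
    return label.upper()

def check_connection(upstream, downstream, param: str) -> bool:
    """Raise TypeError if upstream's return type differs from downstream's parameter type."""
    produced = get_type_hints(upstream)["return"]
    expected = get_type_hints(downstream)[param]
    if produced is not expected:
        raise TypeError(
            f"{upstream.__name__} returns {produced.__name__}, "
            f"but {downstream.__name__}({param}) expects {expected.__name__}"
        )
    return True
```

The check runs without executing either task, so a wiring mistake surfaces at definition time rather than hours into a training job.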

Built on Kubernetes, Flyte dynamically scales to meet varying computational demands. Users can configure resources like CPU, memory, and GPU on a per-task basis, allowing efficient execution of everything from small experiments to large-scale training jobs. This flexibility ensures workflows can grow without compromising performance or oversight.

Flyte provides immutable audit trails that document every step of the data processing pipeline. This traceability ensures that model predictions can be linked back to their original inputs and processing steps. Additionally, its granular access controls integrate seamlessly with enterprise identity management systems, supporting strict security and compliance requirements.

As an open-source solution, Flyte eliminates licensing fees and can be deployed on existing Kubernetes infrastructure. This not only reduces costs but also offers organizations clear visibility into resource usage. By managing computational expenses more effectively, Flyte helps maintain predictable costs without sacrificing security or performance.

Flyte secures workflows by leveraging Kubernetes' built-in capabilities. It uses TLS encryption to protect data and integrates with external secrets management systems for added security. Multi-tenancy is supported through isolated namespaces and strict access controls, ensuring teams and projects operate securely and independently.

Kedro stands out as an engineering-focused, open-source framework designed for reproducible data science and machine learning workflows. Created by QuantumBlack, now part of McKinsey & Company, Kedro introduces software engineering principles to data science through its structured, modular pipeline approach. Let's explore how Kedro's features contribute to efficient AI workflow management.

Kedro is compatible with any Python-based machine learning library, such as TensorFlow, PyTorch, scikit-learn, and XGBoost. Its flexible node system allows each step in the workflow to function as a reusable component. This means you can swap out models or preprocessing steps without needing to overhaul the entire pipeline.

At the heart of Kedro is its data catalog, which serves as a centralized registry for all data sources and destinations. This abstraction layer simplifies managing data, whether it's stored locally, in the cloud, in databases, or accessed via APIs. Developers can focus on the logic of their models while the catalog handles data loading and saving seamlessly.
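
To show what a catalog abstraction buys you, here is a minimal sketch in plain Python. The classes `InMemoryStore`, `JsonFileStore`, and `SimpleCatalog` are hypothetical and much simpler than Kedro's own catalog, but they capture the principle: pipeline code refers to datasets by name and never touches file paths.

```python
import json
import tempfile
from pathlib import Path

class InMemoryStore:
    """Keeps a value in memory; handy for intermediate results."""
    def __init__(self):
        self._value = None
    def load(self):
        return self._value
    def save(self, data):
        self._value = data

class JsonFileStore:
    """Reads and writes a JSON file at a fixed path."""
    def __init__(self, path):
        self._path = Path(path)
    def load(self):
        return json.loads(self._path.read_text())
    def save(self, data):
        self._path.write_text(json.dumps(data))

class SimpleCatalog:
    """Maps dataset names to stores so callers only use names."""
    def __init__(self, stores):
        self._stores = dict(stores)
    def load(self, name):
        return self._stores[name].load()
    def save(self, name, data):
        self._stores[name].save(data)

def demo():
    # Uses a temporary directory so the example leaves no files behind.
    with tempfile.TemporaryDirectory() as tmp:
        catalog = SimpleCatalog({
            "scores": JsonFileStore(Path(tmp) / "scores.json"),
            "summary": InMemoryStore(),
        })
        catalog.save("scores", [3, 5, 7])
        scores = catalog.load("scores")
        catalog.save("summary", {"mean": sum(scores) / len(scores)})
        return catalog.load("summary")
```

Moving `scores` from a local file to cloud storage would mean changing one catalog entry, with no edits to the code that loads or saves it.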

While Kedro pipelines are typically developed and run on a single machine, plugins such as Kedro-Docker and Kedro-Airflow package them for containerized deployment and for scheduling on Apache Airflow. This allows teams to develop workflows on smaller datasets locally and then move them to production environments without rewriting pipeline code.

Kedro's modular pipeline architecture is another key to its scalability. By breaking down complex workflows into smaller, independent components, teams can optimize and scale individual parts of the pipeline. Parallel execution is possible wherever dependencies allow, making it easier to pinpoint bottlenecks and improve performance without disrupting the entire system.
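
A modular pipeline of this kind can be approximated in a few lines: each node declares its named inputs and output, and the runner works out the order from those names. The helpers `make_node` and `run_pipeline` are hypothetical and only illustrate the pattern. They are not Kedro's API.

```python
from graphlib import TopologicalSorter

def make_node(func, inputs, output):
    """Describe one step: a function, its named inputs, and its named output."""
    return {"func": func, "inputs": list(inputs), "output": output}

def run_pipeline(nodes, data):
    """Run nodes in dependency order, resolving inputs by dataset name."""
    producers = {node["output"]: node for node in nodes}
    # A node depends on another node only if that node produces one of its inputs.
    graph = {
        node["output"]: {name for name in node["inputs"] if name in producers}
        for node in nodes
    }
    results = dict(data)
    for name in TopologicalSorter(graph).static_order():
        node = producers[name]
        args = [results[input_name] for input_name in node["inputs"]]
        results[name] = node["func"](*args)
    return results
```

Because order comes from the declared inputs and outputs, nodes can be listed in any sequence and swapped independently.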

Kedro enhances governance by automatically tracking data lineage through dependency graphs. These graphs trace the flow of data and model outputs, making it easier to comply with regulations and debug production issues.

The platform also separates code from environment-specific configurations, ensuring consistent behavior across development, testing, and production. Parameters are version-controlled and well-documented, creating a transparent audit trail for all changes to models and data processes.

As an open-source tool, Kedro has no licensing fees and runs on existing infrastructure, although infrastructure and maintenance costs still apply. Datasets are loaded only when a node needs them, and a run can be limited to selected nodes or pipeline slices instead of the whole workflow, which cuts unnecessary recomputation, memory usage, processing time, and cloud spend.

Kedro prioritizes security by managing credentials outside of the codebase, using environment variables and external stores to keep sensitive information out of version control. Its project template incorporates security best practices, like proper .gitignore configurations, to reduce the risk of data exposure. This focus on secure workflows aligns with the broader goals of scalable and compliant AI systems.
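The practice of reading secrets from the environment instead of the codebase can be sketched as follows. The helper names and the variable names `DB_USER` and `DB_PASSWORD` are hypothetical, and this is generic Python rather than Kedro's credentials mechanism.

```python
import os


def load_credentials(names, environ=None):
    """Read the named secrets from the environment and fail loudly if any are missing."""
    env = os.environ if environ is None else environ
    missing = [name for name in names if name not in env]
    if missing:
        raise KeyError(f"Missing environment variables: {', '.join(missing)}")
    return {name: env[name] for name in names}


def redact(credentials):
    """Return a copy that is safe to log: keys are kept, values are masked."""
    return {name: "***" for name in credentials}


# A plain dict stands in for os.environ so the example runs anywhere.
fake_env = {"DB_USER": "svc", "DB_PASSWORD": "s3cret"}
creds = load_credentials(["DB_USER", "DB_PASSWORD"], fake_env)
safe_to_log = redact(creds)
```

Failing at startup when a variable is missing tends to be easier to debug than a connection error deep inside a pipeline run.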

The right AI orchestration tool depends on your specific needs and technical expertise, so it's worth weighing the benefits and limitations of each platform against the orchestration challenges discussed earlier.

Enterprise-focused platforms like Prompts.ai and IBM watsonx Orchestrate excel in governance, security, and cost control, while open-source and low-code solutions prioritize flexibility and usability. Prompts.ai, for instance, provides access to over 35 top language models through a single interface and includes FinOps tools to help cut AI costs. Its pay-as-you-go TOKN credit system eliminates recurring subscriptions, making it an appealing choice for organizations looking to streamline AI expenses. However, enterprise tools often require more upfront setup and may be overkill for smaller teams.

Open-source solutions like Apache Airflow, Dagster, Flyte, and Kedro offer unmatched flexibility and customization without licensing fees. Apache Airflow stands out for its community support and broad plugin ecosystem, making it ideal for complex data pipelines. However, its steep learning curve can be a hurdle for teams without strong engineering skills. Meanwhile, Kedro focuses on applying software development principles to data science workflows, but it demands Python expertise.

Low-code platforms such as n8n and Domo cater to users who favor visual workflow builders over coding. These platforms enable quick deployment and straightforward maintenance for basic automation tasks. However, their limited customization options make them less suitable for handling complex AI workflows.

Here’s a breakdown of the key features and drawbacks of various platforms:

| Platform | Best For | Key Strength | Main Limitation | Pricing Model |
|---|---|---|---|---|
| Prompts.ai | Enterprise AI orchestration | 35+ LLMs, FinOps integration | Complexity for smaller teams | Pay-as-you-go TOKN credits |
| Domo | Business intelligence teams | Visual dashboards, data connectivity | Limited AI-specific features | Subscription-based |
| Apache Airflow | Complex data pipelines | Large community, plugin ecosystem | Steep learning curve | Open source (infra costs) |
| Kubiya AI | DevOps automation | Conversational AI interface | Focused on DevOps | Subscription-based |
| IBM watsonx Orchestrate | Large enterprises | Integration, compliance | High complexity, vendor lock-in | Enterprise licensing |
| n8n | Workflow automation | Visual builder, ease of use | Scalability challenges | Freemium model |
| Motion | Task management | AI-powered scheduling | Limited workflow orchestration | Subscription-based |
| Dagster | Data engineering | Asset-centric approach, observability | Newer ecosystem | Open source |
| Flyte | ML workflows | Kubernetes-native, reproducibility | Infrastructure complexity | Open source |
| Kedro | Data science pipelines | Engineering best practices | Python-only, technical expertise | Open source |

Cost structures vary significantly across platforms. Open-source tools eliminate licensing fees but require investments in infrastructure and maintenance. Platforms like Prompts.ai provide transparent, usage-based pricing, helping organizations optimize costs, while traditional enterprise solutions often come with complex and expensive licensing models.

The usability of these platforms also differs. Visual builders cater to non-technical users, offering simplicity and faster deployment. In contrast, platforms with advanced features often require technical expertise but can handle larger workloads and more complex AI operations. Tools with extensive APIs and pre-built connectors speed up development, while those relying on custom integrations may take longer to deploy but offer greater flexibility.

For some organizations, a hybrid approach works best - combining Prompts.ai’s unified interface with the adaptability of open-source tools. While this strategy can address diverse workflow requirements, it demands careful planning to avoid the very tool sprawl that unified platforms aim to solve.

Choosing the right AI orchestration platform depends on your specific needs, expertise, and long-term goals. If cost efficiency is a priority, Prompts.ai offers a straightforward TOKN credit system combined with integrated FinOps tools, helping to cut AI software expenses by up to 98%. Its pay-as-you-go model ties spending to actual usage rather than fixed subscriptions, making it a good fit for U.S.-based companies working within tight budgets and aiming for financial predictability.

When it comes to scalability, Prompts.ai simplifies growth with its unified interface, eliminating the hassle of juggling multiple vendors. This consolidated approach ensures smooth deployment and allows your AI workflows to expand effortlessly alongside your business.

For industries with strict regulations, compliance and governance are non-negotiable. Prompts.ai is built with enterprise-grade controls and detailed audit trails, meeting the rigorous security requirements of sectors such as healthcare, finance, and government. These features provide a dependable framework for organizations needing to maintain high levels of oversight and accountability.

With integrated model access and governance tools tailored for U.S. enterprises, Prompts.ai positions itself as a platform that aligns with both current capabilities and future ambitions. By selecting a solution that meets your present needs while supporting strategic growth, you can create scalable AI workflows that deliver real, measurable results.

When choosing an AI orchestration platform, it’s important to focus on a few critical aspects to ensure it meets your organization’s demands. Start with scalability and infrastructure - the platform should align with your preferred deployment model, whether that’s cloud-based, on-premises, or a hybrid setup. It must also handle enterprise-level workloads, offering features like GPU/TPU acceleration and dynamic scaling to adapt to your needs.

Next, assess the platform’s AI/ML capabilities. It should support a wide range of technologies, from traditional machine learning to newer advancements like generative AI. Look for orchestration tools that simplify workflows, automate repetitive tasks, and provide monitoring features to fine-tune performance. Interoperability is another key factor - ensure the platform integrates smoothly with your existing systems, data sources, and tools to avoid disruptions.

Finally, weigh usability and cost. A good platform should feature intuitive interfaces that cater to different roles within your organization, while keeping licensing and infrastructure expenses manageable. The right choice will streamline your operations and help you unlock the full potential of AI.
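One way to apply these criteria is a simple weighted scoring matrix. The sketch below is only an illustration: the weights, the 1-to-5 scores, and the names "Platform A" and "Platform B" are all hypothetical and do not describe any tool covered in this article.

```python
# Hypothetical weights for the criteria discussed above; they should sum to 1.
weights = {
    "scalability": 0.25,
    "ai_ml": 0.25,
    "interoperability": 0.20,
    "usability": 0.15,
    "cost": 0.15,
}

# Hypothetical 1-5 scores a team might assign after a trial of each candidate.
candidates = {
    "Platform A": {"scalability": 5, "ai_ml": 4, "interoperability": 4, "usability": 2, "cost": 3},
    "Platform B": {"scalability": 3, "ai_ml": 3, "interoperability": 3, "usability": 5, "cost": 5},
}


def weighted_score(scores, weights):
    """Sum of score * weight over all criteria."""
    return sum(scores[criterion] * weight for criterion, weight in weights.items())


# Highest weighted score first.
ranked = sorted(
    ((name, weighted_score(scores, weights)) for name, scores in candidates.items()),
    key=lambda item: item[1],
    reverse=True,
)
```

Changing the weights to reflect your own priorities, for example giving usability more weight for a non-technical team, can reverse the ranking, which is the point of making the trade-offs explicit.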

Open-source AI orchestration tools offer a great deal of flexibility and are backed by active developer communities, making them an appealing, budget-friendly option for teams with strong technical skills. That said, these tools often demand considerable effort to set up, tailor to specific needs, and maintain over time - especially when scaling or meeting strict governance requirements.

In contrast, enterprise-grade platforms are purpose-built for scalability and governance. They come equipped with advanced features like role-based access controls, compliance certifications, and easy integration with hybrid or multi-cloud systems. These capabilities make them particularly well-suited for industries such as healthcare and finance, where regulatory compliance and data security are non-negotiable.

Cost clarity plays a key role when choosing an AI workflow tool, as it allows you to grasp the complete financial picture from the start. Unexpected costs - like onboarding fees, training sessions, premium support, or integration charges - can quickly disrupt your budget if overlooked.

Reviewing the pricing structure, including subscription levels and any optional add-ons, helps you sidestep these surprises. This thoughtful approach ensures the tool fits within your financial plans, enabling better management of your AI operations budget and supporting long-term financial efficiency.
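A quick first-year estimate shows how one-off fees change the picture. All figures below are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def first_year_cost(monthly_subscription, one_off_fees, monthly_addons=0.0):
    """Twelve months of recurring charges plus every one-off fee."""
    return 12 * (monthly_subscription + monthly_addons) + sum(one_off_fees.values())


# Hypothetical quote for a single tool.
one_off = {"onboarding": 2000, "training": 1500, "integration": 3000}

sticker_price = first_year_cost(500, {})                    # subscription only
total = first_year_cost(500, one_off, monthly_addons=100)   # with add-ons and one-off fees
hidden_share = (total - sticker_price) / total              # portion not visible in the headline price
```

In this made-up case the headline subscription covers less than half of the first-year spend, which is why add-ons and one-off fees belong in the comparison from the start.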