AI command centers are transforming enterprise operations by centralizing AI tools, ensuring compliance, and cutting costs. If you're navigating the complex AI landscape, these platforms can unify workflows, enforce governance, and optimize expenses. This guide covers five leading solutions: Prompts.ai, Microsoft Copilot, IBM Watson Orchestrator, Amazon Bedrock, and Google Vertex AI.

Each platform offers unique strengths in interoperability, compliance, cost management, and scalability. Below is a quick comparison to help you decide which fits your enterprise needs.

| Platform | Interoperability | Governance & Compliance | Cost Management | Scalability |
|---|---|---|---|---|
| Prompts.ai | 35+ LLMs, multi-cloud compatibility | SOC 2, ISO 27001, GDPR, HIPAA | Pay-as-you-go TOKN credits, 98% savings | Flexible: cloud, hybrid, on-prem |
| Microsoft Copilot | Deep Microsoft 365 and Azure integration | Azure AD, Purview, DLP | Consumption-based, Azure tracking | Scales within Microsoft ecosystem |
| IBM Watson Orchestrator | Modular, hybrid, multi-cloud | SOC 2, ISO 27001, bias detection | Usage-based, IBM Cloud dashboards | Hybrid, OpenShift, regulated industries |
| Amazon Bedrock | AWS-native, unified API | IAM, VPC, CloudWatch | Pay-as-you-go, AWS Cost Explorer | Serverless, scalable within AWS |
| Google Vertex AI | Google Cloud and Workspace integration | Workspace Admin, DLP | Consumption-based, GCP observability tools | Scales within Google Cloud ecosystem |

Next Steps: Choose a platform that aligns with your infrastructure, compliance needs, and cost goals. Focus on governance, scalability, and ease of integration to maximize AI efficiency in 2026.

AI Command Center Solutions Comparison 2026: Features, Costs, and Scalability

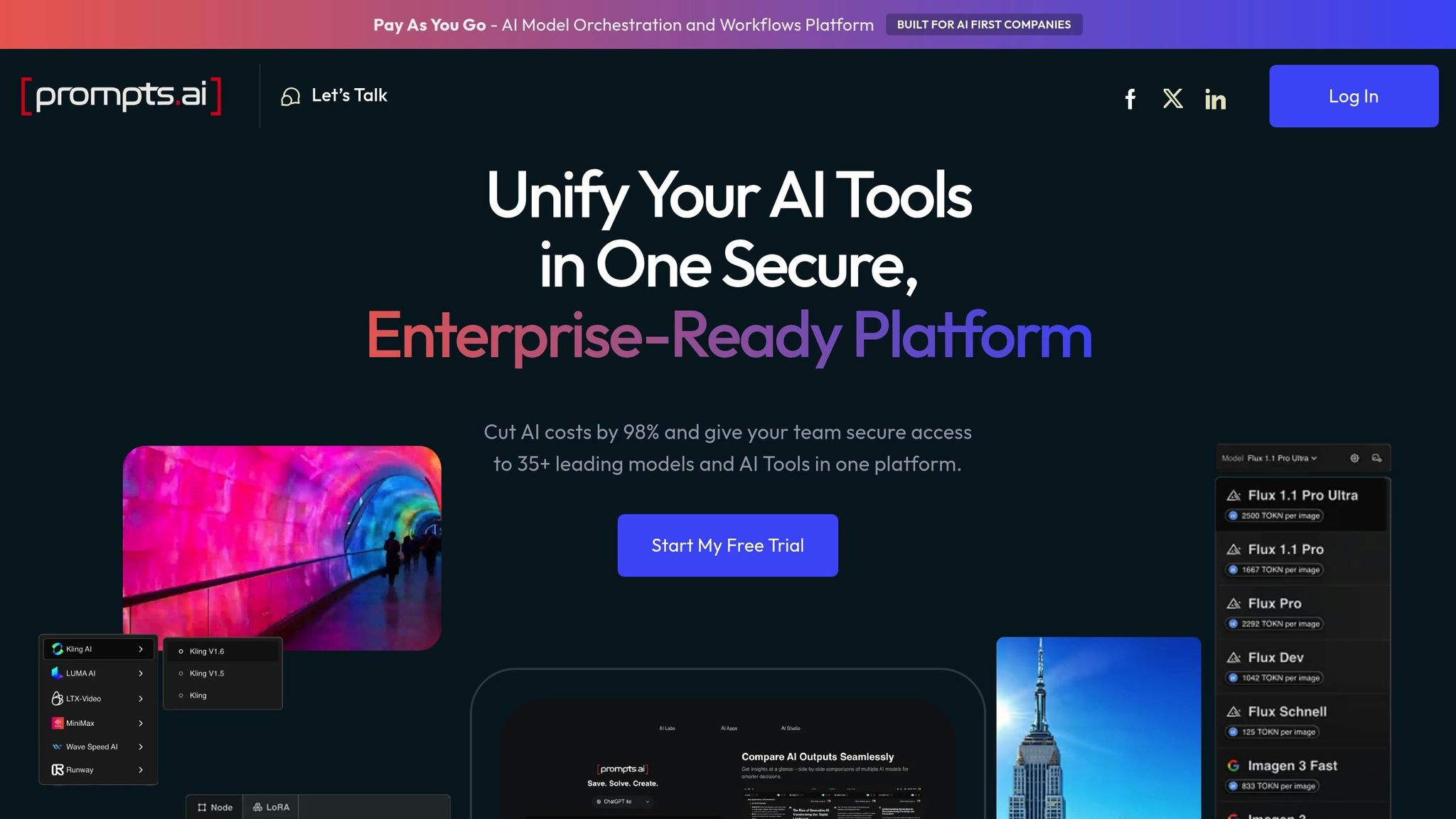

Prompts.ai brings together over 35 AI models - including GPT, Claude, LLaMA, and Gemini - into a single, secure platform. This eliminates the hassle of juggling multiple subscriptions and allows for quick, scalable workflow automation. Teams can easily compare models, streamline workflows across departments, and turn experimental projects into repeatable processes. Below are the standout features that make Prompts.ai a game-changer for enterprise AI operations.

Prompts.ai provides a unified interface that connects various AI tools, removing vendor lock-in and minimizing tool overload. Teams can compare large language models side by side, selecting the best one for each task without leaving the platform. This streamlined approach has enabled organizations to complete projects in a single day that previously took weeks or even months. GenAI.Works has acknowledged Prompts.ai as a top platform for solving enterprise challenges and automating workflows.

Designed with SOC 2 Type 2, HIPAA, and GDPR-grade security, Prompts.ai ensures top-tier protection for enterprise use. The platform initiated its SOC 2 audit on June 19, 2025, with continuous monitoring provided by Vanta. Enterprises can access the Trust Center at https://trust.prompts.ai/ to monitor their security posture in real time, including policies, controls, and compliance progress. By offering complete visibility and auditability for all AI interactions, Prompts.ai brings order and governance to an otherwise chaotic AI ecosystem.

Prompts.ai slashes AI-related expenses by up to 98%, consolidating multiple subscriptions into one platform. Pricing starts at $0/month for the Pay As You Go tier, with the Creator plan available at $29/month and the Problem Solver plan at $99/month. Both paid plans include unlimited workspaces and workflow creation. Additionally, the TOKN credit system allows teams to pool usage, transforming fixed AI costs into scalable, on-demand solutions.
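To make the pricing figures above concrete, here is a minimal arithmetic sketch. The plan prices come from the paragraph above; the $10,000 monthly baseline is a hypothetical figure chosen only for illustration, and 98% is the stated upper bound rather than a typical result. TOKN credit consumption is not modeled.

```python
# Monthly plan prices in USD, as quoted in the text above.
PLAN_PRICES_USD = {
    "Pay As You Go": 0,
    "Creator": 29,
    "Problem Solver": 99,
}


def annual_plan_cost(plan: str) -> int:
    """Return the yearly subscription cost for a plan, excluding TOKN credit usage."""
    return PLAN_PRICES_USD[plan] * 12


def cost_after_savings(baseline_usd: float, savings_rate: float) -> float:
    """Apply a fractional savings rate (0.98 means 98%) to a baseline spend."""
    if not 0.0 <= savings_rate <= 1.0:
        raise ValueError("savings_rate must be between 0 and 1")
    return round(baseline_usd * (1.0 - savings_rate), 2)


if __name__ == "__main__":
    for plan in PLAN_PRICES_USD:
        print(f"{plan}: ${annual_plan_cost(plan)}/year")
    # Hypothetical baseline: $10,000/month spread across separate subscriptions.
    print(cost_after_savings(10_000, 0.98))
```

Because "up to 98%" is a ceiling, it is worth rerunning `cost_after_savings` with more conservative rates to see how sensitive the result is.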

The Problem Solver plan supports unlimited workspaces, up to 99 collaborators, and limitless workflows to accommodate large-scale enterprise needs. By managing hybrid and multi-cloud AI resources through one interface, Prompts.ai ensures teams, models, and users can expand operations without unnecessary complexity or disruption.

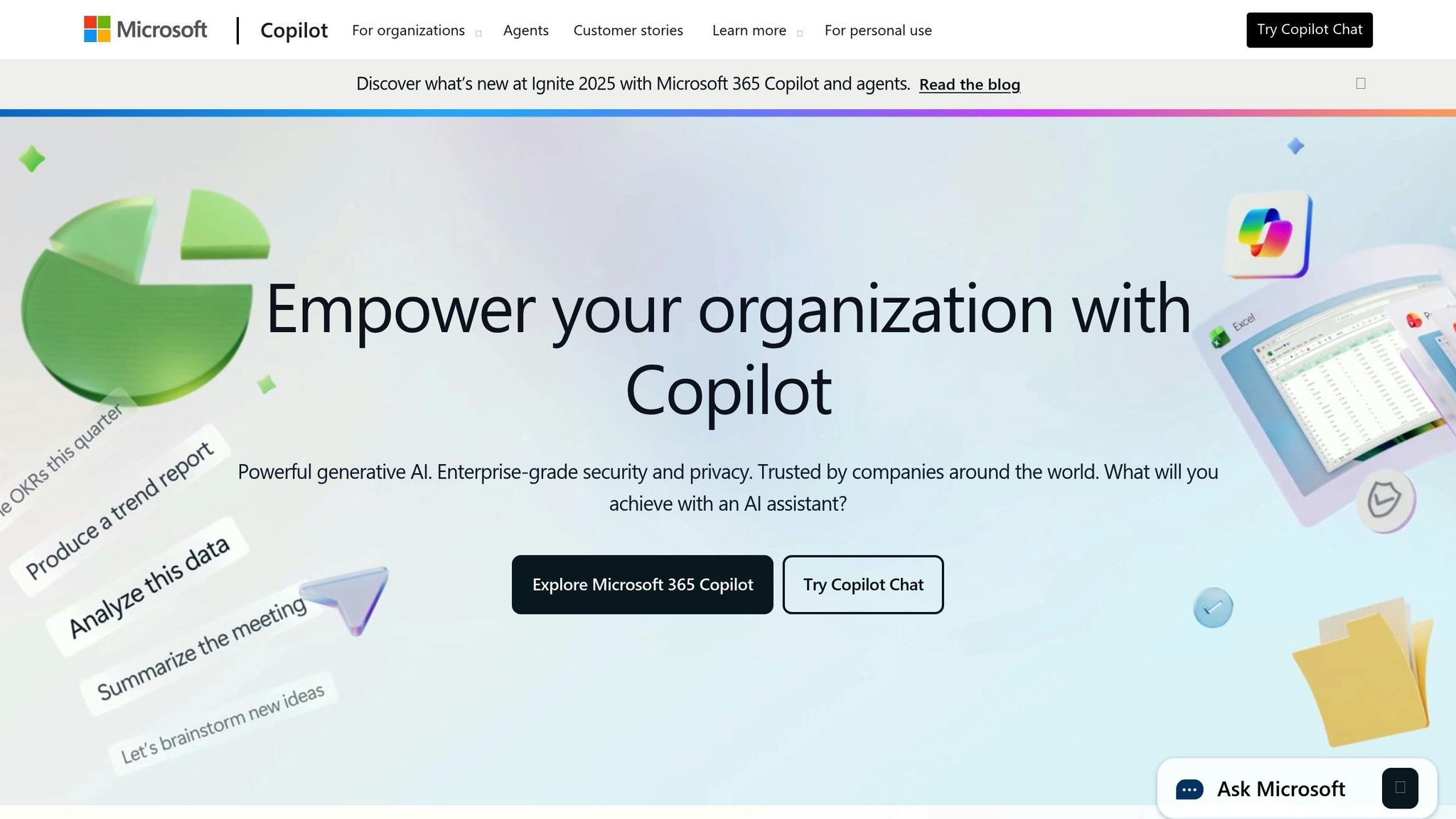

Microsoft Copilot Studio provides a low-code platform seamlessly integrated into the Microsoft 365 ecosystem, including tools like Teams, SharePoint, Power Platform, Dynamics 365, and Azure. This integration allows businesses already utilizing Microsoft infrastructure to streamline and automate workflows. As Microsoft CEO Satya Nadella remarked:

"AI will be the biggest productivity revolution of our lifetimes"

This tight integration with the Microsoft stack supports interoperability across a wide range of business systems.

Copilot Studio offers connectivity to hundreds of business systems through a vast library of prebuilt connectors and Power Automate flows. With natural language commands, agents can extract data from SharePoint lists, initiate automated workflows, and update CRM or ERP systems. One standout feature, "computer use", allows agents to interact with older applications that lack APIs, bridging gaps in legacy systems. However, the platform is primarily tailored to the Microsoft AI stack, which limits its flexibility in working with models beyond this ecosystem.

The platform leverages Azure's enterprise-grade security framework, including Azure AD authentication, data residency controls, and Data Loss Prevention (DLP). Governance is further bolstered by Microsoft Purview, which offers role-based access controls and environment-specific permissions. Additionally, Microsoft’s collaboration with ServiceNow AI Control Tower introduces unified governance for its AI agents, helping organizations manage risks, follow best practices, and meet compliance requirements.

Microsoft Power Automate pricing starts at roughly $15 per user per month, with Copilot Studio available as an enterprise add-on. Azure AI Services operate on a pay-as-you-go basis, charging for tokens, API requests, or compute hours. The low-code approach reduces development costs by eliminating the need for complex API integrations. However, token-based pricing can lead to higher expenses as workflows scale. Businesses should carefully monitor usage to manage costs and avoid budget overruns as their operations grow.
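The way token-based pricing grows with workflow volume can be estimated with a short sketch. The rates below are hypothetical placeholders, not published Microsoft or Azure prices, and `TokenRates`, `monthly_token_cost`, and `over_budget` are illustrative names rather than part of any vendor SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenRates:
    """Hypothetical per-1,000-token prices in USD; not real vendor rates."""
    input_per_1k: float
    output_per_1k: float


def monthly_token_cost(requests_per_day: int, input_tokens: int, output_tokens: int,
                       rates: TokenRates, days: int = 30) -> float:
    """Estimate monthly spend from average tokens per request and request volume."""
    per_request = ((input_tokens / 1000) * rates.input_per_1k
                   + (output_tokens / 1000) * rates.output_per_1k)
    return round(per_request * requests_per_day * days, 2)


def over_budget(cost: float, budget: float) -> bool:
    """Return True when the estimated cost exceeds the monthly budget."""
    return cost > budget


if __name__ == "__main__":
    rates = TokenRates(input_per_1k=0.002, output_per_1k=0.006)  # assumed rates
    for volume in (1_000, 10_000, 100_000):
        cost = monthly_token_cost(volume, 1_000, 500, rates)
        print(volume, cost, over_budget(cost, budget=1_000.0))
```

In this model the cost is linear in request volume, so a workflow that grows tenfold costs ten times as much unless prompts are shortened or responses capped.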

IBM Watson Orchestrator, a key component of the watsonx platform, is designed to simplify enterprise AI operations by combining model development with enterprise-level oversight. This integrated AI studio allows businesses to build, fine-tune, and deploy foundation models alongside traditional machine learning models, all within their existing infrastructure. Tailored specifically for enterprise workflows, it optimizes AI agents to meet the demands of business environments. Let’s delve into its standout technical features.

Watson Orchestrator provides seamless integration across various AI models and tools, creating a unified environment for enterprise use. It supports a range of model types and runtimes, making it easier for businesses to manage diverse AI models in one place. Deployment options include IBM Cloud, OpenShift, and on-premises infrastructure, offering flexibility to fit different operational needs. Additionally, it orchestrates AI agents and enterprise tools, streamlining workflows for greater efficiency.

Built with compliance-sensitive industries in mind, watsonx prioritizes governance and transparency throughout the entire AI lifecycle. Features like bias detection, drift monitoring, explainability, audit trails, model approval workflows, and risk assessments ensure that businesses can maintain strict oversight of their AI systems. The platform adheres to key regulatory standards, including ISO, NIST, GDPR, and HIPAA, making it a reliable choice for industries with stringent compliance requirements. As noted by aufaittechnologies.com:

"Watsonx.ai is one of the strongest options for enterprises where compliance is as important as innovation. It brings enterprise-grade governance to every phase of the AI lifecycle."

The platform also enables secure customization of models using private datasets, ensuring that sensitive information remains protected throughout the development process.

Watson Orchestrator’s scalable architecture supports hybrid and multi-cloud deployments, offering flexibility for businesses with complex IT landscapes. It can be deployed on IBM Cloud, OpenShift clusters, or integrated directly into a company’s infrastructure, making it adaptable for enterprises transitioning between cloud providers. Its modular design allows for targeted scaling to meet specific needs, though pricing depends on the usage of watsonx.ai, watsonx.data, and watsonx.governance components. This flexibility ensures that organizations can grow their AI capabilities without compromising operational efficiency.

Amazon Bedrock Enterprise Suite brings an AWS-focused solution for managing AI at scale, following the trend of enterprise offerings like those from IBM. This managed platform is tailored for businesses that demand strong AI controls within the AWS ecosystem. It offers access to a variety of foundation models - including Anthropic Claude, Amazon Titan, Meta Llama, Mistral, and Stability models - via a single API. This unified approach allows users to switch between models seamlessly without needing to rewrite code. Bedrock is designed for enterprises prioritizing security, governance, and operational reliability as they scale their AI efforts.

Bedrock’s unified API simplifies the process of integrating AI models by eliminating the need to manage separate connections for each one. It includes built-in RAG pipelines and Knowledge Bases to ground AI models in enterprise data, streamlining workflows. The platform also enables the creation of agentic AI systems that interact with AWS services like DynamoDB, S3, and Lambda, connecting AI models directly to enterprise data sources. Bedrock agents allow large language models to call APIs and execute tasks with minimal coding, reducing complexity for developers. These capabilities lay the groundwork for detailed governance, discussed further in the next section.
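The single-API idea can be sketched as follows. The request and response shapes follow the Bedrock Runtime Converse API as exposed by boto3's `bedrock-runtime` client. The model IDs are placeholders, and `FakeBedrockClient` is a hypothetical stand-in so the example runs without AWS credentials. Treat this as an illustration under those assumptions, not a production integration.

```python
from typing import Any


def ask_model(client: Any, model_id: str, prompt: str, max_tokens: int = 256) -> str:
    """Send one user prompt via a Converse-style call and return the reply text."""
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": max_tokens},
    )
    return response["output"]["message"]["content"][0]["text"]


class FakeBedrockClient:
    """Offline stand-in (hypothetical) that mimics the shape of a Converse response."""

    def converse(self, modelId, messages, inferenceConfig):
        prompt = messages[0]["content"][0]["text"]
        reply = f"[{modelId}] echo: {prompt}"
        return {"output": {"message": {"role": "assistant",
                                       "content": [{"text": reply}]}}}


if __name__ == "__main__":
    # With AWS credentials configured, a real client could be created with
    # boto3.client("bedrock-runtime") and passed in instead of the fake.
    client = FakeBedrockClient()
    # Placeholder IDs; real model IDs depend on the account and region.
    for model_id in ("example.model-a", "example.model-b"):
        print(ask_model(client, model_id, "Summarize our Q3 results."))
```

Only the `model_id` string changes between calls, which is the practical meaning of switching models without rewriting code.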

Security and compliance are at the core of Bedrock’s design. The platform utilizes AWS tools such as IAM, VPC, KMS, and CloudWatch to ensure data security and enforce compliance. Configurable guardrails provide safety filters and policy enforcement for prompts, responses, and RAG pipelines, giving enterprises control over AI behavior. Key features include data residency options, private networking through VPC, fine-grained role-based access control, SSO/SAML support, and immutable audit logs.

Bedrock operates on a pay-as-you-go pricing model, based on actual compute and service usage. While this flexible approach benefits enterprises scaling their AI operations, high compute workloads can lead to rising expenses. To manage costs effectively, businesses need to optimize their AWS configurations and closely monitor usage patterns. The platform’s serverless architecture reduces infrastructure overhead, but careful planning is necessary to keep production costs under control.

Bedrock is built for scalability, particularly within the AWS ecosystem. Its serverless architecture supports global workloads, making it well suited to enterprise-scale deployments with strong security and infrastructure reliability. Its AWS-centric nature limits portability, however, so it is less flexible in cross-cloud environments. For organizations already deeply integrated with AWS, this close alignment is a benefit; companies looking for broader cross-cloud capabilities might find the platform less suitable for their needs.

Google's Vertex AI Command Center serves as a centralized hub for enterprise AI within the Google Cloud Platform (GCP) ecosystem. This platform offers a robust, cloud-native solution tailored to organizations leveraging GCP. By combining generative AI, model customization, and seamless integration with Google's extensive data and analytics tools, Vertex AI provides a comprehensive machine learning (ML) environment. Also known as Vertex AI Agent Builder, the platform goes beyond standard AI functionality by connecting directly with Google Workspace applications like Gmail, Docs, Sheets, Slides, Drive, and Meet. This integration transforms Workspace content into a cohesive intelligence layer for enterprise use.

Vertex AI supports a variety of AI needs, accommodating multi-modal and custom models for businesses with diverse requirements. The platform offers expanded language capabilities and prebuilt plugins, making it adaptable for different use cases. Enterprises can integrate custom models, orchestration pipelines, and specialized datasets directly into their Google Workspace applications, ensuring a smooth connection between AI workflows and daily business operations. Additionally, observability dashboards provide insights into token usage, latency, errors, and tool performance, giving teams a clear view of how their AI systems are functioning. These features are complemented by strong governance tools designed for enterprise environments.
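As a rough picture of what such dashboards aggregate, the sketch below rolls up token usage, latency, and error rate per model from a list of call records. It does not use any Vertex AI or Google Cloud API; `CallRecord` and `summarize` are hypothetical names, and the records are assumed to come from whatever logging an organization already has.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class CallRecord:
    """One logged model call (hypothetical schema)."""
    model: str
    tokens: int
    latency_ms: float
    ok: bool


def summarize(records: list[CallRecord]) -> dict[str, dict[str, float]]:
    """Group records by model and compute call count, tokens, latency, and error rate."""
    by_model: dict[str, list[CallRecord]] = {}
    for record in records:
        by_model.setdefault(record.model, []).append(record)
    return {
        model: {
            "calls": len(rows),
            "total_tokens": sum(r.tokens for r in rows),
            "avg_latency_ms": round(mean(r.latency_ms for r in rows), 1),
            "error_rate": round(sum(not r.ok for r in rows) / len(rows), 3),
        }
        for model, rows in by_model.items()
    }


if __name__ == "__main__":
    sample = [
        CallRecord("model-a", 100, 200.0, True),
        CallRecord("model-a", 300, 400.0, False),
        CallRecord("model-b", 50, 100.0, True),
    ]
    for model, stats in summarize(sample).items():
        print(model, stats)
```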

Vertex AI incorporates robust governance features to meet the demands of enterprise-scale operations. With centralized management tools, the platform ensures comprehensive data governance and operational monitoring throughout the AI lifecycle. The integration of Gemini for Workspace enhances security through admin-level controls, including Data Loss Prevention (DLP). By aligning with Google's data and analytics stack, Vertex AI provides end-to-end oversight, helping organizations address risks and maintain compliance in their AI initiatives.

Operating on a consumption-based pricing model, Vertex AI charges for training, predictions, and model hosting within GCP. While this model allows for scalability, managing costs can become complex, especially with multi-model deployments. Observability tools within the platform help businesses monitor and optimize expenses, though these features are largely limited to the Google Cloud environment. Enterprises need to carefully strategize their deployments to avoid unexpected costs, particularly when scaling operations across multiple models.

Vertex AI is designed to perform exceptionally well within the Google Cloud ecosystem, but it has limited portability across other cloud platforms. This focus on Google Cloud makes it an excellent choice for organizations already committed to GCP infrastructure, offering streamlined operations and reliable performance. However, businesses seeking hybrid or multi-cloud solutions may encounter challenges due to the platform's vendor-specific design. For enterprises prioritizing flexibility and avoiding vendor lock-in, this could pose a significant limitation.

When selecting an AI command center, it’s crucial to evaluate how each platform addresses the essential challenges of enterprise AI deployment. Below is a detailed comparison of five leading solutions, focusing on interoperability, governance & compliance, cost management, and scalability to help you determine which platform best fits your organization's goals and infrastructure.

| Platform | Interoperability | Governance & Compliance | Cost Management | Scalability |
|---|---|---|---|---|
| Prompts.ai | Unified access to 35+ LLMs (GPT-5, Claude, LLaMA, Gemini, Flux Pro, Kling) | Enterprise-grade RBAC, SSO/SAML, immutable audit logs, and compliance with SOC 2, ISO 27001, GDPR, and HIPAA | Real-time FinOps layer tracking token usage; pay-as-you-go TOKN credits that can reduce AI costs by up to 98% | Flexible deployment options (cloud, private VPC, on-prem, hybrid); multi-model and multi-cloud compatibility; regional isolation and disaster recovery for global resilience |
| Microsoft Copilot and Fabric | Seamless integration with Microsoft 365, Azure, and Dynamics 365; extensive prebuilt connectors; GUI automation for workflows without APIs | Azure AD authentication, data residency, DLP controls, and governance via Microsoft Purview | Consumption-based pricing combined with Azure Cost Management for tracking | Enterprise-scale deployment leveraging Azure's global infrastructure |
| IBM Watson Orchestrator | Modular platform with agentic workflows and preconfigured agents tailored for complex enterprise setups | Fine-grained RBAC, audit logs, and governance for regulated industries; compliant with SOC 2 and ISO 27001 | Consumption-based pricing with cost monitoring through IBM Cloud dashboards | Hybrid and multi-cloud deployment across IBM Cloud, OpenShift, and customer environments - suited for regulated industries |
| Amazon Bedrock Enterprise Suite | Native AWS integration with infrastructure-grade controls like VPC, PrivateLink, and CloudFormation; broad range of enterprise connectors | Integrated governance with AWS identity, networking, and security features (IAM, VPC, KMS, CloudWatch) | Consumption-based pricing; serverless architecture with cost insights via AWS Cost Explorer | Fully managed, serverless architecture for global scalability |
| Google Vertex AI Command Center | Tight integration with Google Cloud and Workspace tools (Gmail, Docs, Sheets, Drive, Meet) and support for multi-modal and custom model development | Centralized governance with Google Workspace Admin Center, including DLP, data region settings, and auditability | Consumption-based pricing for training, predictions, and hosting; cost monitoring through observability dashboards | High performance within the Google Cloud ecosystem, though portability outside GCP is limited |

This table highlights the distinct strengths of each platform. Prompts.ai stands out with its multi-cloud deployment options, significantly reducing vendor lock-in. Its real-time FinOps layer provides granular token-level cost tracking, ensuring organizations can optimize expenses without being tied to a single provider.

Each platform targets core enterprise security standards such as SOC 2, ISO 27001, GDPR, and HIPAA, though the specific certifications cited differ by vendor. Prompts.ai enhances governance with immutable audit logs and fine-grained RBAC across its architecture. Microsoft leverages Azure AD and Purview for unified governance, while IBM Watson Orchestrator emphasizes compliance through its modular framework. Amazon Bedrock Enterprise Suite and Google Vertex AI Command Center integrate governance directly into their robust cloud security systems.

On the cost front, Prompts.ai offers a pay-as-you-go model through TOKN credits, avoiding recurring subscription fees and aligning spending with actual usage. In contrast, Microsoft, Amazon, and Google use consumption-based pricing bundled with their broader cloud services. Prompts.ai’s transparency, aided by its FinOps layer, provides real-time insights into AI costs, a feature not as prominent in other platforms.

Scalability depends largely on your current infrastructure. Prompts.ai delivers flexibility with regional isolation and disaster recovery, making it ideal for enterprises operating across multiple environments or requiring on-premises options. Meanwhile, Microsoft, AWS, and Google excel in scalability within their ecosystems but may introduce vendor lock-in. IBM Watson Orchestrator bridges these approaches with hybrid and multi-cloud support, catering to businesses with diverse global infrastructure needs.

AI command centers have become a cornerstone for managing the intricate AI ecosystems of 2026. These platforms address a pressing need: how to efficiently design, coordinate, and oversee AI agents at scale while ensuring productivity, compliance, and operational efficiency. By automating routine tasks, they allow teams to focus on more strategic, impactful work.

Selecting the right platform begins with aligning it to your business strategy. The solution should directly address your organization's unique challenges and opportunities. A strong emphasis on data quality and management is crucial - clean, accessible data with seamless retrieval and retention capabilities is non-negotiable.

Interoperability and scalability are equally critical to your AI strategy's success. The most effective AI command centers integrate no-code tools, model orchestration, and governance features, enabling you to prototype, test, and safely deploy intelligent agents across diverse systems. Platforms with built-in connectors that effortlessly integrate with your existing enterprise infrastructure are especially valuable.

Each of the five solutions discussed offers distinct advantages in enterprise AI orchestration. The key is to choose one that aligns with your infrastructure, compliance requirements, budget, and long-term AI goals - prioritizing overall fit rather than focusing solely on individual features, as outlined in the comparison above.
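One way to weigh overall fit rather than individual features is a simple weighted decision matrix. The sketch below is illustrative only: the weights and the 1-to-5 scores are made-up placeholders for two unnamed candidates, not assessments of any platform discussed here, and `weighted_score` and `rank_platforms` are hypothetical helper names.

```python
def weighted_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Return the weighted average of criterion scores, normalized by total weight."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    missing = weights.keys() - scores.keys()
    if missing:
        raise KeyError(f"missing scores for: {sorted(missing)}")
    weighted_sum = sum(scores[criterion] * weight for criterion, weight in weights.items())
    return round(weighted_sum / total_weight, 2)


def rank_platforms(candidates: dict[str, dict[str, float]],
                   weights: dict[str, float]) -> list[tuple[str, float]]:
    """Score every candidate and sort from best to worst overall fit."""
    ranked = [(name, weighted_score(scores, weights)) for name, scores in candidates.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Placeholder weights reflecting one organization's priorities.
    weights = {"interoperability": 3, "compliance": 3, "cost": 2, "scalability": 2}
    # Placeholder 1-5 scores for two unnamed candidates.
    candidates = {
        "Platform A": {"interoperability": 4, "compliance": 5, "cost": 3, "scalability": 4},
        "Platform B": {"interoperability": 5, "compliance": 3, "cost": 4, "scalability": 3},
    }
    for name, score in rank_platforms(candidates, weights):
        print(name, score)
```

Changing the weights to match your own infrastructure, compliance, and budget priorities can reorder the ranking, which is why the criteria matter more than any single feature.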

AI command centers enable businesses to cut costs by simplifying workflows, unifying access to various AI tools, and automating routine tasks. By bringing multiple AI models together on one platform, they eliminate the need for separate systems and reduce reliance on manual labor, which can lead to noticeable reductions in operational expenses.

These platforms also ensure smarter resource management, allowing companies to use only the computing power and storage necessary for their needs. This level of efficiency not only saves money but also supports scalable growth without unnecessary spending.

When considering an AI command center for enterprise use, prioritize compliance features that meet industry standards and regulatory requirements. Look for tools that provide secure data handling to protect sensitive information, detailed audit trails to track system activities, and governance controls to address potential bias or performance challenges.

It's also essential that the platform supports automatic updates to adapt to changing regulations and aligns with industry-specific standards such as HIPAA for healthcare or SOX for financial services. These capabilities not only ensure operational security but also enhance trust and reliability in AI-powered workflows.

To build a scalable AI command center, businesses need to prioritize dynamic resource allocation to effectively manage fluctuating demands. Equally important is the use of interoperable platforms that can seamlessly connect with a variety of AI technologies, ensuring workflows remain efficient and cohesive. Lastly, establishing robust governance and security measures is essential to maintain compliance and support the organization’s growth and evolution.