AI workflow tools are changing how teams manage machine learning (ML) projects by addressing the inefficiencies of disconnected systems. This article highlights five platforms - Prompts.ai, Kubeflow, Metaflow, Gumloop, and n8n - each of which approaches scalability, integration, governance, and cost control differently.

These tools cater to diverse needs, from Fortune 500 compliance to budget-conscious startups. Choosing the right one depends on your team’s expertise, budget, and project goals.

Quick Comparison

| Tool | Key Features | Best For | Cost Model |
|---|---|---|---|
| Prompts.ai | 35+ AI models, governance, cost tracking | Enterprises prioritizing cost savings | Pay-as-you-go (TOKN credits) |
| Kubeflow | Kubernetes-based, advanced ML features | Teams with strong DevOps expertise | Open-source, infra costs |
| Metaflow | Python-based, simple workflow management | Data scientists | Open-source, cloud costs |
| Gumloop | Compliance controls, centralized management | Regulated industries | Subscription-based |
| n8n | Open-source, flexible automation | Budget-conscious teams | Workflow-based pricing |

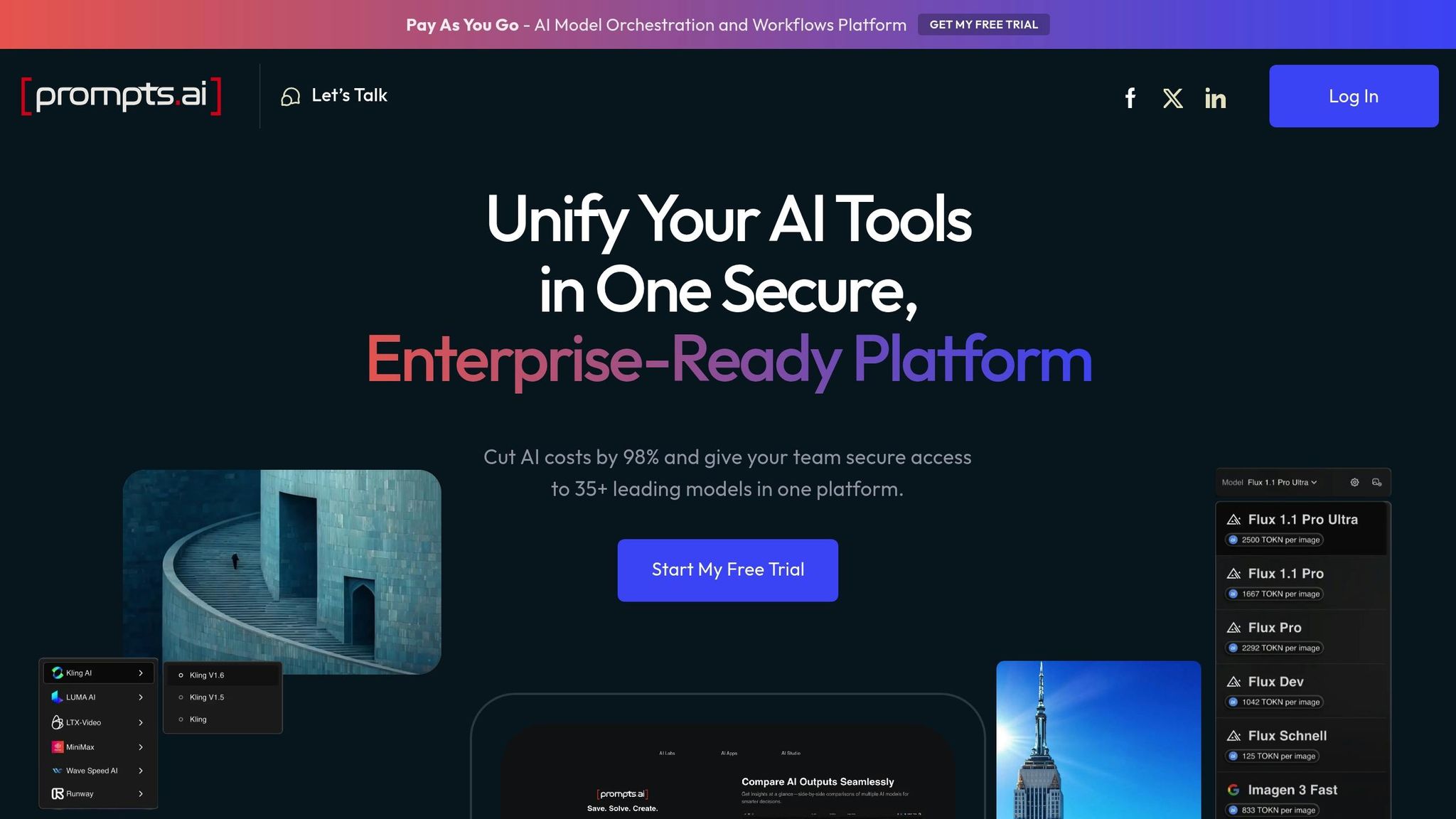

Prompts.ai has established itself as a standout AI orchestration platform, addressing the key challenges faced by modern ML teams. By offering a unified interface for over 35 leading language models - such as GPT-5, Claude, LLaMA, and Gemini - it eliminates the need for juggling multiple disconnected tools. This streamlined approach is especially beneficial for teams looking to reduce the complexity of managing various AI tools while adhering to strict governance standards. Prompts.ai’s ability to simplify and organize ML workflows sets it apart in the crowded field of AI solutions.

Built on a cloud-native architecture, Prompts.ai is designed to handle growing data volumes and user demands effortlessly. The platform enables teams to orchestrate complex workflows with parallel processing, making it suitable for both small businesses and large enterprises like Fortune 500 companies.

One of its key strengths is the ability to scale new models, users, and teams within minutes. This rapid deployment capability is critical for organizations needing to adapt quickly to evolving business needs or expand AI initiatives across multiple departments. With its forward-thinking design, Prompts.ai ensures that ML pipelines can grow in step with organizational demands.

Prompts.ai’s extensive connectors and APIs allow it to integrate seamlessly with a wide range of tools and platforms. It works effortlessly with ML frameworks like TensorFlow and PyTorch, cloud storage options such as AWS S3 and Google Cloud, and business applications like Slack and Salesforce.

This interoperability enables teams to automate workflows across diverse environments without overhauling existing infrastructure. By fitting into established tech stacks, organizations can maximize their current investments while accessing cutting-edge AI capabilities - all while meeting U.S. data residency and compliance standards.

For industries with stringent regulations, Prompts.ai offers robust governance features. Role-based access control ensures that only authorized individuals can interact with specific workflows and models, while comprehensive audit logs provide full traceability of AI activities.

The platform also includes version control for workflows and prompts, giving teams the ability to manage and monitor their AI processes with precision. This level of oversight is essential for compliance with regulations such as GDPR and HIPAA, making Prompts.ai an ideal choice for organizations requiring secure and compliant AI operations.

Prompts.ai takes a transparent and optimized approach to cost management. A built-in FinOps layer monitors token usage in real time, ensuring that expenses align directly with outcomes. This level of cost tracking helps organizations avoid unexpected charges often associated with rapid AI adoption.

The platform’s pay-as-you-go TOKN credit system eliminates traditional subscription fees, allowing costs to reflect actual usage instead of projections. Many organizations report savings of up to 98% on AI software costs by consolidating tools and optimizing resource allocation. This flexibility is especially helpful for teams with variable workloads, as they can scale resources up or down as needed without committing to rigid pricing structures.
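To make usage-based cost tracking concrete, here is a minimal sketch of a ledger that converts token counts into spend per team. It is not Prompts.ai's API: the `UsageLedger` class, the model names, and the per-1,000-token prices are all hypothetical and chosen only to keep the arithmetic easy to follow.

```python
from collections import defaultdict
from dataclasses import dataclass, field

# Hypothetical prices in USD per 1,000 tokens; not real vendor pricing.
PRICE_PER_1K = {"model-a": 0.50, "model-b": 2.00}


@dataclass
class UsageLedger:
    """Accumulates token counts and spend per team (illustrative only)."""
    tokens: dict = field(default_factory=lambda: defaultdict(int))
    spend: dict = field(default_factory=lambda: defaultdict(float))

    def record(self, team: str, model: str, n_tokens: int) -> float:
        # Cost follows actual usage: tokens consumed times the model's rate.
        cost = n_tokens / 1000 * PRICE_PER_1K[model]
        self.tokens[team] += n_tokens
        self.spend[team] += cost
        return cost


ledger = UsageLedger()
ledger.record("support", "model-a", 4000)   # 4.0 * 0.50
ledger.record("support", "model-b", 1500)   # 1.5 * 2.00
ledger.record("research", "model-b", 500)   # 0.5 * 2.00
```

Because every call is recorded when it happens, spend per team can be read at any point rather than reconstructed from an invoice at the end of the month.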

Additionally, by automating repetitive tasks and accelerating deployment, Prompts.ai boosts productivity and enhances return on investment. It’s a solution designed to deliver both efficiency and value, no matter the size or scope of the team.

Kubeflow, developed by Google, is an open-source machine learning platform built on Kubernetes. It aims to make machine learning workflows portable and scalable, accommodating a wide range of environments. This platform has become a go-to choice for enterprises seeking to standardize their ML operations on cloud-native infrastructure. Its ability to orchestrate complex pipelines while leveraging Kubernetes for container management has made it particularly appealing.

Kubeflow’s foundation on Kubernetes allows it to dynamically allocate resources based on workload demands, ensuring scalability for both small experimental projects and large production deployments. Its microservices-based architecture enables individual components to scale independently, which optimizes both resource use and overall performance. For instance, teams can deploy approximately 30 Pods within the Kubeflow namespace to efficiently handle varying ML workloads.

Kubeflow is designed with cloud-native principles, making it compatible with major cloud providers and on-premises setups. It supports widely used ML frameworks such as TensorFlow, PyTorch, and scikit-learn, allowing teams to continue using their preferred tools without being locked into a specific vendor. Its pipeline system further enhances interoperability by enabling the creation of workflows that run consistently across diverse environments. This feature is especially valuable for organizations operating in hybrid cloud setups or planning migrations, as it ensures workflow portability while helping to manage infrastructure costs effectively.

While Kubeflow is open-source and free to use, the associated infrastructure costs can be significant, particularly for smaller projects. As MLOps Engineer Ines Benameur from Gnomon Digital notes:

"While Kubeflow is open source, it does incur costs associated with maintaining infrastructure, including the need for container environments and computing resources. This upfront investment and ongoing expenses might not be feasible for all companies, as deploying a full suite of Kubeflow components and add-ons requires a considerable resources allocation".

Organizations can mitigate these costs by employing strategies such as using Spot VMs for compute needs and fine-tuning node counts, machine types, and resource configurations (CPU, memory, and GPUs) to align with workload requirements. Kubeflow Pipelines also include features like built-in caching and parallel task execution, which help eliminate redundant computations and maximize resource efficiency. For cloud deployments, managed services like Amazon RDS for metadata storage, Amazon S3 for artifacts, and Amazon EFS for file storage can further reduce operational overhead. Intelligent resource management plays a key role in keeping expenses under control while maintaining performance. With careful planning and ongoing optimization, the initial infrastructure investment in Kubeflow can lead to significant operational efficiencies and reduced manual effort over time.
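The idea behind step caching can be illustrated with a small, library-free sketch: a step is re-run only when its name or inputs change. This is a conceptual illustration under simplifying assumptions, not how Kubeflow Pipelines implements caching; `run_step`, `normalize`, and the in-memory `_cache` dictionary are hypothetical names.

```python
import hashlib
import json

_cache = {}   # maps a hash of (step name, inputs) to the stored result
calls = []    # records which steps actually executed


def run_step(name, func, **inputs):
    """Run func(**inputs) unless an identical call was already computed."""
    key = hashlib.sha256(
        json.dumps([name, inputs], sort_keys=True).encode()
    ).hexdigest()
    if key not in _cache:
        calls.append(name)
        _cache[key] = func(**inputs)
    return _cache[key]


def normalize(values):
    # Stand-in for an expensive preprocessing step.
    top = max(values)
    return [v / top for v in values]


first = run_step("normalize", normalize, values=[2, 4, 8])
second = run_step("normalize", normalize, values=[2, 4, 8])  # cache hit, not re-run
third = run_step("normalize", normalize, values=[1, 2])      # new inputs, re-run
```

Only two of the three calls execute `normalize`; the repeated call returns the stored result, which is where the compute savings come from.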

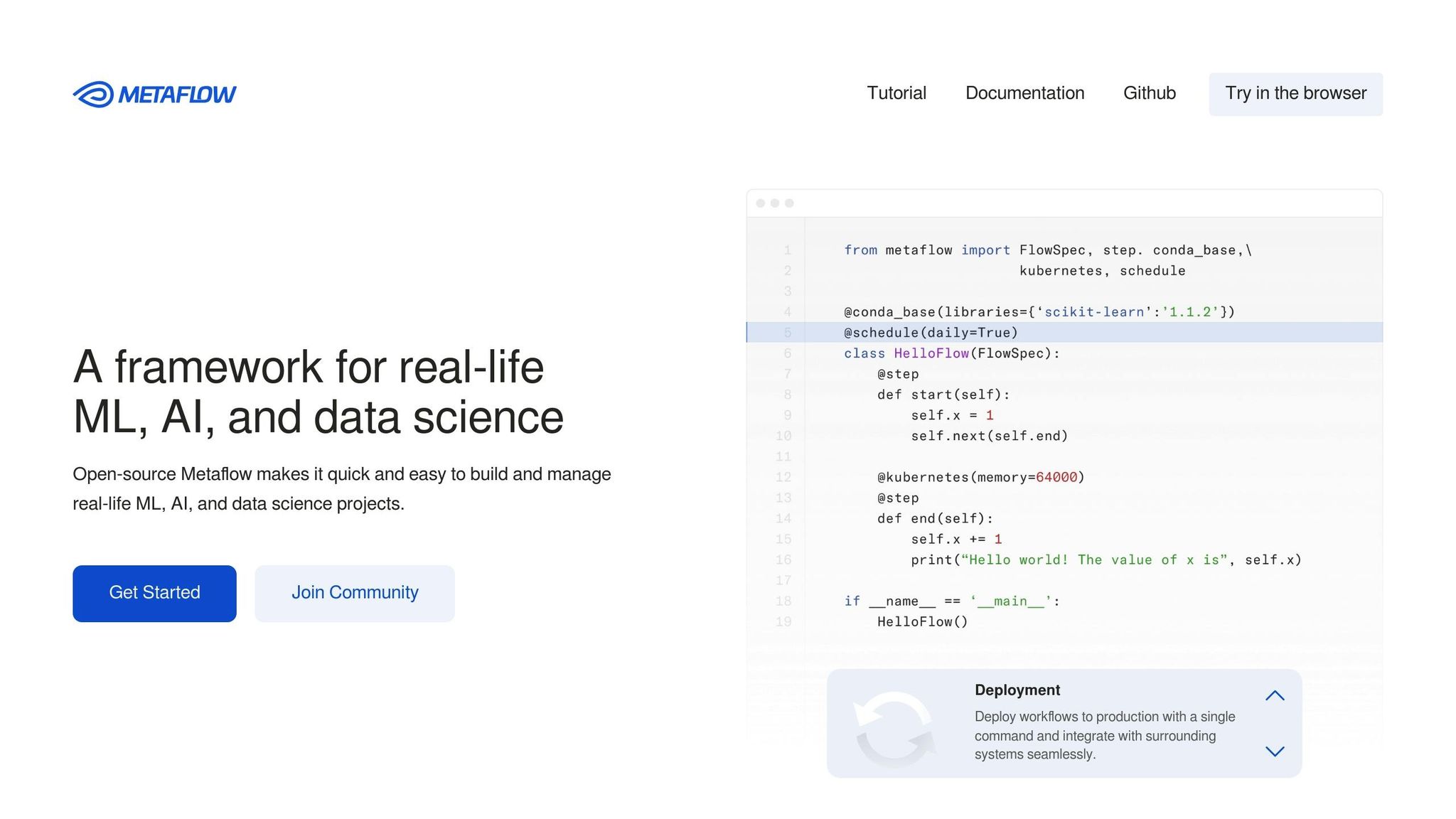

Metaflow is a Python-based framework designed to simplify data science workflows, allowing teams to focus more on developing models rather than managing operations.

Metaflow is built to handle workflows of all sizes. Its step-based structure organizes tasks and supports parallel execution, which cuts processing time. Compute resources can be adjusted for each workflow step, so each step uses only what it needs. It also works with widely used Python libraries, making it a flexible choice for a range of projects.
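The step-and-branch pattern described above can be sketched with the Python standard library alone. This deliberately does not use the Metaflow API; the `start`, `train`, and `join` functions, the learning rates, and the scoring formula are hypothetical stand-ins that show the shape of a fan-out followed by a join.

```python
from concurrent.futures import ThreadPoolExecutor


def start():
    # Hypothetical learning rates to evaluate in parallel.
    return [0.01, 0.1, 1.0]


def train(lr):
    # Stand-in for a training step; the score is a made-up function of lr.
    return {"lr": lr, "score": round(1.0 - abs(lr - 0.1), 3)}


def join(results):
    # Merge the parallel branches by keeping the best-scoring run.
    return max(results, key=lambda r: r["score"])


params = start()
with ThreadPoolExecutor(max_workers=3) as pool:
    # Each parameter runs as its own branch; map() preserves input order.
    results = list(pool.map(train, params))
best = join(results)
```

In a real workflow framework each branch could also request its own compute resources, which is what allows a heavy training step and a light preprocessing step to be sized differently.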

Metaflow is deeply rooted in the Python ecosystem, ensuring seamless compatibility with essential Python tools. It includes a built-in artifact management system, which simplifies data versioning and tracks lineage. This feature bolsters experiment reproducibility and streamlines team collaboration, making it easier to manage and share results.

With a focus on cost-conscious development, Metaflow encourages local testing and development before scaling to the cloud. Its ability to allocate resources intelligently and deactivate unused ones helps avoid unnecessary expenses. This thoughtful approach to resource management ensures that teams can operate efficiently without overspending.

Gumloop is a platform designed to streamline workflow automation while ensuring enterprise-level oversight for machine learning (ML) operations. It tackles challenges like compliance, security, and centralized management, which are common hurdles when scaling AI workflows.

Gumloop stands out with its strong governance tools. At the heart of its system is the AI Model Governance & Configuration feature, which gives administrators full control over AI usage, credentials, and routing.

"AI Model Governance & Configuration provides enterprise organizations with comprehensive control over AI usage, credentials, and routing. These features enable administrators to implement security policies, manage costs, ensure compliance, and maintain centralized control over AI automation workflows."

Another key capability is AI Model Access Control, which allows administrators to enforce detailed restrictions on which AI models can be accessed by team members. This feature offers two modes: Allow List Mode and Deny List Mode. The Allow List Mode is particularly suited for organizations that must adhere to strict compliance standards, as it limits access to pre-approved models that meet specific regulatory or data residency requirements.

"Allow List Mode: Best for strict control environments. Users can only access explicitly permitted models. Recommended for compliance-heavy organizations."

For industries with strict regulations, Gumloop’s AI Proxy Routing feature ensures all AI requests are directed through compliant infrastructure. For instance, an organization could set up a proxy URL like https://eu-ai-proxy.company.com/v1 to ensure requests comply with EU regulations while maintaining detailed audit trails.

These governance tools not only enhance security but also pave the way for better cost management.

Gumloop simplifies cost control with its Organization Credentials system, which centralizes API key management. This ensures all AI calls are routed through organization-controlled accounts, reducing the risk of unauthorized usage and providing clear billing oversight.

"Security and Governance: All AI calls use audited, organization-controlled credentials to prevent unauthorized usage."

The Model Access Control feature also helps avoid accidental use of costly or inappropriate models. Additionally, administrators can configure fallback models to maintain workflow continuity when restricted models are requested. By centralizing credential management and providing precise tracking, Gumloop helps organizations keep costs in check without compromising functionality.
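The allow-list, deny-list, and fallback behavior described above can be sketched in a few lines. This is an illustration of the policy logic only, not Gumloop's implementation or API; `Mode`, `resolve_model`, and the model names are hypothetical, and the sketch assumes the configured fallback is itself an approved model.

```python
from enum import Enum
from typing import Optional


class Mode(Enum):
    ALLOW_LIST = "allow"   # only listed models are permitted
    DENY_LIST = "deny"     # every model except the listed ones is permitted


def resolve_model(requested: str, mode: Mode, listed: set,
                  fallback: Optional[str] = None) -> str:
    """Return the model to use, or raise if the request cannot be served."""
    if mode is Mode.ALLOW_LIST:
        permitted = requested in listed
    else:
        permitted = requested not in listed
    if permitted:
        return requested
    if fallback is not None:
        # Assumption: the administrator configured an approved fallback.
        return fallback
    raise PermissionError(f"model {requested!r} is not permitted")


approved = {"model-eu-1", "model-eu-2"}
ok = resolve_model("model-eu-1", Mode.ALLOW_LIST, approved)
swapped = resolve_model("model-x", Mode.ALLOW_LIST, approved, fallback="model-eu-2")
```

Allow-list mode fails closed: a model that nobody has reviewed is rejected by default, which is why it suits compliance-heavy environments better than deny-list mode.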

Beyond governance and cost management, Gumloop integrates effortlessly with existing enterprise AI gateways. This compatibility allows organizations to retain their current security policies while leveraging Gumloop’s workflow capabilities. Its features - Model Access Control, Organization Credentials, and AI Proxy Routing - work together to ensure seamless deployment without disrupting established compliance or security frameworks.

All configurations are safeguarded with encrypted storage, secure data transmission, and detailed audit logs, making Gumloop a reliable choice for even the most security-conscious environments.

As we continue exploring advanced ML workflow platforms, n8n stands out as a prime example of how open-source tools can deliver enterprise-grade performance while keeping operational costs low. This platform has become a go-to choice for data science teams seeking flexible automation solutions that align with tight budgets.

n8n's queue mode is built to handle enterprise-level demands, supporting a high volume of users and workflows seamlessly. Its modular architecture allows workflows to be easily adapted and reused across departments, enabling organizations to expand their ML operations without unnecessary complexity.

For AI-driven applications, n8n integrates a Simple Memory node that stores and retrieves conversation context. This feature is crucial for maintaining coherent interactions in growing conversational AI projects. In production settings, it can connect to external databases like PostgreSQL for persistent storage of context, ensuring reliability at scale.
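As a rough illustration of session-based conversation memory, the sketch below keeps the most recent messages per session in a bounded buffer. It is not n8n's node implementation; `SessionMemory`, its `window` parameter, and the in-process storage are assumptions made for the example, and a production setup would persist this state in a database instead.

```python
from collections import defaultdict, deque


class SessionMemory:
    """Keeps the last `window` messages for each session (illustrative only)."""

    def __init__(self, window: int = 4):
        # A deque with maxlen drops the oldest message once the window is full.
        self._sessions = defaultdict(lambda: deque(maxlen=window))

    def add(self, session_id: str, role: str, text: str) -> None:
        self._sessions[session_id].append((role, text))

    def context(self, session_id: str) -> list:
        return list(self._sessions[session_id])


memory = SessionMemory(window=2)
memory.add("s1", "user", "Where is my order?")
memory.add("s1", "assistant", "Can you share the order number?")
memory.add("s1", "user", "It is 1234.")
memory.add("s2", "user", "Hello")
```

After the third message, session `s1` holds only the two most recent entries, and session `s2` is tracked independently.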

In August 2025, Vinod Chugani showcased n8n's scalability by creating an AI-powered feature engineering workflow. This system transformed individual expertise into an organization-wide resource by integrating large language models for intelligent recommendations. It also connected seamlessly with ML training pipelines like Kubeflow and MLflow, enabling even junior data scientists to tap into insights from seasoned professionals. These capabilities highlight n8n's ability to support both emerging and established AI initiatives.

n8n's pricing model differs from that of many workflow platforms. Rather than charging per operation or task, it charges only for complete workflow executions. As a result, even intricate AI workflows with thousands of tasks can run without ballooning costs. For instance, workflows that might cost hundreds of dollars on other platforms can operate for around $50 per month on n8n’s Pro plan.
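The difference between the two billing models is easiest to see as arithmetic. Every number in the sketch below is hypothetical (execution counts, tasks per execution, and unit prices are not n8n's or any vendor's actual rates); the point is only how each model scales.

```python
import math


def per_task_cost(executions: int, tasks_per_execution: int,
                  price_per_task: float) -> float:
    # Billing grows with every task inside every execution.
    return executions * tasks_per_execution * price_per_task


def per_execution_cost(executions: int, price_per_execution: float) -> float:
    # Billing grows only with the number of complete workflow runs.
    return executions * price_per_execution


# Hypothetical workload: 1,000 runs per month, 200 tasks per run.
executions = 1_000
task_billed = per_task_cost(executions, 200, 0.001)
run_billed = per_execution_cost(executions, 0.05)

# Doubling the tasks per run doubles the per-task bill but leaves
# the per-execution bill unchanged.
task_billed_bigger = per_task_cost(executions, 400, 0.001)
```

Under these assumed prices the per-task bill is about four times the per-execution bill, and the gap widens as workflows gain more steps.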

One of n8n's strongest features is its ability to connect various systems and services, making it an excellent choice for ML workflows that rely on data from multiple sources and need to deliver results across diverse platforms. Its self-hosted deployment option provides full infrastructure control, allowing for tailored implementations. The platform’s extensive library of integrations includes cloud storage services, ML platforms, and communication tools, ensuring seamless interoperability.

In August 2025, a user leveraged n8n to build an AI customer support system using ChatGPT, n8n, and Supabase. This system classified user intents, routed requests to specialized sub-agents for tasks like order tracking and product assistance, and maintained conversation context through session-based memory. This example underscores n8n’s ability to bridge systems and create cohesive, efficient workflows for complex AI applications.

After diving into detailed platform reviews, it's time to weigh the advantages and disadvantages of each tool. This comparison highlights key factors such as scalability, interoperability, governance, and cost efficiency.

Prompts.ai stands out by offering access to over 35 leading AI models, including GPT-5 and Claude, all within a secure platform. Its centralized model access, paired with real-time FinOps controls, can slash costs by up to 98%, making it an attractive option for enterprises prioritizing cost savings and governance.

Kubeflow, on the other hand, provides a robust suite of machine learning features like hyperparameter tuning, distributed training, and real-time serving capabilities. However, it comes with high operational demands, often requiring significant DevOps expertise to manage deployments effectively.

Metaflow, developed by Netflix, takes a data-scientist-focused approach. By abstracting much of the infrastructure complexity, it lets data scientists concentrate on building models rather than wrestling with operational challenges, which improves productivity.

| Tool | Strengths | Weaknesses |
|---|---|---|
| Prompts.ai | Centralized access to 35+ AI models; up to 98% cost reduction; enterprise governance | |
| Kubeflow | Advanced ML features like distributed training and real-time serving | High operational overhead; requires DevOps expertise |
| Metaflow | Simplifies infrastructure management; data-scientist-focused productivity boost | |

Ultimately, the best choice depends on your team's technical expertise and organizational goals. For those with strong Kubernetes experience, Kubeflow offers a feature-rich environment. If simplifying infrastructure management is a priority, Metaflow is a great fit. Meanwhile, Prompts.ai is ideal for organizations seeking centralized model access and cost efficiency.

This comparison sheds light on how different AI workflow tools cater to various organizational needs. For enterprises seeking streamlined AI orchestration and significant cost savings, Prompts.ai stands out, offering up to 98% cost reduction and access to over 35 leading models - an attractive option for those prioritizing efficiency and scalability.

Kubeflow provides robust technical features tailored for teams with strong Kubernetes expertise. However, its higher operational demands make it more suitable for organizations with dedicated DevOps support. On the other hand, Metaflow simplifies infrastructure management, allowing data science teams to focus on model development without getting bogged down by operational complexities.

For specialized needs, Gumloop and n8n shine by offering no-code automation and custom integration capabilities, making them valuable additions to a larger machine learning workflow.

Choosing the right tool depends on your team’s technical expertise, budget constraints, and governance priorities. Teams with limited DevOps resources may benefit from platforms that reduce infrastructure complexity, while those with strict compliance requirements should prioritize tools with strong audit and security features. Transparent pricing and real-time cost tracking are especially appealing for budget-conscious teams.

Ultimately, aligning the platform’s strengths with your specific challenges - whether it’s cutting costs, simplifying operations, or enhancing model accessibility - will help ensure the best fit for your team and drive both innovation and efficiency.

Prompts.ai empowers businesses to cut AI software costs by as much as 98% through a combination of dynamic routing, real-time cost tracking, and a pay-as-you-go model. These tools are designed to streamline resource use and eliminate wasteful spending.

By offering features like token savings of around 6.5% and reducing prompt routing costs by up to 78%, Prompts.ai provides a cost-effective way for enterprises to scale their AI operations. This approach helps businesses achieve better returns on their AI investments while keeping operational expenses in check.

Kubeflow demands a strong technical background, particularly in Kubernetes and DevOps, due to its intricate architecture and the significant customization it often requires. Teams working with Kubeflow typically need expertise in managing cloud infrastructure and advanced deployment strategies to utilize it effectively.

In contrast, Metaflow emphasizes ease of use and accessibility, making it a more suitable choice for data science teams with limited technical expertise. Its design minimizes the need for deep knowledge of Kubernetes or DevOps, streamlining the implementation process. Simply put, Kubeflow is better suited for technically advanced teams, while Metaflow caters to those who value simplicity and straightforward deployment.

Gumloop supports organizations in regulated industries by prioritizing security and compliance. With features like audit logging, it enables tracking of workflow executions, data access, and system activities, promoting accountability and meeting regulatory demands.

The platform also complies with established security standards, including SOC 2 Type 2 and GDPR, ensuring data protection and integrity. These safeguards help businesses navigate stringent compliance requirements while fostering trust and reliability in their AI processes.