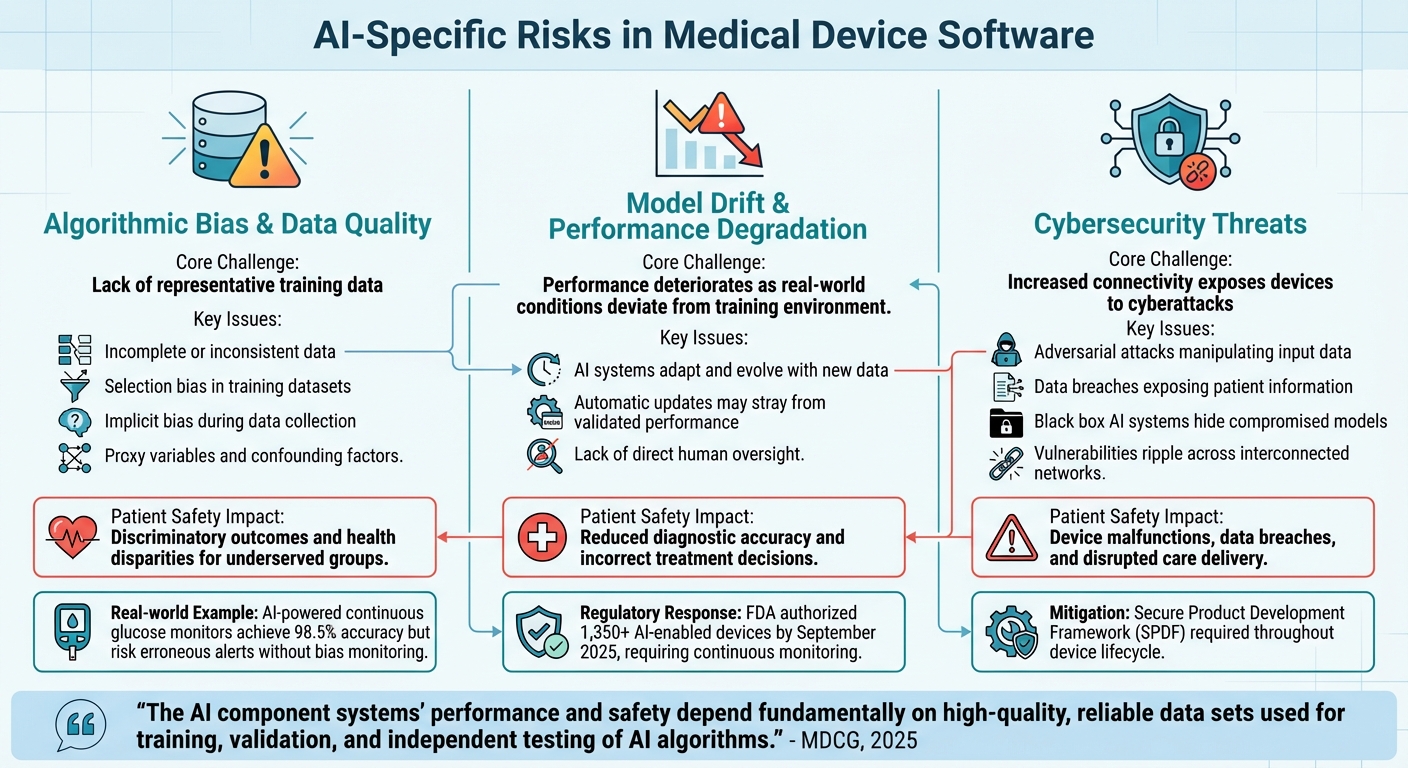

Artificial intelligence (AI) is transforming healthcare, but it comes with risks that demand careful management. AI in medical devices offers advanced diagnostic capabilities, personalized treatments, and continuous learning from clinical data. However, its dynamic nature introduces challenges like algorithmic bias, performance degradation (model drift), and cybersecurity vulnerabilities. These issues can directly impact patient safety, making structured risk management critical.

Key highlights:

To address these, regulators like the FDA have established frameworks such as Predetermined Change Control Plans (PCCPs) and Good Machine Learning Practice (GMLP). These ensure AI systems remain safe and effective throughout their lifecycle. Risk assessment tools like the NIST AI Risk Management Framework and continuous real-time monitoring are vital for maintaining device reliability and compliance.

Manufacturers must prioritize high-quality, diverse training data, implement safeguards against model drift, and establish cybersecurity protocols. By integrating these strategies, healthcare organizations can balance innovation with safety, ensuring AI-powered devices deliver reliable outcomes.

AI Risk Categories in Medical Devices: Challenges and Patient Safety Impact

AI-powered medical devices operate dynamically, relying on data-driven decision-making rather than fixed "if-then" rules. This shift introduces three major risk areas that manufacturers must address to ensure safety and effectiveness. Below, we explore these risks and their potential consequences.

The reliability of AI medical devices hinges on the quality and diversity of their training data. When datasets fail to represent a wide range of patients - spanning race, ethnicity, age, sex, and clinical contexts - algorithms can yield biased outcomes, potentially leading to unsafe results for underrepresented groups. This not only raises fairness concerns but also poses real risks to patient safety by exacerbating health disparities.

Bias can stem from various sources, such as incomplete or inconsistent data, selection bias when training data doesn't mirror real-world populations, and implicit bias introduced during data collection. Additional factors, like proxy variables and confounding influences, can further distort model accuracy.

"The AI component systems' performance and safety depend fundamentally on high-quality, reliable data sets used for training, validation, and independent testing of AI algorithms." - MDCG, 2025

Take, for example, continuous glucose monitors powered by AI. These devices have achieved accuracy rates as high as 98.5% in predicting hypoglycemic events. However, without ongoing monitoring to address potential biases, they could produce erroneous alerts that put diabetic patients at risk. Ensuring demographic representation in training datasets and implementing strong data management practices are critical to minimizing such dangers.

Unlike traditional software, AI systems adapt and evolve with new data. This adaptability, while powerful, introduces the risk of model drift - where the system's performance deteriorates as real-world conditions deviate from the environment in which it was initially trained. Adaptive systems that make automatic updates may even stray from their validated performance without direct human oversight.

To mitigate this, manufacturers are turning to Predetermined Change Control Plans (PCCPs), which outline specific changes and protocols to ensure safe updates. Effective lifecycle management is becoming indispensable. By September 2025, the FDA had already authorized over 1,350 AI-enabled devices, underscoring the importance of continuous monitoring and robust change management strategies.

As connectivity in medical devices grows, so does the risk of cyberattacks. Adversarial attacks can manipulate input data to trigger incorrect AI outputs, while data breaches may expose sensitive patient information and compromise model integrity. The opaque "black box" nature of some AI systems can make it even harder to detect compromised models or unreliable results.

To combat these threats, manufacturers should adopt a Secure Product Development Framework (SPDF). This approach integrates cybersecurity measures throughout a device's lifecycle, including securing data transmission channels, using strong authentication systems, monitoring for anomalies, and deploying security patches promptly without affecting clinical performance. With healthcare systems becoming increasingly interconnected, vulnerabilities in one device can ripple across networks, amplifying the risks.

Addressing these challenges requires ongoing risk assessment and proactive mitigation strategies to safeguard both device functionality and patient safety.

| Risk Category | Core Challenge | Patient Safety Impact |
|---|---|---|
| Data Quality & Bias | Lack of representative training data | Discriminatory outcomes and health disparities for underserved groups |
| Model Drift | Performance degradation as conditions change | Reduced diagnostic accuracy and incorrect treatment decisions |
| Cybersecurity | Vulnerabilities through increased connectivity | Device malfunctions, data breaches, and disrupted care delivery |

Manufacturers require well-structured strategies to identify and address AI-related risks effectively. Building on regulatory standards discussed earlier, these frameworks provide actionable steps for ongoing risk management. They strike a balance between advancing technology and maintaining safety, allowing companies to adapt their algorithms without submitting new applications for every update. These approaches offer a clear path for evaluating risks in AI software proactively.

The Predetermined Change Control Plan (PCCP) has emerged as a vital tool for managing risks in AI medical devices. This framework enables manufacturers to outline and gain approval for planned modifications to AI-enabled software without needing new marketing submissions for every update. A PCCP has three essential components: a Description of Modifications, a Modification Protocol, and an Impact Assessment.

"One of the greatest potential benefits of AI and ML resides in the ability to improve model performance through iterative modifications, including by learning from real-world data." – U.S. Food and Drug Administration

The NIST AI Risk Management Framework (AI RMF) complements this by focusing on four key functions: Govern (fostering a risk-aware culture), Map (identifying risks and their impacts), Measure (evaluating risks through quantitative or qualitative methods), and Manage (prioritizing and addressing risks based on their likelihood and impact).
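To make the Measure and Manage functions more concrete, here is a minimal Python sketch of a risk register that scores each risk as likelihood times impact and sorts the results for treatment. The NIST AI RMF does not prescribe a scoring formula; the 1 to 5 scales, the product score, and the example risks are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Risk:
    """One entry in a hypothetical AI risk register."""
    name: str
    likelihood: int  # illustrative scale: 1 (rare) to 5 (almost certain)
    impact: int      # illustrative scale: 1 (negligible) to 5 (catastrophic)

    @property
    def score(self) -> int:
        # Measure: a simple likelihood x impact product.
        return self.likelihood * self.impact


def prioritize(risks: List[Risk]) -> List[Risk]:
    """Manage: order risks for treatment, highest score first, ties broken by impact."""
    return sorted(risks, key=lambda r: (r.score, r.impact), reverse=True)


register = [
    Risk("Training data under-represents patients over 65", likelihood=3, impact=4),
    Risk("Input drift after an imaging-scanner firmware update", likelihood=4, impact=3),
    Risk("Unauthenticated model update accepted by device", likelihood=2, impact=5),
]

for risk in prioritize(register):
    print(f"{risk.score:>2}  {risk.name}")
```

Breaking ties on impact means that, of two equally scored risks, the one with the more severe potential harm is addressed first. A real register would also record owners, controls, and residual risk.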

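Another approach, the FDA's Risk-Based Credibility Assessment Framework, lays out a seven-step process. Its first steps define the question of interest and the Context of Use (COU), then assess model risk as a combination of model influence (how much the AI output drives the decision) and decision consequence (the impact of an incorrect decision). The remaining steps plan, execute, and document the credibility assessment, so that the model's performance can be shown to fit its intended purpose.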

"Credibility refers to trust, established through the collection of credibility evidence, in the performance of an AI model for a particular COU." – FDA

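The following is a sketch of how model influence and decision consequence could be combined into a model-risk tier. The three-level scale and the rule that sums the two levels are assumptions made for illustration. They are not the FDA's published mapping.

```python
from enum import IntEnum


class Level(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def model_risk(influence: Level, consequence: Level) -> Level:
    """Combine model influence and decision consequence into a model-risk tier.

    Assumption: the tier depends on the sum of the two ordinal levels. The
    cut-offs below are illustrative, not taken from FDA guidance.
    """
    total = int(influence) + int(consequence)  # ranges from 2 to 6
    if total <= 3:
        return Level.LOW
    if total == 4:
        return Level.MEDIUM
    return Level.HIGH


# Print the full 3 x 3 matrix: rows are model influence, columns are decision consequence.
for influence in Level:
    row = [model_risk(influence, consequence).name for consequence in Level]
    print(f"{influence.name:<6}", row)
```

In a framework like this, a higher tier calls for more extensive credibility evidence before the model is relied on for its COU.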
In January 2025, the International Medical Device Regulators Forum (IMDRF) introduced 10 guiding principles for Good Machine Learning Practice (GMLP). The Total Product Lifecycle (TPLC) approach further emphasizes managing risks continuously, from premarket development through postmarket performance. Once risks are assessed, targeted mitigation strategies become crucial.

Effective risk mitigation begins with robust data management. Training and test datasets must be both relevant - representing factors like race, ethnicity, disease severity, sex, and age - and reliable, meaning accurate, complete, and traceable. Training, tuning, and test datasets should also be kept separate, with the test data sourced independently, so that performance estimates are not inflated by data the model has already seen.
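One common way to keep the partitions independent is to split on the patient rather than on individual records, so that no patient contributes data to more than one partition. The sketch below assigns partitions deterministically by hashing a patient ID. The record fields and the 70/15/15 proportions are hypothetical. Some validation plans also require site-level or time-based splits, which this example does not cover.

```python
import hashlib


def assign_split(patient_id: str, tune_frac: float = 0.15, test_frac: float = 0.15) -> str:
    """Deterministically assign a patient to 'train', 'tune', or 'test'.

    Every record for a given patient lands in the same partition, so test
    results are not inflated by patients the model saw during training.
    """
    digest = hashlib.sha256(patient_id.encode("utf-8")).digest()
    # Map the first 8 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    if bucket < test_frac:
        return "test"
    if bucket < test_frac + tune_frac:
        return "tune"
    return "train"


# Hypothetical records: P001 has two images that must stay together.
records = [
    {"patient_id": "P001", "image": "a.png"},
    {"patient_id": "P001", "image": "b.png"},
    {"patient_id": "P002", "image": "c.png"},
    {"patient_id": "P003", "image": "d.png"},
]

splits = {"train": [], "tune": [], "test": []}
for rec in records:
    splits[assign_split(rec["patient_id"])].append(rec)

# Sanity check: no patient ID appears in more than one partition.
ids = {name: {r["patient_id"] for r in recs} for name, recs in splits.items()}
assert not (ids["train"] & ids["tune"] or ids["train"] & ids["test"] or ids["tune"] & ids["test"])
```

Hashing makes the assignment reproducible across runs and machines without storing a separate lookup table. This reproducibility also supports traceability.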

To combat algorithmic bias, manufacturers should assess performance across demographic subgroups and confirm that the training data reflects the intended-use population. To address model drift, they should establish performance triggers that determine when re-training or other intervention is necessary.
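A minimal sketch of the representation check: compare each subgroup's share of the training data with its share of the intended-use population, and flag subgroups whose difference exceeds a tolerance. The age bands, population shares, and 5-point tolerance are hypothetical.

```python
from collections import Counter


def representation_gaps(train_groups, target_shares, tolerance=0.05):
    """Return subgroups whose share of the training data differs from their share
    of the intended-use population by more than `tolerance` (absolute difference)."""
    counts = Counter(train_groups)
    n = sum(counts.values())
    if n == 0:
        raise ValueError("training data is empty")
    gaps = {}
    for group, expected in target_shares.items():
        observed = counts.get(group, 0) / n
        if abs(observed - expected) > tolerance:
            gaps[group] = {"observed": round(observed, 3), "expected": expected}
    return gaps


# Hypothetical age bands for ten training cases and hypothetical population shares.
train_age_bands = ["18-39"] * 5 + ["40-64"] * 4 + ["65+"] * 1
intended_use_population = {"18-39": 0.35, "40-64": 0.40, "65+": 0.25}

print(representation_gaps(train_age_bands, intended_use_population))
```

In this example, younger patients are over-represented and patients over 65 are under-represented, while the 40-64 band matches the target. A flagged gap is a prompt to collect more data or to justify the difference. It is not proof that the model is biased.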

Human-in-the-loop (HITL) testing is critical in scenarios where AI outputs influence clinical decisions. Engaging clinicians during testing and validation ensures the model's safety and effectiveness in real-world applications. Additionally, the user interface should clearly outline the AI system's intended use, limitations, and the characteristics of the data used during its development.

To mitigate cybersecurity risks, manufacturers should implement encryption for data transmission, strong authentication protocols, and continuous monitoring for anomalies. The PCCP framework also mandates rigorous verification and validation processes, ensuring updates - whether global or local - are managed safely and effectively.

Manufacturers can leverage the FDA's Q-Submission Program for feedback on PCCPs for high-risk devices. Non-compliance with an approved PCCP, such as failing to meet re-training or performance criteria, could result in a device being deemed "adulterated and misbranded" under the FD&C Act. These frameworks and mitigation strategies are essential for maintaining patient safety in dynamic clinical settings.

| PCCP Component | Key Elements | Purpose |
|---|---|---|
| Description of Modifications | Scope of changes, intended performance gains, impact on intended use | Specifies what will change in the AI system |
| Modification Protocol | Data management, re-training practices, performance evaluation, update procedures | Defines how changes will be implemented safely |
| Impact Assessment | Risk–benefit analysis, mitigation plans, TPLC safety considerations | Assesses whether benefits outweigh risks |

Once AI-powered medical devices are deployed, the focus shifts to ongoing risk management. This continuous oversight is essential to address challenges like model drift and to ensure the devices maintain their safety and effectiveness. A Total Product Lifecycle approach plays a critical role, monitoring how these devices perform in real-world scenarios while supporting compliance with regulatory standards. By building on established risk assessment practices, continuous monitoring ensures that the devices continue to meet the safety and efficacy benchmarks set during their development.

AI models face a unique challenge known as model drift, where their performance can degrade over time as real-world data evolves and diverges from the training data. Recognizing this, the International Medical Device Regulators Forum (IMDRF) emphasizes in Principle 10 of Good Machine Learning Practice (GMLP) the need for deployed models to be actively monitored for performance, with re-training risks carefully managed.

Real-time monitoring is a key component of post-market surveillance. It continuously evaluates an AI device's accuracy and reliability against the pre-established acceptance criteria in its Modification Protocol. Performance that falls below these thresholds signals a deviation from the authorized Predetermined Change Control Plan (PCCP). Effective monitoring systems track critical metrics such as sensitivity, specificity, and positive predictive value, with the level of scrutiny tailored to the device’s risk profile. Manufacturers must also define clear performance triggers in their Algorithm Change Protocol (ACP; the Modification Protocol in current PCCP terminology) to determine when intervention, such as re-training, is necessary.
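The check described above can be sketched in a few lines. The code computes sensitivity, specificity, and positive predictive value from one window of adjudicated cases and lists any metric that falls below its acceptance floor. The floors and the counts are hypothetical placeholders for the values a device's Modification Protocol would define.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Adjudicated outcomes for one monitoring window (binary task)."""
    tp: int
    fp: int
    tn: int
    fn: int


def _ratio(num: int, den: int, name: str) -> float:
    # Refuse to report a metric when the window contains no relevant cases.
    if den == 0:
        raise ValueError(f"cannot compute {name}: no cases in denominator")
    return num / den


def metrics(c: ConfusionCounts) -> dict:
    return {
        "sensitivity": _ratio(c.tp, c.tp + c.fn, "sensitivity"),
        "specificity": _ratio(c.tn, c.tn + c.fp, "specificity"),
        "ppv": _ratio(c.tp, c.tp + c.fp, "ppv"),
    }


# Hypothetical acceptance criteria standing in for those in a Modification Protocol.
ACCEPTANCE = {"sensitivity": 0.90, "specificity": 0.85, "ppv": 0.70}


def failed_criteria(c: ConfusionCounts, criteria: dict = ACCEPTANCE) -> list:
    """Names of metrics that fall below their acceptance floor."""
    observed = metrics(c)
    return [name for name, floor in criteria.items() if observed[name] < floor]


window = ConfusionCounts(tp=88, fp=20, tn=180, fn=12)  # hypothetical counts
print(metrics(window))
print(failed_criteria(window))  # ['sensitivity'] -> trigger investigation
```

Raising an error on an empty denominator is deliberate. A window with no positive cases should not silently pass the sensitivity check.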

"One of the greatest benefits of AI/ML in software resides in its ability to learn from real-world use and experience, and its capability to improve its performance".

However, this adaptability requires robust safeguards. Monitoring systems need to automatically detect failures and, if necessary, revert the device to a stable version or halt potentially unsafe changes. For high-risk devices used in critical diagnoses or treatments, clinical evaluation results should be reviewed by independent experts to ensure unbiased assessments.
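A minimal sketch of the revert-or-halt safeguard follows. A hypothetical registry tracks which model versions passed validation. If monitoring reports any failed acceptance criterion, the guard reverts to the previous validated version. If no earlier version exists, it raises an error so the function can be halted instead. The registry class and version strings are assumptions for illustration, not a specific product's API.

```python
from dataclasses import dataclass, field


@dataclass
class ModelRegistry:
    """Hypothetical registry of validated model versions (oldest first) and the live one."""
    validated: list = field(default_factory=list)
    active: str = ""

    def deploy(self, version: str) -> None:
        # Only versions that passed verification and validation may go live.
        if version not in self.validated:
            raise ValueError(f"{version} has not passed validation")
        self.active = version

    def rollback(self) -> str:
        """Revert to the validated version immediately before the active one."""
        idx = self.validated.index(self.active)
        if idx == 0:
            raise RuntimeError("no earlier validated version; halt the device function instead")
        self.active = self.validated[idx - 1]
        return self.active


def guard(registry: ModelRegistry, failed: list) -> str:
    """Revert when any acceptance criterion failed; otherwise keep the active version."""
    if failed:
        return registry.rollback()
    return registry.active


registry = ModelRegistry(validated=["1.0.0", "1.1.0"])
registry.deploy("1.1.0")
print(guard(registry, failed=["sensitivity"]))  # 1.0.0
```

Rolling back only to versions already in the validated list keeps the fallback within previously authorized performance. This design avoids switching to an untested configuration.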

To further enhance monitoring, manufacturers can collect Real-World Performance Data (RWPD). This data includes safety records, performance outcomes, and user feedback, offering insights into how the device operates across various clinical settings. Additionally, maintaining version control and detailed documentation of all changes creates a clear audit trail. This not only supports regulatory compliance but also helps identify and address performance issues efficiently.

By documenting these changes meticulously, manufacturers can transform continuous monitoring into actionable steps that enhance safety and compliance.

Accurate and consistent documentation of modifications, performance assessments, and deviations is vital for meeting regulatory requirements and maintaining transparent audit trails.
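One way to make a change history tamper-evident is to chain entries by hash. Each record stores the SHA-256 hash of the previous record, so any later edit to an earlier entry breaks verification. The sketch below is a minimal illustration under that assumption. The event names and fields are hypothetical, and a hash chain by itself does not establish regulatory compliance.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditTrail:
    """Append-only change log; each entry carries the hash of the previous entry."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    def record(self, event: str, details: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "event": event,
            "details": details,
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash every field except the hash itself, with sorted keys for a stable encoding.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode("utf-8")).hexdigest()

    def verify(self) -> bool:
        """Recompute every hash and check each link to the previous entry."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.record("model_update", {"from": "1.0.0", "to": "1.1.0"})
trail.record("performance_review", {"version": "1.1.0", "sensitivity": 0.93})
print(trail.verify())  # True

trail.entries[0]["details"]["to"] = "9.9.9"  # simulate tampering with an earlier record
print(trail.verify())  # False
```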

Prompts.ai simplifies this process by offering enterprise-grade governance tools and automated audit trails for AI workflows. Through a unified interface, manufacturers can document model changes, track performance metrics, and manage version control across more than 35 leading large language models - within a secure, centralized environment. These features ensure consistent documentation practices while providing real-time FinOps cost controls, helping organizations meet the transparency and reporting standards regulators demand.

The platform’s audit trail capabilities align with Quality System regulations (21 CFR Part 820), which require manufacturers to maintain a detailed "change history" and rigorous version control within the device master record. For organizations managing PCCPs across multiple AI-enabled devices, Prompts.ai’s centralized governance framework streamlines compliance by making all modifications, performance evaluations, and risk assessments easily accessible for regulatory reviews. This approach not only ensures transparency but also fosters trust among regulators and healthcare providers, allowing teams to concentrate on innovation without being bogged down by administrative tasks.

AI-powered medical devices that continuously learn demand a dynamic approach to risk management. The Total Product Lifecycle (TPLC) framework addresses this need by focusing on safety from the design phase all the way through real-world implementation. This method acknowledges that managing AI risks isn’t a one-time task but an ongoing process throughout the device’s lifespan. By connecting the dots between initial design and real-world application, the TPLC framework lays the groundwork for continuous regulatory and clinical integration.

Recent regulatory guidance, such as the FDA's guidance on PCCPs and the GMLP guiding principles, gives manufacturers clearer pathways for advancing their technologies.

"Our vision is that with appropriately tailored regulatory oversight, AI/ML-based SaMD will deliver safe and effective software functionality that improves the quality of care that patients receive." - FDA

Building trust in AI-enabled devices requires more than meeting regulatory standards - it hinges on transparency. Tackling issues like bias, monitoring for model drift, and thoroughly documenting changes are essential components of post-market surveillance. Companies that align these practices with established Quality Management System standards, such as ISO 13485, create a robust foundation for risk-based decision-making that benefits all stakeholders, including manufacturers, clinicians, and patients.

The transition from static, "locked" algorithms to adaptive, continuously learning systems brings both opportunity and responsibility. When paired with ongoing surveillance, these strategies ensure that safety remains a priority over time. By adopting comprehensive risk management approaches aligned with the TPLC framework, healthcare organizations can fully leverage AI's potential while keeping patient safety at the forefront at every stage of a device’s lifecycle.

Manufacturers can take meaningful steps to address algorithmic bias in AI medical devices by starting with diverse and representative training datasets. These datasets should encompass a broad range of patient demographics, including variations in age, gender, ethnicity, and clinical subgroups. Ensuring this diversity minimizes the risk of underrepresentation, which can lead to biased outcomes.

Before deployment, it’s essential to test for bias using statistical measures, such as analyzing differences in sensitivity or false-positive rates across groups. This proactive approach helps identify and address potential disparities early. Once the device is in use, continuous monitoring of its real-world performance across all subpopulations is crucial. If any discrepancies emerge, manufacturers can recalibrate or retrain the algorithm using updated, more representative data.
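The statistical bias test described above can be sketched as follows. The code computes sensitivity and false-positive rate per subgroup, then measures the largest gap between subgroups. The labels, predictions, group names, and the 0.10 review threshold are hypothetical. An acceptable gap would be set per device and justified in its documentation.

```python
from collections import defaultdict


def subgroup_rates(y_true, y_pred, groups):
    """Per-subgroup sensitivity (true-positive rate) and false-positive rate for binary labels."""
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "fp": 0, "tn": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        key = ("tp" if p else "fn") if t else ("fp" if p else "tn")
        counts[g][key] += 1
    rates = {}
    for g, c in counts.items():
        pos, neg = c["tp"] + c["fn"], c["fp"] + c["tn"]
        # None marks a rate that cannot be computed for this subgroup.
        rates[g] = {
            "sensitivity": c["tp"] / pos if pos else None,
            "fpr": c["fp"] / neg if neg else None,
        }
    return rates


def max_gap(rates, metric):
    """Largest difference in `metric` between any two subgroups (ignoring missing values)."""
    values = [r[metric] for r in rates.values() if r[metric] is not None]
    return max(values) - min(values)


# Hypothetical evaluation set: 8 cases from subgroup A and 8 from subgroup B.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 1, 0, 0, 0, 1, 1, 1, 0, 0, 1, 1, 0, 0]
groups = ["A"] * 8 + ["B"] * 8

GAP_LIMIT = 0.10  # hypothetical review threshold
rates = subgroup_rates(y_true, y_pred, groups)
for metric in ("sensitivity", "fpr"):
    gap = max_gap(rates, metric)
    print(f"{metric}: gap={gap:.2f} {'REVIEW' if gap > GAP_LIMIT else 'ok'}")
```

Real subgroup samples are usually small, so a production analysis would also report confidence intervals before concluding that a gap is real.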

Transparency also plays a key role. By maintaining comprehensive documentation of data sources, preprocessing methods, and model training processes, manufacturers enable thorough audits and foster trust. These practices contribute to the development of safer and more equitable AI medical devices, ensuring they perform reliably across all patient groups.

Managing model drift in AI-powered medical devices requires ongoing vigilance to maintain safety and performance. Start by setting clear baseline performance metrics and reference distributions for both input features and model outputs during deployment. From there, keep a close eye on key indicators, such as shifts in prediction patterns, changes in input features, confidence levels, and - when possible - accuracy or error rates.

When a metric crosses a predefined threshold (for instance, a drop in accuracy or a noticeable shift in data), trigger an alert to investigate further. Conduct a root-cause analysis to determine the type of drift: a change in the input distribution (data drift, including covariate shift) or a change in the relationship between inputs and outcomes (concept drift). After identifying the issue, retrain or fine-tune the model using recent, representative datasets. Make sure to validate the updated model thoroughly, and only redeploy it after confirming it meets all safety and compliance standards.
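Input drift can be detected without ground-truth labels. One way is to compare the live distribution of a feature with its baseline reference distribution. The sketch below uses the Population Stability Index (PSI), a common choice that this article does not itself prescribe. The simulated glucose-like values and the 0.25 alert level are assumptions. The 0.25 value is an industry rule of thumb, not a regulatory threshold. A device's actual trigger would come from its PCCP.

```python
import math
import random


def psi(baseline, current, bins=10, eps=1e-6):
    """Population Stability Index between a baseline sample and a current sample
    of one numeric feature, using equal-width bins over the baseline range."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values):
        counts = [0] * bins
        for v in values:
            # Clamp values outside the baseline range into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the log term stays finite.
        return [max(c / len(values), eps) for c in counts]

    b, c = shares(baseline), shares(current)
    return sum((ci - bi) * math.log(ci / bi) for bi, ci in zip(b, c))


random.seed(0)
baseline = [random.gauss(100, 15) for _ in range(5000)]  # simulated reference data
current = [random.gauss(115, 15) for _ in range(5000)]   # simulated shifted population

PSI_ALERT = 0.25  # rule-of-thumb level; assumption, not from a PCCP
score = psi(baseline, current)
if score > PSI_ALERT:
    print(f"PSI={score:.3f}: drift alert, start root-cause analysis")
```

PSI only shows that the inputs have shifted. Whether accuracy has actually degraded still has to be confirmed against labeled outcomes when they become available.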

Every drift event, analysis, and corrective action should be documented as part of the device’s lifecycle management. Adhering to a Predetermined Change Control Plan (PCCP) is critical for regulatory compliance. This plan provides a structured approach for monitoring, retraining, and implementing updates safely, ensuring that manufacturers can uphold patient safety and model reliability in practical, real-world applications.

Effective cybersecurity in AI-powered medical devices is essential for safeguarding patient safety and protecting data integrity throughout the device's lifecycle. To achieve this, several best practices should be followed, starting with comprehensive threat and vulnerability assessments. Secure software development practices, alongside encryption for data both at rest and in transit, play a crucial role in mitigating risks. Additionally, strong authentication protocols, role-based access controls, and routine code reviews are necessary to ensure robust defenses.

Manufacturers must also prioritize secure patch management and establish mechanisms for safely updating AI models after deployment. Continuous monitoring for unusual activity, regular vulnerability scans, and a clearly outlined incident response plan enable quick action in the event of a breach. Network segmentation is another important strategy, as it isolates medical device traffic from other IT systems, thereby reducing exposure to potential threats. By combining these measures, manufacturers can ensure AI-driven medical devices remain secure, dependable, and compliant.
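As one example of updating AI models safely after deployment, a device can refuse any update package whose integrity tag does not verify. The minimal sketch below uses Python's standard `hmac` module with a shared secret key. The symmetric key is a simplifying assumption, and so is the package content. A production system would more likely use asymmetric code signing, so that devices hold only a public key, along with proper key provisioning and rotation.

```python
import hashlib
import hmac


def sign_update(package: bytes, key: bytes) -> str:
    """Manufacturer side: HMAC-SHA256 tag over the update package."""
    return hmac.new(key, package, hashlib.sha256).hexdigest()


def verify_update(package: bytes, tag: str, key: bytes) -> bool:
    """Device side: recompute the tag and compare in constant time."""
    expected = hmac.new(key, package, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, tag)


def apply_update(package: bytes, tag: str, key: bytes) -> str:
    if not verify_update(package, tag, key):
        # Reject and keep running the current validated model.
        return "rejected: integrity check failed"
    # Loading the new weights and running post-update checks would happen here.
    return "accepted"


KEY = b"hypothetical-provisioned-device-key"  # placeholder; never hard-code real keys
package = b"model-weights-v1.1.0"              # stand-in for a real update payload
tag = sign_update(package, KEY)

print(apply_update(package, tag, KEY))                # accepted
print(apply_update(package + b"tampered", tag, KEY))  # rejected: integrity check failed
```

`hmac.compare_digest` is used instead of `==` so that the comparison time does not reveal how many characters of a forged tag were correct.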