If you’re diving into the world of generative AI, especially with tools like ChatGPT or other language models, you’ve probably heard about tokens. These small units of text are how models break down and process your input, and they affect both what a model can handle and what you pay. That’s why a tool that estimates token usage is so useful for developers and writers alike.

When crafting prompts or content for AI, knowing the approximate number of tokens helps you stay within a model’s context window, which is the amount of text it can take into account at once. Go over that limit, and your input might be rejected or truncated, so the model responds without part of what you wrote. On top of that, many platforms bill per token, so a quick calculation can save you from unexpected costs. Whether you’re drafting a blog post or coding a chatbot, having a sense of text-to-token conversion keeps your workflow smooth.
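Both checks come down to simple arithmetic once you have a token count. Here is a minimal sketch in Python; the function names, the 4,096-token window, and the price per 1,000 tokens are placeholders made up for illustration, not real limits or rates for any particular model.

```python
def fits_context(prompt_tokens: int, context_window: int,
                 reserved_for_output: int = 0) -> bool:
    """Return True if the prompt, plus room reserved for the reply, fits."""
    return prompt_tokens + reserved_for_output <= context_window


def estimate_cost(tokens: int, price_per_1k_tokens: float) -> float:
    """Estimate cost for a token count at a given price per 1,000 tokens."""
    return tokens / 1000 * price_per_1k_tokens


if __name__ == "__main__":
    # All numbers below are hypothetical; check your provider's limits and pricing.
    prompt_tokens = 1200
    print(fits_context(prompt_tokens, context_window=4096, reserved_for_output=500))
    print(f"${estimate_cost(prompt_tokens, price_per_1k_tokens=0.002):.4f}")
```

Reserving some of the window for the reply matters because, on many models, input and output share the same limit.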

Tokens aren’t just a technical detail; they’re a window into how AI “thinks.” By managing them effectively, you can trim prompts so the most important context fits and costs stay predictable. A token calculator simplifies the process, letting you focus on creativity rather than crunching numbers manually. Stick with us for more tips on mastering AI tools!

Tokens are the building blocks AI models use to process text. Think of them as small chunks: whole words, pieces of words, or punctuation marks. Every model has a token limit for input and output, so knowing your count helps you avoid getting cut off mid-conversation. Plus, since many AI services charge based on token usage, a token count helps you predict costs and optimize your content. It’s all about working smarter with the tech!

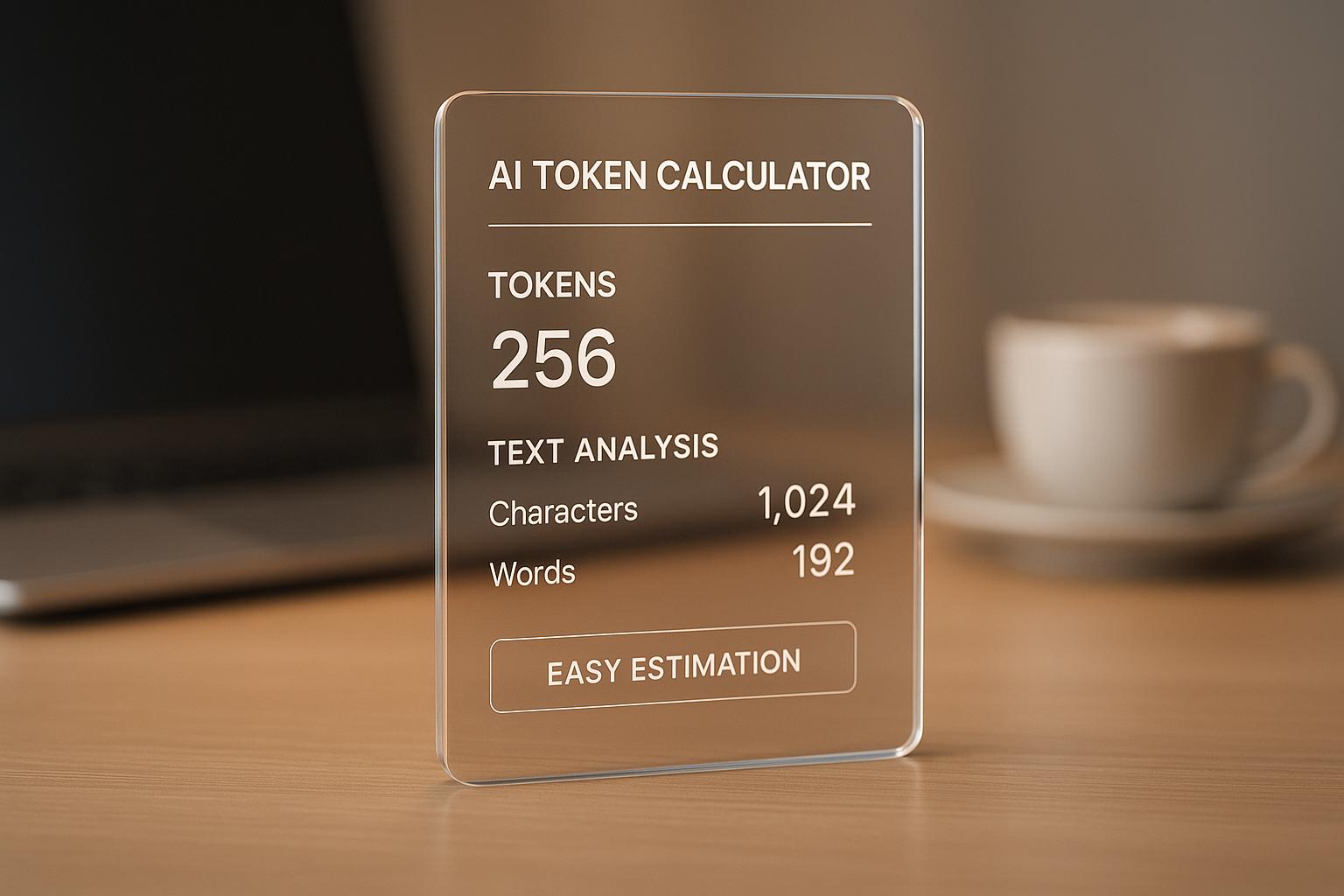

Our tool produces a rough estimate based on common tokenization rules: it splits text by spaces and punctuation and assumes an average of 4 characters per token. It’s not exact, since each model uses its own tokenizer and may split the same text differently, but it’s close enough for planning. For most GPT-based projects, it’ll give you a solid ballpark figure to work with. If you need an exact count, check the specific model’s documentation.
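To make the heuristic concrete, here is a minimal sketch in Python of one way to read it. This is not the calculator’s actual code: the function name, the regular expression, and the choice to count each punctuation mark as one token are assumptions made for illustration.

```python
import math
import re

CHARS_PER_TOKEN = 4  # average from the text above; an approximation

# A run of word characters, or a single punctuation character.
_PIECE = re.compile(r"(?P<word>\w+)|(?P<punct>[^\w\s])")


def estimate_tokens(text: str) -> int:
    """Rough token estimate: split on spaces and punctuation, then
    count about one token per 4 characters of each word (rounded up)
    and one token per punctuation mark (an assumption)."""
    total = 0
    for match in _PIECE.finditer(text):
        word = match.group("word")
        if word is not None:
            total += math.ceil(len(word) / CHARS_PER_TOKEN)
        else:
            total += 1
    return total


if __name__ == "__main__":
    print(estimate_tokens("Hello, world!"))  # "Hello" 2 + "," 1 + "world" 2 + "!" 1 = 6
```

Real tokenizers learn their splits from data, so the numbers will differ; treat this as a planning aid only.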

Absolutely, you can paste text in any language! The calculator still splits by spaces and punctuation, so it works reasonably well for languages that separate words with spaces. However, keep in mind that tokenization varies more with non-Latin alphabets and with languages that have no clear word boundaries, like Chinese, where a single character often takes up a token or more. The 4-characters-per-token average tends to undercount in those cases, so treat the result as a starting point rather than a firm number.
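If you work with mixed-language text, one way to adjust is to count certain scripts separately. The sketch below is a hypothetical refinement, not something the calculator does: it assumes roughly one token per Chinese, Japanese, or Korean character and 4 characters per token for everything else. Both ratios and the function names are illustrative assumptions, and real tokenizers will differ.

```python
import math


def is_cjk(ch: str) -> bool:
    """True for characters in a few common CJK Unicode blocks."""
    code = ord(ch)
    return (
        0x4E00 <= code <= 0x9FFF      # CJK Unified Ideographs
        or 0x3040 <= code <= 0x30FF   # Hiragana and Katakana
        or 0xAC00 <= code <= 0xD7AF   # Hangul syllables
    )


def estimate_tokens_mixed(text: str,
                          chars_per_token: float = 4.0,
                          tokens_per_cjk_char: float = 1.0) -> int:
    """Rough estimate that counts CJK characters separately.

    Both ratios are assumptions chosen for illustration only.
    """
    cjk_chars = sum(1 for ch in text if is_cjk(ch))
    other_chars = len(text) - cjk_chars
    return math.ceil(other_chars / chars_per_token
                     + cjk_chars * tokens_per_cjk_char)


if __name__ == "__main__":
    print(estimate_tokens_mixed("hello world!"))  # 12 characters / 4 = 3
    print(estimate_tokens_mixed("你好世界"))        # 4 CJK characters = 4
```

With the plain 4-characters-per-token rule, the four-character Chinese example would come out as a single token, which shows how far the simple average can drift.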