Cut AI Costs by Up to 40% with Smarter Prompt Routing

Scaling AI workflows can get expensive fast. Every prompt you send to a model incurs token-based fees, and advanced models cost significantly more. For businesses processing high volumes, efficient routing can save 20–40% on expenses by directing simpler tasks to lower-cost models.

Here’s what you need to know:

Quick Tip: Use tools like Prompts.ai's FinOps dashboard to monitor token usage and adjust routing strategies. One legal tech company reduced inference costs by 35% in 60 days through intelligent routing.

For a side-by-side comparison, see the table below.

Prompts.ai takes a smart approach to managing costs by optimizing how prompts are structured and routed. Through intelligent model selection and refined prompt techniques, the platform reduces token usage by 3–10%, all while maintaining high-quality outputs. This dual focus on efficiency not only lowers token-related expenses but also trims routing costs, paving the way for a transparent, usage-based pricing system.

The platform operates on a credit-based pricing model, using TOKN credits. It offers a pay-as-you-go structure, with personal plans starting at $0 per month for exploration. For businesses, plans range from $99 to $129 per member per month. This system ensures organizations only pay for the AI resources they actually utilize.

To help users manage spending effectively, Prompts.ai includes a FinOps dashboard. This tool provides detailed insights into token usage, breaking it down by agent, use case, or department. Armed with this data, users can make informed decisions about model selection and prompt optimization.
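The kind of breakdown described above can be approximated with a simple aggregation. The sketch below is illustrative only: the record fields (`agent`, `department`, `tokens`) and the helper `tokens_by` are hypothetical and are not taken from any Prompts.ai export format.

```python
from collections import defaultdict

# Hypothetical usage records; field names are assumptions for illustration.
records = [
    {"agent": "support-bot", "department": "support", "tokens": 1200},
    {"agent": "contract-helper", "department": "legal", "tokens": 5400},
    {"agent": "support-bot", "department": "support", "tokens": 800},
]

def tokens_by(records, key):
    """Sum token counts grouped by the given record field."""
    totals = defaultdict(int)
    for record in records:
        totals[record[key]] += record["tokens"]
    return dict(totals)

print(tokens_by(records, "agent"))
print(tokens_by(records, "department"))
```

Grouping by a different field only requires changing the `key` argument, which is roughly what switching dashboard views amounts to.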

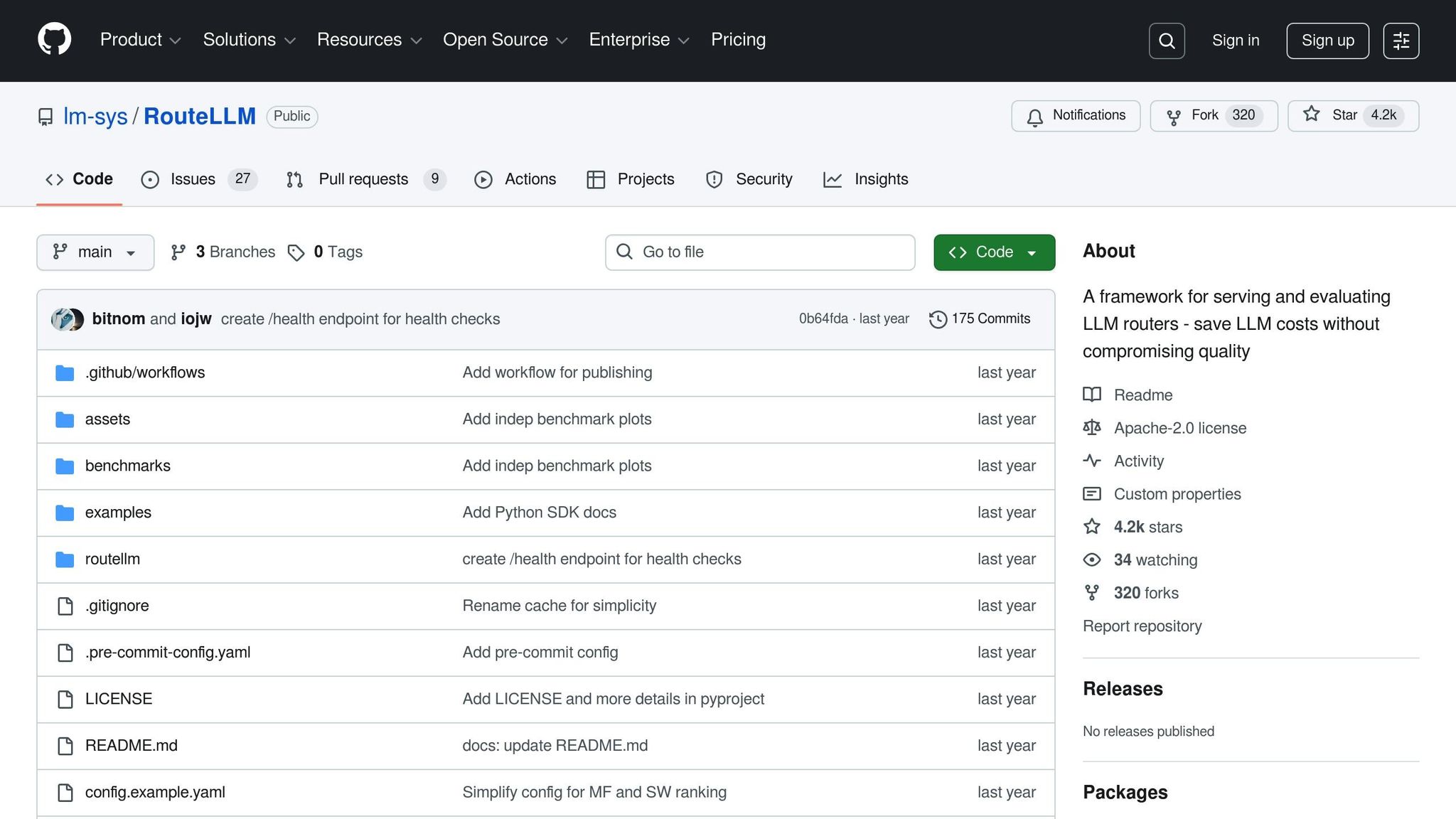

The platform's LLM router dynamically chooses the best model based on performance needs and cost considerations. With access to over 35 models, the router automatically selects the most cost-efficient option, reducing both token consumption and routing expenses.
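How the router makes its choice is not documented here, but the general idea of picking the cheapest model that still meets a performance requirement can be sketched as follows. The model names, capability tiers, and the `cheapest_capable` helper are all hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Model:
    name: str
    tier: int                       # hypothetical capability tier; higher is more capable
    input_price_per_million: float  # USD per million input tokens

# Hypothetical catalog; names and tiers are placeholders, not a real model list.
CATALOG = [
    Model("small-model", 1, 0.15),
    Model("mid-model", 2, 3.00),
    Model("large-model", 3, 15.00),
]

def cheapest_capable(catalog, required_tier):
    """Return the lowest-priced model whose tier meets the requirement."""
    candidates = [m for m in catalog if m.tier >= required_tier]
    if not candidates:
        raise ValueError("no model meets the required tier")
    return min(candidates, key=lambda m: m.input_price_per_million)

print(cheapest_capable(CATALOG, 2).name)
```

A production router would likely weigh latency, output pricing, and measured quality as well; this sketch only shows the cost-versus-capability trade-off.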

Prompts.ai also features a prompt optimization engine that uses machine learning and regex filtering to streamline inputs before they reach the selected model. For instance, a company handling millions of AI interactions each month achieved an average token savings of 6.5% through these techniques. By keeping prompts concise yet contextually relevant, the system minimizes token usage and lowers costs.
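As a rough illustration of the regex side of this idea, the sketch below collapses redundant whitespace and removes a couple of filler words before a prompt is sent. The patterns and the `compact_prompt` function are assumptions made for this example and do not reflect how the Prompts.ai engine works.

```python
import re

def compact_prompt(text: str) -> str:
    """Drop a few filler words and collapse runs of whitespace."""
    # Illustrative filler patterns only; a real filter would need care
    # not to remove words that carry meaning in context.
    text = re.sub(r"\b(please|kindly)\b\s*", "", text, flags=re.IGNORECASE)
    text = re.sub(r"\s+", " ", text)
    return text.strip()

original = "Please   summarize\n\nthis   contract."
print(compact_prompt(original))
```

Actual token savings depend on the model's tokenizer, so any such filter should be measured against real token counts rather than character or word counts.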

As organizations scale their usage, Prompts.ai offers volume discounts and advanced enterprise features. These include governance tools like audit trails and compliance controls, which help manage AI spending across multiple teams. Additionally, the platform supports a community-driven initiative that provides "Time Savers" - expert-designed prompt workflows that cut development time and reduce the costs tied to prompt engineering.

Platform B takes a straightforward approach to pricing, using a pay-per-token model. Costs range from as low as $0.15 per million input tokens for lightweight models to $15 per million tokens for premium models.

However, when it comes to tracking expenses, Platform B offers only basic usage reports. It does not include advanced features like real-time spend tracking, which can make it harder for organizations to stay on top of their budgets - especially during periods of high activity. While the platform does provide structured volume discounts, the absence of detailed cost monitoring tools limits proactive expense management.

Platform B offers discounts based on usage volume:

Another challenge with Platform B is its lack of intelligent, semantic routing. Users must manually select models for their tasks, which can lead to inefficient spending, particularly for simpler prompts. For instance, the platform provides access to models like GPT-4o Mini at $0.15 per million input tokens and Anthropic Claude 3.5, which ranges from $3 to $15 per million tokens. Without automated routing, users may unintentionally choose higher-cost models for tasks that could be handled by cheaper alternatives.

Adding to the complexity, 73% of companies report underestimating their API expenses by 40–60% because of hidden costs. The lack of a pre-submission token calculator further complicates budgeting, as users cannot estimate costs before running their prompts.
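Where no built-in calculator exists, a pre-submission estimate is simple arithmetic once token counts are known. The sketch below assumes you already have input and expected output token counts (for example, from your provider's tokenizer) and uses the GPT-4o Mini rates quoted in this article; `estimate_cost` is a hypothetical helper.

```python
def estimate_cost(input_tokens, output_tokens, input_rate, output_rate):
    """Return the USD cost given per-million-token rates."""
    return (input_tokens * input_rate + output_tokens * output_rate) / 1_000_000

# Rates quoted in this article for GPT-4o Mini (USD per million tokens).
INPUT_RATE = 0.15
OUTPUT_RATE = 0.60

# Hypothetical monthly workload: 2M input tokens, 500K output tokens.
cost = estimate_cost(2_000_000, 500_000, INPUT_RATE, OUTPUT_RATE)
print(f"Estimated cost: ${cost:.2f}")
```

Running the same workload through a premium model's rates makes the price gap between tiers concrete before any prompt is sent.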

Platform C, powered by Google's Vertex AI, offers a variety of pricing structures tailored to different models and input types. These include pay-as-you-go plans and provisioned throughput options, with costs calculated based on tokens or other units like characters, images, or seconds of video/audio. While this flexibility can be beneficial, it introduces layers of complexity in cost management, as detailed below.

Vertex AI's token pricing varies significantly depending on the model. For instance, Gemini 2.0 Flash charges $0.15 per million input tokens and $0.60 per million output tokens, whereas Gemini 2.5 Pro input pricing ranges between $1.25 and $2.50 per million tokens, depending on context length. Output text costs for this model can fall between $10 and $15 per million tokens.

For multimodal content, pricing is calculated differently. Gemini 1.5 Flash uses character-based pricing at $0.00001875 per 1,000 characters for short text, $0.00002 per second for video, and $0.000002 per second for audio. Despite the intricacy of these pricing models, Vertex AI ensures cost clarity through comprehensive management tools.
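To make the unit-based rates above easier to reason about, here is a minimal sketch that combines them into one estimate. It uses only the Gemini 1.5 Flash figures quoted in this article; the function name and the example workload are hypothetical, and current rates should be checked against Google's published pricing.

```python
# Rates quoted in this article for Gemini 1.5 Flash (USD).
TEXT_RATE_PER_1K_CHARS = 0.00001875
VIDEO_RATE_PER_SECOND = 0.00002
AUDIO_RATE_PER_SECOND = 0.000002

def multimodal_cost(characters=0, video_seconds=0, audio_seconds=0):
    """Estimate USD cost from text characters and seconds of media."""
    text_cost = characters / 1000 * TEXT_RATE_PER_1K_CHARS
    video_cost = video_seconds * VIDEO_RATE_PER_SECOND
    audio_cost = audio_seconds * AUDIO_RATE_PER_SECOND
    return text_cost + video_cost + audio_cost

# Hypothetical workload: 4 million characters plus 10 minutes each of video and audio.
print(f"${multimodal_cost(4_000_000, 600, 600):.4f}")
```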

One of Vertex AI's standout features is its emphasis on cost transparency. Google Cloud provides tools such as budgets, spending alerts, quota limits, and AI-driven recommendations to help organizations control expenses effectively. Additionally, the Vertex AI Model Optimizer simplifies pricing by offering a single meta-endpoint with dynamic rates based on the model's intelligence level. For businesses with consistent workloads, the Provisioned Throughput option allows for long-term commitments, enabling reduced costs over time.

This section brings together the key strengths and weaknesses of various platforms, offering a side-by-side comparison to help organizations weigh their options. Each platform has its own approach to managing prompt routing and costs, and understanding these differences is crucial for choosing a solution that fits specific needs and budget considerations.

prompts.ai is notable for its integrated AI orchestration, providing access to multiple models and cost control through a single interface. This eliminates the hassle of juggling multiple subscriptions and reduces administrative work. Its built-in token tracking system gives teams real-time insight into spending, making it easier to manage costs across different projects and teams.

On the flip side, prompts.ai’s TOKN credit system might take some getting used to for teams accustomed to traditional subscription models. Additionally, its wide range of features could feel excessive for organizations with simpler prompt routing needs.

Platform B keeps things simple with its clear per-token pricing model. For instance, GPT-4o Mini costs $0.15 per million input tokens and $0.60 per million output tokens, offering strong performance at a lower price point. However, Platform B lacks advanced cost management tools, which can lead to organizations underestimating their API expenses by 40–60% due to hidden costs and inefficient usage.

Platform C provides flexibility with both pay-as-you-go and provisioned throughput pricing options. While this approach allows for customization, its complex pricing - ranging from $0.15 per million tokens for entry-level models to $15 per million tokens for premium outputs - can make cost forecasting and budgeting more difficult.

| Feature | prompts.ai | Platform B | Platform C |
|---|---|---|---|
| Cost Transparency | Real-time tracking dashboard | Basic usage reports | Budgeting tools |
| Model Access | Access to 35+ models on one platform | OpenAI models only | Wide range of enterprise-grade models |
| Pricing Structure | TOKN credits with volume tiers | Simple per-token rates | Complex, varied pricing |
| Team Management | Unlimited collaborators (Pro+) | Individual API keys | Advanced enterprise controls |
| Cost Optimization | Automated routing and governance | Manual routing | AI-driven recommendations |
| Setup Complexity | Medium (unified platform) | Low (direct API access) | High (enterprise configurations) |

Choosing the right platform ultimately comes down to organizational priorities. For those looking to minimize costs while accessing multiple models in a unified system, prompts.ai may be the best fit. Teams with simpler requirements might prefer the ease and clarity of Platform B, while large enterprises with complex needs and dedicated AI teams could find Platform C’s advanced features worth the added complexity.

Selecting the right AI platform means striking a balance between managing costs and maximizing value. By 2025, cost efficiency in generative AI will shift from being a mere technical concern to a core business strategy. Companies that fail to optimize their prompt routing costs could face significant overspending by relying on unnecessarily complex models. The recommendation that follows builds on the earlier discussion of cost transparency and dynamic routing.

Given these challenges, prompts.ai emerges as an ideal solution for organizations aiming to streamline prompt routing affordably. Its unified platform eliminates the hassle of juggling multiple subscriptions and offers real-time cost tracking across over 35 leading models. The pay-as-you-go TOKN credit system ensures you’re only billed for what you use, while built-in governance tools help prevent unexpected cost spikes.

For smaller-scale projects or individual users, the Creator plan at $29/month provides excellent value. Enterprise teams managing higher volumes can benefit from the Pro or Elite plans, which come with additional features. Notably, organizations that implement intelligent prompt routing have reported savings of 20% to 40% in model inference costs. This flexibility in pricing has been validated in real-world applications.

For example, a legal tech company built an AI-powered assistant to help users navigate contract clauses and compliance questions. By implementing intelligent routing, they directed simple factual queries to smaller, more cost-efficient models, while reserving advanced models for complex document summaries. In just 60 days, the company reduced inference costs by 35% and improved response times for lightweight tasks by 20%.

To avoid unnecessary expenses, it’s essential to route prompts strategically. Simple queries - like “What’s the office Wi-Fi password?” - can be handled by faster, lower-cost models, while advanced models should be reserved for tasks requiring deeper analysis, such as reviewing 10-K filings. Overuse of large models for all prompts remains a common challenge for product and FinOps teams.
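A very simple version of this routing rule can be written as a keyword-and-length heuristic. The sketch below is an assumption-laden illustration: the hint words, the word-count threshold, and the model labels are all hypothetical, and real routers typically use a trained classifier or semantic matching instead.

```python
# Hypothetical keywords suggesting a prompt needs deeper analysis.
COMPLEX_HINTS = ("summarize", "analyze", "review", "compare")

def route(prompt: str, max_simple_words: int = 30) -> str:
    """Send long or analysis-style prompts to the advanced model."""
    lowered = prompt.lower()
    too_long = len(lowered.split()) > max_simple_words
    has_hint = any(hint in lowered for hint in COMPLEX_HINTS)
    return "advanced-model" if (too_long or has_hint) else "lightweight-model"

print(route("What's the office Wi-Fi password?"))
print(route("Review this 10-K filing and summarize the risk factors."))
```

Heuristics like this misclassify some prompts, so it is worth logging routing decisions and spot-checking output quality on the cheaper tier.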

Start by testing your use cases and tracking spending over a 30-day period to establish a baseline. From there, you can refine your routing strategy to achieve optimal efficiency.
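Once the 30-day baseline exists, comparing it with later spending is straightforward. The sketch below uses made-up daily figures; `daily_baseline` and `percent_savings` are hypothetical helpers, not part of any platform API.

```python
def daily_baseline(daily_costs):
    """Average daily spend over the observation window."""
    if not daily_costs:
        raise ValueError("need at least one day of cost data")
    return sum(daily_costs) / len(daily_costs)

def percent_savings(baseline, current):
    """Percentage reduction of current spend relative to the baseline."""
    return (baseline - current) * 100 / baseline

# Hypothetical data: $100/day for 30 days, then $65/day after routing changes.
baseline = daily_baseline([100.0] * 30)
print(f"Baseline: ${baseline:.2f}/day")
print(f"Savings: {percent_savings(baseline, 65.0):.1f}%")
```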

The TOKN credit system on Prompts.ai provides an easy and straightforward approach to managing AI expenses. Rather than dealing with complicated billing setups, you can simply purchase credits to cover AI resource usage, making budgeting more predictable and easier to handle.

With real-time tracking features, you can keep an eye on spending across agents, use cases, or teams, ensuring you stay on budget. This system allows businesses to allocate resources wisely, prevent surprise costs, and simplify AI operations - all while offering complete cost visibility.

Prompts.ai provides practical solutions to help you cut costs in AI prompt routing. With its built-in token tracking and a transparent pricing dashboard, you can monitor spending in real time, broken down by agent, use case, or team. This gives you the clarity needed to manage your budget effectively.

For even greater savings, you can tap into volume discounts and craft prompts thoughtfully to reduce token usage. By examining spending trends and routing prompts more efficiently, you can make informed choices to streamline expenses. Prompts.ai equips you with the tools to implement and oversee these strategies effortlessly.

The FinOps dashboard in Prompts.ai makes managing AI expenses straightforward with real-time cost tracking. It includes built-in token monitoring and a clear, transparent pricing interface, allowing users to see spending broken down by agent, use case, or team. This clarity helps users better allocate budgets and maintain control over their expenses.

By providing detailed insights into spending trends, the dashboard supports smarter prompt routing and helps cut down on unnecessary costs, ensuring operations run more efficiently.