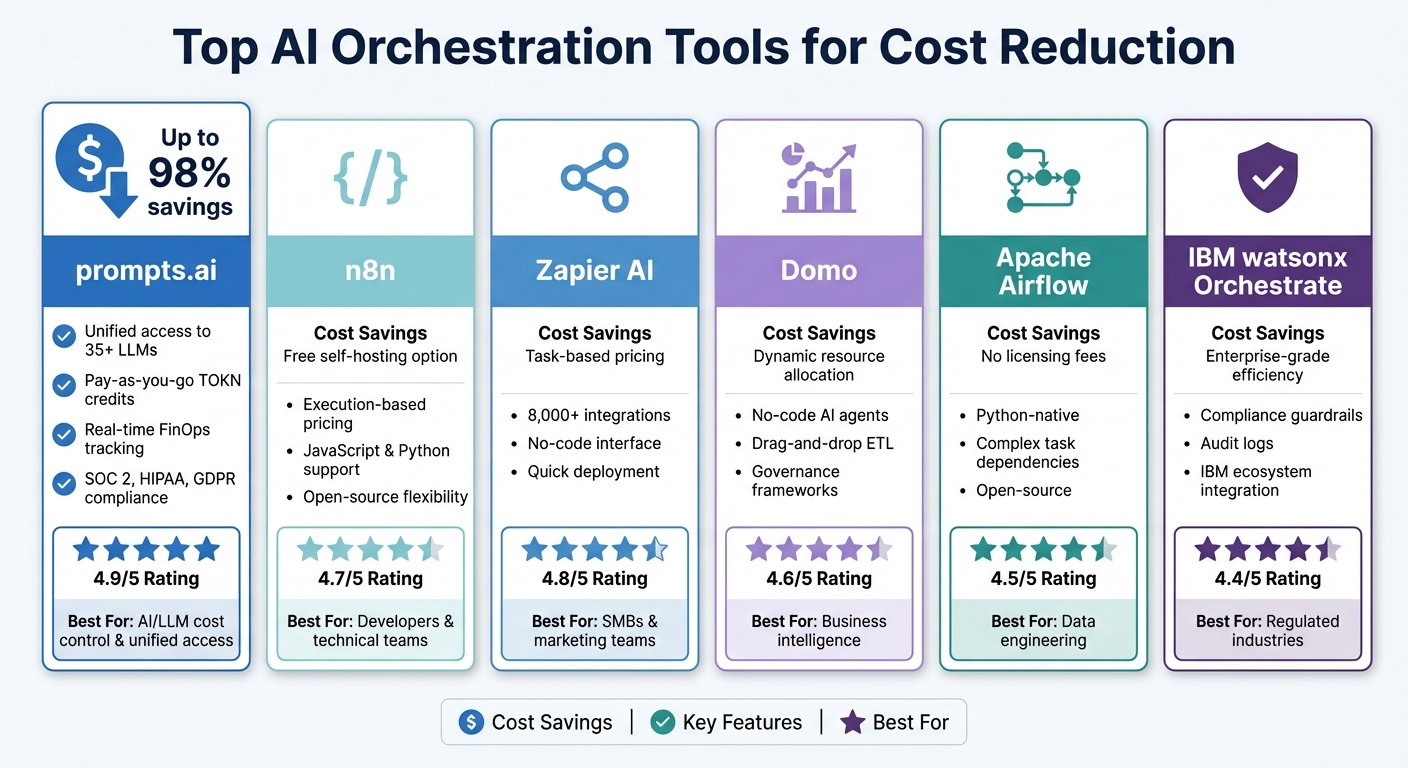

AI orchestration tools simplify managing multiple AI systems, helping businesses cut costs and improve efficiency. Tools like prompts.ai, Kubiya AI, and Domo offer solutions that reduce expenses, automate workflows, and enhance governance. For instance, prompts.ai can lower AI costs by up to 98% with its pay-as-you-go TOKN credit system, while Kubiya AI automates DevOps tasks, saving time and resources. Here's a quick overview of key tools and their strengths:

| Tool | Cost Savings | Key Features | Best For |
|---|---|---|---|
| prompts.ai | Up to 98% savings | Unified AI access, TOKN credits, FinOps | AI/LLM cost control |
| Kubiya AI | Reduced DevOps costs | Natural language automation, Kubernetes-native | DevOps automation |
| Domo | Dynamic resource use | No-code data workflows, governance tools | Business intelligence |

These platforms address specific needs, from reducing AI costs to automating DevOps and managing data workflows. Choose the one that aligns with your business goals to maximize efficiency and savings.

AI Orchestration Tools Comparison: Cost Savings and Key Features

Prompts.ai advertises up to a 98% reduction in AI software costs by replacing recurring fees from multiple providers. It offers access to 35+ models, including GPT-5, Claude, LLaMA, Gemini, and Grok-4, through a pay-as-you-go TOKN credit system, so you pay only for the tokens you use instead of subscription fees that keep accruing even when a service goes unused.
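To see why usage-based billing can undercut flat subscriptions, the sketch below compares the two under invented numbers: three hypothetical monthly subscriptions ($20 to $30 each) versus 200,000 tokens billed at an assumed $0.01 per 1,000 tokens. None of these figures are prompts.ai's actual prices, and the helper names are illustrative. The savings percentage depends entirely on volume; heavy usage can make pay-as-you-go the more expensive option.

```python
from dataclasses import dataclass

# Hypothetical figures for illustration only; NOT prompts.ai's actual prices.

@dataclass
class Subscription:
    name: str
    monthly_fee: float  # charged whether or not the service is used


def subscription_cost(subs: list[Subscription]) -> float:
    """Flat fees accrue every month regardless of usage."""
    return sum(s.monthly_fee for s in subs)


def pay_as_you_go_cost(tokens_used: int, price_per_1k_tokens: float) -> float:
    """Usage-based billing: cost scales with the tokens actually consumed."""
    return tokens_used / 1000 * price_per_1k_tokens


subs = [
    Subscription("provider_a", 30.0),
    Subscription("provider_b", 25.0),
    Subscription("provider_c", 20.0),
]
flat = subscription_cost(subs)              # 75.0 per month
usage = pay_as_you_go_cost(200_000, 0.01)   # 2.0 for this month's tokens
savings = 1 - usage / flat                  # fraction saved at this usage level
print(f"flat=${flat:.2f} usage=${usage:.2f} savings={savings:.1%}")
```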

The platform includes a FinOps layer that tracks token usage in real time, giving finance teams detailed insight into costs. With this visibility, organizations can pinpoint expensive workflows and swap high-cost models for cheaper ones without disrupting production. Budget management becomes a process driven by usage data rather than guesswork.
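The FinOps analysis described above boils down to aggregating token usage by workflow and re-pricing it under a different model. Here is a minimal sketch assuming a simple usage log of `(workflow, model, tokens)` records; the model names, prices, and functions are hypothetical and do not reflect the prompts.ai API. Swapping models also affects output quality, which this calculation ignores.

```python
from collections import defaultdict

# Hypothetical per-1K-token prices; real prices vary by provider and model.
PRICE_PER_1K = {"large-model": 0.030, "small-model": 0.002}

# Each record: (workflow, model, tokens), as a usage log might capture it.
usage_log = [
    ("support-bot", "large-model", 120_000),
    ("summarizer", "large-model", 40_000),
    ("support-bot", "large-model", 80_000),
    ("tagger", "small-model", 300_000),
]


def cost_by_workflow(log):
    """Sum the token cost of every record, grouped by workflow."""
    totals = defaultdict(float)
    for workflow, model, tokens in log:
        totals[workflow] += tokens / 1000 * PRICE_PER_1K[model]
    return dict(totals)


def cost_if_swapped(log, workflow, new_model):
    """Re-price one workflow's tokens at another model's rate (quality not modeled)."""
    swapped = [(w, new_model if w == workflow else m, t) for w, m, t in log]
    return cost_by_workflow(swapped)[workflow]


costs = cost_by_workflow(usage_log)
most_expensive = max(costs, key=costs.get)
print(costs, most_expensive)
print(cost_if_swapped(usage_log, most_expensive, "small-model"))
```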

Prompts.ai doesn’t just save money - it simplifies workflows. Acting as a centralized prompt management hub, the platform connects seamlessly with major LLM providers through a unified interface. Teams can easily switch between providers like OpenAI and Anthropic without rewriting application code or juggling multiple API keys. For developers, the REST API allows programmatic access to prompts, separating prompt logic from core application code and streamlining maintenance across large projects.
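Switching providers without touching application code usually comes down to an adapter layer: the application calls one function, and configuration decides which provider handles the request. The sketch below illustrates that general pattern with stub classes. `LLMProvider`, `OpenAIStub`, `AnthropicStub`, and `run_prompt` are hypothetical names, and a real adapter would call each vendor's SDK or HTTP API where the stubs return strings. This is not prompts.ai's implementation.

```python
from typing import Protocol


class LLMProvider(Protocol):
    def complete(self, prompt: str) -> str: ...


# Stub providers; a real adapter would call the vendor's SDK or HTTP API here.
class OpenAIStub:
    def complete(self, prompt: str) -> str:
        return f"[openai] {prompt}"


class AnthropicStub:
    def complete(self, prompt: str) -> str:
        return f"[anthropic] {prompt}"


PROVIDERS: dict[str, LLMProvider] = {
    "openai": OpenAIStub(),
    "anthropic": AnthropicStub(),
}


def run_prompt(prompt: str, provider_name: str) -> str:
    """Application code depends only on this function, not on any vendor SDK."""
    return PROVIDERS[provider_name].complete(prompt)


# Switching providers is a configuration change, not a code change.
config = {"provider": "anthropic"}
print(run_prompt("Summarize this ticket", config["provider"]))
```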

The platform also supports versioning and environment tagging, so teams can manage development, staging, and production workflows independently. New prompts or model configurations can be tested in isolated environments before deployment, reducing the risk of errors or performance regressions. Because environments are isolated, teams can also work on separate features in parallel without interfering with one another.
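Versioning with environment tags can be modeled as a registry that stores every prompt version and pins each environment to one of them. In this minimal sketch (the `PromptRegistry` class and its methods are hypothetical, not the prompts.ai API), staging is pointed at a new version while production keeps serving the old one until someone retags it.

```python
from dataclasses import dataclass, field


@dataclass
class PromptRegistry:
    # prompt name -> list of versions (index 0 holds version 1)
    versions: dict[str, list[str]] = field(default_factory=dict)
    # (prompt name, environment) -> pinned version number
    tags: dict[tuple[str, str], int] = field(default_factory=dict)

    def publish(self, name: str, text: str) -> int:
        """Store a new immutable version and return its number."""
        self.versions.setdefault(name, []).append(text)
        return len(self.versions[name])

    def tag(self, name: str, env: str, version: int) -> None:
        """Pin an environment to an existing version."""
        if not 1 <= version <= len(self.versions.get(name, [])):
            raise ValueError(f"unknown version {version} for {name!r}")
        self.tags[(name, env)] = version

    def get(self, name: str, env: str) -> str:
        """Return the prompt text pinned for this environment."""
        return self.versions[name][self.tags[(name, env)] - 1]


reg = PromptRegistry()
v1 = reg.publish("triage", "Classify the ticket.")
reg.tag("triage", "production", v1)
v2 = reg.publish("triage", "Classify the ticket and assign a priority.")
reg.tag("triage", "staging", v2)  # staging tests v2; production stays on v1
```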

Prompts.ai supports compliance and security at scale through governance features that protect sensitive data without blocking AI-driven innovation. With role-based access controls (RBAC), administrators define which team members can access specific models, prompts, or datasets. Every interaction is logged, producing an audit trail that supports compliance with standards such as SOC 2, HIPAA, and GDPR without manual tracking.
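A role-based access check that writes every decision to an audit trail might look like the following sketch. The roles, permission strings, and `authorize` function are assumptions for illustration; a production system would persist the log to tamper-resistant storage rather than an in-memory list.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping; a real deployment would load this from config.
ROLE_PERMISSIONS = {
    "analyst": {"model:small", "prompt:read"},
    "engineer": {"model:small", "model:large", "prompt:read", "prompt:write"},
}
USER_ROLES = {"alice": "engineer", "bob": "analyst"}
audit_log: list[dict] = []


def authorize(user: str, permission: str) -> bool:
    """Allow the request only if the user's role grants the permission."""
    role = USER_ROLES.get(user)  # unknown users get role None and no permissions
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    # Every decision, allowed or denied, is appended to the audit trail.
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "permission": permission,
        "allowed": allowed,
    })
    return allowed


authorize("alice", "model:large")  # engineer: allowed
authorize("bob", "model:large")    # analyst: denied, but still logged
```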

These governance tools reduce operational costs by automating compliance workflows. Instead of dedicating staff to manually review AI interactions or track data usage across disconnected systems, security teams can monitor all activity through a single dashboard. This centralized approach transforms compliance from a time-consuming task into an efficient, scalable process, supporting growth without adding unnecessary overhead.

Kubiya AI helps businesses cut costs by automating DevOps tasks through natural language commands, replacing manual scripting and hands-on infrastructure management. Repetitive tasks are handled quickly, so teams spend less time on routine operations and depend less on specialized scripting skills.

The platform’s Policy-as-Code engine embeds organizational rules into workflows and checks every automated action against security and compliance standards before it executes. Catching misconfigured infrastructure changes before they run reduces the risk of costly production incidents and regulatory penalties, while automated compliance checks lighten the workload on DevOps teams.
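Policy-as-Code means expressing rules as code that runs before an action executes. The sketch below uses plain Python functions as policies; the `Action` fields, the two rules, and the replica cap of 20 are hypothetical and do not reflect Kubiya AI's policy engine or syntax.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Action:
    kind: str          # e.g. "scale", "delete"
    environment: str   # e.g. "staging", "production"
    replicas: int = 0


# A policy returns an error message if the action violates it, else None.
Policy = Callable[[Action], Optional[str]]


def no_prod_deletes(a: Action) -> Optional[str]:
    if a.kind == "delete" and a.environment == "production":
        return "deleting production resources is not allowed"
    return None


def replica_cap(a: Action) -> Optional[str]:
    if a.kind == "scale" and a.replicas > 20:
        return f"replica count {a.replicas} exceeds cap of 20"
    return None


POLICIES: list[Policy] = [no_prod_deletes, replica_cap]


def evaluate(action: Action) -> list[str]:
    """Run every policy before execution; an empty list means the action may proceed."""
    return [msg for p in POLICIES if (msg := p(action)) is not None]
```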

Kubiya AI integrates seamlessly with tools like AWS, Kubernetes, GitHub, Jira, Terraform, Slack, and Microsoft Teams through its modular multi-agent framework. This enables teams to perform tasks such as infrastructure changes, code deployments, and incident management directly within the collaboration tools they already rely on. Additionally, the platform provides open-source CLI tools and agent templates via GitHub, allowing customization with YAML and Python while avoiding vendor lock-in.

Its deterministic execution model is designed so that automated actions produce consistent results on every run, a critical property for stability in production environments. Developers can also make "self-service" resource requests through Slack or Teams, avoiding the delays that occur when every request must wait for a DevOps engineer.

Kubiya AI pairs its cost and integration advantages with a strong focus on security. Operating on a Zero Trust architecture, the platform incorporates Role-Based Access Control (RBAC), Single Sign-On (SSO), and Just-In-Time (JIT) approvals. Every automated action requires role-based authorization, so infrastructure changes comply with organizational security policies. This model blocks unauthorized access while preserving the speed of automation.
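Just-In-Time approvals grant access for a limited window instead of permanently. Here is a minimal sketch, assuming a fixed time-to-live per approval; the `JITGrants` class and the 30-minute window are hypothetical, not Kubiya AI's implementation. The current time is passed in explicitly so the expiry behavior is easy to test.

```python
from datetime import datetime, timedelta, timezone


class JITGrants:
    """Hypothetical just-in-time access: each approval expires after a fixed window."""

    def __init__(self, ttl: timedelta):
        self.ttl = ttl
        self._grants: dict[tuple[str, str], datetime] = {}

    def approve(self, user: str, action: str, now: datetime) -> None:
        """Record an approval that is valid until now + ttl."""
        self._grants[(user, action)] = now + self.ttl

    def is_allowed(self, user: str, action: str, now: datetime) -> bool:
        """True only if an approval exists and has not yet expired."""
        expiry = self._grants.get((user, action))
        return expiry is not None and now < expiry


t0 = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
jit = JITGrants(ttl=timedelta(minutes=30))
jit.approve("dev1", "restart-service", now=t0)
```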

As a Kubernetes-native platform, Kubiya AI scales effortlessly alongside enterprise workloads without necessitating a complete infrastructure overhaul. Teams can start small by automating high-frequency, low-risk tasks like environment provisioning and gradually expand to more complex workflows, such as CI/CD pipelines. This modular approach allows organizations to grow their automation capabilities at their own pace, keeping costs and complexity under control.

Domo helps businesses save money by dynamically adjusting resource allocation to match workload demand, which minimizes the cost of idle resources. Combined with its easy-to-use data management interface, this keeps operations efficient without unnecessary spending.
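The savings from dynamic allocation come from not paying for peak capacity during quiet hours. The following back-of-the-envelope calculation uses a hypothetical hourly demand curve, node capacity, and $0.50 node-hour price; it illustrates the general principle rather than how Domo allocates resources. With these numbers, provisioning for the peak costs $36.00 across the eight hours, while scaling to demand costs $15.00.

```python
import math


def nodes_needed(demand: float, capacity_per_node: float, min_nodes: int = 1) -> int:
    """Smallest node count that covers demand, never below a floor."""
    return max(min_nodes, math.ceil(demand / capacity_per_node))


hourly_demand = [10, 12, 40, 85, 90, 30, 8, 5]  # hypothetical load units per hour
capacity = 10.0                                  # load units one node can handle
cost_per_node_hour = 0.50                        # hypothetical price

# Static provisioning: size for the peak and pay for it every hour.
fixed_nodes = nodes_needed(max(hourly_demand), capacity)
fixed_cost = fixed_nodes * len(hourly_demand) * cost_per_node_hour

# Dynamic allocation: pay only for the nodes each hour actually needs.
dynamic_cost = sum(nodes_needed(d, capacity) for d in hourly_demand) * cost_per_node_hour
print(f"fixed=${fixed_cost:.2f} dynamic=${dynamic_cost:.2f}")
```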

With Domo's drag-and-drop ETL interface, handling data workflows becomes straightforward. This tool simplifies extracting, transforming, and loading data, speeding up processes while reducing the need for extensive engineering resources.
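Behind a drag-and-drop ETL builder, each box performs one of three steps. The sketch below spells them out in Python for a tiny, hypothetical CSV of sales by region: `extract` parses the raw rows, `transform` cleans values and totals them per region (skipping rows it cannot parse), and `load` writes the result to a dictionary standing in for a warehouse table. It illustrates the ETL pattern only, not Domo's engine.

```python
import csv
import io

# Hypothetical raw export with messy whitespace and one malformed row.
RAW = """region,amount
north, 120.50
south,80
north,not_a_number
east,  45.25
"""


def extract(text: str) -> list[dict]:
    """Parse CSV text into one dict per row."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> dict[str, float]:
    """Clean values, skip unparseable rows, and total amounts per region."""
    totals: dict[str, float] = {}
    for row in rows:
        try:
            amount = float(row["amount"].strip())
        except ValueError:
            continue  # malformed rows are skipped in this sketch
        region = row["region"].strip()
        totals[region] = totals.get(region, 0.0) + amount
    return totals


def load(totals: dict[str, float], target: dict) -> None:
    """Stand-in for writing to a warehouse table."""
    target.update(totals)


warehouse: dict[str, float] = {}
load(transform(extract(RAW)), warehouse)
```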

Efficient data management is only part of the equation - strong governance is equally crucial. Domo strengthens data security by implementing scalable governance frameworks that enforce access policies. This reduces the need for manual compliance work, cutting down on administrative overhead while ensuring secure operations.

AI orchestration tools come with their own sets of benefits and challenges, often balancing operational efficiency against costs. Here's a closer look at how some popular tools measure up:

prompts.ai provides access to over 35 LLMs through a unified platform, coupled with real-time FinOps tracking via its TOKN credit system. This eliminates the need for multiple subscriptions. Its pay-as-you-go pricing structure avoids per-seat license fees, making it a cost-effective option for AI and LLM orchestration. However, its focus is limited to AI and LLM workflows, without branching into broader automation needs.

n8n offers a free self-hosted option and execution-based pricing, making it appealing for teams that want tight cost control. It also supports JavaScript and Python code fallbacks for added flexibility. However, the initial setup can be daunting for non-technical users and requires some technical expertise. Its 4.7/5 rating reflects its value for technical teams.

Zapier AI simplifies workflow creation for non-technical teams: its 8,000+ integrations let marketing and sales teams build workflows in minutes without coding. However, its task-based pricing can become expensive at high usage levels, and its support for complex logic is limited. It nonetheless holds a 4.8/5 rating.
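The pricing difference between execution-based and task-based billing is easiest to see with arithmetic. This sketch assumes an 8-step workflow, a hypothetical $0.01 per task (each step billed as a task), and a hypothetical $0.02 per execution (one charge per full run); these are not n8n's or Zapier's published prices. Under these assumptions, task-based billing costs four times as much at every volume, so the absolute gap grows from $60 at 1,000 runs to $6,000 at 100,000 runs.

```python
def task_based_cost(runs: int, steps_per_run: int, price_per_task: float) -> float:
    # Assumed model: each step in each run is billed as a separate task.
    return runs * steps_per_run * price_per_task


def execution_based_cost(runs: int, price_per_execution: float) -> float:
    # Assumed model: one charge per full workflow run, regardless of step count.
    return runs * price_per_execution


for runs in (1_000, 100_000):
    t = task_based_cost(runs, steps_per_run=8, price_per_task=0.01)
    e = execution_based_cost(runs, price_per_execution=0.02)
    print(f"{runs:>7} runs: task-based ${t:,.2f} vs execution-based ${e:,.2f}")
```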

Domo shines with its no-code AI agents, making it accessible for users without technical skills. On the downside, its licensing costs can rise significantly with larger deployments, which may deter some users.

Apache Airflow is a Python-native tool that excels at managing complex task dependencies without locking users into a specific vendor. While it offers flexibility, it demands substantial server resources and lacks official enterprise support, making it better suited for experienced data engineers.
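Airflow's core job is running tasks in dependency order (in Airflow itself, dependencies are declared between tasks, for example with the `>>` operator). The standard-library sketch below shows the underlying idea using Python's `graphlib.TopologicalSorter` on a hypothetical five-task pipeline: each batch contains tasks whose dependencies have all finished, so tasks within a batch could run in parallel. It is not Airflow's API and omits scheduling, retries, and state tracking.

```python
from graphlib import CycleError, TopologicalSorter

# Each task maps to the set of tasks it depends on (hypothetical pipeline).
pipeline = {
    "extract_orders": set(),
    "extract_customers": set(),
    "join": {"extract_orders", "extract_customers"},
    "aggregate": {"join"},
    "publish_report": {"aggregate"},
}


def execution_batches(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group tasks into batches; prepare() raises CycleError on circular dependencies."""
    ts = TopologicalSorter(graph)
    ts.prepare()
    batches = []
    while ts.is_active():
        ready = sorted(ts.get_ready())  # tasks whose dependencies are all done
        batches.append(ready)
        ts.done(*ready)                 # mark them finished to unlock dependents
    return batches


for i, batch in enumerate(execution_batches(pipeline)):
    print(f"batch {i}: {batch}")
```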

IBM watsonx Orchestrate stands out for its strong governance frameworks, including compliance guardrails and audit logs, making it ideal for regulated industries. However, its deep integration within the IBM ecosystem and complex configuration requirements can prolong implementation timelines.

The table below summarizes the key strengths, limitations, and ideal use cases for each tool:

| Tool | Key Strengths | Key Limitations | Best For |
|---|---|---|---|
| prompts.ai | Unified access to 35+ LLMs, Real-time FinOps, Pay-as-you-go | Focused solely on AI/LLM workflows | Cost control & unified AI access |
| n8n | Free self-hosting, Execution-based pricing, Code fallback | Complex setup for non-technical users | Developers & technical teams |
| Zapier AI | 8,000+ integrations, No-code simplicity | High costs at scale, Limited deep logic | SMBs & marketing teams |
| Domo | No-code AI agents | Expensive licensing for large deployments | Business intelligence |
| Apache Airflow | Python-native flexibility, Open-source | Resource-heavy, No enterprise support | Data engineering |
| IBM watsonx Orchestrate | Compliance guardrails, Audit logs | IBM ecosystem dependency, Complex setup | Regulated industries |

This comparison highlights the trade-offs between functionality, cost, and ease of use, helping teams make informed decisions tailored to their specific needs.

Selecting the right AI orchestration tool means balancing technical expertise, budget considerations, and workflow requirements. For enterprises focused on managing costs, prompts.ai stands out by offering access to over 35 LLMs through a pay-as-you-go TOKN credit system. This approach can cut AI expenses by up to 98% by consolidating subscriptions. Additionally, its real-time FinOps tracking ensures teams can monitor spending closely to avoid budget overruns, all while maintaining full control over AI operations. That said, other tools cater to specific needs and user profiles.

For those without technical expertise who need quick deployment, Zapier provides a no-code interface and supports over 8,000 integrations for tasks like lead enrichment and ticket triage. However, its task-based pricing model can lead to higher costs with heavy usage.

On the other hand, engineering teams often prefer Apache Airflow for its flexibility and lack of licensing fees. Meanwhile, industries with strict regulatory requirements benefit from the governance features of platforms like prompts.ai and IBM watsonx Orchestrate, which include SOC 2 compliance and detailed audit trails.

As highlighted earlier, unified access to models and real-time cost tracking are shaping the future of AI orchestration. Consolidating AI subscriptions with integrated financial monitoring provides a practical, long-term solution. For organizations prioritizing cost efficiency and simplified management, prompts.ai offers a scalable and sustainable way to streamline AI operations while keeping expenses in check.

Prompts.ai helps businesses slash AI expenses by up to 98% with its flexible pay-as-you-go TOKN credit system. This approach unifies access to more than 35 AI models within a single, user-friendly dashboard, removing the hassle of juggling multiple licenses and cutting down on operational complexities.

The platform also offers real-time FinOps monitoring, giving businesses full visibility into their AI spending. This transparency allows organizations to fine-tune their usage and eliminate unnecessary costs. By centralizing AI tools and simplifying workflows, Prompts.ai delivers a more efficient and cost-effective solution tailored for businesses of all sizes.

Kubiya AI provides real-time orchestration that integrates with widely used DevOps tools such as Kubernetes and Terraform. Its design supports hybrid deployments and works with a broad range of tools and APIs, streamlining infrastructure automation and workflow provisioning.

With these features, businesses can enhance operational efficiency, cut down on manual tasks, and ensure seamless interaction between AI-driven systems, ultimately saving both time and money.

Domo’s easy-to-use, drag-and-drop workflow builder allows business users to create and adjust AI-powered automations without needing help from specialized developers. This user-friendly approach helps cut down on labor costs by reducing the dependency on expensive technical expertise.

By simplifying workflows and reducing reliance on separately licensed external tools, businesses can save money while keeping operations running smoothly and efficiently.