AI ecosystems are transforming business operations by unifying tools, models, and workflows into centralized platforms. As of 2026, companies using these systems report a 64% productivity boost and an 81% increase in job satisfaction, alongside lower costs and stronger governance. Platforms such as Microsoft Foundry, whose catalog alone lists more than 11,000 models, Google Vertex AI, and Oracle AI Data Platform lead the market, offering integration, automated compliance, and scalable workflows.

| Feature | Microsoft Foundry | Google Vertex AI | Oracle AI Data Platform |
|---|---|---|---|
| Model Access | 11,000+ models | 200+ curated models | Foundational LLMs + OCI AI |
| Integration Tools | 1,400+ connectors | AI Agent Builder | Autonomous Database |
| Governance | Entra ID, RBAC | Model Armor | Catalog-based lineage |
| Cost Management | Serverless GPUs, cost insights | Managed APIs | Free tiers, $300 credit |

Unified AI ecosystems reduce the overhead of disconnected tools, improve security, and deliver measurable results. Whether the goal is automating workflows or managing costs, these platforms are reshaping how businesses deploy AI at scale, which makes now a good time to simplify your AI strategy.

Unified AI ecosystems bring together model access, governance, and automation into one centralized system, eliminating the inefficiencies of disconnected tools. This unified approach enables seamless integration and stronger oversight.

These ecosystems provide access to thousands of AI models and pre-built integrations through standardized frameworks. For example, the Azure AI Agent Service offers more than 1,400 connectors through Azure Logic Apps, enabling integration with tools such as Jira, SAP, and ServiceNow. Agents can also reach external tools and data through the Model Context Protocol (MCP), an open standard for connecting AI agents to tool servers.
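
MCP messages use JSON-RPC 2.0, and a client invokes a server-side tool with the `tools/call` method, passing the tool's `name` and its `arguments`. The minimal Python sketch below only builds that request envelope; the tool name `create_ticket` and its arguments are hypothetical, and the transport (stdio or HTTP) and the initialization handshake that precede a real call are omitted.

```python
import json
from itertools import count

# Monotonic request IDs; JSON-RPC 2.0 uses "id" to pair each response with its request.
_next_id = count(1)

def mcp_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP 'tools/call' request as a JSON-RPC 2.0 message."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_next_id),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)

# Hypothetical tool exposed by an MCP server that wraps a ticketing system.
msg = mcp_tool_call("create_ticket", {"project": "OPS", "summary": "VPN outage"})
print(msg)
```

Because every tool is reached through the same envelope, swapping the server behind `create_ticket` does not change the client code.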

Multi-agent orchestration takes integration a step further by letting agents act as supervisors, routers, or planners. Platforms support both visual and code-based development, which shortens the path to deployment. By grounding agents in semantic models or ontologies, these systems can interpret business context consistently across complex, multi-step operations.
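
A supervisor that delegates subtasks to specialized workers can be sketched in a few lines of Python. Everything here is hypothetical: the worker functions stand in for model- or tool-backed agents, and keyword matching stands in for the model-based intent classification a real router would typically use.

```python
from typing import Callable

# Hypothetical worker agents; real ones would call models or tools.
def billing_agent(task: str) -> str:
    return f"billing handled: {task}"

def it_agent(task: str) -> str:
    return f"IT handled: {task}"

def general_agent(task: str) -> str:
    return f"general handled: {task}"

# Router table: keyword -> worker (a stand-in for a learned intent classifier).
ROUTES: dict[str, Callable[[str], str]] = {
    "invoice": billing_agent,
    "refund": billing_agent,
    "password": it_agent,
    "laptop": it_agent,
}

def route(task: str) -> Callable[[str], str]:
    """Pick the first worker whose keyword appears in the task, else the fallback."""
    lowered = task.lower()
    for keyword, agent in ROUTES.items():
        if keyword in lowered:
            return agent
    return general_agent

def supervisor(tasks: list[str]) -> list[str]:
    """Dispatch each subtask to its worker and collect results in order."""
    return [route(t)(t) for t in tasks]

print(supervisor(["Reset my password", "Where is invoice 1042?", "Book a room"]))
```

Keeping routing in one table means new workers can be added without touching the supervisor itself.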

Unified platforms come with governance tools that automate compliance processes and enforce security policies across all AI interactions. Centralized dashboards offer real-time insights into agent activity, session tracking, and performance metrics. Role-Based Access Control (RBAC) integrates with identity providers and protocols such as Microsoft Entra ID, Active Directory, and SAML, keeping permission management consistent.
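
The core of an RBAC check is a lookup from roles to permissions with deny-by-default semantics. The sketch below assumes hypothetical role and permission names; on a real platform the caller's roles would come from the identity provider (for example, group or app-role claims in an Entra ID token) rather than a hard-coded list.

```python
# Hypothetical role-to-permission map; real roles come from the identity provider.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "agent-reader": {"agent:read"},
    "agent-operator": {"agent:read", "agent:run"},
    "agent-admin": {"agent:read", "agent:run", "agent:deploy", "agent:delete"},
}

def is_allowed(user_roles: list[str], permission: str) -> bool:
    """Grant access if any role carries the permission; unknown roles grant nothing."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user_roles)

print(is_allowed(["agent-operator"], "agent:run"))     # True
print(is_allowed(["agent-operator"], "agent:deploy"))  # False
```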

Safety measures, such as content filters to detect harmful outputs and defenses against Cross-Prompt Injection Attacks (XPIA), further secure operations. Ethan Sena, Executive Director of AI & Cloud Engineering at Bristol Myers Squibb, highlighted the benefits of these features:

"Azure AI Agent Service gives us a robust set of tools that accelerate our enterprise-wide generative AI journey... By leveraging the service, we're able to shift our engineering time away from custom development and support the differentiators that matter to us."

Organizations can also adopt "Bring Your Own Storage" (BYOS) and virtual networks (VNets) to keep data traffic private and compliant with regulatory standards. Together, these governance and integration controls keep operations secure.

Orchestration automates the entire AI lifecycle, from model deployment to data pipelines and workflow templates. Standardized frameworks, such as Directed Acyclic Graphs (DAGs), help create repeatable workflows, reducing manual effort and ensuring consistency.
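
A DAG-based workflow runs each step only after its dependencies finish. Python's standard-library `graphlib.TopologicalSorter` computes such an order and raises `CycleError` if the graph contains a cycle; the pipeline steps below are hypothetical, and `run` simply prints instead of launching jobs.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each key maps to the set of steps it depends on.
pipeline = {
    "ingest": set(),
    "validate": {"ingest"},
    "embed": {"validate"},
    "train": {"validate"},
    "evaluate": {"train", "embed"},
    "deploy": {"evaluate"},
}

def run(step: str) -> None:
    print(f"running {step}")

# static_order() yields every step after all of its dependencies.
order = list(TopologicalSorter(pipeline).static_order())
for step in order:
    run(step)
```

`TopologicalSorter` also offers `prepare()`, `get_ready()`, and `done()` for dispatching independent steps, such as `embed` and `train` here, in parallel.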

Platforms dynamically allocate compute resources, often using Kubernetes, to adapt to changing demands in real time. Human-in-the-loop (HITL) orchestration introduces checkpoints where human oversight is required for sensitive processes. These efficiencies translate directly into improved business outcomes.
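
When Kubernetes handles this scaling through the Horizontal Pod Autoscaler, the documented core rule is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no change when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below reproduces only that arithmetic; min/max replica bounds, stabilization windows, and multi-metric handling are deliberately left out.

```python
import math

def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Replica count from the HPA scaling ratio (bounds and stabilization omitted)."""
    ratio = current_metric / target_metric
    # Skip scaling when the observed metric is already close to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)

print(desired_replicas(4, 90.0, 60.0))  # 6
print(desired_replicas(4, 63.0, 60.0))  # 4 (within tolerance)
print(desired_replicas(4, 20.0, 60.0))  # 2
```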

For instance, Marcus Saito, Head of IT and AI Automation at Remote.com, implemented an AI-powered helpdesk that resolves 28% of tickets for 1,700 employees worldwide. Similarly, Okta cut support escalation times from 10 minutes to just seconds by automating 13% of case escalations.

Ritika Gunnar, IBM's General Manager of Data and AI, summed up the importance of these capabilities:

"Orchestration, integration and automation are the secret weapons that will move agents from novelty into operation."

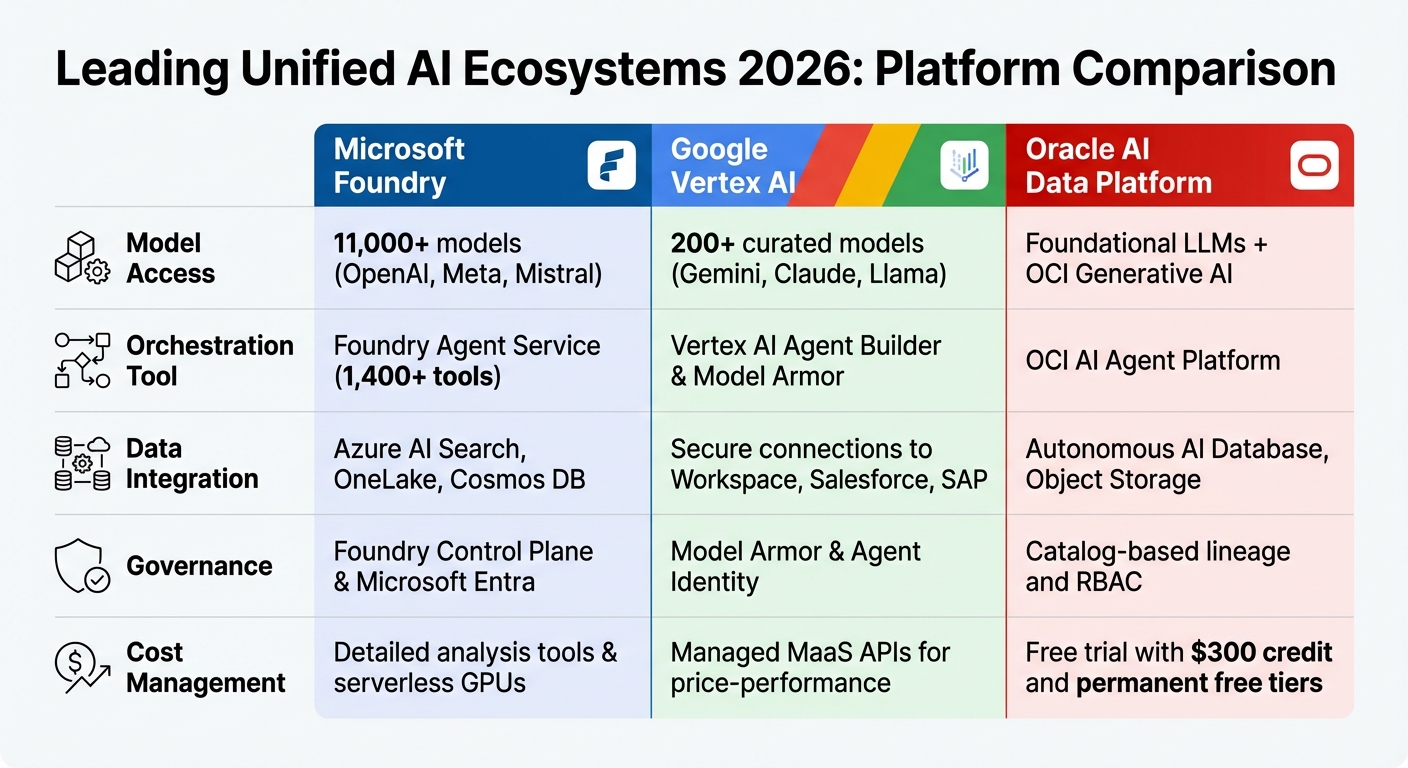

Leading Unified AI Platforms 2026: Microsoft Foundry vs Google Vertex AI vs Oracle AI

By 2026, the AI landscape has shifted dramatically, with platforms evolving far beyond basic chatbots. Microsoft Foundry, Google Vertex AI, and Oracle AI Data Platform now lead the field, powering autonomous agents that can plan, execute, and collaborate within enterprise workflows. These platforms expose unified API contracts, so developers can switch between model families such as OpenAI's GPT models, Meta's Llama, and Mistral without rewriting application code. With a strong focus on data grounding and governance, they address the fragmentation issues discussed earlier.
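
A unified API contract means application code targets one interface while adapters handle each provider. The sketch below uses a `typing.Protocol` to express that idea; the adapter classes and the `complete` method are hypothetical stand-ins, not any vendor's SDK, and they return canned strings instead of calling a model.

```python
from typing import Protocol

class ChatModel(Protocol):
    """Hypothetical provider-agnostic contract shared by every adapter."""
    def complete(self, prompt: str) -> str: ...

# Stand-in adapters; real ones would wrap each vendor's SDK or a platform endpoint.
class OpenAIAdapter:
    def complete(self, prompt: str) -> str:
        return f"[gpt] {prompt}"

class LlamaAdapter:
    def complete(self, prompt: str) -> str:
        return f"[llama] {prompt}"

class MistralAdapter:
    def complete(self, prompt: str) -> str:
        return f"[mistral] {prompt}"

REGISTRY: dict[str, ChatModel] = {
    "openai": OpenAIAdapter(),
    "llama": LlamaAdapter(),
    "mistral": MistralAdapter(),
}

def summarize(text: str, provider: str) -> str:
    # Callers depend only on the contract, so switching providers is a config change.
    return REGISTRY[provider].complete(f"Summarize: {text}")

print(summarize("Q3 report", "mistral"))  # [mistral] Summarize: Q3 report
```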

Oracle's gold medallion layer ensures that AI agents access only high-quality, governed enterprise data, reducing errors such as hallucinations. Google's Vertex AI Model Garden offers a curated selection of more than 200 enterprise-ready models, while Microsoft Foundry connects to a catalog of more than 1,400 tools. Centralized dashboards, such as the "Operate" dashboards, give enterprises a single view of their AI operations, tracking agent health, performance, and security across thousands of deployments. This foundation shows up clearly when the platforms are compared side by side.

Here's a closer look at how these leading platforms measure up in terms of model access, orchestration tools, governance, and cost management:

| Feature | Microsoft Foundry | Google Vertex AI | Oracle AI Data Platform |
|---|---|---|---|
| Model Access | 11,000+ models (OpenAI, Meta, Mistral) | 200+ curated models (Gemini, Claude, Llama) | Foundational LLMs + OCI Generative AI |
| Orchestration Tool | Foundry Agent Service (1,400+ tools) | Vertex AI Agent Builder & Model Armor | OCI AI Agent Platform |
| Data Integration | Azure AI Search, OneLake, Cosmos DB | Secure connections to Workspace, Salesforce, SAP | Autonomous AI Database, Object Storage |
| Governance | Foundry Control Plane & Microsoft Entra | Model Armor & Agent Identity | Catalog-based lineage and RBAC |
| Cost Management | Detailed analysis tools & serverless GPUs | Managed MaaS APIs for price-performance | Free trial with $300 credit and permanent free tiers |

Microsoft Foundry allows users to explore its platform for free, with pricing applied only at deployment based on consumed models and API usage. Google Vertex AI employs serverless training, charging users for compute resources during custom jobs. Meanwhile, Oracle Cloud offers a $300 credit for a 30-day trial and permanent free tiers for services like OCI Speech and Vision.

These platforms are already delivering results across industries. Carvana and KPMG, for example, describe the impact of building on Microsoft's stack this way:

"Having our infrastructure and AI foundation on Microsoft is a competitive advantage to Carvana. It puts us in a position to run fast, adapt to the market, and innovate with less complexity."

"The governance and observability in Microsoft Foundry provide what KPMG firms need to be successful in a regulated industry."

These examples illustrate how unified AI ecosystems can improve efficiency, reduce complexity, and support governance, making them a strong foundation for enterprises that want to scale AI securely and effectively.

Unified platforms depend on more than just model orchestration - they require a solid data infrastructure to power intelligent automation. Successful AI orchestration hinges on systems that deliver accurate, timely information. By 2026, many organizations have moved beyond basic data lakes, adopting medallion and lakehouse architectures to turn raw data into trusted, query-ready assets. Oracle's gold medallion layer ensures that AI agents access only high-quality, verified data, while OCI Object Storage handles the large volumes of unstructured data that AI pipelines consume. Together, these layers provide the data foundation for AI-native orchestration across ecosystems.
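
The medallion pattern refines data in stages: bronze holds raw ingested records, silver holds cleaned and typed records, and gold holds business-level aggregates. A minimal Python sketch, assuming hypothetical order records and validation rules, shows how only gold data would be exposed to agents.

```python
# Hypothetical raw records in the bronze layer (as ingested, unvalidated).
bronze = [
    {"customer_id": "C1", "amount": "120.50", "country": "us"},
    {"customer_id": "",   "amount": "80.00",  "country": "DE"},  # missing key
    {"customer_id": "C2", "amount": "abc",    "country": "FR"},  # unparseable amount
    {"customer_id": "C1", "amount": "30.00",  "country": "US"},
]

def to_silver(rows: list[dict]) -> list[dict]:
    """Silver: drop invalid rows, cast types, normalize values."""
    clean = []
    for row in rows:
        if not row["customer_id"]:
            continue
        try:
            amount = float(row["amount"])
        except ValueError:
            continue
        clean.append({"customer_id": row["customer_id"], "amount": amount,
                      "country": row["country"].upper()})
    return clean

def to_gold(rows: list[dict]) -> dict[str, float]:
    """Gold: business-level totals per customer, the only layer agents query."""
    totals: dict[str, float] = {}
    for row in rows:
        totals[row["customer_id"]] = totals.get(row["customer_id"], 0.0) + row["amount"]
    return totals

gold = to_gold(to_silver(bronze))
print(gold)  # {'C1': 150.5}
```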

The evolution from rigid, rule-based workflows to AI-native orchestration has reshaped how data flows are managed. Instead of relying on static rules, modern platforms now use event-driven architectures, where specific business events - like uploading a document or completing a transaction - automatically trigger AI agents or workflows as needed. This reactive approach eliminates bottlenecks and allows different parts of the system to scale independently. AWS Prescriptive Guidance captures this shift:
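
Event-driven orchestration decouples producers from the agents that react to them. The in-process publish/subscribe bus below is a simplified stand-in for a managed broker; the event names and the handlers, which append to a log rather than invoke real agents, are hypothetical.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[dict], None]

class EventBus:
    """Minimal in-process publish/subscribe bus (stand-in for a managed broker)."""
    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        # The producer does not know which agents react; each handler runs in turn.
        for handler in self._subscribers[event_type]:
            handler(payload)

log: list[str] = []
bus = EventBus()
# Hypothetical agents reacting to a business event.
bus.subscribe("document.uploaded", lambda e: log.append(f"classify {e['name']}"))
bus.subscribe("document.uploaded", lambda e: log.append(f"extract {e['name']}"))
bus.publish("document.uploaded", {"name": "contract.pdf"})
bus.publish("transaction.completed", {"id": 7})  # no subscribers: nothing happens
print(log)  # ['classify contract.pdf', 'extract contract.pdf']
```

Adding another agent is a new `subscribe` call; the uploader and the existing handlers stay unchanged.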

"Orchestration is no longer just about rules, it's about intent interpretation, tool selection, and autonomous execution."

Semantic models play a critical role in keeping AI agents aligned across departments by serving as a single source of truth. These models precisely define business-specific terms, such as "enterprise customer" or "Q3 targets", ensuring consistent data interpretation across the organization. Databricks highlights the importance of this foundation:
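
A semantic layer can be pictured as a single registry of business definitions that every tool resolves terms through. In this sketch the thresholds for "enterprise customer" and the calendar-quarter definition of Q3 are hypothetical; a real semantic layer would typically compile such definitions into governed queries rather than Python lambdas.

```python
from datetime import date
from typing import Any, Callable

# Hypothetical shared definitions; thresholds and the fiscal calendar are illustrative.
SEMANTIC_LAYER: dict[str, Callable[[Any], bool]] = {
    "enterprise customer": lambda acct: (
        acct["employees"] >= 1000 or acct["annual_contract_usd"] >= 250_000
    ),
    "in Q3": lambda d: date(d.year, 7, 1) <= d <= date(d.year, 9, 30),
}

def matches(term: str, value: Any) -> bool:
    """Resolve a business term through its single shared definition."""
    return SEMANTIC_LAYER[term](value)

accounts = [
    {"name": "Acme", "employees": 5000, "annual_contract_usd": 90_000},
    {"name": "Bitsy", "employees": 40, "annual_contract_usd": 12_000},
]
print([a["name"] for a in accounts if matches("enterprise customer", a)])  # ['Acme']
print(matches("in Q3", date(2026, 8, 15)))  # True
```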

"A unified semantic layer provides consistent business definitions across all tools and users. This semantic foundation gives AI deep knowledge of enterprise data and business concepts unique to each organization."

Event streaming builds on this consistency by enabling real-time responsiveness. Instead of relying on database polling or batch jobs, AI agents monitor event streams and respond immediately when certain thresholds are met, whether that means adjusting prices based on inventory levels or triggering restock alerts. Protocols such as the Model Context Protocol (MCP) add an abstraction layer between AI logic and backend systems, so developers can update databases or APIs without disrupting orchestration workflows.
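
Threshold-triggered reactions on a stream can be expressed as a generator that inspects each event as it arrives. The event shape, SKUs, and threshold below are hypothetical, and a plain list stands in for a consumer reading from a streaming platform.

```python
from typing import Iterable, Iterator

def restock_alerts(events: Iterable[dict], threshold: int) -> Iterator[str]:
    """React to each inventory event as it arrives instead of polling a database."""
    for event in events:
        if event["on_hand"] < threshold:
            yield f"restock {event['sku']} (on hand: {event['on_hand']})"

# Hypothetical stream; in practice this would be a consumer reading from a topic.
stream = [
    {"sku": "A-100", "on_hand": 42},
    {"sku": "B-200", "on_hand": 3},
    {"sku": "A-100", "on_hand": 9},
]
for alert in restock_alerts(stream, threshold=10):
    print(alert)
```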

Knowledge graphs and shared identity frameworks further strengthen enterprise-wide data governance: knowledge graphs keep semantic interpretation consistent, and identity frameworks keep permissions consistent. Knowledge graphs do more than store data; they represent the decisions and relationships within an organization, integrating logic, data, and actions into a semantic layer that both humans and AI can interpret. Palantir's Ontology illustrates this concept:

"The Ontology is designed to represent the decisions in an enterprise, not simply the data."

These graphs function as an operational bus, using SDKs to connect systems across the organization. They enable bidirectional synchronization between modeling tools and data catalogs, ensuring that updates to one system are reflected across all connected tools and agents.
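
At its simplest, a knowledge graph stores facts as (subject, relation, object) triples that can be traversed. The tiny triple store below is a hypothetical illustration, not Palantir's Ontology or any product SDK; it shows how an agent could follow relationships from a delayed order to possible remediation options.

```python
from collections import defaultdict

class KnowledgeGraph:
    """Tiny triple store: (subject, relation, object) edges with relation lookup."""
    def __init__(self) -> None:
        self._edges: dict[str, list[tuple[str, str]]] = defaultdict(list)

    def add(self, subject: str, relation: str, obj: str) -> None:
        self._edges[subject].append((relation, obj))

    def related(self, subject: str, relation: str) -> list[str]:
        # .get avoids creating empty entries for unknown subjects.
        return [o for r, o in self._edges.get(subject, []) if r == relation]

# Hypothetical facts linking data (orders) to decisions (alternative suppliers).
kg = KnowledgeGraph()
kg.add("Order#881", "placed_by", "Acme")
kg.add("Order#881", "delayed_by", "Supplier-7")
kg.add("Supplier-7", "has_alternative", "Supplier-9")
kg.add("Supplier-7", "has_alternative", "Supplier-12")

# Follow relationships from a record to an actionable option.
blocker = kg.related("Order#881", "delayed_by")[0]
print(kg.related(blocker, "has_alternative"))  # ['Supplier-9', 'Supplier-12']
```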

Shared identity frameworks complement these systems by maintaining consistent permissions as data moves between tools. Platforms like AWS IAM Identity Center provide dynamic access management, integrating with existing SAML and Active Directory systems to enforce role-based, classification-based, or purpose-based permissions. This centralized approach ensures AI agents operate within strict security and compliance boundaries, even as workflows span multiple models and data sources.
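
Role-, classification-, and purpose-based rules can be combined so that access requires every check to pass. In the sketch below, the classification levels, role names, and purposes are hypothetical; in practice these attributes would come from the identity provider and the data catalog rather than being constructed in code, and this is not the AWS IAM Identity Center API.

```python
from dataclasses import dataclass

# Hypothetical classification ladder, lowest to highest sensitivity.
LEVELS = {"public": 0, "internal": 1, "confidential": 2, "restricted": 3}

@dataclass(frozen=True)
class Principal:
    roles: frozenset[str]
    clearance: str  # highest classification this principal may read
    purpose: str    # declared purpose of the current request

@dataclass(frozen=True)
class Dataset:
    classification: str
    allowed_roles: frozenset[str]
    allowed_purposes: frozenset[str]

def can_read(p: Principal, d: Dataset) -> bool:
    """Role, classification, and purpose checks must all pass."""
    return (
        bool(p.roles & d.allowed_roles)
        and LEVELS[p.clearance] >= LEVELS[d.classification]
        and p.purpose in d.allowed_purposes
    )

claims = Dataset("confidential", frozenset({"claims-analyst"}), frozenset({"fraud-review"}))
agent = Principal(frozenset({"claims-analyst"}), "confidential", "fraud-review")
print(can_read(agent, claims))  # True
print(can_read(Principal(agent.roles, agent.clearance, "marketing"), claims))  # False
```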

As AI workloads continue to expand in 2026, managing costs has become just as important as optimizing performance. Unified ecosystems have embraced agent-driven FinOps, where AI agents monitor billions of cost signals in real time. These agents identify inefficiencies like idle GPU resources, overprovisioned clusters, or unnecessary data egress, and automatically initiate corrective workflows within predefined policy limits. According to IBM research, companies adopting AI-powered FinOps agents have reported cloud cost reductions of 20–40%. For instance, one global financial institution reduced GPU idle time by about 35% through automated resource rightsizing and scheduling.
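
The detect-then-act loop of a FinOps agent reduces to comparing usage against policy limits and choosing an action the agent is allowed to take. The thresholds, node records, and the rule that production nodes are escalated rather than changed are all hypothetical assumptions for illustration.

```python
from dataclasses import dataclass

@dataclass
class GpuNode:
    name: str
    utilization_pct: float  # average over the observation window
    idle_hours: float
    environment: str

# Hypothetical policy limits the agent must stay within.
IDLE_UTILIZATION_PCT = 5.0
MIN_IDLE_HOURS = 4.0
PROTECTED_ENVIRONMENTS = {"production"}

def plan_actions(nodes: list[GpuNode]) -> list[str]:
    """Return corrective actions for idle nodes, escalating protected ones."""
    actions = []
    for node in nodes:
        idle = node.utilization_pct < IDLE_UTILIZATION_PCT and node.idle_hours >= MIN_IDLE_HOURS
        if not idle:
            continue
        if node.environment in PROTECTED_ENVIRONMENTS:
            actions.append(f"notify owner of {node.name}")  # outside policy: escalate
        else:
            actions.append(f"scale down {node.name}")       # within policy: act
    return actions

fleet = [
    GpuNode("gpu-a", 2.0, 10.0, "dev"),
    GpuNode("gpu-b", 70.0, 0.0, "production"),
    GpuNode("gpu-c", 1.0, 6.0, "production"),
]
print(plan_actions(fleet))  # ['scale down gpu-a', 'notify owner of gpu-c']
```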

This shift is powered by tools that combine natural language querying with precise resource tracking. Platforms like Amazon Q Developer, Azure Copilot, and Gemini Cloud Assist allow teams to explore cost drivers conversationally. These tools provide detailed insights into GPU usage, idle periods, and token-based consumption, covering both proprietary models and third-party providers such as OpenAI, Anthropic, and Cohere. Karan Sachdeva, Global Business Development Leader at IBM, explains:

"Traditional FinOps was built for dashboards and decisions made by humans... AI agents go beyond reporting. They observe, analyze and act."

This level of resource tracking enables organizations to achieve real-time cost oversight.

Centralized platforms consolidate billing data into a single system, eliminating the inefficiencies of fragmented cost reporting. These platforms provide immediate insights into which models, teams, or projects are driving expenses. With token-based cost simulation, teams can estimate the financial impact of switching from GPT-3.5 to GPT-4, or of increasing usage by a given percentage, before committing resources. For example, BP used Microsoft Cost Management to cut cloud costs by 40% even as its overall usage nearly doubled, according to John Maio, BP's Microsoft Platform Chief Architect.
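
Token-based cost simulation is mostly arithmetic: tokens divided by 1,000, times the per-1K-token price, summed over input and output. The sketch below uses hypothetical model names and prices rather than any provider's published rates, and compares a baseline, a model switch, and a 25% volume increase.

```python
# Hypothetical per-1K-token prices (USD); real prices vary by provider, region, and date.
PRICES = {
    "small-model": {"input": 0.0005, "output": 0.0015},
    "large-model": {"input": 0.01, "output": 0.03},
}

def monthly_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    p = PRICES[model]
    return input_tokens / 1000 * p["input"] + output_tokens / 1000 * p["output"]

def simulate(model: str, input_tokens: int, output_tokens: int,
             growth_pct: float = 0.0) -> float:
    """Cost after scaling token volume by growth_pct (e.g. 25 means +25%)."""
    factor = 1 + growth_pct / 100
    return monthly_cost(model, round(input_tokens * factor), round(output_tokens * factor))

baseline = simulate("small-model", 40_000_000, 10_000_000)
switched = simulate("large-model", 40_000_000, 10_000_000)
grown = simulate("large-model", 40_000_000, 10_000_000, growth_pct=25)
print(f"{baseline:,.2f} {switched:,.2f} {grown:,.2f}")  # 35.00 700.00 875.00
```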

These platforms also monitor custom models, which incur hourly hosting fees even when idle; deployments inactive for more than 15 days are flagged automatically. For predictable workloads, many organizations are moving from pay-as-you-go pricing to commitment tiers with fixed fees. On the infrastructure side, Reserved Instances can lower compute costs by up to 72%, and Spot Instances, suited to interruptible workloads, by up to 90%. Achieving this precision often relies on consistent key-value tagging across resources, such as labeling environments with Environment="Production", which makes cost queries through AI assistants faster and more accurate.
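
Flagging idle deployments and slicing the result by tag can be sketched with the standard library alone. The deployment records, hourly rates, and the roughly 730-hour month are hypothetical; the 15-day cutoff mirrors the policy described above.

```python
from datetime import datetime, timedelta

# Hypothetical custom-model deployments with key-value tags.
deployments = [
    {"name": "fraud-v3", "last_call": datetime(2026, 1, 28), "hourly_usd": 1.70,
     "tags": {"Environment": "Production", "Team": "risk"}},
    {"name": "fraud-v2", "last_call": datetime(2026, 1, 2), "hourly_usd": 1.70,
     "tags": {"Environment": "Production", "Team": "risk"}},
    {"name": "chat-exp", "last_call": datetime(2025, 12, 20), "hourly_usd": 3.40,
     "tags": {"Environment": "Dev", "Team": "cx"}},
]

def stale(deps: list[dict], now: datetime, max_idle_days: int = 15) -> list[dict]:
    """Deployments with no traffic for more than max_idle_days (they still bill hourly)."""
    cutoff = now - timedelta(days=max_idle_days)
    return [d for d in deps if d["last_call"] < cutoff]

def by_tag(deps: list[dict], key: str, value: str) -> list[dict]:
    return [d for d in deps if d["tags"].get(key) == value]

now = datetime(2026, 2, 1)
flagged = stale(deployments, now)
print([d["name"] for d in flagged])  # ['fraud-v2', 'chat-exp']
# Monthly idle spend (~730 hours) for flagged Production deployments only.
prod_waste = sum(d["hourly_usd"] * 730 for d in by_tag(flagged, "Environment", "Production"))
print(round(prod_waste, 2))  # 1241.0
```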

However, tracking costs is only part of the equation - connecting spending to measurable business outcomes is essential.

Cost visibility alone isn’t enough to measure success. Leading platforms use Total Cost of Ownership (TCO) modeling to break AI expenses into six categories: model serving (inference), training and fine-tuning, cloud hosting, data storage, application setup, and operational support. This level of detail allows architecture review boards to evaluate projects based on cost, performance, governance, and risk. High-resource systems, such as reasoning models and agents, are deployed only when they deliver measurable value.
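
A TCO breakdown across the six categories above makes it easy to see which line items dominate and to compare the total with expected value. All figures below, including the benefit estimate, are hypothetical.

```python
# Hypothetical annual costs (USD) for one AI application, by TCO category.
tco = {
    "model serving (inference)": 180_000,
    "training and fine-tuning": 60_000,
    "cloud hosting": 45_000,
    "data storage": 15_000,
    "application setup": 30_000,
    "operational support": 70_000,
}

total = sum(tco.values())
print(f"total: ${total:,}")
# Largest categories first, each as a share of the total.
for category, cost in sorted(tco.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{category:<28} {cost / total:6.1%}")

expected_value = 520_000  # hypothetical annual benefit estimate
decision = "approve" if expected_value > total else "revisit"
print(decision)
```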

Sophisticated organizations are also adopting intelligent triage and routing strategies. Routine queries are directed to Small Language Models (SLMs), while only complex tasks are escalated to more expensive frontier models. This approach can reduce calls to large models by 40% without compromising quality. Processing one million conversations through an SLM costs between $150 and $800, compared to $15,000 to $75,000 for traditional LLMs - a cost reduction of up to 100×. Dr. Jerry A. Smith, Head of AI and Intelligent Systems Labs at Modus Create, captures this shift perfectly:
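
The routing economics can be checked with the per-million-conversation ranges quoted above. The sketch pairs a toy triage rule (a hypothetical word-count and keyword heuristic, where a production system would typically use a classifier or the SLM's own confidence) with a blended-cost calculation.

```python
# Per-million-conversation cost ranges quoted above (USD): (low, high).
SLM_COST = (150, 800)
LLM_COST = (15_000, 75_000)

def blended_cost(share_to_llm: float, conversations_millions: float = 1.0) -> tuple[float, float]:
    """Low/high cost when only share_to_llm of traffic is escalated to the large model."""
    low = (1 - share_to_llm) * SLM_COST[0] + share_to_llm * LLM_COST[0]
    high = (1 - share_to_llm) * SLM_COST[1] + share_to_llm * LLM_COST[1]
    return low * conversations_millions, high * conversations_millions

def route(query: str, max_words: int = 30) -> str:
    """Toy triage rule (hypothetical): long or analytical requests go to the large model."""
    complex_markers = ("compare", "analyze", "plan", "why")
    is_complex = len(query.split()) > max_words or any(m in query.lower() for m in complex_markers)
    return "frontier-llm" if is_complex else "slm"

print(route("What are your opening hours?"))  # slm
print(route("Compare these two contracts"))   # frontier-llm
print(blended_cost(0.0))  # (150.0, 800.0)
print(blended_cost(1.0))  # (15000.0, 75000.0)
print(blended_cost(0.1))
```

With 10% of traffic escalated, the blended cost is roughly $1,635 to $8,220 per million conversations, so the escalation rate, rather than the SLM price, dominates the bill.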

"The shift to SLMs isn't driven by ideology or technical elegance. It's driven by the CFO's spreadsheet."

This financial focus also influences infrastructure decisions. Organizations are deploying workloads across a three-tier hybrid architecture: public cloud for flexibility and experimentation, on-premises systems for high-volume, predictable inference (cost-effective once cloud expenses exceed 60–70% of the cost of equivalent on-premises systems), and edge computing for tasks requiring response times under 10 ms. Aligning infrastructure with key outcomes, such as customer satisfaction, revenue per transaction, or time-to-market, ensures that AI investments not only reduce costs but also deliver meaningful results.
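
The three-tier placement logic can be written as a short decision function. The 10 ms edge threshold and the 60% breakeven ratio come from the figures above; the function name, its inputs, and the order of the checks are assumptions for illustration.

```python
def place_workload(latency_budget_ms: float, predictable: bool,
                   cloud_cost: float, onprem_equiv_cost: float,
                   breakeven_ratio: float = 0.6) -> str:
    """Hypothetical three-tier placement rule using the thresholds cited above."""
    if latency_budget_ms < 10:
        return "edge"  # sub-10 ms response requirements
    if predictable and cloud_cost > breakeven_ratio * onprem_equiv_cost:
        return "on-premises"  # steady, high-volume inference past the breakeven point
    return "public cloud"  # experimentation and variable demand

print(place_workload(5, False, 1_000, 5_000))        # edge
print(place_workload(200, True, 70_000, 100_000))    # on-premises
print(place_workload(200, False, 70_000, 100_000))   # public cloud
```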

The future of unified AI ecosystems is taking a bold step forward with autonomous agents. These aren't just tools that follow instructions - they're designed to understand context, evaluate goals, and take deliberate actions across complex backend systems. This evolution shifts AI's role from simple conversational tasks to executing intricate, multi-step processes that once required human involvement. By late 2025, 35% of organizations were already leveraging agentic AI, with another 44% gearing up for deployment. The financial impact speaks volumes: companies built around AI are generating 25 to 35 times more revenue per employee than their traditional counterparts. This shift calls for a closer look at the role autonomous agents play within these ecosystems.

Expanding on earlier discussions of unified orchestration, autonomous agents are now at the heart of real-time decision-making. Acting as a "nervous system" for ecosystems, these agents seamlessly connect tools, memory, and data to enable immediate, informed actions. For example, in December 2025, a global consumer goods company reimagined its innovation processes by deploying meta-agents to oversee worker agents, slashing cycle times by 60%. Kate Blair, Director of Incubation and Technology Experiences at IBM Research, highlighted the significance of this shift:

"2026 is when these patterns are going to come out of the lab and into real life."

Organizations are embracing graduated autonomy through a four-tier "Trust Protocol." These tiers include Shadow Mode (agent makes suggestions), Supervised Autonomy (human approval required), Guided Autonomy (human oversight), and Full Autonomy (no human involvement). By January 2026, Lockheed Martin consolidated 46 separate data systems into one integrated platform, cutting its data and AI tools in half. This new foundation now powers an "AI Factory", where 10,000 engineers use agentic frameworks to manage sophisticated workflows. The results are striking: autonomous agents can speed up business processes by 30% to 50% and cut down low-value tasks for employees by 25% to 40%. To unlock the full potential of these agents, the development of open standards is becoming a priority.
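
The four trust tiers can be encoded as a gate in front of every agent action. The mapping below is an interpretation, not a published specification: in particular, treating Guided Autonomy as "act freely, but hold high-risk actions for approval" is an assumption, and the action strings are hypothetical.

```python
from enum import Enum

class Autonomy(Enum):
    SHADOW = 1      # agent only suggests; nothing is executed
    SUPERVISED = 2  # every action needs explicit human approval
    GUIDED = 3      # assumption: agent acts, high-risk actions need approval
    FULL = 4        # agent acts without human involvement

def execute(action: str, tier: Autonomy, high_risk: bool, approved: bool = False) -> str:
    """Gate an agent action according to its autonomy tier."""
    if tier is Autonomy.SHADOW:
        return f"suggested: {action}"
    if tier is Autonomy.SUPERVISED and not approved:
        return f"awaiting approval: {action}"
    if tier is Autonomy.GUIDED and high_risk and not approved:
        return f"awaiting approval: {action}"
    return f"executed: {action}"

print(execute("refund $40", Autonomy.SHADOW, high_risk=False))       # suggested: refund $40
print(execute("refund $40", Autonomy.GUIDED, high_risk=False))       # executed: refund $40
print(execute("refund $4,000", Autonomy.GUIDED, high_risk=True))     # awaiting approval: refund $4,000
print(execute("refund $4,000", Autonomy.FULL, high_risk=True))       # executed: refund $4,000
```

Promoting an agent from one tier to the next then becomes a configuration change rather than a code change.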

One key challenge is ensuring agents from different vendors can work together seamlessly, which has driven the creation of open protocols. The Model Context Protocol (MCP), initially introduced by Anthropic and now governed by the Linux Foundation, allows AI agents to integrate with external tools and data sources. Similarly, Google Cloud's Agent2Agent (A2A) protocol uses HTTP and JSON-RPC 2.0 to enable direct communication between independent agents across platforms. Oracle has also contributed with its Open Agent Specification (Agent Spec), a declarative framework that ensures agents and workflows are portable across different systems. Sungpack Hong, VP of AI Research at Oracle, explains:

"Agent Spec is a framework-agnostic declarative specification designed to make AI agents and workflows portable, reusable, and executable across any compatible framework."

These protocols are being unified under neutral governing bodies to prevent vendor lock-in. A striking 93% of executives believe that factoring AI sovereignty into their strategies will be critical by 2026. Yet, fewer than 33% of organizations have implemented the interoperability and scalability needed for agentic AI to thrive. The emergence of Agentic Operating Systems (AOS) - standardized runtimes that oversee orchestration, safety, compliance, and resource management for agent swarms - marks a significant step toward making autonomous systems production-ready. With 96% of organizations planning to deploy agents for optimizing systems and automating core processes, the race to establish universal standards is intensifying.

For enterprises aiming to scale AI without succumbing to overwhelming complexity, unified AI ecosystems offer a powerful solution. These platforms dismantle the silos that have long hindered AI initiatives, enabling seamless collaboration across departments and functions. The evolution from basic chatbots to proactive agents capable of orchestrating multi-step workflows is driving tangible results. As highlighted earlier, such orchestrated workflows are boosting efficiency, cutting down low-value tasks by 25% to 40%, and accelerating business processes by 30% to 50%.

The true game-changer lies in orchestration. By unifying models, data, and governance into a cohesive system, these platforms empower AI to go beyond answering queries and start executing complex, end-to-end processes. This approach not only speeds up operations but also reduces the need for large teams, paving the way for agile workflow management across entire organizations.

A growing number of executives - 88% to be exact - are increasing their AI budgets to harness agentic capabilities, fueling the demand for interoperability standards. The introduction of graduated autonomy frameworks, ranging from Shadow Mode to Full Autonomy, provides a structured path for organizations to scale AI responsibly and effectively.

In 2026, leading companies are not just automating tasks - they are reimagining workflows to be AI-driven by design. With 78% of organizations already using AI in at least one business function and Gartner forecasting that 60% of IT operations will integrate AI agents by 2028, the time to adopt unified AI ecosystems is now. Acting early helps secure a competitive edge in an increasingly AI-centric landscape.

Shifting from fragmented tools to unified platforms addresses both immediate operational needs and future innovations. These ecosystems are redefining workflows, enabling operational excellence and scalable transformation. For enterprises aiming to stay ahead, embracing unified AI platforms is no longer optional - it’s essential.

Unified AI ecosystems offer businesses an integrated environment where data, tools, and applications work in harmony, removing the hassle of juggling disconnected systems. By bringing large language models and other AI tools into a single platform, companies can avoid vendor lock-in, simplify customizations, and speed up their workflows.

These ecosystems deliver measurable time and resource savings by slashing development cycles by up to 70%, cutting evaluation times by 40%, and shortening launch timelines for AI-driven workflows. On a larger scale, this means substantial financial gains - saving hundreds of thousands of dollars in operational costs while driving revenue growth. All of this is achieved without compromising on enterprise-grade security or data governance. Treating AI as core infrastructure enables businesses to innovate faster, boost productivity, and scale solutions to meet their unique needs.

Autonomous AI agents act as virtual assistants that interpret user intent, break it down into manageable tasks, and execute them seamlessly across various tools and systems within a unified AI platform. By handling APIs, web interfaces, and internal applications, they simplify intricate workflows, enabling users to automate processes with straightforward commands - no advanced technical skills required.

At the core of their functionality is a central orchestration engine, which dynamically assigns tasks to the most appropriate agent or AI model. This ensures tasks are handled efficiently, with minimal delays and the right tools for the job. The orchestration layer also enforces governance by monitoring outputs, maintaining context, and avoiding unnecessary complications, keeping workflows dependable and scalable.

These agents go beyond automating repetitive tasks; they tackle complex decision-making processes as well. This allows organizations to save time, minimize errors, and increase productivity. By integrating AI-driven solutions at scale, businesses can free up employees to focus on strategic, high-impact work.

Governance and compliance are essential to keeping unified AI platforms secure, ethical, and aligned with industry regulations. By integrating policies for data management, model oversight, and automated audit trails, these platforms can ensure transparency and accountability in AI-driven decisions while adhering to standards like GDPR, HIPAA, or financial regulations.

Strong governance serves as a protective barrier against challenges such as unintended bias, security vulnerabilities, and regulatory violations. Features like role-based access, data lineage tracking, and model monitoring empower organizations to maintain control over their AI workflows. These tools not only safeguard sensitive data but also build trust, simplify platform adoption, and ensure consistent, dependable performance.