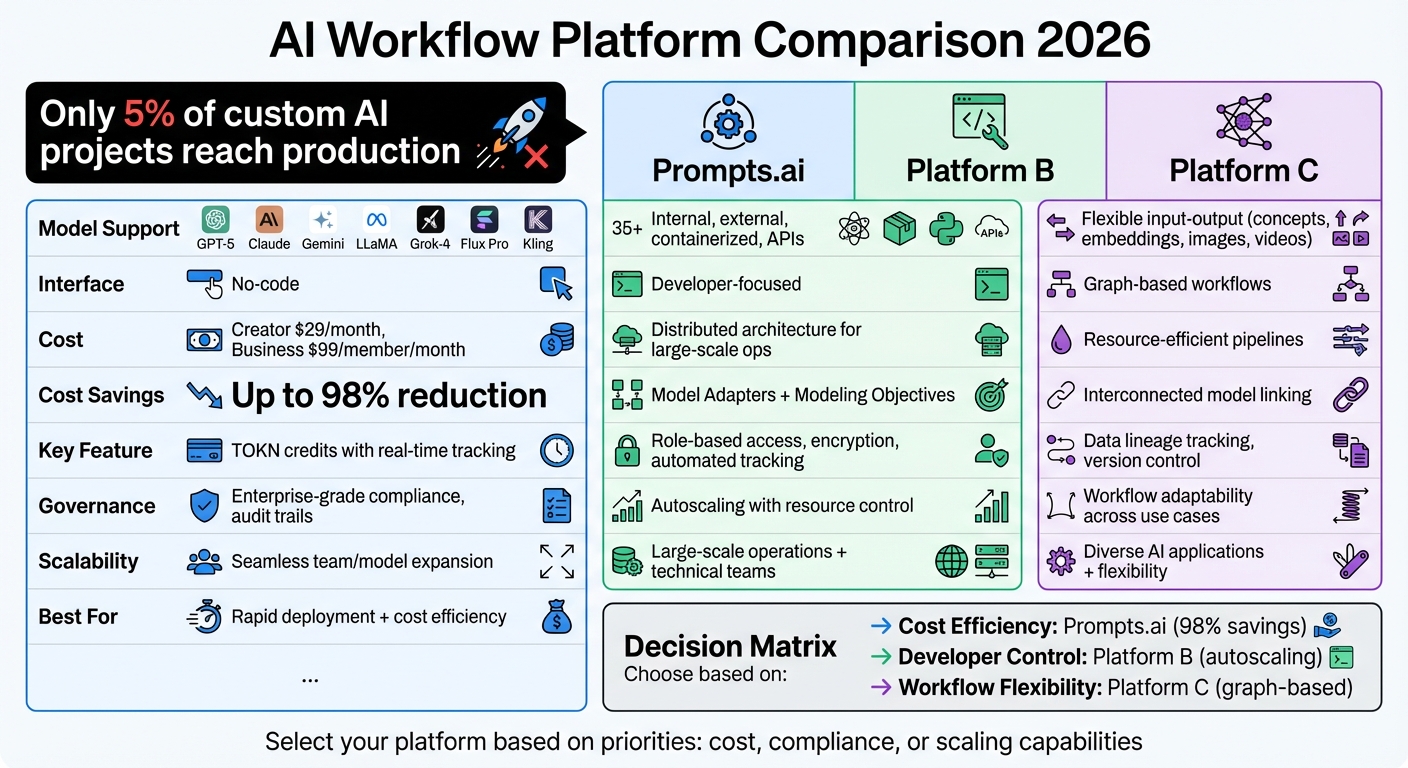

By 2026, integrating AI into workflows has become a necessity for businesses aiming to optimize operations and control costs. However, only 5% of custom AI projects reach production, often because teams struggle to turn AI's potential into practical results. This guide evaluates three leading platforms (Prompts.ai, Platform B, and Platform C) on four criteria: model support, cost management, governance, and scalability.

Key Takeaways:

Quick Comparison:

| Feature | Prompts.ai | Platform B | Platform C |
|---|---|---|---|
| Model Support | 35+ models, no-code interface | Internal, external, and APIs | Flexible input-output integration |
| Cost Management | TOKN credits, real-time tracking | Distributed architecture | Resource-efficient pipelines |
| Governance | Enterprise-grade compliance | Role-based access, audit trails | Data lineage, version control |
| Scalability | Seamless team/model expansion | Autoscaling, resource control | Workflow adaptability |

Each platform caters to different needs, from rapid prototyping to strict compliance. Choose based on your priorities - cost efficiency, compliance, or scaling capabilities.

AI Workflow Platform Comparison: Prompts.ai vs Platform B vs Platform C

Prompts.ai brings together more than 35 leading AI models in a single, secure platform, including large language models such as GPT-5, Claude, Gemini, LLaMA, and Grok-4, along with image and video generators such as Flux Pro and Kling. Teams can choose the best model for each task without having to change their workflows.

Because models are interchangeable within a workflow, each step can use the model best suited to it, whether that step calls for in-depth reasoning, nuanced creative writing, or fast data processing.

Prompts.ai addresses cost concerns with a unified subscription model for both individuals and businesses. The Creator plan costs $29 per month, and business plans start at $99 per member per month. Consolidating access to premium AI tools under a single bill reduces administrative overhead. A real-time FinOps layer tracks token usage and enforces spending caps, while the TOKN credit system ties costs to actual usage. Prompts.ai states that these features can cut AI expenses by as much as 98% while maintaining high standards of security and compliance.
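To make the idea of a spending cap concrete, here is a minimal sketch of a usage meter that prices each call by token count and refuses any call that would exceed a fixed budget. The model names and per-token prices are hypothetical placeholders, and this is an illustration of the general technique, not Prompts.ai's FinOps implementation or API.

```python
from dataclasses import dataclass, field

# Hypothetical per-1K-token prices in USD; real prices vary by model and provider.
PRICE_PER_1K_TOKENS = {
    "small-model": 0.0005,
    "premium-model": 0.0150,
}


class BudgetExceeded(Exception):
    """Raised when a call would push total spend past the configured cap."""


@dataclass
class SpendMeter:
    cap_usd: float
    spent_usd: float = 0.0
    usage: dict = field(default_factory=dict)  # model name -> total tokens

    def charge(self, model: str, tokens: int) -> float:
        cost = tokens / 1000 * PRICE_PER_1K_TOKENS[model]
        # Check before recording, so a blocked call leaves the meter unchanged.
        if self.spent_usd + cost > self.cap_usd:
            raise BudgetExceeded(
                f"{model}: ${cost:.4f} would exceed cap ${self.cap_usd:.2f}"
            )
        self.spent_usd += cost
        self.usage[model] = self.usage.get(model, 0) + tokens
        return cost
```

Checking the cap before recording the charge means a rejected request never counts against the budget, so the tracked usage always reflects work that was actually allowed to run.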

Built with enterprise needs in mind, Prompts.ai ensures secure workflows and detailed audit trails. These features are especially critical for industries like healthcare and finance, where safeguarding sensitive data and meeting regulatory requirements are non-negotiable.

Prompts.ai scales effortlessly, accommodating new models and teams without interruptions. The Prompt Engineer Certification program promotes consistency in best practices across expanding teams. Additionally, automated workflows handle tasks like content creation, data management, and reporting, enabling quick and efficient deployment.

Platform B, Palantir's AIP, uses an Ontology layer to connect AI models and digital assets to real-world entities, so that model outputs can drive practical decisions. The architecture integrates machine learning logic, large language models, vision-language models, and specialized models for simulation and optimization tasks. This foundation underpins Platform B's focus on managing models effectively and improving operational efficiency.

Platform B supports models from four key sources: internally trained models, uploaded model files, containerized models, and externally hosted APIs. It uses Model Adapters to handle environment dependencies, ensuring smooth loading, initialization, and inference. Modeling Objectives act as a central hub for model management, streamlining evaluation, release, and alignment with specific business goals.
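The adapter pattern described above can be illustrated with a small, generic sketch: one interface separates how a model is loaded from how it is called, so the rest of a workflow treats every model source the same way. All class and method names here are hypothetical; Palantir's actual Model Adapter API is different, and this only shows the underlying idea.

```python
from abc import ABC, abstractmethod
from typing import Any


class ModelAdapter(ABC):
    """Hypothetical adapter contract: hides how a model is loaded and invoked."""

    def __init__(self) -> None:
        self._ready = False

    @abstractmethod
    def load(self) -> None:
        """Load weights, start a container, or open an API client."""

    @abstractmethod
    def _infer(self, inputs: list[Any]) -> list[Any]:
        """Run inference on a batch of inputs."""

    def predict(self, inputs: list[Any]) -> list[Any]:
        # Initialize lazily on first use so callers never manage setup.
        if not self._ready:
            self.load()
            self._ready = True
        return self._infer(inputs)


class KeywordModelAdapter(ModelAdapter):
    """Toy stand-in for an uploaded model: flags inputs containing a keyword."""

    def __init__(self, keyword: str) -> None:
        super().__init__()
        self.keyword = keyword
        self.loads = 0

    def load(self) -> None:
        self.loads += 1  # a real adapter would deserialize a model file here

    def _infer(self, inputs: list[Any]) -> list[Any]:
        return [self.keyword in str(x).lower() for x in inputs]
```

A caller only ever uses `predict`; whether the model behind it is a local file, a container, or a remote API is the adapter's concern.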

The platform's distributed architecture is designed to handle large-scale data operations and real-time analytics efficiently. Combined with a unified developer toolchain for building production-ready workflows, this approach helps reduce operational costs without compromising performance.

The platform automatically handles model lineage, security, versioning, reproducibility, and auditing. Role-based access controls and encryption protect sensitive data, making the platform suitable for regulated industries. Comprehensive audit trails and systematic evaluation of model decisions further support transparency and accountability in deployments.
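Role-based access control reduces to a simple rule: permissions attach to roles, users hold roles, and anything not explicitly granted is denied. The sketch below assumes hypothetical role and permission names; it illustrates the general model rather than Platform B's configuration format.

```python
from enum import Enum


class Permission(Enum):
    VIEW_DATA = "view_data"
    RUN_MODEL = "run_model"
    DEPLOY_MODEL = "deploy_model"


# Hypothetical role definitions; real deployments usually map roles from an identity provider.
ROLE_PERMISSIONS = {
    "analyst": {Permission.VIEW_DATA, Permission.RUN_MODEL},
    "ml_engineer": {Permission.VIEW_DATA, Permission.RUN_MODEL, Permission.DEPLOY_MODEL},
    "auditor": {Permission.VIEW_DATA},
}


def is_allowed(roles: set[str], needed: Permission) -> bool:
    """Allow if any of the user's roles grants the permission; unknown roles grant nothing."""
    return any(needed in ROLE_PERMISSIONS.get(role, set()) for role in roles)
```

Denying by default means a misspelled or retired role silently loses access instead of silently gaining it.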

With autoscaling capabilities and precise control over resource allocation, Platform B can adapt to changing organizational demands. Continuous feedback loops from production data and user interactions enable ongoing monitoring and improvement of model performance, ensuring workflows remain efficient as teams and projects expand.
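Platform B's autoscaling internals are not described here. As a generic illustration, the target-tracking rule used by the Kubernetes Horizontal Pod Autoscaler scales the replica count in proportion to how far observed utilization is from a target, ignoring small deviations. The function name, defaults, and bounds below are assumptions for the sketch.

```python
import math


def desired_replicas(current: int, current_util: float, target_util: float,
                     min_replicas: int = 1, max_replicas: int = 20,
                     tolerance: float = 0.1) -> int:
    """HPA-style rule: desired = ceil(current * current_util / target_util),
    clamped to [min_replicas, max_replicas]."""
    if target_util <= 0:
        raise ValueError("target_util must be positive")
    ratio = current_util / target_util
    # Ignore small fluctuations so the system does not flap between sizes.
    if abs(ratio - 1.0) <= tolerance:
        return current
    desired = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 replicas running at 90% utilization against a 60% target scale to 6, while the same 4 replicas at 62% stay put because the deviation falls inside the tolerance band.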

Platform C, Clarifai, takes a graph-based approach to AI workflow integration: users build a workflow by linking models into a series of connected steps. Pre-built and custom models alike can be chained into pipelines in which each model's output feeds directly into the next, which keeps model integration straightforward.

Platform C accommodates a wide range of input types, such as concepts, embeddings, images, videos, and regions, and output types, including concepts, clusters, and regions. Two models can be linked as long as the downstream model accepts the output type of the upstream one. This flexibility makes the approach adaptable across many AI use cases.
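The compatibility rule lends itself to a short sketch: treat models as nodes that declare which types they accept and which type they produce, reject any edge whose types do not match, and then derive an execution order from the graph. The model names are hypothetical and the type labels are taken from the paragraph above; this is not Clarifai's API.

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter


@dataclass(frozen=True)
class ModelNode:
    name: str
    accepts: frozenset[str]  # input types this model can consume
    produces: str            # output type this model emits


def build_pipeline(nodes: list[ModelNode], edges: list[tuple[str, str]]) -> list[str]:
    """Check every edge for type compatibility, then return a valid execution order."""
    by_name = {n.name: n for n in nodes}
    predecessors = {n.name: set() for n in nodes}
    for src, dst in edges:
        out_type = by_name[src].produces
        if out_type not in by_name[dst].accepts:
            raise TypeError(f"{dst} cannot accept '{out_type}' from {src}")
        predecessors[dst].add(src)
    # TopologicalSorter raises graphlib.CycleError if the graph contains a loop.
    return list(TopologicalSorter(predecessors).static_order())
```

Validating types when the graph is built, rather than when data flows through it, surfaces a mis-wired pipeline before any model is called.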

Platform C prioritizes secure and compliant workflows through its Modeling Objectives feature. This tool oversees the entire model lifecycle, covering evaluation, review, release, and deployment. Integrated compliance measures include data lineage tracking, security protocols, version control, reproducibility, and auditing. These features help organizations adhere to regulatory standards while maintaining complete transparency and control over their AI processes.

When it comes to integrating AI models into workflows, each platform offers its own distinct advantages and trade-offs. Prompts.ai stands out with its intuitive, no-code/low-code interface, making prompt engineering accessible to non-technical users. It supports major LLM providers like OpenAI and Anthropic, enabling quick prototyping and experimentation. On the other hand, Platform B is tailored for developer-centric environments, offering seamless integration with orchestration frameworks and advanced debugging and tracing tools - though it requires a higher level of technical expertise. Below, we explore how these platforms differ in terms of cost, scalability, and governance.

Cost efficiency, scalability, and governance are critical factors that set these platforms apart, each addressing operational needs in unique ways:

Selecting the right platform ultimately depends on your priorities - whether it’s rapid experimentation, enterprise compliance, distributed scaling, or cost management. Each solution is designed to align with specific operational demands and objectives.

When selecting an AI workflow integration solution, it’s essential to weigh your priorities - whether it’s model support, cost efficiency, governance, or scalability. These factors will guide you toward a platform that aligns with your team’s goals and technical needs.

Prompts.ai stands out as a compelling choice for enterprises aiming to streamline AI operations. Combining a unified subscription model with the TOKN credit system, it reports cost reductions of up to 98%. With support for more than 35 leading AI models, a no-code interface, and enterprise-grade compliance features, it is designed for rapid deployment without compromising governance or security.

Every organization has unique challenges, from ecosystem integration and developer autonomy to tight budgets and advanced agent orchestration. The right platform should bridge these gaps, aligning seamlessly with your technical infrastructure and operational goals.

Emerging trends like "describe-to-build" interfaces and human-in-the-loop approval processes are reshaping AI workflows, making them both easier to use and more dependable. This shift highlights the importance of choosing a solution that not only meets current needs but also supports long-term growth.

Focus on identifying high-impact tasks where AI can deliver immediate value. Then, select a platform that balances cost-effectiveness, compliance, flexibility, and ease of use - ensuring it matches your team’s expertise and scalability requirements.

Prompts.ai’s TOKN credit system works like a prepaid wallet, giving teams the flexibility to purchase AI processing power in bulk and allocate it as needed. This system intelligently assigns credits in real time, directing tasks to the most cost-efficient AI model. For example, smaller models handle routine tasks, while premium models are reserved for situations requiring higher quality. This smart allocation prevents waste from unused subscriptions and eliminates hidden fees, resulting in substantial cost savings.
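The routing logic described above can be sketched as "cheapest model that is good enough": each task declares a minimum quality tier, and the router picks the lowest-cost model that meets it. The catalogue entries, credit prices, and tier scale are hypothetical; this illustrates the technique, not how Prompts.ai assigns TOKN credits internally.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    credits_per_1k_tokens: float
    quality_tier: int  # hypothetical scale: 1 = basic, 3 = premium


# Hypothetical catalogue; names, prices, and tiers are placeholders.
CATALOGUE = [
    ModelOption("fast-small", 1.0, 1),
    ModelOption("balanced", 4.0, 2),
    ModelOption("frontier", 20.0, 3),
]


def route(required_tier: int, catalogue: list[ModelOption] = CATALOGUE) -> ModelOption:
    """Pick the cheapest model whose quality tier meets the task's requirement."""
    eligible = [m for m in catalogue if m.quality_tier >= required_tier]
    if not eligible:
        raise ValueError(f"no model meets tier {required_tier}")
    return min(eligible, key=lambda m: m.credits_per_1k_tokens)
```

Routine tasks tagged tier 1 land on `fast-small`, and only tasks that genuinely need tier 3 consume premium credits.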

The system also tracks credit usage across more than 35 integrated models, letting administrators set budgets, enforce usage policies, and shift tasks to more affordable models without manual intervention. Centralized credit management and real-time cost analysis help organizations avoid duplicate tool expenses and unexpected charges, which Prompts.ai says can cut AI-related costs by as much as 98%.
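Automatically shifting work to cheaper models as a budget runs down can be expressed as a small policy function. This sketch assumes a hypothetical fallback map and an 80% soft limit chosen purely for illustration: below the soft limit requests pass through unchanged, past it they move one step down to a cheaper model, and once the budget is exhausted they are blocked.

```python
# Hypothetical fallback map: requested model -> more affordable substitute.
CHEAPER_ALTERNATIVE = {"frontier": "balanced", "balanced": "fast-small"}


def apply_budget_policy(requested: str, spent: float, budget: float,
                        soft_limit: float = 0.8) -> str:
    """Return the model to use under a team budget, or raise if none is allowed."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    used = spent / budget
    if used >= 1.0:
        raise PermissionError("team budget exhausted")
    if used >= soft_limit:
        # Past the soft limit, downgrade one step; the cheapest model stays as-is.
        return CHEAPER_ALTERNATIVE.get(requested, requested)
    return requested
```

A graduated policy like this keeps teams productive near the end of a budget period instead of cutting them off abruptly at the first threshold.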

Prompts.ai features a scalable and modular workflow architecture, built to meet a wide range of operational requirements. To complement this, it offers enterprise-level cost-control tools that help maintain efficiency as your AI operations expand.

While there isn’t specific comparative data available to measure Prompts.ai’s scalability against other platforms, its emphasis on seamless integration and adaptability makes it a strong option for businesses aiming to streamline and enhance their workflows.

Prompts.ai delivers enterprise-grade security and compliance through a multi-layered approach. The platform centralizes management of more than 35 AI models, enabling IT teams to implement role-based access controls, define detailed permissions, and maintain immutable audit logs for full transparency into model usage. Data is encrypted both in transit and at rest, and the platform runs on SOC 2-compliant cloud infrastructure that supports compliance with regulations such as HIPAA and GDPR.
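A common way to make an audit log tamper-evident is hash chaining: each entry stores the hash of the previous entry, so altering or deleting any past record breaks every hash after it. The sketch below shows that technique with hypothetical field names; it is not Prompts.ai's logging implementation, and true immutability in production usually also relies on write-once storage.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only log in which each entry commits to the hash of the one before it."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(record: dict) -> str:
        # Hash every field except the stored hash, with a stable key order.
        payload = {k: v for k, v in record.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode()).hexdigest()

    def append(self, user: str, action: str, model: str) -> dict:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        record = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "model": model,
            "prev": prev,
        }
        record["hash"] = self._digest(record)
        self.entries.append(record)
        return record

    def verify(self) -> bool:
        """Recompute the chain; any edited or removed entry makes this return False."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True
```

Running `verify()` on a schedule, or before an audit export, detects after-the-fact edits without having to trust whoever holds write access to the log store.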

To strengthen compliance efforts, Prompts.ai offers tools like ready-to-use policy templates, automated governance checks, and customizable data-handling rules that align workflows with corporate and industry requirements. Real-time monitoring keeps costs and usage in check, while advanced security features automatically prevent non-compliant activities. These capabilities provide businesses with a secure and auditable foundation to scale AI with confidence and accountability.
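Customizable data-handling rules of the kind described above can be sketched as a list of patterns, each paired with an action, applied to a prompt before it reaches any model. The two rules here (block U.S. Social Security numbers, redact email addresses) are hypothetical examples, and regex matching is only a simplified stand-in for the more robust detection a production policy engine would need.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    pattern: re.Pattern
    action: str  # "block" or "redact"


# Hypothetical data-handling rules for illustration only.
RULES = [
    Rule("us_ssn", re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "block"),
    Rule("email", re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.]+\b"), "redact"),
]


def enforce(prompt: str, rules: list[Rule] = RULES) -> str:
    """Apply rules in order: 'block' raises, 'redact' masks every match."""
    for rule in rules:
        if rule.pattern.search(prompt):
            if rule.action == "block":
                raise PermissionError(f"blocked by policy '{rule.name}'")
            prompt = rule.pattern.sub(f"[{rule.name.upper()}]", prompt)
    return prompt
```

For example, `enforce("Contact jane.doe@example.com about the claim")` returns `"Contact [EMAIL] about the claim"`, while any prompt containing an SSN-shaped string is rejected before it leaves the organization.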