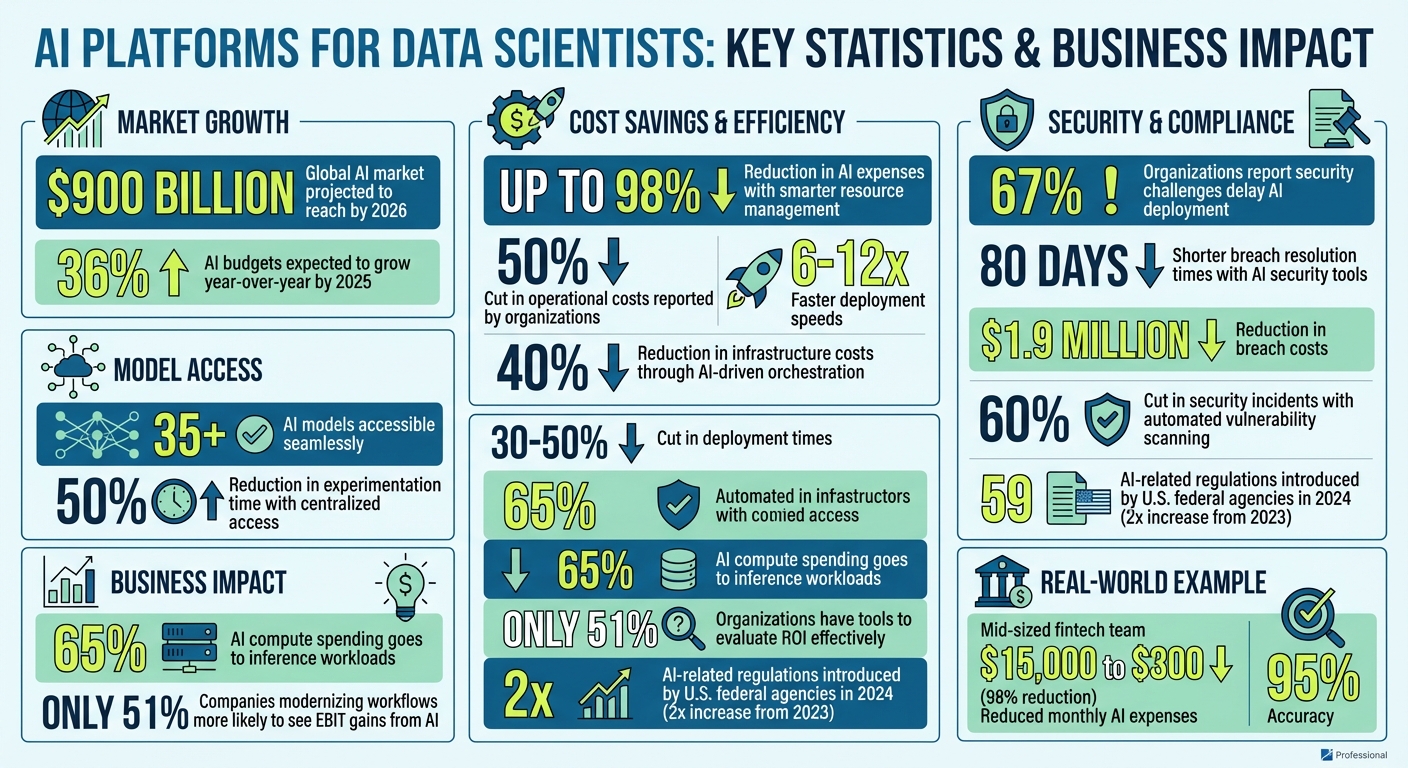

AI is reshaping how data scientists work, but fragmented tools and integration challenges often slow progress. With the global AI market projected to reach $900 billion by 2026, businesses must streamline workflows to stay competitive. This guide explores how unified AI platforms solve common issues like tool sprawl, hidden costs, and deployment delays.

Discover how platforms like Prompts.ai simplify operations, cut costs, and accelerate AI adoption. Move from complexity to clarity with scalable, secure workflows that deliver results.

AI Platform Benefits: Cost Savings, Model Access, and Deployment Speed Statistics

For data scientists, the ideal AI workflow is one that integrates smoothly, scales efficiently, and adheres to regulatory standards. They need unified systems that eliminate the hassle of juggling disconnected tools, allowing them to concentrate on building models and delivering results.

At the core, these workflows should provide access to multiple AI models and frameworks under one roof, automate deployment pipelines, and include governance features that support creativity while ensuring compliance. When these elements are in sync, teams can move from experimentation to production in a matter of days, not months. These needs highlight the challenges data teams frequently face.

One of the primary hurdles is fragmented tools. Teams often manage multiple compute clusters, each requiring its own maintenance and integration. This scattered approach drains resources - both time and money - while also increasing security and compliance risks.

Another persistent issue is the "glue code" problem. Data scientists often spend excessive time writing custom code to connect disparate tools, time that could be better spent on actual data science tasks.

Inconsistent environments also pose significant challenges. The classic "works on my machine" scenario occurs when models that perform well locally fail in production due to mismatched configurations. Debugging these issues wastes time and delays deployments. Additionally, limited local resources - like insufficient RAM, CPU, or GPU power - make scaling experiments difficult without resorting to complex solutions like Kubernetes.

Security concerns further complicate workflows. A striking 67% of organizations report that security challenges significantly delay AI deployment. Without standardized processes for packaging and serving models, projects often stall during experimentation and fail to reach production. Hugo Lu, CEO of Orchestra, describes what this fragmentation costs:

"The advantage of having a single control plane is that architecturally, you as a data team aren't paying 50 different vendors for 50 different compute clusters, all of which cost time and money to maintain."

To address these problems, a unified orchestration platform becomes essential.

AI orchestration platforms tackle these challenges by offering a single control plane that integrates all essential tools while meeting the key requirements of scalability, automation, and governance. Instead of managing separate systems for tasks like data ingestion, model training, and deployment, teams gain an end-to-end environment with complete visibility.

These platforms streamline workflows by automating repetitive processes. Features like automated evaluation, continuous integration, and deployment pipelines ensure smooth transitions from development to production. They allow teams to link ingestion, transformation, and inference steps into dependable workflows that run consistently.
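As a rough illustration of linking ingestion, transformation, and inference into one repeatable run, the sketch below chains three plain functions. The step names, the sample rows, and the length-based "score" are placeholders invented for this example and do not reflect any specific platform's API.

```python
from typing import Callable, Dict, Iterable, List

Rows = List[Dict]
Step = Callable[[Rows], Rows]


def ingest(_: Rows) -> Rows:
    # Hypothetical source; a real step would read from a warehouse or queue.
    return [{"text": " Hello "}, {"text": ""}, {"text": "world"}]


def transform(rows: Rows) -> Rows:
    # Trim whitespace and drop empty records.
    return [{"text": r["text"].strip()} for r in rows if r["text"].strip()]


def infer(rows: Rows) -> Rows:
    # Stand-in for a model call: the "score" is just the text length.
    return [{**r, "score": len(r["text"])} for r in rows]


def run_pipeline(steps: Iterable[Step]) -> Rows:
    data: Rows = []
    for step in steps:
        data = step(data)  # each step consumes the previous step's output
    return data


result = run_pipeline([ingest, transform, infer])
```

Because every step shares the same input and output shape, steps can be reordered, swapped, or tested in isolation without touching the runner.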

Cost management is another major advantage. Unified visibility into compute usage helps teams identify resource consumption and scale down unused infrastructure automatically. Many organizations report cutting operational costs by 50% while achieving deployment speeds that are 6–12 times faster. Additionally, these platforms simplify interoperability, enabling seamless transitions between different model backends without requiring significant code changes.
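To make the idea of spotting unused infrastructure concrete, here is a minimal sketch that flags clusters whose average utilization falls below a cutoff and estimates what they cost per month. The cluster names, utilization figures, hourly prices, and the 5% threshold are all assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Cluster:
    name: str
    avg_utilization: float  # fraction of capacity used over the review window
    hourly_cost_usd: float


def find_idle(clusters: List[Cluster], threshold: float = 0.05) -> List[Cluster]:
    # A cluster counts as idle when its average utilization is under the threshold.
    return [c for c in clusters if c.avg_utilization < threshold]


clusters = [
    Cluster("train-a", 0.72, 32.0),
    Cluster("notebooks-b", 0.01, 4.5),
    Cluster("batch-c", 0.03, 12.0),
]

idle = find_idle(clusters)
# Rough monthly cost of the idle clusters, assuming 24 hours x 30 days.
monthly_savings = sum(c.hourly_cost_usd for c in idle) * 24 * 30
```

A real platform would act on this list automatically (for example by scaling the clusters to zero), but the decision rule is usually this simple at its core.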

Choosing the right AI platform is crucial for ensuring smooth integration with data science workflows while meeting scalability, cost, and compliance requirements. A poor choice can lead to costly contracts, workflow inefficiencies, or regulatory headaches. A structured evaluation process can help you avoid these pitfalls and select a platform that grows with your needs. Research indicates that companies modernizing workflows and infrastructure are twice as likely to see EBIT gains from adopting AI.

An effective platform must adapt to fluctuating workloads. Elastic compute resources that automatically scale up or down help cut costs and eliminate the need for constant manual adjustments.
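One common way to implement this kind of elasticity is a proportional rule: scale the replica count by the ratio of observed to target utilization, then clamp it to a safe range. The sketch below follows that pattern, which resembles the rule used by the Kubernetes Horizontal Pod Autoscaler; the 60% target and the 1 to 20 replica bounds are illustrative assumptions.

```python
import math


def desired_replicas(
    current: int,
    observed_util_pct: int,
    target_util_pct: int = 60,
    min_replicas: int = 1,
    max_replicas: int = 20,
) -> int:
    # Proportional rule: more load than the target means more replicas.
    raw = math.ceil(current * observed_util_pct / target_util_pct)
    # Clamp so a noisy metric can neither scale to zero nor run away.
    return max(min_replicas, min(max_replicas, raw))


scale_up = desired_replicas(current=4, observed_util_pct=90)
scale_down = desired_replicas(current=10, observed_util_pct=15)
capped = desired_replicas(current=10, observed_util_pct=300)
```

With these inputs the function scales 4 replicas up to 6, scales 10 replicas down to 3, and caps a runaway request at the 20-replica ceiling.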

It’s also vital that the platform operates across various environments. Whether you’re working with multiple cloud providers or hybrid setups, seamless compatibility prevents vendor lock-in and strengthens your bargaining power. For instance, Uber’s MLOps platform, Michelangelo, addressed the limitations of training models on individual machines by scaling to handle massive data volumes and streamlining production workflows.

Another key factor is framework support. The platform should work with popular tools like PyTorch, TensorFlow, Hugging Face, and Ray without requiring significant code changes. Stitch Fix’s ML platform exemplifies this principle:

"Data scientists only have to think about the where and when to deploy a model in a batch, not the how. The platform handles that." - Elijah Ben Izzy and Stefan Krawczyk, Stitch Fix.

Once scalability is secured, managing costs becomes the next priority.

Inference workloads now dominate AI compute spending, accounting for 65% of the total and far surpassing training costs. Platforms that provide detailed resource tagging by project, team, or model allow for precise tracking of expenses. With AI budgets expected to grow by 36% year-over-year by 2025, it’s concerning that only 51% of organizations currently have tools to evaluate ROI effectively.
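Tag-based tracking boils down to grouping usage records by a label and summing the cost. The sketch below shows that grouping; the teams, model names, and dollar amounts are invented sample data, chosen so that inference comes out to 65% of the total and mirrors the split described above.

```python
from collections import defaultdict
from typing import Dict, List

# Invented usage records; a real platform would export these from billing data.
usage = [
    {"team": "risk", "model": "fraud-v3", "workload": "inference", "cost_usd": 400.0},
    {"team": "risk", "model": "fraud-v3", "workload": "training", "cost_usd": 200.0},
    {"team": "growth", "model": "churn-v1", "workload": "inference", "cost_usd": 250.0},
    {"team": "growth", "model": "churn-v1", "workload": "training", "cost_usd": 150.0},
]


def cost_by(records: List[dict], tag: str) -> Dict[str, float]:
    # Sum cost per distinct value of the chosen tag.
    totals: Dict[str, float] = defaultdict(float)
    for record in records:
        totals[record[tag]] += record["cost_usd"]
    return dict(totals)


by_workload = cost_by(usage, "workload")
by_team = cost_by(usage, "team")
inference_share = by_workload["inference"] / sum(by_workload.values())
```

The same function answers "which team spends the most" and "how much goes to inference" just by changing the tag, which is why consistent tagging matters more than the reporting tool.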

Look for features like automated anomaly detection to flag unexpected spending spikes. AI-driven orchestration can reduce infrastructure costs by up to 40% and cut deployment times by 30–50%. Tools such as adaptive batching, dynamic GPU allocation, and model compression can significantly lower compute demands and save money.
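Spending-spike detection can be approximated with a trailing-window z-score: compare each day's spend with the mean and standard deviation of the preceding days. This is only one simple technique, not necessarily what any given platform uses, and the 7-day window, the threshold of 3, and the sample figures are assumptions.

```python
from statistics import mean, stdev
from typing import List


def spend_anomalies(
    daily_spend: List[float], window: int = 7, z_threshold: float = 3.0
) -> List[int]:
    """Return indexes of days whose spend is far above the trailing window."""
    flagged = []
    for i in range(window, len(daily_spend)):
        history = daily_spend[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # Only upward spikes are flagged; a flat history (sigma == 0) is skipped.
        if sigma > 0 and (daily_spend[i] - mu) / sigma > z_threshold:
            flagged.append(i)
    return flagged


# Invented daily spend in USD with one obvious spike on day index 8.
spend = [100, 104, 98, 101, 99, 103, 100, 102, 310, 101]
flagged = spend_anomalies(spend)
```

Note that the spike inflates the window for the following days, so a production detector would typically exclude flagged days from the history or use a more robust statistic such as the median.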

Pay-as-you-go pricing models offer flexibility, but they require careful management. A good platform should provide clear insights into unit economics, automate resource scaling to avoid idle time, and allow hardware to be tailored to workload complexity. For example, use high-performance H100 GPUs for complex tasks, while switching to L40S or A100 GPUs for simpler processes to avoid overspending.

While performance and cost management are critical, security and governance are equally important to protect your AI investments.

Security is a top concern, with 67% of organizations reporting AI deployment delays due to security challenges. A reliable platform must include encryption both at rest and in transit, Virtual Private Cloud (VPC) perimeters, and role-based access control (RBAC) based on the principle of least privilege to safeguard sensitive data and model assets.
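Least-privilege RBAC can be sketched as a mapping from roles to explicitly granted actions, with everything else denied by default. The role names and action strings below are hypothetical and only illustrate the pattern.

```python
from typing import Dict, Set

# Hypothetical roles and actions; each role lists only what it needs.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "viewer": {"model:read"},
    "data_scientist": {"model:read", "model:invoke", "experiment:write"},
    "ml_admin": {
        "model:read",
        "model:invoke",
        "experiment:write",
        "model:deploy",
        "policy:edit",
    },
}


def is_allowed(role: str, action: str) -> bool:
    # Deny by default: unknown roles and unlisted actions are rejected.
    return action in ROLE_PERMISSIONS.get(role, set())


can_invoke = is_allowed("data_scientist", "model:invoke")
can_deploy = is_allowed("data_scientist", "model:deploy")
unknown_role = is_allowed("contractor", "model:read")
```

The important design choice is the default: access is granted only when a role explicitly lists the action, so a missing entry fails closed rather than open.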

Comprehensive audit logs are essential for tracking user actions, model interactions, and administrative changes. In 2024, U.S. federal agencies introduced 59 AI-related regulations - more than double the number from 2023. Platforms that lack robust logging and compliance features can quickly become liabilities.
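One way to make an audit trail tamper-evident is to chain entries by hash, so that editing any earlier record invalidates everything after it. The sketch below assumes a simple in-memory list and invented field names; it illustrates the technique rather than any platform's actual log format.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Dict, List

GENESIS = "0" * 64  # placeholder hash for the first entry


def _digest(body: Dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_event(log: List[Dict], actor: str, action: str, resource: str) -> Dict:
    body = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "actor": actor,
        "action": action,
        "resource": resource,
        "prev_hash": log[-1]["hash"] if log else GENESIS,
    }
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify_chain(log: List[Dict]) -> bool:
    prev = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        # Each entry must point at its predecessor and match its own digest.
        if entry["prev_hash"] != prev or _digest(body) != entry["hash"]:
            return False
        prev = entry["hash"]
    return True
```

For example, appending two events and then calling `verify_chain` returns `True`, while changing the `actor` of the first event afterwards makes it return `False`.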

Model governance is another critical area. Features like lineage tracking, provenance verification, and versioning ensure reproducibility and accountability. Security teams using AI have reported resolving breaches as much as 80 days faster and cutting breach costs by $1.9 million. Automated vulnerability scanning of open-source code can cut security incidents by up to 60%, highlighting the importance of securing the software supply chain. Platforms offering curated open-source libraries and continuous monitoring of dependencies provide an additional layer of protection.
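Versioning for reproducibility is often built on content hashing: derive the version identifier from the model artifact, the training data fingerprint, and the hyperparameters, so that any change yields a new ID. The function and field names below are hypothetical, and the 12-character truncation is an arbitrary choice for readability.

```python
import hashlib
import json


def version_id(artifact: bytes, dataset_digest: str, params: dict) -> str:
    """Derive a short, deterministic ID from everything that defines a model."""
    h = hashlib.sha256()
    h.update(artifact)                                    # serialized weights
    h.update(dataset_digest.encode())                     # fingerprint of training data
    h.update(json.dumps(params, sort_keys=True).encode())  # hyperparameters
    return h.hexdigest()[:12]


v1 = version_id(b"weights", "data-2024-06", {"lr": 0.01, "epochs": 5})
v1_again = version_id(b"weights", "data-2024-06", {"epochs": 5, "lr": 0.01})
v2 = version_id(b"weights", "data-2024-06", {"lr": 0.02, "epochs": 5})
```

Sorting the parameter keys before hashing means the same configuration always maps to the same ID regardless of dictionary order, while any change to weights, data, or parameters produces a different one.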

Prompts.ai provides a streamlined solution for data science teams, addressing challenges like tool sprawl, hidden costs, and governance issues. By consolidating model access, ensuring compliance, and offering real-time cost tracking, the platform simplifies AI workflows through a single, unified interface. This eliminates the hassle of juggling multiple vendor contracts and APIs while giving users the flexibility to select the best model for each task.

Built with enterprise needs in mind, Prompts.ai prioritizes security and governance. Features such as SOC 2 Type II compliance, role-based access controls, and detailed audit logs ensure every prompt and model interaction is tracked. The platform supports workflows that meet HIPAA and GDPR requirements while maintaining performance and speed. For industries like finance and healthcare, on-premises deployment options provide the necessary control over sensitive data.

Prompts.ai’s practical design stands out. Teams can authenticate via SSO, search for models using cost and performance metrics like latency, and build workflows with user-friendly drag-and-drop tools that include A/B testing capabilities. Performance tracking is displayed on U.S.-formatted dashboards, showing metrics such as accuracy, speed, and costs (e.g., $1,234.56), making it easier to refine workflows without requiring custom code.

Prompts.ai brings together over 35 large language models (LLMs) from providers like OpenAI, Anthropic, Google, Meta, and specialized vision models. Users can filter models based on latency, cost per million tokens, or task type, enabling seamless switching between options. For instance, Claude can be used for complex reasoning tasks, while Llama is ideal for cost-sensitive fine-tuning - all without the need to manage separate API keys or integration efforts.
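Filtering a model catalog by task, latency, and price can be pictured as a simple query over model metadata. The sketch below is not the Prompts.ai API; the catalog entries, their latency and price figures, and the `pick` function are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ModelInfo:
    name: str
    task: str
    p50_latency_ms: int
    usd_per_million_tokens: float


# Placeholder catalog; names and numbers are invented for illustration.
CATALOG = [
    ModelInfo("model-a", "reasoning", 1200, 15.00),
    ModelInfo("model-b", "reasoning", 700, 3.00),
    ModelInfo("model-c", "summarization", 300, 0.50),
    ModelInfo("model-d", "reasoning", 450, 6.00),
]


def pick(
    catalog: List[ModelInfo],
    task: str,
    max_latency_ms: int,
    max_usd_per_million: float,
) -> List[ModelInfo]:
    candidates = [
        m
        for m in catalog
        if m.task == task
        and m.p50_latency_ms <= max_latency_ms
        and m.usd_per_million_tokens <= max_usd_per_million
    ]
    # Cheapest first, so the caller can take the head of the list.
    return sorted(candidates, key=lambda m: m.usd_per_million_tokens)


shortlist = pick(CATALOG, "reasoning", max_latency_ms=800, max_usd_per_million=10.0)
```

Here the shortlist contains `model-b` followed by `model-d`: both meet the latency and price limits, and the cheaper one is ranked first.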

This centralized access reduces experimentation time by as much as 50% compared to disjointed setups. The platform also ensures compatibility with frameworks like PyTorch, TensorFlow, and Hugging Face, allowing data scientists to focus on selecting the right models instead of worrying about infrastructure.

Prompts.ai doesn’t just simplify workflows - it also delivers major cost reductions. By leveraging advanced routing and scaling mechanisms, inference costs can drop by as much as 98%. For example, a mid-sized fintech team reduced their monthly AI expenses from $15,000.00 to just $300.00 by routing 90% of queries to fine-tuned open-source Llama models, all while maintaining 95% accuracy.
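The fintech figures can be checked with simple arithmetic. The sketch below assumes, purely for illustration, that every query costs the same on the original model and that monthly volume is unchanged; neither assumption comes from the example itself.

```python
def pct_reduction(before: float, after: float) -> float:
    # Percentage saved when a bill drops from `before` to `after`.
    return 100 * (before - after) / before


def cost_after_routing(
    baseline: float, routed_pct: int, cheap_cost_ratio: float
) -> float:
    """Monthly cost if routed_pct% of traffic moves to a model that costs
    cheap_cost_ratio times the original per-query price."""
    kept_pct = 100 - routed_pct
    return baseline * (kept_pct + routed_pct * cheap_cost_ratio) / 100


reduction = pct_reduction(15_000.00, 300.00)
# Best case for routing alone: the routed 90% of queries cost nothing.
routing_floor = cost_after_routing(15_000.00, routed_pct=90, cheap_cost_ratio=0.0)
```

The drop from $15,000.00 to $300.00 is indeed a 98% reduction. Under the stated assumptions, however, routing 90% of traffic still leaves at least $1,500.00 on the original model, so reaching $300.00 would also depend on savings the example does not itemize, such as cheaper handling of the remaining 10% of queries or lower total volume.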

The platform’s real-time dashboards track token usage and spending, while FinOps alerts notify teams when budgets near their limits. This visibility helps teams make adjustments before costs escalate.
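A budget alert of this kind reduces to checking month-to-date spend against a few percentage thresholds. The sketch below is a generic illustration; the 50/80/100 thresholds and the dollar amounts are assumptions, not documented platform defaults.

```python
from typing import Optional, Sequence


def budget_alert(
    spent_usd: float, budget_usd: float, thresholds: Sequence[int] = (50, 80, 100)
) -> Optional[int]:
    """Return the highest threshold (in percent) that spend has crossed, if any."""
    used_pct = 100 * spent_usd / budget_usd
    crossed = [t for t in thresholds if used_pct >= t]
    return max(crossed) if crossed else None


level = budget_alert(spent_usd=1_234.56, budget_usd=1_500.00)
message = f"${1_234.56:,.2f} spent, alert level: {level}%"
```

With $1,234.56 spent against a $1,500.00 budget, usage is about 82%, so the 80% alert fires and the team has room to adjust before the limit is reached.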

Prompts.ai includes a robust library of tools to accelerate project timelines. It offers over 100 pre-built workflows for tasks like outlier detection, synthetic data generation, RAG pipelines, and A/B testing. Additionally, its collection of 500+ vetted prompt templates, tailored for data science, allows teams to go from setup to execution in hours instead of days.

These workflows integrate LLMs with tools like Pandas for data preparation, scikit-learn for validation, and Streamlit for dashboards, enabling smooth end-to-end pipelines. Teams can also export workflows to PyTorch or TensorFlow for custom scaling, ensuring compatibility across the tech stack. To further support users, Prompts.ai provides pre-built Jupyter-style notebooks and a Prompt Engineer Certification program, helping teams onboard quickly and adopt standardized best practices.

Integrating Prompts.ai into your data science operations can be done without overhauling your existing systems. The platform is designed to work seamlessly with tools you may already be using, such as PyTorch, TensorFlow, Hugging Face, or cloud services like AWS and Azure. Start by connecting your data warehouses, feature stores, and deployment environments. This setup not only simplifies your processes but also strengthens the cost management and governance tools previously mentioned. To measure the platform's impact, record baseline metrics like model development time, deployment frequency, and infrastructure expenses. From there, focus on selecting models, creating standardized workflows, and ensuring compliance throughout the integration process.

The setup begins with secure single sign-on (SSO), allowing your team to access the platform using existing credentials. With Prompts.ai's powerful search feature, you can explore over 35 models filtered by criteria like latency, cost, and task type. For simpler tasks such as data preprocessing or exploratory analysis, a high-speed, cost-effective model like Llama could be a good starting point. On the other hand, for more advanced tasks like feature engineering or anomaly detection, models such as Claude or GPT-5 offer greater precision. Be sure to document your model selection process to align with governance requirements.

After selecting your models, focus on creating standardized workflows to improve efficiency and ensure compliance. Standardization helps avoid redundant tasks and integrates compliance checks right from the start. Prompts.ai offers pre-built workflow templates for common processes such as data validation, feature engineering, and model monitoring. These templates, which cover tasks like outlier detection, synthetic data generation, and retrieval-augmented generation (RAG) pipelines, provide a solid starting point. You can further customize these templates to include logic specific to your domain, preserving organizational expertise while speeding up production by automating repetitive tasks.

Prompts.ai's security and FinOps tools make it easy to maintain compliance and monitor performance. Enable comprehensive audit logs to track who accesses models, when changes are made, and what data is processed. This is especially critical in regulated industries like finance, healthcare, and government. Use role-based access controls to restrict sensitive data and model modifications to authorized personnel only. The platform also provides U.S.-formatted dashboards that display real-time metrics such as accuracy, speed, and costs (e.g., $1,234.56), helping you monitor performance and identify issues like drift. Set up FinOps alerts to notify your team when budgets approach their limits, ensuring timely adjustments. Finally, establish a regular review schedule to evaluate progress against goals like deployment frequency, infrastructure costs, and model consistency.

In 2026, data science teams are grappling with tool sprawl, which isolates engineers and analysts, hidden costs that stretch budgets, and compliance issues that jeopardize regulated projects. A robust AI platform addresses these challenges by consolidating multiple models into a single interface, embedding cost management tools, and automating governance processes. With standardized workflows, pre-built templates, and real-time budget tracking, teams can reduce friction and move swiftly from experimentation to production - delivering measurable operational improvements.

Prompts.ai stands out by offering three clear advantages: unified access to models, eliminating vendor lock-in; up to 98% cost savings through optimized usage and FinOps alerts; and integrated compliance tracking. These benefits translate directly into faster project rollouts, reduced infrastructure costs, and less time spent on manual coordination.

This shift from fragmented tools to a centralized orchestration platform reflects broader trends in 2026. As workflows evolve, unified platforms become the backbone of next-generation AI solutions. Features like real-time inference, retrieval-augmented generation, and multi-cloud deployments require scalable, high-performance systems. Platforms that combine data lakes, ML lifecycle management, and model monitoring foster seamless collaboration across teams. This shared, secure environment empowers business users, data scientists, and engineers alike, driving enterprise-wide AI adoption forward.

Migrating your data analysis and modeling workflows is a smart first step. A unified AI platform brings all your tools for data manipulation, model development, and deployment into one place. This simplifies your processes, reduces complexity, and boosts overall efficiency.

To get a handle on ROI and keep inference costs in check, pay close attention to factors like model size, input/output length, latency, and tokenization. You can cut costs significantly by applying methods such as prompt engineering, batching, caching, and fine-tuning your infrastructure. Stay proactive by forecasting expenses regularly, examining workloads, and using strategies like load balancing, semantic caching, and usage-based routing to keep costs under control.
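Two of these levers are easy to sketch: estimating per-request cost from token counts, and avoiding repeat calls with a cache. The prices below are placeholders, and the cache shown is a plain exact-match cache on normalized prompts (a simpler stand-in for semantic caching, which would compare embeddings instead).

```python
from typing import Callable, Dict


def request_cost_usd(
    input_tokens: int,
    output_tokens: int,
    usd_per_m_input: float,
    usd_per_m_output: float,
) -> float:
    # Input and output tokens are usually priced separately, per million tokens.
    total = input_tokens * usd_per_m_input + output_tokens * usd_per_m_output
    return total / 1_000_000


class CachedClient:
    """Wraps any prompt -> text function and skips repeat calls."""

    def __init__(self, call_model: Callable[[str], str]) -> None:
        self._call_model = call_model
        self._cache: Dict[str, str] = {}
        self.misses = 0

    def complete(self, prompt: str) -> str:
        # Normalize case and whitespace so trivial variations share an entry.
        key = " ".join(prompt.lower().split())
        if key not in self._cache:
            self.misses += 1
            self._cache[key] = self._call_model(prompt)
        return self._cache[key]


cost = request_cost_usd(2_000, 500, usd_per_m_input=3.0, usd_per_m_output=15.0)
client = CachedClient(lambda p: f"echo: {p}")  # stand-in for a real model call
client.complete("Summarize Q3  revenue")
client.complete("summarize q3 revenue")
```

In this run the second prompt is served from the cache, so only one billable call is made; multiplying the per-request cost by the number of misses gives a quick forecast of spend.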

To address HIPAA requirements, governance features should include strong safeguards for Protected Health Information (PHI). This involves compliance with the Security Rule, ensuring secure handling of sensitive data. Additionally, practices like thorough documentation, continuous monitoring, and rigorous vendor evaluations are essential to maintain adherence.

For GDPR, the focus should be on managing data privacy effectively. This includes ensuring transparency in data usage, adhering to ethical standards, and complying with regulations on data security and user rights. Tools for robust monitoring, auditing, and fostering collaboration are critical to support these efforts.