Testing and refining AI prompts is critical for achieving high-quality outputs. Whether you're optimizing for accuracy, creativity, or cost efficiency, the right platform can streamline workflows and improve results. This article reviews four tools for prompt testing and comparison: Prompts.ai, OpenAI Playground, MidJourney, and PromptPerfect.

Each platform caters to different needs, from enterprise AI orchestration to creative image generation. Below is a quick comparison to help you choose the right tool.

| Platform | Multi-Model Support | Cost Controls | Team Collaboration | Scalability | Security Features |
|---|---|---|---|---|---|
| Prompts.ai | 35+ models | TOKN credits, usage analytics | Shared workflows | Enterprise-ready | Encrypted keys (BYOK) |
| OpenAI Playground | OpenAI models only | Per-token pricing, caching | Project-level organization | Tailored for OpenAI tasks | Standard cloud-based |
| MidJourney | MidJourney models only | Subscription tiers | Discord-based collaboration | Optimized for images | Stealth mode (Pro/Mega) |
| PromptPerfect | Text & image models | Freemium, token optimization | Hosted prompt deployment | Automated scaling | Standard cloud-based |

AI Prompt Testing Platforms Comparison: Features, Pricing, and Capabilities

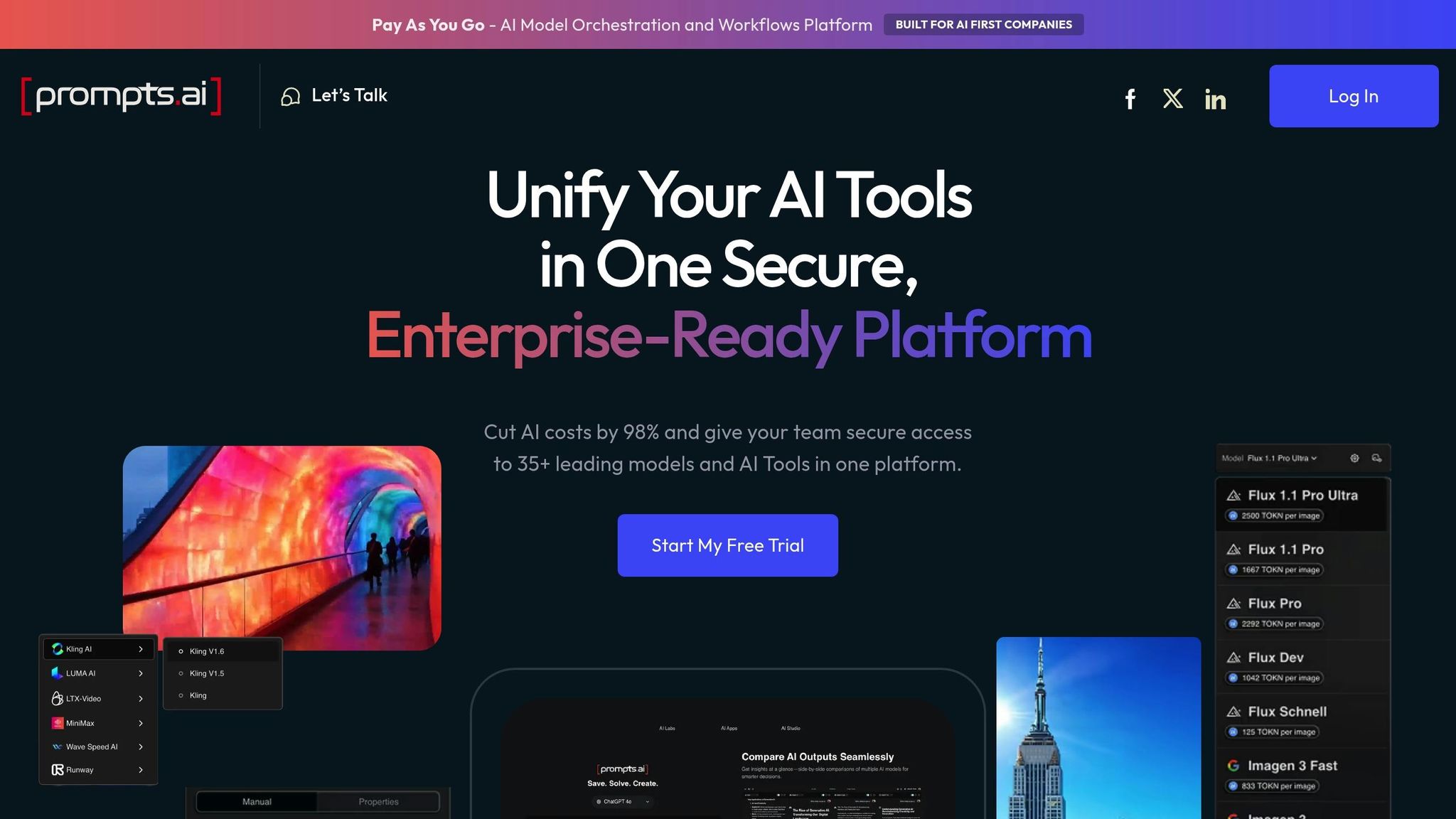

Prompts.ai is an enterprise AI orchestration platform that brings together over 35 top-tier language models, including GPT-5, Claude, LLaMA, Gemini, and Grok-4, in a single interface. This consolidation simplifies subscription management and streamlines workflows for organizations ranging from Fortune 500 companies to creative agencies and research institutions. The platform’s core features focus on model comparison, cost efficiency, collaboration, and security.

One of Prompts.ai’s standout features is its ability to compare multiple models side by side. Instead of juggling separate tools, users can evaluate how GPT-5 handles a technical query versus Claude’s approach, all within the same interface. This parallel view makes it easier to pinpoint which model delivers the best results - whether in accuracy, creativity, or contextual relevance. Beyond text generation, the platform also supports visual AI tools like Flux Pro and Kling, offering flexibility to choose the right tool for any task.
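The side-by-side view is a UI feature, but the pattern underneath it is simple: send one prompt to several models and inspect the outputs together. The Python sketch below assumes each model is wrapped as a plain function from prompt to text; the model names, stub functions, and length-based scorer are hypothetical placeholders, not part of Prompts.ai's API.

```python
from typing import Callable, Dict

# A model is represented as any function that maps a prompt to generated text.
# Real code would wrap a provider SDK call here; these stand-ins are assumptions.
ModelFn = Callable[[str], str]


def compare_side_by_side(prompt: str, models: Dict[str, ModelFn]) -> Dict[str, str]:
    """Send the same prompt to every model and collect outputs keyed by model name."""
    return {name: generate(prompt) for name, generate in models.items()}


def best_model(outputs: Dict[str, str], score: Callable[[str], float]) -> str:
    """Return the name of the model whose output gets the highest score."""
    return max(outputs, key=lambda name: score(outputs[name]))


if __name__ == "__main__":
    # Offline stand-ins so the sketch runs without API keys.
    stub_models: Dict[str, ModelFn] = {
        "model-a": lambda p: f"Short answer to: {p}",
        "model-b": lambda p: f"A longer, more detailed answer to: {p}",
    }
    outputs = compare_side_by_side("Explain the TCP handshake.", stub_models)
    width = max(len(name) for name in outputs)
    for name, text in outputs.items():
        print(f"{name.ljust(width)} | {text}")
    # Toy scorer: prefer longer outputs. Replace with a real rubric or eval.
    print("Preferred:", best_model(outputs, score=len))
```

In practice the scorer is where most of the judgment lives; output length is only a placeholder for accuracy, creativity, or relevance checks.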

Prompts.ai offers real-time tracking of token usage, giving users a clear picture of their AI-related expenses. Its pay-as-you-go TOKN credit system eliminates the need for recurring monthly fees, potentially cutting AI costs by up to 98% compared to managing individual subscriptions. Users can set spending limits, monitor usage across teams or projects, and directly link costs to business goals, turning AI spending into a transparent and manageable budget item.
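Spending limits like these boil down to a running tally per team that refuses any charge pushing the total past its cap. The sketch below is a hypothetical local illustration, not Prompts.ai's TOKN implementation; the team names, limits, and per-million-token price are made-up inputs.

```python
from dataclasses import dataclass, field
from typing import Dict


class BudgetExceeded(Exception):
    """Raised when a charge would push a team past its spending limit."""


@dataclass
class BudgetTracker:
    limits_usd: Dict[str, float]  # per-team caps (hypothetical values)
    spent_usd: Dict[str, float] = field(default_factory=dict)

    def charge(self, team: str, tokens: int, usd_per_million: float) -> float:
        """Record the cost of `tokens` for `team`, or raise if it would break the cap."""
        cost = tokens / 1_000_000 * usd_per_million
        spent = self.spent_usd.get(team, 0.0)
        if spent + cost > self.limits_usd[team]:
            raise BudgetExceeded(f"{team} would exceed its ${self.limits_usd[team]:.2f} limit")
        self.spent_usd[team] = spent + cost
        return cost

    def remaining(self, team: str) -> float:
        """Budget left for `team` in USD."""
        return self.limits_usd[team] - self.spent_usd.get(team, 0.0)


if __name__ == "__main__":
    tracker = BudgetTracker(limits_usd={"research": 5.00, "marketing": 1.00})
    tracker.charge("research", 400_000, usd_per_million=1.75)
    print(f"research remaining: ${tracker.remaining('research'):.2f}")
    try:
        tracker.charge("marketing", 1_000_000, usd_per_million=1.75)
    except BudgetExceeded as err:
        print("Blocked:", err)
```

The check runs before the charge is recorded, so a rejected request leaves the team's tally unchanged.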

The platform promotes consistency through shared libraries and pre-designed workflows, reducing the need to start from scratch on every project. Organizations can benefit from a Prompt Engineer Certification program, which helps identify and train in-house experts. Additionally, a global community of users shares best practices and techniques. Role-Based Access Control (RBAC) ensures that junior team members can explore and experiment while senior engineers oversee operations and maintain compliance.

For businesses dealing with sensitive information, Prompts.ai provides enterprise-level security. It complies with SOC 2 Type II standards and supports on-premises, self-hosted, or air-gapped deployments. Data is protected with advanced encryption both at rest and in transit, meeting the stringent requirements of industries like finance, healthcare, and legal services.

The OpenAI Playground serves as a web-based testing platform for experimenting with OpenAI's latest models, including GPT-5.2, GPT-4.1, and the reasoning-focused o4-mini. A standout feature is its side-by-side comparison tool, which allows users to simultaneously view outputs from two different prompt versions. This makes it easier to decide which configuration works best before deploying prompts in production. Additionally, the automated "Optimize" tool reviews prompts for inconsistencies, unclear instructions, or missing output formats and offers a refined version with just one click. These features provide a strong foundation for effective prompt testing.

The Playground simplifies switching between models within a single interface. Each model offers distinct capabilities: GPT-5.2 comes with a 400,000-token context window and excels at coding tasks, while GPT-4.1 provides an even larger context window of 1,047,576 tokens. On the other hand, o4-mini delivers quick reasoning capabilities at a lower cost - $1.10 per 1 million input tokens compared to GPT-5.2's $1.75. The platform also supports variable-based prompts (e.g., {user_goal}), allowing users to separate static instructions from dynamic inputs. This enables efficient testing across various scenarios without needing to rewrite entire prompts.
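Separating static instructions from variables works the same way outside the Playground. Python's built-in `str.format` uses the same `{name}` placeholder syntax, so a minimal local sketch looks like this; the template wording and test cases are hypothetical. Literal braces in the instructions (for example, a JSON schema) must be doubled as `{{` and `}}` when using `str.format`.

```python
# Static instructions stay fixed; only the {placeholders} change between runs.
PROMPT_TEMPLATE = (
    "You are a concise technical assistant.\n"
    "Goal: {user_goal}\n"
    "Answer in at most {max_bullets} bullet points."
)


def render(template: str, **variables: object) -> str:
    """Fill in the template; str.format raises KeyError if a variable is missing."""
    return template.format(**variables)


# Hypothetical scenarios to test the same instructions against.
TEST_CASES = [
    {"user_goal": "summarize a bug report", "max_bullets": 3},
    {"user_goal": "draft release notes for v2.1", "max_bullets": 5},
]

if __name__ == "__main__":
    for case in TEST_CASES:
        print(render(PROMPT_TEMPLATE, **case))
        print("---")
```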

To help manage expenses, the platform includes real-time logs and prompt caching. For GPT-5.2, cached inputs cost just $0.18 per 1 million tokens, compared to $1.75 for standard inputs. For initial testing, users can start with lower-cost models like GPT-5-mini at $0.25 per 1 million input tokens before moving to more advanced models. The Playground also offers truncation controls that automatically drop older messages once a specified threshold is reached, preventing unexpected costs from long conversation histories. These cost-saving measures are complemented by tools that improve team efficiency.
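The savings from caching are easiest to see with a little arithmetic. The Python sketch below uses the per-million-token input prices quoted in this article (check OpenAI's current pricing before relying on them) and models input cost only, since output prices are not covered here. The `truncate_history` helper is a hypothetical local analogue of the Playground's truncation setting, not its actual algorithm: it drops the oldest non-system messages until an approximate token count fits, using a word count as a stand-in for a real tokenizer.

```python
from typing import Callable, Dict, List

# USD per 1M input tokens, as quoted in this article (subject to change).
INPUT_PRICE = {"gpt-5.2": 1.75, "gpt-5-mini": 0.25, "o4-mini": 1.10}
CACHED_INPUT_PRICE = {"gpt-5.2": 0.18}


def input_cost(model: str, input_tokens: int, cached_tokens: int = 0) -> float:
    """Input cost in USD; cached tokens are billed at the cached rate when known."""
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    full_rate = INPUT_PRICE[model]
    # Conservative fallback: bill cached tokens at the full rate if no cached price is listed.
    cached_rate = CACHED_INPUT_PRICE.get(model, full_rate)
    uncached = input_tokens - cached_tokens
    return (uncached * full_rate + cached_tokens * cached_rate) / 1_000_000


Message = Dict[str, str]


def truncate_history(
    messages: List[Message],
    max_tokens: int,
    count_tokens: Callable[[str], int] = lambda text: len(text.split()),
) -> List[Message]:
    """Drop the oldest non-system messages until the history fits max_tokens."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]

    def total(msgs: List[Message]) -> int:
        return sum(count_tokens(m["content"]) for m in msgs)

    while rest and total(system + rest) > max_tokens:
        rest.pop(0)  # the oldest conversational turn goes first
    return system + rest


if __name__ == "__main__":
    # A 100k-token prompt where 80k tokens hit the cache vs. no caching at all.
    print(f"cached:   ${input_cost('gpt-5.2', 100_000, cached_tokens=80_000):.4f}")
    print(f"uncached: ${input_cost('gpt-5.2', 100_000):.4f}")
```

With 80% of a 100,000-token prompt cached, the input bill drops from about $0.175 to about $0.049 at the quoted rates.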

Prompts are now organized at the project level, making it easier for teams to share and manage them using structured folders. Version control is built in, allowing for one-click rollbacks to earlier iterations if a new version underperforms. The platform also integrates "Evals", which link directly to prompts, pre-fill variables, and display pass/fail results on the prompt detail page. This helps teams identify and address potential issues before deployment.
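One-click rollback implies that every saved version is kept and only the "active" pointer moves. A minimal in-memory sketch of that idea follows; `PromptHistory` is a hypothetical class for illustration, not OpenAI's prompt object or API.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PromptHistory:
    """Hypothetical in-memory prompt store: every version is kept, one is active."""
    versions: List[str] = field(default_factory=list)
    active_index: int = -1  # -1 means nothing has been published yet

    def publish(self, text: str) -> int:
        """Save a new version, make it active, and return its 1-based number."""
        self.versions.append(text)
        self.active_index = len(self.versions) - 1
        return len(self.versions)

    def rollback(self, version: int) -> str:
        """Re-activate an earlier version without deleting newer ones."""
        if not 1 <= version <= len(self.versions):
            raise ValueError(f"unknown version {version}")
        self.active_index = version - 1
        return self.versions[self.active_index]

    @property
    def active(self) -> str:
        if self.active_index < 0:
            raise LookupError("no version has been published")
        return self.versions[self.active_index]


if __name__ == "__main__":
    history = PromptHistory()
    history.publish("Summarize the ticket in one sentence.")
    history.publish("Summarize the ticket in three bullet points.")
    # Suppose evals show v2 underperforms: roll back to v1.
    history.rollback(1)
    print("Active prompt:", history.active)
```

Keeping newer versions after a rollback means a fixed v2 can be re-activated later without being rewritten.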

Designed to handle high-demand workflows, the platform offers a tiered rate limit system that adjusts to usage needs. For example, Tier 5 users can process up to 40,000,000 tokens per minute with GPT-5.2 and up to 150,000,000 tokens per minute with o4-mini, making it suitable for large-scale testing. To further optimize costs for non-urgent or bulk tasks, OpenAI provides "Batch" and "Flex" processing options, ensuring the platform remains adaptable to various workloads.
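Large batch tests usually pace themselves against a tokens-per-minute (TPM) limit instead of waiting for rate-limit errors. The sketch below is a simple client-side sliding-window limiter. OpenAI enforces its limits on the server, so this only keeps a hypothetical batch job under a TPM budget you choose (for example, one of the Tier 5 figures above). The injectable `clock` parameter is an assumption added so the logic can be tested without sleeping.

```python
import time
from collections import deque
from typing import Callable, Deque, Tuple


class TokenRateLimiter:
    """Client-side sliding-window limiter for a tokens-per-minute budget."""

    def __init__(self, tokens_per_minute: int, clock: Callable[[], float] = time.monotonic):
        self.limit = tokens_per_minute
        self.clock = clock
        self.window: Deque[Tuple[float, int]] = deque()  # (timestamp, tokens) in the last 60 s
        self.used = 0

    def _evict(self, now: float) -> None:
        # Forget requests that are 60 seconds old or older.
        while self.window and now - self.window[0][0] >= 60:
            _, tokens = self.window.popleft()
            self.used -= tokens

    def try_acquire(self, tokens: int) -> bool:
        """Reserve `tokens` if they fit in the current window; otherwise return False."""
        now = self.clock()
        self._evict(now)
        if self.used + tokens > self.limit:
            return False
        self.window.append((now, tokens))
        self.used += tokens
        return True

    def acquire(self, tokens: int, poll_seconds: float = 0.5) -> None:
        """Block until `tokens` fit. A single request larger than the limit never fits."""
        if tokens > self.limit:
            raise ValueError("request exceeds the per-minute token limit")
        while not self.try_acquire(tokens):
            time.sleep(poll_seconds)


if __name__ == "__main__":
    limiter = TokenRateLimiter(tokens_per_minute=40_000_000)
    for request_tokens in [3_000, 5_000, 2_500]:
        limiter.acquire(request_tokens)
        # ... send the request here ...
    print("tokens used in current window:", limiter.used)
```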

MidJourney takes a collaborative approach to real-time image generation, making it easier for teams to refine and test prompts effectively. Operating through Discord, the platform allows users to invite the MidJourney Bot to private servers, enabling real-time results and immediate prompt adjustments. With its Remix Mode, users can tweak text and parameters - such as aspect ratios or stylization - while generating variations. This feature helps users experiment with minor changes without needing to start from scratch.

MidJourney supports multiple model versions, allowing users to switch between them by adding parameters like --v 6 or --v 7 to prompts. Since June 2025, MidJourney V7 has been the default model, though older versions remain accessible for comparison. The seed control feature ensures consistency across tests by allowing users to reuse specific seeds, making it easier to analyze how different word choices influence the same base composition. However, keep in mind that some parameters, like maximum stylize values, may not work uniformly across all versions. This flexibility makes it easier to refine prompts and achieve creative goals.
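Seed-controlled experiments stay consistent more easily when the parameter string is generated rather than typed by hand. The helper below is a hypothetical Python sketch that only builds text to paste after `/imagine` in Discord; it does not call MidJourney. It appends the `--v`, `--ar`, `--stylize`, and `--seed` parameters, and the subject text and seed value are arbitrary examples.

```python
from typing import List, Optional


def mj_prompt(
    text: str,
    version: int = 7,
    seed: Optional[int] = None,
    aspect: Optional[str] = None,
    stylize: Optional[int] = None,
) -> str:
    """Assemble a MidJourney prompt string with optional parameters appended."""
    parts: List[str] = [text.strip(), f"--v {version}"]
    if aspect is not None:
        parts.append(f"--ar {aspect}")
    if stylize is not None:
        parts.append(f"--stylize {stylize}")
    if seed is not None:
        parts.append(f"--seed {seed}")
    return " ".join(parts)


if __name__ == "__main__":
    # Same seed, one changed word: differences in output come from the wording.
    base = "a lighthouse on a cliff at {time}, oil painting"
    for time_of_day in ["dawn", "dusk"]:
        print(mj_prompt(base.format(time=time_of_day), seed=1234, aspect="16:9"))
    # Same prompt and seed across model versions for a V6 vs. V7 comparison.
    for v in (6, 7):
        print(mj_prompt("a lighthouse on a cliff at dawn", version=v, seed=1234))
```

Because parameter support differs between versions, it is worth checking each version's documented ranges (such as stylize limits) before reusing one string across models.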

MidJourney offers four subscription tiers: Basic, Standard, Pro, and Mega.

To help manage costs, users can check their remaining GPU time with the /info command, which is especially useful when testing complex prompts.

MidJourney encourages collaboration by providing access to a public gallery where users can explore prompts and settings shared by others. While its Discord-first setup fosters creativity, it may feel less intuitive compared to traditional enterprise tools. For teams handling sensitive projects, the Pro and Mega plans include Stealth Mode, which keeps prompts and generated images private. In contrast, the Basic and Standard plans make all outputs public by default.

Because MidJourney processes all prompts and images on its own servers, privacy is a key consideration. As Mystic Palette's "AI Art Tools Comparison Guide" notes:

> Midjourney pools images publicly by default unless you pay for Pro/Mega.

For those working with proprietary or confidential material, the higher-tier plans are essential, as the Basic and Standard options lack privacy controls for generated content.

PromptPerfect uses reinforcement learning to automate the often tedious process of manual prompt tweaking. This automation complements the systematic testing methods described above and lets users refine prompts for models such as GPT-4, ChatGPT, DALL-E, Stable Diffusion, and MidJourney. Because optimization happens automatically, fewer API tokens are spent on trial-and-error iterations.

The platform works with both text and image generation models, giving users the ability to test and compare how a single prompt performs across various systems. With approximately 106,400 monthly visitors, PromptPerfect has gained traction for its ability to debug and deploy optimized prompts as hosted services. Its multilingual capabilities ensure that prompts are fine-tuned for different languages, with optimization explanations automatically aligning with the language settings of your browser.

PromptPerfect uses a freemium model: prompt hosting is free, which keeps upfront and infrastructure costs low. Automating prompt optimization also reduces both labor and API token costs. And because the platform optimizes prompts for multiple models with different pricing structures, users can pick the most cost-effective model for each task.

The platform’s prompt hosting feature enables users to deploy optimized prompts as stable, hosted services, simplifying the process of scaling prompt-based applications without requiring extra infrastructure management. Integration with the ChatGPT API facilitates quicker optimization for high-volume tasks. Its user-friendly interface ensures that even non-technical users can navigate complex prompt engineering. This blend of automation and deployment capabilities empowers teams to expand their AI workflows efficiently, without a corresponding increase in manual effort. Together, these features make prompt evaluation and deployment a seamless part of the AI development process.

Each platform has its own strengths and limitations, making them suitable for different types of users and workflows:

OpenAI Playground provides precise control over OpenAI's latest models (e.g., GPT-5.2 and o4-mini) but is confined to the OpenAI ecosystem, limiting its flexibility for users who need multi-model support.

MidJourney excels in generating high-quality images but does not support text-based LLM comparisons or integration with external models, which might be a drawback for users with broader AI needs.

PromptPerfect offers multi-model prompt optimization for both text and image outputs. Its freemium pricing model makes it accessible, though subscription costs vary depending on usage, which may require careful budgeting.

Prompts.ai takes a versatile approach with support for nine major providers, including OpenAI, Anthropic, Google, DeepSeek, xAI (Grok), Groq, Mistral AI, Perplexity, and Together AI. Its Bring Your Own Key (BYOK) system ensures users only pay direct provider costs, with no added platform fees. Pricing starts at $0/month for up to 500 API calls. Performance metrics highlight Groq's fast response time of 456 ms and throughput of 464.9 tokens/sec, alongside Anthropic's 5.0/5 quality score.

Security measures also vary across platforms. Some offer In-VPC deployments for enhanced data protection, while BYOK ensures API keys remain encrypted on user devices. Cost management tools, like real-time usage dashboards and evaluation gates, help teams control spending by flagging prompts that exceed token limits before deployment.
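An evaluation gate that flags oversized prompts can run as a pre-deployment check. The sketch below is hypothetical: it estimates tokens with a rough four-characters-per-token heuristic, which is only an approximation for English text. A real gate should use the target model's tokenizer (for OpenAI models, the `tiktoken` library).

```python
import math
from typing import Dict, List


def estimate_tokens(text: str) -> int:
    """Rough token estimate (~4 characters per token); replace with a real tokenizer."""
    return math.ceil(len(text) / 4)


def token_gate(prompts: Dict[str, str], max_tokens: int) -> List[str]:
    """Return the names of prompts whose estimated size exceeds max_tokens."""
    return [name for name, text in prompts.items() if estimate_tokens(text) > max_tokens]


if __name__ == "__main__":
    # Hypothetical prompt library; "onboarding" is deliberately oversized.
    library = {
        "summarize": "Summarize the following support ticket in two sentences.",
        "onboarding": "Explain our product. " * 600,
    }
    flagged = token_gate(library, max_tokens=1_000)
    print("Over limit:", flagged or "none")
```

In a CI pipeline, a non-empty `flagged` list would be the signal to fail the build before the prompt ships.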

| Platform | Multi-Model Support | Cost Controls | Team Collaboration | Scalability | Security Features |
|---|---|---|---|---|---|
| Prompts.ai | 9+ Providers | BYOK + Usage Analytics | Shared Workflows | Enterprise-Grade | Encrypted Keys (BYOK) |
| OpenAI Playground | OpenAI Only | Per-Token Pricing | Project-Level Organization | Tailored for OpenAI Models | Standard Cloud-Based |
| MidJourney | MidJourney Only | Subscription Tiers | Community Sharing | Optimized for Image Generation | Stealth Mode (Pro/Mega) |
| PromptPerfect | Multi-Model (Text/Image) | Freemium + Token Optimization | Hosted Deployment | Automated Scaling | Standard Cloud-Based |

This comparison highlights how the platforms cater to distinct needs, offering varied features to support different workflows and user priorities.

Choosing the right platform depends on your organization's technical demands and structure. Some platforms shine in offering precise control for development, while others focus on delivering exceptional visual content.

Platforms that incorporate automated prompt evaluation deliver clear benefits. For example, systematic prompt management has been reported to raise issue detection from 3 to 30 incidents per day[1]. This proactive approach helps catch quality issues before they reach production.

Enterprise teams gain the most from platforms that offer a balance between adaptability and strong governance, paired with transparent, pay-as-you-go pricing models. This reinforces a key takeaway: prioritizing systematic prompt evaluation leads to smoother operations and improved outcomes.

The best results come when platform features align with team roles and workflows. Visual, no-code tools are ideal for non-technical managers, while engineering teams often prefer command-line interfaces for their efficiency and control, reducing time-to-production by as much as 75%[2]. Starting with free tiers to test usability and scaling based on actual needs - rather than assumptions - ensures teams achieve both value and efficiency.

When selecting a platform, it’s essential to match it with your team’s specific goals and workflows. If your team requires comprehensive prompt management, including features for testing, refining, and collaborating, look for platforms offering lifecycle management and governance tools. For teams with more technical processes, lightweight and scriptable tools or solutions that allow for comparing different AI models might be a better fit. Prioritize platforms that complement your team’s technical expertise, project demands, and collaboration needs to streamline prompt testing and deployment.

The most effective way to evaluate prompt quality across different models is to use platforms built for systematic testing and analysis. Tools such as Prompts.ai let users compare models side by side, monitor costs, and benchmark performance in real time. These platforms often evaluate prompts against a variety of datasets and scoring methods to keep results consistent and objective. Key features to prioritize include regression gates, comprehensive analytics, and version control, which help teams refine prompt outputs efficiently.
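A regression gate compares a candidate prompt against the current one on the same evaluation cases and blocks deployment if quality drops. The sketch below assumes each prompt version is wrapped as a function from input to output and scores outputs with a simple substring check; the case format, stub functions, and `tolerance` parameter are hypothetical, and production gates typically use richer scoring.

```python
from typing import Callable, Dict, List

Generate = Callable[[str], str]  # prompt version + model, wrapped as input -> output
Case = Dict[str, str]            # {"input": ..., "expected": ...} (hypothetical format)


def pass_rate(generate: Generate, cases: List[Case]) -> float:
    """Fraction of cases whose output contains the expected substring."""
    if not cases:
        raise ValueError("need at least one evaluation case")
    passed = sum(case["expected"] in generate(case["input"]) for case in cases)
    return passed / len(cases)


def regression_gate(
    baseline: Generate, candidate: Generate, cases: List[Case], tolerance: float = 0.0
) -> bool:
    """Allow deployment only if the candidate is not worse than baseline minus tolerance."""
    return pass_rate(candidate, cases) >= pass_rate(baseline, cases) - tolerance


if __name__ == "__main__":
    cases = [
        {"input": "capital of France", "expected": "Paris"},
        {"input": "capital of Japan", "expected": "Tokyo"},
    ]
    answers = {"capital of France": "Paris", "capital of Japan": "Tokyo"}
    baseline = lambda q: f"The answer is {answers[q]}."
    candidate = lambda q: "Paris" if "France" in q else "I'm not sure."
    print("baseline:", pass_rate(baseline, cases))
    print("candidate:", pass_rate(candidate, cases))
    print("deploy candidate?", regression_gate(baseline, candidate, cases))
```

A small positive `tolerance` can absorb noise from non-deterministic models, at the cost of letting minor regressions through.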

You can keep token expenses under control by tracking usage in real time and establishing spending limits. Tools offered by platforms like Prompts.ai enable detailed cost tracking and management, ensuring you stick to your budget while fine-tuning and experimenting with prompts.