Generative AI is reshaping businesses, but choosing the right platform is critical. This guide reviews five leading platforms to help you find the best fit for your AI workflows. Whether you want to cut costs, improve governance, or scale operations, here is what you need to know:

Key takeaway: Prompts.ai stands out as the top choice for organizations seeking cost efficiency and streamlined workflows, while IBM watsonx Orchestrate excels in governance-heavy environments. Apache Airflow stands out for its open-source flexibility.

Read on to see how these platforms stack up across integration, governance, scalability, and cost management.

Prompts.ai is an enterprise AI orchestration platform that brings together more than 35 leading large language models (LLMs), including GPT-5, Claude, LLaMA, and Gemini, in a single secure, unified interface. It addresses the common problem of fragmented AI tools by simplifying model integration, enforcing governance, and reducing costs.

With Prompts.ai, organizations can integrate multiple LLMs through APIs and connectors, which makes it possible to switch smoothly between models such as OpenAI GPT, Google Gemini, and Anthropic Claude to suit specific needs. For example, a major U.S. healthcare provider used Prompts.ai to run patient-facing chatbots on multiple LLMs, delivering consistent, reliable interactions across different patient scenarios.
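To make the idea of switching between models concrete, here is a minimal sketch of a provider-agnostic router. It is not the Prompts.ai API: `ModelRouter`, `call_gpt`, `call_gemini`, and `call_claude` are hypothetical names, and the provider functions are stubs standing in for real SDK calls.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


# Hypothetical stand-ins for real provider SDK calls.
# Each takes a prompt string and returns the model's text reply.
def call_gpt(prompt: str) -> str:
    return f"[gpt] {prompt}"


def call_gemini(prompt: str) -> str:
    return f"[gemini] {prompt}"


def call_claude(prompt: str) -> str:
    return f"[claude] {prompt}"


@dataclass
class ModelRouter:
    """Routes a prompt to one of several registered providers."""

    providers: Dict[str, Callable[[str], str]]
    default: str

    def complete(self, prompt: str, model: Optional[str] = None) -> str:
        # Fall back to the default provider when no model is named.
        name = model or self.default
        if name not in self.providers:
            raise KeyError(f"unknown model: {name}")
        return self.providers[name](prompt)


router = ModelRouter(
    providers={"gpt": call_gpt, "gemini": call_gemini, "claude": call_claude},
    default="gpt",
)

# Same prompt, different back ends; the calling code does not change.
triage_reply = router.complete("Summarize the visit notes.")
followup_reply = router.complete("Summarize the visit notes.", model="claude")
```

Because callers depend only on `complete`, swapping or adding a provider is a change to the `providers` mapping rather than to application code.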

The platform's open API design also allows integration with widely used workflow automation tools, enterprise data warehouses, and cloud storage systems. This compatibility means AI capabilities can be embedded into existing technical infrastructure without disruption, which paves the way for measurable cost efficiency.

Prompts.ai helps organizations control costs through detailed usage analytics, real-time FinOps tools, and automated budget tracking. Companies using the platform have cut prompt-related operational costs by up to 30%, with some achieving savings of up to 98% on AI software expenses. The pay-as-you-go TOKN credit system ties costs directly to usage, giving U.S. companies predictable monthly budgets and greater financial transparency. This focus on cost control is complemented by governance features that promote operational consistency.
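As a rough illustration of usage-based budget tracking, the sketch below keeps a per-team ledger of credits and flags when spending crosses an alert threshold. The `CreditLedger` class, the team names, and the credit amounts are all assumptions made for the example; they do not describe how TOKN credits are actually metered.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import DefaultDict


@dataclass
class CreditLedger:
    """Tracks pay-as-you-go credit usage against a monthly budget."""

    monthly_budget: int  # budget expressed in credits
    spent_by_team: DefaultDict[str, int] = field(
        default_factory=lambda: defaultdict(int)
    )

    def record(self, team: str, credits: int) -> None:
        # Usage can only add to spend; reject negative entries.
        if credits < 0:
            raise ValueError("credits must be non-negative")
        self.spent_by_team[team] += credits

    @property
    def total_spent(self) -> int:
        return sum(self.spent_by_team.values())

    def remaining(self) -> int:
        return self.monthly_budget - self.total_spent

    def over_threshold(self, fraction: float = 0.8) -> bool:
        # True once spending reaches the given share of the budget.
        return self.total_spent >= fraction * self.monthly_budget


ledger = CreditLedger(monthly_budget=1000)
ledger.record("support", 300)
ledger.record("research", 550)

print(ledger.total_spent)       # 850
print(ledger.remaining())       # 150
print(ledger.over_threshold())  # True, since 850 >= 800
```

Recording spend per team rather than as a single total is what makes the attribution and transparency described above possible.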

Governance is a cornerstone of Prompts.ai, which offers tools such as role-based access controls, audit logs, customizable policies, and built-in version control with A/B testing. These features help organizations meet regulatory requirements such as HIPAA and CCPA while improving prompt output quality by up to 20% through iterative refinement. The platform's scalable architecture keeps performance stable as demand grows.
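Role-based access control and audit logging usually work together: every permission check is both decided and recorded. The sketch below shows that pattern in its simplest form. The role names, the actions, and the `AccessController` class are hypothetical and are not taken from any platform's documentation.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Set

# Hypothetical role-to-permission mapping for a prompt library.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "viewer": {"read_prompt"},
    "editor": {"read_prompt", "edit_prompt"},
    "admin": {"read_prompt", "edit_prompt", "publish_prompt"},
}


@dataclass
class AccessController:
    """Checks permissions by role and records every decision."""

    audit_log: List[dict] = field(default_factory=list)

    def check(self, user: str, role: str, action: str) -> bool:
        # Unknown roles get an empty permission set, so they are denied.
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        # Denied attempts are logged too; they matter most in an audit.
        self.audit_log.append(
            {
                "time": datetime.now(timezone.utc).isoformat(),
                "user": user,
                "role": role,
                "action": action,
                "allowed": allowed,
            }
        )
        return allowed


controller = AccessController()
can_edit = controller.check("dana", "editor", "edit_prompt")
can_publish = controller.check("dana", "editor", "publish_prompt")
```

Here `can_edit` is `True` and `can_publish` is `False`, and both decisions end up in `controller.audit_log` for later review.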

Built on a cloud-native foundation, Prompts.ai supports horizontal scaling to handle high-throughput API requests and concurrent prompt executions. It is hosted on major U.S. cloud providers such as AWS, Azure, and Google Cloud, where auto-scaling and load balancing maintain consistent performance during peak usage. Enterprise deployments have achieved 99.9% uptime with sub-second prompt execution times. In addition, collaborative workspaces make it easy for teams to add new models and users as they grow, reinforcing Prompts.ai's position as a go-to solution for streamlining generative AI workflows.

No verified information is available on Vellum AI's capabilities in areas such as model integration, governance, or scalability. As a result, those aspects are not evaluated in this analysis. Next, we examine IBM watsonx Orchestrate.

IBM watsonx Orchestrate is an enterprise AI platform built on IBM's hybrid cloud that streamlines business automation and generative AI workflows. It combines advanced technical features with integrated governance to help organizations deploy large-scale AI solutions efficiently. Unlike the more limited Vellum AI, watsonx Orchestrate offers a comprehensive solution tailored to enterprise needs.

The platform supports a variety of large language models, including BERT, Meta Llama 3, GPT-3, GPT-4, and Megatron-LM. This diverse model integration allows for seamless operations and provides the foundation for its governance capabilities.

Governance is a core feature of watsonx Orchestrate, with compliance, security, and ethical AI practices built directly into its infrastructure. By incorporating these measures from the start, the platform supports reliable, secure operations and aligns technical performance with regulatory standards.

Designed for large enterprise projects, watsonx Orchestrate leverages IBM's hybrid cloud to support growth without compromising governance. Its scalability delivers consistent performance as demand increases while maintaining strict governance standards throughout.

Apache Airflow stands out as a powerful open-source platform designed for workflow orchestration, offering a cost-effective alternative to proprietary solutions. Originally developed at Airbnb, it enables scalable, efficient generative AI pipelines using directed acyclic graphs (DAGs). Unlike platforms tied to enterprise licensing fees, Airflow offers flexibility without the added expense.
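The directed acyclic graph is the core idea here: each task declares which tasks must finish before it can start, and the scheduler derives a valid execution order. The sketch below illustrates that concept with Python's standard-library `graphlib` rather than Airflow itself, so it runs without any installation. The task names and the `execution_order` helper are made up for the example.

```python
from graphlib import CycleError, TopologicalSorter
from typing import Dict, List, Set

# Each key is a task; its value is the set of tasks that must finish first.
pipeline: Dict[str, Set[str]] = {
    "fetch_documents": set(),
    "build_prompts": {"fetch_documents"},
    "call_model": {"build_prompts"},
    "evaluate_output": {"call_model"},
    "log_usage": {"call_model"},
    "publish": {"evaluate_output"},
}


def execution_order(graph: Dict[str, Set[str]]) -> List[str]:
    """Return one valid run order; raises CycleError if the graph has a cycle."""
    return list(TopologicalSorter(graph).static_order())


order = execution_order(pipeline)

# A graph with a cycle cannot be scheduled, which is why workflows must be acyclic.
try:
    execution_order({"a": {"b"}, "b": {"a"}})
    cycle_detected = False
except CycleError:
    cycle_detected = True
```

`evaluate_output` and `log_usage` depend only on `call_model`, so a scheduler is free to run them in parallel. That independence between tasks is what a distributed executor exploits.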

Designed to handle large projects, Airflow uses a distributed execution architecture. It supports several executors, such as the CeleryExecutor and the KubernetesExecutor, to enable horizontal scaling across worker nodes. The KubernetesExecutor, for example, runs each task in its own pod, which allows dynamic resource allocation based on computational needs. This architecture lets the platform adapt to varying workloads while keeping operations efficient.

For enterprises, Airflow includes features that address compliance, security, and transparency. These include audit logging, role-based access control (RBAC), and data lineage tracking. Together, these tools protect workflows, strengthen oversight, and provide clear visibility into AI-driven processes, making Airflow a dependable choice for organizations that prioritize governance.

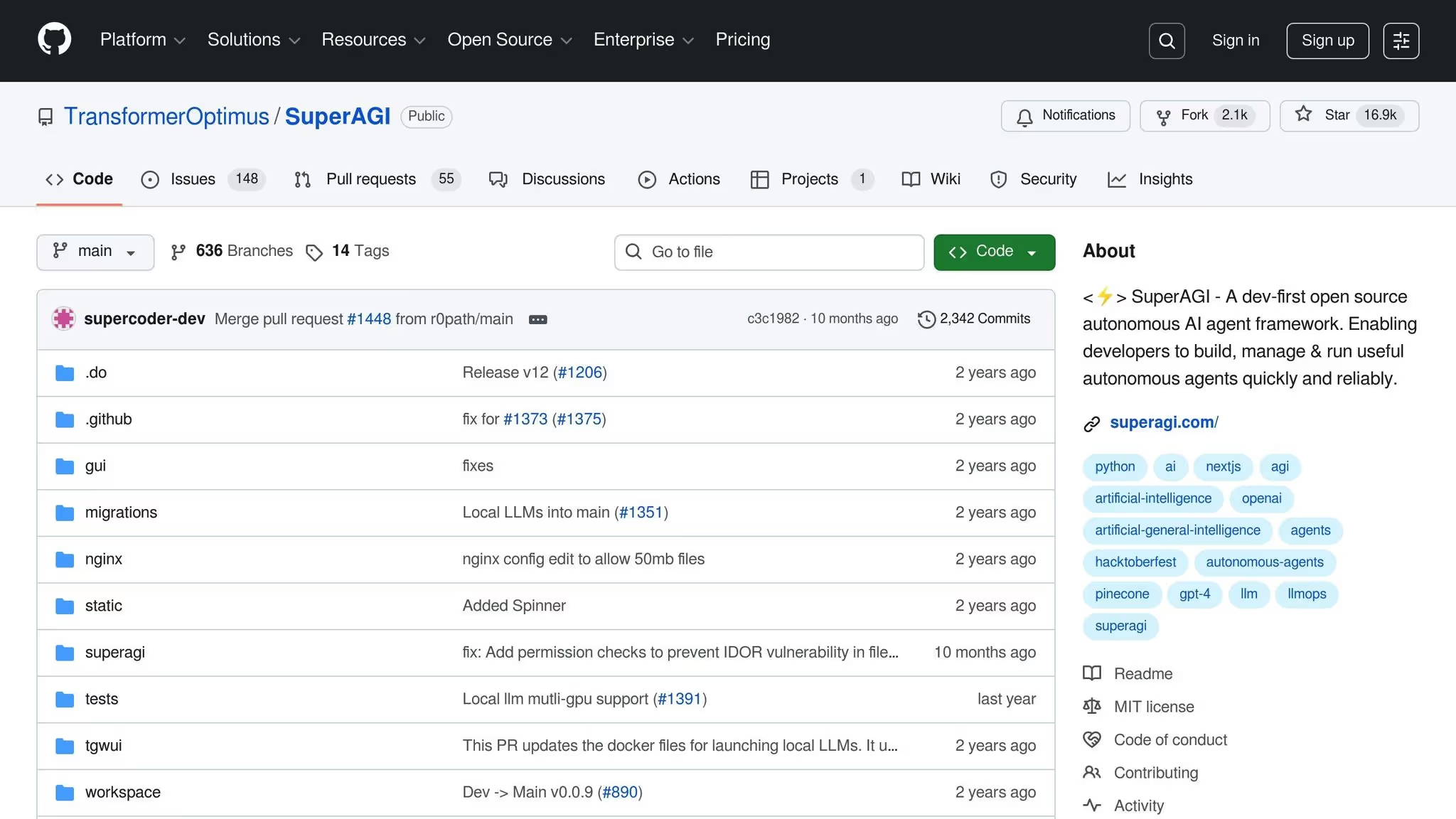

SuperAGI is an emerging framework built for autonomous AI agents, aimed specifically at enhancing generative AI workflows. Although it shows promise, there is limited publicly available information about its architecture, integration capabilities, scalability, and cost management strategies. For more accurate, up-to-date insights, consult SuperAGI's official documentation directly.

Based on the detailed platform reviews, here is a concise summary of each solution's key strengths and limitations. Each platform comes with its own set of advantages and challenges, shaped by factors such as model integration, cost management, governance, and scalability.

Prompts.ai is a standout option for enterprises, offering seamless orchestration of more than 35 leading large language models. It shines at streamlining workflows and reducing tool sprawl. Its real-time FinOps capabilities can reduce AI costs by up to 98%. However, its focus on prompt-centric workflows may not fully meet the needs of users looking for the advanced orchestration features typically found in broader workflow platforms.

Vellum AI specializes in prompt management, offering tools for versioning and collaboration. It integrates well with major LLM providers, making it a strong option for teams handling complex generative AI projects. On the downside, it may face scalability issues and lacks integration with non-LLM models or external data sources.

IBM watsonx Orchestrate excels in highly regulated industries, offering strong governance and compliance features. It supports hybrid cloud deployments and integrates seamlessly with IBM's AI ecosystem. However, high costs and complexity may pose challenges for smaller teams, especially those without existing IBM infrastructure.

Apache Airflow offers unmatched flexibility as an open-source workflow management system. It is widely used in the tech industry to orchestrate large-scale machine learning model training and deployment pipelines. Its extensible architecture supports distributed execution and Python-based model integration. However, it has a steep learning curve, demands technical expertise, and requires custom governance implementations.

SuperAGI is designed for modular, autonomous multi-agent orchestration, making it well suited to adaptive workflows. Research labs often use it for experiments involving autonomous multi-agent systems and workflow automation. While its open-source nature keeps costs low, it requires significant customization and technical resources. In addition, its governance features are less developed than those of enterprise-grade platforms.

Here is a side-by-side comparison of how these platforms perform across key evaluation criteria:

This comparison helps identify which platform best aligns with specific operational goals. For industries that require strict governance, IBM watsonx Orchestrate is a dependable choice. Teams looking for deep customization may gravitate toward Apache Airflow. SuperAGI appeals to those who prioritize open-source flexibility and experimental workflows. Meanwhile, Prompts.ai is a strong option for prompt-focused workflows, combining cost efficiency with enterprise-grade features.

Choosing the right interoperable workflow platform for generative AI depends on your organization's unique needs, technical expertise, and long-term goals. Here is a quick summary of what each platform brings to the table:

Each platform has its strengths, and understanding your priorities will guide you toward the best solution.

Prompts.ai provides a FinOps layer that monitors every interaction and offers real-time insights into usage, spending, and return on investment. This feature lets you track expenses closely and manage costs precisely.

By replacing the need for more than 35 separate AI tools, the platform consolidates workflows and can reduce costs by up to 95%. Its pay-as-you-go model, backed by flexible TOKN credits, ensures you pay only for what you use, making it an adaptable and cost-effective option for scaling your AI initiatives.

Prompts.ai includes strong governance tools to help organizations stay compliant with key regulations, including HIPAA, CCPA, and GDPR. These tools feature strict data protection measures, advanced access controls, and secure data management practices.

By adhering to established industry standards such as SOC 2 Type II, Prompts.ai provides a secure environment for handling sensitive information. This enables businesses to adopt generative AI solutions with confidence while meeting their regulatory obligations.

Prompts.ai is built to scale, so your organization can manage heavy API traffic and many concurrent prompt executions without bottlenecks. Its robust infrastructure and streamlined workflows allow it to handle the growing demands of expanding generative AI projects with ease.

Whether you are processing thousands of requests per second or orchestrating complex AI workflows, Prompts.ai keeps things running smoothly. Its scalable framework delivers consistent speed, reliability, and efficiency, helping businesses handle high-demand scenarios without operational slowdowns.