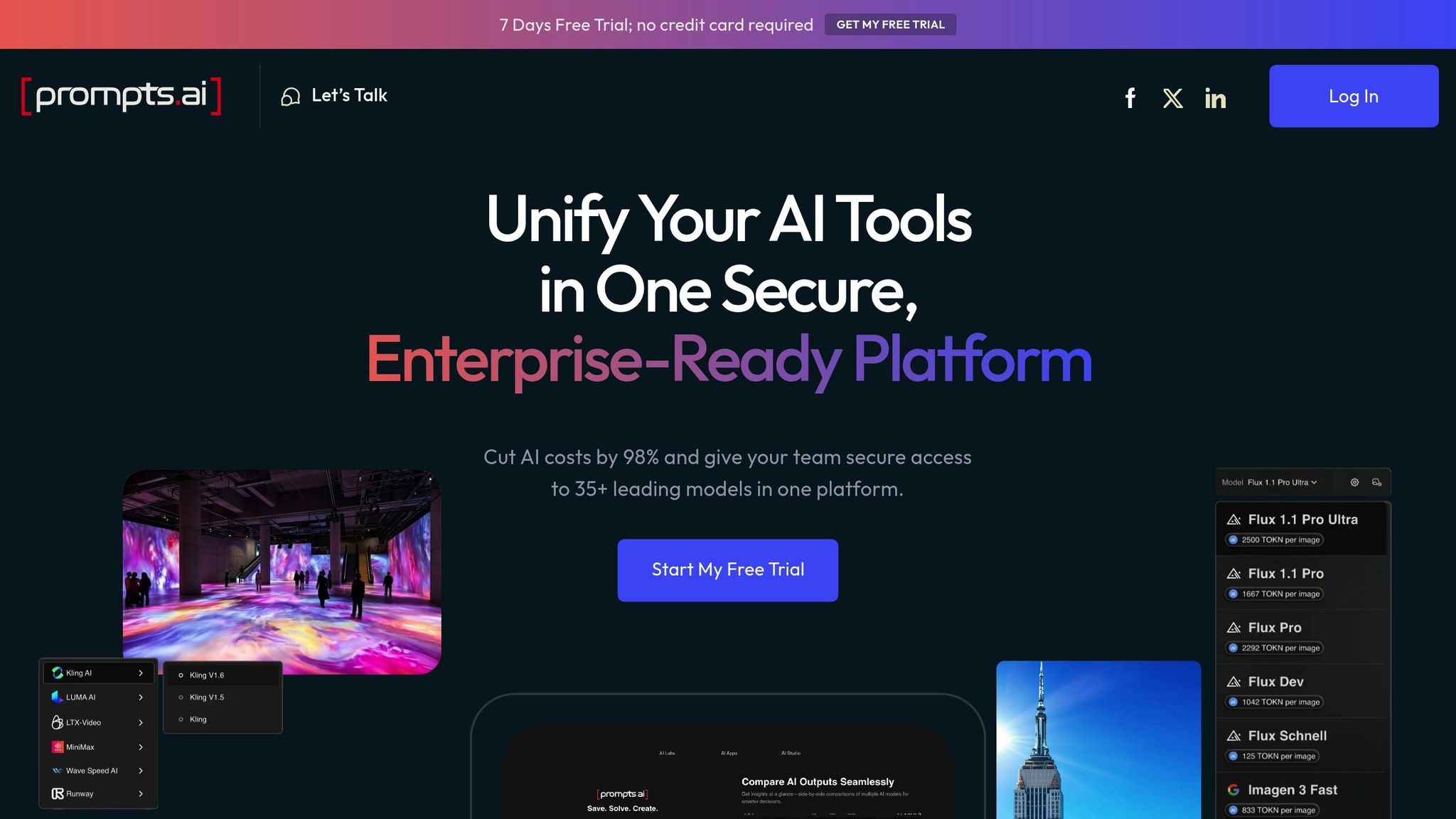

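Prompts.ai is a leading choice for enterprises managing AI operations at scale. It simplifies AI workflows by unifying 35+ top language models, provides real-time cost tracking, and enforces enterprise-grade security. It is designed for scalability: it handles complex workloads, optimizes spending through token-level tracking, and supports team collaboration through shared workspaces and role-based permissions. Its pay-as-you-go TOKN credits tie costs to actual usage, making it a cost-effective and secure AI orchestration platform.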

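Prompts.ai combines real-time governance, financial transparency, and collaboration tools to meet the needs of enterprise AI. It removes inefficiencies, lowers costs, and supports compliance, all in a single platform.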

For enterprises looking to streamline their AI operations, Prompts.ai is a smart choice.

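To support thousands of users while keeping operations secure and costs under control, an enterprise AI command center needs a strong combination of capabilities. To grow alongside the organization, it must address three key areas: security, financial transparency, and collaboration.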

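A strong security framework is the backbone of any scalable AI command center. It starts with enterprise-grade authentication that integrates with identity providers such as Active Directory, Okta, or SAML-based systems. Features like single sign-on (SSO) simplify user access while keeping strict security protocols in place across workflows.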

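Role-based access control (RBAC) is another essential feature, especially for large teams with distinct responsibilities. For example, a finance team may need only view-only access to cost analytics, while data scientists need deployment rights without budget control. Granular permissions ensure that users reach only the tools and data they need, which minimizes risk.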
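To make the least-privilege idea concrete, here is a minimal Python sketch of a role-to-permission mapping. The role names and `Permission` values are hypothetical and do not reflect Prompts.ai's actual permission model. The key design choice is that unknown roles grant nothing, so access is denied by default.

```python
from enum import Enum, auto


class Permission(Enum):
    """Hypothetical permission set for an AI command center."""
    VIEW_COSTS = auto()
    EDIT_BUDGETS = auto()
    DEPLOY_MODELS = auto()
    RUN_WORKFLOWS = auto()


# Each role is granted only the permissions it needs (least privilege).
ROLE_PERMISSIONS: dict[str, frozenset[Permission]] = {
    "finance_viewer": frozenset({Permission.VIEW_COSTS}),
    "data_scientist": frozenset({Permission.DEPLOY_MODELS, Permission.RUN_WORKFLOWS}),
    "admin": frozenset(Permission),  # every permission
}


def is_allowed(roles: list[str], permission: Permission) -> bool:
    """Return True if any of the user's roles grants the permission.

    Unknown roles map to an empty set, so access is denied by default.
    """
    return any(permission in ROLE_PERMISSIONS.get(role, frozenset()) for role in roles)
```

With this mapping, a finance analyst can open cost dashboards but cannot deploy a model, and a data scientist can deploy but cannot change budgets.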

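To protect sensitive information, the platform must encrypt data at rest and in transit. AI workflows often handle confidential customer data, proprietary algorithms, and critical business insights. End-to-end encryption protects that data at every stage, from input through processing to storage.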

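Audit trails play a crucial role in compliance by recording every action with a timestamp, user ID, and details of the change. The result is an immutable record that supports frameworks and regulations such as SOC 2, GDPR, and HIPAA, and that aids forensic analysis when needed.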
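A common way to make an audit log tamper-evident is hash chaining. Each entry stores the SHA-256 hash of the previous entry, so editing or reordering any past entry causes verification to fail. The sketch below assumes an in-memory log with illustrative field names. It demonstrates the technique only and does not describe how Prompts.ai stores its audit trail. A production system would also need durable, write-once storage.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class AuditLog:
    """Append-only audit log in which each entry carries the hash of the previous one."""
    entries: list[dict] = field(default_factory=list)

    def record(self, user_id: str, action: str, details: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user_id": user_id,
            "action": action,
            "details": details,
            "prev_hash": prev_hash,
        }
        # Hash a canonical JSON encoding so identical content always yields the same digest.
        payload = json.dumps(entry, sort_keys=True).encode("utf-8")
        entry["hash"] = hashlib.sha256(payload).hexdigest()
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash. Any edited, removed, or reordered entry breaks the chain."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash:
                return False
            payload = json.dumps(body, sort_keys=True).encode("utf-8")
            if hashlib.sha256(payload).hexdigest() != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```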

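Finally, API security measures are essential, including rate limiting, token authentication, and request validation. As AI operations grow, API endpoints can become targets for malicious activity. These safeguards block unauthorized access while keeping legitimate workflows running without interruption.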
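Rate limiting is often implemented with a token bucket. This minimal sketch is an assumption and not Prompts.ai's implementation. Here, "tokens" are request permits, not model tokens. The bucket holds up to `capacity` permits and refills at `rate` permits per second. The clock is injectable so that the behavior can be tested deterministically.

```python
import time
from typing import Callable


class TokenBucket:
    """Token-bucket rate limiter: bursts up to `capacity`, refilled at `rate` permits/second."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity  # start full so an initial burst is allowed
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Consume `cost` permits if available. Otherwise reject the request."""
        now = self.clock()
        # Refill for the time elapsed since the last call, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

A per-API-key bucket, for example `TokenBucket(capacity=20, rate=5)`, would allow short bursts of 20 requests while capping sustained traffic at 5 requests per second.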

Combined with financial oversight, these security measures provide a solid foundation for scalable operations.

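Effective financial management is key to scaling AI operations. Token-level tracking gives granular visibility into AI spending and shows which teams and workflows consume the most resources. This insight enables better resource allocation and cost management.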
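Token-level tracking comes down to pricing each request by its input and output token counts and then grouping the results. The sketch below uses made-up model names and per-1,000-token prices for illustration only. Real prices differ by vendor and change over time.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens; real prices vary by vendor and model.
PRICE_PER_1K = {
    "model-a": {"input": 0.50, "output": 1.50},
    "model-b": {"input": 0.10, "output": 0.20},
}


@dataclass
class UsageRecord:
    team: str
    workflow: str
    model: str
    input_tokens: int
    output_tokens: int


def record_cost(r: UsageRecord) -> float:
    """Cost of one request, priced separately for input and output tokens."""
    price = PRICE_PER_1K[r.model]
    return (r.input_tokens * price["input"] + r.output_tokens * price["output"]) / 1000


def spend_by(records: list[UsageRecord], key: str) -> dict[str, float]:
    """Total spend grouped by a record attribute such as 'team' or 'workflow'."""
    totals: dict[str, float] = defaultdict(float)
    for r in records:
        totals[getattr(r, key)] += record_cost(r)
    return dict(totals)
```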

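Real-time budget controls help organizations avoid unexpected cost overruns. Automated alerts can notify teams when spending approaches preset thresholds, and hard limits can pause non-essential workflows before spending gets out of control. Department-level budgets keep individual teams within their limits without disrupting overall operations.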
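The following sketch shows one way to combine a soft alert threshold with a hard cap at the department level. The 80% threshold, the `essential` flag, and the class itself are hypothetical. The point is that non-essential spend is blocked at the cap, while essential work can still proceed.

```python
from dataclasses import dataclass, field


@dataclass
class DepartmentBudget:
    """Hypothetical department budget with a soft alert threshold and a hard cap."""
    limit: float               # hard cap in USD
    alert_ratio: float = 0.8   # warn once spend reaches 80% of the cap
    spent: float = 0.0
    alerts: list[str] = field(default_factory=list)

    def charge(self, amount: float, essential: bool = False) -> bool:
        """Record spend. Return False (and log it) if a non-essential charge would exceed the cap."""
        if not essential and self.spent + amount > self.limit:
            self.alerts.append(f"blocked: {amount:.2f} would exceed limit {self.limit:.2f}")
            return False
        before = self.spent
        self.spent += amount
        threshold = self.limit * self.alert_ratio
        # Alert only on the charge that crosses the threshold, not on every later charge.
        if before < threshold <= self.spent:
            self.alerts.append(
                f"warning: spend {self.spent:.2f} reached {self.alert_ratio:.0%} of limit"
            )
        return True
```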

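Cost-to-outcome mapping ties AI investments directly to measurable business results. For example, the cost of deploying a model can be compared with the operational savings it delivers. This approach reframes AI as a business driver rather than a cost center and gives a clear rationale for continued investment.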
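Cost-to-outcome mapping is mostly simple arithmetic. If you can count outcomes (for example, tickets resolved) and you know what each outcome cost before AI was introduced, net savings and ROI follow directly. The field names below are illustrative.

```python
from dataclasses import dataclass


@dataclass
class OutcomeReport:
    ai_cost: float             # model and platform spend for the period, USD
    outcomes: int              # e.g. tickets resolved by the AI workflow
    baseline_unit_cost: float  # what one outcome cost before the AI workflow, USD

    def __post_init__(self) -> None:
        if self.ai_cost <= 0 or self.outcomes <= 0:
            raise ValueError("ai_cost and outcomes must be positive")

    @property
    def cost_per_outcome(self) -> float:
        return self.ai_cost / self.outcomes

    @property
    def net_savings(self) -> float:
        """Baseline cost avoided, minus what the AI workflow cost."""
        return self.outcomes * self.baseline_unit_cost - self.ai_cost

    @property
    def roi(self) -> float:
        """Net return per dollar of AI spend."""
        return self.net_savings / self.ai_cost
```

For example, suppose $2,000 of AI spend resolves 1,000 tickets that previously cost $5 each. The cost per ticket is $2, the net savings are $3,000, and the ROI is 1.5.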

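The platform should also proactively identify cost-saving opportunities. By analyzing usage patterns, it can suggest more efficient model configurations, flag underused resources, and recommend scheduling that takes advantage of lower-cost compute windows.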

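Finally, chargeback capabilities allocate costs precisely across departments and projects. This promotes accountability, because each team becomes responsible for managing its allocated resources efficiently.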
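Chargeback usually means splitting shared costs, such as a platform fee, in proportion to each department's usage. The minimal sketch below assumes that per-department token counts are already available. The department names are hypothetical.

```python
def allocate_shared_cost(shared_cost: float, usage_by_dept: dict[str, int]) -> dict[str, float]:
    """Split a shared cost across departments in proportion to their token usage."""
    if not usage_by_dept:
        return {}
    total = sum(usage_by_dept.values())
    if total == 0:
        # No usage recorded: split evenly so the cost is still fully allocated.
        share = shared_cost / len(usage_by_dept)
        return {dept: share for dept in usage_by_dept}
    return {dept: shared_cost * used / total for dept, used in usage_by_dept.items()}
```

Proportional allocation keeps the total equal to the shared cost. If you round each share to cents, you need to assign any leftover cent explicitly.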

Clear financial insight keeps AI operations efficient and aligned with organizational goals.

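Collaboration tools are essential for breaking down silos and streamlining AI workflows. Shared workspaces provide a common environment where data scientists, engineers, and stakeholders can collaborate on models, test workflows, and share feedback without switching between platforms. Version control ensures that everyone works from the latest configuration while earlier versions remain available for rollback.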

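To maintain quality at scale, the platform must support role-based approval workflows. For example, a critical model deployment might require sign-off from both a technical lead and a compliance officer, while routine updates can be approved automatically. These customizable workflows adapt to different organizational structures and risk levels.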
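Role-based approval rules can be expressed as a mapping from risk level to the set of roles that must sign off. This sketch mirrors the example above. The risk levels and role names are hypothetical. Unknown risk levels raise an error instead of being auto-approved.

```python
# Hypothetical approval policy: roles that must sign off, keyed by risk level.
REQUIRED_APPROVERS: dict[str, set[str]] = {
    "routine": set(),  # no sign-off needed, so the change is auto-approved
    "critical": {"technical_lead", "compliance_officer"},
}


def missing_approvals(risk_level: str, approvals: set[str]) -> set[str]:
    """Roles that still need to sign off. Raises KeyError for unknown risk levels."""
    return REQUIRED_APPROVERS[risk_level] - approvals


def is_approved(risk_level: str, approvals: set[str]) -> bool:
    """A change is approved once every required role has signed off."""
    return not missing_approvals(risk_level, approvals)
```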

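Real-time collaboration tools improve communication around AI workflows. Instant notifications, built-in messaging, and commenting let teams resolve issues quickly and stay informed without relying on external tools.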

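Managing the lifecycle of AI projects is another key requirement. Lifecycle management provides visibility into each workflow's status, performance metrics, and maintenance needs. Automated policies can trigger updates, reviews, or retirement based on set criteria, which keeps resources allocated efficiently.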
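An automated lifecycle policy can be as simple as a function that maps a workflow's status to an action. The thresholds below are illustrative assumptions, not Prompts.ai defaults: 90 idle days, a 5% error rate, and a 180-day review cycle.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class WorkflowStatus:
    name: str
    last_run: date
    error_rate: float  # fraction of failed runs over the review window
    last_review: date


def lifecycle_action(w: WorkflowStatus, today: date,
                     retire_after_days: int = 90,
                     max_error_rate: float = 0.05,
                     review_every_days: int = 180) -> str:
    """Hypothetical policy, checked in priority order: retire idle workflows,
    update failing ones, then schedule periodic reviews."""
    if (today - w.last_run).days > retire_after_days:
        return "retire"
    if w.error_rate > max_error_rate:
        return "update"
    if (today - w.last_review).days >= review_every_days:
        return "review"
    return "keep"
```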

Finally, integration is essential. The platform should connect seamlessly to existing tools like GitHub, Slack, and Jira. Instead of replacing these tools, the command center should enhance workflows with AI-specific features while maintaining compatibility with established processes.

These collaboration and workflow management features enable enterprises to centralize AI operations and scale effectively, ensuring teams can work together efficiently and achieve their goals.

Prompts.ai stands out as the ultimate AI command center for enterprises seeking scalable deployment. While many platforms promise to tackle enterprise AI challenges, Prompts.ai delivers a complete solution by offering unified multi-model access, real-time governance, and integrated FinOps - all designed for large-scale operations.

Handling multiple AI models from various vendors often leads to complications, higher costs, and slower deployment. Prompts.ai eliminates these hurdles by bringing together over 35 leading AI models into one secure platform. This allows teams to compare model performance side-by-side using identical prompts and datasets, test different configurations, and quickly adapt to new models or pricing changes - all without disrupting their existing infrastructure.
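Side-by-side comparison comes down to running each model on the same prompts and scoring the outputs the same way. In this sketch, a model is any callable that maps a prompt to a response. In practice, each would wrap a vendor SDK. The stub models and the exact-match scorer are hypothetical stand-ins for real clients and task-specific metrics.

```python
import time
from typing import Callable

# A model is any callable prompt -> response text (hypothetical stand-in for a vendor client).
Model = Callable[[str], str]


def compare_models(models: dict[str, Model],
                   dataset: list[tuple[str, str]],
                   score: Callable[[str, str], float]) -> dict[str, dict[str, float]]:
    """Run every model on identical (prompt, expected) pairs. Report mean score and latency."""
    if not dataset:
        raise ValueError("dataset must not be empty")
    results = {}
    for name, model in models.items():
        scores, latencies = [], []
        for prompt, expected in dataset:
            start = time.perf_counter()
            output = model(prompt)
            latencies.append(time.perf_counter() - start)
            scores.append(score(output, expected))
        results[name] = {
            "mean_score": sum(scores) / len(scores),
            "mean_latency_s": sum(latencies) / len(latencies),
        }
    return results


def exact_match(output: str, expected: str) -> float:
    """1.0 if the normalized output equals the expected answer, else 0.0."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0
```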

This integration reshapes how enterprises choose and deploy models. Teams can switch between models effortlessly to meet specific workload demands. For instance, a data scientist can test a customer service workflow using Claude for conversational tasks and then pivot to GPT-4 for analytical needs - all within the same environment.

The platform’s interoperability ensures workflows aren’t tied to a single vendor. If a new model becomes available or pricing shifts, teams can adapt without having to rebuild their systems. This flexibility, combined with real-time execution capabilities, strengthens operational efficiency and control.

Enterprise AI demands immediate oversight and control of all operations. Prompts.ai’s real-time execution engine provides instant feedback on model performance, costs, and compliance. Teams can monitor workflows live, identifying potential bottlenecks or issues before they escalate into larger problems.

The platform also integrates advanced security features such as authentication protocols, audit trails, and API-level safeguards. Together with the execution engine, these tools give teams immediate insight into costs, performance, and compliance. With role-based access controls, users can reach only the tools they need, which supports both operational efficiency and regulatory compliance.

In addition to its model management and governance tools, Prompts.ai includes financial controls tailored for scalable AI deployments. Traditional AI operations often face unexpected expenses, but Prompts.ai’s FinOps features help optimize spending through token-level tracking, real-time budget controls, and pay-as-you-go TOKN credits that align costs with actual usage.

Token-level tracking offers detailed insights into AI spending, showing which teams, projects, or workflows consume the most resources. Real-time budget controls prevent overspending by sending alerts and pausing non-essential workflows when spending approaches set limits.

With cost-to-outcome mapping, organizations can directly link AI investments to tangible business results. For example, teams can evaluate the cost of deploying a customer service bot against the operational savings it delivers. Additionally, the platform analyzes usage patterns to uncover cost-saving opportunities, such as recommending more efficient model configurations.

When selecting an AI command center for enterprise use, it's crucial to evaluate platforms on their ability to meet essential requirements. The breakdown below scores Prompts.ai's capabilities across critical areas on a 5-point scale, where 5 represents top-tier performance and 1 signifies basic functionality.

These scores highlight Prompts.ai's ability to meet the diverse needs of enterprise AI users while addressing challenges like scalability, cost control, and security.

Each score reflects Prompts.ai's effectiveness in tackling enterprise demands, supported by specific features and functionalities.

Multi-Model Access (5/5): By offering access to over 35 models through a single interface, Prompts.ai simplifies workflows and eliminates the need for juggling multiple vendors. This also allows for direct performance comparisons between models.

Security & Compliance (5/5): The platform provides enterprise-level security with features like role-based access, authentication protocols, and detailed audit logs, ensuring secure and accountable AI operations.

Cost Management (5/5): Real-time spend tracking, automated budget alerts, and the use of pay-as-you-go TOKN credits enable businesses to monitor and control expenses effectively.

Scalability (5/5): With its real-time execution engine and scalable user management, Prompts.ai handles large-scale workloads seamlessly, ensuring performance remains consistent as demands grow.

Workflow Management (4/5): While offering strong tools for managing workflows and team collaboration, there is potential for more customization in niche or highly specialized processes.

Performance Monitoring (5/5): The platform’s real-time analytics and ability to compare models side-by-side empower users to make informed, data-driven decisions immediately.

Integration Capabilities (4/5): Prompts.ai supports most enterprise systems through its API suite, though older legacy infrastructures may require additional setup or adjustments.

Governance Controls (5/5): Automated enforcement of policies and comprehensive audit trails ensure that every AI operation remains compliant with organizational and regulatory standards.

This analysis underscores how Prompts.ai stands out as a robust solution for enterprises seeking to streamline and optimize their AI operations. Its blend of advanced features and user-centric design makes it a strong contender in the AI command center market.

Deploying Prompts.ai across multiple environments calls for a careful balance between maintaining security and enabling flexibility. Start by setting up distinct environments - development, staging, and production - that align with your organization’s infrastructure. Use Prompts.ai’s built-in governance tools to ensure seamless management across these setups.

Organize user groups based on your organizational structure to assign environment-specific permissions. For instance, data scientists, prompt engineers, business analysts, and administrators should each have access tailored to their roles. Integrate Prompts.ai with your existing identity management system to enable enterprise-grade authentication and single sign-on (SSO). This ensures uniform security policies across your AI operations while maintaining detailed audit logs. Define clear policies, such as controlling which models teams can access and setting spending limits, to prevent misuse while still allowing room for innovation.

Each environment should reflect its specific purpose. For example, the development environment can allow broader access to models for testing and experimentation, while the production environment should have stricter controls to ensure stability. Prompts.ai’s workflow management tools allow you to create flexible templates that ensure teams stay within approved guidelines.
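Per-environment guardrails can be captured as a small policy table that is checked before each request. The model names, budgets, and the `check_request` helper below are hypothetical. They are not a Prompts.ai configuration format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnvironmentPolicy:
    """Hypothetical per-environment guardrails. Field names are illustrative."""
    allowed_models: frozenset[str]
    monthly_budget_usd: float
    requires_approval: bool


# Development is permissive for experimentation. Production is locked down for stability.
POLICIES = {
    "development": EnvironmentPolicy(frozenset({"model-a", "model-b", "model-c"}), 500.0, False),
    "staging": EnvironmentPolicy(frozenset({"model-a", "model-b"}), 1_000.0, False),
    "production": EnvironmentPolicy(frozenset({"model-a"}), 10_000.0, True),
}


def check_request(env: str, model: str, approved: bool) -> None:
    """Raise PermissionError if the request violates the environment's policy."""
    policy = POLICIES[env]
    if model not in policy.allowed_models:
        raise PermissionError(f"{model} is not approved for {env}")
    if policy.requires_approval and not approved:
        raise PermissionError(f"{env} changes require approval")
```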

Once environments are securely configured, the next step is to optimize financial management.

Prompts.ai’s pay-as-you-go TOKN credit system aligns costs directly with usage, eliminating the need for recurring subscription fees. Use real-time analytics to track token consumption across models, teams, and projects, providing detailed insights into resource usage and identifying opportunities to reduce costs.

To further manage expenses, tag workflows with project codes, department identifiers, or business objectives. This tagging not only helps demonstrate ROI but also supports more strategic planning for future AI investments. Implement approval workflows for spending to prevent budget overruns while maintaining operational efficiency.

With strong financial controls in place, you can focus on lifecycle management and tracking success metrics.

Building on governance and cost controls, lifecycle management ensures smooth operations from deployment through long-term performance. Use version control to document every prompt template, model configuration, and automation rule. This makes it easier to roll back changes if needed and ensures compliance with audit requirements.
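Rollback can be sketched as an append-only version history. Rolling back commits an old version again instead of deleting newer ones, so the rollback itself stays in the audit record. This is a hypothetical, in-memory illustration, not Prompts.ai's versioning API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class PromptTemplateHistory:
    """Version history for one prompt template: every change is kept, nothing is overwritten."""
    versions: list[tuple[str, str, str]] = field(default_factory=list)  # (timestamp, author, text)

    def commit(self, author: str, text: str) -> int:
        """Append a new version and return its 1-based version number."""
        self.versions.append((datetime.now(timezone.utc).isoformat(), author, text))
        return len(self.versions)

    @property
    def current(self) -> str:
        return self.versions[-1][2]

    def rollback(self, version: int, author: str) -> int:
        """Restore an earlier version by re-committing it, so the rollback is itself recorded."""
        if not 1 <= version <= len(self.versions):
            raise ValueError(f"no version {version}")
        return self.commit(author, self.versions[version - 1][2])
```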

Regularly evaluate your models to ensure they remain effective and efficient. Focus on metrics such as response accuracy, processing speed, and resource usage to gauge operational success. Also monitor deployment timelines to assess efficiency, and track user adoption rates to measure how well teams are adapting to the platform. Prompts.ai's analytics tools provide insights into team engagement, helping you identify patterns and areas for improvement.

A unified platform like Prompts.ai can cut software costs by up to 98% while reducing IT overhead by consolidating AI operations.

Establish quality metrics that link AI performance to broader business outcomes. Automated reports and executive dashboards can summarize critical performance indicators - such as cost trends, adoption rates, and operational impact - ensuring transparency and enabling data-driven decision-making.

Prompts.ai emerges as the ultimate AI command center for enterprises aiming to scale their AI operations with confidence. Its scores across the criteria above reflect how well it handles the challenges enterprises face when deploying AI at scale. By unifying multi-model orchestration under one platform, Prompts.ai ensures top-tier security and compliance while delivering the cost efficiencies discussed earlier.

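The platform's real-time execution engine, combined with built-in governance tools, lets organizations expand their AI capabilities without losing control or oversight. This matters especially for AI-driven financial solutions, where meeting regulatory standards and keeping transparent audit records are non-negotiable. Prompts.ai strikes a balance: it gives teams the flexibility they need to innovate while giving executives the governance structure they require.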

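With real-time analytics and token tracking, the platform provides detailed cost visibility and turns AI cost management into a precise, data-driven process. This transparency helps organizations plan budgets strategically and measure ROI accurately, removing the guesswork.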

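Prompts.ai also turns one-off AI experiments into repeatable, scalable workflows through its collaborative workflow management tools. Its community and certification programs add expert-curated workflows that speed up adoption and shorten the learning curve for enterprise AI deployments.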

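The best way to understand Prompts.ai's strengths is to try it firsthand. The platform offers hands-on onboarding and enterprise training, so your team is ready to make full use of it from the start. Its active community of prompt engineers also provides ongoing support and shared expertise, building a partnership that goes beyond a typical vendor relationship.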

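Take the first step risk-free with Prompts.ai's $0/month pay-as-you-go plan, which lets you test the platform's capabilities with no upfront investment. Thanks to its enterprise-grade infrastructure, you can start with a small pilot project and then scale to a full organization-wide deployment as your AI initiatives grow.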

Contact Prompts.ai today to schedule a personalized demo and see how its AI orchestration platform can redefine your approach to scalable AI deployment.

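Prompts.ai places a strong emphasis on data security and regulatory compliance, using advanced safeguards to protect sensitive information. The platform supports GDPR and HIPAA requirements by integrating automated tools for compliance monitoring and vulnerability management, which keeps personal data and protected health information (PHI) secure.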

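Beyond healthcare, Prompts.ai applies industry-standard practices to maintain privacy and regulatory alignment across sectors. These measures protect AI workflows and help teams meet strict compliance requirements with confidence and efficiency.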

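Token-level tracking breaks spending down to the token level and attributes costs to specific AI tasks, which gives teams detailed insight into their spend. It also supports real-time monitoring, so teams can spot irregularities quickly, manage spending wisely, and keep budgets aligned with goals.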

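With accurate cost attribution, token-level tracking helps teams use resources more efficiently and improves the effectiveness of AI operations. This makes it easier to scale projects while keeping tight control over spending.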

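Prompts.ai helps teams collaborate efficiently by providing role-based controls, centralized oversight, and real-time cost tracking. These tools keep data secure and operations transparent, and they help collaboration across teams run smoothly.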

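The platform's centralized repository simplifies creating, sharing, and managing AI prompts, promoting consistency and teamwork across projects. Its intuitive interface streamlines workflow management and lets teams launch agents, track performance, and design complex workflows, all within one unified system.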