AI workflow platforms are changing how teams work together, and collaboration is central to whether they succeed. They address common obstacles such as misalignment between departments, slow progress, and lack of transparency, which helps organizations scale AI effectively. This article looks at three platforms that combine collaboration tools, integration with large language models (LLMs), and governance features: Prompts.ai, Platform B, and Platform C.

Each platform is tailored to different needs, from simplifying workflows for non-technical users to providing full control for technical teams. Below is a comparison to help you decide which fits your organization best.

Whether you're scaling AI in creative, operational, or technical environments, these platforms offer tailored solutions to fit your goals.

AI Workflow Platforms Comparison: Features, Pricing, and Best Use Cases

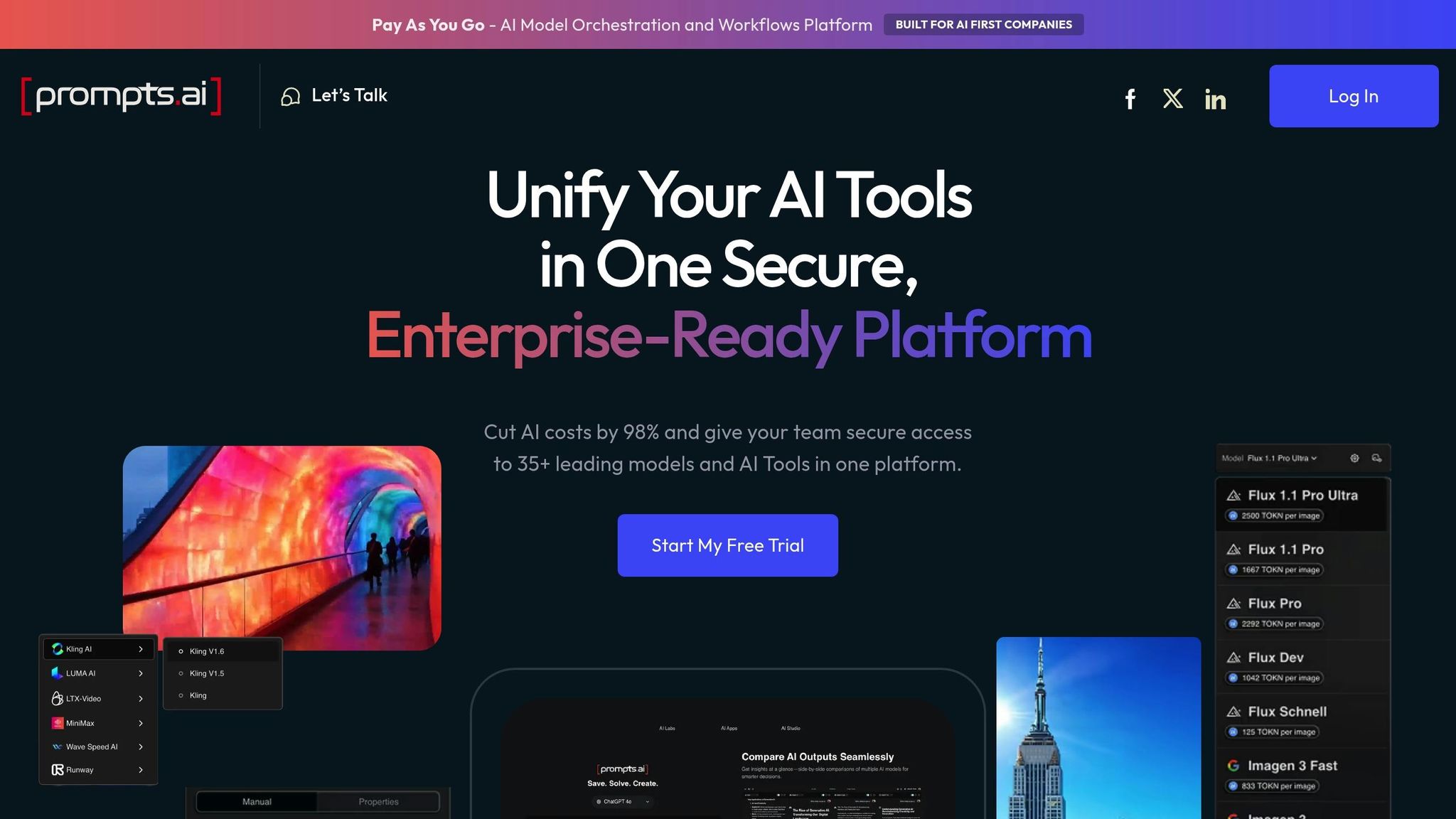

Prompts.ai brings together over 35 leading large language models (LLMs) - including GPT-5, Claude, LLaMA, Gemini, Grok-4, and Flux Pro - into a single, streamlined interface. This eliminates the hassle of switching between multiple platforms and reconciling inconsistent outputs. Whether you're an engineer, product manager, or part of a creative team, everyone can collaborate in one shared workspace. With access to the same model versions and shared workflows, teams can work more efficiently and cohesively.

The platform includes role-based permissions and audit trails, ensuring controlled access and complete transparency for all workflow changes. Teams can also benefit from Time Savers, pre-built workflows created by prompt engineers, which help speed up onboarding and establish consistent practices across departments. Additionally, the Prompt Engineer Certification program empowers team members to become in-house experts, guiding others and turning AI adoption into a collaborative success story.

This collaborative environment also simplifies comparing and selecting models for different needs.

Prompts.ai doesn’t limit teams to a single model provider. Instead, it allows for side-by-side comparisons across 35+ integrated LLMs. For example, you can test the same prompt on GPT-5, Claude, and Gemini simultaneously, making it easier to choose the best model for your specific project. This flexibility is invaluable when different tasks require different strengths - one model might excel in creative tasks, while another is better suited for data analysis.
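As a rough illustration of what a side-by-side comparison involves, the sketch below sends one prompt to several models and collects the outputs by name. It does not use any Prompts.ai API: `compare_models`, the `ModelFn` type, and the stub models are hypothetical names invented for this example, and real model clients would replace the stubs.

```python
from typing import Callable, Dict

# Hypothetical stand-in for a real model client: takes a prompt, returns text.
ModelFn = Callable[[str], str]


def compare_models(prompt: str, models: Dict[str, ModelFn]) -> Dict[str, str]:
    """Run the same prompt through every model and collect outputs by model name."""
    results: Dict[str, str] = {}
    for name, call in models.items():
        try:
            results[name] = call(prompt)
        except Exception as exc:
            # Record the failure so one broken model does not hide the others.
            results[name] = f"<error: {exc}>"
    return results


# Stub models so the sketch runs without network access or API keys.
stub_models: Dict[str, ModelFn] = {
    "model_a": lambda p: p.upper(),
    "model_b": lambda p: p[::-1],
}

outputs = compare_models("summarize q3 sales", stub_models)
for name, text in outputs.items():
    print(f"{name}: {text}")
```

Keeping every model behind the same callable signature is what makes the comparison easy: adding another model is one more dictionary entry.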

The platform also supports plain language task descriptions, making it accessible to all team members. You simply describe the task in everyday English, and the system handles the technical orchestration behind the scenes.

Prompts.ai includes a FinOps layer that tracks every token used across all models and users, giving you real-time insights into AI expenses. By using its pay-as-you-go TOKN credit system, organizations can cut AI software costs by up to 98% - eliminating recurring subscription fees and charging only for actual usage. Finance teams can pinpoint spending down to specific projects, departments, or outcomes, ensuring transparency and accountability. All this data is consolidated into a single dashboard, making it easier to manage costs while optimizing workflows.
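To make the idea of per-project cost attribution concrete, here is a minimal sketch that rolls token usage up into a cost per project. The `UsageEvent` record, the `PRICE_PER_1K` table, and the prices themselves are assumptions made for illustration. They are not Prompts.ai's data model or actual TOKN rates.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class UsageEvent:
    project: str
    model: str
    tokens: int


# Hypothetical prices in USD per 1,000 tokens; real rates vary by provider.
PRICE_PER_1K: Dict[str, float] = {"model_a": 0.50, "model_b": 2.00}


def cost_by_project(events: Iterable[UsageEvent]) -> Dict[str, float]:
    """Sum the pay-as-you-go cost of each usage event, grouped by project."""
    totals: Dict[str, float] = defaultdict(float)
    for event in events:
        totals[event.project] += event.tokens / 1000 * PRICE_PER_1K[event.model]
    return dict(totals)


events = [
    UsageEvent("marketing", "model_a", 4000),
    UsageEvent("marketing", "model_b", 1000),
    UsageEvent("support", "model_a", 2000),
]

report = cost_by_project(events)
for project, usd in sorted(report.items()):
    print(f"{project}: ${usd:.2f}")
```

The same grouping could be keyed on department or user instead of project, which is the kind of breakdown a finance team would want from a single dashboard.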

Every workflow on Prompts.ai is designed with enterprise-level governance in mind. Built-in compliance controls ensure that sensitive data stays within the organization’s security perimeter. Complete audit trails document each AI interaction, which not only meets regulatory requirements but also provides leadership with confidence that AI operations are secure and within established guidelines.

Platform B provides a user-friendly, drag-and-drop interface that allows team members to design multi-step AI workflows with ease. This visual approach ensures transparency in data flow and processes, making collaboration straightforward for everyone involved.

The platform also includes powerful routing and mapping tools that give teams precise control over how data moves through workflows. If issues arise, built-in error handling and replay features make it simple to identify and resolve problems without needing to start over. This reduces downtime and minimizes the hassle of debugging, keeping your team focused and productive.
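One way to picture error handling with replay is a runner that saves the input to each step, so a failed run can resume at the failing step instead of starting over. The sketch below is a generic illustration under that assumption. `run_workflow`, `replay`, and `StepFailed` are hypothetical names and do not describe Platform B's internals.

```python
from typing import Any, Callable, Dict, List, Tuple

Step = Tuple[str, Callable[[Any], Any]]


class StepFailed(Exception):
    """Raised when a step fails; carries the index needed for replay."""

    def __init__(self, index: int, name: str, cause: Exception):
        super().__init__(f"step {index} ({name}) failed: {cause}")
        self.index = index


def run_workflow(steps: List[Step], payload: Any,
                 checkpoints: Dict[int, Any], start: int = 0) -> Any:
    """Run steps in order from `start`, saving each step's input for replay."""
    data = payload
    for i in range(start, len(steps)):
        name, fn = steps[i]
        checkpoints[i] = data  # input to step i, kept so the step can be re-run
        try:
            data = fn(data)
        except Exception as exc:
            raise StepFailed(i, name, exc) from exc
    return data


def replay(steps: List[Step], checkpoints: Dict[int, Any], failed_index: int) -> Any:
    """Resume from the failed step using its saved input."""
    return run_workflow(steps, checkpoints[failed_index], checkpoints, start=failed_index)


# Demo: the second step fails once, then succeeds on replay.
attempts = {"enrich": 0, "normalize": 0}


def normalize(record: dict) -> dict:
    attempts["normalize"] += 1
    return {**record, "email": record["email"].lower()}


def flaky_enrich(record: dict) -> dict:
    attempts["enrich"] += 1
    if attempts["enrich"] == 1:
        raise RuntimeError("temporary upstream error")
    return {**record, "enriched": True}


steps: List[Step] = [
    ("normalize", normalize),
    ("enrich", flaky_enrich),
    ("tag", lambda r: {**r, "tag": "lead"}),
]

checkpoints: Dict[int, Any] = {}
try:
    result = run_workflow(steps, {"email": "Ada@Example.com"}, checkpoints)
except StepFailed as failure:
    result = replay(steps, checkpoints, failure.index)

print(result)
```

In this demo the `normalize` step runs only once, because the replay starts from the saved input of the step that failed.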

Platform B connects with more than 300 AI tools, including ChatGPT, Claude, Gemini, and Perplexity, alongside over 8,000 applications. This allows you to create workflows that integrate AI capabilities with existing software, such as CRM systems and project management tools. AI-driven steps can handle tasks like content summarization, data analysis, and crafting personalized responses, while task-specific agents take care of repetitive operations.

These integrations operate smoothly while maintaining a strong focus on data security.

Platform B prioritizes data protection by implementing role-based access control (RBAC), environment separation, and secrets management. These measures ensure sensitive data remains secure while enabling safe testing of workflows. Additionally, detailed audit logs track every change, supporting compliance needs and simplifying incident reviews.
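The combination of role-based access control and audit logging can be sketched in a few lines. The roles, permissions, and log fields below are invented for this example and are not taken from Platform B. The point is only that every attempt is checked against a role and recorded whether or not it is allowed.

```python
from datetime import datetime, timezone
from typing import Dict, List, Set

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "viewer": {"view"},
    "editor": {"view", "edit"},
    "admin": {"view", "edit", "publish"},
}

audit_log: List[dict] = []


def attempt(user: str, role: str, action: str, workflow: str) -> bool:
    """Check the action against the role and record the attempt either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "at": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "workflow": workflow,
        "allowed": allowed,
    })
    return allowed


edit_ok = attempt("ana", "editor", "edit", "lead-routing")
publish_ok = attempt("ben", "viewer", "publish", "lead-routing")

for entry in audit_log:
    print(entry["user"], entry["action"], "allowed" if entry["allowed"] else "denied")
```

Logging denied attempts as well as successful ones is what makes the trail useful for incident reviews.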

Platform C connects AI capabilities directly to the apps your team already uses, streamlining workflows and reducing disruptions. Its Model Context Protocol (MCP) acts as a link between AI platforms like ChatGPT or Claude and your work ecosystem, enabling external LLMs to execute over 30,000 actions across virtually any application. This means your team can harness AI-driven tasks without stepping outside their familiar tools.

The platform features a visual workflow builder, allowing teams to see exactly how data moves between apps and AI models. Updates to workflows are shared in real-time, ensuring everyone stays in sync and collaboration remains smooth.

Platform C enhances its collaborative foundation by seamlessly integrating leading AI models into workflows. Supported models include Claude 4.5, GPT 5.2, and Gemini 3 Pro, all accessible directly within the platform - no need for additional API keys. For users who need more flexibility, the platform also supports custom API key integration and self-hosted deployments, providing options for sensitive or specialized operations.

Billing operates on a per-token basis, with spending limits available in $50 or $100 increments. Administrators can set caps to manage costs effectively, preventing unexpected charges while scaling AI usage. By centralizing access to models and simplifying cost management, Platform C makes it easier for teams to collaborate efficiently.
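A spending cap on per-token billing amounts to refusing any charge that would push the running total past the limit. The sketch below shows that check in isolation. The `TokenBudget` class and the price of $0.02 per 1,000 tokens are assumptions for illustration, not Platform C's billing logic or rates. Only the $50 cap mirrors the increments mentioned above.

```python
from decimal import Decimal


class SpendCapExceeded(Exception):
    """Raised when a charge would push spending past the configured cap."""


class TokenBudget:
    def __init__(self, cap_usd: str, price_per_1k_tokens: str):
        # Decimal values built from strings avoid float rounding in money math.
        self.cap = Decimal(cap_usd)
        self.price = Decimal(price_per_1k_tokens)
        self.spent = Decimal("0")

    def charge(self, tokens: int) -> Decimal:
        """Record the cost of `tokens`, or refuse if it would exceed the cap."""
        cost = self.price * tokens / 1000
        if self.spent + cost > self.cap:
            raise SpendCapExceeded(
                f"charge of ${cost} would exceed the ${self.cap} cap "
                f"(already spent ${self.spent})"
            )
        self.spent += cost
        return cost


# Hypothetical numbers: a $50 cap and $0.02 per 1,000 tokens.
budget = TokenBudget(cap_usd="50", price_per_1k_tokens="0.02")
budget.charge(2_000_000)  # costs $40, within the cap

try:
    budget.charge(1_000_000)  # would cost $20 and bring the total to $60
    blocked = False
except SpendCapExceeded as exc:
    blocked = True
    print(exc)
```

Because the check happens before the total is updated, a rejected charge leaves the recorded spend unchanged.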

To ensure security and compliance, Platform C adheres to the Security Development Lifecycle (SDL) framework. Administrators can enforce DLP policies to manage feature and connector access. For organizations with strict data controls, the platform offers Customer-managed encryption keys (CMK) and Customer Lockbox, providing enhanced data protection. Additionally, audit logs integrate with tools like Microsoft Purview and Microsoft Sentinel, enabling thorough compliance tracking. These robust security measures allow teams to innovate confidently, knowing their data is secure.

This section provides a concise comparison of the advantages and trade-offs of three platforms, highlighting their suitability for different AI workflow needs.

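Each platform has distinct strengths and challenges that affect how well it supports collaborative AI workflows. Prompts.ai stands out by bringing more than 35 leading models, such as GPT-5, Claude, and Gemini, into a single platform with built-in cost controls. This makes it a strong choice for Fortune 500 companies, creative agencies, and research labs that handle sensitive data. The Prompt Engineer Certification program and expert-designed workflows simplify onboarding. However, its cloud-only setup may not suit organizations that need a self-hosted option.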

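Platform B simplifies automation for non-technical users, with more than 7,000 app integrations and an intuitive interface. For example, SweepBright, a European real estate CRM, processes 27 million tasks per month on the enterprise tier, which demonstrates the platform's scalability. However, its activity-based pricing can become expensive for iterative AI workflows, and lower-tier plans (typically capped at around 25 users) may limit collaboration. Although it maintains SOC 2 and GDPR compliance, its reliance on US-based servers can pose challenges for organizations with strict data residency requirements.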

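Platform C's execution-based pricing model suits technical teams: it charges per complete workflow run, which is cost-effective for high-volume processes. For example, Vodafone saved roughly $2.7 million by adopting the platform for critical automation tasks. Its self-hosted option, available under the MIT license, ensures full data ownership and removes barriers to collaboration. However, this approach requires technical expertise and self-managed security infrastructure. Users who prefer not to self-host can use the cloud version, which includes managed compliance.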

The table below summarizes each platform's main features, strengths, and drawbacks:

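The best platform depends on your team's technical expertise and workflow needs. For organizations seeking cost transparency and flexibility across AI models, Prompts.ai offers a comprehensive solution for streamlined AI management. Non-technical teams that want easy automation with familiar tools will appreciate Platform B's simplicity. Meanwhile, technical teams that handle complex workflows and strict data requirements can take advantage of Platform C's self-hosted option and execution-based pricing, as Vodafone's substantial savings illustrate.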

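Choosing the right AI workflow platform depends on factors such as team size, technical expertise, and collaboration requirements. For enterprises managing sensitive data at scale, Prompts.ai offers a centralized solution that integrates more than 35 leading models with built-in cost controls and enterprise-grade governance. Its flexible pay-as-you-go TOKN credit system removes recurring subscription fees, while the Prompt Engineer Certification program helps teams adapt their workflows quickly. Companies such as Okta and Remote.com have significantly reduced support escalation times and automated ticket resolution, showing how Prompts.ai can improve operational efficiency across industries.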

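Beyond enterprise applications, Prompts.ai supports creative teams by preventing tool sprawl and keeping brand voice and quality standards consistent. One notable example is FREITAG's January 2026 collaboration with Intelligent Systems Association, a Miro solutions partner, which cut time and resource costs by 50% and turned workshop evaluations that once took weeks into a matter of days. With human-in-the-loop checkpoints, creative teams can keep control while automating repetitive tasks.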

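Another success story comes from Vendasta, where Marketing Operations Specialist Jacob Sears used Prompts.ai to build an AI-driven lead enrichment system. The initiative recovered $1 million in potential revenue and reclaimed 282 working days per year. Sears shared: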

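“Because of automation, we've seen about a $1 million increase in potential revenue. Our reps can now focus purely on closing deals instead of admin work.”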

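Ultimately, the best platform is the one that matches your team's current capabilities while supporting future growth. Prompts.ai offers a flexible, transparent solution for organizations that use multiple AI models. Whether your focus is automating support tickets, enriching leads, or streamlining creative workflows, the platform reduces friction and improves productivity. By simplifying collaboration and keeping teams aligned, Prompts.ai delivers a cohesive AI experience that keeps teams efficient and adaptable.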

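Prompts.ai offers a pay-as-you-go pricing model, so teams pay only for the resources they actually use. This flexible setup lets businesses scale their AI operations up or down as needed and avoid spending on unused capacity.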

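The platform also includes enterprise-grade governance tools and real-time cost tracking, which give teams full visibility into spending. These features make it easier to control budgets, contain costs, and stay productive without overspending. It is an effective way to manage AI workflows while keeping finances on track.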

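Prompts.ai offers a set of collaboration tools tailored to creative teams navigating AI workflows. By integrating more than 35 large language models and AI tools into one secure platform, it lets teams manage prompts, automate tasks, and organize workflows effectively, eliminating the chaos of “prompt sprawl.”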

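Key features include multi-step prompt chaining, which lets teams link prompts in a logical sequence to handle complex tasks. The platform also provides real-time cost tracking to help teams manage budgets precisely, along with enterprise-grade security to protect sensitive data. These tools are designed to improve collaboration, raise productivity, and simplify AI-driven projects for creative teams.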
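Multi-step prompt chaining can be pictured as feeding each step's output into the next prompt. The sketch below shows that pattern with a stub model so it runs offline. `run_chain`, the `{input}` placeholder convention, and `echo_model` are assumptions made for this example rather than Prompts.ai features.

```python
from typing import Callable, List


def run_chain(templates: List[str], model: Callable[[str], str],
              initial_input: str) -> List[str]:
    """Fill each template's {input} slot with the previous step's output."""
    outputs: List[str] = []
    current = initial_input
    for template in templates:
        prompt = template.format(input=current)
        current = model(prompt)
        outputs.append(current)
    return outputs


def echo_model(prompt: str) -> str:
    # Stub standing in for a real model call; it wraps the prompt in brackets.
    return f"[{prompt}]"


chain = ["Outline: {input}", "Draft from: {input}"]
chain_outputs = run_chain(chain, echo_model, "spring campaign")
for step_number, text in enumerate(chain_outputs, start=1):
    print(step_number, text)
```

Returning every intermediate output, not only the last one, makes it easier for a team to review where in the chain a result went wrong.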

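Prompts.ai brings the capabilities of multiple large language models (LLMs) into one streamlined platform, making them easier to manage and integrate into your workflows. With access to more than 35 AI models, you can switch between LLMs to suit your needs while controlling costs through a flexible pay-as-you-go system powered by TOKN credits.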

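The platform is designed with enterprise-grade security and governance in mind, which helps ensure compliance with organizational policies. Its tools streamline AI-driven workflows, improve productivity, automate repetitive tasks, and minimize manual work. For teams that want to use multiple LLMs for collaborative, efficient projects, Prompts.ai is a strong choice.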