AI workflows have transformed how developers build, deploy, and manage applications: they simplify complex processes, unify tools, and can lower costs. In 2025, a key challenge is managing "tool sprawl," as organizations juggle multiple AI services. Centralized platforms like Prompts.ai address this with a single interface to 35+ models, real-time cost tracking, and automated workflows. Open-source frameworks like TensorFlow and PyTorch offer deep customization, while Hugging Face excels at transformer-based models and APIs. Used well, these tools raise productivity and help AI projects scale, and some organizations using a unified platform report cost reductions of up to 98%.

AI workflow automation is evolving, combining tools, human oversight, and real-time optimization to scale AI effectively.

These platforms tackle the challenges of managing multiple tools and integrating them smoothly, simplifying AI projects from initial experimentation to full-scale production. By unifying access to models and specialized features, they help developers streamline even the most complex workflows. From all-in-one platforms to open-source frameworks, developers have a wide range of options for tailoring their AI projects.

Prompts.ai tackles the problem of tool sprawl by consolidating access to over 35 leading large language models - including GPT-5, Claude, LLaMA, and Gemini - within a single, secure platform. This unified interface eliminates the need for multiple disconnected tools, simplifying workflows and boosting efficiency.

Its standout features include TOKN credits and FinOps capabilities that enable real-time cost tracking. Organizations using these tools have reported cost reductions of 95-98%, with spending that stays transparent and predictable.

The platform also emphasizes workflow automation, letting teams build scalable, repeatable processes. With unlimited workspaces, centralized access controls, and detailed audit trails, Prompts.ai helps organizations manage their AI adoption effectively, even as usage grows.

Security is a top priority: compliance frameworks such as SOC 2 Type II, HIPAA, and GDPR are built into the platform, which makes it a reliable choice for industries that handle sensitive or regulated data.

For developers seeking deep customization, TensorFlow and PyTorch remain essential tools. These open-source frameworks provide unmatched control over model architecture and training, making them ideal for projects that demand custom solutions or advanced neural network designs.

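TensorFlow excels in production settings, offering tools for model serving, mobile deployment, and large-scale distributed training. Since TensorFlow 2 it executes eagerly by default, but `tf.function` can trace Python code into an optimized computation graph. That graph compilation improves performance and makes models straightforward to export and serve, which matters in production environments where reliability is critical.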

PyTorch, on the other hand, builds its computation graph dynamically as the code runs (define-by-run), which simplifies experimentation and debugging. Because operations execute eagerly, developers can change a network on the fly and inspect intermediate values with ordinary Python tools, making PyTorch particularly useful for research and rapid prototyping. This flexibility has made it a favorite in academic and experimental settings.
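To make "dynamic computation graph" concrete, here is a minimal pure-Python sketch of define-by-run automatic differentiation, the idea behind PyTorch's autograd. It is not PyTorch code: `Value` is a hypothetical toy class that records each operation as it executes, so ordinary Python control flow (the `if` in `model`) decides the graph's shape on every call.

```python
class Value:
    """A scalar that records the operations applied to it (define-by-run)."""

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        self._backward_fn = None  # pushes self.grad back to the parents

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))

        def backward_fn():
            self.grad += out.grad
            other.grad += out.grad

        out._backward_fn = backward_fn
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))

        def backward_fn():
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward_fn = backward_fn
        return out

    def backward(self):
        # Topologically sort the graph that was recorded during the forward pass.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            if node._backward_fn is not None:
                node._backward_fn()


def model(x, use_square):
    # Plain Python branching produces a different graph from one call to the next.
    if use_square:
        return x * x + 3
    return x * 2 + 3
```

In PyTorch the same idea applies to tensors: the graph recorded during the forward pass is traversed when you call `.backward()` on the result, and it is rebuilt on every call.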

Both frameworks benefit from strong community support and a wealth of pre-built models, which can significantly reduce development time. While their learning curves are steeper than those of managed platforms like Prompts.ai, they offer unparalleled flexibility for developers working on proprietary models or with unique data needs.

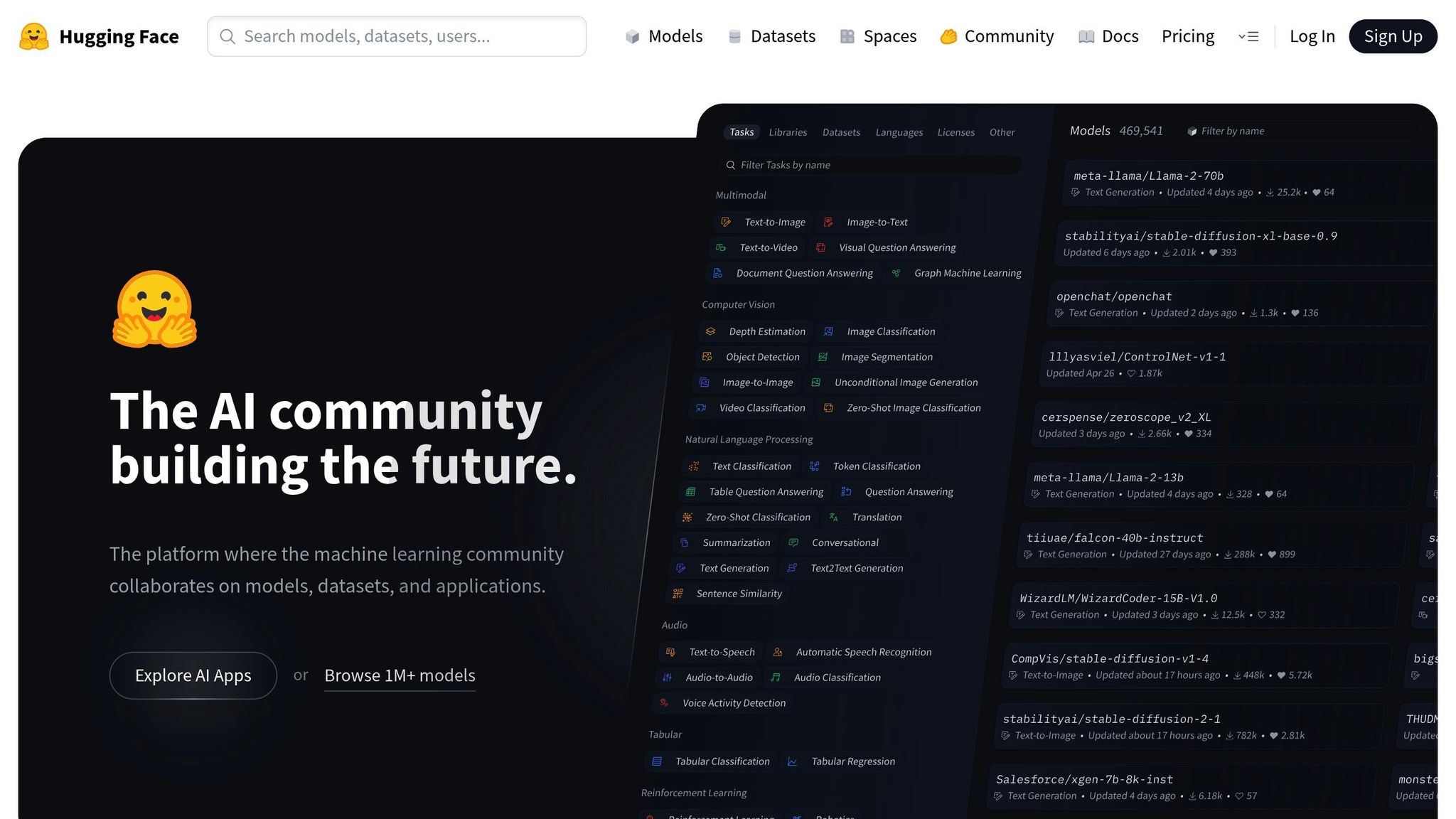

Hugging Face has become a leader in transformer-based models and natural language processing workflows. As of May 2025, it holds a 13.3% share of the AI development market, and its `datasets` library saw 17 million monthly PyPI downloads in 2024.

The Hugging Face Hub, used together with the Transformers library, gives developers access to thousands of pre-trained models that can be integrated into a wide range of workflows. Hugging Face's API-first design further simplifies the process, letting developers use advanced NLP capabilities without in-depth expertise in model training or fine-tuning.

A notable collaboration with Google Cloud highlights the platform’s commitment to streamlining transformer-based model deployment. This partnership provides optimized infrastructure, making it easier for developers to combine open models with high-performance cloud solutions.

Hugging Face also offers Workflow APIs, which enable integration with larger orchestration systems. This feature is particularly valuable for building comprehensive AI applications that require multiple models to work together. The platform’s community-driven approach ensures that new models and techniques are quickly available, often within days of appearing in research papers. This rapid innovation cycle allows developers to stay ahead in the fast-moving AI landscape without starting from scratch.

Centralized orchestration brings tangible benefits to AI workflows, turning manual, repetitive tasks into efficient, scalable processes. The following use cases, covering data handling, coding, and model evaluation, show how integrated workflows improve productivity and deliver measurable gains across AI development.

Data preprocessing is often one of the most labor-intensive stages in data science. Automated workflows simplify this by cleaning data, normalizing formats, and extracting features consistently. These systems can identify and address missing values, outliers, and formatting issues in real time, reducing manual intervention while ensuring data quality.
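A minimal, standard-library sketch of the cleaning steps described above, assuming a single numeric column whose values may arrive as strings with stray whitespace, thousands separators, blanks, or `None`. It normalizes formats, imputes missing values with the median, and clips outliers to Tukey's 1.5 × IQR fences. The function name and these particular choices are illustrative assumptions, not any specific tool's behavior.

```python
import statistics


def clean_column(raw):
    """Parse, impute, and clip one numeric column."""
    # 1. Normalize formats: strip whitespace and thousands separators; blanks become missing.
    parsed = []
    for item in raw:
        if item is None:
            parsed.append(None)
            continue
        text = str(item).strip().replace(",", "")
        parsed.append(float(text) if text else None)

    present = [v for v in parsed if v is not None]
    if len(present) < 2:
        raise ValueError("need at least two usable values")

    # 2. Impute missing values with the median of the observed values.
    median = statistics.median(present)
    filled = [median if v is None else v for v in parsed]

    # 3. Clip outliers to Tukey's fences (1.5 * IQR beyond the quartiles).
    q1, _, q3 = statistics.quantiles(present, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return [min(max(v, low), high) for v in filled]
```

Clipping (rather than dropping) keeps row counts stable for downstream joins; whether to clip, drop, or flag outliers is a per-dataset decision.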

For instance, automated pipelines process large datasets using predefined validation rules, making it easier to detect and fix anomalies. Feature engineering workflows take this further by automatically generating new variables from existing data, evaluating their predictive value, and selecting the most relevant features for model training. This not only speeds up the process but also ensures reproducibility, which is critical for maintaining and updating models over time.
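Predefined validation rules can be expressed as data rather than scattered `if` statements. In this sketch, which assumes records arrive as dictionaries, each rule is a hypothetical (name, field, check) triple, and the validator reports every violation so the pipeline can quarantine or repair a record instead of failing silently.

```python
from typing import Any, Callable, NamedTuple


class Rule(NamedTuple):
    name: str
    field: str
    check: Callable[[Any], bool]


# Hypothetical rules for a customer-records feed.
RULES = [
    Rule("age_present", "age", lambda v: v is not None),
    Rule("age_in_range", "age", lambda v: v is None or 0 <= v <= 120),
    Rule("email_has_at", "email", lambda v: isinstance(v, str) and "@" in v),
]


def validate(record: dict, rules=RULES) -> list[str]:
    """Return the names of every rule the record violates (empty list means valid)."""
    return [rule.name for rule in rules if not rule.check(record.get(rule.field))]


def split_valid(records, rules=RULES):
    """Partition records into (valid, rejected); rejected entries keep their violations."""
    valid, rejected = [], []
    for record in records:
        problems = validate(record, rules)
        if problems:
            rejected.append((record, problems))
        else:
            valid.append(record)
    return valid, rejected
```

Because the rules are plain data, the same list can be versioned, reviewed, and reused across pipelines, which supports the reproducibility point above.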

Real-time validation is especially useful when working with streaming data sources. These workflows continuously monitor data quality, flag anomalies, and trigger corrective actions as needed. This proactive approach prevents downstream issues and preserves the integrity of the entire data pipeline.
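For streaming sources, a monitor has to update its statistics one reading at a time. This sketch uses Welford's online algorithm for the running mean and variance and flags readings whose z-score exceeds a threshold. The threshold, the warm-up length, and the choice to keep flagged values out of the baseline are assumptions, not a standard.

```python
import math


class StreamMonitor:
    """Flags readings far from the running mean, using Welford's online algorithm."""

    def __init__(self, threshold=3.0, warmup=5):
        if warmup < 2:
            raise ValueError("warmup must be at least 2 to estimate variance")
        self.threshold = threshold  # z-score above which a reading is flagged
        self.warmup = warmup        # readings to observe before flagging anything
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0              # running sum of squared deviations from the mean

    def observe(self, x):
        """Return True if x looks anomalous; only normal readings update the baseline."""
        if self.count >= self.warmup:
            std = math.sqrt(self._m2 / (self.count - 1))  # sample standard deviation
            if std > 0 and abs(x - self.mean) / std > self.threshold:
                return True  # flag it and keep it out of the statistics
        # Welford update: numerically stable mean and variance in O(1) memory.
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (x - self.mean)
        return False
```

A flagged reading is where a pipeline would trigger its corrective action, such as routing the record to a dead-letter queue or paging an on-call engineer.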

By automating these processes, data scientists can significantly cut down on preprocessing time, allowing them to focus more on model development and analysis.

The orchestration of large language models (LLMs) transforms code generation, testing, and documentation into streamlined workflows. Advanced models like GPT-5 and Claude can generate boilerplate code, API integrations, and even complex algorithms based on natural language prompts. Developers can design workflows to produce code in multiple programming languages, compare outputs, and select the best fit for their specific needs.
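One way to "compare outputs and select the best fit" is to treat each model as a candidate generator and let a test suite choose the winner. This sketch assumes each model is wrapped as a plain Python callable that returns source code; the stub generators stand in for real LLM calls (they are hypothetical, and no provider API is shown), and candidates are ranked by how many test cases they pass.

```python
from typing import Callable


def score_candidate(source: str, func_name: str, cases) -> int:
    """Count the test cases a generated function passes.

    WARNING: exec() runs untrusted code; a real pipeline should use a sandbox.
    """
    namespace: dict = {}
    try:
        exec(source, namespace)
        func = namespace[func_name]
    except Exception:
        return 0  # code that fails to compile or define the function scores zero
    passed = 0
    for args, expected in cases:
        try:
            if func(*args) == expected:
                passed += 1
        except Exception:
            pass  # a crash on one case counts as a failure for that case
    return passed


def pick_best(generators: dict[str, Callable[[str], str]], prompt: str, func_name: str, cases):
    """Ask every model for code, score each candidate, and return (model, source, score)."""
    results = []
    for model, generate in generators.items():
        source = generate(prompt)
        results.append((model, source, score_candidate(source, func_name, cases)))
    return max(results, key=lambda r: r[2])


# Stub "models" standing in for real LLM calls.
generators = {
    "model-a": lambda prompt: "def add(a, b):\n    return a - b\n",
    "model-b": lambda prompt: "def add(a, b):\n    return a + b\n",
}
cases = [((1, 2), 3), ((0, 0), 0), ((-1, 1), 0)]
```

Running `pick_best(generators, "Write add(a, b)", "add", cases)` selects `model-b`, the only candidate that passes all three cases.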

These workflows also automate documentation by generating API references and inline comments, keeping them consistent across projects. As code evolves, the documentation can be regenerated automatically, saving developers time and keeping it accurate.

Quality assurance processes benefit as well. LLMs can generate test cases, identify bugs, and suggest improvements while analyzing code for security vulnerabilities, performance bottlenecks, and adherence to standards. Catching these issues early in the development cycle reduces errors and enhances overall code quality.

Unified platforms make these workflows seamless, enabling developers to integrate LLM capabilities without the hassle of managing multiple tools or interfaces.

Choosing the right model for a specific task often involves comparing multiple options. Automated workflows simplify this process by testing various models against the same datasets and evaluation criteria to determine the best fit.

For natural language processing (NLP) tasks like sentiment analysis, text classification, or named entity recognition, workflows evaluate models based on accuracy, processing speed, and resource usage. Developers can generate detailed performance reports, helping them identify the most suitable model for their needs.
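A model-comparison workflow is, at its core, a loop that runs every candidate on the same labeled data and records the same metrics. This sketch measures accuracy and average latency for two toy sentiment classifiers that stand in for real models or API calls (both are hypothetical). Resource usage could be added the same way, for example with the standard library's `tracemalloc`.

```python
import time
from typing import Callable, Sequence


def evaluate(models: dict[str, Callable[[str], str]], dataset: Sequence[tuple[str, str]]):
    """Run every model on the same labeled dataset and report accuracy and latency."""
    report = {}
    for name, predict in models.items():
        correct = 0
        start = time.perf_counter()
        for text, label in dataset:
            if predict(text) == label:
                correct += 1
        elapsed = time.perf_counter() - start
        report[name] = {
            "accuracy": correct / len(dataset),
            "avg_latency_ms": 1000 * elapsed / len(dataset),
        }
    return report


# Toy sentiment "models" (hypothetical stand-ins for real classifiers or API calls).
def keyword_model(text):
    return "positive" if "good" in text.lower() else "negative"


def always_positive(text):
    return "positive"


dataset = [
    ("Good product", "positive"),
    ("Really good support", "positive"),
    ("Terrible battery", "negative"),
    ("Not worth it", "negative"),
]
```

Because every model sees identical inputs and metrics, the resulting report can be sorted directly, for example `max(report, key=lambda n: report[n]["accuracy"])`.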

In computer vision tasks, such as image classification, object detection, or image generation, similar workflows evaluate models on large datasets. They report accuracy, processing time, and computational requirements, so teams can make an informed choice.

When scaling these workflows, balancing performance and cost becomes a priority. Automated comparisons using standardized datasets and preprocessing steps ensure consistent results. Uniform evaluation metrics and benchmarks minimize bias, offering clear, actionable insights for selecting the optimal model.

Unified platforms further simplify this process by enabling side-by-side comparisons through a single interface. Instead of juggling separate API integrations, developers can evaluate multiple models simultaneously, saving both time and effort while ensuring a systematic approach to model selection.

Transitioning AI projects from the experimental phase to fully operational workflows demands a thoughtful approach to refinement. Teams that excel in scaling AI operations concentrate on three key areas: centralized orchestration, financial transparency, and workflow standardization. Together, these elements help eliminate inefficiencies, cut costs, and establish practices that can grow alongside organizational needs.

Fragmented tools can slow down AI development. When teams are forced to switch between various interfaces, manage multiple API keys, and deal with inconsistent billing systems, productivity takes a hit, and expenses climb. Unified orchestration platforms tackle these issues by bringing access to multiple AI models under one roof.

Take Prompts.ai as an example - it unifies access to various AI models through a secure, single interface. This eliminates the hassle of maintaining separate integrations for each model provider, saving time and reducing complexity.
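A provider-agnostic interface is what lets one platform front many models. This sketch is not Prompts.ai's API; it assumes each vendor is wrapped in an adapter exposing a hypothetical `complete(prompt)` method. `StubProvider` stands in for a real SDK wrapper, and `ModelHub` routes calls by model name.

```python
from dataclasses import dataclass
from typing import Protocol


class ChatModel(Protocol):
    """Common interface every provider adapter implements."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class StubProvider:
    """Hypothetical adapter; a real one would wrap a vendor SDK or HTTP API."""

    vendor: str

    def complete(self, prompt: str) -> str:
        return f"[{self.vendor}] {prompt}"


class ModelHub:
    """Single entry point: callers pick a model by name, not by vendor SDK."""

    def __init__(self):
        self._models: dict[str, ChatModel] = {}

    def register(self, name: str, model: ChatModel) -> None:
        self._models[name] = model

    def complete(self, name: str, prompt: str) -> str:
        if name not in self._models:
            raise KeyError(f"unknown model: {name}")
        return self._models[name].complete(prompt)

    def available(self) -> list[str]:
        return sorted(self._models)
```

Adding a provider then means writing one adapter and calling `register`, while application code keeps calling `hub.complete(name, prompt)` unchanged.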

Beyond convenience, centralized orchestration enhances governance. With a unified platform, organizations can enforce consistent security policies and compliance measures across all AI activities. Instead of relying on individual team members to follow best practices across disparate tools, enterprise-grade controls can be uniformly applied.

Version control also becomes far simpler. Teams can monitor changes, revert problematic updates, and maintain consistent deployment practices without juggling multiple platforms. This streamlined approach is particularly valuable for organizations handling sensitive data or operating in regulated industries.

Managing access is another area where unified platforms shine. Instead of creating and managing accounts across numerous AI services, administrators can oversee permissions, track usage, and enforce policies from one central location. This not only reduces security risks but also provides clear visibility into how AI resources are being utilized, paving the way for better cost management.
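Central access management can be sketched as one policy object that maps roles to permitted models and counts usage per user. The role and model names are hypothetical, and a production system would persist this state and back it with an identity provider.

```python
from collections import Counter


class AccessPolicy:
    """Central role-based permissions plus per-user usage counts."""

    def __init__(self, role_models: dict[str, set[str]]):
        self.role_models = role_models  # role -> models that role may call
        self.user_roles: dict[str, str] = {}
        self.usage = Counter()          # (user, model) -> number of allowed calls

    def assign(self, user: str, role: str) -> None:
        if role not in self.role_models:
            raise ValueError(f"unknown role: {role}")
        self.user_roles[user] = role

    def authorize(self, user: str, model: str) -> bool:
        """Check permission and record the call if it is allowed."""
        role = self.user_roles.get(user)
        allowed = role is not None and model in self.role_models[role]
        if allowed:
            self.usage[(user, model)] += 1
        return allowed
```

Because every request passes through `authorize`, the same object answers both "may this user call this model?" and "who is using what?"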

Applying financial operations (FinOps) principles to AI shifts cost management from passive budget tracking to proactive planning. Traditional methods often lack real-time insight into costs, whereas modern FinOps tools provide immediate visibility into spending patterns.

Today’s FinOps solutions allow teams to monitor token-level usage, offering granular insights into costs. This level of detail helps identify expensive operations, optimize prompts for efficiency, and make informed decisions about which models to use based on both cost and performance.
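Token-level cost tracking comes down to multiplying input and output token counts by per-model rates and aggregating the results by workflow. The prices below are placeholders, not any provider's actual pricing, and `CostLedger` is a hypothetical helper.

```python
from collections import defaultdict

# Hypothetical prices in USD per 1M tokens; real rates vary by provider and change often.
PRICES = {
    "premium-model": {"input": 5.00, "output": 15.00},
    "economy-model": {"input": 0.15, "output": 0.60},
}


class CostLedger:
    """Accumulates token-level spend per (workflow, model) pair."""

    def __init__(self, prices=PRICES):
        self.prices = prices
        self.spend = defaultdict(float)  # (workflow, model) -> USD

    def record(self, workflow: str, model: str, input_tokens: int, output_tokens: int) -> float:
        rate = self.prices[model]
        cost = (input_tokens * rate["input"] + output_tokens * rate["output"]) / 1_000_000
        self.spend[(workflow, model)] += cost
        return cost

    def top_costs(self, n=3):
        """Most expensive (workflow, model) pairs, highest first."""
        return sorted(self.spend.items(), key=lambda kv: kv[1], reverse=True)[:n]
```

With these placeholder rates, a 2,000-token prompt with a 500-token answer on `premium-model` costs (2,000 × 5 + 500 × 15) / 1,000,000 = $0.0175, which is the kind of per-call figure that exposes expensive operations.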

Setting budget limits for projects is another effective strategy. Automated alerts can notify stakeholders when spending approaches predefined thresholds, preventing unexpected overruns.
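A budget limit with automated alerts might look like this minimal sketch. It assumes spend is reported incrementally and returns alert messages rather than sending them; wiring them to email or chat is left out. The 50/80/100% thresholds are illustrative defaults.

```python
class Budget:
    """Tracks spend against a limit and alerts the first time each threshold is crossed."""

    def __init__(self, limit: float, thresholds=(0.5, 0.8, 1.0)):
        self.limit = limit
        self.thresholds = sorted(thresholds)
        self.spent = 0.0
        self._fired: set[float] = set()  # thresholds that have already alerted

    def add(self, amount: float) -> list[str]:
        """Record spend and return any new alert messages."""
        self.spent += amount
        alerts = []
        for t in self.thresholds:
            if self.spent >= t * self.limit and t not in self._fired:
                self._fired.add(t)
                alerts.append(f"{t:.0%} of budget used (${self.spent:.2f} of ${self.limit:.2f})")
        return alerts

    @property
    def exhausted(self) -> bool:
        return self.spent >= self.limit
```

Firing each threshold only once keeps stakeholders informed without flooding them with repeated alerts.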

Advanced FinOps practices also tie AI expenses directly to business outcomes. By tracking which workflows deliver the best return on investment, organizations can allocate resources more effectively. For instance, a customer service automation that reduces ticket volume might justify higher spending compared to a less impactful experimental project.

Cost optimization algorithms play a crucial role in managing expenses. These systems analyze usage patterns and recommend ways to save, such as switching to more economical models for routine tasks while reserving premium models for complex operations. They can also identify opportunities to batch similar requests, which reduces per-operation costs through smarter API usage.
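The two savings tactics in this paragraph, routing routine work to cheaper models and batching similar requests, are sketched below. The keyword-and-length heuristic and the model names are hypothetical placeholders; real routers typically rely on a classifier or measured quality scores. On Python 3.12+, `itertools.batched` offers similar batching (it yields tuples).

```python
from typing import Iterable, Iterator

# Hypothetical model tiers; the heuristic below is illustrative, not a tuned policy.
ECONOMY, PREMIUM = "economy-model", "premium-model"
COMPLEX_HINTS = ("analyze", "prove", "design", "multi-step")


def choose_model(task: str, max_economy_words: int = 200) -> str:
    """Send short, routine tasks to the cheaper model; reserve the premium one for complex work."""
    text = task.lower()
    if len(text.split()) > max_economy_words or any(h in text for h in COMPLEX_HINTS):
        return PREMIUM
    return ECONOMY


def batched(items: Iterable[str], size: int) -> Iterator[list[str]]:
    """Group requests so that one API call can carry several of them."""
    if size < 1:
        raise ValueError("size must be at least 1")
    batch = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch  # final partial batch
```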

Prompts.ai simplifies cost management with its pay-as-you-go TOKN credits, eliminating recurring subscription fees and aligning expenses with actual usage. This approach ensures financial clarity, making it easier to scale AI workflows across teams.

Combining centralized control with clear cost insights provides the foundation for scaling AI workflows across multiple projects and teams. Reusable workflow templates are at the heart of this scalability. Instead of building custom solutions from scratch, organizations can rely on standardized patterns that adapt to various use cases, covering tasks like data preprocessing, model evaluation, and result formatting.

The best templates are flexible, featuring adjustable parameters that allow for easy customization. For example, a content generation workflow might include options for tone, length, and target audience, making it suitable for anything from blog posts to social media updates and email campaigns.
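A parameterized template can be as small as a `string.Template` plus a dataclass of defaults. The fields mirror the tone, length, and audience options mentioned above; `ContentRequest` and the prompt wording are illustrative assumptions.

```python
from dataclasses import dataclass
from string import Template

# One reusable prompt template; every $placeholder is an adjustable parameter.
CONTENT_TEMPLATE = Template(
    "Content type: $kind. Topic: $topic. Audience: $audience. "
    "Tone: $tone. Maximum length: $length words."
)


@dataclass(frozen=True)
class ContentRequest:
    topic: str
    kind: str = "blog post"
    tone: str = "friendly"
    length: int = 600
    audience: str = "developers"

    def render(self) -> str:
        # substitute() raises KeyError if any placeholder is left unfilled.
        return CONTENT_TEMPLATE.substitute(
            kind=self.kind,
            topic=self.topic,
            audience=self.audience,
            tone=self.tone,
            length=self.length,
        )
```

Each channel then becomes a different set of arguments rather than a new workflow, for example `ContentRequest("product launch", kind="email", tone="formal", length=200).render()`.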

Workflow libraries further speed up development by offering pre-built components for common tasks. Developers can skip writing custom code for things like API rate limiting or error handling and instead use tested components that handle these processes automatically.
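Rate limiting and error handling are the classic reusable components. Below is a minimal sketch of both: a token-bucket limiter and a retry wrapper with exponential backoff and jitter. Neither is taken from a specific workflow library; the clock and sleep functions are injectable so the components can be tested without real delays.

```python
import random
import time


class TokenBucket:
    """Allows `rate` calls per second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def try_acquire(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def with_retries(call, attempts=4, base_delay=0.5, sleep=time.sleep,
                 retry_on=(ConnectionError, TimeoutError)):
    """Retry transient failures with exponential backoff plus jitter; re-raise the last error."""
    for attempt in range(attempts):
        try:
            return call()
        except retry_on:
            if attempt == attempts - 1:
                raise
            # Delay doubles each attempt; jitter spreads out simultaneous retries.
            sleep(base_delay * (2 ** attempt) * random.uniform(0.5, 1.5))
```

Errors outside `retry_on` (for example, a `ValueError` from bad input) propagate immediately, since retrying them would only waste calls.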

Standardized templates also make collaboration easier, because teams build on the same components. Combined with automated scaling, they keep workflows reliable as demand grows, while intelligent queuing absorbs traffic spikes without degrading performance.

As workflows evolve, version management becomes essential. Semantic versioning tells teams which updates preserve backward compatibility and which introduce breaking changes. Automated testing and rollback features provide additional safeguards, minimizing disruptions when issues arise.
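Under semantic versioning (MAJOR.MINOR.PATCH), a major bump signals breaking changes, while minor and patch releases should remain backward compatible. This simplified sketch resolves which published workflow version a caller pinned to a given version can safely use; it ignores pre-release tags and the special treatment of 0.x versions in the SemVer specification.

```python
from typing import NamedTuple, Optional


class Version(NamedTuple):
    major: int
    minor: int
    patch: int

    @classmethod
    def parse(cls, text: str) -> "Version":
        major, minor, patch = (int(part) for part in text.split("."))
        return cls(major, minor, patch)


def is_compatible(required: str, available: str) -> bool:
    """Same major version and not older than required means no breaking changes."""
    req, avail = Version.parse(required), Version.parse(available)
    return avail.major == req.major and avail >= req  # tuple comparison is numeric


def latest_compatible(required: str, published: list[str]) -> Optional[str]:
    """Pick the newest published version usable by callers pinned to `required`."""
    candidates = [v for v in published if is_compatible(required, v)]
    return max(candidates, key=Version.parse, default=None)
```

Comparing parsed integers rather than strings matters: `1.10.0` correctly ranks above `1.2.3`.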

Performance monitoring is another key component of scaling. Tracking metrics like execution times, success rates, and resource consumption helps identify bottlenecks before they affect users. This data guides optimization efforts, ensuring improvements focus on areas with the greatest impact.
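Collecting execution time and success rate per workflow step can start with a decorator. This is a minimal in-process sketch with a hypothetical global `METRICS` store; a real deployment would export these numbers to a monitoring system instead.

```python
import functools
import statistics
import time
from collections import defaultdict

# step name -> list of (duration_seconds, succeeded)
METRICS = defaultdict(list)


def monitored(step_name):
    """Decorator that records execution time and success or failure for a workflow step."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            ok = False
            try:
                result = func(*args, **kwargs)
                ok = True
                return result
            finally:
                # Runs on success and on exceptions, so failures are counted too.
                METRICS[step_name].append((time.perf_counter() - start, ok))
        return wrapper
    return decorator


def summary(step_name):
    """Aggregate run count, success rate, and median duration for one step."""
    runs = METRICS[step_name]
    durations = [d for d, _ in runs]
    return {
        "runs": len(runs),
        "success_rate": sum(ok for _, ok in runs) / len(runs),
        "median_ms": 1000 * statistics.median(durations),
    }
```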

Lastly, community-driven workflow sharing accelerates innovation. When one team develops an effective solution, others can adapt and build upon it, amplifying the value of individual efforts across the organization. This collaborative approach not only saves time but also drives continuous improvement in AI development processes.

AI workflow automation is moving beyond simple model integrations, evolving into intelligent orchestration systems designed to meet the shifting needs of businesses. This transition - from managing scattered tools to using unified platforms - marks a significant step in how AI solutions are implemented.

One key development is the rise of human-in-the-loop systems, which combine automated processes with human oversight. These workflows efficiently manage routine tasks while escalating more complex issues to human operators, ensuring both quality and accountability. Features like approval workflows and escalation paths make it possible to scale operations without compromising oversight or efficiency.
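The escalation logic in a human-in-the-loop workflow often reduces to a routing rule. In this sketch, which assumes the model returns a label and a confidence score, confident results in non-sensitive categories are handled automatically and everything else goes to a review queue. The 0.85 threshold and the label names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Triage:
    """Auto-approves confident, low-risk results and escalates the rest to a human queue."""

    min_confidence: float = 0.85
    sensitive_labels: frozenset = frozenset({"refund", "legal"})
    review_queue: list = field(default_factory=list)

    def route(self, item_id: str, label: str, confidence: float) -> str:
        if confidence >= self.min_confidence and label not in self.sensitive_labels:
            return "auto"
        # Low confidence or a sensitive category: a person makes the final call.
        self.review_queue.append((item_id, label, confidence))
        return "human"
```

Sensitive categories are escalated even at high confidence, which is how such workflows preserve accountability for decisions with real consequences.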

Platforms like Prompts.ai highlight the industry’s shift toward unified AI orchestration. By providing access to multiple leading models in a single system, these platforms simplify complex integrations and compliance challenges. This consolidation allows teams to shift their focus from managing infrastructure to driving innovation.

Real-time optimization has become a standard feature, enabling AI systems to automatically adjust model selection, refine prompts, and allocate resources based on performance metrics and cost considerations. These adaptive workflows respond to usage patterns, helping organizations reduce overhead and manage expenses more effectively. As optimization tools grow more advanced, budget management tools are evolving alongside them.

FinOps tooling is likely to become even more precise. Building on today's token-level tracking and automated spending alerts, capabilities such as predictive cost modeling will give organizations deeper insight into their AI expenses and enable smarter allocation of resources.

As these platforms continue to advance, they’ll go beyond simply connecting tools. They’ll adapt dynamically to new business needs, creating systems that scale effortlessly while maintaining control over costs, security, and compliance. Organizations that embrace these comprehensive orchestration platforms will be well-positioned to expand their AI initiatives effectively.

The future of AI workflows lies in systems that seamlessly integrate multiple AI capabilities, human expertise, and business logic to deliver measurable results.

Prompts.ai acts as a central hub for AI workflows, bringing together various tools and models into one seamless system. By consolidating these resources, it eliminates the hassle of juggling multiple platforms, cutting down on inefficiencies and saving valuable time.

With its ability to automate repetitive tasks, optimize resource usage, and simplify processes, Prompts.ai allows developers to concentrate on creating and refining AI solutions. This approach not only accelerates workflows but also ensures dependable and scalable AI deployments without the confusion of managing scattered tools.

Open-source frameworks such as TensorFlow and PyTorch bring valuable advantages to AI development. They empower developers to experiment and customize their projects with ease while benefiting from a robust and active community for support. PyTorch stands out for its user-friendly approach and dynamic computation graphs, making it a popular choice for research and smaller projects. On the other hand, TensorFlow shines in large-scale production settings, thanks to its scalability and strong performance.

These frameworks give developers more control over their workflows than centralized platforms do, and because their development is community-driven, new features and fixes arrive quickly. Their versatility makes them suitable for everything from initial prototypes to large-scale deployment of AI models.

FinOps helps developers keep AI project costs under control through better cost transparency and smarter resource allocation, for example by running workloads efficiently on orchestration systems like Kubernetes. This approach keeps spending aligned with project objectives and helps developers make well-informed decisions.

To stretch budgets effectively, developers can use strategies like real-time cost monitoring, refining workloads to eliminate inefficiencies, and applying FinOps principles at every stage of the AI development process. These methods not only help manage expenses but also support scalability without compromising performance.