AI orchestration tools must balance performance with security, ensuring compliance, data protection, and governance without sacrificing usability. Here's how four leading platforms compare:

Quick Comparison Table:

| Platform | Access Control | Data Protection | Compliance | Best For |
|---|---|---|---|---|
| Prompts.ai | Role-based, audit trails | Enterprise-grade encryption | Strong governance | Cost-conscious enterprises |
| Amazon SageMaker | AWS IAM integration | VPC isolation, encryption | HIPAA, ISO 27001 | AWS-centric organizations |
| Azure ML | Azure RBAC, Entra ID | Customer-managed keys | ISO 27001, HIPAA | Microsoft ecosystem users |
| Kubeflow | Kubernetes RBAC | Container-level security | Configurable | Advanced Kubernetes teams |

Each platform has strengths tailored to specific needs. Prompts.ai shines for enterprises needing cost control and governance. SageMaker and Azure ML are ideal for AWS or Microsoft users, while Kubeflow offers unmatched flexibility for Kubernetes experts. Choose based on your team’s expertise and security priorities.

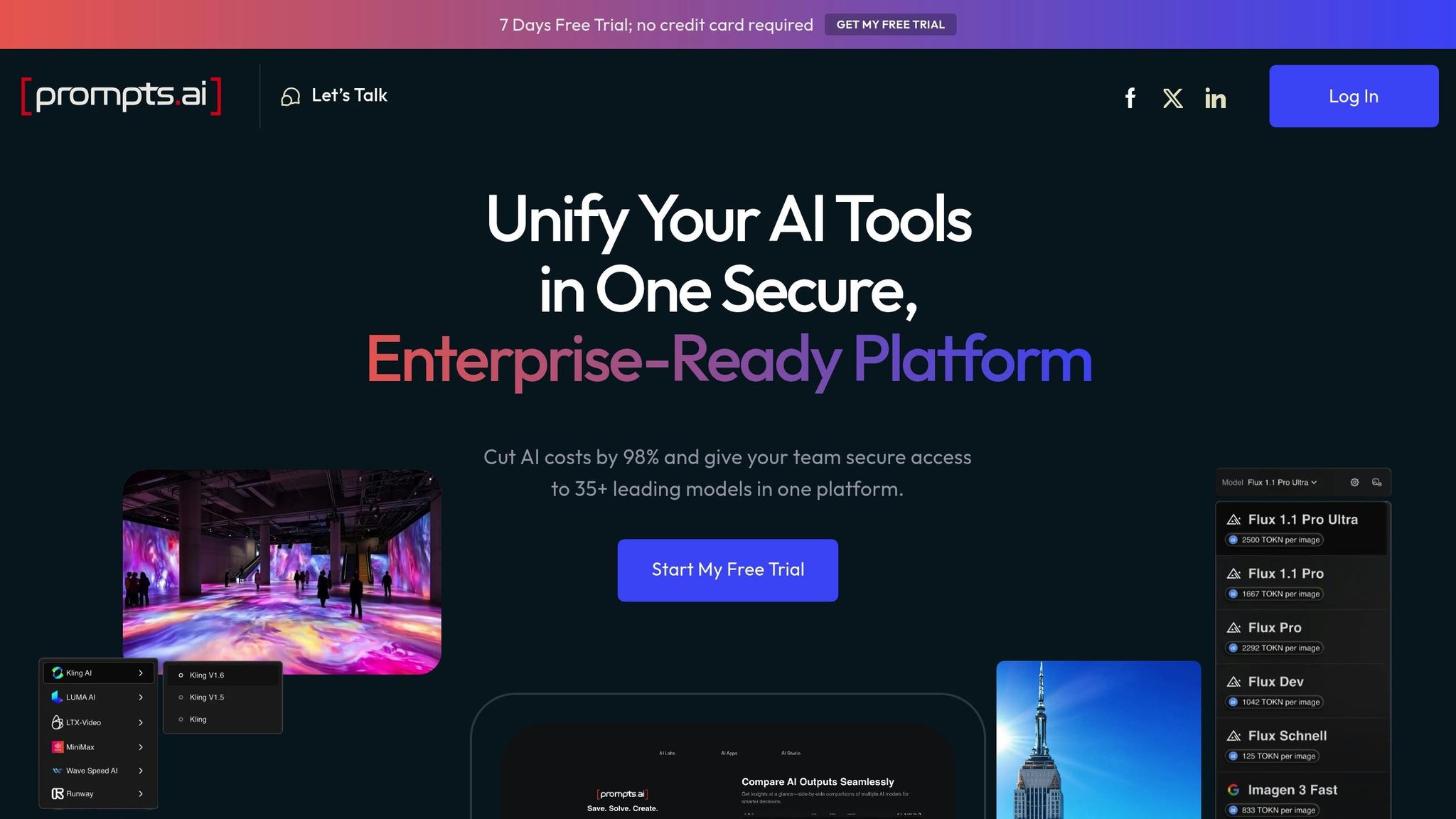

Prompts.ai is a powerful enterprise platform that brings together 35 large language models within a single, secure interface. By centralizing tools like GPT-4, Claude, LLaMA, and Gemini, it eliminates the chaos of managing multiple AI tools, offering a streamlined solution for enterprises.

With its integrated FinOps capabilities, the platform provides full visibility into interactions and expenses, helping businesses manage costs effectively. At the same time, it ensures strict governance and secure workflows, making it a reliable choice for enterprise AI orchestration.

Up next, we’ll explore Amazon SageMaker’s approach to security.

Amazon SageMaker is certified under several international security standards, including ISO/IEC 27001:2022, 27017:2015, 27018:2019, 27701:2019, 22301:2019, 20000-1:2018, and 9001:2015. These certifications reflect its commitment to maintaining stringent security protocols, providing a reliable and secure environment for AI workflows. This focus ensures that enterprises can meet both high-performance demands and regulatory requirements - key considerations in adopting AI at scale.

These certifications provide a foundation for evaluating other security frameworks. Up next, we’ll see how Azure Machine Learning incorporates similar principles into its approach.

Azure Machine Learning takes advantage of Microsoft's advanced identity and access management systems, integrating tools like Azure RBAC and Microsoft Entra ID to provide secure access for users ranging from individuals to large enterprises.

The platform employs a role-based access control (RBAC) system to manage permissions with precision. By integrating with Microsoft Entra ID as its primary identity provider, Azure Machine Learning ensures secure authentication and authorization processes.

| Role | Access Level |
|---|---|
| AzureML Data Scientist | Full access to workspace actions except creating/deleting compute resources and modifying the workspace itself. |
| AzureML Compute Operator | Manage compute resources within a workspace, including creation, deletion, and access. |
| Reader | View-only permissions, including access to assets and datastore credentials. |
| Contributor | Permissions to view, create, edit, or delete assets; attach compute clusters; submit runs; and deploy web services. |
| Owner | Complete control over the workspace, including asset management and role assignments. |
| AzureML Registry User | Permissions to read, write, and delete registry assets, excluding the creation or deletion of registry resources themselves. |

These clearly defined roles lay the groundwork for effective governance and security across the platform.

For organizations with unique needs, Azure Machine Learning allows the creation of custom roles. These roles can be tailored to highly specific requirements using JSON definitions, enabling precise control over permissions and restrictions. Custom roles can also be scoped to individual workspaces, offering flexibility for different team setups.
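As a rough illustration of what such a JSON definition can look like, the sketch below assembles a custom role scoped to a single workspace and prints it. The role name, the placeholder identifiers, and the exact action strings are assumptions chosen for illustration and should be checked against current Azure documentation before use.

```python
import json

# Hypothetical placeholders; substitute real identifiers before use.
SUBSCRIPTION_ID = "<subscription-id>"
RESOURCE_GROUP = "<resource-group>"
WORKSPACE_NAME = "<workspace-name>"


def build_custom_role(name: str, description: str,
                      actions: list, not_actions: list) -> dict:
    """Return a custom role definition scoped to one workspace."""
    scope = (
        f"/subscriptions/{SUBSCRIPTION_ID}"
        f"/resourceGroups/{RESOURCE_GROUP}"
        "/providers/Microsoft.MachineLearningServices"
        f"/workspaces/{WORKSPACE_NAME}"
    )
    return {
        "Name": name,
        "IsCustom": True,
        "Description": description,
        "Actions": actions,          # operations the role grants
        "NotActions": not_actions,   # operations subtracted from Actions
        "AssignableScopes": [scope],  # workspace-level scope
    }


# Illustrative role: broad access, minus compute and workspace changes.
role = build_custom_role(
    name="Data Scientist Custom",
    description="Can run experiments but cannot create or delete compute.",
    actions=["*"],
    not_actions=[
        "Microsoft.MachineLearningServices/workspaces/*/delete",
        "Microsoft.MachineLearningServices/workspaces/write",
        "Microsoft.MachineLearningServices/workspaces/computes/*/write",
        "Microsoft.MachineLearningServices/workspaces/computes/*/delete",
        "Microsoft.Authorization/*/write",
    ],
)

print(json.dumps(role, indent=4))
```

The printed JSON could be saved to a file and registered with `az role definition create --role-definition <file>`. Keeping `AssignableScopes` at the workspace level is what limits the role to a single team's workspace.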

Microsoft Entra security groups further streamline governance by enabling team-based access management. Team leaders can manage permissions as group owners without requiring direct Owner-level access to the workspace, simplifying the process of granting and revoking permissions.

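The platform also supports managed identities to enhance secure interactions between services. These identities come in two forms:

- System-assigned: created together with a single Azure resource and tied to that resource's lifecycle.
- User-assigned: created as a standalone resource that can be attached to one or more Azure resources.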

These identities are granted specific Azure RBAC permissions, such as Contributor access to workspaces and resource groups or Storage Blob Data Contributor access to storage. They also facilitate secure access to sensitive information like keys, secrets, and certificates stored in Key Vault.

Azure Machine Learning further allows compute clusters to operate with independent managed identities. This ensures that clusters can access secured datastores even when individual users lack direct permissions, maintaining security without compromising functionality.

To support automated workflows, the platform includes specialized roles like "MLOps Custom." This role is tailored for service principals managing MLOps pipelines, allowing them to read pipeline endpoints and submit experiment runs while restricting actions like creating compute resources or altering authorization settings. This ensures automated processes remain secure and within defined boundaries.
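To make the idea of "allowed reads and submissions, blocked compute and authorization changes" concrete, here is a minimal sketch of how a role's `Actions` and `NotActions` lists combine. It is a simplification under stated assumptions: the action strings are illustrative, the wildcard matching uses Python's `fnmatch`, and real Azure evaluation also considers deny assignments and multiple role assignments.

```python
from fnmatch import fnmatchcase

# Illustrative approximation of an MLOps-style role; not an official definition.
MLOPS_ROLE = {
    "Actions": [
        "Microsoft.MachineLearningServices/workspaces/read",
        "Microsoft.MachineLearningServices/workspaces/endpoints/pipelines/read",
        "Microsoft.MachineLearningServices/workspaces/experiments/runs/write",
    ],
    "NotActions": [
        "Microsoft.MachineLearningServices/workspaces/computes/write",
        "Microsoft.Authorization/*",
    ],
}


def is_allowed(role: dict, operation: str) -> bool:
    """Allowed if the operation matches Actions and does not match NotActions."""
    op = operation.lower()

    def matches(patterns):
        # Case-insensitive wildcard match; '*' matches any run of characters.
        return any(fnmatchcase(op, p.lower()) for p in patterns)

    return matches(role["Actions"]) and not matches(role["NotActions"])


# A broadened variant shows that NotActions still subtracts from a wildcard.
BROADENED_ROLE = {**MLOPS_ROLE, "Actions": ["*"]}

checks = [
    "Microsoft.MachineLearningServices/workspaces/endpoints/pipelines/read",
    "Microsoft.MachineLearningServices/workspaces/computes/write",
    "Microsoft.Authorization/roleAssignments/write",
]
for op in checks:
    print(op, is_allowed(MLOPS_ROLE, op), is_allowed(BROADENED_ROLE, op))
```

With an explicit `Actions` list, the `NotActions` entries act mainly as a guard: if someone later widens `Actions`, compute creation and authorization changes stay excluded.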

Kubeflow builds on Kubernetes' powerful security framework, making it a strong choice for managing containerized AI workflows. By integrating Kubernetes' native security features with tools tailored for AI and machine learning, Kubeflow offers a secure and adaptable environment for complex workflows.

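Kubeflow uses Kubernetes' Role-Based Access Control (RBAC) system to manage permissions through four key components:

- Role: a set of permissions that applies within a single namespace.
- ClusterRole: a set of permissions that applies cluster-wide or to cluster-scoped resources.
- RoleBinding: grants the permissions of a Role or ClusterRole to subjects within a namespace.
- ClusterRoleBinding: grants the permissions of a ClusterRole to subjects across the whole cluster.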

This setup allows for precise control by specifying verbs (such as get, list, create, update, or delete) for particular API groups and resources, such as pods or deployments. Permissions can even be limited to specific named resource instances, ensuring users only access what they need for their tasks.
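A minimal sketch of such a permission set, built as a Kubernetes `Role` manifest in Python and printed as JSON (which `kubectl apply -f` accepts alongside YAML). The namespace `team-a`, the role name, and the ConfigMap name `training-config` are hypothetical and chosen only for illustration.

```python
import json

RBAC_API_VERSION = "rbac.authorization.k8s.io/v1"


def make_role(namespace: str, name: str, rules: list) -> dict:
    """Return a namespaced Role manifest containing the given rules."""
    return {
        "apiVersion": RBAC_API_VERSION,
        "kind": "Role",
        "metadata": {"namespace": namespace, "name": name},
        "rules": rules,
    }


# Read-only access to every pod in the namespace ("" is the core API group).
pod_reader_rule = {
    "apiGroups": [""],
    "resources": ["pods"],
    "verbs": ["get", "list", "watch"],
}

# Access limited to one named resource instance via resourceNames.
single_config_rule = {
    "apiGroups": [""],
    "resources": ["configmaps"],
    "resourceNames": ["training-config"],  # hypothetical ConfigMap name
    "verbs": ["get", "update"],
}

role = make_role("team-a", "pipeline-viewer", [pod_reader_rule, single_config_rule])
print(json.dumps(role, indent=2))
```

The second rule uses only `get` and `update` because restricting by `resourceNames` does not work for `create`: the object's name may not be known when the request is authorized.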

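Kubeflow supports three types of subjects for assigning roles:

- Users: individual human identities authenticated to the cluster.
- Groups: named collections of users that share the same permissions.
- Service accounts: identities used by pods and other in-cluster processes.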

Kubeflow emphasizes the principle of least privilege, ensuring that users and processes only have access to what is absolutely necessary, reducing potential risks.
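In practice, least privilege usually means binding one narrowly scoped Role to one subject in one namespace. The sketch below builds such a `RoleBinding` manifest. The namespace, binding name, role name, and service account name are all hypothetical.

```python
import json

RBAC_API_GROUP = "rbac.authorization.k8s.io"


def make_role_binding(namespace: str, name: str, role_name: str,
                      subject_kind: str, subject_name: str) -> dict:
    """Bind a namespaced Role to a single User, Group, or ServiceAccount."""
    if subject_kind not in {"User", "Group", "ServiceAccount"}:
        raise ValueError(f"unsupported subject kind: {subject_kind}")

    subject = {"kind": subject_kind, "name": subject_name}
    if subject_kind == "ServiceAccount":
        # Service accounts are namespaced objects in the core API group.
        subject["namespace"] = namespace
    else:
        subject["apiGroup"] = RBAC_API_GROUP

    return {
        "apiVersion": f"{RBAC_API_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"namespace": namespace, "name": name},
        "subjects": [subject],
        "roleRef": {
            "apiGroup": RBAC_API_GROUP,
            "kind": "Role",
            "name": role_name,
        },
    }


binding = make_role_binding(
    namespace="team-a",
    name="pipeline-viewer-binding",
    role_name="pipeline-viewer",
    subject_kind="ServiceAccount",
    subject_name="pipeline-runner",
)
print(json.dumps(binding, indent=2))
```

Using a `RoleBinding` rather than a `ClusterRoleBinding` keeps the grant confined to the one namespace, even if the subject exists elsewhere in the cluster.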

The platform also benefits from Kubernetes' automatic maintenance of security policies. During startup, the Kubernetes API server updates default cluster roles and bindings, repairing any accidental changes to ensure security settings remain intact. Organizations that prefer manual control can disable this feature.
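Kubernetes exposes this opt-out through an annotation on the default cluster role or binding. A small sketch, assuming the manifest has already been loaded into a Python dictionary (the sample manifest below is abbreviated and illustrative):

```python
import copy

# Annotation that controls reconciliation of default RBAC objects at startup.
AUTOUPDATE_ANNOTATION = "rbac.authorization.kubernetes.io/autoupdate"


def opt_out_of_reconciliation(manifest: dict) -> dict:
    """Return a copy of the manifest with auto-reconciliation disabled."""
    updated = copy.deepcopy(manifest)  # leave the caller's object untouched
    metadata = updated.setdefault("metadata", {})
    annotations = metadata.setdefault("annotations", {})
    annotations[AUTOUPDATE_ANNOTATION] = "false"
    return updated


# Abbreviated, illustrative manifest for a default ClusterRole.
default_role = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "ClusterRole",
    "metadata": {"name": "system:basic-user"},
}

pinned = opt_out_of_reconciliation(default_role)
print(pinned["metadata"]["annotations"])
```

Opting out should be a deliberate choice: once reconciliation is disabled, missing default permissions are no longer restored automatically.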

Default RBAC policies in Kubeflow are designed to grant essential permissions to system components, while service accounts outside the kube-system namespace start with no permissions. This approach ensures deliberate and secure permission management.

A report by Red Hat in 2024 revealed that 46% of organizations experienced losses due to Kubernetes security incidents. In one notable case in April 2023, Aqua Security researchers uncovered attacks on exposed Kubernetes clusters with misconfigured RBAC settings. Attackers exploited API servers that permitted unauthenticated requests from anonymous users with elevated privileges.

To mitigate such risks, organizations using Kubeflow should actively monitor RBAC configurations and regularly audit permissions. Leveraging Kubernetes logging and monitoring tools can help track access attempts and changes to permissions, enabling quick detection and response to potential threats.
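One simple audit of this kind is to scan cluster role bindings for grants to anonymous or unauthenticated subjects. The sketch below works on manifests already loaded as dictionaries, for example the `items` list from `kubectl get clusterrolebindings -o json`. The sample data and the small allowlist of low-privilege default roles are assumptions for illustration, not a complete policy.

```python
# Built-in identities that represent requests without valid credentials.
RISKY_SUBJECTS = {"system:anonymous", "system:unauthenticated"}

# Assumed allowlist: default roles that are intentionally public.
ALLOWED_PUBLIC_ROLES = {"system:public-info-viewer"}


def find_risky_bindings(bindings: list) -> list:
    """Return (binding name, subject, role) for grants to anonymous subjects."""
    findings = []
    for binding in bindings:
        role_name = binding.get("roleRef", {}).get("name", "")
        if role_name in ALLOWED_PUBLIC_ROLES:
            continue
        # 'subjects' may be missing or null on an empty binding.
        for subject in binding.get("subjects") or []:
            if subject.get("name") in RISKY_SUBJECTS:
                name = binding.get("metadata", {}).get("name", "")
                findings.append((name, subject["name"], role_name))
    return findings


# Illustrative sample data, not output from a real cluster.
sample_bindings = [
    {
        "metadata": {"name": "anon-admin"},
        "roleRef": {"kind": "ClusterRole", "name": "cluster-admin"},
        "subjects": [{"kind": "User", "name": "system:anonymous"}],
    },
    {
        "metadata": {"name": "team-a-view"},
        "roleRef": {"kind": "ClusterRole", "name": "view"},
        "subjects": [{"kind": "Group", "name": "team-a"}],
    },
    {
        "metadata": {"name": "system:public-info-viewer"},
        "roleRef": {"kind": "ClusterRole", "name": "system:public-info-viewer"},
        "subjects": [{"kind": "Group", "name": "system:unauthenticated"}],
    },
]

for finding in find_risky_bindings(sample_bindings):
    print("review binding:", finding)
```

A check like this could run on a schedule or in CI, with any finding treated as a prompt for manual review rather than an automatic verdict.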

In distributed Kubeflow deployments, securing network communications between services is equally critical. AI workflows often involve multiple interconnected components, and maintaining secure communication channels is essential to preserve system integrity.

The summary below highlights the key security distinctions among the four platforms so that organizations can identify the best match for their requirements. Each platform has its own strengths, and the comparison table that follows gives a quick overview of their core attributes.

Prompts.ai stands out with its enterprise-grade governance, streamlined AI orchestration, detailed audit trails, and real-time FinOps capabilities. These features ensure both robust data protection and cost transparency.

Amazon SageMaker leverages AWS's well-established security framework, featuring strong encryption, seamless integration with AWS Identity and Access Management (IAM), and multiple compliance certifications. It’s an excellent option for organizations already using AWS, though it may require advanced AWS expertise to maximize its potential.

Azure Machine Learning excels in hybrid and multi-cloud environments, integrating with Microsoft enterprise tools such as Microsoft Entra ID (formerly Azure Active Directory). Its security framework is built to complement Microsoft's ecosystem, offering a reliable option for businesses already invested in those tools.

Kubeflow provides unmatched flexibility with its open-source, Kubernetes-based architecture. Its Kubernetes Role-Based Access Control (RBAC) allows for highly granular security management but demands significant Kubernetes expertise to operate effectively.

| Platform | Access Control | Data Protection | Compliance | Flexibility | Manageability |
|---|---|---|---|---|---|
| Prompts.ai | Role-based with audit trails | Enterprise-grade encryption | Robust | Cloud-native | High – unified interface |
| Amazon SageMaker | AWS IAM integration | VPC isolation, encryption | HIPAA, PCI DSS, SOC | AWS-focused | Medium – AWS expertise needed |
| Azure Machine Learning | Azure RBAC, Microsoft Entra ID | Customer-managed keys | ISO 27001, HIPAA | Hybrid cloud support | Medium – Microsoft stack |
| Kubeflow | Kubernetes RBAC | Container-level security | Configurable | Maximum flexibility | Low – requires Kubernetes expertise |

This breakdown illustrates how each platform aligns with various operational and security priorities. For example, Prompts.ai not only offers robust security but also integrates FinOps tools that enhance cost visibility and operational efficiency - an added advantage for organizations prioritizing both security and financial oversight.

Ultimately, the ideal platform depends on balancing security requirements, operational complexity, and the expertise available within your team.

When selecting an AI orchestration platform, it’s crucial to align your choice with your security needs, infrastructure setup, and level of technical expertise. Each platform caters to distinct enterprise requirements, so understanding their strengths will help guide your decision.

For enterprises in the US prioritizing security and cost management, Prompts.ai stands out. It offers strong protection and full cost transparency, combining enterprise-grade governance with real-time FinOps tools. This gives you a clear view of AI spending while maintaining stringent security measures. Its unified interface simplifies operations, minimizing the risks associated with managing multiple tools and reducing potential vulnerabilities caused by tool sprawl.

For organizations deeply integrated with AWS, Amazon SageMaker is a natural fit. Its features, like VPC isolation and seamless IAM integration, make it an excellent choice for companies already utilizing AWS infrastructure. However, to fully leverage its security features, a solid understanding of AWS tools is essential, which can add to operational overhead.

Similarly, Azure Machine Learning is ideal for companies that rely on Microsoft’s ecosystem. Its integration with Microsoft Entra ID (formerly Azure Active Directory) and its hybrid cloud capabilities provide flexibility for businesses transitioning between on-premises and cloud setups, all while maintaining consistent security policies.

For maximum customization, Kubeflow offers unmatched control thanks to its open-source architecture. Organizations with advanced Kubernetes expertise can create highly tailored security configurations. However, this level of flexibility comes with added complexity and the need for specialized technical skills.

US enterprises should also keep in mind the shared responsibility model when implementing security measures. A layered approach, addressing both the AI platform and application levels, is essential to guard against risks such as prompt injection and toxic content generation.

Ultimately, the right choice depends on balancing your security priorities with operational complexity and your team’s technical capabilities. For those seeking a balance between security and cost efficiency, Prompts.ai’s integrated approach is a strong contender. On the other hand, businesses with specific ecosystem dependencies may find the tailored benefits of SageMaker, Azure ML, or Kubeflow more suitable. By considering these insights, you can confidently select a platform that aligns with your organization’s unique needs.

When assessing AI model orchestration platforms, make sure data protection is a top priority. Key features to look for include encryption for both data at rest and in transit, along with strong user access controls to block unauthorized access. Platforms that incorporate real-time threat detection and mitigation can help identify and address vulnerabilities before they become serious issues.

It's equally important to confirm that the platform adheres to relevant industry standards and regulations, such as GDPR or HIPAA, if those apply to your operations. Additional safeguards like secure data handling, vulnerability management, and audit logging can significantly strengthen the security of your AI workflows, minimizing the risk of data breaches and other security challenges.

Prompts.ai empowers enterprises to keep a close eye on their expenses without compromising on security. With features like real-time cost tracking, dynamic routing, and integrated FinOps tools, organizations can effortlessly monitor and fine-tune their spending.

On the security front, Prompts.ai offers secure API access, role-based permissions, and detailed audit trails. These tools work hand-in-hand to protect sensitive data, ensure compliance, and keep your AI workflows both secure and financially transparent.

Effectively managing the security features of AI platforms like Kubeflow or Amazon SageMaker calls for a balanced mix of technical know-how and hands-on experience. Key areas of expertise include a deep understanding of data encryption techniques, user access management, and network security protocols. Equally important is being well-versed in compliance standards such as GDPR, HIPAA, and SOC 2, ensuring that workflows align with regulatory requirements.

Practical experience with cloud security tools, container orchestration, and AI/ML workflows can empower administrators to design and maintain security measures tailored to their organization's specific needs. Given the ever-changing landscape of security threats and best practices in the AI field, a commitment to continuous learning is essential to stay ahead.