Cut through the complexity of machine learning workflows with the right orchestration tools. Managing ML pipelines is hard: tool sprawl, governance gaps, and unclear costs often derail projects. This article reviews 10 platforms that simplify ML operations, covering interoperability, compliance, cost control, and scalability.

| Tool | Best For | Key Strengths | Limitations |
|---|---|---|---|
| Prompts.ai | AI orchestration, cost reduction | Unified LLM access, TOKN credits | N/A |
| Airflow | Python workflows, data pipelines | Mature ecosystem, plugins | Steep learning curve |
| Prefect | Ease of use, hybrid execution | Dynamic scaling, retries | Fewer integrations |
| Dagster | Data lineage, reproducibility | Asset tracking, type validation | Limited enterprise adoption |
| Flyte | Kubernetes-based workflows | Versioning, multi-tenancy | Kubernetes dependency |
| MLRun | End-to-end ML lifecycle | Auto-scaling, feature store | Complex setup |
| Metaflow | Data science workflows | AWS integration, scalability | AWS-centric |
| Kedro | Organized pipelines | Project structure, visualization | Limited orchestration features |
| ZenML | MLOps, modular pipelines | Experiment tracking, integrations | Smaller community |
| Argo | Kubernetes-native orchestration | Container isolation, scalability | Requires Kubernetes expertise |

Whether you're scaling AI, improving governance, or cutting costs, these tools can help you manage workflows efficiently. Choose based on your team's expertise, infrastructure, and goals.

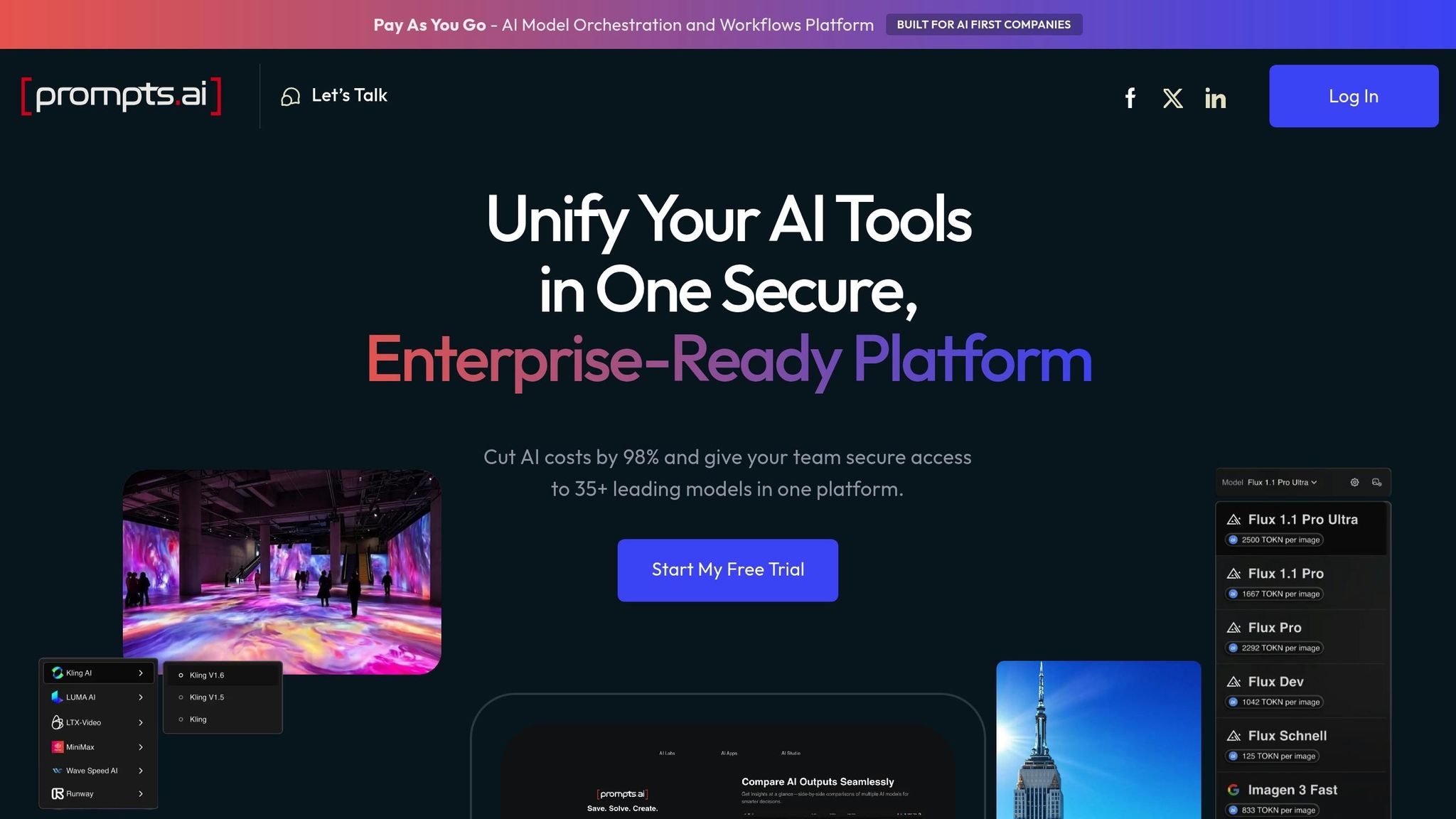

Prompts.ai is an enterprise-grade platform designed to streamline and simplify machine learning (ML) workflow management. Instead of juggling multiple AI tools, teams can access over 35 leading language models - including GPT-5, Claude, LLaMA, and Gemini - through a single, secure interface.

Prompts.ai tackles tool sprawl by bringing the major language models together in one place. This reduces both the technical complexity of managing scattered AI services and the technical debt that builds up when an organization relies on many disconnected tools.

Beyond model access, Prompts.ai integrates with popular business tools such as Slack, Gmail, and Trello, so teams can automate workflows without overhauling their existing systems. The platform emphasizes "interoperable workflows" as a key feature, letting operations run smoothly across the tools and technologies an organization already uses.

Prompts.ai addresses compliance challenges head-on by providing robust governance controls and full auditability for all AI interactions. Its security framework incorporates best practices from SOC 2 Type 2, HIPAA, and GDPR, ensuring sensitive data remains protected throughout the ML lifecycle.

In June 2025, the platform initiated its SOC 2 Type 2 audit process, underscoring its commitment to stringent security and compliance standards. By partnering with Vanta, Prompts.ai offers continuous control monitoring, giving users real-time insights into their security posture via its Trust Center. This level of transparency helps bridge governance gaps that often arise in enterprise AI deployments.

Both business and personal plans include compliance monitoring and governance tools, making it easier for smaller teams to maintain oversight of their AI workflows - even without dedicated compliance personnel.

Prompts.ai uses a TOKN credit system that ties costs directly to usage, replacing the separate recurring subscriptions for each AI tool. The company states that this pay-as-you-go model can cut AI software expenses by up to 98% compared with maintaining individual subscriptions to multiple tools.

The platform also provides detailed cost visibility at the token level, addressing the common challenge of unclear budgets when using multiple AI services across different providers and environments.
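To make token-level cost visibility concrete, here is a minimal sketch of the underlying arithmetic: price each call from its token counts, then roll costs up by team or by model. It is not Prompts.ai's API or billing logic; the model names, per-token prices, and the `Usage` record are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical USD prices per 1,000 tokens; real rates differ by provider and model.
PRICE_PER_1K = {
    "model-a": {"input": 0.005, "output": 0.015},
    "model-b": {"input": 0.001, "output": 0.002},
}


@dataclass
class Usage:
    """One logged model call (hypothetical record format)."""
    team: str
    model: str
    input_tokens: int
    output_tokens: int


def cost(u: Usage) -> float:
    """Price a single call from its input and output token counts."""
    price = PRICE_PER_1K[u.model]
    return (u.input_tokens * price["input"] + u.output_tokens * price["output"]) / 1000


def cost_by(records: list[Usage], key: str) -> dict[str, float]:
    """Sum call costs grouped by any Usage field, e.g. 'team' or 'model'."""
    totals = defaultdict(float)
    for record in records:
        totals[getattr(record, key)] += cost(record)
    return dict(totals)


records = [
    Usage("marketing", "model-a", input_tokens=2_000, output_tokens=1_000),
    Usage("marketing", "model-b", input_tokens=10_000, output_tokens=5_000),
    Usage("support", "model-b", input_tokens=4_000, output_tokens=1_000),
]
print(cost_by(records, "team"))
print(cost_by(records, "model"))
```

Grouping by any field of the record is what turns a single monthly bill into per-team or per-model spend.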

Built for rapid growth, Prompts.ai lets teams add models, users, or workflows in minutes, thanks to its cloud-based architecture. Because there is no Kubernetes cluster to set up or operate, it is easy to deploy, making it suitable for teams ranging from small agencies to Fortune 500 companies.

The platform’s ability to manage multiple models through a single interface ensures that organizations can expand their AI initiatives without needing to rebuild infrastructure or retrain staff on new tools.

Prompts.ai enhances teamwork through collaborative prompt engineering. Teams can share pre-built workflows and "Time Savers" across their organization, reducing redundant efforts and accelerating the implementation of proven AI solutions.

Additionally, the platform offers a Prompt Engineer Certification program, which helps organizations develop internal experts and establish best practices. This collaborative approach turns AI workflow management into a shared effort, leveraging the collective knowledge and expertise of teams and departments.

Apache Airflow is a go-to open-source platform for orchestrating machine learning workflows. Originally developed at Airbnb and now an Apache Software Foundation project, this Python-based tool has become a favorite for managing data pipelines, and its flexibility and broad integrations make it a solid choice for complex ML workflows.

Airflow excels at connecting the various systems that form the backbone of modern data architectures. With a robust set of operators and hooks, it integrates effortlessly with AWS, GCP, Azure, popular databases, message queues, and machine learning frameworks. Its Directed Acyclic Graph (DAG) structure allows workflows to be defined directly in Python, making it easy to incorporate existing Python libraries and scripts into the process.
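The sketch below is not Airflow code; it uses Python's standard-library `graphlib` to show how a DAG of dependencies decides which tasks may run and which can run side by side. The task names are hypothetical. In an Airflow DAG file the same edges would be declared between operators (for example with `>>`), and the scheduler handles the ordering.

```python
from graphlib import TopologicalSorter

# Each key is a task; its value is the set of upstream tasks it depends on.
pipeline = {
    "extract": set(),
    "validate": {"extract"},
    "featurize": {"validate"},
    "train": {"featurize"},
    "evaluate": {"train"},
    "report": {"validate"},
}

ts = TopologicalSorter(pipeline)
ts.prepare()  # raises graphlib.CycleError if the graph contains a cycle
batches = []
while ts.is_active():
    ready = sorted(ts.get_ready())  # tasks whose upstream dependencies are all done
    batches.append(ready)
    ts.done(*ready)  # mark the batch finished so its downstream tasks unlock

print(batches)
```

Tasks in the same batch, such as `featurize` and `report`, have no dependency on each other, which is what lets an orchestrator run them in parallel. The acyclic requirement matters because a cycle leaves no valid order at all.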

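Its XCom ("cross-communication") feature lets tasks pass small pieces of data, such as file paths, metrics, or model URIs, to downstream tasks. Because XComs are stored in Airflow's metadata database by default, large datasets and model artifacts are better kept in external storage, with only a reference passed through XCom.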

Airflow prioritizes governance and security through features like audit logging, which tracks task execution, retries, and workflow changes. Its Role-Based Access Control (RBAC) system restricts workflow modifications to authorized users, providing an added layer of protection. Additionally, Airflow integrates with enterprise authentication systems, including LDAP, OAuth, and SAML. For secure connection and secret management, it supports tools like HashiCorp Vault and AWS Secrets Manager.

As an open-source solution, Airflow eliminates licensing fees, requiring payment only for the infrastructure it runs on. Its design supports dynamic scaling of resources through executors like CeleryExecutor and KubernetesExecutor, allowing teams to allocate resources based on workload demands. For example, GPU instances can be reserved for model training, while less resource-intensive tasks can run on CPU-only instances. This task-level resource allocation ensures efficient use of computing resources.

Airflow's distributed architecture scales horizontally across multiple worker machines. Its pluggable executor system supports dynamic pod creation with KubernetesExecutor and persistent worker pools with CeleryExecutor. This flexibility lets Airflow handle a wide range of batch scheduling needs, from frequent, near-real-time jobs to periodic retraining of machine learning models, although it is not designed for true streaming workloads.

Collaboration is made easier with Airflow's web-based UI, which provides a centralized view of all workflows for real-time monitoring and troubleshooting. Since workflows are defined in code, they can be integrated with version control systems and undergo code reviews. Airflow also supports workflow templating and reusability through its plugin system and custom operators, enabling teams to standardize tasks and share best practices across projects.

Prefect is a Python-native workflow orchestration tool built around a dataflow automation approach. Designed to address the pain points of older workflow tools, it combines a user-friendly design with enterprise-level features suited to ML operations.

Prefect's integration capabilities span the entire ML ecosystem, thanks to its task library and blocks system. It integrates seamlessly with leading cloud platforms like AWS, Google Cloud, and Microsoft Azure through pre-built connectors. Additionally, it works smoothly with tools such as MLflow, Weights & Biases, and Hugging Face.

The platform's universal deployments feature lets workflows run anywhere, from local environments to Kubernetes clusters. With subflows, teams can build intricate ML pipelines by linking smaller, reusable workflow components, which is especially useful for orchestrating data preprocessing, model training, and evaluation across different systems.

Prefect prioritizes security and governance with its hybrid model: Prefect Cloud stores orchestration metadata, while workflows run on your own infrastructure. Sensitive data therefore stays within your environment while you still benefit from centralized monitoring and management.

The platform includes service accounts, API key management, and audit logs to secure and monitor workflow activity. Prefect's work pools isolate workflows by team or project, keeping sensitive operations separate, and single sign-on (SSO) through enterprise identity providers simplifies user management.

Prefect's hybrid architecture can reduce costs because Prefect Cloud hosts the orchestration layer, so teams don't have to run their own always-on scheduler and database. When flows execute on on-demand infrastructure such as Kubernetes jobs or serverless containers, compute is provisioned only while they run.

Work pools and work queues route flow runs to the infrastructure that suits them. For ML workflows, this means runs that need costly GPUs, such as model training, can be sent to a GPU-backed pool, while lighter jobs, such as data validation, run on standard instances. Prefect Cloud's usage-based pricing aligns costs with actual workflow activity, making it a cost-efficient choice.

Prefect scales from single-machine workflows to large distributed deployments using its distributed execution architecture and work pools system.

Its task runner system allows parallel execution of independent workflow components, which is vital for ML tasks like hyperparameter tuning or running multiple experiments simultaneously. Concurrency controls ensure resources are used efficiently while avoiding conflicts, maximizing throughput for demanding workflows.
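Prefect's task runners manage this for you. As a framework-free illustration, the sketch below runs a hypothetical hyperparameter grid with `concurrent.futures`, where `max_workers` acts as a concurrency limit on how many trials run at once. The objective function is a toy stand-in for real training, not a Prefect API.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def train_and_score(lr: float, depth: int) -> float:
    """Hypothetical stand-in for a training run; returns a validation score."""
    # Toy objective that peaks at lr=0.1, depth=6.
    return -((lr - 0.1) ** 2) - 0.01 * (depth - 6) ** 2


grid = list(product([0.01, 0.1, 0.3], [4, 6, 8]))  # 9 independent trials

# max_workers plays the role of a concurrency limit: at most 3 trials run at once.
with ThreadPoolExecutor(max_workers=3) as pool:
    scores = list(pool.map(lambda params: train_and_score(*params), grid))

best_score, best_params = max(zip(scores, grid))
print(best_params, best_score)
```

Because the trials share no state, they can run in any order; the cap only trades wall-clock time against how many workers (or GPUs) are occupied at once.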

Prefect also emphasizes teamwork, offering features that enhance transparency and shared visibility for ML teams. The flow run dashboard provides real-time updates on workflow execution, allowing data scientists and engineers to track progress and identify potential bottlenecks quickly.

The platform's notification system integrates with tools like Slack, Microsoft Teams, and email, keeping teams informed about workflow statuses. Its deployment patterns promote workflows from development to production using infrastructure as code, ensuring consistent deployment practices across the organization. These collaborative tools streamline communication and help teams work more effectively.

Dagster takes a fresh approach to machine learning workflow orchestration by focusing on assets, treating data and ML models as core elements of the process. This perspective is particularly effective for managing complex ML pipelines, where tracking data lineage and dependencies is essential to ensure model quality and reproducibility.

Dagster excels at connecting diverse systems within your ML stack, offering seamless integration across tools and platforms. Its software-defined assets provide a unified view of your workflows, linking data sources, transformation tools, and model deployment platforms. The platform integrates directly with popular ML frameworks such as TensorFlow, PyTorch, and scikit-learn, while also supporting major cloud services like AWS SageMaker, Google Cloud AI Platform, and Azure Machine Learning.

With Dagster's resource system, you can define connections to external systems once and reuse them across multiple workflows. For instance, the same Snowflake warehouse used for data preprocessing can feed your model training pipeline, while model artifacts can sync with tracking tools like MLflow or Weights & Biases. Additionally, Dagster’s type system validates inputs and outputs at every stage, ensuring consistency throughout.

Dagster places a strong emphasis on maintaining control and oversight. Its data lineage tracking provides detailed insights into how ML models are built - from raw data through feature engineering to final artifacts - making it easier to meet regulatory requirements and conduct audits. Changes can be tested in isolated environments before moving to production, reducing risks. Observability features, such as data quality monitoring and alerting, help detect issues like data drift or performance degradation early on.

Dagster's asset materialization strategy cuts compute costs by reprocessing data and retraining models only when upstream dependencies change, which is more efficient than recomputing an entire pipeline on every scheduled run. Backfills let you reprocess only the affected portions of a pipeline, such as specific date partitions, while conditional execution ensures that model training jobs run only when needed.
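Dagster implements this with its own asset metadata and staleness tracking. The stdlib sketch below is a hypothetical, much-simplified model of the rule described above: fingerprint the upstream input and rebuild an asset only when that fingerprint changes. The `Asset` class is illustrative, not Dagster's API.

```python
import hashlib
import json


def fingerprint(data) -> str:
    """Stable hash of upstream data (assumes it is JSON-serializable)."""
    return hashlib.sha256(json.dumps(data, sort_keys=True).encode()).hexdigest()


class Asset:
    """Hypothetical asset that remembers which upstream version it was built from."""

    def __init__(self, name, compute):
        self.name = name
        self.compute = compute
        self.value = None
        self.upstream_version = None  # fingerprint of the inputs used for the last build
        self.builds = 0

    def materialize(self, upstream):
        version = fingerprint(upstream)
        if version == self.upstream_version:
            return self.value  # inputs unchanged: reuse the stored result
        self.value = self.compute(upstream)
        self.upstream_version = version
        self.builds += 1
        return self.value


features = Asset("features", lambda rows: [r * 2 for r in rows])
features.materialize([1, 2, 3])
features.materialize([1, 2, 3])  # skipped: same upstream data
features.materialize([1, 2, 4])  # recomputed: upstream changed
print(features.builds, features.value)
```

Three requests produce only two builds, which is the saving the incremental approach is after; in a real pipeline the skipped build might be an expensive training job.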

Dagster is designed to handle workloads of all sizes, distributing tasks across multiple processes and machines. Its partition-based execution allows you to process large datasets in parallel or train multiple model variants at the same time. For even greater flexibility, Dagster Cloud offers serverless execution, automatically scaling compute resources to meet workflow demands during busy periods and scaling down when idle.

The platform's asset catalog acts as a shared resource, enabling data scientists and ML engineers to discover and reuse datasets and models with ease. Dagster automatically generates documentation from your code, covering everything from data schemas to transformation logic and model metadata. The Dagster web UI (formerly called Dagit) provides real-time insights into pipeline execution, allowing team members to monitor progress, troubleshoot failures, and understand data dependencies without needing to dive into the code. Integrated Slack notifications keep teams informed of pipeline issues, ensuring quick responses when problems arise.

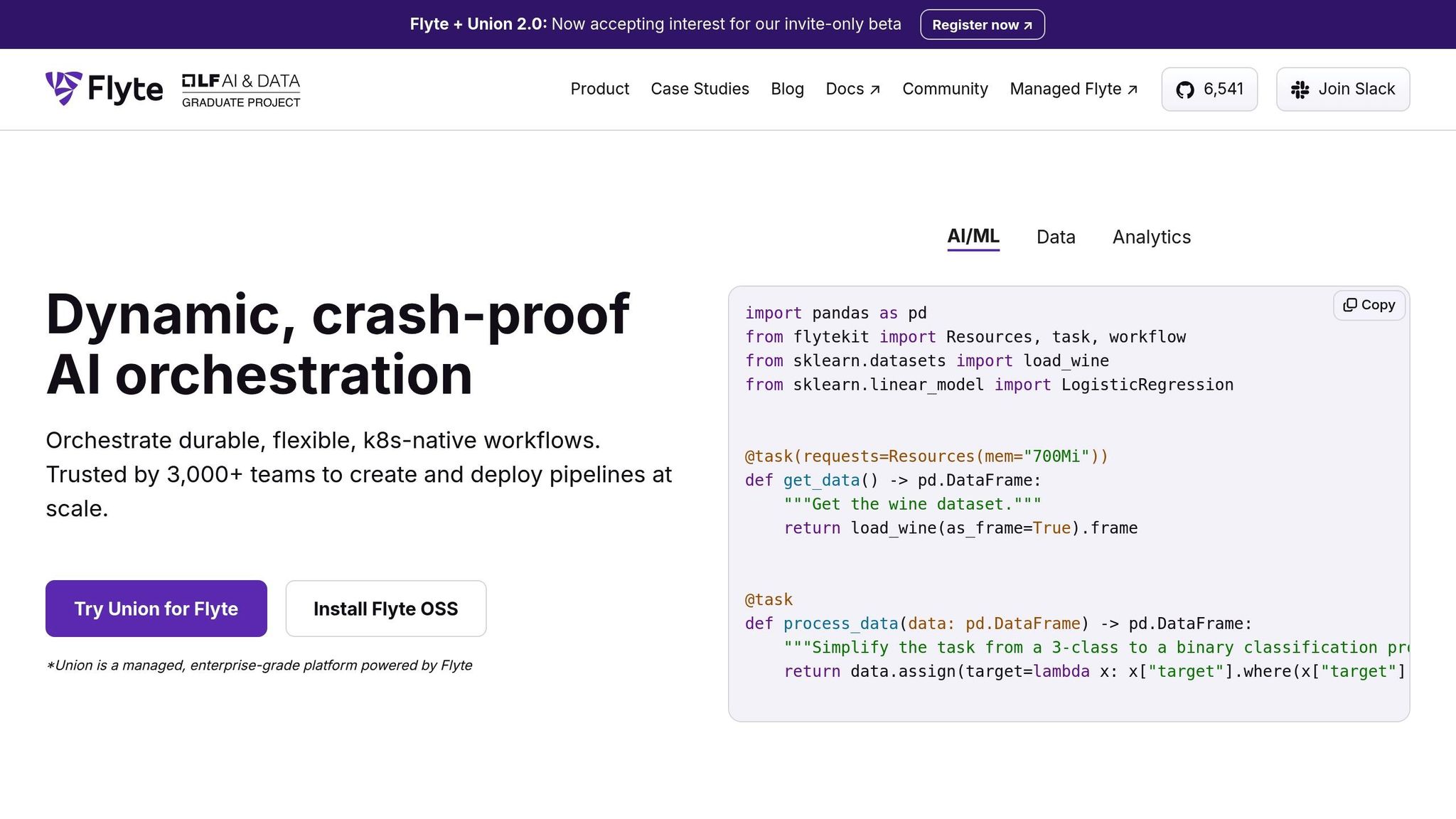

Flyte is a cloud-native platform designed to orchestrate and scale machine learning workflows. Originally developed by Lyft, it stands out for its focus on reproducibility and versioning, achieved through containerization. These capabilities make Flyte an appealing choice for teams aiming to streamline integration, enhance security, and scale workflows efficiently.

Flyte's deep integration with Kubernetes lets it operate seamlessly across AWS, GCP, and Azure. By utilizing managed Kubernetes services like EKS, GKE, and AKS, it avoids vendor lock-in, giving teams flexibility in their cloud infrastructure.

With Flytekit, Flyte's Python SDK, developers build workflows in Python while using popular machine learning libraries, including PyTorch, TensorFlow, XGBoost, and scikit-learn. It also works with data processing frameworks like Spark, Hive, and Presto, simplifying the creation of data pipelines.

The platform’s container-first design ensures each task runs in its own isolated environment. This approach eliminates dependency conflicts and makes it easier to incorporate third-party tools and custom applications.

Flyte delivers strong governance features through detailed audit trails and version control. It tracks every execution with metadata, including input parameters, output artifacts, and logs, which aids in compliance and debugging. Multi-tenancy support helps organizations separate teams and projects while maintaining centralized oversight. Role-based access control further secures sensitive data and models, limiting access to authorized users. Additionally, Flyte integrates with external authentication systems like LDAP and OAuth to meet enterprise security requirements.

Reproducibility is a key feature of Flyte’s design. Immutable task definitions and containerized environments ensure workflows can be replayed exactly, a vital capability for regulatory compliance and validating models.

Flyte optimizes compute costs with resource-aware scheduling, which allocates resources efficiently and supports spot instances. Built-in retries and checkpointing make those interruptible instances practical, because a preempted task can resume instead of starting over, while dynamic scaling keeps idle capacity, and therefore cost, tied to active usage.
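Flyte provides its own intra-task checkpointing and interruptible-task settings; the plain-Python sketch below does not use them. It only simulates why checkpoints make retries on spot instances cheap: a preempted run resumes from the last saved epoch instead of epoch 0. The training loop, checkpoint file format, and preemption are all hypothetical.

```python
import json
import os
import tempfile


def train(total_epochs: int, ckpt_path: str, fail_at=None) -> int:
    """Toy training loop that saves progress after each epoch and resumes from it."""
    start = 0
    if os.path.exists(ckpt_path):
        with open(ckpt_path) as f:
            start = json.load(f)["epoch"]  # first epoch not yet completed
    for epoch in range(start, total_epochs):
        if epoch == fail_at:
            raise RuntimeError(f"instance preempted during epoch {epoch}")
        # ... one epoch of real training would go here ...
        with open(ckpt_path, "w") as f:
            json.dump({"epoch": epoch + 1}, f)  # record the completed epoch
    return total_epochs - start  # epochs actually run in this attempt


ckpt = os.path.join(tempfile.mkdtemp(), "ckpt.json")
try:
    train(10, ckpt, fail_at=7)  # first attempt is interrupted
except RuntimeError as err:
    print("attempt 1 failed:", err)
epochs_run = train(10, ckpt)  # the retry resumes at epoch 7 instead of epoch 0
print("attempt 2 ran", epochs_run, "epochs")
```

Without the checkpoint, the retry would repeat all seven completed epochs, which is exactly the waste that can make discounted spot capacity a false economy.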

Flyte’s Kubernetes foundation enables horizontal scaling, accommodating everything from small experiments to large-scale enterprise pipelines. It automatically handles dependencies and executes independent tasks in parallel to maximize efficiency.

The platform’s map tasks feature is particularly useful for processing large datasets. By parallelizing tasks across multiple workers, it simplifies operations such as hyperparameter tuning, cross-validation, and batch predictions - scenarios where repetitive tasks need to be applied to multiple data subsets.
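A map task applies one function to many inputs in parallel. The sketch below mimics that fan-out locally with a thread pool for 5-fold cross-validation; in Flyte, the fan-out would be handled by its map task construct and spread across workers rather than threads. The dataset and the mean-predictor "model" are hypothetical placeholders.

```python
from concurrent.futures import ThreadPoolExecutor
from statistics import mean


def make_folds(n_items: int, k: int):
    """Split indices 0..n_items-1 into k contiguous folds of near-equal size."""
    sizes = [n_items // k + (1 if i < n_items % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds


data = [x * 0.5 for x in range(20)]  # hypothetical dataset
folds = make_folds(len(data), k=5)


def evaluate_fold(held_out):
    """Toy model: predict the training-fold mean; return mean absolute error on held-out rows."""
    held = set(held_out)
    train = [v for i, v in enumerate(data) if i not in held]
    prediction = mean(train)
    return mean(abs(data[i] - prediction) for i in held_out)


# The same function runs on every fold independently, so folds can execute in parallel.
with ThreadPoolExecutor() as pool:
    fold_errors = list(pool.map(evaluate_fold, folds))

print(len(fold_errors), round(mean(fold_errors), 3))
```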

FlyteConsole serves as a centralized hub for monitoring workflows and diagnosing issues. Its project and domain structure makes it easy to share and reuse components across teams. Additionally, launch plans allow teams to execute parameterized workflows without modifying the underlying code, enhancing flexibility and collaboration.

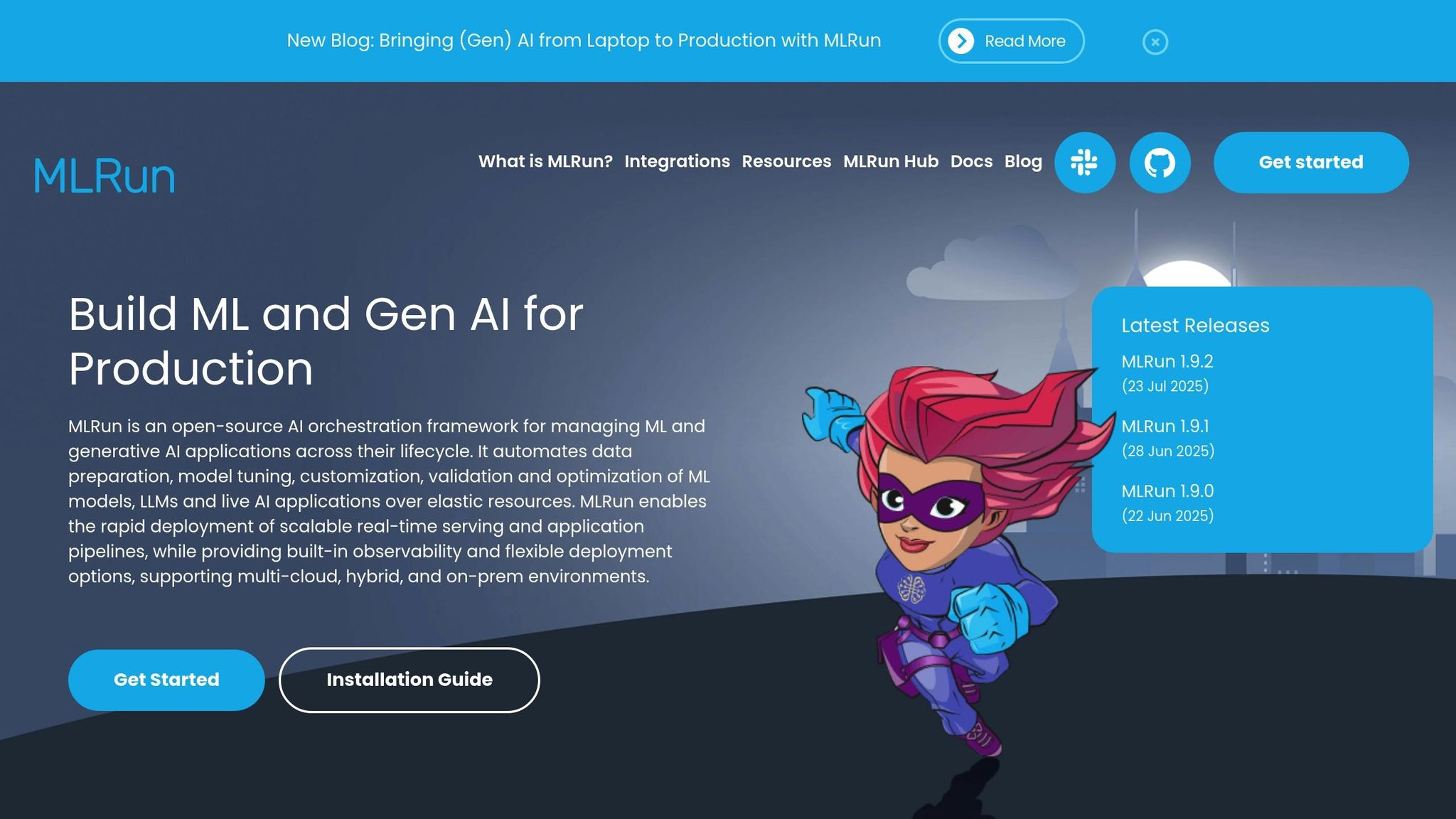

MLRun stands out as an open-source platform tailored for managing machine learning operations at an enterprise level. It simplifies the complexities of deploying and managing ML workflows, making it an excellent choice for teams aiming to implement ML models across various frameworks and infrastructures.

MLRun is compatible with a wide range of ML frameworks, including scikit-learn, XGBoost, LightGBM, TensorFlow/Keras, PyTorch, and ONNX. It also integrates smoothly with popular development environments and platforms like PyCharm, VS Code, Jupyter, Colab, AzureML, and SageMaker. This flexibility ensures teams can work within their preferred tools without disruption.

The platform automatically logs activities, manages models, and supports distributed training, making it a comprehensive solution. As MLRun.org puts it:

"Future-proof your stack with an open architecture that supports all mainstream frameworks, managed ML services and LLMs and integrates with any third-party service."

- MLRun.org

For execution, MLRun supports frameworks such as Nuclio, Spark, Dask, Horovod/MPI, and Kubernetes Jobs, providing teams with the freedom to choose the best tools for their workloads. Additionally, it seamlessly connects to storage solutions like S3, Google Cloud Storage, Azure, and traditional file systems.

When it comes to GPU-accelerated tasks, MLRun employs serverless functions and a unified LLM gateway to enable on-demand scaling and monitoring.

Beyond its technical flexibility, MLRun strengthens governance by automatically logging all ML operations. Its experiment management features record every aspect of model training, deployment, and inference, ensuring reproducibility and accountability. For instance, in May 2025, a major bank used MLRun to create a multi-agent chatbot. This project incorporated real-time monitoring and adhered to regulatory requirements through automated evaluation pipelines and alert systems.

MLRun helps teams control costs with resource-aware scheduling, which allocates resources efficiently and supports spot instances. Built-in retries and checkpointing let interrupted jobs on those cheaper instances recover without redoing completed work, and dynamic scaling keeps spending closely aligned with actual usage, making budget management more predictable.

MLRun’s Kubernetes-native design allows it to scale automatically based on workload demands. This makes it suitable for everything from small prototypes to large-scale production deployments. Its distributed training capabilities enable horizontal scaling, ensuring efficient resource management during model training.

For inference tasks, MLRun uses serverless functions to dynamically allocate GPU resources, optimizing performance while maintaining cost efficiency.

MLRun also supports team collaboration by integrating with CI/CD tools such as Jenkins, GitHub Actions, and GitLab CI/CD to automate testing and deployment, and it can run its pipelines on Kubeflow Pipelines. Real-time dashboards give teams clear insight into model performance and system health, fostering better communication and coordination.

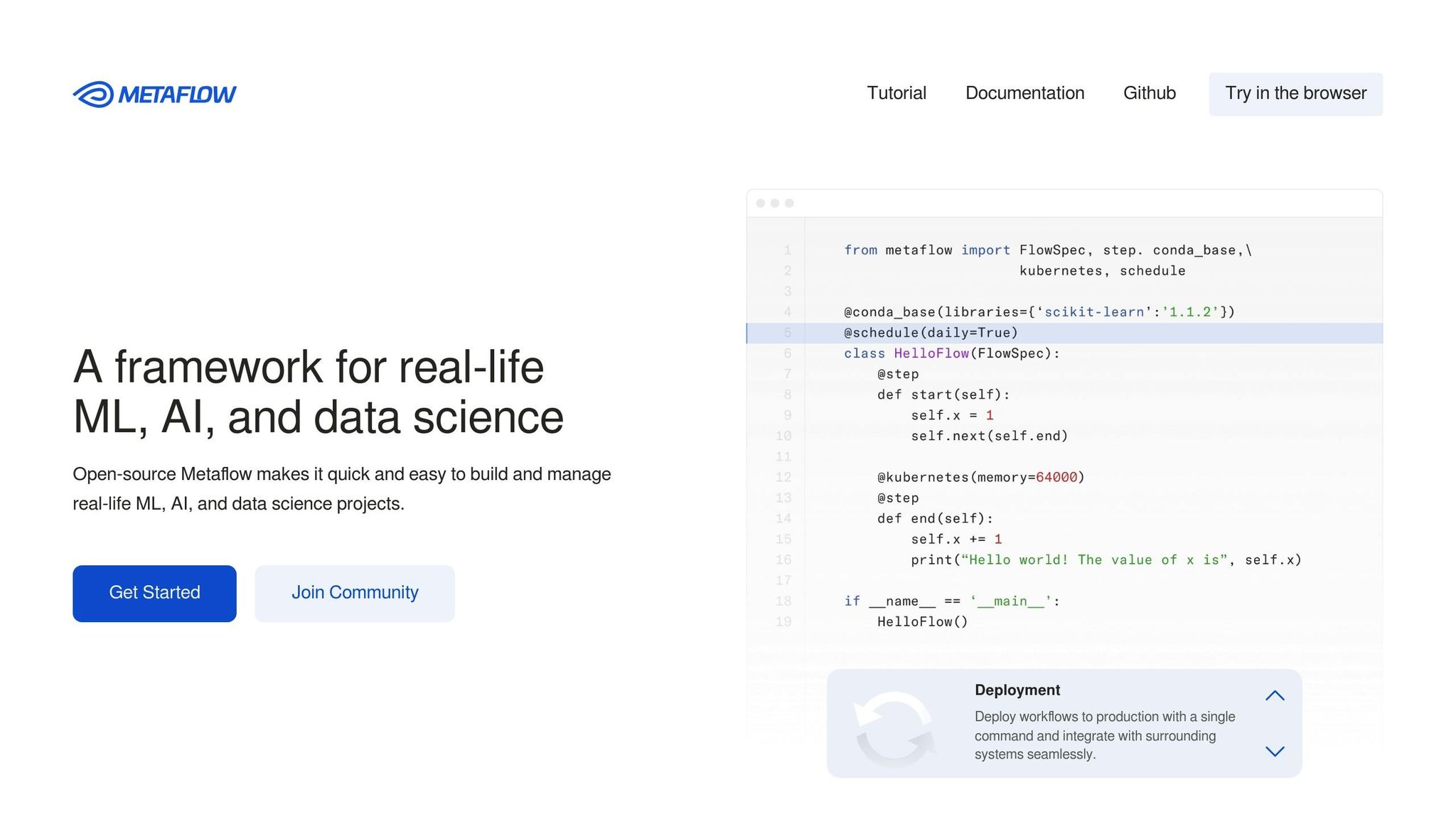

Developed at Netflix to support recommendation systems and A/B testing, Metaflow has evolved into an open-source platform that simplifies machine learning (ML) workflows while ensuring they scale reliably. Below, we explore its standout features, including interoperability, governance, cost management, scalability, and collaboration.

Metaflow tackles common challenges in ML orchestration by integrating closely with the Python ecosystem. It supports widely used ML libraries such as scikit-learn, TensorFlow, PyTorch, and XGBoost without extra configuration. Its native AWS integration further simplifies operations by automating tasks like EC2 instance provisioning, S3 storage management, and distributed computing via AWS Batch.

With decorators like @batch and @resources, data scientists can move workflows from local machines to the cloud with minimal effort, adding orchestration to existing Python code without major rewrites.

Additionally, Metaflow supports containerized environments through Docker, enabling consistent execution across diverse computing setups. This eliminates the common "it works on my machine" problem, making development smoother for teams.

Metaflow automatically assigns a unique identifier to every workflow run, tracking all artifacts, parameters, and code versions. This creates a reliable audit trail that supports regulatory compliance and allows for precise reproduction of experiments.
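Here is a minimal sketch of what such a run record ties together, assuming a simple dict schema rather than Metaflow's actual metadata format. Hashing the function's bytecode is a crude stand-in for real code versioning, and `train` and `tracked_run` are hypothetical names.

```python
import hashlib
import json
import uuid


def train(learning_rate: float, epochs: int) -> dict:
    """Hypothetical training step."""
    return {"weights": [learning_rate * epochs]}


def tracked_run(fn, **params):
    """Run fn(**params) and return a record linking its outputs to a run ID, params, and code."""
    record = {
        "run_id": uuid.uuid4().hex,  # unique per run, even with identical inputs
        # Bytecode hash as a simple stand-in for a code version.
        "code_version": hashlib.sha256(fn.__code__.co_code).hexdigest()[:12],
        "params": params,
    }
    record["artifacts"] = fn(**params)
    return record


run_a = tracked_run(train, learning_rate=0.1, epochs=5)
run_b = tracked_run(train, learning_rate=0.1, epochs=5)
print(json.dumps(run_a, indent=2))
```

Two runs with the same code and parameters get distinct IDs but the same code version, which is what lets an audit distinguish "ran twice" from "ran with different code".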

When deployed on cloud infrastructure, the platform uses role-based access controls integrated with AWS IAM policies to secure resource access. Its data lineage tracking feature documents the entire journey of data through workflows, making it easier to trace issues and comply with governance policies.

The metadata service centralizes workflow data, including runtime statistics, resource usage, and error logs. This comprehensive logging simplifies debugging and provides insights into workflow behavior over time.

Metaflow optimizes cloud spending by intelligently allocating resources, including support for AWS spot instances. Automatic cleanup mechanisms prevent waste by terminating idle instances and clearing temporary storage.

With decorators like @resources(memory=32000, cpu=8), where memory is given in megabytes, teams declare the resources each step needs, which prevents over-provisioning and helps keep workflows within budget. The platform's dashboard provides usage analytics, highlighting resource-heavy workflows and identifying opportunities for cost savings.
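Metaflow's real `@resources` decorator comes from the `metaflow` package and annotates steps of a `FlowSpec`. The stdlib sketch below defines its own hypothetical `resources` decorator purely to show the mechanism: the request is attached to the step as metadata that an orchestrator reads before placing it, rather than being enforced inside the function.

```python
from functools import wraps


def resources(cpu: int = 1, memory: int = 4096, gpu: int = 0):
    """Hypothetical decorator that records a step's resource request (memory in MB)."""
    def decorate(fn):
        @wraps(fn)
        def wrapper(*args, **kwargs):
            return fn(*args, **kwargs)  # the step itself runs unchanged
        wrapper.resource_request = {"cpu": cpu, "memory": memory, "gpu": gpu}
        return wrapper
    return decorate


@resources(memory=32000, cpu=8)
def train():
    return "model"


@resources()
def validate():
    return "ok"


def requests_for(steps):
    """What an orchestrator would read before choosing where to run each step."""
    return {step.__name__: step.resource_request for step in steps}


print(requests_for([validate, train]))
```

Keeping the request as metadata means the same step can run locally, where it is ignored, or remotely, where it sizes the instance.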

Metaflow excels in scaling workflows to handle large datasets and complex models. Using AWS Batch, it distributes tasks across multiple machines, managing job queues, resource provisioning, and failure recovery automatically.

Step-level parallelization enables tasks to run simultaneously, cutting down runtime, while GPU-enabled instances are provisioned as needed for resource-intensive steps. The platform dynamically adjusts resources throughout execution, aligning instance types and quantities with workflow demands to avoid over-provisioning and minimize costs.

Metaflow fosters teamwork with its shared metadata store, which allows team members to discover, inspect, and reuse workflows. Its integration with Jupyter notebooks lets data scientists prototype ideas and transition them seamlessly into production.

The platform's experiment tracking creates a shared knowledge base, enabling teams to compare models, share insights, and build on each other’s work. Version control integration ensures workflow changes are tracked and reviewed through established development processes.

Real-time monitoring offers visibility into active workflows, helping teams coordinate more effectively and pinpoint bottlenecks. Detailed error reporting and retry mechanisms further reduce the time spent troubleshooting, streamlining collaboration and productivity.

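Kedro takes a different angle from the orchestrators above: rather than scheduling workflows itself, it focuses on how pipeline code is structured, prioritizing interoperability and simpler workflows to improve machine learning operations.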

This open-source Python framework is designed to standardize data science code and workflows, making team collaboration more efficient. Its structured approach ensures that projects maintain consistency while offering flexibility for customization.

One of Kedro's key strengths is its emphasis on teamwork. It provides a project template that organizes configurations, code, tests, documentation, and notebooks into a clear structure. This template can be tailored to meet the unique needs of different teams, fostering smoother collaboration.

Kedro-Viz, the framework's interactive pipeline visualization tool, plays a pivotal role in simplifying complex workflows. It offers a clear view of data lineage and execution details, making it easier for both technical teams and business stakeholders to grasp intricate processes. The ability to share visualizations through stateful URLs enables targeted discussions and collaboration.

Beyond its visualization capabilities, Kedro promotes essential software engineering practices like test-driven development, thorough documentation, and code linting. It also features a Visual Studio Code extension that enhances code navigation and autocompletion, streamlining the development process.

Another valuable feature is pipeline slicing, which allows developers to execute specific parts of workflows during development and testing, saving time and resources.
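Slicing falls out naturally once each node declares its inputs and outputs. The sketch below is a hypothetical, stdlib-only version of one kind of slice, a node plus everything downstream of it, similar in spirit to Kedro's `Pipeline.from_nodes`; the `Node` class and dataset names are illustrative, not Kedro's implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    name: str
    inputs: tuple
    outputs: tuple


# Hypothetical pipeline; nodes are listed in execution order.
pipeline = [
    Node("clean", ("raw",), ("clean_data",)),
    Node("features", ("clean_data",), ("features",)),
    Node("train", ("features",), ("model",)),
    Node("evaluate", ("model", "features"), ("metrics",)),
    Node("profile", ("clean_data",), ("profile_report",)),
]


def from_nodes(nodes, start):
    """Return `start` plus every node that (transitively) consumes its outputs."""
    selected = {start}
    produced = set(next(n for n in nodes if n.name == start).outputs)
    changed = True
    while changed:  # keep sweeping until no new downstream node is found
        changed = False
        for n in nodes:
            if n.name not in selected and produced & set(n.inputs):
                selected.add(n.name)
                produced |= set(n.outputs)
                changed = True
    return [n.name for n in nodes if n.name in selected]


print(from_nodes(pipeline, "features"))  # skips "clean" and "profile"
```

After changing feature code, running only this slice reuses the cleaned data instead of recomputing it.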

ZenML simplifies machine learning workflows by offering a framework for building reproducible and scalable pipelines. This open-source tool bridges the gap between experimentation and production, enabling teams to move seamlessly from prototypes to fully operational ML systems.

One of ZenML's standout features is its modular architecture, which breaks down ML pipelines into individual, testable steps. By treating each step as a separate unit, debugging and maintenance become far more straightforward compared to traditional, monolithic workflows.

ZenML connects with a wide variety of ML tools and cloud services. It supports more than 30 integrations, including MLflow, Kubeflow, AWS SageMaker, and Google Cloud AI Platform, giving teams considerable flexibility in how they build and manage workflows.

The framework’s stack-based integration system allows you to tailor technology stacks to specific environments. For example, you might use local tools for development, cloud services for staging, and enterprise solutions for production. This adaptability ensures teams can adopt ZenML at their own pace without disrupting existing processes.

ZenML also brings artifact stores, orchestrators, and model registries together under a single interface, so you can switch from running pipelines locally to deploying them on Kubernetes without changing your pipeline code.

ZenML meets enterprise-grade security needs with features like detailed lineage tracking and audit logs. Each pipeline run generates comprehensive metadata, including information on data sources, model versions, and execution environments. This level of transparency is crucial for regulatory compliance.

The framework also includes role-based access control, allowing organizations to define precisely who can access specific pipelines, artifacts, or environments. This ensures sensitive data and models are protected while still enabling collaboration across teams.

For model governance, ZenML offers automatic versioning, approval workflows, and deployment gates. These tools allow teams to enforce validation policies, reducing the risk of deploying untested or problematic models into production.
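A deployment gate reduces to a policy check that must pass before a model version changes stage. The sketch below is framework-free and hypothetical: ZenML has its own model versioning and stage management, and the thresholds, stage names, and `ModelVersion` class here are assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ModelVersion:
    name: str
    version: int
    metrics: dict
    stage: str = "staging"
    approvals: list = field(default_factory=list)


# Hypothetical policy: minimum metric thresholds plus at least one human approval.
POLICY = {"min_metrics": {"accuracy": 0.90, "recall": 0.80}, "required_approvals": 1}


def promote(mv: ModelVersion, policy: dict = POLICY) -> ModelVersion:
    """Move a model version to production only if every policy check passes."""
    failures = [
        f"{metric}={mv.metrics.get(metric)} < {threshold}"
        for metric, threshold in policy["min_metrics"].items()
        if mv.metrics.get(metric, float("-inf")) < threshold
    ]
    if len(mv.approvals) < policy["required_approvals"]:
        failures.append("missing approval")
    if failures:
        raise PermissionError(f"{mv.name} v{mv.version} blocked: {', '.join(failures)}")
    mv.stage = "production"
    return mv


v3 = ModelVersion("churn", 3, {"accuracy": 0.93, "recall": 0.76}, approvals=["reviewer"])
try:
    promote(v3)
except PermissionError as err:
    print(err)  # recall is below its threshold, so v3 stays in staging

v4 = ModelVersion("churn", 4, {"accuracy": 0.92, "recall": 0.84}, approvals=["reviewer"])
print(promote(v4).stage)
```

Collecting every failure before raising gives reviewers the full list of reasons a version was blocked, not just the first.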

ZenML’s architecture supports scaling from small, local experiments to large, distributed cloud deployments. Features like step caching help save time and reduce costs by reusing results from unchanged pipeline steps.

For high-demand workloads, ZenML integrates with Kubernetes-based orchestrators, enabling automatic scaling of compute resources. This elasticity ensures that teams can handle fluctuating computational needs without overcommitting resources.

Additionally, pipeline parallelization allows independent steps to run simultaneously, optimizing resource usage and cutting down execution times for even the most complex workflows.

ZenML fosters teamwork through its centralized pipeline registry and shared artifact management. These features allow team members to share and reuse pipeline components, improving efficiency and consistency.

The platform integrates seamlessly with popular tools like Jupyter notebooks and IDEs, letting data scientists work in familiar environments while benefiting from robust pipeline management. It also supports code reviews and version control, ensuring that software engineering best practices are upheld.

With experiment tracking, teams can compare different model versions and pipeline configurations. This capability makes it easier to identify the best-performing solutions and share insights across the organization, enhancing collaboration and decision-making.

Argo Workflows is a container-native workflow engine crafted specifically for Kubernetes environments. This open-source tool is ideal for orchestrating machine learning (ML) pipelines, with each step running in its own isolated container - a perfect fit for teams leveraging Kubernetes.

The platform employs a declarative YAML-based approach to define workflows. This allows data scientists and ML engineers to outline their entire pipeline logic in a way that is version-controlled and reproducible. Each workflow step operates independently within its own container, ensuring isolation and preventing dependency conflicts. This container-centric design integrates seamlessly with Kubernetes, making it a natural choice for containerized ML pipelines.

Argo Workflows works effortlessly within the broader Kubernetes ecosystem. It integrates with popular container registries such as Docker Hub, Amazon ECR, and Google Container Registry, enabling teams to pull pre-built ML images or custom containers with ease.

Thanks to its container-first architecture, Argo can orchestrate a variety of tools, whether you're running TensorFlow jobs, PyTorch experiments, or custom scripts for data preprocessing. The platform's flexibility ensures that diverse components can be coordinated within a unified pipeline.

For artifact management, Argo supports multiple storage backends, including Amazon S3, Google Cloud Storage, and Azure Blob Storage. This allows teams to store and retrieve datasets, model checkpoints, and results using their preferred cloud storage solutions, avoiding vendor lock-in.

Argo Workflows relies on Kubernetes role-based access control (RBAC) for security. Organizations can define granular permissions that control who can create, modify, or run workflows, keeping sensitive ML pipelines protected while still allowing collaborative development.
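A minimal sketch of what such permissions might look like: a read-only Role and a submit-capable Role over Argo's `workflows` and `workflowtemplates` resources, each bound to an identity-provider group. The namespace, role, and group names are hypothetical, and how these rules apply to users of the Argo UI depends on how the Argo Server's authentication is configured.

```python
import json

RBAC_GROUP = "rbac.authorization.k8s.io"


def workflow_role(namespace: str, name: str, can_submit: bool) -> dict:
    """A namespaced Role over Argo workflow resources; read-only unless can_submit."""
    verbs = ["get", "list", "watch"]
    if can_submit:
        verbs += ["create", "update", "patch", "delete"]
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": name, "namespace": namespace},
        "rules": [{
            "apiGroups": ["argoproj.io"],
            "resources": ["workflows", "workflowtemplates"],
            "verbs": verbs,
        }],
    }


def bind_group(role: dict, group: str) -> dict:
    """Grant `role` to every member of a (hypothetical) identity-provider group."""
    meta = role["metadata"]
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{meta['name']}-{group}", "namespace": meta["namespace"]},
        "subjects": [{"kind": "Group", "name": group, "apiGroup": RBAC_GROUP}],
        "roleRef": {"kind": "Role", "name": meta["name"], "apiGroup": RBAC_GROUP},
    }


if __name__ == "__main__":
    viewer = workflow_role("ml-team", "workflow-viewer", can_submit=False)
    editor = workflow_role("ml-team", "workflow-editor", can_submit=True)
    manifests = [viewer, editor, bind_group(viewer, "analysts"), bind_group(editor, "ml-engineers")]
    print(json.dumps(manifests, indent=2))
```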

The platform also records execution history through Kubernetes events and workflow logs. Each workflow's status captures which steps ran, when they started and finished, and an estimate of the resources they consumed. This transparency supports compliance requirements and simplifies troubleshooting for complex pipelines.

For sensitive information, Argo relies on Kubernetes Secrets. Teams can inject API keys, database credentials, and other sensitive values into workflow steps at runtime without writing them into the workflow YAML, so pipelines can reach the resources they need without exposing credentials.
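The pattern is the standard Kubernetes one: the step's container references a Secret by name and key, and the value is resolved only inside the running pod. The sketch below shows this with a hypothetical step and a hypothetical Secret called `feature-db-credentials`, which would be created separately (for example with `kubectl create secret generic feature-db-credentials --from-literal=password=<value>`).

```python
import json


def with_secret_env(container: dict, env_name: str, secret_name: str, secret_key: str) -> dict:
    """Return a copy of `container` with one env var read from a Kubernetes Secret at runtime."""
    env = list(container.get("env", []))  # copy so the input dict is not mutated
    env.append({
        "name": env_name,
        "valueFrom": {"secretKeyRef": {"name": secret_name, "key": secret_key}},
    })
    return {**container, "env": env}


# Hypothetical step; the manifest stores only a reference to the Secret, never its value.
load_step = {
    "name": "load-features",
    "container": with_secret_env(
        {
            "image": "python:3.11-slim",
            "command": ["python", "-c", "import os; print(bool(os.environ.get('DB_PASSWORD')))"],
        },
        env_name="DB_PASSWORD",
        secret_name="feature-db-credentials",
        secret_key="password",
    ),
}

if __name__ == "__main__":
    print(json.dumps(load_step, indent=2))
```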

Argo Workflows scales by running each workflow step as a pod that Kubernetes can place on any suitable node. When a pipeline has parallel tasks, their pods are scheduled across the available cluster resources, which raises throughput for compute-heavy ML workloads.
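As an illustration, a hyperparameter sweep can fan out into one pod per value. The sketch below uses a DAG task with `withItems`, which Argo expands into one task per item, plus the workflow-level `parallelism` field, which caps how many pods run at once. The learning rates, image, and template names are hypothetical; the field names reflect our understanding of the Argo schema.

```python
import json


def fan_out_workflow(learning_rates: list[str], max_parallel: int) -> dict:
    """One training task per learning rate, with at most `max_parallel` pods at once."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Workflow",
        "metadata": {"generateName": "lr-sweep-"},
        "spec": {
            "entrypoint": "sweep",
            # Caps how many pods from this workflow run concurrently.
            "parallelism": max_parallel,
            "templates": [
                {
                    "name": "sweep",
                    "dag": {"tasks": [{
                        "name": "train",
                        "template": "train-one",
                        # Argo expands withItems into one task per item; {{item}} is each value.
                        "withItems": list(learning_rates),
                        "arguments": {"parameters": [{"name": "lr", "value": "{{item}}"}]},
                    }]},
                },
                {
                    "name": "train-one",
                    "inputs": {"parameters": [{"name": "lr"}]},
                    "container": {
                        "image": "python:3.11-slim",
                        "command": ["python", "-c", "import sys; print('lr =', sys.argv[1])"],
                        "args": ["{{inputs.parameters.lr}}"],
                    },
                },
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical sweep: three learning rates, two running at a time.
    print(json.dumps(fan_out_workflow(["0.1", "0.01", "0.001"], max_parallel=2), indent=2))
```

Argo only creates the pods; which node each one lands on is left to the Kubernetes scheduler, which is why adding nodes raises throughput without changing the workflow.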

With its resource management features, teams can define the CPU, memory, and GPU needs for each workflow step. This ensures that compute-intensive training tasks get the resources they require, while lighter steps avoid wasting cluster capacity.
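Per-step sizing uses ordinary Kubernetes `requests` and `limits` on each template's container. The sketch below gives a light preprocessing step a small footprint and a training step four CPUs, 16 GiB of memory, and one GPU. The sizes are hypothetical, and the `nvidia.com/gpu` resource name assumes the cluster runs the NVIDIA device plugin.

```python
import json


def step_resources(cpu: str, memory: str, gpus: int = 0) -> dict:
    """Kubernetes requests/limits for one workflow step."""
    # Memory limit equals the request so a step cannot grow past what was reserved for it.
    resources = {"requests": {"cpu": cpu, "memory": memory}, "limits": {"memory": memory}}
    if gpus:
        # Extended resources such as GPUs go under limits; this name assumes the NVIDIA device plugin.
        resources["limits"]["nvidia.com/gpu"] = str(gpus)
    return resources


# Hypothetical sizes: a light preprocessing step and a GPU training step.
templates = [
    {
        "name": "preprocess",
        "container": {"image": "python:3.11-slim", "command": ["echo", "preprocess"],
                      "resources": step_resources("500m", "1Gi")},
    },
    {
        "name": "train",
        "container": {"image": "python:3.11-slim", "command": ["echo", "train"],
                      "resources": step_resources("4", "16Gi", gpus=1)},
    },
]

if __name__ == "__main__":
    print(json.dumps(templates, indent=2))
```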

For large-scale operations, Argo offers workflow templates that can be parameterized and reused across different datasets or model setups. This reduces redundancy and simplifies scaling consistent ML processes across multiple projects or environments.
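A minimal sketch of that reuse pattern: a `WorkflowTemplate` declares default parameters, and each run is a small `Workflow` that points at the template through `workflowTemplateRef` and overrides only what differs. The template name, image, and S3 paths are hypothetical; the field names and the `{{workflow.parameters.*}}` substitution syntax reflect our understanding of Argo's templating.

```python
import json

# Hypothetical template name, image, and default parameter values.
TRAIN_TEMPLATE = {
    "apiVersion": "argoproj.io/v1alpha1",
    "kind": "WorkflowTemplate",
    "metadata": {"name": "train-model"},
    "spec": {
        "entrypoint": "train",
        # Defaults that each run may override.
        "arguments": {"parameters": [
            {"name": "dataset", "value": "s3://example-bucket/default.csv"},
            {"name": "epochs", "value": "10"},
        ]},
        "templates": [{
            "name": "train",
            "container": {
                "image": "python:3.11-slim",
                "command": ["echo"],
                # Argo substitutes {{workflow.parameters.*}} when the workflow runs.
                "args": ["training on {{workflow.parameters.dataset}} for {{workflow.parameters.epochs}} epochs"],
            },
        }],
    },
}


def run_from_template(template_name: str, **params: object) -> dict:
    """A Workflow that reuses a stored WorkflowTemplate with per-run parameter overrides."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Workflow",
        "metadata": {"generateName": f"{template_name}-"},
        "spec": {
            "workflowTemplateRef": {"name": template_name},
            "arguments": {"parameters": [{"name": k, "value": str(v)} for k, v in params.items()]},
        },
    }


if __name__ == "__main__":
    # The same template, pointed at two different (hypothetical) datasets.
    for ds in ("s3://example-bucket/churn.csv", "s3://example-bucket/fraud.csv"):
        print(json.dumps(run_from_template("train-model", dataset=ds, epochs=20)))
```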

Argo Workflows helps control costs because each step's container runs only while that step executes. Pods are created on demand and terminate once their task completes, so finished steps do not keep holding cluster resources.

Steps can also be scheduled onto spot or preemptible node groups using standard Kubernetes node selectors and tolerations, letting teams use discounted cloud compute for fault-tolerant ML tasks. Configurable retry strategies rerun steps that are interrupted, which makes Argo a cost-effective option for training on preemptible infrastructure.
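Here is a sketch of a training template pinned to spot capacity with retries enabled. The node label and taint are hypothetical, since each cloud provider and cluster setup labels spot nodes differently; the `nodeSelector`, `tolerations`, and `retryStrategy` fields reflect our understanding of Argo's template schema.

```python
import json

# Hypothetical label and taint; the real ones depend on how your spot node group is configured.
SPOT_NODE_SELECTOR = {"node-pool": "spot"}
SPOT_TOLERATION = {"key": "spot", "operator": "Equal", "value": "true", "effect": "NoSchedule"}


def spot_training_step(name: str, image: str, max_retries: int = 3) -> dict:
    """A template pinned to spot nodes that Argo retries if the step fails or errors."""
    return {
        "name": name,
        "nodeSelector": dict(SPOT_NODE_SELECTOR),
        "tolerations": [dict(SPOT_TOLERATION)],
        "retryStrategy": {
            "limit": str(max_retries),
            # "Always" retries both step failures and errors, such as a pod lost when a node is reclaimed.
            "retryPolicy": "Always",
            # Wait 30s before the first retry, doubling the wait each time.
            "backoff": {"duration": "30s", "factor": "2"},
        },
        # train.py is a placeholder; it should resume from a checkpoint so a retry does not start over.
        "container": {"image": image, "command": ["python", "train.py"]},
    }


if __name__ == "__main__":
    print(json.dumps(spot_training_step("train", "python:3.11-slim"), indent=2))
```

Retries only pay off if the training code checkpoints regularly; otherwise every preemption throws away all progress made so far.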

Each tool discussed above has its own strengths and weaknesses, and those trade-offs should shape a team's choice.

Prompts.ai simplifies AI orchestration by unifying access to over 35 language models. Its TOKN credit system can reduce costs by up to 98%, all while maintaining robust enterprise-grade security.

Apache Airflow is backed by a mature ecosystem, offering extensive plugins and reliable logging. However, it has a steep learning curve and requires significant resources to run and maintain.

Prefect stands out with its user-friendly interface and hybrid execution capabilities. That said, it has fewer integrations, and advanced features are reserved for paid tiers.

Dagster enhances data pipeline management with strong typing and asset lineage. However, it has a steep learning curve and limited adoption in larger enterprises.

Flyte excels in Kubernetes-based containerization, versioning, and reproducibility, making it a solid choice for machine learning workflows. However, its complexity and reliance on Kubernetes may pose challenges for smaller teams.

MLRun offers a complete machine learning lifecycle solution, including automated scaling and an integrated feature store. However, its setup process is complex, and it raises potential vendor lock-in concerns.

Metaflow, developed by Netflix, is built for scalable data science workflows. It is user-friendly, but it is heavily centered on AWS infrastructure and offers limited support for highly complex workflows.

Kedro emphasizes modular pipeline design and a detailed data catalog that support reproducibility. On the downside, its native orchestration capabilities are limited, and new users face a learning curve.

ZenML targets MLOps with strong tool integrations and effective experiment tracking. As a younger platform, it has a smaller community, which can mean fewer learning resources and less community support.

Argo Workflows is Kubernetes-native, offering container isolation and declarative YAML configuration. However, it demands significant Kubernetes expertise, and its YAML files can become complex to manage.

The table below summarizes the key advantages and limitations of each tool:

| Tool | Key Advantages | Primary Limitations |
|---|---|---|
| Prompts.ai | Unified AI orchestration, up to 98% cost savings, strong security | N/A |
| Apache Airflow | Mature ecosystem, extensive plugins, reliable logging | Steep learning curve, resource-intensive |
| Prefect | User-friendly interface, hybrid execution, automatic retries | Limited integrations, paid advanced features |
| Dagster | Strong typing, asset lineage, data-aware orchestration | Steep learning curve, limited enterprise adoption |
| Flyte | Kubernetes-based containerization, versioning, multi-tenancy | High complexity, Kubernetes dependency |
| MLRun | End-to-end ML lifecycle, auto-scaling, integrated feature store | Complex setup, vendor lock-in concerns |
| Metaflow | Proven scalability, data science-friendly, easy to use | AWS-centric, limited support for complex workflows |
| Kedro | Modular pipelines, comprehensive data catalog, reproducibility | Limited native orchestration, learning overhead |
| ZenML | MLOps-focused, strong tool integration, experiment tracking | Younger platform, smaller community |
| Argo Workflows | Kubernetes-native, container isolation, declarative YAML configuration | Requires Kubernetes expertise, YAML complexity |

Choosing the right tool depends on your team's technical expertise, infrastructure, and workflow needs. Teams with Kubernetes knowledge might lean toward Flyte or Argo Workflows, while those prioritizing ease of use could find Prefect or Prompts.ai more appealing. For data-heavy processes, Dagster's asset-focused approach shines, while research-driven teams may benefit from tools like Metaflow or Kedro.

Selecting a machine learning (ML) workflow tool comes down to your organization's goals, expertise, and operational priorities. Each tool covers different stages of the ML lifecycle with different levels of complexity and specialization, so focus on the features your team actually needs.

For US-based organizations aiming to cut costs and simplify AI access, Prompts.ai is a strong option: it brings over 35 leading language models into a single platform, and its TOKN credit system can deliver cost savings of up to 98%. Teams experienced with Kubernetes might prefer Flyte or Argo Workflows, which are built for cloud-native environments where scalability and containerization matter most.

If ease of use is a top priority, tools like Prefect or Metaflow offer intuitive interfaces, reducing onboarding time for data science teams. This is especially beneficial for US companies navigating the ongoing shortage of skilled AI and ML professionals. Meanwhile, data-intensive enterprises - especially those in regulated industries like financial services or healthcare - may find Dagster’s asset-centric approach invaluable. Its strong typing and comprehensive lineage tracking help meet strict compliance requirements while managing complex datasets.

When evaluating tools, consider factors like integration capabilities, governance features, scalability, and cost. Take stock of your current infrastructure, team expertise, and compliance needs before committing to a platform. Starting with a pilot project can help assess workflow complexity, performance, and team adoption before making larger-scale decisions.
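One way to make that evaluation repeatable is a simple weighted scoring matrix fed by pilot results. The criteria below mirror the factors named above; the weights, the 1 to 5 rating scale, and the `tool_a`/`tool_b` ratings are placeholders, not measurements of any real tool.

```python
# Hypothetical weights for the criteria named above; adjust to your priorities (they should sum to 1).
WEIGHTS = {"integration": 0.30, "governance": 0.25, "scalability": 0.25, "cost": 0.20}


def weighted_score(scores: dict[str, float]) -> float:
    """Combine 1-5 ratings per criterion into a single weighted score."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return round(sum(weight * scores[name] for name, weight in WEIGHTS.items()), 2)


def rank(candidates: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Candidates sorted from best to worst weighted score."""
    scored = [(tool, weighted_score(s)) for tool, s in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Placeholder ratings from a hypothetical pilot; replace with your own findings.
    pilot = {
        "tool_a": {"integration": 4, "governance": 3, "scalability": 5, "cost": 2},
        "tool_b": {"integration": 3, "governance": 5, "scalability": 3, "cost": 4},
    }
    for tool, score in rank(pilot):
        print(f"{tool}: {score}")
```

Refusing to score a tool with a missing rating keeps incomplete pilots from silently ranking higher or lower than they should.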

Ultimately, choose a solution that not only meets your current needs but also grows with your organization, ensuring security, compliance, and long-term efficiency.

When choosing a tool to manage machine learning workflows, there are several important factors to keep in mind to ensure it aligns with your team's needs. Team expertise plays a major role - certain tools, such as those that rely on Kubernetes, can be challenging for teams without prior experience, potentially creating unnecessary hurdles.

Another key consideration is integration capabilities. The tool should blend smoothly with your existing tech stack, including critical components like data warehouses, version control systems, and other parts of your ML pipeline. A seamless fit can save time and reduce operational friction.

For smaller or expanding teams, it's wise to prioritize tools that are user-friendly and come with a manageable learning curve. This lowers the barriers to entry, enabling quicker implementation and reducing onboarding difficulties. Lastly, tools equipped with built-in monitoring and alerting systems can be invaluable. These features allow for rapid identification and resolution of workflow issues, saving both time and effort.

Selecting the right tool not only simplifies your machine learning processes but also boosts overall productivity and efficiency.

Integrating machine learning tools into workflows can transform how teams handle model development by automating essential stages like data preprocessing, training, and deployment. This automation not only cuts down on manual effort but also speeds up project timelines, allowing teams to achieve results faster.

These tools also make it more practical to scale up to large datasets, support reproducibility through version control for both models and datasets, and work with widely used ML libraries and cloud platforms. With that complexity handled, teams can spend their energy on innovation and important challenges instead of repetitive tasks.
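A common technique behind reproducible dataset and model versions is content addressing: the version ID is derived from a hash of the bytes, so identical data always gets the same ID and any change produces a new one. The sketch below uses SHA-256 from Python's standard library; `run_record` and the 12-character ID length are illustrative choices, not the mechanism of any particular tool in this article.

```python
import hashlib
import tempfile
from pathlib import Path


def content_version(data: bytes) -> str:
    """Short, deterministic version ID derived from the content itself."""
    return hashlib.sha256(data).hexdigest()[:12]


def file_version(path: Path, chunk_size: int = 1 << 20) -> str:
    """Same ID as content_version(path.read_bytes()), computed in chunks for large files."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()[:12]


def run_record(dataset: Path, model: Path, params: dict) -> dict:
    """Pin a training run to the exact dataset and model bytes it used (hypothetical record format)."""
    return {
        "dataset_version": file_version(dataset),
        "model_version": file_version(model),
        "params": dict(params),
    }


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        data = Path(tmp, "train.csv")
        data.write_bytes(b"x,y\n1,2\n")
        model = Path(tmp, "model.bin")
        model.write_bytes(b"weights")
        print(run_record(data, model, {"epochs": 10}))
```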

When choosing tools for machine learning orchestration, it's crucial to focus on strong security and governance capabilities to safeguard sensitive information and meet compliance requirements. Seek out tools that include role-based access control, end-to-end encryption, and automated compliance checks to adhere to industry regulations.

Key features to consider also include IP allowlisting to manage access, data encryption both at rest and in transit, and support for secure authentication methods like SAML 2.0. These measures work together to protect your workflows, uphold data integrity, and ensure your machine learning operations remain secure and compliant.
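At its core, IP allowlisting is a check of the client address against approved network ranges. A minimal sketch with Python's standard `ipaddress` module follows; the ranges are hypothetical (taken from documentation-reserved address blocks), and in production this check usually lives in a firewall, load balancer, or the platform's own access settings rather than in application code.

```python
import ipaddress

# Hypothetical allowlist: an office range, a single VPN egress address, and an IPv6 range.
ALLOWLIST = [
    ipaddress.ip_network(cidr)
    for cidr in ("203.0.113.0/24", "198.51.100.7/32", "2001:db8::/32")
]


def is_allowed(client_ip: str) -> bool:
    """True only if the address parses and falls inside an allowlisted network."""
    try:
        addr = ipaddress.ip_address(client_ip)
    except ValueError:
        return False  # malformed input is rejected rather than allowed
    # Membership tests across IPv4/IPv6 simply return False, so mixed lists are safe.
    return any(addr in net for net in ALLOWLIST)


if __name__ == "__main__":
    for ip in ("203.0.113.42", "198.51.100.8", "2001:db8::1", "not-an-ip"):
        print(ip, is_allowed(ip))
```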