Artificial intelligence offers immense potential but brings critical security challenges. Deploying AI without proper safeguards risks data breaches, regulatory penalties, and reputational harm. To address these challenges, four platforms stand out for securing AI deployments: Prompts.ai, Protect AI, AWS SageMaker, and Google Vertex AI. Each provides advanced tools for encryption, access control, compliance, and monitoring.

| Platform | Key Strengths | Ideal For |
|---|---|---|
| Prompts.ai | Centralized control, compliance tools | Enterprises managing sensitive data |
| Protect AI | AI-specific threat detection | Teams focused on AI security monitoring |
| AWS SageMaker | Enterprise security, cloud scalability | Organizations in AWS ecosystems |
| Google Vertex AI | Integrated Google Cloud security | Teams using Google Cloud infrastructure |

Choose the platform that aligns with your infrastructure, security priorities, and compliance requirements to ensure safe and efficient AI deployment.

Prompts.ai serves as a centralized platform for enterprise AI orchestration, bringing together over 35 models within a secure and user-friendly interface. It addresses the complexities of managing multiple AI tools, particularly for organizations that deal with sensitive data and adhere to stringent compliance requirements.

Security lies at the core of Prompts.ai's design. The platform uses Prompt Guard to block jailbreak attempts and enforce policies, ensuring prompt-level protection. It also features automatic PII redaction, which removes sensitive information before processing, safeguarding data integrity. To support compliance, tamper-evident audit logging provides a reliable record of all activities.
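The source does not describe how redaction or tamper-evident logging is implemented, so the following is only a minimal sketch of the two general techniques, not Prompts.ai's code. The regex patterns, the `redact_pii` function, and the `AuditLog` class are all hypothetical names chosen for illustration. The log is made tamper-evident by hash chaining: each entry's hash covers the previous entry's hash, so editing any earlier record breaks verification.

```python
import hashlib
import json
import re

# Hypothetical patterns for illustration; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact_pii(text):
    """Replace each pattern match with a placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


class AuditLog:
    """Append-only log in which each entry's hash covers the previous hash."""

    GENESIS = "0" * 64  # fixed starting value for the hash chain

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(prev_hash, event):
        payload = prev_hash + json.dumps(event, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, event):
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        self.entries.append({"event": event, "hash": self._digest(prev_hash, event)})

    def verify(self):
        """Recompute the chain; any edited or reordered entry makes this False."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            if entry["hash"] != self._digest(prev_hash, entry["event"]):
                return False
            prev_hash = entry["hash"]
        return True
```

In practice, a prompt would pass through `redact_pii` before it reaches a model, and the redacted request would be recorded with `AuditLog.append`. A production system would also anchor the latest hash somewhere the application cannot rewrite.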

Prompts.ai is built to meet rigorous standards, including SOC 2 Type II, HIPAA, and GDPR. SOC 2 Type II audits commenced on June 19, 2025, with continuous monitoring facilitated by Vanta. These measures establish a strong compliance framework, ensuring secure operations.

The platform's Trust Center offers real-time insights into security status, alerting administrators to potential issues before they escalate. Subscribers to business plans benefit from active compliance monitoring, which enhances security by identifying risks early. This robust compliance infrastructure enables the platform to scale securely while maintaining high standards.

Prompts.ai is designed for large-scale enterprise use, supporting unlimited workspaces and collaborators. The platform transforms tasks into scalable, secure processes while maintaining consistent security protocols. Users can add new models, teams, and collaborators instantly without compromising security. With storage pooling, it handles expanding data requirements efficiently, eliminating bottlenecks. Its governance tools provide complete visibility and auditability, even in the most complex deployments.

Prompts.ai employs a usage-based pricing model built on TOKN credits, aligning costs with actual AI consumption so that organizations do not pay for unused capacity.

Prompts.ai's flexible pricing ensures accessibility for individuals and scalability for enterprises, making it a versatile choice for AI orchestration.

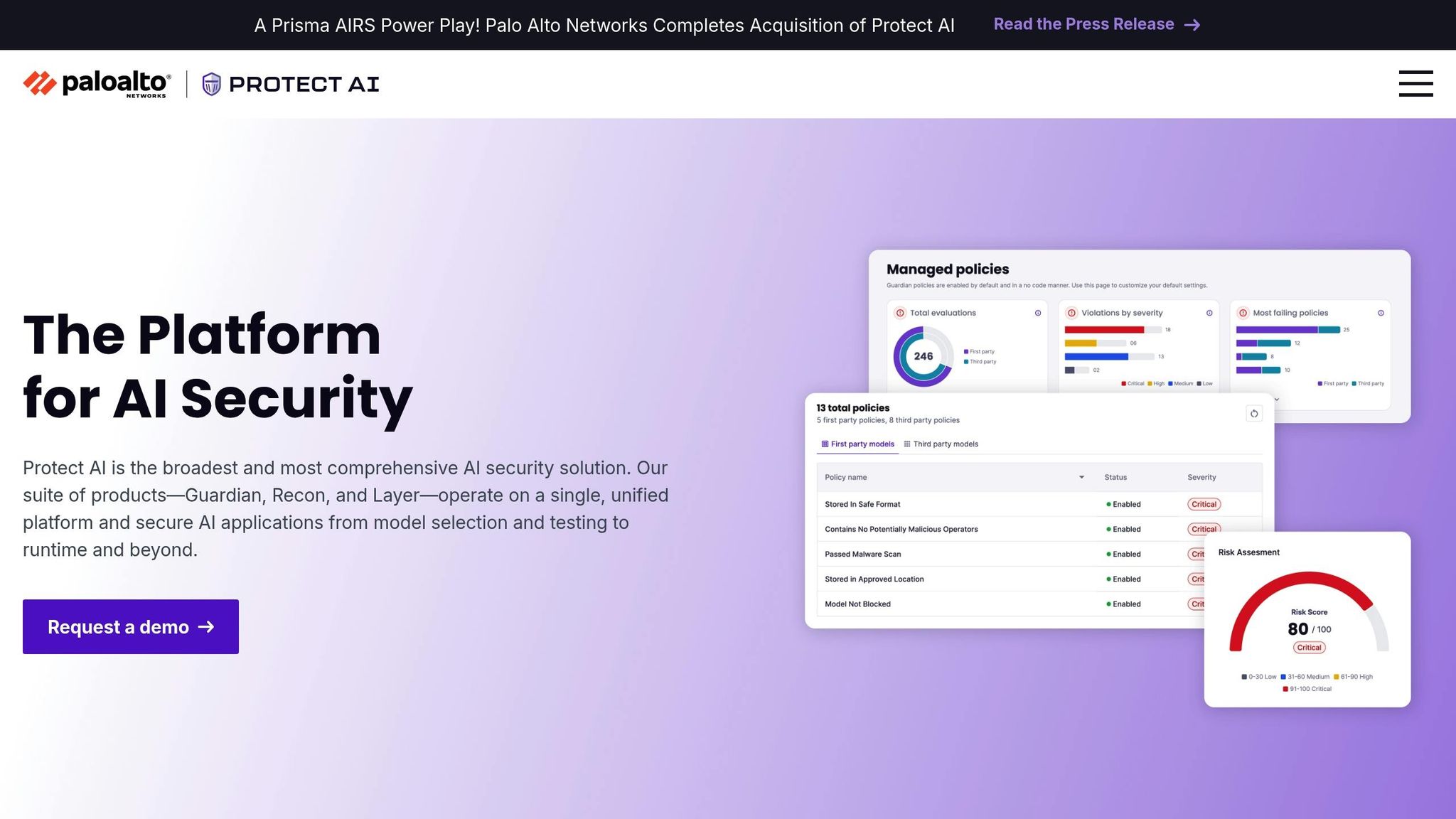

Protect AI is a security platform tailored for safeguarding AI deployments. With its "Secure By Design" philosophy, it proactively tackles emerging threats in the AI landscape.

The platform organizes its AI security coverage around three core products.

What sets Protect AI apart is its robust threat intelligence framework. By collaborating with Hugging Face and leveraging insights from 17,000+ security researchers within the huntr community, the platform has scanned over 4,840,000 model versions and contributed 2,520 CVE records. This extensive database offers unmatched insight into AI-specific vulnerabilities.

Additionally, Protect AI employs 500+ threat scanners to ensure round-the-clock monitoring across the entire AI deployment lifecycle. From model selection to runtime monitoring, this comprehensive scanning ensures security at every stage, creating a solution that scales effortlessly to meet enterprise needs.
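To make the idea of scanning a model artifact concrete, here is a minimal sketch of one well-known check: inspecting a pickle-serialized model for imports of dangerous modules without ever loading it. This is not Protect AI's scanner. The deny-list, the `find_unsafe_imports` function, and the `_Demo` class are hypothetical, and the handling of `STACK_GLOBAL` is a heuristic that assumes the module and attribute names were the last two strings pushed.

```python
import os
import pickle
import pickletools

# Hypothetical deny-list for illustration; real scanners track many more modules.
UNSAFE_MODULES = {"os", "posix", "nt", "subprocess", "builtins", "sys"}


def find_unsafe_imports(data):
    """Return 'module.name' references in a pickle stream that hit the deny-list."""
    findings = []
    strings = []  # string arguments seen so far, needed for STACK_GLOBAL
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name == "GLOBAL":
            # Older protocols carry "module name" as one space-separated argument.
            module, name = arg.split(" ", 1)
        elif opcode.name == "STACK_GLOBAL" and len(strings) >= 2:
            # Newer protocols push module and name as two separate strings.
            module, name = strings[-2], strings[-1]
        else:
            if isinstance(arg, str):
                strings.append(arg)
            continue
        if module.split(".")[0] in UNSAFE_MODULES:
            findings.append(f"{module}.{name}")
    return findings


class _Demo:
    """Stand-in for a malicious artifact: unpickling it would call os.system."""

    def __reduce__(self):
        return (os.system, ("echo hi",))


suspicious = pickle.dumps(_Demo())  # serialized only, never loaded
benign = pickle.dumps({"weights": [0.1, 0.2]})
print(find_unsafe_imports(suspicious))
print(find_unsafe_imports(benign))
```

The scan works on the opcode stream alone, so the file is never executed. A real scanner would cover other serialization formats and a far larger set of risky callables.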

Designed for large-scale operations, Protect AI’s modular architecture and seamless integrations make it easy for organizations to expand their security measures as workloads grow. The platform’s community-driven model, supported by 8,000+ MLSecOps members, ensures continuous updates and improvements to counter evolving threats.

This adaptability ensures that Protect AI remains equipped to tackle the challenges of next-generation AI deployments, delivering secure operations without interruptions.

Beyond its technical capabilities, Protect AI adheres to key industry standards and has earned notable recognition. The platform was honored with the SINET16 Innovator Award 2024 for its forward-thinking approach to AI security. It was also named to Inc. Best Workplaces 2024 and recognized as an Enterprise Security Tech Cyber Top Company 2024.

These accolades underscore Protect AI's ability to maintain rigorous security standards while fostering a supportive and productive environment for development teams. The recognition from Enterprise Security Tech highlights the platform’s effectiveness in addressing complex enterprise-level security challenges, ensuring secure and reliable AI operations.

AWS SageMaker is a machine learning platform tailored for secure AI workflows, offering a complete suite of tools to safeguard AI workloads from development through production. Built on AWS’s trusted cloud infrastructure, it ensures robust protection while delivering enterprise-level capabilities.

SageMaker employs multi-layered security measures. Data at rest is encrypted with keys managed in AWS Key Management Service (KMS), which gives organizations control over their encryption keys, and data in transit is protected with TLS.

To protect sensitive AI models, SageMaker can run training jobs and inference endpoints inside private Virtual Private Clouds (VPCs) so that traffic stays off public networks. Users can also enable network isolation, which blocks outbound network access from the training container and creates a tightly isolated environment suited to highly sensitive operations.

The platform also provides fine-grained Identity and Access Management (IAM) controls, enabling precise permissions for resources. Model artifacts are automatically encrypted, and a secure model registry with detailed lineage tracking simplifies auditing and enhances security across the ML lifecycle.
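The controls described above correspond to parameters of the SageMaker `CreateTrainingJob` API. The sketch below only assembles a request dictionary, so it runs without AWS credentials. The function name `build_training_job_request`, the instance type, volume size, and runtime limit are illustrative assumptions, and every identifier passed in would be your own.

```python
def build_training_job_request(
    job_name,
    image_uri,
    role_arn,
    output_s3_uri,
    kms_key_id,
    subnet_ids,
    security_group_ids,
):
    """Assemble CreateTrainingJob parameters with the security options enabled."""
    return {
        "TrainingJobName": job_name,
        "AlgorithmSpecification": {
            "TrainingImage": image_uri,
            "TrainingInputMode": "File",
        },
        # IAM role that scopes what the job may read and write.
        "RoleArn": role_arn,
        # Model artifacts written to S3 are encrypted with the given KMS key.
        "OutputDataConfig": {"S3OutputPath": output_s3_uri, "KmsKeyId": kms_key_id},
        "ResourceConfig": {
            "InstanceType": "ml.m5.xlarge",  # assumed size for illustration
            "InstanceCount": 1,
            "VolumeSizeInGB": 50,
            # Encrypts the training instance's attached storage volume.
            "VolumeKmsKeyId": kms_key_id,
        },
        "StoppingCondition": {"MaxRuntimeInSeconds": 3600},
        # Runs the job inside your private VPC subnets.
        "VpcConfig": {"Subnets": subnet_ids, "SecurityGroupIds": security_group_ids},
        # Blocks outbound network calls from the training container.
        "EnableNetworkIsolation": True,
        # Encrypts traffic between instances in distributed training.
        "EnableInterContainerTrafficEncryption": True,
    }


# With boto3 installed and credentials configured, the request could be sent as:
#   boto3.client("sagemaker").create_training_job(**request)
```

Keeping the request in a plain function makes the security settings easy to review and unit test before anything is submitted to AWS.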

SageMaker adheres to rigorous compliance requirements, making it suitable for enterprise-grade AI deployments. It is covered by SOC 1, SOC 2, and SOC 3 reports and complies with standards such as ISO 27001 and PCI DSS. For healthcare applications, it supports HIPAA compliance, and for government workloads, it has achieved FedRAMP authorization.

For organizations operating under GDPR, SageMaker provides tools for automated data lineage tracking and data retention policy implementation, helping meet regulatory mandates. With regular third-party audits and comprehensive logging via AWS CloudTrail, the platform ensures transparency and simplifies compliance reporting by tracking API calls and user actions.
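As an illustration of how CloudTrail records can feed compliance reporting, the sketch below counts SageMaker API calls per principal from a CloudTrail log file. It relies on the standard record fields `eventSource`, `eventName`, and `userIdentity.arn`. The function name `sagemaker_calls_by_principal` is a hypothetical helper, not part of any AWS SDK.

```python
import json
from collections import Counter


def sagemaker_calls_by_principal(log_json):
    """Count (principal ARN, API call) pairs for SageMaker events in a log file."""
    records = json.loads(log_json).get("Records", [])
    counts = Counter()
    for record in records:
        # Skip events from other AWS services.
        if record.get("eventSource") != "sagemaker.amazonaws.com":
            continue
        principal = record.get("userIdentity", {}).get("arn", "unknown")
        counts[(principal, record.get("eventName", "unknown"))] += 1
    return counts
```

A summary like this can show at a glance who created or deleted endpoints during an audit window. Larger deployments would more likely query the logs through a managed analytics service than parse files directly.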

Leveraging AWS’s global infrastructure, SageMaker allows businesses to scale their AI deployments seamlessly across regions. The platform dynamically adjusts compute resources to match demand, ensuring high performance while managing costs effectively.

SageMaker supports distributed training across multiple instances and auto-scaling for inference endpoints, making it capable of handling large models and fluctuating traffic. This scalability aligns perfectly with its security features, enabling efficient and secure AI operations.
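Endpoint auto-scaling is configured through Application Auto Scaling rather than through SageMaker itself. The sketch below builds the two request payloads for a target-tracking policy. The function name `build_endpoint_autoscaling`, the policy name, and the cooldown values are assumptions chosen for illustration.

```python
def build_endpoint_autoscaling(
    endpoint_name, variant_name, min_instances, max_instances, invocations_per_instance
):
    """Return (scalable target, scaling policy) payloads for an endpoint variant."""
    common = {
        "ServiceNamespace": "sagemaker",
        "ResourceId": f"endpoint/{endpoint_name}/variant/{variant_name}",
        "ScalableDimension": "sagemaker:variant:DesiredInstanceCount",
    }
    target = {**common, "MinCapacity": min_instances, "MaxCapacity": max_instances}
    policy = {
        **common,
        "PolicyName": f"{endpoint_name}-target-tracking",  # hypothetical name
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            # Scale to keep average invocations per instance near this value.
            "TargetValue": float(invocations_per_instance),
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "SageMakerVariantInvocationsPerInstance"
            },
            "ScaleInCooldown": 300,  # assumed values, in seconds
            "ScaleOutCooldown": 60,
        },
    }
    return target, policy


# With boto3 installed and credentials configured, these could be applied as:
#   client = boto3.client("application-autoscaling")
#   client.register_scalable_target(**target)
#   client.put_scaling_policy(**policy)
```

Setting a sensible `MaxCapacity` also acts as a cost guardrail, since the endpoint cannot scale past it during a traffic spike.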

SageMaker follows a pay-as-you-go pricing approach, eliminating upfront costs or long-term commitments. Organizations are charged separately for training compute time, inference hosting, and data storage. To help manage expenses, SageMaker offers cost-saving options like Savings Plans and Spot Training, which optimize costs based on usage patterns. For the latest rates, refer to the official AWS pricing page.

Google Vertex AI is Google Cloud's all-in-one platform designed to simplify AI development while maintaining high standards for security and compliance. It combines cutting-edge AI capabilities with a strong focus on safeguarding sensitive data.

Vertex AI takes a layered approach to security, offering tools that give organizations greater control over their data. Customer-managed encryption keys (CMEK) let users control the keys that protect sensitive data and model artifacts. VPC Service Controls (VPC-SC) establish service perimeters that reduce the risk of unauthorized access and data exfiltration. For transparency, Access Transparency logs the actions Google personnel take when accessing customer content. Integrated identity and access management features, such as multi-factor authentication (MFA) and strict password requirements, help ensure that only authorized personnel have access. Data is encrypted both in transit and at rest.
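CMEK is applied by passing a Cloud KMS key resource name when Vertex AI resources are created. The helper below only formats that resource name, so it runs without the Google Cloud SDK. The function name `cmek_key_name` and the example identifiers are hypothetical.

```python
def cmek_key_name(project, location, key_ring, key):
    """Format the Cloud KMS resource name for a customer-managed key."""
    return (
        f"projects/{project}/locations/{location}"
        f"/keyRings/{key_ring}/cryptoKeys/{key}"
    )


key_name = cmek_key_name("demo-project", "us-central1", "ml-keys", "vertex-key")

# With google-cloud-aiplatform installed, the key could be set as the default
# for resources created in this session:
#   from google.cloud import aiplatform
#   aiplatform.init(
#       project="demo-project",
#       location="us-central1",
#       encryption_spec_key_name=key_name,
#   )
```

The key generally needs to be in a location compatible with the Vertex AI resources it protects, and the Vertex AI service agent needs permission to use it.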

Vertex AI aligns with healthcare compliance requirements by supporting HIPAA through a Business Associate Agreement (BAA), which outlines Google Cloud's role in managing Protected Health Information (PHI). The platform incorporates features like secure authentication, audit logging, and data minimization to limit PHI exposure. To further protect sensitive data, it employs differential privacy during model training.
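The article does not say how differential privacy is applied during training. One widely used technique is DP-SGD, which clips each example's gradient and adds Gaussian noise before averaging. The sketch below shows that single step in plain Python under that assumption. It is not Vertex AI's implementation, and the function names are hypothetical.

```python
import math
import random


def clip(gradient, max_norm):
    """Scale a gradient down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in gradient))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in gradient]


def private_mean_gradient(per_example_grads, max_norm, noise_multiplier, rng=random):
    """Clip each example's gradient, add Gaussian noise to the sum, then average."""
    clipped = [clip(g, max_norm) for g in per_example_grads]
    batch_size = len(clipped)
    dim = len(clipped[0])
    # Noise is calibrated to the clipping bound, which caps any one example's influence.
    sigma = noise_multiplier * max_norm
    return [
        (sum(g[i] for g in clipped) + rng.gauss(0.0, sigma)) / batch_size
        for i in range(dim)
    ]
```

Clipping bounds how much any single record, such as one patient's data, can shift the model, and the noise masks what remains. The actual privacy guarantee depends on the noise multiplier, the sampling rate, and the number of training steps, which a privacy accountant would track.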

When evaluating platforms for governance, compliance, and data protection, it's essential to weigh their unique security measures. Here's a closer look at the key features and challenges of several platforms:

Prompts.ai

Prompts.ai offers centralized control with detailed audit trails, ensuring transparency and adherence to security protocols.

Protect AI

Protect AI specializes in threat detection for AI applications. However, its integration into broader AI workflows may require additional consideration.

AWS SageMaker

AWS SageMaker benefits from Amazon's robust cloud infrastructure, which includes extensive compliance frameworks and security certifications. While this provides a strong foundation, configuring its security settings can demand advanced expertise.

Google Vertex AI

Google Vertex AI taps into Google Cloud's powerful security infrastructure, offering configurable options to protect AI deployments. For organizations already using Google Cloud, its seamless integration can be a significant advantage.

The table below compares the platforms' security strengths, challenges, and ideal use cases:

| Platform | Security Strengths | Considerations | Ideal For |
|---|---|---|---|
| Prompts.ai | Centralized control and detailed audit trails | Review security history | Organizations needing secure, centralized AI management |
| Protect AI | Specialized threat detection for AI | Requires integration into broader workflows | Teams focusing on dedicated AI security |
| AWS SageMaker | Extensive compliance frameworks and cloud security | Demands advanced configuration skills | Enterprises leveraging AWS's established ecosystem |
| Google Vertex AI | Integrated protection within Google Cloud | Additional configuration needed for full security | Organizations already invested in Google Cloud |

Selecting the right platform depends on your organization's security priorities and existing infrastructure. To ensure the best fit, review vendor documentation and consider conducting pilot evaluations. Balancing robust security with deployment efficiency is essential for managing AI responsibly.

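Tackling the security concerns outlined earlier requires finding the right balance between safeguarding operations and maintaining efficiency. Selecting a secure AI platform is not only about features; it is about aligning the platform's strengths with your organization's priorities and infrastructure.

As a quick recap: Prompts.ai offers centralized control and compliance tooling, Protect AI focuses on AI-specific threat detection, AWS SageMaker pairs enterprise security with cloud scalability, and Google Vertex AI builds on integrated Google Cloud security.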

When deciding on a platform, consider your current infrastructure, team expertise, and compliance requirements. Running pilot tests can offer valuable insights, helping you evaluate how well a platform fits into your operations and whether it delivers on its security promises in real-world scenarios.

Prompts.ai prioritizes enterprise-level security, making it a dependable option for organizations with strict compliance demands. It employs strong encryption protocols to protect data, ensuring sensitive information stays secure throughout processing and storage.

The platform aligns with top-tier regulatory standards like GDPR and CCPA, simplifying compliance for businesses. Furthermore, Prompts.ai includes advanced access controls and user authentication systems to prevent unauthorized access, offering organizations confidence in their AI deployments.

Protect AI strengthens the security of AI deployments through advanced threat detection powered by the latest research and real-time threat intelligence. These tools allow teams to spot and address potential risks early, reducing the chance of disruptions to operations.

By anticipating and countering new threats, Protect AI helps protect sensitive information, uphold security standards, and preserve the reliability of AI workflows. This empowers organizations to implement AI solutions with increased confidence and assurance.

AWS SageMaker aligns with critical industry standards like HIPAA and FedRAMP, prioritizing strong security and compliance protocols. For organizations operating under HIPAA regulations, SageMaker offers HIPAA-eligible services, detailed guidance through the HIPAA Eligible Services Reference, and a Business Associate Addendum (BAA) to assist in meeting regulatory obligations.

SageMaker is also fully integrated with AWS GovCloud (US), a dedicated region tailored to meet the rigorous requirements of FedRAMP compliance. This ensures a secure environment for government and public sector applications. These features position SageMaker as a dependable option for organizations demanding robust data protection and adherence to strict regulatory standards.