AI workflow orchestration is transforming how businesses manage complex systems. From cutting costs to enhancing compliance, tools like Prompts.ai, Apache Airflow, Camunda, Temporal, Argo Workflows, and Prefect are reshaping enterprise operations. Here's what you need to know:

| Tool | Best For | Pricing | Key Features |
|---|---|---|---|
| Prompts.ai | AI model orchestration | Pay-per-use TOKN credits | Real-time cost tracking, SOC 2 |
| Airflow | General-purpose workflows | Open-source | DAGs, REST API, Kubernetes-ready |
| Camunda | Business process management | Subscription-based | BPMN, scalable deployments |
| Temporal | Fault-tolerant workflows | Open-source/Cloud | Event-driven, multi-language |
| Argo Workflows | Kubernetes-native AI pipelines | Open-source | Container-based, autoscaling |
| Prefect | Python-first AI workflows | $100–$400/month+ | Hybrid cloud, fault tolerance |

Each tool addresses specific needs, from AI orchestration to business process management. Select based on your infrastructure, budget, and team expertise.

Prompts.ai is a dedicated AI orchestration platform designed specifically for managing large language model (LLM) operations and prompt workflows. Unlike general-purpose workflow tools, it focuses exclusively on AI-driven processes, offering a unified interface that connects users to over 35 leading AI models, including GPT-5, Claude, LLaMA, and Gemini.

With robust API integration capabilities, Prompts.ai not only connects to AI models but also integrates seamlessly with popular business tools through standardized REST APIs. This allows organizations to automate workflows across various departments, turning one-off tasks into scalable, repeatable processes that can run continuously. The REST-based design ensures flexibility and adaptability as business needs change.

Prompts.ai is built to handle enterprise-level demands, supporting millions of prompt executions each month. The platform automatically manages resources and parallel processing, enabling organizations to add new models, users, or teams without downtime. It also provides unlimited workspaces and collaboration options, making it ideal for large-scale, distributed AI initiatives. These scaling capabilities are further enhanced by advanced cost management tools.

One standout feature is its cost tracking and optimization system. Prompts.ai delivers real-time analytics on workflow performance, latency, and costs per API call. Organizations can monitor spending across different models and adjust resource allocation based on actual usage. The platform’s pay-as-you-go TOKN credit system replaces traditional subscription models, tying costs directly to usage and eliminating recurring fees.
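To make per-call cost tracking concrete, here is a minimal sketch that aggregates spend and latency by model. The record fields, model names, and per-token prices are hypothetical placeholders chosen for illustration; they are not Prompts.ai's data model or pricing.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class CallRecord:
    """One logged model call (all fields are illustrative)."""
    model: str
    input_tokens: int
    output_tokens: int
    latency_ms: float


# Hypothetical prices in USD per 1,000 tokens: (input, output).
PRICE_PER_1K = {
    "model-a": (0.50, 1.50),
    "model-b": (0.10, 0.30),
}


def call_cost(record):
    """Cost of a single call under the hypothetical price table."""
    price_in, price_out = PRICE_PER_1K[record.model]
    return (record.input_tokens * price_in + record.output_tokens * price_out) / 1000


def summarize(records):
    """Return {model: {"calls", "cost_usd", "avg_latency_ms"}}."""
    totals = defaultdict(lambda: {"calls": 0, "cost_usd": 0.0, "latency_ms": 0.0})
    for r in records:
        t = totals[r.model]
        t["calls"] += 1
        t["cost_usd"] += call_cost(r)
        t["latency_ms"] += r.latency_ms
    return {
        model: {
            "calls": t["calls"],
            "cost_usd": round(t["cost_usd"], 6),
            "avg_latency_ms": t["latency_ms"] / t["calls"],
        }
        for model, t in totals.items()
    }


records = [
    CallRecord("model-a", 1000, 2000, 400.0),
    CallRecord("model-a", 1000, 0, 200.0),
    CallRecord("model-b", 2000, 1000, 100.0),
]
summary = summarize(records)
```

A summary like this is the raw material for comparing models on cost and latency before shifting traffic between them.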

Security is another key strength. Prompts.ai aligns its controls with SOC 2 Type II, HIPAA, and GDPR requirements. Its SOC 2 Type II audit process began on June 19, 2025, and the platform uses Vanta for continuous monitoring of controls. Role-based access control restricts who can reach sensitive workflows, while audit logging records interactions for compliance review.

The platform’s model-agnostic design allows users to compare LLMs side-by-side in real time. This feature helps teams make informed, data-driven decisions about which models are most effective for specific tasks. By identifying the best-performing and most cost-efficient models, organizations can optimize workflows without compromising on quality.

Prompts.ai’s pricing reflects its enterprise-level capabilities, with plans starting at $99 per member per month for the Core tier and $129 per member per month for the Elite tier. The pay-as-you-go structure ensures users only pay for what they use, making it a flexible option for businesses.

For U.S. enterprises navigating regulatory challenges, Prompts.ai offers built-in governance features that provide the visibility and control needed for compliance audits. Its Trust Center delivers real-time monitoring of security posture, addressing the rigorous oversight requirements of regulated industries. With its focus on cost management, compliance, and operational efficiency, Prompts.ai simplifies and streamlines AI workflows for enterprise users.

Apache Airflow is a widely used open-source orchestration platform for automating complex workflows, including AI pipelines. Its REST API and Directed Acyclic Graphs (DAGs) make tasks and their dependencies easier to manage.

REST API Integration and Task Automation

With Airflow's REST API, external systems can trigger workflows, check task statuses, and access execution results, making it an ideal choice for integrating machine learning models and data pipelines. Workflows are structured as DAGs, which automatically handle task sequencing, retry failed tasks based on configurable rules, and log detailed information for troubleshooting.
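As a rough illustration of an external system triggering a workflow, the sketch below builds a request against the DAG-run endpoint of Airflow's stable REST API. It assumes an Airflow 2.x deployment with the `/api/v1` path and basic authentication enabled; newer versions and other auth backends differ. The host, DAG ID `train_model`, credentials, and `conf` payload are hypothetical.

```python
import base64
import json
import urllib.request
from urllib.parse import quote


def build_trigger_request(base_url, dag_id, conf, username, password):
    """Build (but do not send) a request that triggers one DAG run."""
    url = f"{base_url.rstrip('/')}/api/v1/dags/{quote(dag_id, safe='')}/dagRuns"
    body = json.dumps({"conf": conf}).encode("utf-8")
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
    )


def trigger_dag_run(request, timeout=10):
    """Send the request and return the parsed JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


# Hypothetical values; nothing is sent until trigger_dag_run(request) is called.
request = build_trigger_request(
    "http://localhost:8080", "train_model", {"epochs": 3}, "admin", "admin"
)
```

Keeping request construction separate from sending makes the call easy to inspect and test without a running Airflow instance.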

Scalability and Performance

Airflow offers robust scalability options, supporting distributed execution through the CeleryExecutor for multi-node tasks and the KubernetesExecutor for dynamic scaling using pods. Parallelism settings can be fine-tuned at the global, DAG, or task level, ensuring optimal performance for diverse workloads.

Cost Efficiency and Resource Allocation

As an open-source platform, Airflow eliminates licensing costs while providing tools to optimize resource usage. Tasks can be assigned to infrastructure that matches their resource needs: lightweight tasks like data preprocessing can run on smaller instances, while resource-intensive operations such as model training can use GPU-enabled nodes. This flexibility helps organizations allocate resources effectively and avoid unnecessary expenses.

Security and Compliance

Airflow incorporates robust security features, including Role-Based Access Control (RBAC), integration with enterprise authentication systems like LDAP, OAuth, and OpenID Connect, encrypted connections, and external secret management. These features ensure compliance and secure operations.

Enterprise deployments often enhance security by placing Airflow behind firewalls, restricting network access, and using VPNs or private networks to connect with AI model endpoints and data sources. A well-designed deployment architecture is crucial for maintaining network security.

Operational Requirements

Running Airflow effectively requires skilled DevOps professionals to handle monitoring, database management, and system health checks. Tools like Prometheus and Grafana, supported by the Airflow community, can significantly enhance performance monitoring.

While Airflow has a steep learning curve for teams new to workflow orchestration, its extensive community resources and documentation are invaluable for overcoming challenges. Its mix of scalability, flexibility, and cost control makes it a strong choice for orchestrating AI workflows using REST-based systems.

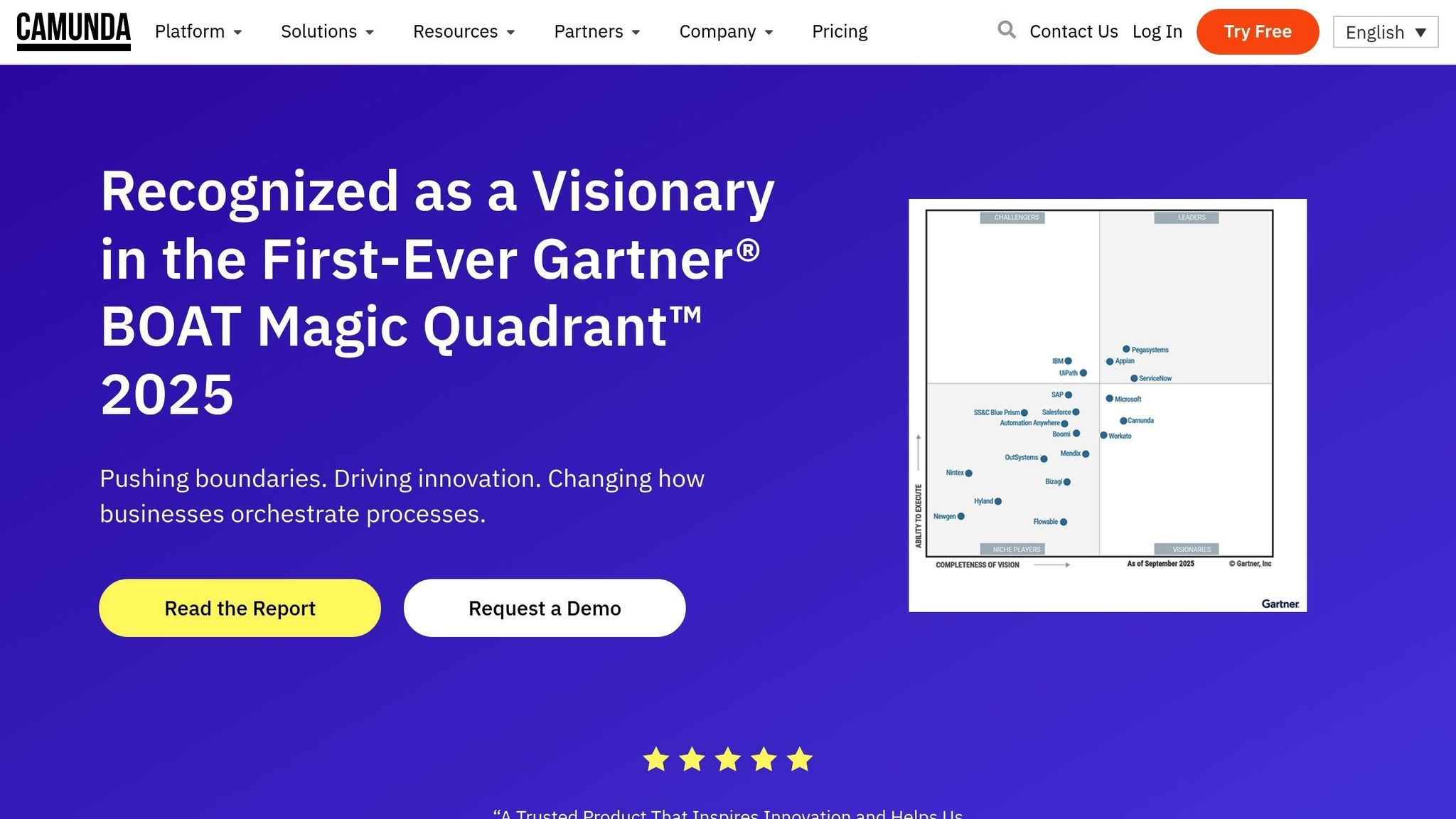

Camunda is a powerful platform for Business Process Management (BPM) that specializes in designing and running intricate workflows using BPMN. Its cloud-based solution is powered by Zeebe, an open-source engine designed for horizontal scalability. Built to thrive in Kubernetes environments, Zeebe offers the scalability and resilience needed to handle large-scale workloads efficiently.

With its open architecture, Camunda supports seamless integration across a variety of IT systems, complementing the adaptability of REST orchestration. Whether deployed on-premises or in the cloud, it fits effortlessly into existing infrastructures, giving organizations the flexibility to meet evolving demands.

Temporal is a microservice orchestration platform that helps developers build scalable, dependable applications. It originated as a fork of Uber's Cadence project and has since grown into a well-established platform. Clients reach the Temporal service primarily over gRPC through its language SDKs, with REST-style access also supported, and its horizontally scalable architecture suits long-running AI workflows that must survive failures.

Argo Workflows is a tool designed specifically for Kubernetes, enabling scalable AI workloads by isolating each step of a workflow into its own container.

Every step runs in a separate container, defined using YAML, while Kubernetes takes care of scheduling and resource allocation. This setup ensures efficiency and organization without requiring manual intervention.

With its REST interface, Argo Workflows simplifies the entire process, including submission, monitoring, retrieving results, and handling errors. This makes it easier for teams to manage workflows, even if they lack in-depth Kubernetes expertise.

The platform automates key tasks like managing dependencies, executing conditional logic, and retrying failed steps. It supports complex patterns such as directed acyclic graphs (DAGs), loops, and parallel branches, making it ideal for tasks like data preprocessing, model training, and validation.
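The container-per-step and DAG ideas above can be sketched by generating a Workflow manifest in Python. The image name, script names, and `ml-pipeline-` prefix are placeholders, and the manifest uses only the basic `dag` and `container` template fields. Treat it as a starting point under those assumptions rather than a production pipeline.

```python
import json


def step_template(name, image, command):
    """A container template: one workflow step, one container."""
    return {"name": name, "container": {"image": image, "command": command}}


def build_workflow(prefix, image):
    """Return an Argo Workflow manifest with a three-task linear DAG."""
    steps = ["preprocess", "train", "validate"]
    dag_tasks = []
    for i, step in enumerate(steps):
        task = {"name": step, "template": step}
        if i > 0:
            # Each task waits for the one before it.
            task["dependencies"] = [steps[i - 1]]
        dag_tasks.append(task)
    templates = [{"name": "pipeline", "dag": {"tasks": dag_tasks}}]
    # Placeholder commands: the scripts are assumed to exist in the image.
    templates += [step_template(s, image, ["python", f"{s}.py"]) for s in steps]
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Workflow",
        "metadata": {"generateName": prefix},
        "spec": {"entrypoint": "pipeline", "templates": templates},
    }


manifest = build_workflow("ml-pipeline-", "python:3.12-slim")
print(json.dumps(manifest, indent=2))
```

Because JSON is valid YAML, the printed manifest can be saved to a file and submitted the same way as a hand-written YAML definition.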

By taking advantage of Kubernetes' autoscaling capabilities, Argo Workflows dynamically adjusts compute resources, including CPU and GPU containers, to match the demands of each workflow.

Security is a priority, with Kubernetes features like role-based access control (RBAC), network policies, and secrets management ensuring the protection of sensitive AI models and data.

This Kubernetes-focused orchestration approach works well alongside other platforms, showcasing different strategies for managing AI workflows. Argo Workflows stands out with its REST-driven management and seamless Kubernetes integration, offering a highly effective solution for modern AI operations.

Prefect takes a unique approach by blending cloud-based management through Prefect Cloud with on-premises execution. This hybrid setup allows organizations to deploy workflows while maintaining control over data processing, striking a balance between flexibility and security.

The platform's Python-first design makes it user-friendly for teams already familiar with Python. By using decorators, Prefect can transform standard Python functions into workflow tasks, reducing the need for additional training or steep learning curves.

Prefect is built with fault tolerance in mind. It includes features like configurable retries, backoff strategies, custom logic, and state-based recovery mechanisms to ensure that long-running workflows continue smoothly, even when issues arise.
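The decorator-plus-retries pattern can be illustrated with the standard library alone. The `task` decorator below is a hypothetical stand-in written for this article, not Prefect's implementation; Prefect's own `@task` accepts options such as `retries` and `retry_delay_seconds`. The sketch retries a failing function with exponential backoff and re-raises once attempts are exhausted.

```python
import functools
import time


def task(retries=3, base_delay=1.0, sleep=time.sleep):
    """Wrap a plain function so failures are retried (illustrative only)."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            for attempt in range(retries + 1):
                try:
                    return fn(*args, **kwargs)
                except Exception:
                    if attempt == retries:
                        raise  # retries exhausted: surface the last error
                    sleep(base_delay * 2 ** attempt)  # exponential backoff
        return wrapper
    return decorator


# Usage: record delays instead of sleeping so the example runs instantly.
delays = []
attempts = {"count": 0}


@task(retries=3, base_delay=0.5, sleep=delays.append)
def flaky_inference():
    """Fails twice, then succeeds (simulated model endpoint)."""
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("model endpoint unavailable")
    return "ok"


result = flaky_inference()
```

Injecting the `sleep` function is a small design choice that keeps the backoff schedule observable in tests without slowing them down.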

When it comes to security, Prefect Cloud provides robust permissions and authorization capabilities. It also supports secure management of runtime secrets and parameters, keeping sensitive data protected throughout the workflow execution process.

Pricing is tiered to accommodate different needs, with paid plans running from roughly $100 to $400 per month and up.

Prefect's scalability is another standout feature. With tools like conditional logic, parallel execution, and dependency management, it is well-equipped to handle the orchestration of complex AI workflows, making it a powerful choice for organizations looking to streamline their operations.

Choosing the right tool for AI workflow management often depends on understanding the unique strengths and limitations of each option. By comparing these tools, organizations can align their decisions with their specific needs, budget, and technical capabilities. Below, we break down the key advantages and trade-offs of prominent platforms for AI orchestration.

Prompts.ai offers a streamlined solution for managing AI workflows, supporting over 35 top language models through a single interface. Its pay-as-you-go TOKN credit system eliminates subscription fees, offering significant cost savings. The platform includes a FinOps layer for real-time cost tracking and enterprise-grade security features to ensure compliance and protect data. However, for organizations requiring broader workflow logic beyond AI orchestration, its specialized focus may feel limiting compared to more general-purpose tools.

Apache Airflow is a strong contender for handling complex data pipelines, thanks to its extensive library of operators and integrations. Its scalability via Celery and Kubernetes, combined with its open-source nature, helps reduce licensing costs. However, Airflow demands a high level of technical expertise for setup and maintenance, and its resource consumption can be challenging for large-scale deployments.

Camunda stands out with its Business Process Model and Notation (BPMN) support, making it an excellent choice for organizations with established business process management practices. Its visual modeling tools allow non-technical team members to contribute to workflow designs, and it offers both cloud and on-premises deployment options. On the downside, Camunda's enterprise features come with higher licensing costs, and its complexity may overwhelm teams looking for simpler solutions.

Temporal shines in scenarios requiring fault tolerance, thanks to its event-sourcing architecture, which ensures workflows can recover from any failure point. It supports multiple programming languages and provides strong consistency guarantees, making it ideal for mission-critical AI processes. However, its distributed architecture adds complexity, and the learning curve can be steep for new users.
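The recovery idea behind event sourcing can be shown with a deliberately simplified sketch: completed steps are appended to a history, and a restarted run replays recorded results instead of re-executing them. This is an in-memory illustration with hypothetical names (`EventLog`, `run_workflow`), not Temporal's SDK or its actual replay mechanism.

```python
class EventLog:
    """Append-only history of completed steps (in memory for this sketch)."""

    def __init__(self):
        self.events = []

    def result_of(self, step):
        """Return (True, result) if the step was recorded, else (False, None)."""
        for name, result in self.events:
            if name == step:
                return True, result
        return False, None

    def append(self, step, result):
        self.events.append((step, result))


def run_workflow(log, steps):
    """Run steps in order, replaying recorded results instead of re-executing."""
    executed = []
    value = None
    for name, fn in steps:
        done, recorded = log.result_of(name)
        if done:
            value = recorded  # replay from history
        else:
            value = fn(value)  # first execution of this step
            log.append(name, value)
            executed.append(name)
    return value, executed


crash = {"armed": True}


def load(_):
    return [1, 2, 3]


def score(rows):
    if crash["armed"]:
        crash["armed"] = False
        raise RuntimeError("worker crashed")  # simulated failure
    return sum(rows)


steps = [("load", load), ("score", score)]
log = EventLog()
try:
    run_workflow(log, steps)
except RuntimeError:
    pass  # the worker dies, but the log survives

result, executed = run_workflow(log, steps)
```

On the second run only `score` executes; `load` is restored from the log, which is why a durable history lets a workflow resume from its failure point.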

| Tool | REST API Support | AI Workflow Focus | Scaling Options | Cost Structure |
|---|---|---|---|---|
| Prompts.ai | Native REST APIs | Purpose-built for AI | Auto-scaling cloud | Pay-per-use TOKN credits |
| Apache Airflow | REST API available | General-purpose with AI plugins | Kubernetes, Celery executors | Open-source (infrastructure costs) |
| Camunda | Comprehensive REST APIs | Business process focus | Clustered deployment | Subscription-based licensing |
| Temporal | gRPC and REST support | Event-driven workflows | Horizontal scaling | Open-source core, paid cloud |
| Argo Workflows | Kubernetes-native APIs | Container-based AI pipelines | Kubernetes scaling | Open-source (cluster costs) |
| Prefect | Full REST API coverage | Python-first AI workflows | Hybrid cloud execution | Tiered pricing ($100–$400/month) |

Argo Workflows is tailor-made for Kubernetes environments, making it a natural fit for teams already invested in container orchestration. Its container-native framework is well-suited for AI workloads requiring specific runtime environments. The tool excels at parallel execution and resource management. However, it is limited to Kubernetes deployments and requires container expertise from the development team.

For teams that prioritize Python, Prefect offers a user-friendly yet powerful orchestration platform. Its decorator-based approach allows developers to convert standard Python functions into workflow tasks with minimal effort. The platform’s hybrid architecture supports cloud management while enabling on-premises execution, addressing security concerns without sacrificing flexibility. The main drawback is its pricing, which can become costly for larger teams compared to open-source solutions.

Ultimately, the choice of tool depends on organizational priorities. Teams focused on AI model orchestration and cost efficiency may find Prompts.ai ideal. Organizations leveraging Kubernetes infrastructure might lean toward Argo Workflows, while those requiring deep business process integration could prefer Camunda. Python-centric teams often favor Prefect, and companies prioritizing fault tolerance typically choose Temporal. These comparisons underscore the importance of selecting the right orchestration tool to scale AI operations effectively.

The world of REST orchestration tools for AI workflows offers a variety of options, each designed to cater to specific organizational needs and technical environments. The challenge lies in choosing a platform that aligns seamlessly with your requirements, ensuring that its strengths complement your goals rather than forcing you to adapt to its limitations. This guide provides a foundation for pairing tool capabilities with operational priorities.

For organizations prioritizing cost efficiency and AI-driven automation, Prompts.ai stands out with its pay-as-you-go model and enterprise-level governance. Teams already leveraging Kubernetes infrastructure might find Argo Workflows to be a natural fit, offering container-native orchestration that maximizes existing investments. On the other hand, businesses with established process management practices can benefit from Camunda's BPMN support. Each of these tools brings unique advantages, emphasizing the importance of aligning features with strategic goals.

When selecting a solution, it’s critical to weigh both short-term and long-term costs. Open-source tools like Apache Airflow and Temporal may have minimal upfront expenses, but they often require substantial technical expertise and infrastructure investment. Managed platforms, while potentially more expensive on a monthly basis, can reduce operational burdens and deliver faster results.

Compliance and security considerations also play a pivotal role, especially for enterprises handling sensitive data. As discussed earlier, platforms offering robust audit trails, role-based access controls, and strong governance features may justify higher costs by mitigating regulatory risks and compliance-related expenses.

A successful implementation starts with a thorough evaluation of your current technical setup and future scaling needs. Teams should assess their infrastructure, in-house expertise, and integration requirements before committing to a platform.

Ultimately, the goal is to empower innovation while keeping technical complexities to a minimum. Whether you choose a specialized AI platform for its simplicity, leverage Kubernetes-based solutions, or build on reliable open-source tools, success hinges on aligning the platform’s capabilities with your organizational needs and technical realities. By doing so, you can focus on creating impactful AI workflows rather than being bogged down by infrastructure management.

When choosing an AI workflow orchestration tool, it's important to weigh several key factors to ensure it aligns with your organization's needs. Start by evaluating the tool's user-friendliness, adaptability, and how well it integrates with your current systems. Features like clear workflow tracking, seamless human-AI collaboration, and detailed data analytics can play a significant role in improving efficiency and supporting better decision-making.

It's also crucial to consider the tool's ability to scale alongside your organization's growth and its capacity to meet long-term automation objectives. A straightforward interface combined with robust capabilities for managing complex workflows can help streamline operations and minimize potential challenges.

Prompts.ai places a strong emphasis on security and compliance for enterprises that rely on AI in their operations. By following established industry protocols and regulatory standards, the platform aims to maintain data privacy and integrity throughout the AI workflow.

The platform uses encryption, secure API communications, and role-based access controls to protect sensitive information. To further reinforce trust, Prompts.ai undergoes routine audits and aligns with key frameworks such as GDPR and HIPAA, addressing the requirements of organizations managing confidential data.

Using Apache Airflow to manage AI workflows can feel overwhelming, particularly for those unfamiliar with workflow orchestration. The platform demands a solid grasp of technical skills, such as Python programming, managing task dependencies, and fine-tuning the system to handle intricate workflows efficiently.

On top of that, scaling Airflow to meet growing demands and resolving performance issues can be tricky without the right expertise. To get the most out of this powerful tool for AI-driven processes, organizations should either ensure their teams are equipped with the required knowledge or invest in training programs to bridge any gaps.