In data science, managing complex workflows is key to handling tasks like data ingestion, preprocessing, training, and deployment. Orchestration tools simplify these processes by automating dependency management, scheduling, and scaling. Here’s a quick overview of four top tools: Prompts.ai, Apache Airflow, Prefect, and Luigi.

Each tool has unique strengths, from AI optimization to batch processing, making your choice dependent on team expertise and project needs.

| Tool | Strengths | Limitations | Best Use Case |
|---|---|---|---|
| Prompts.ai | Unified AI access, cost control, governance | Enterprise focus, AI-specific | AI workflows, multi-model experiments |
| Airflow | Flexible, Python-native, strong community | Steep learning curve, complex setup | Batch ETL/ELT, large-scale workflows |
| Prefect | Error handling, dynamic workflows, intuitive | Smaller community, limited UI | Agile ML pipelines, developer teams |
| Luigi | Lightweight, simple dependencies | Limited scalability, basic documentation | Stable batch jobs, small setups |

Choose the tool that aligns with your workflow complexity, team expertise, and scalability needs.

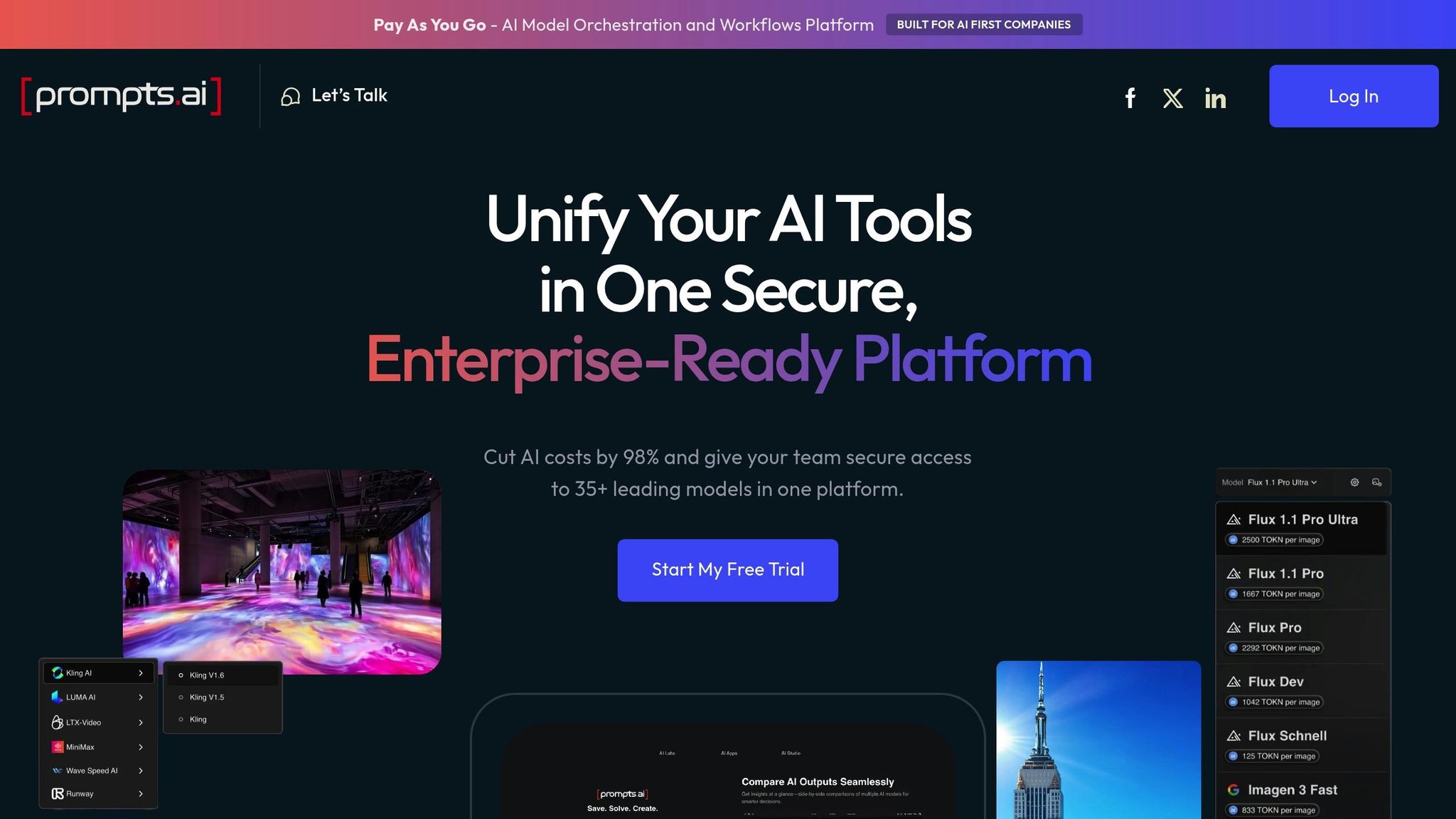

Prompts.ai is a cutting-edge platform designed to streamline enterprise AI workflows by bringing together over 35 large language models (including GPT-5, Claude, LLaMA, and Gemini) into one secure and centralized system. For data scientists working in enterprise settings, this unified approach simplifies access to multiple AI tools while ensuring strong governance and cost efficiency. By consolidating model access, organizations can significantly reduce their AI software expenses.

This platform fits seamlessly into existing workflows. Its model-agnostic framework ensures that businesses can continue using their current AI investments without the hassle of retraining or reconfiguring prompt libraries as new models are introduced.

Prompts.ai takes over many repetitive tasks in the AI workflow. Data scientists can develop standardized prompt templates to maintain consistency and integrate best practices across projects. The platform also automates model selection and comparison, offering built-in evaluation tools. On top of that, automated governance controls ensure compliance with enterprise standards for every AI interaction.

Built with enterprises in mind, Prompts.ai is designed to grow alongside your organization. Whether it’s adding more users, integrating new models, or extending usage to additional departments, scaling is quick and efficient. The platform’s pay-as-you-go TOKN credit system ensures costs align directly with actual usage, allowing teams with varying workloads to operate flexibly while maintaining strict data isolation and access controls.

Prompts.ai includes a FinOps layer that provides real-time insights into spending at the token level. This feature allows data scientists to monitor costs by project, model, or team member, linking AI expenses directly to business outcomes. With tools for tracking ROI and optimizing costs, teams can make smarter decisions about balancing performance and budget.
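To make the idea of token-level cost tracking concrete, here is a minimal sketch in plain Python. It is not the Prompts.ai API; the `UsageRecord` type, the model names, and the prices in `PRICE_PER_1K_TOKENS` are all hypothetical and only illustrate how spend might be rolled up by project, model, or team member.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical per-1,000-token prices in USD; real prices vary by provider and model.
PRICE_PER_1K_TOKENS = {"model-a": 0.50, "model-b": 2.00}


@dataclass(frozen=True)
class UsageRecord:
    project: str
    model: str
    user: str
    tokens: int


def cost_of(record: UsageRecord) -> float:
    """Cost of a single request in USD."""
    return record.tokens / 1000 * PRICE_PER_1K_TOKENS[record.model]


def spend_by(records, key: str) -> dict:
    """Total spend grouped by one attribute: 'project', 'model', or 'user'."""
    totals = defaultdict(float)
    for record in records:
        totals[getattr(record, key)] += cost_of(record)
    return dict(totals)


# Illustrative usage log (made-up values).
records = [
    UsageRecord("churn", "model-a", "ana", 4000),
    UsageRecord("churn", "model-b", "ben", 1000),
    UsageRecord("search", "model-a", "ana", 2000),
]
```

Calling `spend_by(records, "project")` groups the same log by project, while passing `"model"` or `"user"` gives the other two views without changing the data.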

These capabilities position Prompts.ai as a robust solution for managing and optimizing enterprise AI workflows.

Apache Airflow has become a go-to open-source platform for orchestrating workflows and managing data pipelines. It uses a Directed Acyclic Graph (DAG) structure, allowing data scientists to define workflows as Python code. This approach ensures transparency, version control, and a solid framework for building scalable, automated processes.
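The DAG idea itself can be shown without Airflow. The sketch below uses Python's standard `graphlib` module rather than Airflow's own classes, and the task names are illustrative; it shows how a dependency graph determines a valid run order and why cycles are rejected.

```python
from graphlib import TopologicalSorter

# Each key maps a task to the set of tasks it depends on (its upstream tasks).
pipeline = {
    "ingest": set(),
    "preprocess": {"ingest"},
    "train": {"preprocess"},
    "deploy": {"train"},
}


def execution_order(dag: dict) -> list:
    """Return one valid run order; raises graphlib.CycleError if the graph has a cycle."""
    return list(TopologicalSorter(dag).static_order())
```

Because the graph is acyclic, `execution_order(pipeline)` can always place every task after its dependencies. A graph where two tasks depend on each other has no such order, which is why orchestrators built on DAGs refuse it.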

Airflow offers a variety of pre-built connectors that make it easy to integrate with popular data tools and cloud services. Whether working with Snowflake, BigQuery, Amazon S3, Databricks, or Kubernetes, Airflow's operators and hooks simplify the connection process. The platform also uses its XCom feature to pass data between tasks, while its REST API enables seamless integration with external systems for monitoring and alerts.

For even more flexibility, Airflow's provider packages make adding new integrations straightforward. Providers covering services such as AWS EMR, Google Cloud Dataflow, and Azure Data Factory extend Airflow’s reach, allowing teams to orchestrate workflows across a wide range of platforms.

Airflow excels at automating workflows with built-in tools for scheduling, managing dependencies, and handling retries. By default, a downstream task runs only after all of its upstream tasks have completed successfully, so tasks execute in the correct order. Configurable retries let transient failures recover without manual intervention, while sensors and custom operators enable event-based triggers.
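The interaction between retries and upstream dependencies can be sketched in a few lines of plain Python. This is a simplified model, not Airflow's scheduler: `run_pipeline`, the task functions, and the retry count are assumptions made for illustration, and the state name `upstream_failed` simply mirrors the label Airflow uses for tasks skipped because a dependency failed.

```python
from graphlib import TopologicalSorter


def run_pipeline(dag, actions, max_retries=2):
    """Run tasks in dependency order; returns a dict of task -> final state."""
    states = {}
    for task in TopologicalSorter(dag).static_order():
        # Run a task only if every upstream task succeeded.
        if any(states[up] != "success" for up in dag.get(task, ())):
            states[task] = "upstream_failed"
            continue
        for _attempt in range(1 + max_retries):
            try:
                actions[task]()
                states[task] = "success"
                break
            except Exception:
                states[task] = "failed"  # stays failed if every attempt raises
    return states


calls = {"extract": 0}


def flaky_extract():
    """Fails on the first call, then succeeds (simulates a transient error)."""
    calls["extract"] += 1
    if calls["extract"] < 2:
        raise RuntimeError("transient error")


def broken_transform():
    """Always fails (simulates a persistent error)."""
    raise ValueError("bad schema")


dag = {"extract": set(), "transform": {"extract"}, "load": {"transform"}}
actions = {"extract": flaky_extract, "transform": broken_transform, "load": lambda: None}
result = run_pipeline(dag, actions)
```

Here `extract` recovers on its second attempt, `transform` exhausts its retries and ends as `failed`, and `load` never runs because its upstream task did not succeed.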

One standout feature is dynamic DAG generation, which allows teams to programmatically create pipelines from templates. This is particularly useful for managing workflows at scale, as it reduces repetitive setup and ensures consistency across similar pipelines.
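As a rough illustration of template-driven generation, the sketch below builds one pipeline definition per data source from a single function. It uses plain dictionaries rather than Airflow DAG objects, and `build_pipeline` and the source names are hypothetical; in Airflow the same loop would typically create DAG objects instead.

```python
def build_pipeline(source: str) -> dict:
    """Instantiate one extract -> clean -> load pipeline for a data source."""
    extract, clean, load = (f"{step}_{source}" for step in ("extract", "clean", "load"))
    # Each key maps a task to the set of tasks it depends on.
    return {extract: set(), clean: {extract}, load: {clean}}


# Hypothetical source names; in practice these might come from a config file.
SOURCES = ["orders", "customers", "payments"]

pipelines = {f"{source}_pipeline": build_pipeline(source) for source in SOURCES}
```

Adding a fourth source then means adding one entry to `SOURCES`, and every generated pipeline keeps the same structure.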

Airflow is designed to scale with your needs. Using CeleryExecutor or KubernetesExecutor, tasks can be distributed across multiple workers or pods for parallel processing, so capacity can grow horizontally as workloads grow. Multiple teams can also share the same infrastructure, although keeping their tasks and data isolated from one another generally requires additional configuration.
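The core idea behind distributed executors, running every task whose dependencies are satisfied at the same time, can be sketched on a single machine with a thread pool. This is only an analogy for what CeleryExecutor or KubernetesExecutor do across machines; `run_parallel` and the task names are assumptions for illustration.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter


def run_parallel(dag, actions, max_workers=4):
    """Run independent tasks concurrently; returns task names in completion order."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()
    finished = []
    running = {}  # future -> task name
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while sorter.is_active():
            # Submit every task whose upstream tasks have all completed.
            for task in sorter.get_ready():
                running[pool.submit(actions[task])] = task
            done, _ = wait(running, return_when=FIRST_COMPLETED)
            for future in done:
                task = running.pop(future)
                future.result()  # re-raise any exception from the task
                sorter.done(task)
                finished.append(task)
    return finished


# Two feature jobs depend only on ingest, so they are eligible to run side by side.
dag = {
    "ingest": set(),
    "features_a": {"ingest"},
    "features_b": {"ingest"},
    "train": {"features_a", "features_b"},
}
actions = {name: (lambda: None) for name in dag}
order = run_parallel(dag, actions)
```

The two feature tasks may finish in either order, but `ingest` always completes first and `train` always completes last.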

Although Airflow itself is free to use, the infrastructure and maintenance required to run it can add to operational costs. To help manage these expenses, Airflow provides detailed metrics on task execution and resource usage. This visibility enables teams to monitor overhead and optimize resource allocation effectively.

Prefect emphasizes a smooth developer experience and straightforward operations for workflow orchestration. Unlike many traditional tools, it embraces failures as a natural part of its process rather than treating them as exceptions. This design philosophy builds resilience into its core, making it especially attractive to data scientists seeking dependable automation without the hassle of managing complex infrastructure.

Prefect's integration system revolves around blocks and collections, offering ready-made connections to key data platforms. It provides native integrations with major cloud services such as AWS S3, Google Cloud Storage, and Azure Blob Storage. These integrations come equipped with built-in credential management and connection pooling, streamlining the often tedious setup process for data science projects.

The platform's task library extends support to machine learning workflows with specialized blocks that connect directly to tools like MLflow, Weights & Biases, and Hugging Face. For computationally heavy tasks, Prefect integrates with Docker and Kubernetes, enabling seamless execution in containerized environments. Additionally, tools like Slack and Microsoft Teams blocks allow automated notifications for task completions or issues, ensuring teams stay informed without extra effort. These integrations collectively enhance Prefect's automation ecosystem.

Prefect's automation tools excel in intelligent scheduling and conditional logic. Workflows can be triggered by schedules, events, or APIs, while its subflows feature allows users to break down complex pipelines into reusable components across projects.

Conditional flows enable dynamic execution based on specific data conditions or prior outcomes. For instance, a data validation task can initiate different downstream processes depending on the quality of the data. Prefect also supports parallel execution, managing resources automatically so multiple tasks can run simultaneously without extra configuration.
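The data validation example above can be sketched as ordinary Python branching. The function names, the step names, and both thresholds are hypothetical; the point is only that the result of a validation step selects which downstream step runs.

```python
def validate(rows):
    """Return the fraction of rows that have a non-null 'value' field."""
    if not rows:
        return 0.0
    return sum(row.get("value") is not None for row in rows) / len(rows)


def choose_branch(rows, threshold=0.9):
    """Pick the downstream step from the validation result (cutoffs are assumed)."""
    quality = validate(rows)
    if quality >= threshold:
        return "train_model"
    if quality >= 0.5:
        return "clean_and_retry"
    return "alert_data_owner"
```

A batch with no missing values goes straight to training, a half-complete batch is routed to cleaning, and an empty or mostly empty batch triggers an alert instead.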

The platform’s retry mechanisms include features like exponential backoff and custom retry conditions, while its caching system prevents redundant computations by storing task results. Prefect also handles state management automatically, tracking the status of tasks and flows with detailed logs and metadata for easy monitoring.
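Exponential backoff and result caching are general techniques, so they can be illustrated without Prefect. The two decorators below are a minimal sketch and not Prefect's API: `retry_with_backoff`, `cached`, and their parameters are hypothetical names, and the `sleep` argument is injectable so the example records the wait times instead of actually waiting.

```python
import functools
import time


def retry_with_backoff(max_retries=3, base_delay=1.0, factor=2.0, sleep=time.sleep):
    """Retry a function, waiting base_delay * factor**attempt between attempts."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            for attempt in range(max_retries + 1):
                try:
                    return func(*args, **kwargs)
                except Exception:
                    if attempt == max_retries:
                        raise  # out of retries: surface the last error
                    sleep(base_delay * factor ** attempt)
        return wrapper
    return decorator


def cached(func):
    """Store results keyed by positional arguments so repeated calls skip the work."""
    results = {}

    @functools.wraps(func)
    def wrapper(*args):
        if args not in results:
            results[args] = func(*args)
        return results[args]
    return wrapper


delays = []  # records the waits instead of sleeping
attempts = {"count": 0}


@retry_with_backoff(max_retries=3, base_delay=1.0, sleep=delays.append)
def fetch():
    """Fails twice, then succeeds (simulates a temporary outage)."""
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("temporary outage")
    return "ok"
```

With these settings the wait doubles after each failed attempt, which gives a struggling service progressively more time to recover. The cache in this sketch is in-memory and keyed only on positional arguments; a production cache would also need an expiry policy.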

Prefect is designed to scale effortlessly to meet fluctuating workload demands. Its hybrid execution model combines managed orchestration through Prefect Cloud with the flexibility to run workloads on a team’s own infrastructure. This approach ensures teams can balance convenience with control.

For larger deployments, Prefect supports horizontal scaling using its work pools and workers architecture, which dynamically distributes tasks across multiple machines or cloud instances. Kubernetes integration further enhances its scalability, enabling automatic resource allocation for compute-intensive tasks. Because workers can be deployed on-premises, in the cloud, or in hybrid environments, teams can run workloads wherever they need to while maintaining centralized oversight and orchestration.

Prefect provides clear operational insights through its flow run dashboard and execution metrics, tracking details like compute time and memory usage for each workflow. This transparency helps teams fine-tune their pipelines for better efficiency.

For smaller teams, Prefect Cloud includes a free tier with up to 20,000 task runs per month, making it an accessible option for many data science projects. Additionally, resource tagging enables teams to monitor costs by project or department, offering a granular view that aids in demonstrating ROI and making informed decisions about resource allocation.

Luigi, an open-source Python tool developed by Spotify, takes a focused approach to batch data processing. It allows users to build intricate batch pipelines by linking tasks together, whether that's running Hadoop jobs, transferring data, or executing machine learning algorithms. Its built-in compatibility with Hadoop and various databases also simplifies the setup for large-scale batch operations. This emphasis on sequential batch workflows makes Luigi a reliable choice for pipelines built around step-by-step data processing, and its strengths and potential drawbacks deserve a closer look.
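Luigi's task model is built around three methods: `requires()` names upstream tasks, `output()` names what a task produces, and `run()` does the work, with a task counting as complete once its output exists. The sketch below imitates that pattern in plain Python so it runs without Luigi installed. The `Task` base class, the in-memory `store`, and the `build` function are simplified stand-ins, not Luigi's implementation.

```python
class Task:
    """Minimal stand-in for a dependency-driven batch task (not Luigi's class)."""

    store = {}  # in-memory stand-in for the files or tables that tasks produce

    def requires(self):
        return []

    def output(self):
        return type(self).__name__

    def complete(self):
        return self.output() in Task.store

    def run(self):
        raise NotImplementedError


def build(task, log):
    """Run upstream tasks first, skipping any task whose output already exists."""
    if task.complete():
        return
    for upstream in task.requires():
        build(upstream, log)
    task.run()
    log.append(task.output())


class Extract(Task):
    def run(self):
        Task.store[self.output()] = [3, 1, 2]


class Sort(Task):
    def requires(self):
        return [Extract()]

    def run(self):
        Task.store[self.output()] = sorted(Task.store["Extract"])
```

Building `Sort()` runs `Extract` first and then `Sort`. Building it a second time does nothing, because both outputs already exist, which is what makes reruns of a partially finished batch job cheap.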

Selecting the right tool depends on your team's expertise, the project's complexity, and specific workflow needs. Each tool comes with its own strengths and challenges, so understanding these can help guide your decision.

Apache Airflow stands out for its Python-native design and robust community support, making it a go-to for complex, static batch ETL/ELT processes and comprehensive machine learning pipelines. However, this flexibility comes with challenges, including a steep learning curve, significant infrastructure requirements, and a lack of native workflow versioning.

Prefect simplifies dynamic pipelines with features like error handling, automatic retries, and scalability. Its modern architecture makes it a strong choice for teams prioritizing ease of use. That said, its smaller community and limited focus on visual interfaces could be drawbacks for some users.

Luigi excels in handling simple, stable batch processes with its lightweight, dependency-driven approach. It offers transparent version control and supports custom logic, making it a reliable choice for straightforward data workflows. However, scaling to big data scenarios can be challenging, and its minimal user interface and limited documentation may not satisfy teams accustomed to more advanced tools. Despite these limitations, Luigi remains a practical solution for streamlined batch processing.

Prompts.ai takes an AI-first approach, integrating over 35 top-tier language models into one platform. With features like enterprise-grade governance, real-time cost controls, and the ability to cut AI software expenses by up to 98%, it’s an excellent option for organizations managing diverse AI workflows. Its pay-as-you-go model adds flexibility by removing recurring fees while offering comprehensive compliance and audit capabilities.

Here’s a quick comparison of the tools, highlighting their strengths, weaknesses, and ideal use cases:

| Tool | Pros | Cons | Best Use Case |
|---|---|---|---|
| Prompts.ai | Unified AI model access, up to 98% cost savings, enterprise governance, real-time FinOps | - | AI-driven workflows, multi-model experiments, cost-sensitive enterprises |
| Apache Airflow | Mature ecosystem, highly flexible, Python-native, strong community support | Steep learning curve, complex deployment, lacks workflow versioning | Complex batch ETL/ELT, teams with infrastructure expertise |
| Prefect | Modern architecture, error handling, dynamic workflows, developer-friendly | Smaller community, limited visual UI | Dynamic machine learning pipelines, developer-focused teams |
| Luigi | Lightweight, transparent versioning, simple dependency management | Limited scalability for big data, basic UI, sparse documentation | Simple batch jobs, stable ETL processes, resource-limited setups |

For large-scale batch processing, Apache Airflow is often the preferred choice. Prefect shines in dynamic machine learning workflows, offering flexibility and developer-friendly features. Teams focused on AI-driven projects will find Prompts.ai particularly valuable for its specialized capabilities, while Luigi remains a dependable option for simpler, resource-efficient workflows.

After reviewing the comparisons, it's clear that the right orchestration tool depends on your team's specific needs and expertise. Here's a quick recap: Apache Airflow is a strong choice for managing complex, large-scale batch processes if you have the infrastructure expertise to support it. Prefect shines in handling dynamic and agile machine learning pipelines. Luigi works well for straightforward batch workflows, and Prompts.ai stands out for AI-focused processes with strong governance and cost management.

For smaller or mid-sized teams, Luigi offers a simple entry point for batch workflows, while Prompts.ai is a great match for AI-driven projects. Larger enterprises with dedicated infrastructure teams may find Apache Airflow to be the best fit, while agile teams working on machine learning might appreciate the modern approach of Prefect.

Ultimately, the best tool is the one your team can use effectively and efficiently. Start with what meets your current needs, and adapt as your workflows and requirements evolve.

When choosing an orchestration tool, data science teams should focus on key aspects such as ease of use, scalability, and how well it integrates with existing workflows. For handling complex and static workflows, tools like Apache Airflow and Luigi are excellent options. On the other hand, if you need more adaptable, dynamic pipelines, Prefect provides greater flexibility.

It’s also important to consider the infrastructure demands of each tool, as some may require more substantial resources to scale efficiently. Equally critical is evaluating how the team’s expertise matches the tool’s programming model to ensure a smooth transition and maintain productivity. The ideal tool will ultimately depend on your specific workflow requirements and the degree of automation or customization you need.

Prompts.ai makes managing costs and governance for AI workflows straightforward by providing a dedicated, centralized platform for AI teams. It emphasizes cost transparency, offering detailed tracking of expenses and resource usage. This allows teams to plan budgets confidently and steer clear of surprise costs.

Traditional orchestration tools often demand significant technical expertise and can bring hidden or unpredictable expenses. Prompts.ai, however, is purpose-built for smooth AI orchestration. By prioritizing efficient resource use and governance, it helps teams streamline workflows while keeping a firm grip on their budgets.

Prefect offers a smart and flexible way to handle workflow failures, making it a standout tool for data scientists. With features such as automatic retries, tailored notifications, and the capability to adjust workflows dynamically when problems occur, it simplifies troubleshooting and speeds up recovery. This means less downtime for intricate data pipelines and more time spent on meaningful analysis.

Unlike tools that stick to rigid frameworks, Prefect’s design allows workflows to adapt in real-time. This is especially useful for AI-driven or time-sensitive projects where flexibility is key. By streamlining operations and improving reliability, Prefect enables data scientists to concentrate on uncovering insights rather than dealing with operational headaches.