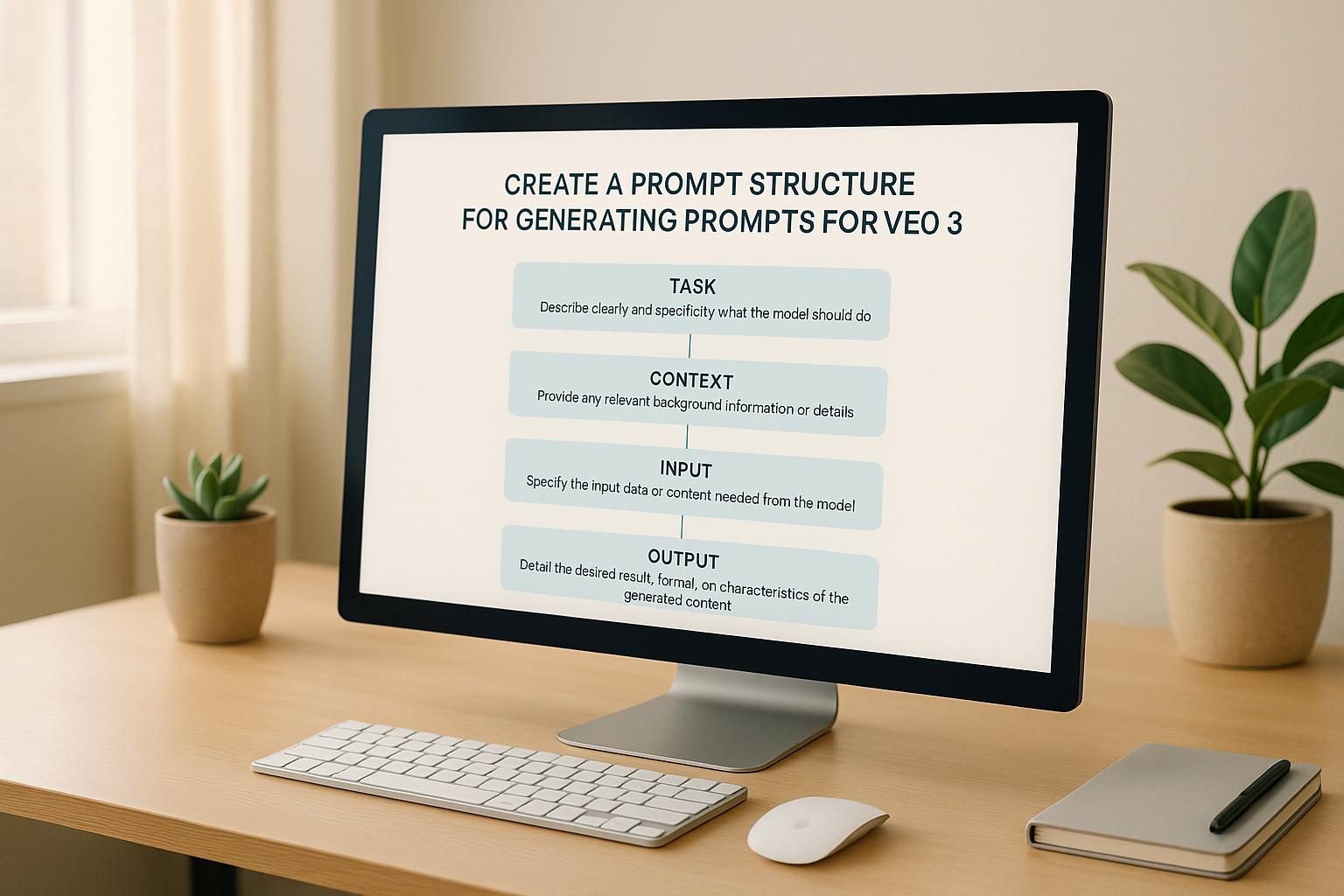

Veo 3 is an advanced AI workflow engine that integrates multiple models, data sources, and processes into seamless pipelines. Crafting structured prompts for Veo 3 helps keep workflows predictable and efficient and aligns outputs with business needs. This guide covers how to build effective prompts, including reusable templates with placeholders (e.g., {TIME_PERIOD}, {DATA_SOURCE}).

By following these steps, you can create reliable, scalable prompts that align with enterprise workflows. Tools like Prompts.ai simplify the process, offering features like centralized templates, compliance tracking, and access to 35+ AI models for side-by-side comparisons.

Every Veo 3 prompt is built on seven essential components, each designed to provide clear and actionable instructions for AI workflows. These elements serve as the backbone for crafting prompts that deliver consistent results across various models and applications.

The subject establishes the core focus of the prompt. Whether you're asking the AI to generate content, analyze data, or process information, specificity is key. For example, instead of saying, "analyze customer feedback", try, "analyze customer satisfaction scores from Q3 2024 support tickets to identify the top three complaint categories."

Context and environment set the stage by providing background details the AI needs to understand the task. This could include data sources, industry standards, business constraints, or specific conditions. For instance, when dealing with financial data, you might specify, "using GAAP accounting standards" or "considering current Federal Reserve interest rates."

The action component outlines what the AI is expected to do with the subject and context. Use precise verbs such as "summarize", "categorize", "calculate", or "recommend" to define clear, measurable tasks. Avoid vague terms like "help" or "work with."

Style and modality dictate how the output should appear. This includes tone (e.g., professional, conversational, or technical), format (e.g., bullet points, paragraphs, or structured data), and length. For enterprise workflows, aligning outputs with company communication standards is often necessary.

Technical parameters specify any processing requirements, such as data formats, response length limits, or integration needs with other systems. These constraints help ensure consistent performance across models and workflow stages.

The last two components tie the prompt to the rest of the pipeline. Workflow movement instructions explain how the task fits into the larger process, while validation and output cues guide the AI in checking its work and formatting the response for downstream use.

By combining these elements, you can create prompts that are not only effective but also adaptable to various scenarios.
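As a rough illustration of how the seven components can be combined, the sketch below stores each one as a field and renders them as labelled lines. The `PromptSpec` class and the example values are hypothetical and are not part of Veo 3 or Prompts.ai; they only show one way to keep every component explicit.

```python
from dataclasses import dataclass, fields


@dataclass
class PromptSpec:
    """Hypothetical container: one field per component described above."""
    subject: str
    context: str
    action: str
    style: str
    technical_parameters: str
    workflow_movement: str
    validation_and_output: str

    def render(self) -> str:
        # One labelled line per component, in declaration order.
        lines = []
        for f in fields(self):
            label = f.name.replace("_", " ").capitalize()
            lines.append(f"{label}: {getattr(self, f.name)}")
        return "\n".join(lines)


# Illustrative values only.
spec = PromptSpec(
    subject="Customer satisfaction scores from Q3 2024 support tickets",
    context="Scores come from the support ticketing export",
    action="Identify the top three complaint categories",
    style="Professional tone, bullet points",
    technical_parameters="Respond in under 200 words",
    workflow_movement="Output feeds the weekly support review",
    validation_and_output="Confirm exactly three categories are listed",
)
print(spec.render())
```

Because every field is required, a prompt with a missing component fails at construction time rather than producing a vague request.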

Modular templates transform prompts into adaptable, reusable tools, streamlining the process and maintaining consistency. They save time by eliminating the need to start from scratch for every new task while ensuring that prompts integrate seamlessly into complex workflows.

A well-crafted template uses placeholders for variable elements while keeping core instructions intact. For example, a customer analysis template might include placeholders like {TIME_PERIOD}, {PRODUCT_LINE}, and {ANALYSIS_TYPE}, while maintaining a consistent analytical structure and output format.

The secret to effective templates lies in separating stable elements from variable ones. Stable elements might include analysis methods, quality standards, or preferred output formats, while variable elements could involve specific data sources, time frames, or department-specific requirements.

To make templates easy to use, structure them with clear sections. Start with a header that outlines the template's purpose and version. Follow this with customizable parameters, core logic, and output specifications. This organization allows team members to adapt templates without disrupting the underlying workflow.
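The section layout described above (header, customizable parameters, core logic, output specification) could be sketched as follows. `PromptTemplate` and its field names are assumptions made for illustration, not an API of any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptTemplate:
    purpose: str      # header: what the template is for
    version: str      # header: revision identifier
    core_logic: str   # stable instructions with {PLACEHOLDER} slots
    output_spec: str  # stable description of the expected output

    def render(self, **parameters: str) -> str:
        # Only the customizable parameters change between uses.
        body = self.core_logic.format(**parameters)
        return "\n".join([
            f"Template: {self.purpose} (version {self.version})",
            f"Task: {body}",
            f"Output: {self.output_spec}",
        ])


customer_analysis = PromptTemplate(
    purpose="Customer analysis",
    version="1.0",
    core_logic="Run a {ANALYSIS_TYPE} analysis of {PRODUCT_LINE} customers for {TIME_PERIOD}.",
    output_spec="Three bullet points followed by one recommendation.",
)
print(customer_analysis.render(
    TIME_PERIOD="Q3 2024", PRODUCT_LINE="laptops", ANALYSIS_TYPE="churn"
))
```

Marking the dataclass as frozen keeps the stable sections from being changed by accident; team members adapt the template only through the parameters they pass to `render`.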

Version control is essential when templates are shared across teams. Use clear naming conventions with version numbers and update dates, and store templates in a central repository. This ensures everyone uses the latest version and benefits from updates without risking workflow errors.

For related tasks, consider creating template families. For instance, a content generation family might include templates for blog posts, social media updates, email campaigns, and product descriptions. These templates can share common elements like brand voice guidelines while varying in format and length.

Once the core and modular structures of a prompt are in place, it's crucial to integrate safeguards to meet industry standards and ensure reliable outputs. These safeguards should be embedded into the prompt structure from the start.

Content filtering instructions help avoid inappropriate or harmful outputs. This includes setting restrictions on sensitive topics, ensuring inclusive language, and providing guidelines for handling potentially controversial subjects. For example, financial prompts might include disclaimers to avoid unintentionally offering investment advice.

Data privacy protections are vital for handling personal or sensitive information. Prompts should include instructions to anonymize identifiers, comply with regulations like GDPR or CCPA, and avoid improper storage or transmission of protected data. In healthcare, prompts must address HIPAA compliance for patient information.

Regulatory compliance markers help monitor AI usage in regulated industries. These might include classification levels for content, approval workflows for sensitive outputs, and documentation requirements for compliance reporting.

Error handling protocols guide the AI on what to do when it encounters unclear or problematic requests. Prompts should include instructions to flag issues, ask for clarification, or escalate tasks to human reviewers when necessary.

Output validation requirements ensure the AI's responses meet quality and safety standards before being integrated into workflows. This could involve fact-checking, requiring citations for referenced information, or setting thresholds for additional reviews.

Audit trail specifications document the AI's reasoning, sources, and assumptions. This documentation is invaluable for regulatory reviews and process improvements, providing transparency and accountability in AI decision-making.
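One way to embed safeguards like these from the start is to keep them as named clauses and append them to every prompt. The sketch below is a minimal example under that assumption; the clause wording, the `NEEDS_CLARIFICATION` marker, and the function names are all hypothetical and would need to reflect your own policies.

```python
# Hypothetical guardrail wording; adapt to your own policies.
GUARDRAILS = {
    "privacy": "Anonymize names, emails, and account numbers in the output.",
    "error_handling": (
        "If the request is unclear, reply with NEEDS_CLARIFICATION "
        "followed by a one-line reason instead of guessing."
    ),
    "audit": "End with a 'Sources and assumptions' section.",
}


def with_guardrails(prompt: str, names: list[str]) -> str:
    """Append the selected guardrail clauses to a prompt."""
    clauses = [GUARDRAILS[name] for name in names]  # KeyError on unknown names
    bullet_list = "\n".join(f"- {clause}" for clause in clauses)
    return prompt.rstrip() + "\n\nSafeguards:\n" + bullet_list


def needs_human_review(response: str) -> bool:
    """True when the response starts with the escalation marker defined above."""
    return response.lstrip().startswith("NEEDS_CLARIFICATION")


guarded = with_guardrails(
    "Summarize the attached patient feedback.", ["privacy", "error_handling"]
)
print(guarded)
```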

Before diving into prompt creation, it’s crucial to establish clear and measurable objectives that align with your business goals. These objectives serve as the foundation for crafting prompts that deliver meaningful results rather than generic or ineffective outputs.

To set effective objectives, apply the SMART criteria: make them Specific, Measurable, Achievable, Relevant, and Time-bound. For example, TeamAI's May 2025 guide on prompt design offers this SMART objective: "Increase customer satisfaction scores by 50% within the next 3 months by addressing the top three customer-reported issues." Compare this to a vague goal like "improve customer satisfaction", and it’s easy to see how specificity drives better results.

"When creating prompts, it is important to clearly define the objectives and expected outcomes for each prompt and systematically test them to identify areas of improvement." – Google Cloud Vertex AI

Define the problem clearly by being explicit about the tasks or questions you need the AI to address. For instance, instead of asking Veo 3 to "analyze sales data", specify: "Analyze Q4 2024 sales performance across the Northeast region to identify product categories that underperformed by more than 15% compared to Q3 2024."

A strong objective includes three key elements: the target user, the specific problem to solve, and the desired actions. SysAid’s March 2025 documentation provides a great example: "The AI Agent should help the IT team maintain complete and accurate asset records by identifying assets with missing critical information. It should generate a response listing assets with incomplete details and notify the relevant IT personnel." This structure ensures clarity and actionable results.

When working with cross-functional teams, involve all relevant stakeholders to align objectives with their unique needs. For example, a marketing team might require brand-compliant content generation, while a finance team may focus on precise calculations with audit trails. Incorporating these perspectives early ensures the objectives address everyone’s requirements.

Finally, document your objectives in a standardized format that can guide the entire prompt creation process. With this clarity in place, you’re ready to structure each prompt component with precision.

To create effective prompts, systematically complete each of the seven core components, ensuring every detail supports the objectives.

By addressing each component thoroughly, you’ll create prompts that directly tackle the identified business challenges. Once complete, move on to testing and refining your prompts to ensure they perform as intended.

Testing is essential to ensure your prompts work reliably across various scenarios, data sets, and user contexts.

Refining prompts is an iterative process. Use testing data to adjust components, then retest to validate improvements. Repeat this cycle until your prompts consistently deliver the desired outcomes. This approach ensures your Veo 3 prompts remain effective, even as conditions change.

Crafting prompts that work seamlessly across different models requires a focus on universality. Use language that avoids system-specific terms and emphasizes clarity. Instead of referencing features tied to a particular model - like saying, "use your GPT-4 reasoning capabilities" - opt for instructions that any advanced language model can follow, such as "analyze the data using logical reasoning and provide step-by-step explanations."

Standardizing data formats is another key step. For structured data, choose JSON; for tabular data, use CSV; and stick to plain text for narrative content. These consistent formats help avoid compatibility issues when switching between models or using the same prompt across multiple systems.

When designing prompts, prioritize flexible parameter handling by clearly distinguishing between required and optional elements. Organize the essential information at the beginning and add optional details later. This structure ensures that even if a model doesn’t fully process every part of the prompt, the core task can still be completed effectively.
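A small sketch of this ordering, assuming a hypothetical set of three required keys (`task`, `data`, `output_format`): required elements are checked and written first, and optional details are appended afterward.

```python
from typing import Optional

# Assumed required keys, chosen for illustration.
REQUIRED_KEYS = ("task", "data", "output_format")


def build_prompt(required: dict, optional: Optional[dict] = None) -> str:
    """Put required elements first, then any optional details."""
    missing = [key for key in REQUIRED_KEYS if not required.get(key)]
    if missing:
        raise ValueError(f"missing required elements: {missing}")
    lines = [f"{key.upper()}: {required[key]}" for key in REQUIRED_KEYS]
    if optional:
        lines.append("OPTIONAL DETAILS:")
        lines.extend(f"- {key}: {value}" for key, value in optional.items())
    return "\n".join(lines)


prompt = build_prompt(
    {"task": "Summarize", "data": "Q4 2024 sales report", "output_format": "JSON"},
    {"tone": "formal"},
)
print(prompt)
```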

Also, aim for consistent output formatting by specifying the desired structure in your prompts. Whether you need structured responses, specific data types, or organized layouts, defining these requirements ensures that outputs align with automated workflows or downstream systems.

Testing is critical. Run your prompts on various models to identify potential compatibility challenges. Document how different models handle specific prompt structures and create fallback versions for those that struggle. This testing phase helps you refine your approach, ensuring greater reliability when working across platforms.

Once your prompts are standardized for cross-model use, they can be integrated into automated workflows for more complex operations.

Automation connects individual prompts into a unified process, enabling complex tasks to run smoothly. Start by mapping out the entire workflow journey before writing your prompts. Identify decision points, data transformations, and quality checks within the process. This blueprint ensures that prompts are designed to complement each other, forming a cohesive system.

Incorporate conditional logic into your workflows to handle varying scenarios. For example, in a customer service system, routine inquiries can be managed with standard responses, while complex issues are escalated to specialized prompts. This branching logic ensures tasks are routed efficiently based on the situation.
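The branching described above might look like the following sketch. The keyword list and the two prompt names are hypothetical; a production system would more likely use a classifier than substring matching.

```python
# Hypothetical phrases that mark an inquiry as routine.
ROUTINE_KEYWORDS = ("password reset", "opening hours", "order status")


def route_inquiry(text: str) -> str:
    """Return the name of the prompt that should handle this inquiry."""
    lowered = text.lower()
    if any(keyword in lowered for keyword in ROUTINE_KEYWORDS):
        return "standard_response_prompt"
    # Anything not recognized as routine is escalated.
    return "specialist_escalation_prompt"


print(route_inquiry("I need a password reset"))
print(route_inquiry("My invoice was charged twice and the refund failed"))
```

Defaulting to escalation means an unrecognized request reaches a specialized prompt instead of receiving a standard response that may not fit.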

Define handoff protocols to ensure smooth data transitions between workflow stages. Specify what information each prompt should receive and how it should be formatted for the next step. Include validation checks to catch errors early and prevent them from affecting the entire process.
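A handoff check can be as simple as parsing the previous stage's output and confirming the fields the next stage expects. The sketch below assumes stages exchange JSON objects; the field names and the `validate_handoff` helper are illustrative.

```python
import json


def validate_handoff(raw: str, required_fields: dict[str, type]) -> dict:
    """Parse a stage's JSON output and check the fields the next stage needs."""
    payload = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on bad JSON
    if not isinstance(payload, dict):
        raise ValueError("handoff payload must be a JSON object")
    for name, expected_type in required_fields.items():
        if name not in payload:
            raise ValueError(f"missing field: {name}")
        if not isinstance(payload[name], expected_type):
            raise ValueError(f"field {name!r} should be {expected_type.__name__}")
    return payload


stage_output = '{"ticket_id": "T-1042", "category": "billing", "confidence": 0.91}'
checked = validate_handoff(
    stage_output, {"ticket_id": str, "category": str, "confidence": float}
)
print(checked["category"])
```

Raising at the handoff keeps a malformed payload from reaching later stages, where the cause is harder to trace.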

Set up monitoring and logging systems to track the performance of your workflows in real time. Alerts for failed handoffs, delays, or subpar quality metrics help you quickly identify and resolve bottlenecks, improving overall efficiency.

Lastly, integrate human oversight points at critical stages. These checkpoints allow for validation of automated decisions, ensuring quality without slowing down the workflow. By presenting only the necessary information, you can maintain efficiency while still enabling informed human intervention.

Prompts.ai offers specialized tools that make automation even more effective and streamlined.

Prompts.ai simplifies cross-platform prompt management with features designed to enhance efficiency and integration. With access to over 35 models in a single interface, reusable prompt templates, and real-time tracking tools, the platform is built to optimize your workflows.

The prompt library system allows you to centralize reusable templates. This reduces redundant work and ensures that successful prompt designs are consistently applied across teams and projects.

Take advantage of side-by-side model comparisons to evaluate how different models respond to your prompts. By reviewing performance in real time, you can quickly identify the best model-prompt combinations for specific tasks, saving time and reducing the amount of manual testing required.

Prompts.ai also includes compliance auditing tools that automatically document AI interactions. These features create detailed logs showing which prompts were used, when they were executed, and what results they produced. This level of documentation is essential for industries with strict regulatory requirements or organizations that need to demonstrate responsible AI practices.

To further enhance your team's skills, explore the Prompt Engineer Certification program and community resources. These tools connect you with expert-designed workflows and a global network of prompt engineers who share valuable insights and solutions. By leveraging these resources, your team can stay ahead of common challenges and continuously improve their prompt development capabilities.

Tackling common issues and using advanced methods can significantly improve the efficiency and effectiveness of prompts.

Inconsistent output formatting is one of the most frequent challenges when working with Veo 3 prompts. This often happens because the instructions lack clarity. To resolve this, include specific formatting details in your prompts. For instance, instead of a vague request like "provide a summary", specify "provide a summary in three bullet points, each with no more than 25 words." Such precision removes ambiguity and ensures consistent results.
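When a prompt specifies a format this precisely, the output can also be checked automatically. The following sketch validates the "three bullet points, each with no more than 25 words" example; it assumes bullets start with `- ` or `* `, and the function name is hypothetical.

```python
def check_summary(text: str, bullets: int = 3, max_words: int = 25) -> list[str]:
    """Return a list of formatting problems; an empty list means the summary passes."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    problems = []
    if len(lines) != bullets:
        problems.append(f"expected {bullets} bullets, found {len(lines)} lines")
    for number, line in enumerate(lines, start=1):
        if not line.startswith(("- ", "* ")):
            problems.append(f"line {number} is not a bullet")
            continue
        word_count = len(line[2:].split())
        if word_count > max_words:
            problems.append(f"line {number} has {word_count} words")
    return problems


summary = "- Billing errors rose in Q3\n- Login failures fell\n- Shipping delays were unchanged"
print(check_summary(summary))
```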

Context bleeding arises when details from earlier interactions unintentionally influence current outputs. This issue is especially problematic in workflows where prompts are executed sequentially. To prevent this, start each prompt with a clear reset statement like, "Ignore all previous instructions and focus solely on the following task." You can also use markers such as "BEGIN TASK" and "END TASK" to clearly define boundaries.
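The reset statement and boundary markers can be applied consistently with a small wrapper. This is a minimal sketch; the helper names are hypothetical, and the marker strings are the ones quoted above.

```python
RESET = "Ignore all previous instructions and focus solely on the following task."
BEGIN, END = "BEGIN TASK", "END TASK"


def wrap_task(task: str) -> str:
    """Prefix the reset statement and fence the task with boundary markers."""
    return "\n".join([RESET, BEGIN, task.strip(), END])


def extract_task(prompt: str) -> str:
    """Recover the task text between the markers (raises ValueError if absent)."""
    start = prompt.index(BEGIN + "\n") + len(BEGIN) + 1
    end = prompt.rindex("\n" + END)
    return prompt[start:end]


wrapped = wrap_task("Summarize the Q3 2024 support tickets.")
print(wrapped)
```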

Resource inefficiency occurs when prompts are overly complex or repetitive, leading to unnecessary computational strain. Simplify your prompts by consolidating similar instructions. For example, instead of separately stating "use a professional tone", "maintain formal language", and "write professionally", combine them into "use formal, professional language throughout."

Token waste is another common efficiency issue. Long, redundant prompts can quickly deplete token limits, especially in intricate workflows. Regularly review your prompts to eliminate repetition. For example, replace "please analyze the following data carefully and provide detailed insights" with "analyze this data and provide key insights."

Error propagation in multi-step workflows can amplify small mistakes, affecting subsequent stages. To address this, include validation checkpoints within your prompts. Add instructions like "before proceeding, confirm that the previous output contains all required elements" or "ensure the data format matches the specified requirements."

By addressing these issues, you lay the groundwork for implementing advanced techniques that further enhance prompt performance.

Layered instruction architecture organizes prompts into distinct sections - context, processing, and output. This structure provides precise control over each part of the AI's response, ensuring clarity and consistency.

Dynamic parameter injection makes prompts more adaptable by allowing placeholders to be programmatically filled based on specific inputs. For example, a template like "Analyze the {DATA_TYPE} using {ANALYSIS_METHOD} and present results in {OUTPUT_FORMAT}" can adjust dynamically for different scenarios, boosting flexibility and reusability.
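Filling the example template programmatically could look like the sketch below, which uses Python's standard `string.Formatter` to discover the placeholders before substituting them. The `inject` helper and the sample values are illustrative.

```python
from string import Formatter

TEMPLATE = (
    "Analyze the {DATA_TYPE} using {ANALYSIS_METHOD} "
    "and present results in {OUTPUT_FORMAT}"
)


def placeholders(template: str) -> list[str]:
    """List the placeholder names that appear in a template."""
    return [name for _, name, _, _ in Formatter().parse(template) if name]


def inject(template: str, values: dict[str, str]) -> str:
    """Fill every placeholder, failing loudly if any value is missing."""
    missing = [name for name in placeholders(template) if name not in values]
    if missing:
        raise KeyError(f"no value supplied for: {missing}")
    return template.format(**values)


print(inject(TEMPLATE, {
    "DATA_TYPE": "sales figures",
    "ANALYSIS_METHOD": "regression",
    "OUTPUT_FORMAT": "a table",
}))
```

Checking for missing values before formatting gives a clearer error than the bare `KeyError` that `str.format` would raise on the first absent name.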

Conditional logic embedding enables prompts to handle multiple scenarios within a single structure. Instead of crafting separate prompts for different cases, embed decision-making logic directly. For instance: "If the input contains numerical data, perform statistical analysis. If it contains text data, perform sentiment analysis. If both, prioritize based on data volume." This approach reduces the need for multiple prompts while maintaining specificity.

Sensory and emotional cue integration enhances creative or customer-facing outputs. Instead of a general request like "write a product description", try "write a product description that conveys luxury and includes tactile details to help customers imagine using the product." This level of detail ensures more engaging and vivid results.

Progressive refinement involves iterating on outputs to improve quality. Design prompts to first generate an initial response, then critique and refine it in subsequent steps. This iterative approach often yields higher-quality results than a single-pass method.
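The generate, critique, and refine cycle can be sketched as a loop around any callable that maps a prompt to a response. The `refine` function, the prompt wording, and the stand-in `echo_model` below are assumptions for illustration; in practice the callable would wrap a real model client.

```python
from typing import Callable


def refine(task: str, model: Callable[[str], str], rounds: int = 2) -> str:
    """Generate a draft, then alternate critique and revision steps."""
    draft = model(task)
    for _ in range(rounds):
        critique = model(
            f"Critique this draft against the task.\nTask: {task}\nDraft: {draft}"
        )
        draft = model(
            "Revise the draft using the critique.\n"
            f"Task: {task}\nDraft: {draft}\nCritique: {critique}"
        )
    return draft


def echo_model(prompt: str) -> str:
    """Stand-in for a real model call; it only reports the prompt length."""
    return f"response to {len(prompt)} characters"


print(refine("Write a product description for a leather wallet.", echo_model))
```

Each round costs two additional model calls, so the number of rounds is a direct trade-off between output quality and token usage.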

Resource allocation optimization is essential for workflows involving multiple models or extensive processing. Specify resource requirements in your prompts, such as preferred model types, processing priorities, and timeout limits. This ensures critical tasks get the resources they need while routine tasks run efficiently.

Once individual prompts are refined, centralizing them into a shared library can greatly enhance team productivity.

Centralized prompt management allows teams to access, edit, and contribute to a shared repository of proven templates. Organize prompts by function, complexity, and use case to make them easy to find and implement. This avoids redundant work and streamlines prompt engineering.

Version control implementation ensures updates to prompts don’t disrupt workflows. Keep detailed records of changes, including performance metrics before and after modifications. This makes it easy to roll back to previous versions if newer iterations underperform.
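A minimal sketch of metric-aware versioning, assuming a single `success_rate` metric is recorded per version: if the newest version scores lower than its predecessor, it is dropped and the previous one becomes current again. The class names are hypothetical, and a real library would likely sit on top of an existing version control system.

```python
from dataclasses import dataclass, field


@dataclass
class PromptVersion:
    version: str
    text: str
    success_rate: float  # metric recorded for this version


@dataclass
class PromptHistory:
    versions: list[PromptVersion] = field(default_factory=list)

    def publish(self, new_version: PromptVersion) -> None:
        self.versions.append(new_version)

    def current(self) -> PromptVersion:
        return self.versions[-1]

    def rollback_if_worse(self) -> PromptVersion:
        # Drop the newest version when it underperforms the one before it.
        if (len(self.versions) >= 2
                and self.versions[-1].success_rate < self.versions[-2].success_rate):
            self.versions.pop()
        return self.current()


history = PromptHistory()
history.publish(PromptVersion("1.0", "Summarize the report.", 0.92))
history.publish(PromptVersion("1.1", "Summarize the report briefly.", 0.85))
print(history.rollback_if_worse().version)
```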

Template standardization creates consistency across an organization. Develop standard formats for different types of prompts - analytical, creative, or workflow automation. Include key sections like context setting, task definition, output specifications, and quality criteria.

Performance documentation turns your prompt library into a strategic asset. Track metrics like execution time, token usage, success rates, and user satisfaction for each prompt. This data helps identify which prompts to prioritize, refine, or retire. It also guides model selection for specific tasks.

Access control and governance are crucial as the library grows and handles sensitive information. Implement role-based access controls to limit who can view, edit, or execute prompts. Establish approval workflows for new or modified prompts to ensure quality and security before deployment.
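Role-based access for a prompt library can be sketched as a mapping from roles to permitted actions. The role names and permissions below are hypothetical examples rather than the scheme of any specific platform.

```python
# Hypothetical roles and the actions each may perform on a prompt.
ROLE_PERMISSIONS = {
    "viewer": {"view"},
    "editor": {"view", "edit"},
    "operator": {"view", "execute"},
    "admin": {"view", "edit", "execute", "approve"},
}


def is_allowed(role: str, action: str) -> bool:
    """Unknown roles get no permissions."""
    return action in ROLE_PERMISSIONS.get(role, set())


def can_deploy(author_role: str, approver_role: str) -> bool:
    """A change needs an author who may edit and an approver who may approve."""
    return is_allowed(author_role, "edit") and is_allowed(approver_role, "approve")


print(is_allowed("editor", "execute"))
print(can_deploy("editor", "admin"))
```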

Integration with Prompts.ai's library system simplifies management by providing enterprise-grade tools for prompt storage and performance tracking. Features like automated compliance auditing and model comparison tools make it easier to optimize prompts with less manual testing.

Community contribution protocols encourage team members to share successful prompts while maintaining quality. Set guidelines for documenting new prompts, including benchmarks, use case descriptions, and implementation notes. Feedback mechanisms, such as user ratings and suggestions, create a continuous improvement loop for your prompt library.

Crafting effective Veo 3 prompt structures hinges on having clear workflow goals, a modular design, and the ability to adapt to changing needs. The key elements - context setting, task definition, output specifications, and quality criteria - help eliminate uncertainty and deliver reliable performance across various models and environments. By using modular prompts, updates can be implemented quickly, while safety and compliance are maintained, avoiding expensive revisions. This structured methodology is essential for leveraging Veo 3's interoperability in complex workflows. Together, these components not only simplify processes but also create a foundation for enterprise-level management.

To scale AI operations effectively, enterprises require systems that can manage, optimize, and secure their workflows. As John Hwang explains:

"prompts are fast becoming a mission critical business artifact like SOPs, but with even more leverage as they can plug into AI agents and run 24/7. They will contain sensitive internal processes, proprietary information, and critical business insights - in essence, key intellectual property of the post-LLM era."

Prompts.ai meets these challenges by offering centralized prompt management with features like role-based access controls and comprehensive audit trails to meet compliance needs. Its unified interface supports integration with over 35 leading models, significantly reducing tool sprawl and cutting software costs by up to 98%. The platform also includes observability tools that track performance metrics such as output relevance, response times, and resource usage, enabling data-driven optimization. For organizations managing extensive prompt libraries, these insights provide a critical advantage.

"prompts are slowly becoming repositories of a company's 'business logic', distilling domain expertise, trade secrets, etc. The key distinction being, obviously, that prompts are written for machines, not humans."

Modular templates in Veo 3 take the hassle out of prompt creation by offering a ready-made structure that eliminates the need to start from scratch. This not only cuts down on the time spent but also reduces the chances of making mistakes, leading to smoother and more efficient workflows.

These templates ensure a consistent design, which is key to achieving dependable and repeatable results across different tasks and users. This consistency plays a crucial role in maintaining high-quality outputs while supporting the seamless scaling of AI operations.

To prioritize safety and compliance when working with Veo 3, take advantage of its integrated safety tools, such as content filters designed to block harmful or inappropriate outputs. Pair these tools with well-defined governance policies to regulate AI model access and ensure responsible data management.

When crafting prompts, focus on clear and detailed instructions, assign specific roles, and include relevant context. This method not only helps the AI generate accurate and compliant responses but also ensures alignment with safety guidelines and user goals while maximizing performance.

Prompts.ai makes handling and fine-tuning prompts for enterprise AI workflows straightforward by providing a single platform packed with useful tools. Features like version tracking, real-time collaboration, and detailed analytics help ensure prompts remain effective, consistent, and aligned with your business goals.

The platform also prioritizes cost control, secure workflows, and smooth integration with more than 35 AI models, allowing businesses to simplify operations, cut costs, and get the most out of their AI-powered processes.