Managing machine learning (ML) models is complex and calls for tools that simplify deployment, monitoring, and version control. This guide compares five AI platforms (Microsoft Azure Machine Learning, Google Cloud Vertex AI, Amazon SageMaker, Hugging Face, and Prompts.ai), each aimed at a different set of challenges in ML workflows.

Each platform caters to specific needs, from cost efficiency to scalability. Below is a quick comparison to help you decide.

| Platform | Strengths | Limitations | Best For |
|---|---|---|---|
| Azure ML | Enterprise integration, experiment tracking | High costs, steep learning curve | Large enterprises in Microsoft ecosystems |
| Google Vertex AI | AutoML, TensorFlow support, data tools | Limited flexibility, confusing pricing | AI research, TensorFlow-heavy projects |
| Amazon SageMaker | Scalability, AWS integration | Vendor lock-in, complex setup | Teams needing AWS scalability |
| Hugging Face | Pre-trained models, NLP tools | Limited enterprise features | Research teams, NLP-heavy projects |
| Prompts.ai | Cost savings, LLM management | Focused on LLMs, newer platform | Cost-conscious LLM workflows |

Choose the platform that aligns with your technical goals, infrastructure, and budget. Start small with pilot projects to evaluate compatibility before scaling.

Microsoft Azure Machine Learning is a cloud-based platform designed to tackle the challenges of managing machine learning (ML) models. It supports every stage of the ML lifecycle while integrating seamlessly with Microsoft's broader ecosystem of tools and services.

Azure ML simplifies the entire model lifecycle with a centralized registry that automatically tracks model lineage, including datasets, code, and hyperparameters. Its automated pipelines manage everything from data preparation to deployment, ensuring smooth transitions between stages.

The platform shines in experiment tracking, thanks to its built-in MLflow integration. This feature allows data scientists to log metrics, parameters, and artifacts automatically, making it easier to compare model versions and reproduce successful experiments. It also supports A/B testing in production, enabling gradual rollouts while monitoring real-time performance.
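A gradual rollout of the kind described above usually comes down to sending a small, stable share of traffic to the new model version. The sketch below is platform-agnostic Python rather than Azure ML's own API; the function name `pick_variant`, the variant labels, and the 10% default share are illustrative assumptions.

```python
import hashlib


def pick_variant(request_id: str, candidate_share: float = 0.10) -> str:
    """Route a stable fraction of requests to the candidate model.

    Hashing the request (or user) ID keeps the assignment deterministic,
    so the same caller always reaches the same model version.
    """
    if not 0.0 <= candidate_share <= 1.0:
        raise ValueError("candidate_share must be between 0 and 1")
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    # Map the hash to one of 10,000 buckets (hypothetical granularity).
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    return "candidate" if bucket < candidate_share * 10_000 else "stable"
```

Raising `candidate_share` step by step while watching production metrics gives the gradual rollout; setting it back to `0.0` routes everything to the stable version again.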

Beyond tracking model files, Azure ML provides version control for environment configurations, compute targets, and deployment settings. This ensures that models can be reliably reproduced across development stages. Additionally, the snapshot feature captures every detail of an experiment, including code, dependencies, and data versions.
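One way to picture what a snapshot buys you: if code, dependencies, and data version are hashed into a single fingerprint, a change to any of them becomes detectable. The following is a conceptual sketch in plain Python, not a description of how Azure ML stores snapshots; `snapshot_fingerprint` and its inputs are hypothetical.

```python
import hashlib
import json


def snapshot_fingerprint(code: str, dependencies: dict, data_version: str) -> str:
    """Return a short, deterministic ID for one experiment configuration."""
    payload = json.dumps(
        {"code": code, "dependencies": dependencies, "data_version": data_version},
        sort_keys=True,  # key order must not change the hash
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]


# Illustrative values only.
baseline = snapshot_fingerprint("train(lr=0.01)", {"scikit-learn": "1.4.2"}, "2024-05-01")
same = snapshot_fingerprint("train(lr=0.01)", {"scikit-learn": "1.4.2"}, "2024-05-01")
bumped = snapshot_fingerprint("train(lr=0.01)", {"scikit-learn": "1.5.0"}, "2024-05-01")
```

Here `baseline` and `same` match, while `bumped` differs because one dependency version changed, which is the property that makes a run reproducible or flags it as a new configuration.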

Such comprehensive lifecycle management makes Azure ML a strong choice for scalable deployments and seamless integration into existing workflows.

Azure ML adapts to varying computational needs with its auto-scaling feature, which adjusts resources dynamically, from single-node training to distributed GPU clusters, without requiring code modifications. This flexibility is particularly beneficial for organizations handling diverse ML workloads.

The platform integrates smoothly with Azure DevOps and GitHub, enabling teams to automate continuous integration and delivery (CI/CD) workflows. For instance, pipelines can be triggered to retrain models whenever new data is available or code changes are committed. Additionally, Azure ML connects directly with Power BI for actionable insights and Azure Synapse Analytics for data processing, creating a cohesive data and AI ecosystem.

Azure ML also supports multi-cloud deployment, allowing models trained on Azure to be deployed on other cloud platforms or even on-premises infrastructure. This capability helps organizations avoid vendor lock-in while maintaining consistent model management across different environments.

Azure ML offers a pay-as-you-go pricing model, with separate charges for compute, storage, and specific services. Compute costs range from approximately $0.10 per hour for CPU instances to over $3.00 per hour for high-end GPUs. For predictable workloads, reserved instances can provide savings of up to 72%.

To help manage costs, Azure ML includes automatic compute management, which shuts down idle resources and scales usage based on demand. The platform also provides detailed cost tracking and budgeting tools, allowing teams to set spending limits and receive alerts as they approach those thresholds.

Storage costs are typically $0.02-$0.05 per GB per month, though organizations moving large datasets between regions should be mindful of potential data transfer expenses.
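To make these figures concrete, here is a rough estimate using only the approximate rates quoted above. It is a back-of-the-envelope sketch, not Azure's billing logic: the function `monthly_cost` is hypothetical, the workload (200 GPU hours, 500 GB) is invented for illustration, and the reserved discount is treated as a flat reduction on the hourly rate, which simplifies how reservations are actually billed.

```python
def monthly_cost(
    hourly_rate: float,
    hours: float,
    storage_gb: float,
    storage_rate_per_gb: float = 0.02,  # low end of the quoted $0.02-$0.05 range
    reserved_discount: float = 0.0,     # 0.72 would be the quoted best case
) -> float:
    """Estimate one month of compute plus storage, in US dollars."""
    compute = hourly_rate * hours * (1.0 - reserved_discount)
    storage = storage_gb * storage_rate_per_gb
    return round(compute + storage, 2)


# Assumed workload: 200 hours on a $3.00/hour GPU instance, 500 GB stored.
on_demand = monthly_cost(3.00, 200, 500)
reserved = monthly_cost(3.00, 200, 500, reserved_discount=0.72)
```

With these inputs the on-demand month comes to about $610 and the best-case reserved scenario to about $178. Data transfer charges are not included.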

Microsoft backs Azure ML with extensive resources, including detailed documentation, hands-on labs, and certification programs through Microsoft Learn. The platform benefits from active community forums and receives quarterly updates with new features.

For enterprises, Microsoft offers robust support options, including 24/7 technical assistance, guaranteed response times, and access to dedicated customer success managers. Organizations can also leverage professional consulting services to design and implement ML workflows tailored to their needs.

Azure ML supports popular frameworks like PyTorch, TensorFlow, and Scikit-learn and offers pre-built solution accelerators for tasks like demand forecasting and predictive maintenance. These tools are designed to streamline workflows and make ML model management more efficient.

Google Cloud Vertex AI brings together machine learning model management features into a single platform, merging the strengths of AutoML and AI Platform. It is designed to simplify ML workflows while delivering enterprise-level scalability and performance.

Vertex AI provides a unified ML platform that streamlines the entire model lifecycle, from data preparation to deployment. Its Model Registry tracks versions, lineage, and metadata, making it easier to compare and evaluate model performance over time.

The platform includes continuous monitoring tools to track production performance and alert teams to issues like data drift. It supports custom training with frameworks such as TensorFlow, PyTorch, and XGBoost, while also offering AutoML options for those who prefer a no-code solution. With pipeline orchestration, teams can create reproducible workflows that run automatically or on demand, ensuring consistent processes. The Feature Store further enhances reliability by serving the same feature definitions to both training and deployment environments, reducing the risk of discrepancies.

These capabilities make it easier for teams to scale their efforts and integrate seamlessly into existing workflows.

Built on Google's robust infrastructure, Vertex AI supports custom machine configurations and preemptible instances, offering a balance between performance and cost. Its auto-scaling capabilities allow seamless transitions from single-node to distributed training setups.

Vertex AI integrates effortlessly with Google Cloud's data ecosystem, including BigQuery, Cloud Storage, and Dataflow. The Vertex AI Workbench provides managed Jupyter notebooks with pre-configured environments, while Vertex AI Pipelines simplifies the creation and deployment of ML workflows using Kubeflow Pipelines.

For inference, the platform offers online prediction endpoints with automatic load balancing and scaling, as well as batch prediction options to handle large-scale inference tasks efficiently across distributed resources.

Vertex AI operates on a pay-as-you-go pricing model, with separate charges for training, prediction, and storage. Costs depend on factors like instance type, performance needs, and usage duration. It offers several cost-saving options, including sustained use discounts, preemptible instances for fault-tolerant workloads, and committed use discounts for predictable usage patterns. Integrated cost monitoring tools help teams manage their budgets effectively.

Google provides extensive resources for Vertex AI users, including detailed documentation, hands-on labs, and certification programs through Google Cloud Skills Boost. The platform benefits from a vibrant developer community and frequent updates to stay aligned with the latest advancements.

Enterprise users have access to 24/7 support with guaranteed response times based on issue severity. Professional Services are also available to help organizations design and implement ML strategies, particularly for large-scale deployments.

Vertex AI supports widely used open-source frameworks and integrates with tools like MLflow and TensorBoard for experiment tracking and visualization. Additionally, Google's AI Hub offers pre-trained models and pipeline templates, enabling teams to accelerate development for common ML use cases. Community forums and platforms like Stack Overflow further enhance the support system, while Google's ongoing publication of research and best practices helps teams stay informed about emerging trends in machine learning.

Amazon SageMaker is AWS's all-in-one machine learning platform designed to help data scientists and ML engineers build, train, and deploy models at scale. Built on AWS's global infrastructure, SageMaker combines powerful tools for model management with scalable deployment options, making it a go-to solution for enterprises.

SageMaker provides a full suite of tools to manage the entire lifecycle of machine learning models. At the core is the SageMaker Model Registry, a centralized hub where teams can catalog, version, and track the lineage of their models. This repository includes metadata and performance metrics, simplifying version comparisons and enabling quick rollbacks when needed.

With SageMaker Studio, users can access Jupyter notebooks, track experiments, and debug workflows all in one place. Meanwhile, SageMaker Experiments automatically logs training runs, hyperparameters, and results, streamlining the process of tracking and refining models.

To ensure models perform well in production, SageMaker Model Monitor keeps an eye on data quality, drift, and bias, issuing alerts when performance declines or when incoming data deviates significantly. SageMaker Pipelines automates the entire workflow, from data processing to deployment, ensuring consistency and reliability throughout the development process.
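Drift alerts of this kind rest on comparing the distribution of incoming data with the training data. The sketch below uses the Population Stability Index (PSI), a common general-purpose drift statistic; it is an illustration of the idea, not the algorithm SageMaker Model Monitor uses, and the 0.2 alert threshold is a widely quoted rule of thumb rather than a platform setting.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a training (expected) and a production (actual) sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Synthetic data: production values shifted upward by 3 units.
training = [i / 100 for i in range(1000)]
shifted = [x + 3 for x in training]

DRIFT_THRESHOLD = 0.2  # rule-of-thumb value, an assumption
drift_detected = population_stability_index(training, shifted) > DRIFT_THRESHOLD
```

An identical sample yields a PSI of zero, while the shifted sample lands well above the threshold and would trigger an alert.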

SageMaker stands out for its ability to scale resources efficiently. By leveraging AWS's elastic infrastructure, it can handle even the most demanding ML workloads. The platform supports distributed training across multiple instances, simplifying parallel processing for large datasets and complex models. With SageMaker Training Jobs, resources can scale from a single instance to hundreds of machines, automatically provisioning and releasing resources as needed.

Integration with other AWS services makes SageMaker even more powerful. For example, the SageMaker Feature Store acts as a centralized repository for machine learning features, ensuring consistency between training and inference while enabling feature reuse across projects.
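The consistency benefit is easiest to see in miniature: when a feature is defined once and both the training job and the inference path call the same definition, the two cannot drift apart. This toy in-memory class is a sketch of that principle only; `FeatureStore`, the feature names, and the record fields are hypothetical and unrelated to the SageMaker Feature Store API.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class FeatureStore:
    """Toy in-memory store: one registered definition per feature name."""

    definitions: Dict[str, Callable[[dict], float]]

    def compute(self, record: dict) -> Dict[str, float]:
        # Training and serving both call this method, so they cannot diverge.
        return {name: fn(record) for name, fn in self.definitions.items()}


store = FeatureStore(
    definitions={
        "order_value_usd": lambda r: r["quantity"] * r["unit_price"],
        "is_repeat_customer": lambda r: float(r["previous_orders"] > 0),
    }
)

record = {"quantity": 3, "unit_price": 20.0, "previous_orders": 2}
training_row = store.compute(record)  # offline, when building a dataset
serving_row = store.compute(record)   # online, at prediction time
```

Because other projects can register against the same store, a feature such as `order_value_usd` is written once and reused rather than reimplemented per model.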

For deployment, SageMaker Endpoints provide real-time inference with automatic scaling based on traffic patterns. The platform also supports multi-model endpoints, allowing multiple models to run on a single endpoint to maximize resource efficiency and reduce costs. For batch processing, SageMaker Batch Transform efficiently handles large inference jobs using distributed computing resources.

SageMaker uses AWS's pay-as-you-go model, with separate charges for training, hosting, and data processing. Training costs depend on the instance type and duration, with Spot Instances offering up to 90% savings compared to on-demand rates.

For predictable workloads, Savings Plans offer discounts of up to 64% for committed usage. To further optimize costs, SageMaker Inference Recommender tests various instance types and configurations, helping teams find the most cost-effective deployment setup without sacrificing performance.

Endpoints equipped with automatic scaling ensure that users only pay for the compute resources they need. Resources scale down during low-traffic periods and ramp up as demand increases. Additionally, SageMaker provides tools for tracking and budgeting, giving teams better control over their ML spending.
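The scaling behavior described here can be approximated with a simple target-tracking rule: keep the load per instance near a target, within a minimum and maximum fleet size. The function below is a simplified sketch with assumed names and limits; real scaling policies also apply cooldown periods and metric smoothing that are left out here.

```python
import math


def desired_instances(
    requests_per_minute: float,
    target_per_instance: float,
    min_instances: int = 1,
    max_instances: int = 10,
) -> int:
    """Instance count that keeps per-instance load at or below the target."""
    if target_per_instance <= 0:
        raise ValueError("target_per_instance must be positive")
    needed = math.ceil(requests_per_minute / target_per_instance)
    # Never scale below the floor or above the ceiling.
    return max(min_instances, min(needed, max_instances))
```

For example, with a target of 100 requests per minute per instance, a quiet period of 50 requests per minute keeps a single instance running, and 450 requests per minute calls for five.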

Amazon SageMaker users benefit from a wealth of resources, including detailed documentation, hands-on tutorials, and the AWS Machine Learning University, which offers free courses and certifications. The platform is backed by a vibrant developer community and frequent updates that align with the latest advancements in machine learning.

For enterprise customers, AWS Support offers tiered assistance, ranging from 24/7 phone support for critical issues to general guidance during business hours. Additionally, AWS Professional Services provides consulting and implementation help for large-scale or complex ML projects.

SageMaker supports popular open-source frameworks like TensorFlow, PyTorch, Scikit-learn, and XGBoost through pre-built containers, while also allowing custom containers for specialized needs. The AWS Machine Learning Blog regularly shares best practices, case studies, and in-depth technical guides. Community forums and events like AWS re:Invent provide further opportunities for learning and networking for beginners and seasoned professionals alike.

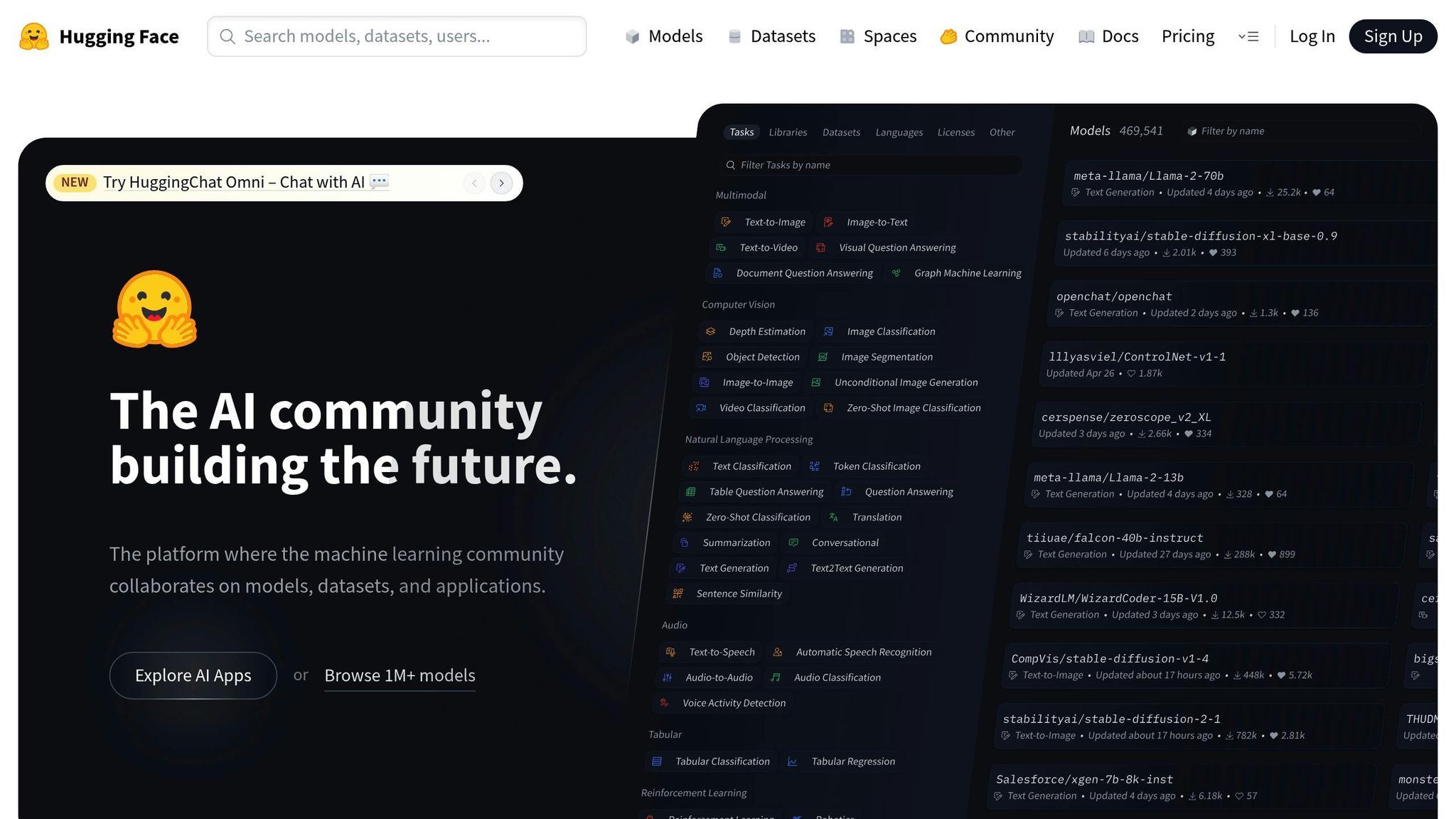

Hugging Face provides users with a comprehensive suite of machine learning tools. While it originally focused on natural language processing, it has expanded its capabilities to include computer vision, audio processing, and multimodal applications. This evolution has made it a go-to platform for managing and deploying machine learning models.

The Hugging Face Hub acts as a centralized repository for pre-trained models, datasets, and interactive demos. Each model repository includes a detailed model card that outlines the training process, potential use cases, limitations, and ethical considerations, ensuring transparency at every stage of the model's lifecycle. Because every Hub repository is Git-based, models are versioned like code, and the Hugging Face Transformers library lets users load and fine-tune them with only a few lines of code.

When it comes to deployment, Hugging Face Inference Endpoints offer a managed solution. These endpoints handle automatic scaling and CPU/GPU monitoring, and they provide performance metrics alongside error logging. This setup helps teams evaluate how models perform in real-world scenarios and eases the transition from development to production.

Hugging Face offers robust scalability through its Accelerate library, which supports distributed training across multiple GPUs and machines. It integrates seamlessly with popular deep learning frameworks like PyTorch, TensorFlow, and JAX, making it adaptable to diverse workflows. Additionally, the Datasets library provides access to an extensive range of datasets, complete with tools for preprocessing and streaming, helping to optimize data pipelines.

For showcasing models and gathering feedback, Hugging Face Spaces is a standout feature. Using tools like Gradio or Streamlit, users can create interactive demos and applications with ease. These demos can be integrated into continuous integration workflows, simplifying stakeholder engagement and iteration.

Hugging Face thrives on its vibrant open-source community, where users actively share models, datasets, and applications. The platform also offers a free educational course covering everything from the basics of transformers to advanced fine-tuning techniques. For enterprise clients, Hugging Face provides private model repositories, enhanced security features, and dedicated support, enabling organizations to manage proprietary models while leveraging the platform's powerful tools.

Hugging Face operates on a freemium model. Individuals and small teams can access public repositories and community features at no cost. For those needing managed deployment, additional storage, or advanced support, the platform offers paid plans with pricing customized to specific requirements and usage levels.

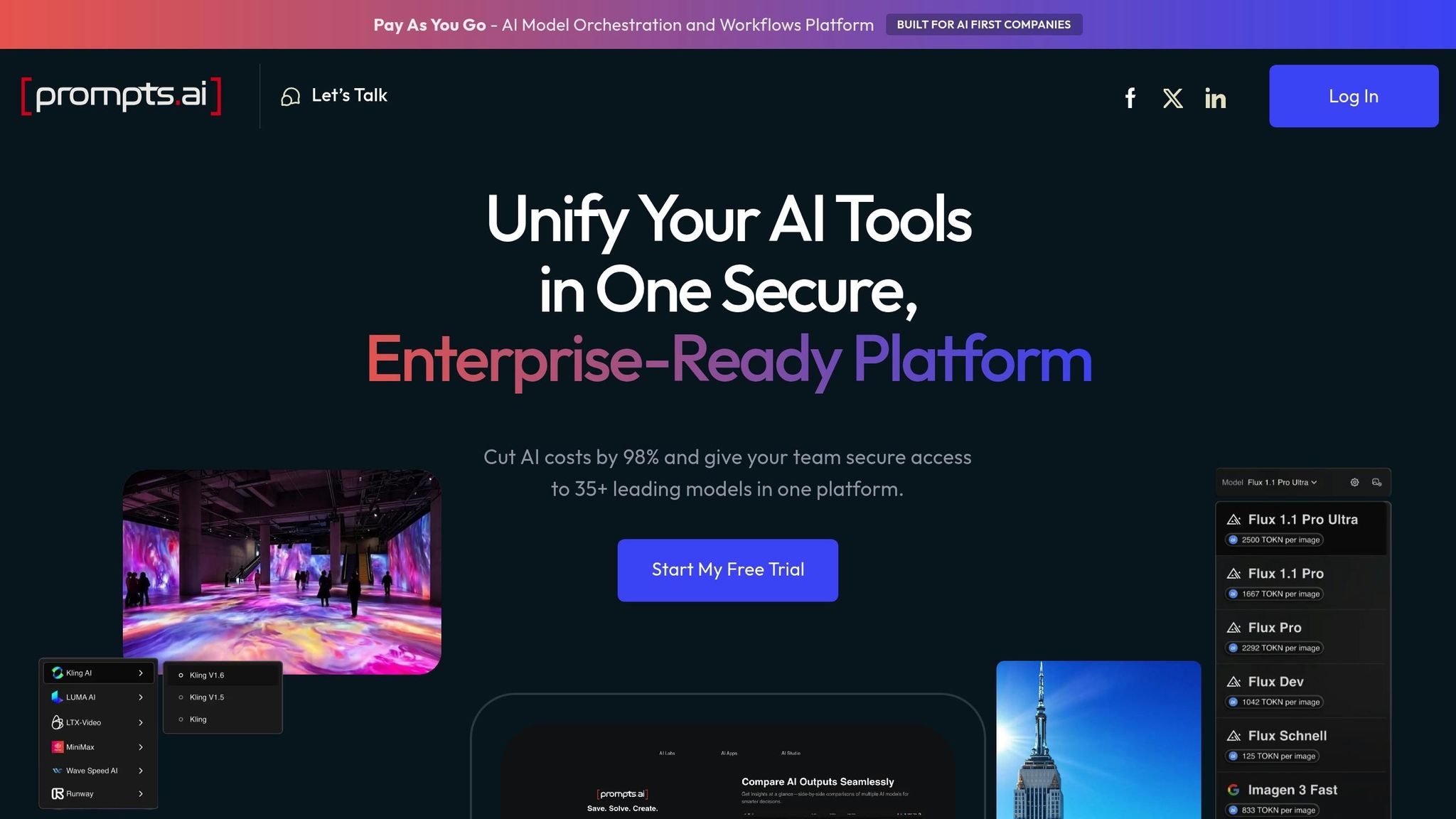

Prompts.ai brings together over 35 large language models into a secure, streamlined platform. Designed specifically for prompt management and LLMOps, it provides a production-ready environment for managing and optimizing prompts.

Prompts.ai delivers a complete suite of tools for managing the entire lifecycle of models, with a focus on prompt versioning and tracking. It allows users to version prompts, roll back changes, and ensure reproducibility through advanced version control systems.
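Prompt versioning with rollback can be pictured as an append-only history in which a rollback re-commits an earlier version instead of deleting newer ones. The class below is a minimal sketch of that idea; `PromptHistory` and its methods are hypothetical and do not represent the Prompts.ai API.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PromptHistory:
    """Append-only version history for a single prompt."""

    versions: List[str] = field(default_factory=list)

    def commit(self, text: str) -> int:
        """Store a new version and return its 1-based version number."""
        self.versions.append(text)
        return len(self.versions)

    def current(self) -> str:
        """Return the most recently committed version."""
        return self.versions[-1]

    def rollback(self, version: int) -> int:
        """Re-commit an earlier version so the full history stays intact."""
        if not 1 <= version <= len(self.versions):
            raise ValueError(f"unknown version {version}")
        return self.commit(self.versions[version - 1])


history = PromptHistory()
history.commit("Summarize the ticket in one sentence.")
history.commit("Summarize the ticket in one sentence. Use a formal tone.")
restored = history.rollback(1)  # becomes version 3, identical to version 1
```

Keeping the rolled-back text as a new version preserves the audit trail, which is what makes earlier results reproducible.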

The platform features automated monitoring to track key metrics like prediction accuracy, latency, and data drift. Users can configure custom alerts to address performance issues or anomalies quickly, ensuring smooth operations even in production environments. This monitoring is particularly useful for tackling challenges such as prompt drift and maintaining consistent performance.

For example, a healthcare analytics company in the U.S. used Prompts.ai to cut model deployment times by 40% while improving accuracy tracking. This led to better patient outcomes and more efficient compliance reporting.

These lifecycle tools are designed to support scalable and reliable deployments.

Prompts.ai integrates effortlessly with popular machine learning frameworks, including TensorFlow, PyTorch, and scikit-learn, as well as major cloud platforms like AWS, Azure, and Google Cloud. It supports scalable deployments with autoscaling for high-demand scenarios and works with container orchestration systems like Kubernetes.

By consolidating model selection, prompt workflows, cost management, and performance comparisons into a single platform, Prompts.ai eliminates the need for multiple tools. This unified approach can lower AI software costs by up to 98%, all while maintaining enterprise-level security and compliance.

Prompts.ai goes beyond technical capabilities by fostering collaboration. It offers features like shared workspaces, role-based access controls, and integrated commenting on model artifacts, making it easier for data scientists and ML engineers to collaborate effectively. These tools ensure transparency and teamwork throughout the model development lifecycle.

The platform also provides extensive resources, including comprehensive documentation, user forums, and direct support. Enterprise customers benefit from dedicated account managers and priority support to handle complex implementations. Additionally, Prompts.ai supports an active user community where members can exchange best practices and seek expert advice.

Prompts.ai operates on a pay-as-you-go TOKN credit system. Personal plans start at no cost and scale to $29 or $99 per month, while Business plans range from $99 to $129 per member/month. The platform’s usage-based billing model avoids long-term commitments, with annual plans offering a 10% discount.

This pricing structure is particularly appealing to U.S.-based organizations looking for flexibility and cost control. Prompts.ai’s real-time FinOps tools provide full visibility into spending, connecting every token used to measurable business outcomes.

This section brings together the strengths and challenges of each platform to help refine your machine learning (ML) model management strategy. By comparing their features, you can align your choice with your specific needs, budget, and technical goals.

Microsoft Azure Machine Learning is a standout for organizations already embedded in the Microsoft ecosystem. Its integration with tools like Office 365 and Power BI ensures a streamlined workflow. However, these benefits come at a premium, as costs can escalate quickly, especially for smaller teams. Additionally, the platform's learning curve can be steep for those unfamiliar with Azure.

Google Cloud Vertex AI shines with its advanced AutoML capabilities and close ties to Google’s cutting-edge AI research. It offers excellent support for TensorFlow and strong data analytics tools. That said, although it also supports frameworks such as PyTorch and XGBoost, its tooling is most closely tied to Google’s own stack, which can limit flexibility, and its pricing structure can be confusing, occasionally leading to unexpected charges.

Amazon SageMaker offers unparalleled scalability and a comprehensive suite of tools for managing the entire ML lifecycle. Its pay-as-you-go model appeals to budget-conscious organizations, and the extensive AWS ecosystem provides a wealth of resources. However, the platform's complexity and potential for vendor lock-in can pose challenges, especially for those new to cloud-based ML.

Hugging Face has transformed model sharing and collaboration with its extensive library of pre-trained models and a vibrant community. It excels in natural language processing (NLP), supported by clear and accessible documentation. On the downside, it lacks some enterprise-level features, which might be a concern for organizations with strict data governance needs.

| Platform | Key Strengths | Main Limitations | Best For |
|---|---|---|---|
| Microsoft Azure ML | Seamless enterprise integration, robust security, hybrid cloud support | High costs, steep learning curve, complex interface | Large enterprises in the Microsoft ecosystem |
| Google Cloud Vertex AI | Advanced AutoML, strong TensorFlow support, cutting-edge AI research | Limited framework support, confusing pricing | Organizations prioritizing AI research and TensorFlow |
| Amazon SageMaker | Massive scalability, full ML lifecycle tools, flexible pricing | Vendor lock-in, steep learning curve, AWS dependency | Teams requiring scalability and AWS integration |
| Hugging Face | Extensive model library, strong NLP tools, active community | Limited enterprise features, weaker governance options | Research teams and NLP-heavy projects |
| Prompts.ai | Up to 98% cost savings, unified interface, real-time FinOps | Newer platform, focused on large language models | Companies seeking cost control and access to multiple LLMs |

Each platform’s strengths and weaknesses reflect its approach to lifecycle management, scalability, and user support.

Prompts.ai distinguishes itself with its ability to cut costs - up to 98% - while consolidating access to multiple leading large language models in a single, secure platform. This approach not only reduces operational expenses but also simplifies management by minimizing administrative overhead.

However, it’s important to note that Prompts.ai primarily focuses on large language models. Organizations needing specialized tools for computer vision or traditional ML algorithms may need to integrate additional resources. As a relatively new platform, it may not yet match the extensive enterprise features of more established providers, although its enterprise-grade security and compliance features continue to evolve rapidly.

The platform also fosters collaboration by building a community of prompt engineers and offering comprehensive onboarding and training. This teamwork-oriented approach ensures that data scientists, ML engineers, and business stakeholders can collaborate effectively while adhering to strict governance and security standards.

Cost structures vary significantly across these platforms. Traditional providers like AWS and Google, while resource-rich, can sometimes result in unforeseen expenses. In contrast, Prompts.ai offers a transparent pricing model designed to prevent billing surprises, making it an excellent choice for organizations looking to scale their AI operations without escalating costs.

Support and documentation also differ. While platforms like AWS and Google provide vast resources, the sheer volume of information can overwhelm users. Prompts.ai, on the other hand, offers focused documentation, user forums, and tailored support designed specifically for prompt engineering and LLM workflows, ensuring users have the guidance they need without unnecessary complexity.

Selecting the right AI platform boils down to understanding your specific needs, existing infrastructure, and budgetary constraints. Each platform discussed offers distinct advantages tailored to different use cases, making it essential to weigh the trade-offs carefully.

Microsoft Azure Machine Learning is a strong choice for enterprises already invested in the Microsoft ecosystem, thanks to its seamless integration with tools like Office 365 and Power BI. Google Cloud Vertex AI shines for teams that emphasize AI research and rely heavily on TensorFlow. Amazon SageMaker is an excellent option for organizations requiring extensive scalability and end-to-end machine learning lifecycle management. Meanwhile, Hugging Face has set a new standard in natural language processing with its vast model library and active community. For businesses navigating large language model workflows, Prompts.ai delivers streamlined management and up to 98% cost savings by offering access to over 35 leading LLMs through a single, unified interface.

These insights can help guide your pilot testing and inform your long-term AI strategy. Enterprises with established cloud ecosystems often gravitate toward Azure ML or SageMaker, while research institutions and collaborative teams may find Hugging Face’s environment more appealing. For businesses focused on cost efficiency in LLM management, Prompts.ai’s transparent pricing and unified approach make it a compelling option.

As AI platforms continue to evolve, it’s crucial to align your choice with both immediate needs and future goals. Pilot projects are an effective way to test compatibility before committing to a particular platform.

Ultimately, the best platform is the one that empowers your team to deploy, monitor, and scale machine learning models efficiently, all while staying within budget and meeting compliance standards. By matching platform capabilities to your unique challenges, you can create a solid foundation for effective AI deployment and management.

Choosing an AI platform to manage your machine learning models requires careful consideration of several factors. Begin by pinpointing your organization's specific needs. Do you need real-time predictions, batch processing, or a combination of both? Ensure the platform offers the serving features you rely on, such as low-latency endpoints or scheduled workflows, to meet these demands.

Next, assess how well the platform integrates with your existing tools and frameworks. Seamless compatibility with your current ML stack is crucial to avoid disruptions as you transition from model development to deployment. Additionally, think about deployment options - whether your focus is on cloud environments, edge devices, or a hybrid setup - and choose a platform that aligns with these requirements while staying within your budget and scalability plans.

By addressing these factors, you can find a platform that meets your technical needs while keeping operations efficient and cost-effective.

Pricing structures for AI platforms that handle machine learning (ML) models often depend on factors like usage, available features, and scalability options. Many platforms base their charges on resource consumption, such as compute hours, storage capacity, or the number of models deployed. Others provide tiered plans tailored to different needs, ranging from small-scale projects to large enterprise operations.

When choosing a platform, it’s essential to assess your specific needs - how often you plan to train models, the scale of deployment, and your monitoring requirements. Be sure to review any potential extra costs, such as fees for premium features or exceeding resource limits, to avoid surprises in your budget.

When bringing an AI platform into your current tech setup, the first step is to pinpoint the challenges you aim to solve. Whether it's enhancing customer interactions or streamlining workflows, having a clear focus will guide your efforts. From there, craft a detailed strategy that includes your objectives, the tools you'll need, and a plan for handling and monitoring data.

Make sure your internal data is in good shape - organized, easy to access, and dependable. This might involve consolidating data sources and putting strong governance practices in place. Don’t overlook ethical considerations, such as addressing bias and ensuring fairness, and think about how these changes might affect your team. Prioritize your use cases, run comprehensive tests on the platform, and prepare a solid change management plan. This thoughtful approach will allow you to integrate AI smoothly while keeping potential risks in check.