Evaluating large language model (LLM) outputs is now a priority for businesses aiming to improve AI performance, cut costs, and ensure compliance. Three platforms stand out for these needs:

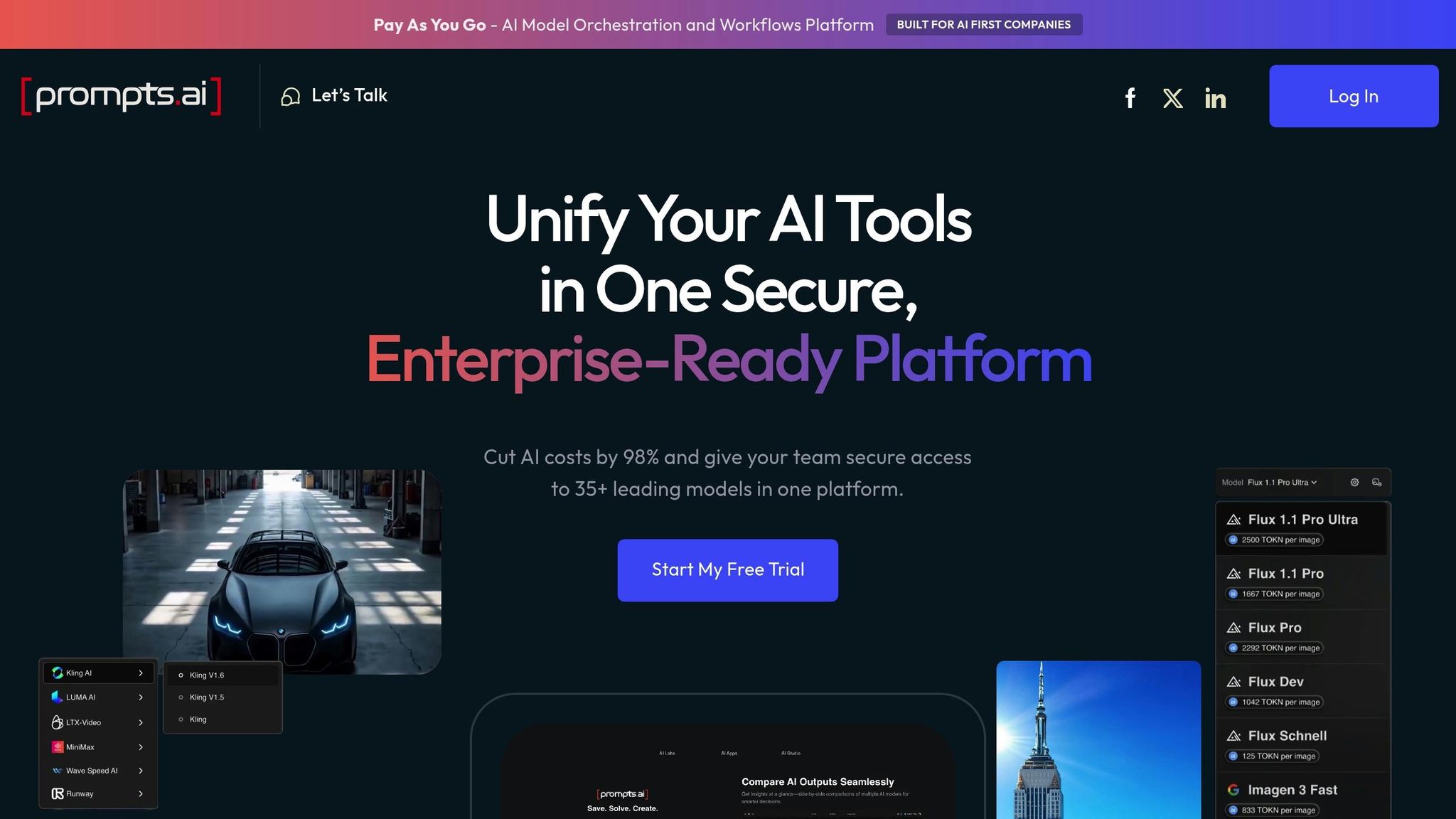

Prompts.ai leads with its robust governance, cost efficiency, and scalability, making it ideal for enterprises managing high-volume AI workflows. Below, we explore how these platforms compare.

| Platform | Strengths | Drawbacks | Best For |
|---|---|---|---|
| Prompts.ai | 35+ LLMs, cost tracking, governance | None noted | Enterprises needing secure AI tools |
| EvalGPT | Open-source, customizable evaluations | Details pending | Organizations focused on LLM testing |
| LLMChecker Pro | Potential for evaluation metrics | Features unconfirmed | Businesses awaiting more details |

For teams seeking secure, cost-effective AI evaluations, Prompts.ai is a top choice. Its TOKN system aligns costs with use, while governance tools ensure compliance.

Prompts.ai is a centralized platform that brings together over 35 leading AI models - including GPT-5, Claude, LLaMA, and Gemini - into a secure and user-friendly interface. It’s designed to help enterprises evaluate and optimize large language models (LLMs) seamlessly. Below, we’ll explore its standout features in interoperability, governance, cost management, and scalability.

Prompts.ai simplifies the complexity of managing AI workflows by consolidating API connections and authentication into one platform. Its advanced API framework integrates directly with CI/CD pipelines and machine learning operations, making it easier to automate the evaluation of LLM outputs during deployment.
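To make the CI/CD idea concrete, here is a minimal sketch of an evaluation gate that a pipeline step could run after collecting evaluation results. It does not use the Prompts.ai API, which this article does not document. The `EvalResult` type, the `gate` function, and the 90% default threshold are all hypothetical names and values chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class EvalResult:
    """One evaluated LLM output (hypothetical shape, not a vendor schema)."""
    prompt_id: str
    passed: bool


def pass_rate(results: list[EvalResult]) -> float:
    """Fraction of evaluation cases that passed (0.0 for an empty run)."""
    if not results:
        return 0.0
    return sum(r.passed for r in results) / len(results)


def gate(results: list[EvalResult], threshold: float = 0.9) -> int:
    """Return a process exit code: 0 lets the pipeline continue, 1 blocks it."""
    rate = pass_rate(results)
    print(f"pass rate: {rate:.1%} (threshold {threshold:.0%})")
    return 0 if rate >= threshold else 1


# Hypothetical results; a real pipeline would load these from its evaluation step.
demo = [EvalResult("q1", True), EvalResult("q2", True), EvalResult("q3", False)]
exit_code = gate(demo, threshold=0.6)
```

In a real job, the script would typically pass `exit_code` to `sys.exit` so that a failing evaluation run stops the deployment.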

Prompts.ai is built with enterprise-grade governance in mind, addressing the stringent security and compliance needs of Fortune 500 companies and regulated industries. It adheres to key standards, including SOC 2 Type II, HIPAA, and GDPR, ensuring data protection at every stage of the evaluation process. The platform officially launched its SOC 2 Type II audit on June 19, 2025, and provides real-time compliance monitoring through its Trust Center (https://trust.prompts.ai/). With full visibility into all AI interactions, organizations can maintain detailed audit trails to meet regulatory requirements.

Using a FinOps-driven approach, Prompts.ai links costs directly to usage, offering real-time dashboards to track spending, forecast monthly expenses, and identify cost-saving opportunities. Its flexible Pay-As-You-Go TOKN credits system eliminates subscription fees, making budgeting straightforward. For example, a customer service LLM handling 10,000 daily queries can see a 30% improvement in accuracy within weeks and a reduction of 3,000 escalations, significantly enhancing operational efficiency.
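As a rough illustration of usage-based forecasting, the sketch below projects month-end spend from the average daily spend so far. It is a simple run-rate calculation, not the platform's actual forecasting method, and the per-query price of $0.002 is an assumed figure used only to make the arithmetic visible.

```python
import calendar
from datetime import date


def forecast_month_spend(daily_spend_usd: list[float], today: date) -> float:
    """Project month-end spend by extending the average daily spend so far."""
    if not daily_spend_usd:
        return 0.0
    days_in_month = calendar.monthrange(today.year, today.month)[1]
    average = sum(daily_spend_usd) / len(daily_spend_usd)
    return average * days_in_month


# Hypothetical figures: 10,000 queries per day at an assumed $0.002 per query.
daily = [10_000 * 0.002] * 12  # twelve days of usage so far
projected = forecast_month_spend(daily, date(2025, 6, 12))
print(f"projected June spend: ${projected:,.2f}")
```

A run-rate projection like this assumes usage stays flat for the rest of the month; teams with weekly or seasonal swings would need a more careful model.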

Prompts.ai is designed to handle high-volume evaluations. It supports batch processing, parallel evaluations, and auto-scaling, allowing it to process thousands - or even millions - of outputs daily. The platform’s interface includes customizable dashboards, role-based access, and exportable results, catering to both technical and non-technical teams. With automated evaluations and instant feedback, development can move up to 10 times faster. Guided workflows and customizable templates also help teams get started without a steep learning curve.
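Batch and parallel evaluation can be sketched with Python's standard library. The example below is a generic pattern rather than a description of how Prompts.ai works internally, and `score_output` is a deliberately trivial placeholder metric standing in for whatever check a team actually uses.

```python
from __future__ import annotations

from concurrent.futures import ThreadPoolExecutor


def score_output(output: str) -> float:
    """Placeholder metric: 1.0 if the output is non-empty and ends with a period."""
    text = output.strip()
    return 1.0 if text and text.endswith(".") else 0.0


def evaluate_batch(outputs: list[str], max_workers: int = 8) -> list[float]:
    """Score outputs concurrently; results come back in input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(score_output, outputs))


batch = ["Refund issued.", "", "Please hold"]
scores = evaluate_batch(batch)
print(scores)
```

Threads pay off mainly when each score involves waiting on a network call, such as a request to a model or judge endpoint. For CPU-heavy metrics, a process pool is usually the better fit.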

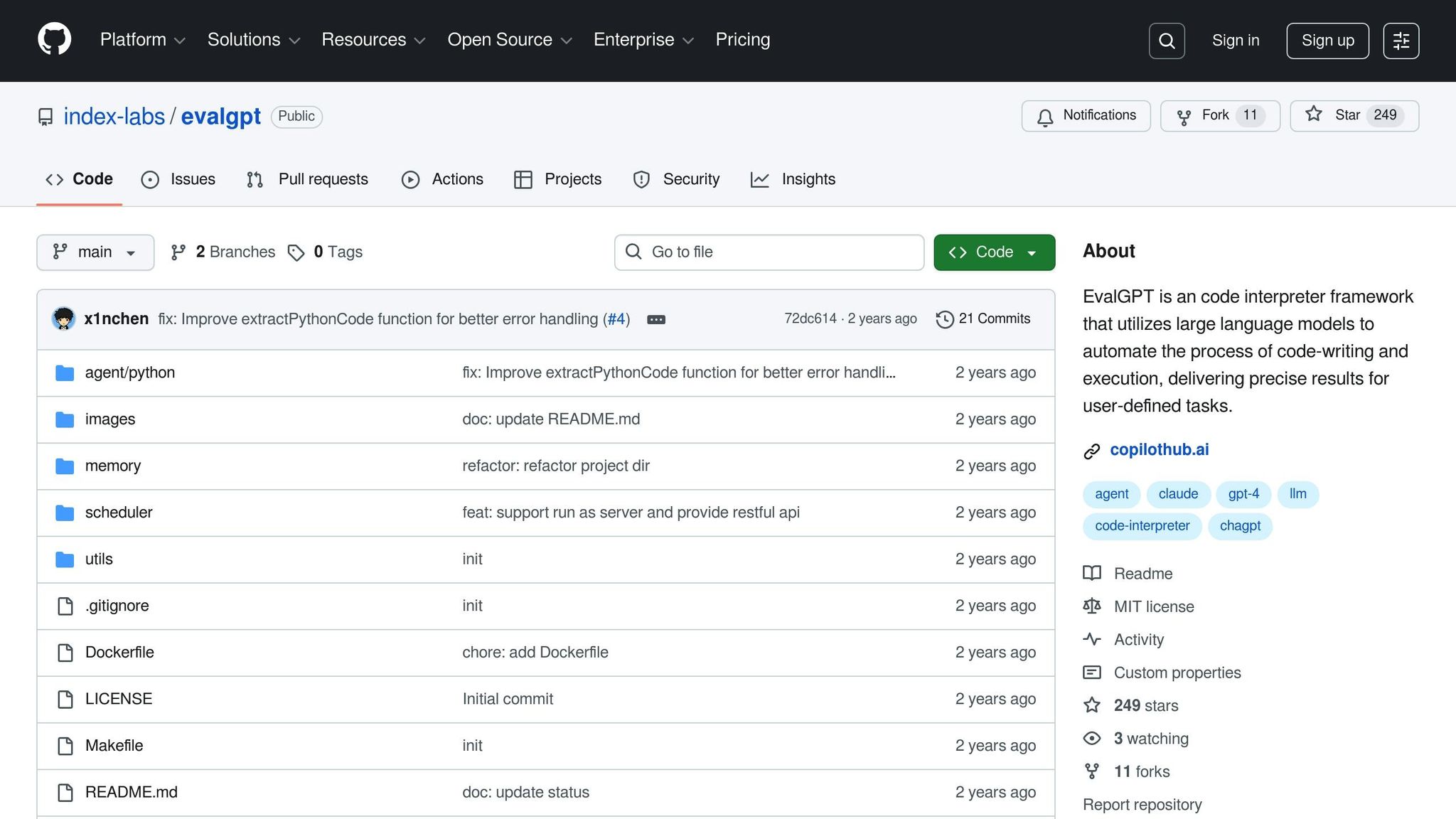

EvalGPT, developed by H2O.ai, is an open-source platform designed to compare the performance of large language models (LLMs) across a variety of tasks. It provides transparency and allows users to create tailored evaluation workflows.

Built with an open-source framework, EvalGPT can be seamlessly integrated into development pipelines, offering organizations the flexibility to adapt it to their specific needs. By utilizing GPT-4 for A/B testing, the platform automates evaluation tasks - such as summarizing financial reports or answering queries - making it a natural fit for existing AI systems. This adaptability enhances its ability to scale and supports extensive customization.
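The A/B pattern described here can be sketched as a pairwise comparison loop. This is not EvalGPT's code or API: the `Judge` signature, `length_judge`, and `ab_test` are hypothetical, and the stand-in judge simply prefers the shorter answer so that the example runs without calling any model.

```python
from __future__ import annotations

from collections import Counter
from typing import Callable

# (prompt, answer_a, answer_b) -> "A", "B", or "tie"
Judge = Callable[[str, str, str], str]


def length_judge(prompt: str, answer_a: str, answer_b: str) -> str:
    """Stand-in judge that prefers the shorter answer; an LLM call would go here."""
    if len(answer_a) == len(answer_b):
        return "tie"
    return "A" if len(answer_a) < len(answer_b) else "B"


def ab_test(cases: list[tuple[str, str, str]], judge: Judge) -> Counter:
    """Tally the judge's verdicts across (prompt, answer_a, answer_b) cases."""
    return Counter(judge(prompt, a, b) for prompt, a, b in cases)


cases = [
    ("Summarize Q1 revenue.", "Revenue rose 8%.", "Revenue went up by eight percent in Q1."),
    ("What is the refund window?", "Thirty days from delivery.", "30 days."),
]
tally = ab_test(cases, length_judge)
print(tally)
```

When the judge is itself a language model, a common precaution is to run each pair twice with the answer order swapped, since model judges can favor whichever answer appears first.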

EvalGPT's design is built to handle scalability while remaining user-friendly. Teams can adjust the evaluation framework to accommodate varying workloads and incorporate custom benchmarks that align with their unique business goals. The platform enables simultaneous processing of multiple models, delivering comparative insights to identify the best-performing LLM for a given application. This approach ensures that evaluation outcomes directly contribute to better performance in real-world production settings.
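A custom benchmark that ranks several models can be as small as the sketch below. It uses exact-match accuracy as the metric, which is an assumption made for illustration. The model names and reference answers are hypothetical, and nothing here reflects EvalGPT's own implementation.

```python
from __future__ import annotations


def exact_match_accuracy(predictions: list[str], references: list[str]) -> float:
    """Share of predictions equal to the reference after trimming and lowercasing."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        return 0.0
    hits = sum(
        p.strip().lower() == r.strip().lower()
        for p, r in zip(predictions, references)
    )
    return hits / len(references)


def rank_models(
    outputs_by_model: dict[str, list[str]], references: list[str]
) -> list[tuple[str, float]]:
    """Return (model, accuracy) pairs sorted from best to worst."""
    scores = {
        name: exact_match_accuracy(preds, references)
        for name, preds in outputs_by_model.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


references = ["paris", "4", "blue"]
outputs_by_model = {
    "model_a": ["Paris", "5", "blue"],
    "model_b": ["Paris", "4", "Blue "],
}
leaderboard = rank_models(outputs_by_model, references)
print(leaderboard)
```

Exact match suits short factual answers. Open-ended tasks such as summarization generally need a softer metric or a judge-based comparison.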

Verified details about LLMChecker Pro are still pending. The platform is expected to offer evaluation metrics across key areas such as performance, compliance, cost management, and scalability. A comprehensive breakdown will follow once those details are confirmed; for now, LLMChecker Pro remains a promising addition to this comparison.

Comparing these platforms side by side highlights their strengths, although some details remain unconfirmed.

Prompts.ai stands out as an enterprise-level AI orchestration platform, integrating over 35 top large language models (LLMs) like GPT-5, Claude, LLaMA, and Gemini into a single, secure system. It operates on a pay-as-you-go TOKN credit system, which can slash AI software costs by up to 98%. The platform also includes a built-in FinOps layer, enabling real-time cost tracking and optimization. For enterprises, its governance features - such as audit trails and enterprise-grade security - are tailored to meet the demands of large companies and regulated industries.

EvalGPT is an open-source tool from H2O.ai for evaluating LLM outputs and supports customizable evaluations, though comprehensive, verified details about its performance and limitations remain unavailable at this time.

LLMChecker Pro has been mentioned as another option, but key information about its capabilities is still pending further confirmation.

The table below summarizes the core strengths and limitations of these platforms, offering insights into their potential roles in enterprise AI evaluation frameworks.

| Platform | Key Strengths | Primary Drawbacks | Best Suited For |
|---|---|---|---|
| Prompts.ai | Access to 35+ leading LLMs, cost-saving TOKN model, real-time FinOps, and strong governance | – | Enterprises needing secure, centralized AI tools |
| EvalGPT | Details pending | Details pending | Organizations exploring evaluation-focused tools |
| LLMChecker Pro | Details pending | Details pending | Companies awaiting more specific feature updates |

These comparisons bring attention to critical factors such as cost efficiency, scalability, and governance when selecting an AI orchestration platform.

Prompts.ai’s pay-as-you-go TOKN credit system aligns costs with actual usage, making it an appealing choice for organizations with fluctuating workloads.

Designed for enterprise needs, Prompts.ai supports seamless scalability while adhering to strict governance standards. These features make it a reliable choice for organizations prioritizing cost control and robust oversight in their AI workflows.

After reviewing the benefits, it’s clear that Prompts.ai stands out as a top choice for LLM output evaluation. Here’s why:

To get started, consider Prompts.ai’s pay-as-you-go plan. It’s a smart way to streamline LLM evaluation and set the stage for AI-driven growth well into 2026 and beyond.

Prompts.ai offers powerful tools to ensure enterprises can securely handle sensitive data with confidence. These include detailed monitoring of AI-generated outputs to verify they meet regulatory standards and governance features that safeguard data privacy and maintain workflow integrity.

By prioritizing the protection of sensitive information, Prompts.ai helps businesses adhere to strict compliance regulations while streamlining their AI-powered processes.

The TOKN credit system offered by Prompts.ai brings a smarter way to manage costs, allowing users to pay only for the services they actually use. Unlike standard subscription plans that charge fixed fees regardless of usage, TOKN credits put you in full control of your spending.

This pay-as-you-go model is perfect for businesses and individuals aiming to make the most of their budgets without sacrificing access to top-tier AI tools. It’s a practical solution for managing expenses while maintaining the performance you need.
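The trade-off between usage-based credits and a flat subscription comes down to a break-even calculation, sketched below. The prices are hypothetical and are not actual TOKN or subscription rates. The function names are likewise illustrative.

```python
def pay_as_you_go_cost(credits_used: int, price_per_credit: float) -> float:
    """Monthly cost when paying only for the credits actually consumed."""
    return credits_used * price_per_credit


def break_even_credits(subscription_fee: float, price_per_credit: float) -> float:
    """Credits per month at which usage-based cost equals a flat subscription fee."""
    if price_per_credit <= 0:
        raise ValueError("price_per_credit must be positive")
    return subscription_fee / price_per_credit


# Hypothetical prices: a $100 flat monthly fee versus $0.25 per credit.
threshold = break_even_credits(100.0, 0.25)
light_month = pay_as_you_go_cost(250, 0.25)
print(f"break-even at {threshold:.0f} credits; a 250-credit month costs ${light_month:.2f}")
```

Below the break-even volume, paying per credit is cheaper than the flat fee. Above it, a fixed plan would cost less, so the comparison depends on how much usage fluctuates from month to month.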

Prompts.ai is designed to adapt effortlessly to your business's evolving AI evaluation demands. Whether your needs expand or contract, the platform offers flexible solutions that align with your requirements, removing the pressure of committing to fixed resources.

Thanks to its integrated FinOps layer, Prompts.ai lets you monitor costs in real-time, fine-tune spending, and enhance your ROI. This approach ensures you maintain control and efficiency, even when usage patterns shift.