Interoperability between AI systems is no longer optional; it is essential. As organizations rely on a growing mix of AI tools, those systems must communicate reliably to stay efficient and scalable. This article explores four protocols (MCP, A2A, ACP, and ANP) that enable AI agents to collaborate in decentralized workflows. Each protocol offers distinct strengths and trade-offs.

Choosing the right protocol depends on your needs. Whether prioritizing speed, security, or scalability, these frameworks provide tailored solutions to unify your AI workflows.

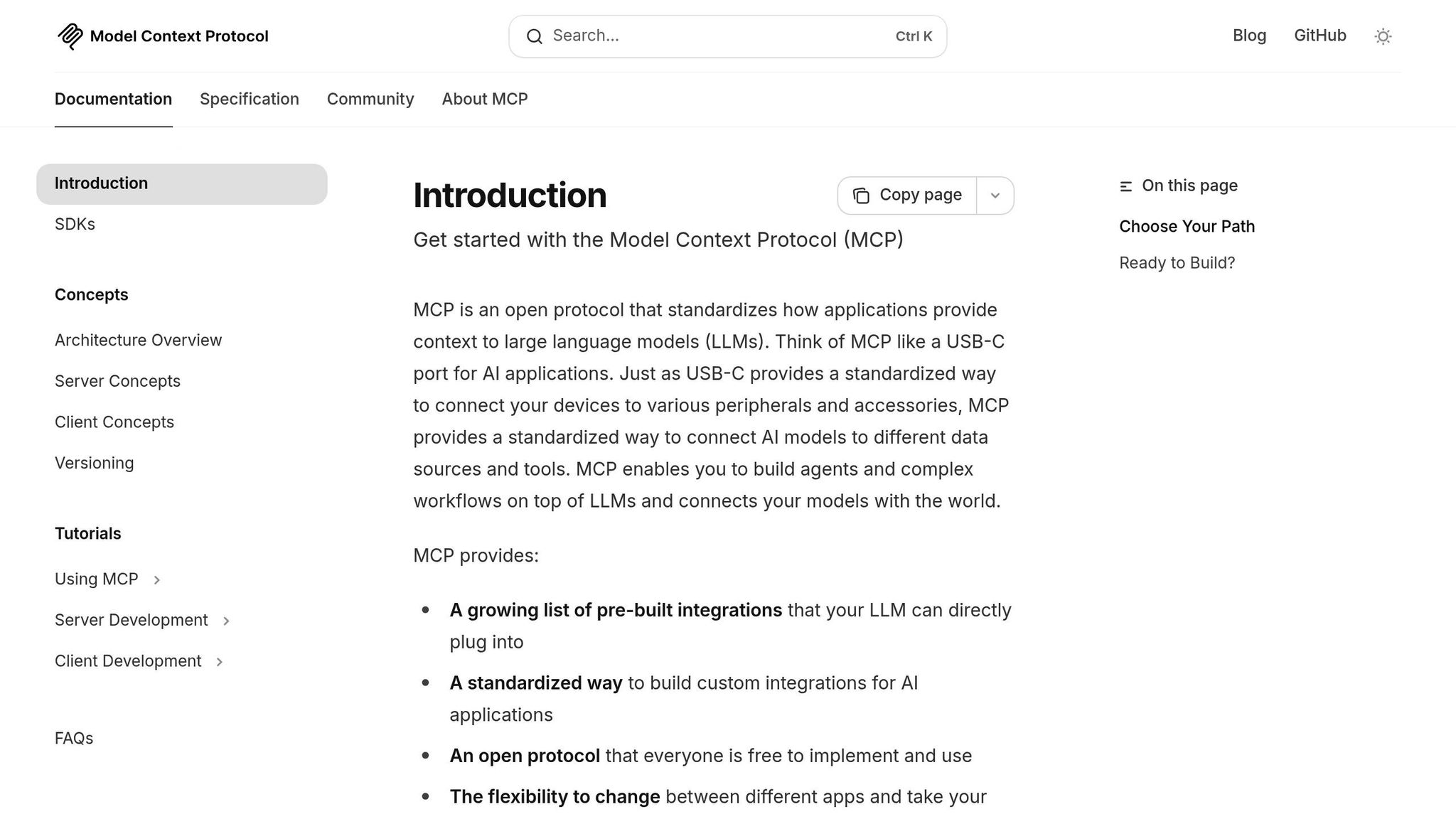

The Model Context Protocol (MCP) is designed to standardize how AI agents collaborate and share context using a peer-to-peer architecture. Unlike centralized systems, MCP empowers agents to operate independently while seamlessly coordinating tasks within decentralized workflows.

MCP eliminates the need for a central authority by leveraging distributed consensus. Each agent manages its own context while synchronizing critical information with peers through structured message exchanges. This ensures workflows remain uninterrupted, even if some nodes go offline.

The protocol supports dynamic agent discovery, where agents broadcast their capabilities and requirements to join workflows automatically. This feature allows MCP to adapt and scale efficiently, particularly in enterprise settings.
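The discovery step described above can be sketched in a few lines. This is a minimal illustration, not part of any MCP specification: the `Announcement` and `PeerDirectory` names and their fields are assumptions made up for the example, and the "broadcast" is simulated by calling `receive` directly.

```python
from dataclasses import dataclass, field


@dataclass
class Announcement:
    """What an agent broadcasts when it joins (hypothetical shape)."""
    agent_id: str
    capabilities: frozenset[str]
    requirements: frozenset[str] = frozenset()


@dataclass
class PeerDirectory:
    """One agent's local view of the peers it has heard from."""
    known: dict[str, Announcement] = field(default_factory=dict)

    def receive(self, announcement: Announcement) -> None:
        # A newer announcement from the same agent replaces the old one.
        self.known[announcement.agent_id] = announcement

    def find(self, capability: str) -> list[str]:
        # IDs of peers advertising the capability, sorted for stable output.
        return sorted(
            a.agent_id for a in self.known.values() if capability in a.capabilities
        )


directory = PeerDirectory()
directory.receive(Announcement("ocr-1", frozenset({"ocr"})))
directory.receive(
    Announcement("nlp-1", frozenset({"summarize", "translate"}), frozenset({"ocr"}))
)
directory.receive(Announcement("nlp-2", frozenset({"summarize"})))

summarizers = directory.find("summarize")
print(summarizers)
```

Keeping the directory local to each agent, rather than in a shared registry, matches the idea that no central authority is needed for agents to find each other.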

Another key feature is context inheritance, which enables agents to pass relevant background information to downstream processes without compromising sensitive data. This selective sharing ensures smooth workflow transitions while maintaining strict data boundaries.
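One way to picture selective sharing is a filter applied before context is handed downstream. The sketch below is illustrative only: the `ContextItem` type, its `sensitive` flag, and the `inherit_context` helper are hypothetical names, not defined by the protocol.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContextItem:
    key: str
    value: str
    sensitive: bool = False  # hypothetical flag: data that must not leave the agent


def inherit_context(items: list[ContextItem], allowed_keys: set[str]) -> dict[str, str]:
    """Build the context handed to a downstream agent.

    An item is passed on only if it is explicitly allowed AND not sensitive.
    """
    return {
        item.key: item.value
        for item in items
        if item.key in allowed_keys and not item.sensitive
    }


upstream = [
    ContextItem("ticket_id", "T-1042"),
    ContextItem("summary", "Customer reports login failure"),
    ContextItem("customer_email", "user@example.com", sensitive=True),
]

# Even though the caller asks for the email, the sensitive flag blocks it.
downstream = inherit_context(upstream, {"ticket_id", "summary", "customer_email"})
print(downstream)
```

Requiring both conditions means a misconfigured allow-list alone cannot leak a field that was marked sensitive at the source.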

Security is at the core of MCP. All communications are protected with end-to-end encryption, using rotating keys and cryptographic signatures to confirm identities and ensure message integrity.
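To make the idea of integrity checks with rotating keys concrete, here is a rough sketch using only the Python standard library. It is a simplification under stated assumptions: it uses HMAC-SHA256 with shared secrets rather than public-key signatures, it does not encrypt anything, and the envelope layout and key IDs are invented for the example.

```python
import hashlib
import hmac
import json


def sign(message: dict, keys: dict[int, bytes], key_id: int) -> dict:
    """Wrap a message in an envelope carrying the key ID and an HMAC-SHA256 tag."""
    body = json.dumps(message, sort_keys=True).encode()
    tag = hmac.new(keys[key_id], body, hashlib.sha256).hexdigest()
    return {"key_id": key_id, "body": body.decode(), "tag": tag}


def verify(envelope: dict, keys: dict[int, bytes]) -> bool:
    """Recompute the tag with the key the sender named; reject unknown (retired) keys."""
    key = keys.get(envelope["key_id"])
    if key is None:
        return False
    expected = hmac.new(key, envelope["body"].encode(), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, envelope["tag"])


keys = {1: b"old-shared-secret", 2: b"new-shared-secret"}
envelope = sign({"task": "summarize", "doc": 7}, keys, key_id=2)

ok = verify(envelope, keys)
tampered = verify({**envelope, "body": envelope["body"].replace("7", "8")}, keys)
rotated_out = verify(envelope, {1: keys[1]})  # key 2 has been retired
print(ok, tampered, rotated_out)
```

Carrying a key ID in the envelope is what lets keys rotate: receivers can hold the current and previous key for a while, then drop the old one.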

MCP enforces role-based access controls, allowing organizations to define agent permissions for initiating workflows, accessing data, or modifying shared contexts. These permissions are backed by distributed ledger technology, creating an immutable audit trail of all interactions and data exchanges.
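The combination of role checks and a tamper-evident audit trail can be sketched as follows. This is not a distributed ledger: it is a single-process hash chain that shows the same underlying idea, where each entry commits to the one before it. The role names, actions, and class names are assumptions for illustration.

```python
import hashlib
import json

ROLE_PERMISSIONS = {  # hypothetical roles and actions
    "orchestrator": {"start_workflow", "read_data", "modify_context"},
    "worker": {"read_data"},
}


class AuditLog:
    """Append-only log in which each entry commits to the hash of the previous one."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, record: dict) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        payload = json.dumps(record, sort_keys=True) + prev_hash
        entry_hash = hashlib.sha256(payload.encode()).hexdigest()
        self.entries.append({"record": record, "prev": prev_hash, "hash": entry_hash})

    def is_intact(self) -> bool:
        prev_hash = "0" * 64
        for entry in self.entries:
            payload = json.dumps(entry["record"], sort_keys=True) + prev_hash
            recomputed = hashlib.sha256(payload.encode()).hexdigest()
            if entry["prev"] != prev_hash or recomputed != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


def authorize(role: str, action: str, log: AuditLog) -> bool:
    """Check the role's permissions and record the decision either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    log.append({"role": role, "action": action, "allowed": allowed})
    return allowed


log = AuditLog()
worker_can_modify = authorize("worker", "modify_context", log)
orchestrator_can_modify = authorize("orchestrator", "modify_context", log)
intact_before = log.is_intact()

log.entries[0]["record"]["allowed"] = True  # simulate tampering with history
intact_after = log.is_intact()
print(worker_can_modify, orchestrator_can_modify, intact_before, intact_after)
```

Logging denied requests as well as granted ones is a deliberate choice here, since refused attempts are often the most useful entries during an investigation.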

The protocol also employs a zero-trust verification model, requiring agents to continuously authenticate their identity and authorization levels. This continuous re-verification limits unauthorized access, even if an agent is compromised, and helps keep the decentralized network secure and functional.

MCP is built to scale effectively. It clusters related agents into local groups that connect through designated gateways, reducing communication overhead while maintaining global workflow visibility. During high-demand periods, MCP prioritizes essential workflow operations by temporarily reducing non-critical synchronization.

With asynchronous processing, agents can continue working on local tasks while awaiting responses from remote peers. This prevents bottlenecks and ensures that temporary delays or downtime don’t disrupt overall workflow progress.
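The pattern of continuing local work while a remote reply is outstanding maps naturally onto Python's `asyncio`. In this minimal sketch, `remote_peer` is a stand-in for a slow remote agent and the delays are arbitrary; only the structure matters.

```python
import asyncio


async def remote_peer(delay: float) -> str:
    """Stand-in for a slow remote agent (hypothetical)."""
    await asyncio.sleep(delay)
    return "remote-result"


async def local_work(events: list[str]) -> None:
    for step in range(3):
        await asyncio.sleep(0.01)
        events.append(f"local-step-{step}")


async def run_agent() -> list[str]:
    events: list[str] = []
    # Send the request without blocking on the answer.
    pending = asyncio.create_task(remote_peer(0.1))
    # Keep working on local tasks in the meantime.
    await local_work(events)
    # Collect the reply only at the point where it is actually needed.
    events.append(await pending)
    return events


events = asyncio.run(run_agent())
print(events)
```

A production agent would likely wrap the final `await` in `asyncio.wait_for` so that a peer that never answers cannot stall the workflow indefinitely.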

MCP is designed for easy integration through lightweight, standardized APIs that require minimal changes to existing infrastructure. Organizations can adopt the protocol incrementally, starting with basic agent communications and gradually expanding to more complex workflows.

The protocol also includes backward compatibility mechanisms, allowing legacy systems to participate in MCP workflows through adapter interfaces. These adapters translate proprietary formats into MCP’s standardized structures, enabling businesses to maximize the value of their current AI investments while transitioning to a fully interoperable system.

Configuration management is streamlined with declarative templates that define workflow patterns, agent roles, and communication needs. These templates can be version-controlled and reused across projects, simplifying the implementation of decentralized workflows and speeding up deployment for new AI use cases.
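A declarative template might look something like the structure below, with a small validator that can run in CI before a template is reused. The field names (`roles`, `steps`, `after`) are assumptions chosen for the example and are not taken from any specification.

```python
# Hypothetical template shape; the field names are illustrative only.
TEMPLATE = {
    "workflow": "invoice-processing",
    "version": 2,
    "roles": {"extractor": ["ocr"], "reviewer": ["summarize"]},
    "steps": [
        {"name": "extract", "role": "extractor"},
        {"name": "review", "role": "reviewer", "after": ["extract"]},
    ],
}


def validate(template: dict) -> list[str]:
    """Return a list of problems; an empty list means the template is consistent."""
    errors: list[str] = []
    seen: set[str] = set()
    for step in template["steps"]:
        if step["role"] not in template["roles"]:
            errors.append(f"step {step['name']!r} uses undefined role {step['role']!r}")
        for dependency in step.get("after", []):
            if dependency not in seen:
                errors.append(
                    f"step {step['name']!r} depends on unknown or later step {dependency!r}"
                )
        seen.add(step["name"])
    return errors


problems = validate(TEMPLATE)

# A broken variant: undefined role and a dependency on a step that does not exist.
broken = {**TEMPLATE, "steps": [{"name": "review", "role": "auditor", "after": ["extract"]}]}
broken_problems = validate(broken)
print(problems, broken_problems)
```

Because the template is plain data, it can be stored as JSON or YAML under version control and diffed like any other file.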

Next, we’ll explore the Agent-to-Agent Protocol (A2A) for deeper insights into decentralized coordination.

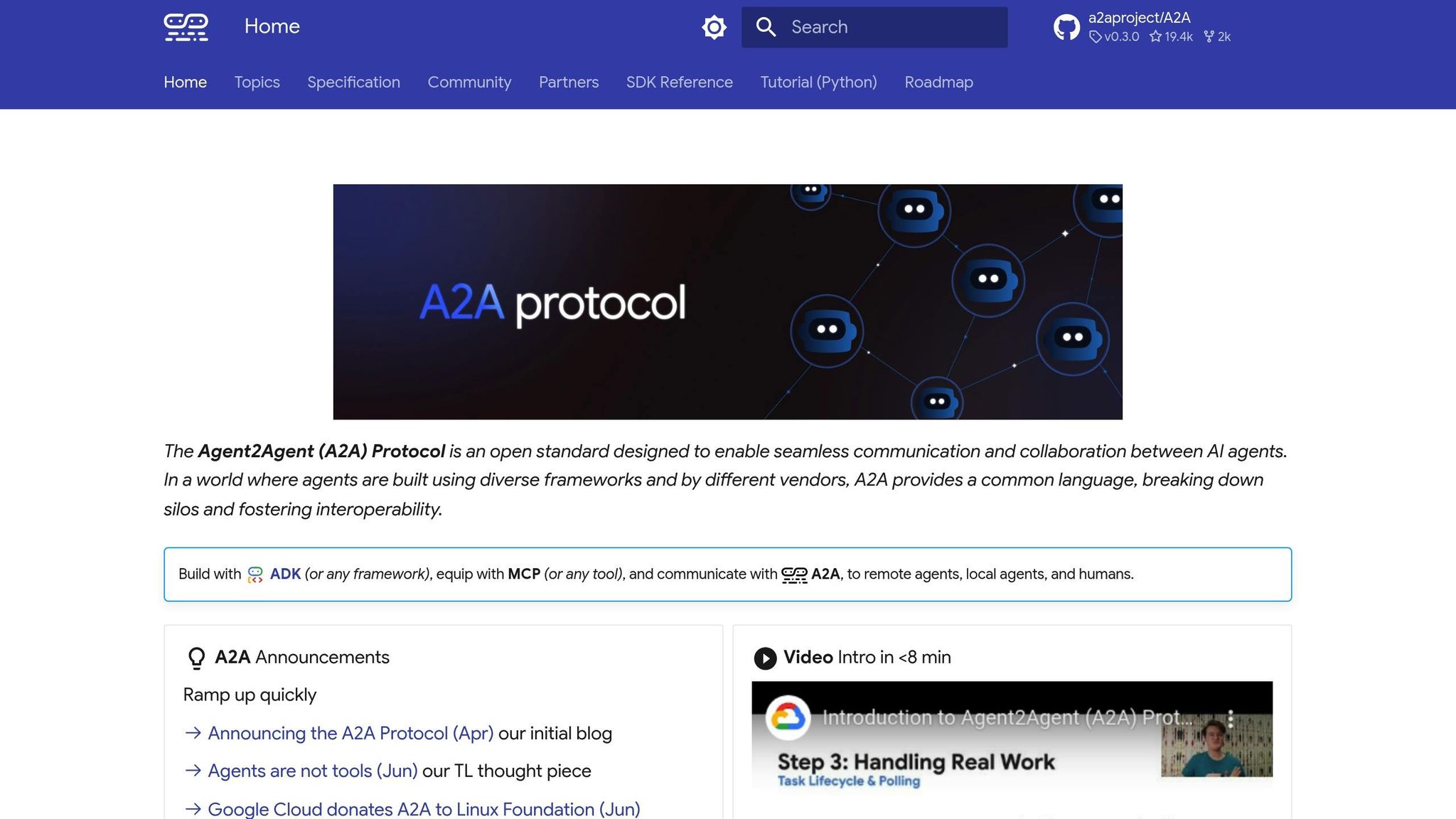

The Agent-to-Agent Protocol (A2A) enables AI agents to connect directly with each other, bypassing shared context pools. This setup facilitates peer-to-peer task negotiation, data sharing, and coordination through direct agreements. Below, we explore its key features: decentralization, security, scalability, and integration challenges.

A2A relies on a mesh network architecture, where each agent maintains direct links with multiple peers. This structure provides redundancy, ensuring smooth communication even if some agents go offline. A distributed routing system is in place to automatically find alternative paths when primary connections fail.
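The rerouting behavior described above can be illustrated with a breadth-first search over the mesh that skips offline agents. This is a sketch of the general technique, not the routing algorithm of any particular implementation; the topology and the `find_route` helper are made up for the example.

```python
from collections import deque
from typing import Optional


def find_route(
    links: dict[str, set[str]], start: str, goal: str, offline: set[str]
) -> Optional[list[str]]:
    """Breadth-first search for a shortest path that avoids offline agents."""
    if start in offline or goal in offline:
        return None
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        # Sorting makes the choice between equally short paths deterministic.
        for peer in sorted(links.get(path[-1], set())):
            if peer not in visited and peer not in offline:
                visited.add(peer)
                queue.append(path + [peer])
    return None


mesh = {
    "A": {"B", "C"},
    "B": {"A", "D"},
    "C": {"A", "E"},
    "D": {"B", "F"},
    "E": {"C", "F"},
    "F": {"D", "E"},
}

primary = find_route(mesh, "A", "F", offline=set())
fallback = find_route(mesh, "A", "F", offline={"B"})
cut_off = find_route(mesh, "A", "F", offline={"B", "C"})
print(primary, fallback, cut_off)
```

The third call shows the limit of redundancy: once every neighbor of `A` is offline, no alternative path exists and the function returns `None`.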

The protocol also supports autonomous task delegation, allowing agents to assign tasks independently based on their capabilities and workload. For instance, when given a complex task, an agent can break it into smaller components and contract with specialized peers for specific parts. Agents continuously share information about their processing capacity and queue status with nearby peers, enabling dynamic redistribution of tasks to less busy nodes. This results in a decentralized workflow with no central control.
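A simple version of load-aware delegation is to give each subtask to the least busy peer that advertises the required capability. The sketch below assumes peers have already shared their queue lengths; `PeerStatus` and `delegate` are hypothetical names for illustration.

```python
from dataclasses import dataclass


@dataclass
class PeerStatus:
    agent_id: str
    capabilities: set[str]
    queue_length: int  # most recent load figure the peer has shared


def delegate(subtasks: dict[str, str], peers: list[PeerStatus]) -> dict[str, str]:
    """Assign each subtask to the least-loaded peer that has the required capability."""
    assignments: dict[str, str] = {}
    for name, capability in subtasks.items():
        capable = [p for p in peers if capability in p.capabilities]
        if not capable:
            raise LookupError(f"no peer offers {capability!r}")
        # Ties on queue length are broken by agent ID so the result is deterministic.
        chosen = min(capable, key=lambda p: (p.queue_length, p.agent_id))
        chosen.queue_length += 1  # account for the work just handed over
        assignments[name] = chosen.agent_id
    return assignments


peers = [
    PeerStatus("ocr-1", {"ocr"}, queue_length=4),
    PeerStatus("ocr-2", {"ocr"}, queue_length=1),
    PeerStatus("nlp-1", {"summarize"}, queue_length=0),
]
plan = delegate(
    {"scan-page-1": "ocr", "scan-page-2": "ocr", "write-summary": "summarize"},
    peers,
)
print(plan)
```

Updating the local load estimate after each assignment keeps one agent from flooding a peer between status updates.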

These decentralized features work hand-in-hand with robust security measures to strengthen the system.

A2A ensures secure communication through mutual authentication, using cryptographic certificates and challenge-response protocols. This creates a trusted network where agents only interact with verified peers.
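The challenge-response part of mutual authentication can be sketched with the standard library. As a simplifying assumption, this example uses a pre-shared secret and HMAC in place of certificates, so it shows the shape of the exchange rather than a certificate-based handshake.

```python
import hashlib
import hmac
import secrets


def make_challenge() -> bytes:
    """A fresh random nonce, so an old response cannot be replayed."""
    return secrets.token_bytes(32)


def respond(challenge: bytes, secret: bytes) -> bytes:
    """Prove knowledge of the secret without sending the secret itself."""
    return hmac.new(secret, challenge, hashlib.sha256).digest()


def check(challenge: bytes, response: bytes, secret: bytes) -> bool:
    return hmac.compare_digest(respond(challenge, secret), response)


def mutual_auth(secret_a: bytes, secret_b: bytes) -> bool:
    """Each side challenges the other; both checks must pass."""
    challenge_from_a, challenge_from_b = make_challenge(), make_challenge()
    b_proves = check(challenge_from_a, respond(challenge_from_a, secret_b), secret_a)
    a_proves = check(challenge_from_b, respond(challenge_from_b, secret_a), secret_b)
    return a_proves and b_proves


trusted = mutual_auth(b"shared-secret", b"shared-secret")
impostor = mutual_auth(b"shared-secret", b"wrong-secret")
print(trusted, impostor)
```

In a certificate-based deployment, `respond` would be a signature with the agent's private key and `check` a verification against the public key in its certificate.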

Each agent-to-agent connection is protected by isolated encryption, with its own encryption keys and access permissions. Because nothing is shared between connections, a breach in one does not cascade to the rest of the network.

To maintain data integrity, the protocol includes transaction-level verification. Each message is accompanied by cryptographic hashes, allowing recipients to confirm that the data hasn’t been altered during transmission. If an integrity check fails, the connection is terminated, and network administrators are alerted immediately.
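The verify-or-terminate behavior can be sketched as below. Note one assumption: a bare SHA-256 digest only detects accidental or unauthenticated changes, so this sketch presumes the message travels over an already authenticated channel. The `Connection` class and its `alerts` list are stand-ins invented for the example.

```python
import hashlib


class IntegrityError(Exception):
    """Raised when a received payload does not match its digest."""


def package(payload: bytes) -> dict:
    return {"payload": payload, "sha256": hashlib.sha256(payload).hexdigest()}


class Connection:
    def __init__(self) -> None:
        self.open = True
        self.alerts: list[str] = []  # stand-in for notifying administrators

    def receive(self, message: dict) -> bytes:
        digest = hashlib.sha256(message["payload"]).hexdigest()
        if digest != message["sha256"]:
            # Close the connection and raise an alert before rejecting the message.
            self.open = False
            self.alerts.append("integrity check failed; connection closed")
            raise IntegrityError("payload digest mismatch")
        return message["payload"]


conn = Connection()
good = conn.receive(package(b"result: 42"))

corrupted = package(b"result: 42")
corrupted["payload"] = b"result: 43"  # altered after the digest was computed
try:
    conn.receive(corrupted)
except IntegrityError:
    pass

print(good, conn.open, conn.alerts)
```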

To manage growth efficiently, A2A employs hierarchical clustering and connection pooling. Agents are grouped into clusters that share communication channels. Gateway agents handle interactions between clusters, reducing the number of direct connections each agent needs to maintain while still enabling global coordination.
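A quick calculation shows why clustering reduces connection counts. The model here is an assumption for illustration: agents are fully connected inside each cluster, each cluster has one gateway, and gateways are fully connected to each other.

```python
def full_mesh_links(n: int) -> int:
    """Number of links when every one of n nodes connects to every other."""
    return n * (n - 1) // 2


def clustered_links(n_agents: int, cluster_size: int) -> int:
    """Links with full meshes inside clusters plus a full mesh among gateways.

    Assumes cluster_size divides n_agents evenly and one gateway per cluster.
    """
    clusters = n_agents // cluster_size
    return clusters * full_mesh_links(cluster_size) + full_mesh_links(clusters)


flat = full_mesh_links(1000)
clustered = clustered_links(1000, 20)
print(flat, clustered)
```

Under these assumptions, 1,000 agents need 499,500 links in a flat full mesh but only 10,725 when grouped into 50 clusters of 20, roughly a 47-fold reduction.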

The protocol supports elastic scaling, allowing new agents to join the network through introductions from existing peers. When demand rises, additional agents can be deployed and integrated into the network within minutes, ready to take on delegated tasks.

These scalability features align seamlessly with the protocol’s broader interoperability goals.

Implementing A2A comes with technical challenges, particularly in managing multiple simultaneous connections and enabling autonomous peer negotiations. Organizations need to deploy connection management tools to monitor network health, optimize routing, and ensure failover mechanisms are in place to maintain connectivity.

Network topology planning is also crucial. To prevent communication bottlenecks, organizations must design agent deployments carefully, modeling workflow patterns and strategically placing agents to reduce routing delays.

Although A2A introduces complexity, its direct communication model eliminates single points of failure and provides the adaptability needed for dynamic, self-organizing AI systems. This makes it a powerful solution for achieving resilient and autonomous workflows.

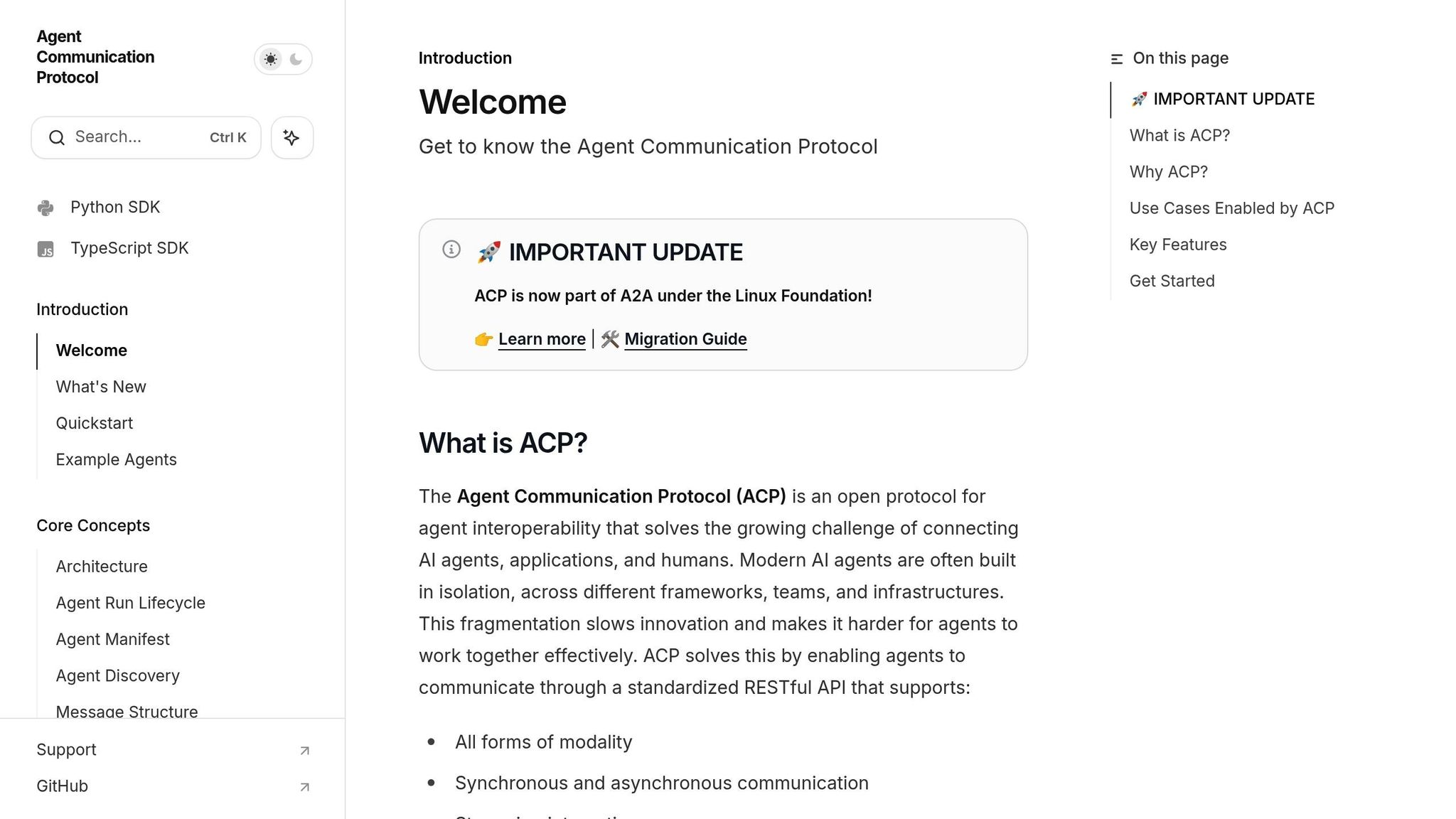

The Agent Communication Protocol (ACP) strikes a balance between centralized and decentralized approaches, offering a hybrid model tailored for workflows requiring both flexibility and oversight. It combines centralized coordination with distributed task execution, using lightweight coordination hubs to manage communication while allowing agents to operate independently. This setup ensures efficient oversight without compromising the autonomy of agents.

ACP employs federated coordination, where multiple hubs collaborate to oversee distinct workflow domains. Each hub manages specific tasks or regions and can seamlessly transfer responsibilities to other hubs when needed. This prevents any single hub from becoming a bottleneck while retaining the benefits of centralized coordination.

The protocol enables selective autonomy, allowing agents to independently handle routine tasks while reserving coordination for more complex or resource-intensive operations. This autonomy ensures agents can continue functioning even when temporarily disconnected from coordination hubs.

With dynamic hub assignment, agents are routed to the most suitable hub based on factors like workload, location, and task requirements. If a hub becomes overloaded or goes offline, agents are seamlessly redirected to alternative hubs. Robust access controls and encryption ensure these transitions remain secure.
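Dynamic hub assignment can be approximated with a scoring rule: prefer an online hub in the agent's region, then the one with the lowest load. The `Hub` fields and the ordering of the criteria are assumptions made for this sketch.

```python
from dataclasses import dataclass


@dataclass
class Hub:
    hub_id: str
    region: str
    capacity: int
    assigned: int = 0
    online: bool = True

    @property
    def load(self) -> float:
        return self.assigned / self.capacity


def assign_hub(agent_region: str, hubs: list[Hub]) -> Hub:
    """Pick an online hub with spare capacity: same region first, then lowest load."""
    candidates = [h for h in hubs if h.online and h.assigned < h.capacity]
    if not candidates:
        raise RuntimeError("no hub available")
    chosen = min(candidates, key=lambda h: (h.region != agent_region, h.load, h.hub_id))
    chosen.assigned += 1
    return chosen


hubs = [
    Hub("eu-1", "eu", capacity=10, assigned=9),
    Hub("eu-2", "eu", capacity=10, assigned=2),
    Hub("us-1", "us", capacity=10, assigned=0),
]

first = assign_hub("eu", hubs).hub_id   # least-loaded hub in the region
hubs[1].online = False                  # eu-2 goes offline
second = assign_hub("eu", hubs).hub_id  # falls back to the other regional hub
hubs[0].online = False                  # eu-1 goes offline too
third = assign_hub("eu", hubs).hub_id   # redirected to another region
print(first, second, third)
```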

Security is a cornerstone of ACP, starting with role-based access control managed through coordination hubs. Each hub maintains detailed permission matrices, specifying which resources agents can access, their communication permissions, and the tasks they are authorized to perform. This centralized management enforces consistent security policies across the network.

The protocol secures communication using encrypted queues, where messages are protected with hub-specific encryption keys. These queues include tamper detection mechanisms, so that any altered message is flagged and retransmitted.

Additionally, audit trails are automatically generated for all agent interactions. These logs, distributed across multiple hubs, provide a complete record of actions, ensuring accountability and making it easier to identify unusual patterns or investigate potential security incidents.

ACP is designed to scale efficiently through hub clustering, which groups coordination hubs to share processing loads. When activity increases, new hubs can be added to existing clusters within hours, and the protocol automatically redistributes agent assignments to maintain balanced workloads.

The system also supports tiered coordination, with regional hubs managing local agents and master hubs overseeing inter-regional coordination. This hierarchical structure ensures global scalability while maintaining local responsiveness, reducing latency and improving performance.

Resource pooling allows hubs to share computational resources. During peak demand, overloaded hubs can borrow capacity from less busy ones, ensuring consistent response times even during spikes in activity.

Implementing ACP requires thoughtful hub architecture planning to determine the ideal number and placement of coordination hubs. Organizations must consider workflow patterns, geographic distribution, and future growth to avoid performance bottlenecks.

Managing agent registration is another challenge, as each agent must be correctly configured to interact with designated coordination hubs. Robust provisioning systems are essential for onboarding agents, assigning permissions, and managing hub reassignments when network topologies change.

Finally, cross-hub synchronization is critical to ensure consistency when agents move between hubs. While this adds operational overhead, it is necessary to maintain data integrity and prevent conflicts in distributed workflows.

Despite these complexities, ACP provides a practical middle ground, offering the control and visibility organizations need while supporting flexible, distributed operations for agents.

The Agent Network Protocol (ANP) takes decentralization to its peak, creating a fully distributed mesh network that eliminates the need for centralized coordination. Unlike protocols that depend on hubs or brokers, ANP establishes a peer-to-peer system where every agent acts as both a participant and a coordinator, ensuring maximum resilience and autonomy.

ANP achieves full decentralization through mesh networking, where each agent connects directly to multiple others. These multiple links provide redundancy, and each agent maintains a local routing table that is updated regularly through broadcasts, so traffic can be rerouted and the network remains operational even during outages.
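Routing tables kept up to date by broadcasts resemble classic distance-vector routing. The sketch below shows a single merge step under that assumption; it is not taken from the ANP specification, and it deliberately omits route withdrawal and expiry, which a real implementation would need.

```python
def merge_broadcast(
    table: dict[str, tuple[str, int]], neighbor: str, advertised: dict[str, int]
) -> bool:
    """Merge a neighbor's advertised distances into the local routing table.

    table maps destination -> (next hop, hop count). Returns True if anything changed.
    """
    changed = False
    for destination, hops in advertised.items():
        candidate = hops + 1  # one extra hop to reach the neighbor itself
        current = table.get(destination)
        if current is None or candidate < current[1]:
            table[destination] = (neighbor, candidate)
            changed = True
    return changed


# Local agent starts out knowing only its direct neighbors B and C.
table = {"B": ("B", 1), "C": ("C", 1)}

changed_b = merge_broadcast(table, "B", {"B": 0, "D": 1, "E": 3})
changed_c = merge_broadcast(table, "C", {"C": 0, "E": 1, "D": 2})
changed_again = merge_broadcast(table, "C", {"C": 0, "E": 1, "D": 2})
print(table, changed_b, changed_c, changed_again)
```

After both broadcasts, the agent reaches `D` through `B` and `E` through `C`, and a repeated broadcast with no new information leaves the table unchanged.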

The protocol’s self-organizing capabilities allow it to adapt to changes seamlessly. When a new agent joins, it announces its presence and capabilities to nearby peers, which then share this information across the network. Similarly, if an agent leaves or fails, the system automatically reroutes communications and redistributes tasks among the remaining agents. This dynamic adaptability solidifies ANP’s ability to handle disruptions effectively.

ANP employs a distributed trust model, where agents verify each other using cryptographic signatures and reputation scores. This creates a self-regulating system that isolates malicious or unreliable agents over time.
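Reputation scoring can be as simple as an exponential moving average of interaction outcomes with a cutoff below which a peer is ignored. The threshold, weight, and initial score below are arbitrary assumptions chosen for the example.

```python
class ReputationTable:
    """Per-peer score in [0, 1], updated as an exponential moving average of outcomes."""

    def __init__(self, threshold: float = 0.3, weight: float = 0.5, initial: float = 0.5) -> None:
        self.threshold = threshold
        self.weight = weight
        self.initial = initial
        self.scores: dict[str, float] = {}

    def record(self, peer: str, success: bool) -> float:
        previous = self.scores.get(peer, self.initial)
        outcome = 1.0 if success else 0.0
        self.scores[peer] = (1 - self.weight) * previous + self.weight * outcome
        return self.scores[peer]

    def is_trusted(self, peer: str) -> bool:
        # Unknown peers start at the initial score rather than being rejected outright.
        return self.scores.get(peer, self.initial) >= self.threshold


reputation = ReputationTable()
for _ in range(3):
    reputation.record("reliable-agent", success=True)
for _ in range(2):
    reputation.record("flaky-agent", success=False)

print(reputation.scores)
print(reputation.is_trusted("reliable-agent"), reputation.is_trusted("flaky-agent"))
```

Because the average weights recent behavior more heavily, an agent that recovers can regain trust over time, while a consistently failing one stays isolated.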

Key security measures include end-to-end encryption, secure key exchanges, and digital signatures to ensure authenticity and prevent tampering or impersonation. Additionally, blockchain-based identity management provides an immutable record of agent credentials and permissions. This approach removes the need for centralized certificate authorities and makes agent identities far harder to forge or duplicate, further strengthening the network’s integrity.

ANP addresses scalability by forming interconnected clusters. These clusters dynamically balance workloads, with local communications staying within clusters and inter-cluster messages routed through designated gateways. This structure ensures the network can grow without compromising efficiency.

Deploying ANP involves complexities, particularly in peer discovery, where agents must locate and connect with suitable partners. While bootstrap servers or multicast protocols can initiate connections, the network becomes self-sustaining once a critical mass of agents is active.

Managing network topology is another hurdle. Administrators need to monitor connection patterns to maintain redundancy while avoiding excessive overhead. Troubleshooting can also be more challenging due to ANP’s distributed nature. Issues may appear differently in various parts of the network, requiring specialized tools and diagnostics to pinpoint and resolve problems.

Despite these challenges, ANP’s resilience and autonomy make it the go-to choice for organizations needing decentralized operations. It’s particularly suited for scenarios demanding censorship resistance, high uptime, or the ability to handle network partitions effectively.

Interoperability protocols come with their own sets of strengths and weaknesses, making the choice of the right one a balancing act. Key considerations include how quickly the protocol can be deployed, its operational demands, and the effort required for long-term upkeep.

Ultimately, selecting the best protocol depends on your priorities - whether you need rapid deployment, decentralized functionality, or a secure and cost-conscious solution for the long haul. This comparison outlines the trade-offs, paving the way for deeper insights in the Conclusion.

Selecting the most suitable agent interoperability protocol hinges on your specific operational requirements. Each protocol comes with its own set of strengths and limitations, which should be carefully matched to the demands of your environment.

Standardized interoperability plays a critical role in decentralized workflows, as it directly impacts how efficiently AI agents can work together across distributed systems.

For instance, MCP is ideal for rapid prototyping and proof-of-concept projects. However, its centralized nature might pose challenges when scaling to larger production environments. On the other hand, A2A excels in scenarios where speed is essential, thanks to its low latency. That said, managing the increasing complexity of the network requires careful infrastructure oversight.

If your focus is on balancing scalability and security while managing multi-department workflows, ACP offers a practical solution. Its design simplifies operations in complex environments, making it fit for general-purpose deployments. Meanwhile, ANP shines in situations where uninterrupted operation is non-negotiable. Its mesh networking ensures resilience by maintaining functionality even when individual components fail, making it a strong choice for high-resilience applications.

Ultimately, these protocols provide a range of options to align with diverse workflow needs. Organizations should carefully evaluate their operational goals, scalability requirements, and tolerance for complexity to choose the protocol that best supports their decentralized workflows.

Choosing the right interoperability protocol hinges on understanding your organization's workflow requirements and the complexity of the tasks at hand. If your workflows demand real-time communication and secure coordination between AI agents operating across various platforms, A2A (Agent-to-Agent) protocols are a strong fit. These protocols enable smooth collaboration, making them ideal for dynamic and interactive processes.

For workflows that involve scalable, interconnected systems with multiple agents handling complex tasks, MCP (Model Context Protocol) offers a more structured approach. It integrates tools, data, and processes into a cohesive framework, ensuring efficient coordination in more intricate setups.

When deciding, consider whether your workflows emphasize immediate interaction or require a systematic integration of resources. Aligning your protocol choice with these priorities will help you achieve seamless and effective operations.

The primary security risks tied to A2A and MCP stem from vulnerabilities like command injection, prompt injection, server-side request forgery (SSRF), and weak authentication. These flaws can leave decentralized workflows open to unauthorized access and potential data breaches.

To address these challenges, organizations should prioritize strong authentication methods, utilize encrypted communication channels, and enforce rigorous input validation to prevent malicious commands. Additionally, defining clear trust boundaries and conducting regular security audits can strengthen the protocols' defenses and maintain adherence to security standards in decentralized systems.

Integrating A2A (Agent-to-Agent) and MCP (Model Context Protocol) into existing AI systems can be a challenging yet worthwhile endeavor. These protocols are designed to enable smooth collaboration among decentralized AI agents, but implementing them often demands considerable changes to current system architectures to ensure compatibility and efficient communication.

Some of the main hurdles include:

Successfully navigating these challenges calls for a blend of technical know-how, strong security measures, and a commitment to developing unified standards that streamline integration efforts.