Managing multiple large language models (LLMs) like GPT-4, Claude, Gemini, and LLaMA can quickly become a logistical and financial burden. From redundant API calls to unpredictable token costs, these inefficiencies disrupt workflows and inflate budgets. Platforms like Prompts.ai simplify this process by consolidating access to 35+ LLMs, offering detailed cost tracking, real-time analytics, and automated model routing. Here’s a quick breakdown of the key platforms covered:

| Feature | Prompts.ai | Platform B | Platform C |
|---|---|---|---|
| Token Spend Tracking | Detailed, real-time by agent/project | Basic, real-time metrics | Application-level summaries |
| Real-Time Analytics | Spending trends, cost insights | Token usage only | Operational health metrics |
| Automated Model Routing | Cost-aware, adaptive routing | Not available | Performance-focused routing |
| Governance Controls | Spending limits, audit trails | Limited | Basic controls |
| Focus Area | Cost and workflow optimization | Finance teams | Development workflows |

For teams managing multiple LLMs, Prompts.ai offers the most granular cost tracking, the only cost-aware routing, and the strongest governance controls of the three platforms, making it the go-to choice for scalable AI operations.

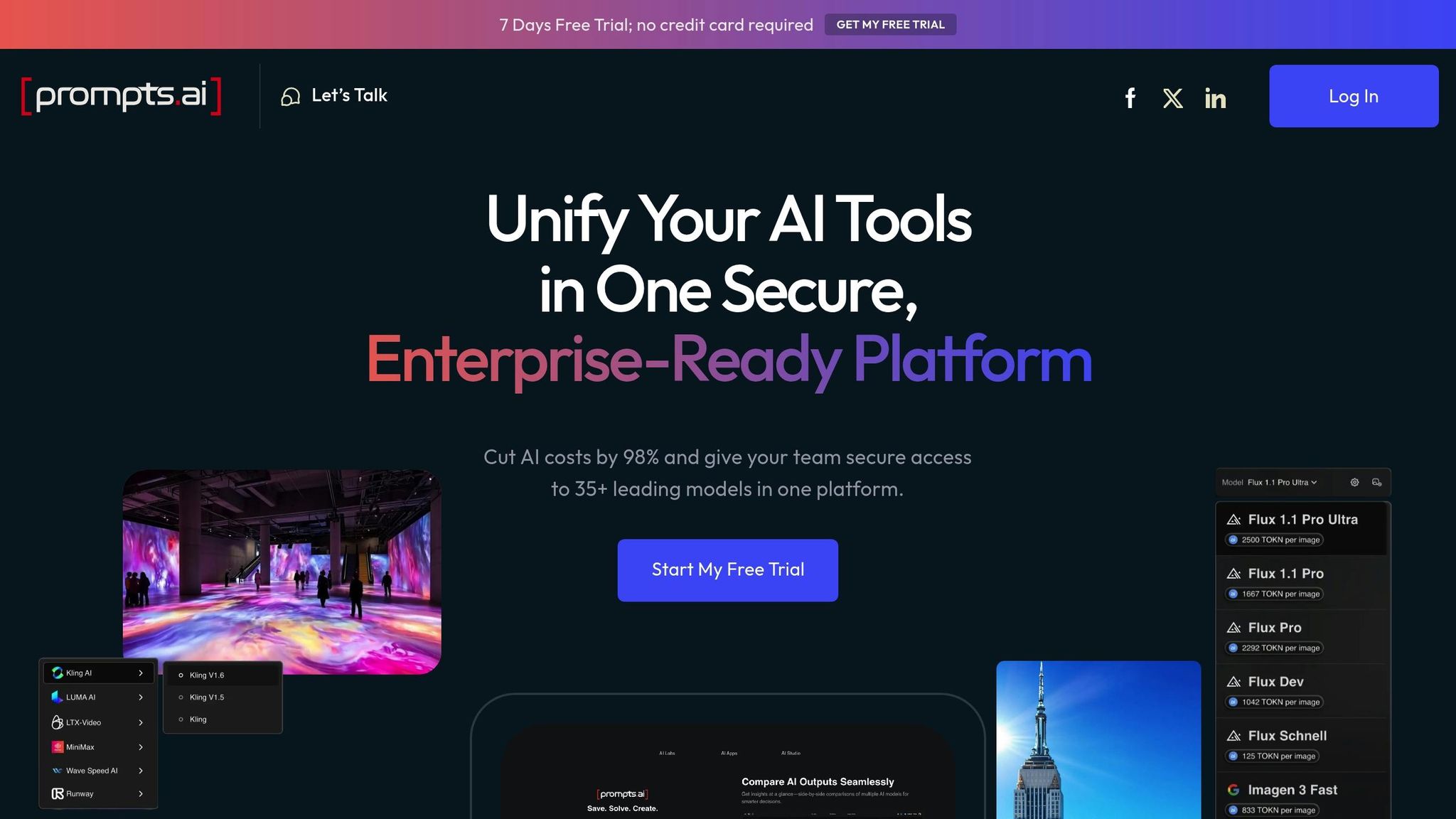

Prompts.ai simplifies AI workflows by integrating access to over 35 models into a single, enterprise-level workspace. The platform eliminates the need to juggle multiple tools and offers full oversight of costs, performance, and governance. With it, companies can cut AI software expenses by up to 98% and increase team productivity tenfold. Here's how Prompts.ai transforms AI workflow management:

One standout feature of Prompts.ai is its detailed token spend tracking, which operates at the agent, model, and project levels and gives teams a clear view of where their costs come from. It identifies the most costly prompts, pinpoints the priciest models for specific tasks, and compares token usage across projects.

For teams experimenting with multiple models, such as GPT-4 and Claude, this level of tracking is invaluable. Real-time cost comparisons for individual prompt interactions allow for informed decision-making. Additionally, project-level tracking simplifies internal audits, letting finance teams allocate AI expenses to specific products, clients, or departments without the hassle of manual calculations.
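To make agent-, model-, and project-level attribution concrete, here is a minimal Python sketch of how per-request token counts can be rolled up into spend along any of those dimensions. It is not Prompts.ai's API: the `UsageRecord` fields, the model names, and the per-1K-token prices are hypothetical placeholders, and real provider prices differ and change over time.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical USD prices per 1,000 tokens; substitute your providers' current rates.
PRICE_PER_1K = {
    "model-a": {"input": 0.01, "output": 0.03},
    "model-b": {"input": 0.003, "output": 0.015},
}


@dataclass
class UsageRecord:
    agent: str
    model: str
    project: str
    input_tokens: int
    output_tokens: int


def cost_usd(rec: UsageRecord) -> float:
    """Price one request from its input and output token counts."""
    price = PRICE_PER_1K[rec.model]
    return (rec.input_tokens * price["input"] + rec.output_tokens * price["output"]) / 1000


def spend_by(records: list[UsageRecord], dimension: str) -> dict[str, float]:
    """Total spend grouped by 'agent', 'model', or 'project'."""
    totals: dict[str, float] = defaultdict(float)
    for rec in records:
        totals[getattr(rec, dimension)] += cost_usd(rec)
    return dict(totals)


records = [
    UsageRecord("support-bot", "model-a", "client-x", 1200, 400),
    UsageRecord("support-bot", "model-b", "client-x", 1200, 400),
    UsageRecord("summarizer", "model-b", "client-y", 5000, 1000),
]
print(spend_by(records, "project"))
print(spend_by(records, "model"))
```

The first two records represent the same prompt sent to two different models, so comparing their costs (about $0.024 versus $0.0096 under these made-up prices) is the per-prompt comparison described above, while grouping by `project` yields the chargeback view a finance team would use.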

Prompts.ai includes a robust analytics feature that delivers immediate insights into spending at the action level. This allows teams to identify spending trends, understand inefficiencies, and make quick adjustments. By providing real-time data, the platform helps users avoid surprises at the end of billing cycles and stay ahead of potential issues.
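One simple way real-time spend data can head off end-of-cycle surprises is a run-rate projection: divide spend to date by the days elapsed and extrapolate to the end of the month. The sketch below assumes linear spending and calendar-month billing; the function names are hypothetical and not part of any Prompts.ai interface.

```python
import calendar
from datetime import date


def projected_month_end_spend(spend_to_date: float, today: date) -> float:
    """Extrapolate month-end spend from the average daily spend so far.

    Assumes spend_to_date covers day 1 through `today` inclusive and that
    spending continues at the same average daily rate.
    """
    days_in_month = calendar.monthrange(today.year, today.month)[1]
    daily_rate = spend_to_date / today.day
    return daily_rate * days_in_month


def on_track_to_exceed(spend_to_date: float, today: date, monthly_budget: float) -> bool:
    """True if the linear projection lands above the monthly budget."""
    return projected_month_end_spend(spend_to_date, today) > monthly_budget


# $300 spent by June 10 is $30/day, which projects to $900 over June's 30 days.
print(projected_month_end_spend(300.0, date(2024, 6, 10)))                 # → 900.0
print(on_track_to_exceed(300.0, date(2024, 6, 10), monthly_budget=800.0))  # → True
```

A straight-line projection overreacts to one-off spikes early in the month; averaging over a trailing window instead of the whole month to date is a common refinement.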

The platform also features automated model routing, which intelligently redirects requests to more cost-effective model endpoints when suitable. This ensures tasks are handled by the best-fit model for the job while keeping expenses in check. Over time, the system adapts its routing decisions based on observed usage patterns, further improving efficiency.
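Prompts.ai does not publish its routing algorithm, so the following is only a minimal sketch of what cost-aware, adaptive routing can look like: choose the cheapest model whose capability tier meets the task's requirement, and stop sending a task tier to a model once its observed success rate falls below a threshold. The tiers, prices, thresholds, and the notion of a "successful" response are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    tier: int                 # hypothetical capability tier: 1 = basic ... 3 = frontier
    usd_per_1k_tokens: float  # hypothetical blended price


class CostAwareRouter:
    def __init__(self, catalog, min_success_rate=0.9, min_samples=20):
        self.catalog = list(catalog)
        self.min_success_rate = min_success_rate
        self.min_samples = min_samples
        # (model name, task tier) -> [successes, attempts]
        self.stats: dict[tuple[str, int], list[int]] = {}

    def record(self, model_name: str, task_tier: int, success: bool) -> None:
        """Feed back whether a routed response was acceptable."""
        counts = self.stats.setdefault((model_name, task_tier), [0, 0])
        counts[0] += int(success)
        counts[1] += 1

    def _trusted(self, model: ModelOption, task_tier: int) -> bool:
        successes, attempts = self.stats.get((model.name, task_tier), (0, 0))
        if attempts < self.min_samples:
            return True  # too little evidence yet; keep the model eligible
        return successes / attempts >= self.min_success_rate

    def route(self, task_tier: int) -> ModelOption:
        """Return the cheapest eligible model for a task of the given tier."""
        eligible = [
            m for m in self.catalog
            if m.tier >= task_tier and self._trusted(m, task_tier)
        ]
        if not eligible:
            raise LookupError(f"no eligible model for tier {task_tier}")
        return min(eligible, key=lambda m: m.usd_per_1k_tokens)


router = CostAwareRouter([
    ModelOption("small-model", 1, 0.0005),
    ModelOption("mid-model", 2, 0.003),
    ModelOption("large-model", 3, 0.03),
])
print(router.route(1).name)  # → small-model
for _ in range(20):
    router.record("small-model", 1, success=False)
print(router.route(1).name)  # → mid-model
```

Describing each task with a single tier number is a deliberate simplification; real routers usually need to classify the task first, and that classification is where most of the difficulty lies.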

Prompts.ai provides tools to set spending limits and alerts for individual users or entire workspaces, helping prevent budget overruns. It also keeps comprehensive audit trails that record every prompt, providing transparency. These controls are especially valuable for organizations that must meet strict security and compliance requirements.
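Pairing a soft alert threshold with a hard spending limit can be sketched in a few lines. The `Budget` class, the 80% alert ratio, and the scope naming are illustrative assumptions rather than Prompts.ai's configuration model; a real system would also persist spend and deliver alerts by email or chat instead of collecting them in an in-memory list.

```python
from dataclasses import dataclass, field


@dataclass
class Budget:
    scope: str                 # e.g. a user or workspace identifier (hypothetical)
    limit_usd: float
    alert_ratio: float = 0.8   # hypothetical: warn once 80% of the limit is used
    spent_usd: float = 0.0
    alert_sent: bool = False
    events: list[str] = field(default_factory=list)

    def try_charge(self, cost_usd: float) -> bool:
        """Record a request's cost, or refuse it if it would exceed the limit."""
        if self.spent_usd + cost_usd > self.limit_usd:
            self.events.append(
                f"BLOCKED {self.scope}: ${cost_usd:.2f} would exceed the ${self.limit_usd:.2f} limit"
            )
            return False
        self.spent_usd += cost_usd
        if not self.alert_sent and self.spent_usd >= self.alert_ratio * self.limit_usd:
            self.alert_sent = True
            self.events.append(
                f"ALERT {self.scope}: {self.spent_usd / self.limit_usd:.0%} of budget used"
            )
        return True


budget = Budget("workspace:marketing", limit_usd=100.0)
budget.try_charge(50.0)   # allowed, no alert yet
budget.try_charge(35.0)   # allowed, crosses 80% and raises a single alert
budget.try_charge(20.0)   # refused: 85 + 20 > 100
print(budget.events)
```

Checking the limit before recording the charge is what makes the limit preventive rather than merely a report after the fact.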

Unlike Prompts.ai's broad feature set, Platform B focuses narrowly on monitoring token usage. It offers real-time metrics and detailed breakdowns of token consumption across various LLM environments and interfaces. This data-driven approach supports quick, informed cost-management decisions and primarily serves finance teams looking to optimize spending. By specializing in this area, Platform B carves out a niche distinct from broader solutions.

Platform C takes a workflow-focused approach, connecting development pipelines to production environments while emphasizing developer ease of use and essential monitoring tools. Where Prompts.ai shines in financial analytics, Platform C concentrates on operational performance within development workflows. Below is a closer look at its token tracking, operational analytics, routing, and governance capabilities.

Platform C offers metrics for token usage across connected LLM endpoints, providing an application-level view of consumption. This makes it easier for development teams to identify which projects are using the most tokens. However, the platform does not allow for detailed insights into individual prompt performance or agent-specific costs, which can make it harder to fine-tune multi-agent workflows.

The dashboard provides daily and monthly summaries of token usage across providers, but it lacks the advanced cost attribution tools that finance teams often need for granular budgeting or chargeback processes.
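The application-level daily and monthly summaries described above amount to a group-by on application and calendar period. This hypothetical sketch (the event tuple layout is an assumption) also illustrates the limitation: once usage is keyed only by application and date, the prompt- and agent-level detail needed for finer cost attribution is gone.

```python
from collections import Counter
from datetime import datetime

PERIOD_FORMATS = {"day": "%Y-%m-%d", "month": "%Y-%m"}


def rollup(events, period: str = "day") -> dict[tuple[str, str], int]:
    """Sum tokens per (application, period) from (timestamp, application, tokens) tuples."""
    fmt = PERIOD_FORMATS[period]
    totals: Counter = Counter()
    for timestamp, application, tokens in events:
        totals[(application, timestamp.strftime(fmt))] += tokens
    return dict(totals)


events = [
    (datetime(2024, 5, 1, 9), "search-app", 1000),
    (datetime(2024, 5, 1, 17), "search-app", 500),
    (datetime(2024, 5, 2, 8), "search-app", 200),
    (datetime(2024, 5, 2, 8), "chat-app", 700),
]
print(rollup(events, "day"))
print(rollup(events, "month"))
```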

The platform's analytics focus on operational health metrics rather than detailed financial insights. It provides visibility into response times, error rates, and throughput across various LLM endpoints. This allows development teams to quickly identify and address latency issues or high failure rates in production systems.

Operational data includes API response codes, average processing times, and queue depths, giving DevOps teams the tools they need to maintain system reliability. However, the analytics fall short when it comes to cost-per-request analysis or comparing the efficiency of different models.
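The health metrics listed above reduce to simple aggregates over request logs. The sketch below computes request count, error rate, mean latency, and nearest-rank p95 latency per endpoint; the log tuple format and the choice to count any HTTP status of 400 or above as an error are assumptions made for illustration. Nothing in it touches token prices, which is exactly the cost-per-request gap noted above.

```python
import math
import statistics
from collections import defaultdict


def health_summary(calls):
    """Summarize (endpoint, http_status, latency_ms) tuples per endpoint."""
    by_endpoint = defaultdict(list)
    for endpoint, status, latency_ms in calls:
        by_endpoint[endpoint].append((status, latency_ms))

    summary = {}
    for endpoint, rows in by_endpoint.items():
        latencies = sorted(latency for _, latency in rows)
        errors = sum(1 for status, _ in rows if status >= 400)  # assumption: 4xx and 5xx both count
        p95_rank = math.ceil(0.95 * len(latencies))             # nearest-rank percentile (1-based)
        summary[endpoint] = {
            "requests": len(rows),
            "error_rate": errors / len(rows),
            "avg_latency_ms": statistics.fmean(latencies),
            "p95_latency_ms": latencies[p95_rank - 1],
        }
    return summary


calls = [
    ("endpoint-a", 200, 120), ("endpoint-a", 200, 180),
    ("endpoint-a", 503, 900), ("endpoint-a", 200, 150),
    ("endpoint-b", 200, 300), ("endpoint-b", 429, 50),
]
print(health_summary(calls))
```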

Platform C prioritizes performance and reliability over cost considerations in its routing features. The platform automatically redirects traffic to alternative endpoints if primary services go down and uses load balancing to maintain consistent performance.

It supports balancing traffic across multiple instances of the same model and offers strategies like round-robin or weighted distribution to ensure steady operations. However, it does not incorporate cost-aware routing, which could be a drawback for organizations looking to optimize expenses.
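Weighted distribution with failover can be illustrated with smooth weighted round-robin, a well-known variant used in nginx that interleaves picks instead of sending bursts to the heaviest endpoint. Platform C's actual algorithm is not documented here, so treat this as a generic sketch; the endpoint names and weights are hypothetical. With equal weights it reduces to plain round-robin.

```python
class WeightedFailoverRouter:
    """Smooth weighted round-robin over the endpoints currently marked healthy."""

    def __init__(self, weights: dict[str, int]):
        self.weights = dict(weights)
        self.current = {name: 0 for name in weights}
        self.healthy = set(weights)

    def mark_down(self, name: str) -> None:
        self.healthy.discard(name)

    def mark_up(self, name: str) -> None:
        self.healthy.add(name)

    def next_endpoint(self) -> str:
        candidates = [name for name in self.weights if name in self.healthy]
        if not candidates:
            raise RuntimeError("no healthy endpoints available")
        total = 0
        for name in candidates:
            # Every healthy endpoint gains its weight on each pick...
            self.current[name] += self.weights[name]
            total += self.weights[name]
        # ...the leader is chosen and pays back the total, spreading picks evenly.
        chosen = max(candidates, key=lambda name: self.current[name])
        self.current[chosen] -= total
        return chosen


router = WeightedFailoverRouter({"primary": 3, "secondary": 1})
print([router.next_endpoint() for _ in range(4)])  # → ['primary', 'primary', 'secondary', 'primary']
router.mark_down("primary")                        # simulate an outage
print([router.next_endpoint() for _ in range(2)])  # → ['secondary', 'secondary']
```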

Platform C includes basic governance tools, such as user access controls, project-level monthly token budgets, email alerts for threshold breaches, and audit logs for compliance purposes.

While these features provide a solid foundation for oversight, the governance framework does not include more advanced options like approval workflows for high-cost activities or detailed cost center allocations. These are often critical for larger enterprises managing complex AI budgets. Still, the platform's straightforward controls make it easier for teams to manage multi-LLM environments with confidence and efficiency.

Prompts.ai stands out by bringing clarity and cost efficiency to multi-LLM setups. Its FinOps controls provide real-time, detailed insights into token usage across agents, models, and projects, tackling the financial unpredictability that often plagues multi-LLM environments. This streamlined token management approach ensures better oversight while laying the groundwork for strong governance and scalable operations.

With access to more than 35 large language models, Prompts.ai consolidates AI workflows into a single, centralized platform. It incorporates enterprise-level governance and compliance into every interaction, ensuring security and reliability. The platform’s pay-as-you-go TOKN credit system eliminates recurring fees, slashing AI costs by as much as 98%.

Designed for growth and efficiency, Prompts.ai simplifies AI experimentation, enhances cost analytics, and optimizes prompts, all within a secure framework. Teams can focus on innovation instead of juggling disconnected tools, which addresses the multi-LLM management challenges discussed in this analysis.

Effectively managing multiple LLMs demands a platform that brings together centralized orchestration and precise cost management. Success hinges on tools that provide real-time insights into token usage, automate routing decisions, and enforce governance frameworks that grow with your organization.

Prompts.ai emerges as an ideal solution for organizations tackling multi-LLM management. With access to 35+ integrated models, real-time financial controls, and enterprise-grade governance features, it sets the stage for scalable AI operations while delivering the transparency needed to optimize costs and performance.

The pay-as-you-go TOKN credit system transforms how organizations approach AI budgets. By eliminating recurring subscription fees, it lets teams test and innovate freely without worrying about runaway expenses. This model is particularly beneficial for teams running multi-model experiments with providers like OpenAI and Anthropic (maker of Claude), where unpredictable costs often hinder progress.

Beyond cost management, governance plays a vital role in enterprise environments. The platform's built-in compliance features ensure security requirements are met without sacrificing operational efficiency. Automated routing logic adds further value by steering requests away from expensive model endpoints when less costly alternatives can achieve the same results, addressing the common problem of model sprawl in complex workflows.

For teams handling internal audits, the platform offers additional advantages. Features like workspace-level spending limits and alerts allow for precise cost tracking. Granular controls - organized by user, project, or client - ensure budgets remain in check, preventing overruns before they happen.

By consolidating operations into a single, secure interface, the platform eliminates the chaos of juggling multiple tools. This streamlined approach not only reduces complexity but also enables side-by-side performance comparisons across models, paving the way for more informed decision-making.

For organizations committed to scaling AI operations while controlling costs and maintaining security, Prompts.ai provides the infrastructure needed to turn experimental workflows into governed, production-ready processes. Its unified approach positions it as an essential tool for managing multi-LLM environments effectively.

Prompts.ai slashes costs by up to 98% with its efficient pay-per-use system, powered by TOKN credits. By bringing together more than 35 LLMs on a single platform, it ensures token usage is optimized, eliminates unnecessary model calls, and simplifies prompt management to cut down on waste.

The platform also employs smart routing logic to bypass costly model endpoints and offers detailed analytics for monitoring token usage across agents, models, and projects. This empowers teams to make informed decisions, spot cost anomalies, and maintain complete oversight of their LLM budgets.
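Spotting cost anomalies can start with something as simple as comparing each day's spend with a trailing baseline. This sketch flags days whose spend exceeds the mean of the previous seven days by more than three population standard deviations; the window, threshold, and sample figures are assumptions, and how Prompts.ai itself detects anomalies is not specified here.

```python
import statistics


def flag_cost_anomalies(daily_spend: list[float], window: int = 7, threshold: float = 3.0) -> list[int]:
    """Return indices of days whose spend is unusually high versus the trailing window.

    A perfectly flat history has zero spread, so any increase after it is flagged.
    """
    flagged = []
    for i in range(window, len(daily_spend)):
        history = daily_spend[i - window:i]
        baseline = statistics.fmean(history)
        spread = statistics.pstdev(history)
        if daily_spend[i] > baseline + threshold * spread:
            flagged.append(i)
    return flagged


spend = [10, 11, 9, 10, 12, 10, 11, 10, 45, 11]  # hypothetical daily spend in USD
print(flag_cost_anomalies(spend))  # → [8]
```

Because a spike enters the baseline for the following week, it can mask a second spike soon after; robust statistics such as the median absolute deviation are a common alternative.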

Prompts.ai transforms how teams handle AI workflows, streamlining processes and improving productivity. With features like real-time co-editing, shared prompt logic, and multi-user permissions, collaboration becomes effortless. Integrated version control keeps updates organized and eliminates any potential confusion.

The platform also offers centralized AI workflow management, providing detailed insights into token usage and costs. By leveraging tools like precise usage analytics and smarter model routing, teams can cut down on unnecessary expenses and concentrate on achieving impactful results.

Prompts.ai's automated model routing reduces expenses by assigning simpler tasks to less costly models, achieving savings of up to 85%. The system analyzes each task in real time and selects the most economical model that still meets the task's performance requirements.

Teams can also track usage trends and set spending limits to avoid unnecessary costs. These controls can save businesses an additional 20–40% on AI-related expenses while maintaining reliable, high-quality results.
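The arithmetic behind routing savings is worth making explicit, because the headline percentage depends heavily on traffic mix. Suppose a fraction $f$ of token volume moves from a premium model priced at $p_h$ per token to a cheaper one priced at $p_l$, that rerouted requests consume the same number of tokens, and that a further saving $S_2$ applies to whatever spend remains after routing. The values $f = 0.9$, $p_l / p_h = 0.05$, and $S_2 = 0.2$ below are illustrative assumptions, not Prompts.ai's published figures.

$$
\begin{aligned}
S_{\text{routing}} &= 1 - \left[(1 - f) + f\,\frac{p_l}{p_h}\right] = f\left(1 - \frac{p_l}{p_h}\right) = 0.9 \times 0.95 = 0.855 \\
S_{\text{total}} &= 1 - (1 - S_{\text{routing}})(1 - S_2) = 1 - 0.145 \times 0.8 = 0.884
\end{aligned}
$$

Savings compound rather than add: under these assumptions, an extra 20% on top of an 85.5% reduction lowers total spend by about three more percentage points, not twenty.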