Optimize AI Spending with Smart Prompt Routing

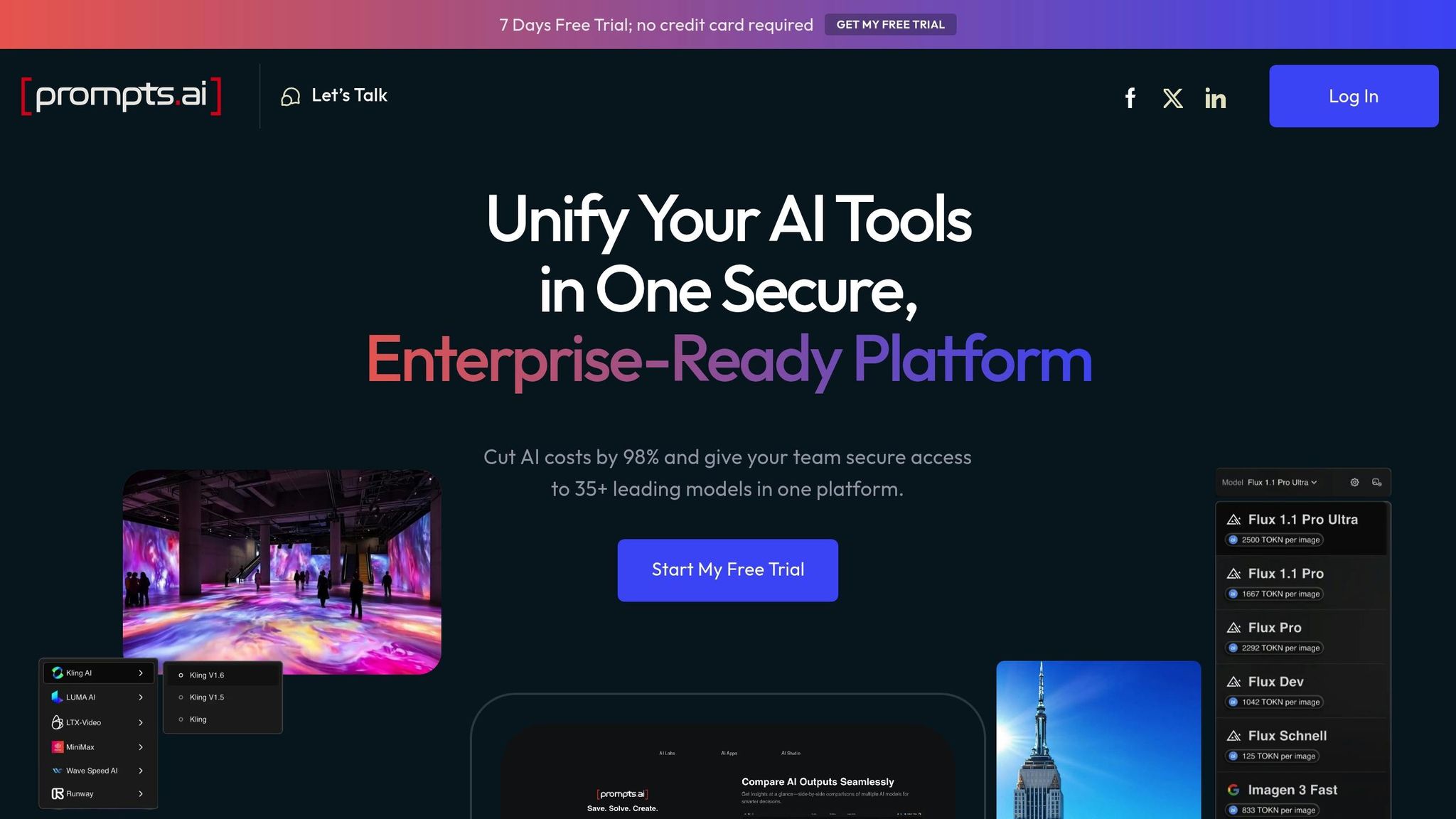

Prompt routing is transforming how businesses manage AI queries. It directs each task to the most suitable model, maintaining performance while cutting costs. Platforms like prompts.ai, Platform B, and Platform C specialize in this, with reported savings of up to 99% without compromising output quality.

With AI software spending projected to hit $300 billion by 2027, businesses need tools that balance performance and cost. Platforms like prompts.ai stand out by offering transparency, flexibility, and significant savings, making them ideal for enterprises scaling AI operations.

Quick Comparison:

| Platform | Strengths | Limitations |
|---|---|---|
| prompts.ai | 35+ models, real-time tracking, TOKN credits, enterprise security | Learning curve for new users, premium features may increase costs |
| Platform B | OpenAI-compatible, dynamic routing, high cost savings | Requires fine-tuning for maximum efficiency |
| Platform C | Simple setup, Amazon Bedrock integration | Limited to Amazon-hosted models, reduced customization |

Platforms like these ensure businesses can scale AI efficiently while keeping expenses in check. Whether you're cost-sensitive, scaling operations, or seeking simplicity, there's a solution tailored to your needs.

Prompts.ai is an enterprise AI platform that brings together over 35 large language models in one secure, unified interface. Designed for organizations ranging from Fortune 500 companies to creative agencies, it simplifies AI management by consolidating tools while maintaining tight governance and cost control. Its prompt routing aims to send each request to the least expensive model that can handle it well.

Prompts.ai uses a dynamic system to match the complexity of each prompt with the most suitable model. Simple queries are directed to faster, lower-cost models, while complex tasks are routed to advanced, higher-cost models only when necessary. Combined with its pay-as-you-go TOKN credit system, which removes the need for multiple subscriptions, this approach can cut AI costs by up to 98%.
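The source does not describe how prompts.ai scores complexity, so the following is only a minimal sketch of the general idea of complexity-based routing. The tier names, prices, and the word-count/keyword heuristic in `estimate_complexity` are hypothetical placeholders, not the platform's actual logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str                 # hypothetical model identifier
    usd_per_1k_tokens: float  # hypothetical blended price
    max_complexity: int       # highest complexity score this tier should handle


# Hypothetical tiers, ordered from cheapest to most capable.
TIERS = [
    ModelTier("small-fast-model", 0.0002, 1),
    ModelTier("mid-tier-model", 0.002, 2),
    ModelTier("frontier-model", 0.015, 3),
]

REASONING_HINTS = ("prove", "analyze", "step by step", "compare", "derive")


def estimate_complexity(prompt: str) -> int:
    """Toy heuristic: long prompts and reasoning keywords each add one point (1-3)."""
    score = 1
    if len(prompt.split()) > 150:
        score += 1
    if any(hint in prompt.lower() for hint in REASONING_HINTS):
        score += 1
    return score


def route(prompt: str) -> ModelTier:
    """Return the cheapest tier whose ceiling covers the prompt's complexity."""
    complexity = estimate_complexity(prompt)
    for tier in TIERS:
        if complexity <= tier.max_complexity:
            return tier
    return TIERS[-1]


print(route("What are your opening hours?").name)                # small-fast-model
print(route("Analyze this contract clause step by step.").name)  # mid-tier-model
```

In practice the heuristic would be replaced by a trained classifier, but the shape stays the same: score the prompt, then pick the cheapest tier that can handle that score.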

For businesses in the U.S., the platform reports costs in US dollars ($) with standard American number formatting, so ROI calculations are easy to follow and financial reports line up with familiar accounting practices.

Prompts.ai combines routing logic, failover mechanisms, and real-time monitoring to keep performance consistent even during peak demand, traffic fluctuations, or model outages.

The platform includes automatic retries and fallback strategies, which are essential for mission-critical operations. Advanced analytics continuously track performance metrics, enabling the system to adjust routing decisions in real time based on model availability and efficiency.
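As an illustration of the retry-and-fallback pattern described above, here is a minimal sketch. It assumes a generic `call_model(model, prompt)` client function and a `ModelUnavailable` exception, both hypothetical; it is not the prompts.ai API.

```python
import time
from typing import Callable, Sequence


class ModelUnavailable(Exception):
    """Raised by a (hypothetical) model client when a call fails transiently."""


def call_with_fallback(
    prompt: str,
    models: Sequence[str],
    call_model: Callable[[str, str], str],
    retries_per_model: int = 2,
    backoff_s: float = 0.5,
) -> tuple[str, str]:
    """Try each model in order, retrying transient failures with exponential backoff.

    Returns (model_name, response). Raises RuntimeError if every model fails.
    """
    errors = []
    for model in models:
        for attempt in range(retries_per_model + 1):
            try:
                return model, call_model(model, prompt)
            except ModelUnavailable as exc:
                errors.append(f"{model} attempt {attempt + 1}: {exc}")
                if attempt < retries_per_model:
                    time.sleep(backoff_s * (2 ** attempt))
    raise RuntimeError("all models failed: " + "; ".join(errors))


def fake_client(model: str, prompt: str) -> str:
    """Stub client: the primary model is 'down', the backup answers."""
    if model == "primary-model":
        raise ModelUnavailable("503 overloaded")
    return f"{model} answered: {prompt}"


print(call_with_fallback("Hi", ["primary-model", "backup-model"], fake_client, backoff_s=0))
# ('backup-model', 'backup-model answered: Hi')
```

Retrying the same model first handles brief hiccups cheaply. Moving down the fallback list handles sustained outages.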

Prompts.ai offers extensive customization options, allowing users to define routing rules, choose preferred models or agents, and set thresholds for performance or cost. This flexibility lets organizations adapt workflows to their specific needs - whether it's prioritizing speed for customer support or ensuring precision for research tasks.
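To show what threshold-based routing rules might look like, here is a hedged sketch. The `Candidate` and `RoutingRule` structures, the model names, and all numbers are hypothetical; the source does not document prompts.ai's rule format.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Candidate:
    name: str
    usd_per_1k_tokens: float
    p95_latency_ms: int
    quality_score: float  # 0-1, e.g. from an internal eval set (hypothetical)


@dataclass(frozen=True)
class RoutingRule:
    max_usd_per_1k: float
    max_latency_ms: int
    min_quality: float
    prefer: str  # "cheapest" or "fastest"


def select_model(candidates: Sequence[Candidate], rule: RoutingRule) -> Optional[Candidate]:
    """Filter by the rule's thresholds, then pick by the rule's preference."""
    eligible = [
        c for c in candidates
        if c.usd_per_1k_tokens <= rule.max_usd_per_1k
        and c.p95_latency_ms <= rule.max_latency_ms
        and c.quality_score >= rule.min_quality
    ]
    if not eligible:
        return None
    if rule.prefer == "cheapest":
        return min(eligible, key=lambda c: c.usd_per_1k_tokens)
    return min(eligible, key=lambda c: c.p95_latency_ms)


CANDIDATES = [
    Candidate("fast-small", 0.0005, 300, 0.70),
    Candidate("balanced", 0.003, 900, 0.85),
    Candidate("deep-reasoner", 0.015, 4000, 0.95),
]
SUPPORT = RoutingRule(max_usd_per_1k=0.005, max_latency_ms=1000, min_quality=0.6, prefer="fastest")
RESEARCH = RoutingRule(max_usd_per_1k=0.02, max_latency_ms=10_000, min_quality=0.9, prefer="cheapest")

print(select_model(CANDIDATES, SUPPORT).name)   # fast-small
print(select_model(CANDIDATES, RESEARCH).name)  # deep-reasoner
```

The customer-support rule prioritizes speed within a cost cap, while the research rule accepts higher cost and latency in exchange for a quality floor.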

Both visual and code-based configuration options are available, making the platform accessible to technical and non-technical users alike. Multi-agent orchestration and customizable prompt flows keep processes structured and traceable, and the platform's governance tools are designed to keep all AI interactions within enterprise security and compliance standards.

A standout feature of prompts.ai is its integrated FinOps layer, which tracks every token and provides full visibility into AI spending. The platform’s dashboard offers real-time insights into token consumption, costs per prompt, and overall spending trends in an easy-to-digest format.

Detailed metrics, such as average response times, token usage by model, cost per prompt, and success/failure rates, empower users to refine routing logic and address inefficiencies. Built-in budget alerts prevent overspending, and robust reporting tools link AI expenses directly to business outcomes. This level of transparency is invaluable for enterprises needing to demonstrate ROI and maintain strict budget oversight across various teams and applications.
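The per-prompt cost tracking and budget alerts described above can be sketched as follows. The prices are hypothetical, and the 80% alert threshold is an illustrative default, not a documented prompts.ai setting.

```python
from dataclasses import dataclass, field

# Hypothetical per-1K-token prices in USD: (input, output). Not real list prices.
PRICES = {
    "small-model": (0.0002, 0.0008),
    "large-model": (0.003, 0.015),
}


@dataclass
class BudgetTracker:
    monthly_budget_usd: float
    alert_fraction: float = 0.8  # warn once spend crosses 80% of budget
    spent_usd: float = 0.0
    alerts: list = field(default_factory=list)

    def record(self, model: str, input_tokens: int, output_tokens: int) -> float:
        """Log one prompt's cost and raise an alert the first time the threshold is crossed."""
        in_price, out_price = PRICES[model]
        cost = input_tokens / 1000 * in_price + output_tokens / 1000 * out_price
        before = self.spent_usd
        self.spent_usd += cost
        threshold = self.alert_fraction * self.monthly_budget_usd
        if before < threshold <= self.spent_usd:
            self.alerts.append(
                f"Spend ${self.spent_usd:,.2f} crossed {self.alert_fraction:.0%} "
                f"of ${self.monthly_budget_usd:,.2f} budget"
            )
        return cost


tracker = BudgetTracker(monthly_budget_usd=1.00)
for _ in range(2):
    tracker.record("large-model", input_tokens=100_000, output_tokens=10_000)
print(tracker.alerts)  # ['Spend $0.90 crossed 80% of $1.00 budget']
```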

Platform B, much like prompts.ai, focuses on efficiently routing inputs to the most suitable language model. It prioritizes getting the best value for each token while maintaining stable operations and offering clear cost visibility. By dynamically directing prompts between large language models (LLMs) and smaller language models (SLMs), it achieves significant cost savings without sacrificing performance.

Platform B stands out by using intelligent routing to analyze the task type, domain, and complexity of each prompt. This ensures that prompts are directed to the most cost-effective model. This approach is crucial since premium AI models can be up to 188 times more expensive than smaller models per prompt.

For example, the platform employs models like Arcee-Blitz, which costs only $0.03 per million input tokens and $0.05 per million output tokens. Compared to running Claude 3.7 Sonnet exclusively, this saves $17.92 for every million input tokens plus million output tokens.
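The $17.92 figure can be reproduced with simple arithmetic. This sketch assumes Anthropic's list price for Claude 3.7 Sonnet of $3 per million input tokens and $15 per million output tokens; the Arcee-Blitz prices come from the text above.

```python
# USD per million tokens: (input, output)
ARCEE_BLITZ = (0.03, 0.05)        # figures quoted in the text
CLAUDE_37_SONNET = (3.00, 15.00)  # assumed Anthropic list price


def savings_per_million(cheap: tuple, premium: tuple) -> float:
    """Savings for one million input tokens plus one million output tokens."""
    return (premium[0] - cheap[0]) + (premium[1] - cheap[1])


print(f"${savings_per_million(ARCEE_BLITZ, CLAUDE_37_SONNET):.2f}")  # $17.92
```

That is, (3.00 - 0.03) + (15.00 - 0.05) = 2.97 + 14.95 = 17.92.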

One practical use case involved a marketing team creating a LinkedIn post using the platform's Auto Mode. The cost? Just $0.00002038, as opposed to $0.003282 for Claude-3.7-Sonnet - a staggering 99.38% cost reduction. Similarly, for engineering workflows, the Virtuoso-Medium model handled routine developer questions at $0.00018229, compared to $0.007062 with Claude-3.7-Sonnet, delivering 97.4% savings per prompt.
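The percentages above follow from a one-line formula, reduction = (1 - routed cost / baseline cost) × 100. The following snippet checks both cases using only the per-prompt costs quoted in the text.

```python
def cost_reduction_pct(routed_cost: float, baseline_cost: float) -> float:
    """Percentage saved by the routed model relative to the baseline model."""
    return (1 - routed_cost / baseline_cost) * 100


print(f"{cost_reduction_pct(0.00002038, 0.003282):.2f}%")  # 99.38%  (LinkedIn post)
print(f"{cost_reduction_pct(0.00018229, 0.007062):.1f}%")  # 97.4%   (developer question)
```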

Platform B doesn’t just save costs; it ensures reliable performance. For routine tasks, it routes prompts to smaller, faster models. When faced with more complex queries that demand advanced reasoning, it escalates the task to powerful models like Claude-3.7-Sonnet.

The platform also offers an OpenAI-compatible endpoint, simplifying integration into existing systems. This compatibility minimizes the need for significant infrastructure changes, reducing implementation risks and ensuring smooth connections with current workflows.
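"OpenAI-compatible" generally means the router accepts the same request shape as OpenAI's `/v1/chat/completions` endpoint. With the official OpenAI Python SDK, that amounts to passing a different `base_url` to the client. The sketch below only builds such a request with the standard library and does not send it. The base URL, API key, and the `"auto"` model alias are hypothetical placeholders, not Platform B's documented values.

```python
import json
import urllib.request

BASE_URL = "https://router.example.com/v1"  # hypothetical router endpoint
API_KEY = "YOUR_API_KEY"                     # placeholder credential


def build_chat_request(prompt: str, model: str = "auto") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {
        "model": model,  # hypothetical alias that lets the router choose the model
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {API_KEY}", "Content-Type": "application/json"},
        method="POST",
    )


req = build_chat_request("Draft a LinkedIn post about our Q3 launch.")
print(req.full_url)  # https://router.example.com/v1/chat/completions
# Sending it would be: urllib.request.urlopen(req)
```

Because only the URL changes, code already written against OpenAI's API can usually be pointed at such a router with minimal changes.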

Platform B supports a variety of routing strategies, including static and dynamic routing, LLM-assisted routing, semantic routing, and hybrid approaches. Organizations can fine-tune both the classifier and embedding models on proprietary data, improving classification accuracy and, in turn, routing decisions. Combined with real-time cost tracking, this lets organizations adapt routing to their specific business needs.
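Semantic routing compares an incoming prompt with a set of reference prompts and reuses the routing decision of the closest match. The sketch below illustrates the idea with a toy bag-of-words vector and cosine similarity standing in for a real embedding model. The reference set, model names, and similarity cutoff are all hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real router would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Hypothetical reference prompt set: example prompt -> model that should handle it.
REFERENCE_SET = [
    ("write a short social media post", "small-model"),
    ("summarize this email thread", "small-model"),
    ("reconcile these ledger entries and explain discrepancies", "large-model"),
    ("prove this theorem step by step", "large-model"),
]
REFERENCE_VECTORS = [(embed(p), m) for p, m in REFERENCE_SET]


def semantic_route(prompt: str, default: str = "large-model", min_similarity: float = 0.2) -> str:
    """Route to the model of the most similar reference prompt, else fall back to default."""
    query = embed(prompt)
    best_score, best_model = max((cosine(query, vec), model) for vec, model in REFERENCE_VECTORS)
    return best_model if best_score >= min_similarity else default


print(semantic_route("write a social media post about our launch"))  # small-model
print(semantic_route("reconcile the ledger entries"))                # large-model
```

Refining the reference set over time, as described in the next paragraph, corresponds to editing `REFERENCE_SET` so that it reflects real traffic.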

In one large-scale financial workload, Platform B achieved a 99.67% cost reduction and 32% faster processing (14 seconds versus 20.71 seconds with Claude-3.7-Sonnet). Across finance operations more generally, intelligent model selection is reported to cut AI processing costs by up to 85%. Users can also refine their reference prompt sets over time so that routing decisions stay aligned with actual usage patterns, further improving cost efficiency.

Platform C utilizes Amazon Bedrock's intelligent routing to make prompt delivery more efficient. Its fully managed system focuses on balancing cost and performance without requiring extensive technical input. By integrating built-in intelligence, the platform simplifies the routing process, saving both time and resources.

Amazon Bedrock's intelligent routing can lower costs by up to 30% while maintaining accuracy, and the models that perform the routing are themselves inexpensive. For straightforward tasks like basic question classification, the Amazon Titan Text G1 – Express model costs just $0.0002 per 1,000 input tokens. For semantic routing, the Amazon Titan Text Embeddings V2 model creates question embeddings at $0.00002 per 1,000 input tokens.

For more demanding queries, the platform routes to more capable models. In this setup, Anthropic's Claude 3 Haiku handles history-related queries at $0.00025 per 1,000 input tokens and $0.00125 per 1,000 output tokens, while Claude 3.5 Sonnet handles mathematical problems at $0.003 per 1,000 input tokens and $0.015 per 1,000 output tokens.
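Using the per-1,000-token prices quoted above, this sketch estimates the cost of one routed request (a classifier pass plus the target model call). It compares that with sending the same request straight to Claude 3.5 Sonnet. The token counts are hypothetical, and the dictionary keys are illustrative labels rather than Bedrock model IDs. Because no output price is given for Titan Text G1 – Express, the classifier's output tokens are ignored, which is an assumption.

```python
# USD per 1,000 tokens as quoted in the text: (input, output)
PRICES_PER_1K = {
    "titan-text-express": (0.0002, None),  # output price not given; ignored here
    "claude-3-haiku": (0.00025, 0.00125),
    "claude-3.5-sonnet": (0.003, 0.015),
}


def model_cost(model: str, input_tokens: int, output_tokens: int = 0) -> float:
    in_price, out_price = PRICES_PER_1K[model]
    cost = input_tokens / 1000 * in_price
    if out_price is not None:
        cost += output_tokens / 1000 * out_price
    return cost


def routed_cost(target: str, input_tokens: int, output_tokens: int,
                classifier: str = "titan-text-express") -> float:
    """Classifier pass over the prompt plus the call to the selected model."""
    return model_cost(classifier, input_tokens) + model_cost(target, input_tokens, output_tokens)


# Hypothetical history question: 400 input tokens, 300 output tokens.
print(f"Routed to Haiku: ${routed_cost('claude-3-haiku', 400, 300):.6f}")   # $0.000555
print(f"Sonnet only:     ${model_cost('claude-3.5-sonnet', 400, 300):.6f}")  # $0.005700
```

Even with the extra classification step, the routed request in this example costs roughly a tenth of the Sonnet-only request.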

To further optimize expenses, Platform C supports prompt caching, which can cut costs by up to 90% and latency by up to 85%. This is particularly useful for businesses with repetitive queries or standardized workflows.
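How much caching saves depends on how much of each prompt is reused. This back-of-the-envelope sketch assumes cached input tokens are billed at a 90% discount and ignores any cache-write charges. Both are simplifying assumptions rather than Bedrock's exact billing rules, and the prices and token counts are hypothetical.

```python
def blended_input_cost(tokens: int, price_per_1k: float, cached_fraction: float,
                       cache_read_discount: float = 0.9) -> float:
    """Input cost when `cached_fraction` of tokens are read from cache at a discount.

    Assumes cache reads cost (1 - discount) x the normal rate; ignores cache-write charges.
    """
    cached = tokens * cached_fraction
    uncached = tokens - cached
    return (uncached + cached * (1 - cache_read_discount)) / 1000 * price_per_1k


# Hypothetical: 10,000-token prompt, 9,000 tokens of it a shared, cacheable prefix.
no_cache = blended_input_cost(10_000, 0.003, cached_fraction=0.0)
with_cache = blended_input_cost(10_000, 0.003, cached_fraction=0.9)
print(f"${no_cache:.4f} -> ${with_cache:.4f} ({1 - with_cache / no_cache:.0%} lower)")
# $0.0300 -> $0.0057 (81% lower)
```

The savings approach the full discount only when nearly the entire prompt is a reusable prefix, which is why caching pays off most for standardized workflows.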

In addition to its cost benefits, the platform ensures reliable performance through robust operational measures.

Platform C is built around a fully managed service model that removes the need for custom configurations or ongoing maintenance. This approach minimizes operational risks while delivering consistent performance.

The platform's reliability is rooted in its integration with Amazon Bedrock's infrastructure, which ensures enterprise-grade stability and uptime. Its intelligent routing system actively monitors model performance and availability, automatically rerouting traffic as needed to maintain seamless service.

However, Platform C has some limitations, particularly in model hosting flexibility. It supports only models hosted within Amazon Bedrock and within the same model family. This restriction may pose challenges for organizations that need external model hosting or cross-family routing.

While Platform C emphasizes simplicity and efficiency, it also offers basic workflow customization. It provides predefined optimizations for cost and performance, which are suitable for most standard use cases. The platform supports both LLM-assisted routing using classifier models and semantic routing via embedding-based methods.

Users can configure routing policies within the Amazon Bedrock ecosystem, but the platform offers limited control over routing logic and optimization criteria compared to fully custom solutions. This balance between ease of use and flexibility makes it a strong choice for organizations that value quick implementation over granular control.

Platform C integrates seamlessly with Bedrock's analytics tools, offering real-time tracking of token usage and costs. Its live dashboard provides detailed insights, including total token consumption, costs per model, and usage patterns, enabling immediate budget adjustments.

The platform also provides model-specific breakdowns of token usage and cost. Managers can use these to build data-driven reports that demonstrate ROI to leadership. This transparency helps organizations avoid common pitfalls such as budget mismanagement, compliance issues, and missed optimization opportunities.
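A per-model breakdown is essentially a group-by over a usage log. This sketch aggregates hypothetical log rows, whose costs are consistent with the Claude 3 Haiku and Claude 3.5 Sonnet prices quoted earlier. It does not use Bedrock's actual reporting format.

```python
from collections import defaultdict

# Hypothetical usage log rows: (model, input_tokens, output_tokens, cost_usd)
USAGE_LOG = [
    ("claude-3-haiku", 1200, 400, 0.0008),
    ("claude-3.5-sonnet", 2000, 800, 0.018),
    ("claude-3-haiku", 800, 300, 0.000575),
]


def breakdown_by_model(rows):
    """Sum requests, tokens, and cost per model."""
    totals = defaultdict(lambda: {"requests": 0, "tokens": 0, "cost_usd": 0.0})
    for model, input_tokens, output_tokens, cost in rows:
        t = totals[model]
        t["requests"] += 1
        t["tokens"] += input_tokens + output_tokens
        t["cost_usd"] += cost
    return dict(totals)


report = breakdown_by_model(USAGE_LOG)
for model, t in sorted(report.items(), key=lambda kv: kv[1]["cost_usd"], reverse=True):
    print(f"{model:<18} {t['requests']:>3} req {t['tokens']:>6,} tok ${t['cost_usd']:.4f}")
```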

When it comes to managing prompt routing, each platform presents a unique mix of strengths and limitations. Here's a detailed comparison to help you weigh the options based on your specific needs and budget considerations.

| Platform | Advantages | Disadvantages |
|---|---|---|
| prompts.ai | Access to 35+ leading models (GPT-4, Claude, LLaMA, Gemini) in one place; real-time FinOps tracking with clear token usage insights; pay-as-you-go TOKN credits, avoiding recurring fees; enterprise-grade security and compliance standards; expert-designed workflows and a supportive community; potential for up to 98% savings on AI software costs | May require a learning curve for teams unfamiliar with multi-model orchestration; needs thoughtful planning to optimize routing efficiency; premium features could drive up costs for heavy users |
| Platform C | Fully managed service, reducing technical setup effort; integrated with Amazon Bedrock, ensuring enterprise-grade stability | Limited to Amazon Bedrock-hosted models within the same model family; restricted customization of routing logic; dependence on the AWS ecosystem can lead to vendor lock-in; reduced flexibility for organizations using diverse model sources |

Transparency is a cornerstone of operational value for these platforms. Surveys indicate that 70% of consumers favor businesses that openly share their practices, and enterprise buyers increasingly expect the same clarity from AI solutions. In particular, they want detailed visibility into token usage and costs, which supports budget adjustments and strengthens trust in the platform.

By offering real-time token tracking, platforms not only improve budget management but also provide clear insights into return on investment (ROI). This level of visibility is essential for data-driven decision-making, helping businesses maximize the value of their AI investments.

Another key factor to consider is the trade-off between customization and simplicity. For instance, while Platform C offers a user-friendly interface, its reliance on Amazon Bedrock-hosted models within the same family limits its flexibility. Despite these restrictions, it can still deliver results. A legal tech company using Intelligent Prompt Routing via AWS Bedrock reported a 35% reduction in costs and a 20% improvement in response times within just 60 days.

This example highlights that even platforms with limitations can provide substantial benefits when aligned with specific operational goals.

The cost structure of a platform significantly impacts its overall value. For organizations with fluctuating workloads, pay-as-you-go models like TOKN credits can be a smart choice, offering financial flexibility without long-term commitments. On the other hand, managed services are ideal for teams that prioritize ease of use and operational simplicity over granular control.
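To see why pay-as-you-go suits fluctuating workloads, compare it with a flat monthly fee over a few months of uneven usage. All prices and volumes below are hypothetical and do not reflect TOKN credit pricing.

```python
def compare_costs(monthly_tokens: list, usd_per_1k: float, flat_fee_usd: float):
    """Return (pay_as_you_go_total, subscription_total) over the given months."""
    payg = sum(tokens / 1000 * usd_per_1k for tokens in monthly_tokens)
    subscription = flat_fee_usd * len(monthly_tokens)
    return payg, subscription


def breakeven_monthly_tokens(usd_per_1k: float, flat_fee_usd: float) -> float:
    """Monthly volume above which the flat fee becomes cheaper than pay-as-you-go."""
    return flat_fee_usd / usd_per_1k * 1000


usage = [2_000_000, 500_000, 8_000_000, 1_000_000]  # hypothetical spiky workload
payg, sub = compare_costs(usage, usd_per_1k=0.002, flat_fee_usd=20.0)
print(f"pay-as-you-go ${payg:.2f} vs subscription ${sub:.2f}")  # pay-as-you-go $23.00 vs subscription $80.00
print(f"break-even: {breakeven_monthly_tokens(0.002, 20.0):,.0f} tokens/month")  # break-even: 10,000,000 tokens/month
```

In this example, pay-as-you-go wins as long as monthly usage stays below the break-even volume. A team that consistently exceeds it would do better on a flat plan.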

Customization also plays a major role. Platforms offering custom prompt routing allow organizations to fine-tune routing logic and optimization criteria. However, this level of control comes with the added complexity of managing bespoke systems, which may not suit every team.

Real-time performance monitoring sets enterprise-ready platforms apart. This feature enables continuous optimization, providing instant feedback on system performance and ROI. For businesses aiming to stay competitive, the ability to make quick, informed adjustments is invaluable.

Based on our analysis, prompts.ai stands out as an ideal choice for balancing performance, cost management, and operational transparency. This platform not only meets the key criteria outlined earlier but also strengthens the financial and operational capabilities necessary to thrive in today’s AI-driven environment. With access to over 35 top-tier models, it’s a smart option for enterprises looking to maximize value without compromising on quality.

The data underscores the powerful impact of efficient AI routing. Businesses have reported cost reductions of 85–90% when AI is properly implemented, with the break-even point typically reached at 50,000–55,000 interactions annually. Considering that AI agents cost just $0.25–$0.50 per interaction compared to $3.00–$6.00 for human agents, the financial benefits of intelligent routing are clear and measurable.
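The break-even point follows from dividing annual fixed costs by the per-interaction saving: N = F / (human cost - AI cost). The source does not state a fixed-cost figure, so the $150,000 used below is purely hypothetical. The per-interaction costs are the low ends of the ranges quoted above.

```python
import math


def break_even_interactions(fixed_cost_usd: float, human_cost: float, ai_cost: float) -> int:
    """Smallest annual interaction count at which per-interaction savings cover fixed costs."""
    saving = human_cost - ai_cost
    if saving <= 0:
        raise ValueError("AI must be cheaper per interaction to break even")
    return math.ceil(fixed_cost_usd / saving)


# Hypothetical $150,000 annual fixed cost; $3.00 human vs $0.25 AI per interaction.
print(break_even_interactions(150_000, human_cost=3.00, ai_cost=0.25))  # 54546
```

With a $2.75 saving per interaction, a fixed cost of this size lands inside the 50,000 to 55,000 range cited above. A different fixed cost would shift the break-even point proportionally.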

AI software spending is projected to approach $300 billion by 2027, and 97% of executives plan to increase investments in generative AI. However, S&P Global data shows that in 2025, 42% of companies abandoned most of their AI initiatives, often because of high costs and unclear value. In this context, prompts.ai stands out with transparent token tracking and real-time ROI monitoring, so teams can see what each dollar of AI spending delivers. These financial tools provide the clarity and accountability that many organizations find lacking in other solutions.

For most businesses, prompts.ai strikes the perfect balance between functionality, cost control, and scalability. Its expert workflows, active user community, and proven cost-saving capabilities make it a standout option. With a typical payback period of just 4–6 months for AI implementations, this platform drives efficiency and delivers competitive advantages through better resource allocation and actionable insights.

Prompts.ai’s prompt routing system is designed to cut costs by matching tasks to the most suitable AI models. Simpler prompts are directed to models that are more budget-friendly, while more advanced models are reserved for handling complex tasks. This smart allocation ensures a balance between saving money and maintaining dependable performance.

Another advantage of this system is its ability to trim token usage, giving users more value without sacrificing accuracy. With real-time tracking of token consumption, users can keep an eye on their ROI and fine-tune workflows based on data insights to achieve peak efficiency.

With prompts.ai, tailoring AI workflows to your specific needs is straightforward. The visual workflow builder allows you to create multi-agent pipelines, bringing prompts and agents together in a single, organized space. For handling more intricate tasks, you can implement multi-step prompt chaining, while keeping an eye on token usage in real time to maintain better control over costs.
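Multi-step prompt chaining feeds each step's output into the next step's prompt. This sketch tracks tokens per step so cost can be monitored along the chain. The `call_model` stub and the whitespace-based `count_tokens` are hypothetical stand-ins for a real model client and tokenizer, not prompts.ai's workflow builder.

```python
from typing import Callable


def count_tokens(text: str) -> int:
    """Rough stand-in for a real tokenizer: counts whitespace-separated words."""
    return len(text.split())


def run_chain(steps: list[str], user_input: str, call_model: Callable[[str], str]):
    """Run prompt templates in sequence, feeding each output into the next {input}."""
    text = user_input
    usage = []
    for i, template in enumerate(steps, start=1):
        prompt = template.format(input=text)
        text = call_model(prompt)
        usage.append({
            "step": i,
            "input_tokens": count_tokens(prompt),
            "output_tokens": count_tokens(text),
        })
    return text, usage


def stub_model(prompt: str) -> str:
    """Deterministic stub in place of a real model call."""
    return f"[{len(prompt.split())} words processed]"


final, usage = run_chain(["Summarize: {input}", "Rewrite formally: {input}"], "one two three", stub_model)
print(final)  # [5 words processed]
print(usage)
```

Per-step token counts make it easy to spot which link in a chain dominates cost, and that step is the natural candidate for routing to a cheaper model.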

Features like version control and performance analytics provide the tools to fine-tune your workflows, ensuring they run as efficiently as possible. These capabilities make it easy to adapt your AI processes and deliver clear, measurable outcomes.

The real-time cost tracking feature in prompts.ai helps businesses control their AI expenses by continuously monitoring token usage and related costs. This ongoing oversight lets teams adjust quickly, avoid unnecessary spending, and keep budgets on track.

By offering clear insights into spending trends, businesses can allocate resources more wisely, improve performance, and see tangible returns on their AI investments.