AI workflow platforms in 2026 are reshaping how businesses operate, moving beyond simple task automation to smarter, decision-driven systems powered by large language models (LLMs). These tools streamline operations, cut costs, and integrate with existing tech ecosystems, addressing challenges like tool sprawl and poor interoperability. The platforms profiled below are Prompts.ai, Vellum AI, Zapier, Make, n8n, Gumloop, Lindy.ai, StackAI, Pipedream, and Workato.

These platforms address critical needs like cost efficiency, scalability, and governance, making them essential tools for businesses navigating the AI-driven future.

Prompts.ai brings together over 35 AI models, including LLMs such as GPT-5, Claude, LLaMA, Gemini, and Grok-4 as well as the Flux Pro image model, in a single, secure platform. This eliminates the hassle of juggling multiple subscriptions, logins, and billing systems. Here's how its features simplify AI workflows and enhance productivity.

Prompts.ai lets users switch between models without reconfiguring workflows or managing separate API keys. You can compare GPT-5's output side by side with Claude's or Gemini's, then route production tasks to whichever model fits best. Each model has its own strengths: some excel at creative tasks, while others are better suited to data analysis or coding. This flexibility ensures you always have the right tool for the job.

Beyond integration, Prompts.ai helps reduce costs with straightforward, usage-based billing. A unified system tracks token usage across every model, and the platform bills that usage through pay-as-you-go TOKN credits instead of requiring a separate subscription for each tool. Prompts.ai reports that many organizations have cut their AI software expenses by up to 98% by consolidating multiple tools into this single, transparent platform.

Prompts.ai adapts to the needs of individuals, teams, and enterprises alike. Plans start at $29 per month for individual users, while enterprise plans range from $99 to $129 per member. There’s even a $99 Family Plan, recognizing that AI automation is increasingly valuable for both personal and professional use. This scalability ensures that users only pay for what they need, whether they’re automating workflows for a small team or an entire organization.

For growing operations, secure governance is a top priority. Prompts.ai is built with enterprise-grade features, providing an audit trail for every AI interaction to support compliance needs. Sensitive data stays within the organization rather than being exposed across multiple third-party services. Real-time dashboards give administrators visibility into spending and access controls and let them tie AI costs directly to business outcomes. These tools are crucial for maintaining transparency and control in AI-driven operations.

Vellum AI takes a different approach by focusing on AI-native workflow orchestration rather than just automating individual tasks. It allows users to describe a process in plain language, and the platform automatically generates the workflow structure - eliminating the need for manual node setup. This "prompt-to-build" feature saves significant time, especially for teams experimenting with multiple agent concepts.

With its model-agnostic design, Vellum enables seamless switching between LLMs to balance cost, speed, and quality without requiring a complete workflow rebuild. The platform supports advanced features like retrieval-augmented generation (RAG), semantic routing, and multi-step agent orchestration. It allows users to chain prompts, so the output from one model becomes the input for another, enabling complex reasoning pipelines. Built-in versioning and evaluation tools make it easy to test and refine prompt variations.
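The chaining idea is easy to see in plain Python. The sketch below is a generic illustration, not Vellum's SDK: `extract_facts` and `summarize` are hypothetical stubs standing in for two LLM calls, so it runs without any API key.

```python
from typing import Callable

# A step takes text and returns text. In a real pipeline each step would
# call an LLM; these two stubs are hypothetical placeholders.
Step = Callable[[str], str]


def extract_facts(text: str) -> str:
    # Stand-in for a first model that keeps the two leading sentences.
    sentences = [s.strip() for s in text.split(".") if s.strip()]
    return ". ".join(sentences[:2]) + "."


def summarize(text: str) -> str:
    # Stand-in for a second model that rewrites the extracted facts.
    return "Summary: " + text.lower()


def run_chain(steps: list, initial_input: str) -> str:
    """Pass each step's output to the next step, in order."""
    result = initial_input
    for step in steps:
        result = step(result)
    return result


report = "Invoices rose 12% in Q1. Churn fell. Support tickets doubled. Hiring paused."
print(run_chain([extract_facts, summarize], report))
# Summary: invoices rose 12% in q1. churn fell.
```

Swapping one step for a different model changes a single entry in the `steps` list, which is what makes model-agnostic chaining cheap to experiment with.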

Vellum is designed to bring technical and non-technical teams together. Product managers and operations staff can use its visual builder to create agents, while engineers can enhance workflows using custom logic with TypeScript or Python SDKs. For example, RelyHealth used Vellum to deploy hundreds of custom healthcare agents, cutting their deployment timeline by 100x. Max Bryan, VP of Technology and Design, shared:

"We accelerated our 9-month timeline by 2x and achieved bulletproof accuracy with our virtual assistant. Vellum has been instrumental in making our data actionable and reliable."

The platform’s strong governance features ensure that rapid deployment doesn’t compromise security.

Vellum provides enterprise-grade security with role-based access control (RBAC), SSO/SAML integration, and immutable audit logs that track every prompt and output. It complies with SOC 2, GDPR, HIPAA, and ISO 27001 standards. Deployment options include public cloud, private VPC, on-premise setups, and even air-gapped environments for industries with strict regulations. Human-in-the-loop approval gates allow sensitive actions to pause for review, ensuring accountability. Notably, 78% of operations leaders cite explainability and debugging tools as critical factors in their purchasing decisions.
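At its core, a human-in-the-loop gate checks whether an action is flagged as sensitive and, if so, waits for a reviewer's decision before executing it. The Python sketch below shows that control flow synchronously; `Action`, its `sensitive` flag, and `run_with_approval` are hypothetical names rather than Vellum APIs, and the `approve` callback stands in for whatever review channel a platform provides.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Action:
    name: str
    sensitive: bool  # hypothetical flag: does this action need human review?


def run_with_approval(actions: list, approve: Callable[[Action], bool]) -> list:
    """Run actions in order, pausing each sensitive one for a reviewer's decision.

    Rejected actions are logged and skipped rather than executed.
    """
    log = []
    for action in actions:
        if action.sensitive and not approve(action):
            log.append(f"rejected: {action.name}")
            continue
        log.append(f"executed: {action.name}")  # a real runner would perform the action here
    return log


plan = [Action("draft_reply", sensitive=False), Action("issue_refund", sensitive=True)]
print(run_with_approval(plan, approve=lambda action: False))
# ['executed: draft_reply', 'rejected: issue_refund']
```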

Pricing starts at $25/month for the Pro plan, $79/user/month for Business plans, and custom Enterprise pricing for unlimited credits and dedicated support.

Zapier connects AI tasks across more than 8,000 apps, handling over 23 million AI tasks monthly for over 1 million companies. Users can choose LLM providers such as OpenAI (GPT), Anthropic, Google (Gemini), and Azure OpenAI, or use built-in free options such as GPT-4o mini and Gemini 2.0 Flash. By replacing static workflows with dynamic, AI-driven decision-making, Zapier offers a more adaptive automation experience.

Zapier's Agents in Zaps feature lets AI decide autonomously how to reach a goal instead of following a rigid sequence of steps. These agents can search the web, draft messages, and update databases without manual intervention. The platform can also process images, audio, and video directly within workflows. Through its Model Context Protocol (MCP) connector, developers can connect external AI tools to Zapier's 8,000+ apps without complex API setup. For added control, human-in-the-loop approval steps, such as Slack-based reviews, let teams verify AI-generated actions before they are finalized, improving both accuracy and trust in automation.

Zapier demonstrates its value in scaling operations through real-world deployments, where AI automation drives efficiency and financial impact across a range of industries.

"Zapier makes our team of three seem like a team of ten." - Marcus Saito, Head of IT and AI Automation, Remote

Zapier adheres to SOC 2 Type II, GDPR, UK GDPR, and CCPA, with safeguards to ensure that third-party AI models do not train on customer data. Centralized governance tools include Domain Capture for full usage visibility, Application Controls to restrict specific AI integrations, and granular permissions at the team, app, and data levels. Security measures include Enterprise SSO (SAML 2.0), SCIM, two-factor authentication, IP allowlisting, and VPC peering for secure internal connectivity. Data is protected with strong encryption and secure transport protocols, and workflow changes remain transparent to administrators. Pricing starts at $19.99/month for Professional plans and $69/month for Team plans, with custom pricing for Enterprise plans that include unlimited users.

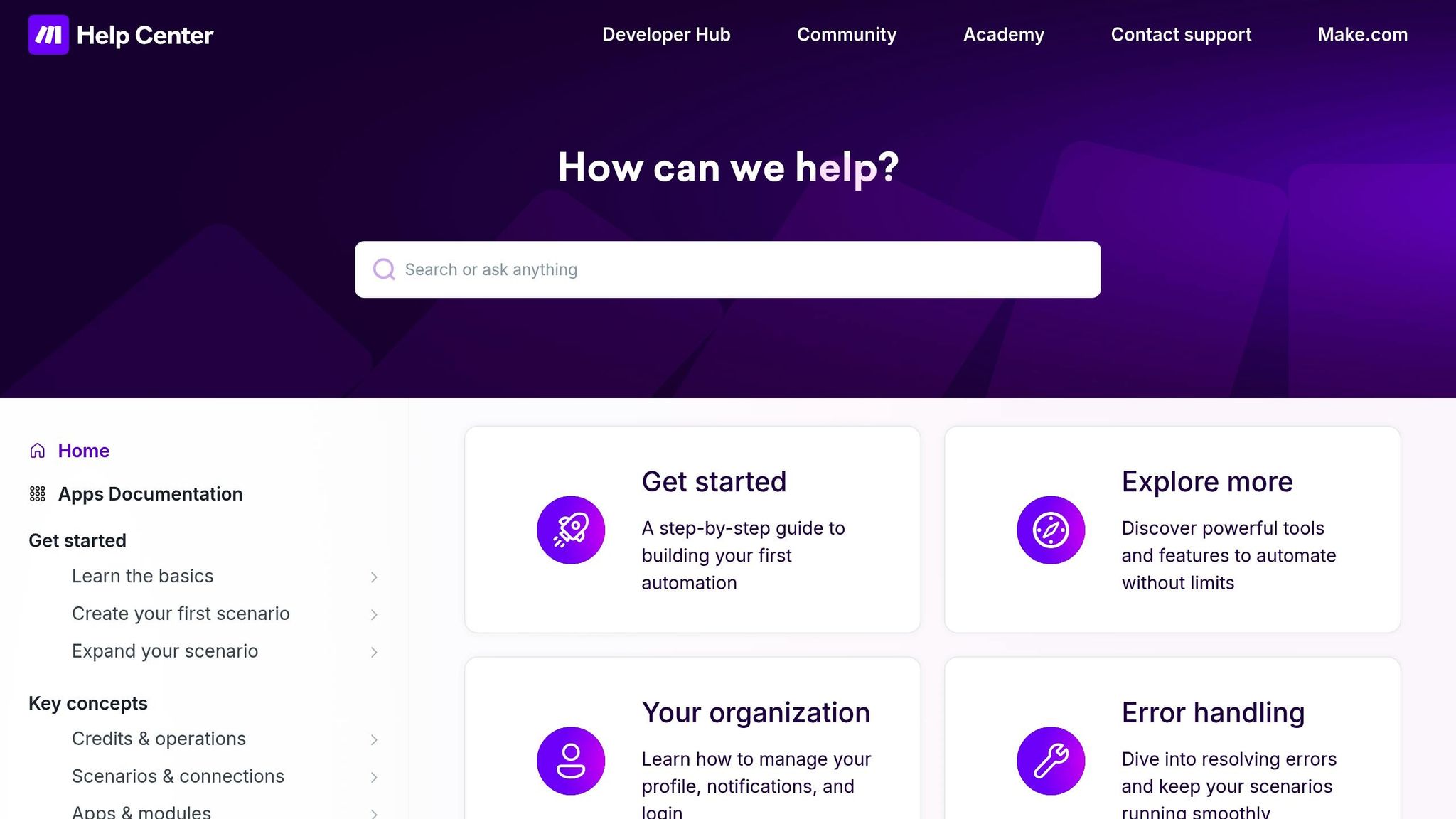

Make connects over 3,000 apps using a visual canvas that displays your automation workflows. Unlike traditional linear tools, Make employs scenario-based automation, which incorporates branching logic, filters, and flexible multi-path workflows. Its visual builder is especially adept at handling data transformations, thanks to features like iterators and aggregators. This makes it a strong choice for processing large volumes of data without requiring coding skills.

Make provides native modules for popular tools like OpenAI (ChatGPT, DALL-E, Whisper) and Google Gemini, while also supporting any OpenAI-compatible model through its HTTP module. In 2026, the platform introduced Make AI Agents - goal-driven automations that autonomously select tools to achieve defined objectives. These agents leverage existing Make scenarios to handle tasks without manual intervention. Additionally, Make functions as a Model Context Protocol (MCP) server, allowing external AI models, such as Claude, to securely execute Make scenarios as tools for real-world tasks.

Looking ahead, the Make Maia assistant will let users build complex workflows by describing them in natural language, so automations can be created conversationally with far less hands-on configuration.

Make uses an operations-based pricing model, charging per module execution instead of per completed workflow. The Free plan includes 1,000 operations per month and 2 active scenarios. Paid plans start at $9/month for the Core plan (10,000 operations, unlimited scenarios), followed by $16/month for Pro (which adds priority support) and $29/month for Teams (which adds multi-user capabilities). Additional operations cost approximately $1 per 1,000. This model can be highly economical for complex workflows with branching logic, because you pay only for the modules that actually run. However, AI modules may consume more than one operation (credit) per execution, depending on their complexity.
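Because Make bills per module execution, a rough monthly estimate is modules run per execution times executions per month, compared against the plan allowance. The sketch below uses the Core plan figures quoted above and assumes one operation per module, add-on operations bought in whole blocks of 1,000, and no extra charge for AI modules; all three are simplifying assumptions, and `estimate_make_cost` is a hypothetical helper.

```python
import math


def estimate_make_cost(
    modules_run_per_execution: int,
    executions_per_month: int,
    included_ops: int = 10_000,        # Core plan allowance quoted above
    base_price: float = 9.0,           # Core plan price quoted above (USD)
    price_per_1k_extra: float = 1.0,   # approximate add-on rate quoted above
) -> float:
    """Rough monthly cost if every module that runs uses one operation.

    Only modules on the branch actually taken count, which is why branching
    scenarios can be cheap. AI modules that use extra operations are ignored.
    """
    ops_used = modules_run_per_execution * executions_per_month
    extra_ops = max(0, ops_used - included_ops)
    # Assumption: extra operations are purchased in whole blocks of 1,000.
    extra_blocks = math.ceil(extra_ops / 1_000)
    return base_price + extra_blocks * price_per_1k_extra


# 5 modules x 3,000 runs = 15,000 operations, i.e. 5,000 over the allowance.
print(estimate_make_cost(5, 3_000))  # 14.0
```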

Make’s pricing model and operational framework support scalability, from simple automations to enterprise-level workflows. The platform ensures data integrity with ACID transactions and offers tools for bulk data processing. It boasts a 99.9% infrastructure availability rate and average response times under 200ms for standard integrations. Examples of its scalability in action include:

Teleclinic's Head of Operations shared, "Make really helped us to scale our operations, take the friction out of our processes, reduce costs, and relieved our support team."

Shop Accelerator Martech's COO remarked, "Make drives unprecedented efficiency within our business in ways we never imagined. It's having an extra employee (or 10) for a fraction of the cost."

The upcoming Make Grid feature, set to launch in 2026, will act as a "Command Center", providing a visual overview of an organization’s entire automation ecosystem. This tool will help teams identify bottlenecks and monitor workflow performance in real time.

Make adheres to SOC 2 Type II and GDPR, supports SCIM provisioning and Single Sign-On (SSO), and provides a connection manager that stores API keys centrally instead of embedding them in workflows. The "Scenario Run Replay" feature lets users replay failed executions with the original data, speeding up troubleshooting. Enterprise plans include advanced governance options and dedicated support, though some users note that Make's role-based access control (RBAC) is less granular than that of other enterprise solutions. To minimize risk, critical modules should have explicit error handlers configured so that API disruptions do not cause silent data loss. For organizations with strict compliance needs, forwarding execution data to an external logging service may be advisable, since Make retains native logs only for a limited period that depends on the plan.
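Make configures error handlers visually inside a scenario, so the following is not Make code. It is a Python sketch of the pattern the paragraph recommends: retry a failing call, and if it still fails, keep the original input so the run can be replayed rather than lost. `call_with_error_handler`, `flaky_api`, and the in-memory `dead_letter` list are hypothetical; a real setup would persist failures to external storage.

```python
import time
from typing import Any, Callable, Optional


def call_with_error_handler(
    step: Callable[[dict], Any],
    payload: dict,
    failed_runs: list,
    retries: int = 2,
    backoff_s: float = 0.0,
) -> Optional[Any]:
    """Run `step`, retrying on failure; keep the original payload if all tries fail.

    Storing the untouched input in `failed_runs` is what makes a later replay
    possible, instead of the record silently disappearing.
    """
    last_error: Optional[Exception] = None
    for attempt in range(retries + 1):
        try:
            return step(payload)
        except Exception as exc:  # real handlers should catch narrower errors
            last_error = exc
            if attempt < retries:
                time.sleep(backoff_s * (2 ** attempt))  # exponential backoff
    failed_runs.append({"payload": payload, "error": repr(last_error)})
    return None


def flaky_api(payload: dict) -> Any:
    raise ConnectionError("API unavailable")  # simulated outage


dead_letter: list = []
print(call_with_error_handler(flaky_api, {"order_id": 42}, dead_letter))  # None
print(dead_letter)
```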

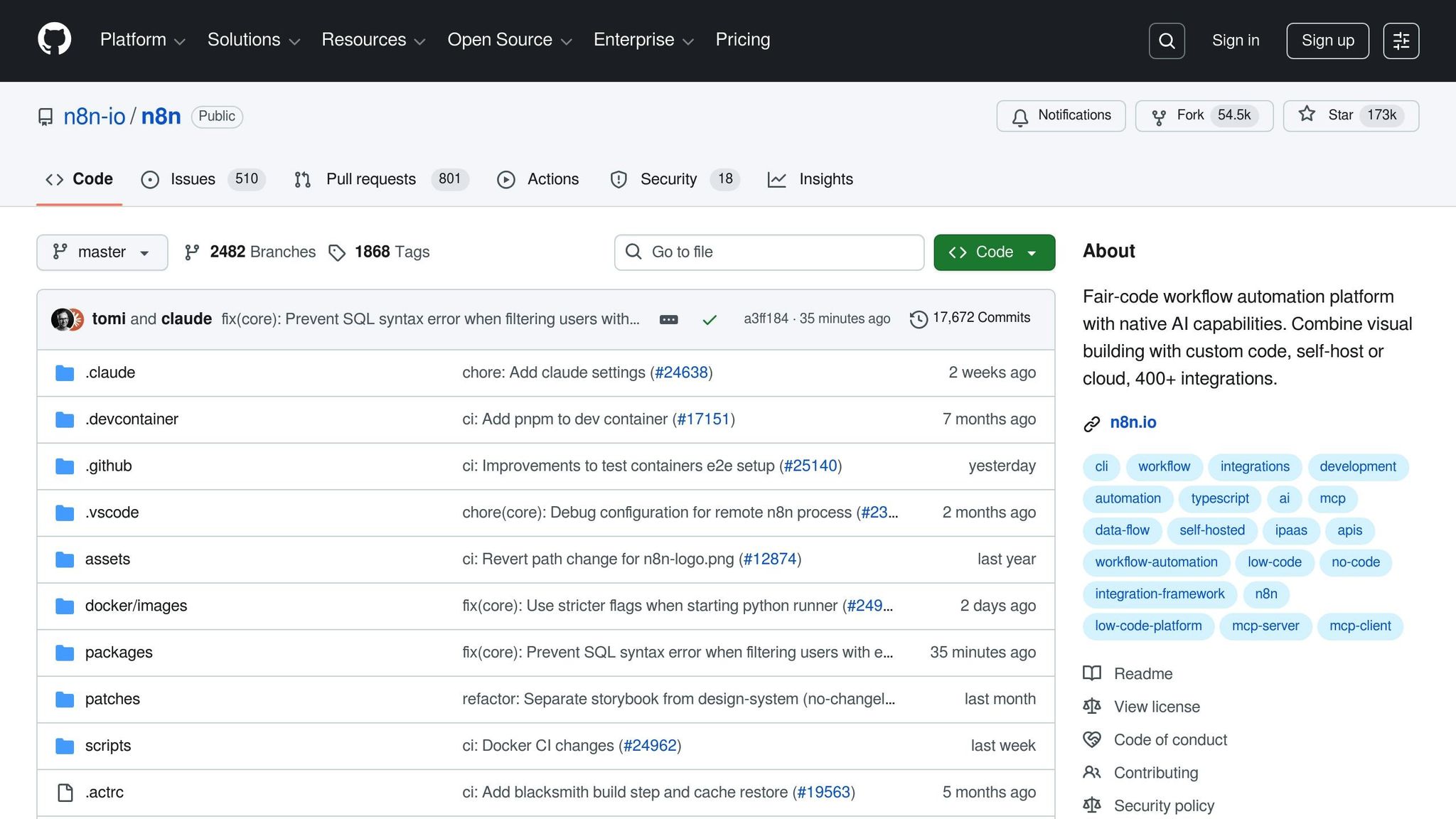

n8n stands out as a platform designed with developers in mind, offering both cloud-hosted and self-hosted options. It operates on an execution-based pricing model, where one workflow run equals one execution, no matter how many steps it includes. This makes it a practical choice for managing intricate, multi-step AI workflows without the higher costs often associated with task-based pricing.

n8n provides native LangChain integration, enabling users to create advanced multi-agent AI systems and RAG pipelines directly within its visual editor. With the AI Agent Node, standard LLMs can be turned into goal-driven agents capable of using tools, making decisions, and retaining memory across complex workflows. The platform supports a range of LLMs, including OpenAI, Anthropic (Claude), Google Gemini, DeepSeek, Groq, and Hugging Face, alongside vector database integrations such as pgvector, Pinecone, Chroma, Qdrant, and Weaviate. Its AI Workflow Builder can even convert natural language descriptions into functional workflows automatically. Chaining AI requests and routing intermediate steps to cheaper models can reportedly cut API costs by 30-50%.
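The cost effect of routing intermediate steps to a cheaper model can be estimated by summing tokens times price for each step. In the sketch below, the two price tiers and the token counts are hypothetical, so the resulting percentage illustrates the calculation rather than any real n8n or provider figure; actual savings depend on the price gap and on how tokens split across steps.

```python
# Hypothetical USD prices per 1M tokens; real provider prices differ and change.
PRICE_PER_M_TOKENS = {"premium": 10.0, "budget": 2.0}


def chain_cost(steps: list) -> float:
    """Total cost of a chain given (model_tier, tokens) pairs for each step."""
    return sum(PRICE_PER_M_TOKENS[tier] * tokens / 1_000_000 for tier, tokens in steps)


# Same three-step chain: extract, classify, then write the final answer.
all_premium = [("premium", 30_000), ("premium", 30_000), ("premium", 40_000)]
mixed = [("budget", 30_000), ("budget", 30_000), ("premium", 40_000)]

saving = 1 - chain_cost(mixed) / chain_cost(all_premium)
print(f"all premium: ${chain_cost(all_premium):.2f}, mixed: ${chain_cost(mixed):.2f}, saving: {saving:.0%}")
# all premium: $1.00, mixed: $0.52, saving: 48%
```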

n8n’s execution-based pricing ensures affordability for even the most complex workflows. Technical users can access the self-hosted Community Edition for free, with unlimited workflows and executions. For cloud-hosted options, the Cloud Starter plan begins at $20/month for 2,500 executions, while Cloud Pro offers 10,000 executions for $50/month. For businesses, the self-hosted Business plan costs $800/month, offering advanced features, while Enterprise pricing is customized for organizations requiring features like advanced RBAC, SSO, and dedicated support. By charging per workflow execution instead of per step, n8n can save organizations over $500 per month on high-volume workflows compared to task-based pricing models.
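The difference between execution-based and step-based billing comes down to simple arithmetic. The hypothetical helper below counts billable units under each style for the same workload; it ignores plan allowances and overage prices.

```python
def billable_units(runs: int, steps_per_run: int, pricing: str) -> int:
    """Units billed for a workflow under two common pricing styles.

    'per_execution': one unit per workflow run, however many steps it has.
    'per_step': one unit per step that runs (task- or operation-style billing).
    """
    if pricing == "per_execution":
        return runs
    if pricing == "per_step":
        return runs * steps_per_run
    raise ValueError(f"unknown pricing style: {pricing!r}")


# A 12-step AI workflow triggered 2,000 times a month.
print(billable_units(2_000, 12, "per_execution"))  # 2000
print(billable_units(2_000, 12, "per_step"))       # 24000
```

At 2,000 executions, this workload fits inside the 2,500-execution Cloud Starter allowance cited above, while the same runs would count as 24,000 billable units under per-step billing.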

n8n is built to handle up to 220 workflow executions per second per instance. Its Queue Mode leverages Redis to distribute execution across multiple worker processes, enabling horizontal scaling to meet increasing demands for fast and efficient AI workflows. For instance, in 2025, Dennis Zahrt, Director of Global IT Service Delivery at Delivery Hero, implemented a user management workflow that saved the company 200 hours each month. Similarly, Luka Pilic, Marketplace Tech Lead at StepStone, reported a dramatic improvement in efficiency, completing two weeks’ worth of API integration and data transformation work in just two hours.

"It takes me 2 hours max to connect up APIs and transform the data we need. You can't do this that fast in code." - Luka Pilic, Marketplace Tech Lead, StepStone

n8n prioritizes security by integrating with external secret managers such as AWS Secrets Manager, Azure Key Vault, Google Cloud Secret Manager, HashiCorp Vault, and Infisical, so sensitive credentials stay off the platform. When self-hosting via Docker or Kubernetes, all workflow data, credentials, and logs remain behind the organization's own firewall, which supports compliance with HIPAA, GDPR, and SOC 2 requirements. Enterprise users get advanced RBAC through "Projects", which restrict access to specific workflows and credentials by role. Git-based source control also lets teams promote workflows securely between development, staging, and production environments.
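In n8n's Queue Mode, mentioned above, Redis distributes executions across multiple worker processes. The sketch below reproduces only that distribution pattern inside one Python process: `queue.Queue` stands in for Redis and threads stand in for worker processes, so it illustrates the idea rather than n8n's implementation or configuration. `run_queue_mode` is a hypothetical name.

```python
import queue
import threading


def run_queue_mode(execution_ids: list, num_workers: int = 3) -> dict:
    """Spread executions across workers that pull from one shared queue.

    Stand-ins: queue.Queue plays the role of the Redis broker, and threads
    play the role of separate worker processes.
    """
    jobs: queue.Queue = queue.Queue()
    handled_by: dict = {}
    lock = threading.Lock()

    def worker(name: str) -> None:
        while True:
            job = jobs.get()
            if job is None:  # sentinel: this worker should stop
                break
            with lock:
                handled_by[job] = name  # a real worker would run the workflow here

    workers = [
        threading.Thread(target=worker, args=(f"worker-{i}",)) for i in range(num_workers)
    ]
    for w in workers:
        w.start()
    for execution_id in execution_ids:
        jobs.put(execution_id)
    for _ in workers:
        jobs.put(None)  # one stop signal per worker, queued after all jobs
    for w in workers:
        w.join()
    return handled_by


result = run_queue_mode(list(range(10)), num_workers=3)
print(sorted(result))  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
```

Adding capacity in this pattern means adding consumers of the same queue, which is the horizontal-scaling property the paragraph describes.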

Gumloop is a platform specifically designed to help teams leverage large language models (LLMs) with ease. At the heart of this system is Gummie, an AI assistant that converts natural language instructions into fully functioning workflows. This capability has proven invaluable for organizations. Fidji Simo, CEO of Instacart, shared, "Gumloop has been critical in helping all teams at Instacart - including those without technical skills - adopt AI and automate their workflows, which has greatly improved our operational efficiency."

Gumloop supports model-agnostic workflows, letting users switch between models like GPT-4o, Claude 3.7 Sonnet, DeepSeek V3 and R1, and Gemini 2.5 Pro within a single automation. Unlike many platforms that require users to manage API keys separately, Gumloop includes LLM costs in its subscription plans. The AI Router node assigns tasks to suitable models, while the Model Context Protocol (MCP) server connects to custom APIs, extending the platform beyond its 125+ built-in nodes. This setup keeps pricing predictable and integration simple.
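How Gumloop's AI Router decides internally is not described here; the sketch below uses plain keyword rules instead, so the routing logic stays easy to inspect. The routing table, keywords, and length threshold are hypothetical, and the model labels are illustrative rather than exact API model identifiers.

```python
# Hypothetical routing table; the labels are illustrative, not exact API model IDs.
ROUTES = {
    "code": "claude-3.7-sonnet",
    "summary": "gpt-4o",
    "long_document": "gemini-2.5-pro",
}
DEFAULT_MODEL = "gpt-4o"

# Hypothetical keyword rules, checked in insertion order.
KEYWORDS = {
    "code": ("bug", "function", "refactor"),
    "summary": ("summarize", "recap", "tl;dr"),
}


def route(task: str, doc_chars: int = 0, long_doc_threshold: int = 100_000) -> str:
    """Pick a model label for a task using simple, inspectable rules."""
    if doc_chars >= long_doc_threshold:
        return ROUTES["long_document"]  # very long inputs go to one model
    text = task.lower()
    for category, words in KEYWORDS.items():
        if any(word in text for word in words):
            return ROUTES[category]
    return DEFAULT_MODEL  # nothing matched


print(route("Fix the bug in this function"))              # claude-3.7-sonnet
print(route("Summarize yesterday's sales call"))          # gpt-4o
print(route("Review this contract", doc_chars=250_000))   # gemini-2.5-pro
```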

Gumloop's pricing structure is designed for flexibility and clarity. By bundling LLM API costs into its subscription plans rather than charging per task, Gumloop simplifies budgeting and makes it easier for organizations to scale their AI workflows without unexpected expenses.

Gumloop’s structure is designed to grow alongside organizations of any size. It uses a hierarchical approach, with Organizations providing centralized control and Workspaces enabling collaboration across departments like Sales, Marketing, and Operations. The platform also supports agentic workflows, where AI agents can assess tasks dynamically and trigger workflows based on context instead of following rigid sequences. Bryant Chou of Webflow remarked, "Gumloop wins time back across an org. It puts the tools into the hands of people who understand a task and lets them completely automate it away." Additionally, the platform’s ability to auto-scale and execute workflows in parallel ensures it can handle the demands of enterprise operations.

Gumloop prioritizes security and compliance to meet organizational needs. It adheres to SOC 2 and GDPR standards and includes tools for enterprise-grade control. Administrators can enforce AI model allow/deny lists, supporting compliance with regulations like HIPAA by restricting certain models or providers. Incognito Mode enhances privacy by erasing all inputs, outputs, and intermediate results in real time. Organization-level credentials, which override personal or workspace keys, enforce centralized security policies and prevent unauthorized access. For organizations requiring maximum security, VPC deployments allow Gumloop to run within their own cloud infrastructure. Comprehensive audit logging tracks every action on the platform, offering a transparent view of data flow and administrative changes through a dashboard or API.
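The two controls above, model allow/deny lists and organization-level credentials that override lower-level keys, amount to simple precedence rules. The sketch below encodes them under stated assumptions: the deny list wins over the allow list, an empty allow list permits every non-denied model, and workspace keys take precedence over personal keys (the text only says org-level keys override both). Both function names are hypothetical.

```python
from typing import Optional


def resolve_credential(org_key: Optional[str], workspace_key: Optional[str],
                       personal_key: Optional[str]) -> Optional[str]:
    """Return the key a run should use: org-level first, then workspace, then personal."""
    for key in (org_key, workspace_key, personal_key):
        if key:
            return key
    return None


def model_allowed(model: str, allow: set, deny: set) -> bool:
    """Deny list wins; an empty allow list means every non-denied model is allowed."""
    if model in deny:
        return False
    return not allow or model in allow


print(resolve_credential("org-key", "ws-key", "my-key"))                 # org-key
print(resolve_credential(None, "ws-key", "my-key"))                      # ws-key
print(model_allowed("deepseek-r1", allow=set(), deny={"deepseek-r1"}))  # False
```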

Lindy.ai stands out in the automation landscape by creating AI agents that think and adapt instead of following rigid, pre-set scripts. With its innovative "vibe coding" approach, users can describe an agent's behavior in plain English, and the platform quickly transforms that into a production-ready solution - sometimes in as little as 5 minutes. This allows businesses to reduce repetitive tasks by deploying agents equipped with memory and adaptability.

The platform leverages GPT and Claude to power agents capable of analyzing text, generating outputs, and understanding user intent. Unlike traditional automation tools that falter when faced with shifting contexts, Lindy.ai agents use memory to navigate complex scenarios, including edge cases that often require human input. With support for over 3,000 native integrations - and up to 7,000+ in certain configurations - Lindy.ai connects seamlessly with CRM systems, email platforms, and calendar tools. CEO Flo Crivello highlights this capability:

"AI-powered workflows can handle those edge cases and adapt to keep the automation going."

Its Autopilot feature enhances functionality by providing agents with their own cloud-based computers, enabling them to execute tasks beyond typical API integrations. Additionally, multi-agent coordination allows specialized agents to collaborate on intricate business processes, bridging the gap between automation and real-time decision-making.

Lindy.ai uses a task-based pricing model that charges for task execution rather than per user, which can make it an economical choice for teams with high usage demands.

Additional charges include AI phone calls at 20 credits per minute and AI phone numbers at $10 per number per month, offering flexibility for specialized tasks.

Lindy.ai is designed to scale effortlessly, accommodating both individual users and large enterprises. Team Accounts allow secure sharing and deployment of AI agents across departments, while the Agent Swarms feature enables a single agent to clone itself and perform hundreds of tasks simultaneously, such as managing personalized outreach campaigns. For workflows requiring precision, the Human-in-the-Loop (HITL) feature lets AI handle the majority of tasks but pauses for human review at critical points, making it ideal for sensitive operations in areas like finance, HR, or customer support.

Lindy.ai prioritizes security and compliance, meeting standards such as SOC 2, HIPAA, GDPR, and PIPEDA. The platform includes a Trust Center and secret management tools to address enterprise-level security requirements. Through Team Accounts, organizations can configure permissions and controls so agents operate safely and predictably. Lindy.ai also targets regulated industries like healthcare and finance; for healthcare providers, it offers a HIPAA-compliant notetaker tailored to medical documentation.

StackAI provides a no-code platform that bridges large language models (LLMs) with enterprise data through an intuitive 2D visual canvas. Business teams can easily create workflows using drag-and-drop tools, while developers have the flexibility to enhance these workflows with Python nodes for deeper customization. In 2025, Stefan Galluppi, LifeMD's CIO, used StackAI to simplify intricate AI workflows, showcasing the platform's effectiveness.

This user-friendly design supports seamless integration with leading LLMs.

StackAI natively supports leading LLMs from OpenAI, Anthropic (Claude), Mistral, Google, Meta, and Azure OpenAI, as well as local models via endpoints. Its AI-Native Routing adds logic nodes for if/else decisions and dynamic prompting, so tasks can be routed automatically based on LLM outputs. A built-in Knowledge Base node simplifies Retrieval-Augmented Generation (RAG) by handling chunking, embedding, indexing, and retrieval with citations, which StackAI says covers 90% of RAG use cases out of the box.
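StackAI's Knowledge Base node performs these steps internally. As a toy illustration of the chunk, embed, index, and retrieve sequence, the sketch below splits text into overlapping word windows, uses word counts in place of learned embeddings, and ranks chunks by cosine similarity, returning each chunk's index as a stand-in for a citation. Every function here is hypothetical and deliberately simplified.

```python
import math
from collections import Counter


def chunk(text: str, size: int = 8, overlap: int = 2) -> list:
    """Split text into windows of `size` words that overlap by `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    words = text.split()
    step = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def embed(text: str) -> Counter:
    # Toy "embedding": lower-cased word counts. Real pipelines use a learned model.
    return Counter(w.strip(".,?!").lower() for w in text.split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list, k: int = 1) -> list:
    """Return the top-k (chunk_index, chunk_text) pairs; the index acts as the citation."""
    q = embed(query)
    ranked = sorted(enumerate(chunks), key=lambda pair: cosine(q, embed(pair[1])), reverse=True)
    return ranked[:k]


policy = ("Refunds are processed within five business days. Shipping is free for "
          "orders over fifty dollars. Returns require the original receipt.")
index = chunk(policy)
print(retrieve("Is shipping free?", index))
# [(1, 'days. Shipping is free for orders over fifty')]
```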

The platform also excels at processing unstructured data, offering tools for PDF parsing, web scraping, and OCR. This transforms raw data into structured insights that can be deployed as chatbots, web forms, batch processors for bulk tasks, or REST APIs for backend integration. For example, at the MIT Martin Trust Center, Doug Williams, Gen AI Lead, created AI assistants for students in just weeks, revolutionizing their learning experience without requiring coding skills. Similarly, Brian Hayt, Director of BI at Noblereach, reduced the time needed for generating fully cited reports for grants and competitive research from a week to just five minutes.

StackAI offers a clear and flexible pricing structure with three tiers: a Free Plan for early-stage prototyping, a Usage-Based SaaS model for scaling operations, and an Enterprise Custom plan tailored for large-scale deployments, including private cloud or on-premise options.

This flexible pricing ensures that StackAI can support users at every stage, from small-scale prototypes to enterprise-level applications.

The platform adapts to both individual and enterprise needs, featuring over 100 pre-built templates for quick deployment. Enterprise-grade tools include SSO, password protection, custom domains, and centralized analytics to monitor token usage and performance. Developers can expand workflows with custom code or API nodes, while non-technical users benefit from the straightforward visual builder. Guillermo Rauch, CEO of Vercel, highlights its accessibility:

"StackAI makes the promise of AI agents real. For everyone, at scale, with just point and click."

This balance of simplicity and advanced functionality makes StackAI a powerful tool for organizations of all sizes.

StackAI prioritizes security with an 8-Layer Governance Model that includes RBAC, folder-level controls, project versioning, SSO, encrypted connections, production analytics, and MFA. The platform complies with SOC 2 Type II, HIPAA, and GDPR standards, with ISO 27001 certification in progress. Features like PII detection and redaction, data retention policies, and a strict commitment to not using user data for model training under enterprise contracts ensure data privacy. For industries with stringent data residency requirements, such as defense and finance, StackAI supports on-premise and private cloud deployments. Additionally, manual approval workflows ensure that no AI agent goes live without proper review.

This comprehensive approach to governance and security makes StackAI a trusted choice for organizations with high compliance and privacy standards.

Pipedream is a platform designed with developers in mind, blending serverless infrastructure with AI-driven workflow automation. It’s trusted by over 1,000,000 developers, from small startups to Fortune 500 companies, handling billions of events and supporting data pipelines that process over 10,000,000 events per second. After being acquired by Workday in December 2025, Pipedream has strengthened its focus on enterprise-level stability and governance, giving developers more control and flexibility in automation. This foundation supports its advanced AI integration capabilities.

The platform’s code-centric design is a key feature, allowing developers to write custom Node.js, Python, Go, or Bash code directly within workflows. It also supports the use of any npm or PyPI package in a serverless environment. This flexibility makes it easier for developers to prototype quickly without the limitations often found in no-code platforms.

Pipedream incorporates AI tools such as "Create with AI," "Edit with AI," and "Debug with AI," powered by String.com, to help developers build, modify, and troubleshoot workflows using natural language prompts and automated error fixes.

The platform supports the Model Context Protocol (MCP), which includes a Docs MCP Server. This feature enables AI assistants like Claude and development environments such as VSCode to search Pipedream documentation and generate precise integration code. Pre-built actions are available for popular LLMs like OpenAI and Claude, handling tasks such as text classification, summarization, and embeddings. Developers can also write their own code to interact with any LLM API using Pipedream’s serverless environment.

Pipedream uses a credit-based pricing structure where 1 credit equals 30 seconds of compute time.

For enterprises, custom pricing is available with additional features like HIPAA compliance and audit logs.
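With 1 credit equal to 30 seconds of compute, monthly usage can be estimated per invocation. The sketch below assumes each invocation is rounded up to whole 30-second blocks with a one-credit minimum and ignores memory settings; those are assumptions rather than confirmed billing rules, and both function names are hypothetical.

```python
import math

SECONDS_PER_CREDIT = 30  # 1 credit = 30 seconds of compute, as stated above


def credits_for_run(duration_s: float) -> int:
    """Credits for one invocation.

    Assumptions: time is rounded up to whole 30-second blocks, every run
    costs at least one credit, and memory-based multipliers are ignored.
    """
    return max(1, math.ceil(duration_s / SECONDS_PER_CREDIT))


def monthly_credits(durations_s: list) -> int:
    return sum(credits_for_run(d) for d in durations_s)


# 1,000 quick 2-second runs plus 10 longer 95-second runs.
print(monthly_credits([2.0] * 1_000 + [95.0] * 10))  # 1040
```

Under these assumptions, many short runs dominate the bill, so batching work into fewer invocations is one lever for reducing credit use.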

Pipedream is built for scalability, offering features such as adjustable memory, custom timeouts, rate limits, and cold start management. It supports event-driven patterns and real-time triggers, making it ideal for high-volume data processing and backend automation. With over 3,000 app integrations and built-in authentication management, teams can create complex workflows without worrying about manual credential handling.

For developer teams, the platform provides tools like GitHub Sync, VS Code integration, and MCP server deployment. Step Notes allow developers to add markdown-based explanations directly to workflow steps, improving team communication and documentation.

Pipedream prioritizes governance and security alongside performance. The platform is SOC 2 Type II certified, HIPAA compliant, and meets GDPR standards, and it offers a Data Processing Agreement (DPA) for managing sensitive data.

For enterprise users, additional options like SAML SSO, SCIM for identity management, and two-factor authentication (2FA) are available. Audit logging is included in the Enterprise tier, providing insights into workflow executions and changes. Additionally, source-available triggers and actions enhance transparency for security reviews.

Workato is a platform designed to handle complex, cross-departmental processes for enterprises. It’s trusted by over 2,000 brands, including Broadcom, Zendesk, and Atlassian, to manage workflows that require high uptime and strict governance.

The platform features the Workato Agentic & Genie Framework, which applies AI-driven automation well beyond basic chatbot capabilities. Its specialized AI agents, called "Genies", can retrieve data, trigger actions across more than 8,500 apps, and manage dynamic processes. The AI Connector, developed in collaboration with Anthropic and OpenAI, uses models such as Anthropic's Claude Sonnet 4 and OpenAI's GPT-4o mini for tasks like text analysis, email drafting, summarization, and translation.

At the heart of Workato’s AI capabilities is Workato Genie, which uses generative AI to interpret user intent, incorporate real-time enterprise data, and execute tasks autonomously. Bhaskar Roy, Chief of AI Products & Solutions at Workato, highlights its impact:

"With Workato Agentic, businesses can now rapidly build and manage AI Agents with low-code/no-code to supercharge productivity and drive operational efficiency throughout their organization."

Workato also includes AIRO (AI Orchestrator), an AI-powered assistant that helps users create and refine workflows using natural language. Pre-configured AI actions offer built-in LLM capabilities, while the Agent Studio routes sensitive decisions to humans via Slack or Microsoft Teams for approval. For customer workspaces, AI connector actions are limited to 60 requests per minute.
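Workato enforces the 60-requests-per-minute cap on its side. A common way for calling code to stay under a cap like this is a sliding-window log limiter, sketched below; it is a generic technique rather than a Workato feature, and the class name and injectable `clock` parameter are illustrative choices that make the behavior testable.

```python
import time
from collections import deque
from typing import Callable


class SlidingWindowLimiter:
    """Allow at most `limit` calls in any rolling window of `window_s` seconds."""

    def __init__(self, limit: int = 60, window_s: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = limit
        self.window_s = window_s
        self.clock = clock            # injectable so tests can fake time
        self.calls: deque = deque()   # timestamps of recently allowed calls

    def try_acquire(self) -> bool:
        """Record and allow a call if under the limit; otherwise refuse it."""
        now = self.clock()
        while self.calls and now - self.calls[0] >= self.window_s:
            self.calls.popleft()      # forget calls that left the window
        if len(self.calls) < self.limit:
            self.calls.append(now)
            return True
        return False


limiter = SlidingWindowLimiter(limit=60, window_s=60.0)
allowed = sum(limiter.try_acquire() for _ in range(75))
print(allowed)  # 60: the last 15 calls in this burst are refused
```

A caller that receives `False` can wait briefly and retry, or queue the request, instead of hitting a rate-limit error.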

The platform offers pre-built applications known as Genie Apps, such as ITGenie for handling helpdesk tickets and SalesGenie to boost sales productivity. These Genies adapt based on user interactions, ensuring that learning remains specific to the customer’s environment to protect data privacy.

Workato's AI integration is paired with the ability to scale effectively across large organizations.

Workato’s workflows are designed to scale across enterprises and mid-sized businesses, connecting with over 8,500 apps, databases, and ERP systems. With orchestration patterns capable of managing more than 850 billion actions, the platform also supports Data Pipelines for high-volume replication to data warehouses like Snowflake and Databricks. These pipelines use parallel execution and automatic schema updates to handle large datasets efficiently.

Decision Models simplify complex business logic, such as dynamic pricing or lead scoring, by storing rules in centralized tables outside individual workflows. This approach makes auditing and updates straightforward. Meanwhile, Data Tables - an upgrade from Lookup Tables - store significantly larger datasets with advanced search functionality.
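Workato configures Decision Models in its own interface; the Python sketch below only illustrates the underlying idea of keeping rules in one central table that any workflow can evaluate, so changing a threshold means editing one row instead of every recipe. The attributes, thresholds, and points are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    attribute: str    # lead attribute the rule checks
    min_value: float  # threshold the attribute must meet or exceed
    points: int       # score added when the threshold is met


# Hypothetical lead-scoring table, kept in one place instead of inside each workflow.
LEAD_SCORING_RULES = [
    Rule("employees", 500, 30),
    Rule("monthly_visits", 1_000, 20),
    Rule("demo_requests", 1, 50),
]


def score_lead(lead: dict, rules: list = LEAD_SCORING_RULES) -> int:
    """Sum the points of every rule whose threshold the lead meets."""
    return sum(r.points for r in rules if lead.get(r.attribute, 0) >= r.min_value)


print(score_lead({"employees": 800, "monthly_visits": 300, "demo_requests": 1}))  # 80
```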

Workato's global infrastructure supports local data residency with data centers in the EU, Australia, and Singapore. The platform is available in eight languages, including Japanese, Korean, and Spanish, and maintains a 99.9% uptime SLA for critical operations. It has been named a Leader in the Gartner® Magic Quadrant™ for iPaaS seven times and, as of 2025, has twice been positioned furthest for Completeness of Vision.

To complement its scalability, Workato prioritizes governance and security.

Workato’s Enterprise MCP Gateway serves as a secure control layer between AI tools and business systems, enforcing policies and ensuring visibility during runtime. AI agents operate under user-specific OAuth credentials, limiting access to authorized data. Additional safeguards include PII masking and de-identification within workflows.

Administrators can define "trusted skills", pre-approved recipes or connectors that agents are allowed to use, which prevents unauthorized API calls. RBAC 2.0 provides precise project-level permissions without unnecessary role expansion. The platform also includes advanced security measures such as BYOK encryption, hourly key rotation, and container isolation with mTLS. Its certifications and compliance coverage include SOC 2 Type II, ISO 27001, PCI DSS, and GDPR.

Real-time audit trails and human-in-the-loop approvals ensure accountability for all agent actions. The Reviews and Approvals feature streamlines recipe deployment across development, testing, and production environments. One organization reported processing employee relocation requests 98% faster using Workato.

Workato follows an enterprise-focused pricing model, with no publicly available rates. Pricing is tailored based on factors like the number of tasks, advanced connector usage, and the number of users. Small businesses typically spend $500 to $3,000 per month, mid-market companies $3,000 to $10,000, and large enterprises often exceed $10,000 monthly for custom integrations and support. The platform is designed for organizations that require advanced governance and complex process management.

AI Workflow Platform Comparison: Features, Pricing & Target Audience 2026

Choosing the right AI workflow platform depends on your team’s size, technical expertise, budget, and governance requirements. To simplify the decision-making process, the table below provides a side-by-side comparison of key features across various platforms.

| Platform | Target Audience | Deployment | Pricing Model | LLM Integration | Key Strength |
|---|---|---|---|---|---|
| Prompts.ai | Small to Enterprise | Cloud | Pay-as-you-go (TOKN credits) | 35+ models (GPT-5, Claude, LLaMA, Gemini) | FinOps cost tracking; up to 98% cost savings; extensive prompt workflow library |
| Zapier | Small to Enterprise | Cloud | Task-based (starts at $19.99/mo) | Natural language features; 8,000+ app integrations | User-friendly for non-technical users; largest integration ecosystem |
| Make | Small to Mid-market | Cloud | Credit-per-step (starts at $9/mo) | Visual scenario builder; Maia AI (2026) | Handles complex logic with a visual interface; data transformation capabilities |
| n8n | Mid-market to Enterprise | Cloud, Self-hosted | Execution-based (starts at $20/mo; free when self-hosted) | Code fallback (JavaScript/Python); LangSmith integration | Cost predictability; technical flexibility; unlimited steps per execution |
| Gumloop | Freelancers/Small Biz | Cloud | Credit-based (starts at $30/mo) | Drag-and-drop AI nodes | Ideal for specific AI tasks and rapid prototyping |
| Lindy.ai | Freelancers/Small Biz | Cloud | Credit-based (starts at $39.99/mo) | Conversational AI for business tasks | Automates emails and meetings; lightweight setup |
| Pipedream | Developers | Cloud | Usage-based | Code-first automation (Node.js/Python) | Focused on engineering-led workflows; event-driven architecture |
| Workato | Large Enterprise | Cloud | Custom enterprise pricing (contact sales) | Enterprise-grade automation | Advanced governance; RBAC; SOC 2 compliance; 99.9% uptime SLA |

This comparison highlights how each platform caters to specific business needs, balancing pricing models and deployment flexibility. For example, n8n offers predictable costs and flexibility with its free self-hosted option, making it appealing for technical teams. In contrast, platforms like Zapier prioritize ease of use for non-technical users but can become costly with high task volumes.

Data privacy is a key factor when weighing deployment options. Among the platforms compared here, n8n is the only one that supports self-hosting, giving teams full control over their infrastructure. Enterprise solutions like Workato instead emphasize advanced governance and compliance, which suits sensitive, high-volume workflows. These distinctions help businesses match platform capabilities to their operational priorities.

Choosing the right AI workflow platform in 2026 requires aligning your team's technical skills with your organization’s scale and compliance needs. For non-technical teams, tools like Zapier or Lindy.ai offer intuitive, prompt-based environments. On the other hand, developer-heavy organizations often prefer platforms like n8n or Pipedream, which provide greater customization through code extensions. A key differentiator among these platforms is how they manage workflow maintenance, a theme that has been explored throughout this guide.

The evolution from basic automation to autonomous AI workflows introduces new demands - handling semantic decision-making, retrieval-augmented generation, and multi-agent orchestration. As Nicolas Zeeb puts it:

> The path forward in 2026 is making a huge leap from being a AI automation dev-only discipline to a team sport.

This shift favors platforms that blend technical flexibility with governance features, so engineers and subject matter experts can collaborate effectively. It also puts a premium on performance metrics and operational transparency.

Production-ready platforms stand out through features like real-time logging, version control, and clear pricing structures. With 95% of generative AI pilots failing to move into production due to infrastructure limitations, tools that support observability and cost predictability are essential. Many organizations achieve production-level results within 2 to 6 weeks by starting with focused pilots, incorporating semantic routing early on, and introducing regression testing by the third week.
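In this context, regression testing usually means rerunning a fixed set of "golden" inputs after every prompt or model change and flagging any output that drifts. A minimal Python sketch follows. `classify_ticket` is a hypothetical keyword-based stand-in for an LLM-backed workflow step, and the golden cases are invented for illustration.

```python
# Hypothetical stand-in for an LLM-backed step; in practice this would call a model.
def classify_ticket(text: str) -> str:
    lowered = text.lower()
    if "refund" in lowered or "charge" in lowered:
        return "billing"
    if "password" in lowered or "login" in lowered:
        return "account"
    return "general"

# Golden cases captured during the pilot; rerun them after every prompt or model change.
GOLDEN_CASES = [
    ("I was charged twice this month", "billing"),
    ("Can't login after the update", "account"),
    ("Do you have an office in Berlin?", "general"),
]

def run_regression(step, cases):
    """Return (input, expected, actual) for every case whose output no longer matches."""
    failures = []
    for text, expected in cases:
        actual = step(text)
        if actual != expected:
            failures.append((text, expected, actual))
    return failures

print(run_regression(classify_ticket, GOLDEN_CASES))  # []
```

Because real model outputs vary from run to run, comparing constrained outputs such as labels or routing decisions, as here, is usually more stable than comparing free-form text.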

Beyond technical capabilities, regulatory and security compliance are crucial for enterprise adoption. Features like role-based access control (RBAC), SOC 2 compliance, audit trails, and flexible deployment options ensure platforms can scale while meeting regulatory requirements. As highlighted earlier, platforms such as Prompts.ai and Workato effectively integrate these controls to address diverse enterprise needs.

While AI agents could contribute $450 billion in value by 2028, only 2% of organizations have fully embraced them. The platforms discussed in this guide provide the foundation to bridge this gap, transforming isolated AI experiments into scalable, measurable workflows that drive productivity forward.

AI workflow platforms in 2026 bring substantial advantages to businesses and professionals alike. They simplify the automation of complex, multi-step tasks, improve workflow efficiency, and centralize the management of multiple AI models. Together, these capabilities can significantly reduce costs and, in some cases, boost productivity up to tenfold.

Beyond efficiency, these platforms tackle pressing issues such as compliance, security, and the challenge of integrating multiple tools. By unifying AI models and automating governance processes, they ensure smooth operations. They also integrate effortlessly with advanced large language models, enabling organizations to scale their operations effectively while maintaining control and oversight of their AI workflows. This combination of efficiency and oversight makes these platforms essential for driving productivity and maintaining high operational standards.

Prompts.ai helps businesses cut expenses while keeping spending transparent, combining real-time cost tracking with a flexible pay-as-you-go pricing model. Companies can monitor spending closely and pay only for the resources they actually use, which keeps costs predictable and adaptable.
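Usage-based billing comes down to simple arithmetic. Multiply tokens consumed by a per-model rate, then sum the results per team or project. The Python sketch below shows a minimal ledger of that kind. The model names and rates are hypothetical placeholders, not actual TOKN credit prices, and the sketch is not Prompts.ai's implementation.

```python
from collections import defaultdict

# Hypothetical rates in credits per 1,000 tokens; real pricing varies by model.
RATES_PER_1K = {
    "model-a": {"input": 0.5, "output": 1.5},
    "model-b": {"input": 3.0, "output": 15.0},
}

class UsageLedger:
    """Minimal pay-as-you-go tracker: record each call, then report spend by team."""

    def __init__(self, rates):
        self.rates = rates
        self.spend = defaultdict(float)  # team -> credits consumed so far

    def record(self, team, model, input_tokens, output_tokens):
        """Charge one model call to a team and return its cost in credits."""
        rate = self.rates[model]
        cost = (input_tokens * rate["input"] + output_tokens * rate["output"]) / 1000
        self.spend[team] += cost
        return cost

ledger = UsageLedger(RATES_PER_1K)
ledger.record("support", "model-a", input_tokens=2000, output_tokens=1000)   # 1.0 + 1.5 = 2.5
ledger.record("marketing", "model-b", input_tokens=1000, output_tokens=500)  # 3.0 + 7.5 = 10.5
print(dict(ledger.spend))  # {'support': 2.5, 'marketing': 10.5}
```

Input and output tokens are priced separately because many model providers charge different rates for each.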

Prompts.ai also emphasizes security and compliance, supporting SOC 2 Type II and HIPAA compliance. These measures help lower the risk of costly regulatory setbacks. By combining effective cost management with strong compliance safeguards, Prompts.ai helps organizations streamline their workflows without facing unexpected financial hurdles.

AI workflow platforms prioritize strong security protocols to protect sensitive information and meet industry regulations. Key measures include encryption for safeguarding data both in transit and at rest, role-based access controls to manage user permissions, and compliance with frameworks like SOC 2 and GDPR.

Many platforms also provide advanced features such as real-time threat detection, secure key management, and certifications that validate their focus on security. These tools help reduce risks like unauthorized access, data breaches, and the theft of AI models, allowing businesses to confidently manage AI-powered workflows.