Choosing the right language model evaluation tool can save time, cut costs, and improve efficiency. Whether you are managing AI workflows, comparing models, or optimizing budgets, picking the right tools is essential. Here is a quick overview of four leading options:

Quick comparison

Each tool offers distinct advantages depending on your technical expertise and workflow needs. Read on to see how these tools can fit into your AI strategy.

AI language model evaluation tools comparison chart

Prompts.ai brings together access to more than 35 top-tier language models in a single streamlined workspace. These include OpenAI's GPT-4o and GPT-5, Anthropic's Claude, Google's Gemini, Meta's Llama, and Perplexity's Sonar. With a single click, teams can switch between models and run direct comparisons. For example, running the same prompt across multiple models lets users judge which one delivers the best tone, fewer errors, or faster responses for tasks such as customer support or content creation. Imagine a US-based SaaS startup testing GPT-4o, Claude 4, and Gemini 2.5 for support workflows. It can quickly identify the model that strikes the right balance between quality, API reliability, and data residency, all while avoiding vendor lock-in.

Prompts.ai goes beyond access by offering detailed performance tracking. The platform monitors response quality, latency, and error rates for each model when identical prompt sets are used. It also supports hands-on testing through reusable prompt libraries, A/B testing, and standardized results that integrate with custom metrics. For example, a US e-commerce company built a test suite of 200 prompts covering return-policy questions, shipping calculations in US units with MM/DD/YYYY dates, and tone-sensitive answers. By running these tests monthly across different models, it tracks metrics such as human ratings (1-5), compliance with company policies, and average tokens per response. This helps the team choose the best-performing model as the default each quarter.
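The per-model metrics described above (human rating, policy compliance, average tokens per response) can be aggregated with a few lines of code. The following is a minimal sketch, not Prompts.ai's implementation: the record layout, the model names, and the tie-breaking rule (compliance first, then rating) are all assumptions made for illustration.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical evaluation records: one entry per (model, prompt) run.
results = [
    {"model": "model-a", "human_rating": 4, "policy_compliant": True, "tokens": 120},
    {"model": "model-a", "human_rating": 5, "policy_compliant": True, "tokens": 100},
    {"model": "model-b", "human_rating": 3, "policy_compliant": False, "tokens": 80},
    {"model": "model-b", "human_rating": 4, "policy_compliant": True, "tokens": 60},
]


def summarize(records):
    """Group records by model and compute the three tracked metrics."""
    by_model = defaultdict(list)
    for record in records:
        by_model[record["model"]].append(record)

    summary = {}
    for model, rows in by_model.items():
        summary[model] = {
            "avg_rating": mean(r["human_rating"] for r in rows),
            "compliance_rate": sum(r["policy_compliant"] for r in rows) / len(rows),
            "avg_tokens": mean(r["tokens"] for r in rows),
        }
    return summary


summary = summarize(results)

# Assumed selection rule: prefer higher compliance, then higher rating.
best = max(
    summary,
    key=lambda m: (summary[m]["compliance_rate"], summary[m]["avg_rating"]),
)
print(best, summary[best])
```

A real test suite would likely weight these metrics differently, for example by treating any compliance failure as disqualifying.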

Prompts.ai simplifies cost management by letting teams switch quickly between models and vendors, which makes it easy to try more affordable options. For example, teams can compare smaller, cheaper models such as Google Gemini with premium models such as GPT-5 or Claude 4, weighing quality differences against cost. The platform records average tokens per output and allows direct comparison of token prices in US dollars (for example, per 1,000 or 1,000,000 tokens), which helps teams estimate per-request costs and monthly spend. In one example, a US agency found a mid-tier model that cut costs by 40% per blog post without sacrificing quality. Prompts.ai claims to reduce AI costs by up to 98% through unified access and resource pooling, in line with US budgets and operating standards.

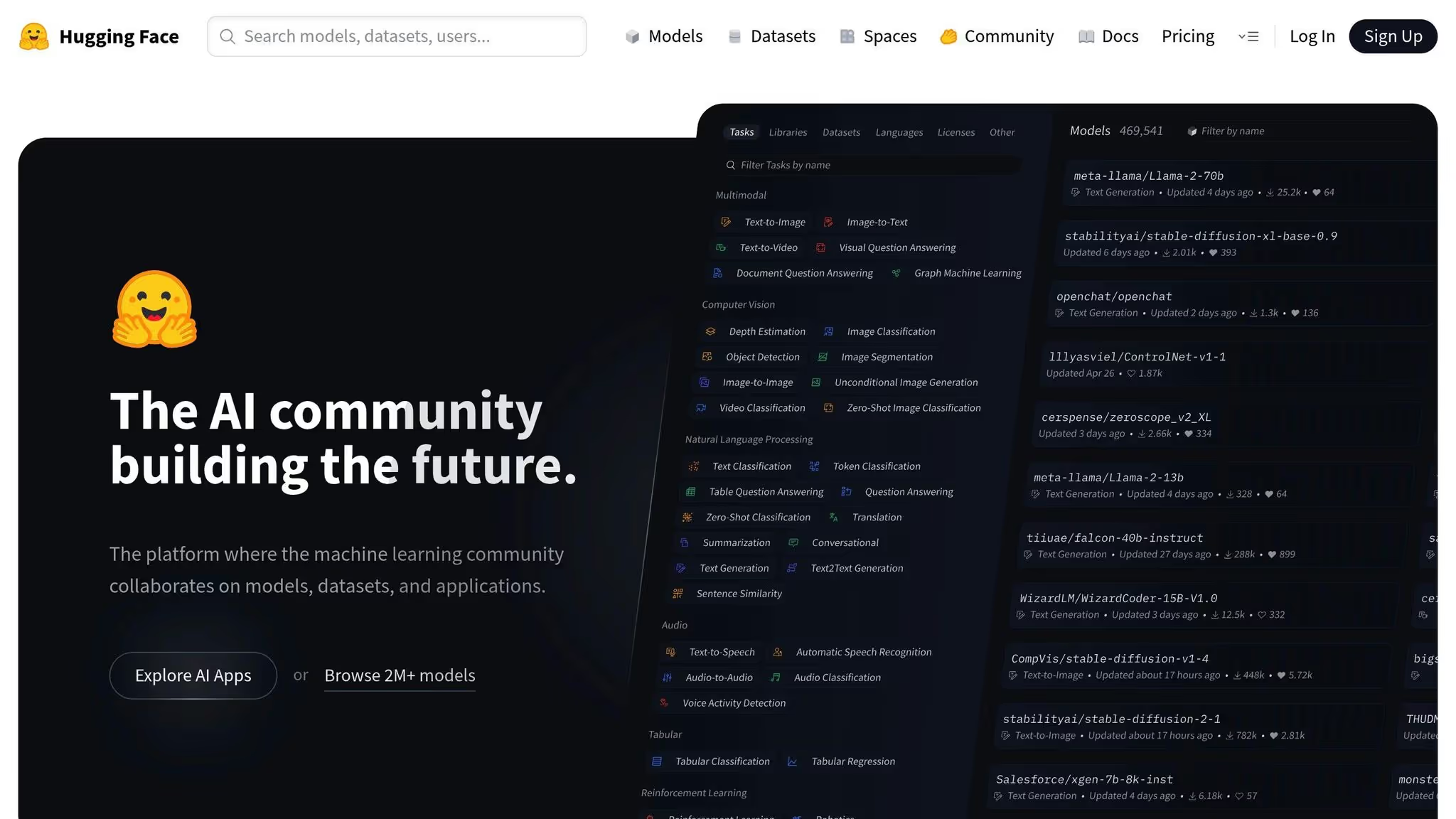

Prompts.ai integrates with existing AI workflows, acting as a no-code layer that connects multiple model APIs. While technical teams may continue to use tools such as OpenAI Evals or Hugging Face for formal benchmarks, Prompts.ai excels at managing prompts, comparing outputs, and letting non-technical stakeholders take part in model selection. It also integrates with popular productivity tools, so workflows can be driven directly from AI outputs. For example, a US fintech team uses Prompts.ai for exploratory prompt design, model comparisons, and stakeholder reviews. The team keeps automated, structured tests in its code and CI pipelines but relies on Prompts.ai for collaborative work. Winning prompts and model choices are exported back to its systems through APIs or configuration files, which supports compliance and secure integration, both critical for US-based operations.

The OpenAI Eval Framework focuses primarily on evaluating OpenAI's own models, such as GPT-4 and GPT-4.5. Although it is tailored to OpenAI's offerings, it takes a standardized approach that uses benchmark datasets such as MMLU and GSM8K, together with a 5-shot prompting protocol, to ensure consistent, direct comparisons. These methods provide a structured way to examine model performance and behavior.

Beyond basic accuracy, the framework evaluates a range of performance dimensions, including calibration, robustness, bias, toxicity, and efficiency. Calibration checks that a model's confidence matches its actual accuracy, while robustness tests how well it handles challenges such as typos or dialect variations. A notable addition is the "LLM-as-a-judge" method, in which advanced models such as GPT-4 score open-ended responses on a 1-10 scale to approximate human ratings. Stanford researchers demonstrated the framework's scalability by applying it to 22 datasets and 172 models.
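To make the calibration idea concrete, one common way to quantify the gap between confidence and accuracy is expected calibration error (ECE). The sketch below is a generic textbook-style version, not code from the framework itself; the function name, the equal-width binning, and the sample data are assumptions for illustration.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average of |mean confidence - accuracy| over equal-width bins."""
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")

    total = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # Confidence 1.0 belongs in the last bin, hence the min().
        index = min(int(conf * n_bins), n_bins - 1)
        bins[index].append((conf, ok))

    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        avg_conf = sum(conf for conf, _ in bucket) / len(bucket)
        accuracy = sum(1 for _, ok in bucket if ok) / len(bucket)
        ece += (len(bucket) / total) * abs(avg_conf - accuracy)
    return ece


# Hypothetical data: a model that claims 90% confidence but is right 25% of the time.
overconfident = expected_calibration_error(
    [0.9, 0.9, 0.9, 0.9], [True, False, False, False]
)
print(round(overconfident, 2))
```

A well-calibrated model yields a value near zero; a larger value means stated confidence is a poor guide to actual accuracy.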

The framework includes Item Response Theory (IRT) methods to cut benchmarking costs by 50-80%. Instead of running exhaustive test suites, adaptive testing selects questions based on difficulty, saving time and API spend. For US teams working with limited budgets, this approach significantly reduces token usage during evaluations. Token costs vary widely, from $0.03 per million tokens for models such as Gemma 3n E4B to $150 per million tokens for premium models such as GPT-4.5. By adopting adaptive testing, teams can achieve substantial cost reductions while keeping reliable insight into model performance.
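The adaptive-testing idea can be illustrated with a simplified one-parameter (Rasch) IRT loop: ask the unanswered question whose difficulty is closest to the current ability estimate, then nudge the estimate based on the answer. This is a rough sketch under stated assumptions, not the framework's actual procedure; the function names, the gradient-style update, the step size, and the item difficulties are all hypothetical.

```python
import math


def p_correct(theta, difficulty):
    """Rasch model: probability that ability theta answers an item correctly."""
    return 1.0 / (1.0 + math.exp(-(theta - difficulty)))


def pick_next_item(theta, difficulties, asked):
    """Choose the unasked item whose difficulty is closest to theta."""
    candidates = [i for i in range(len(difficulties)) if i not in asked]
    return min(candidates, key=lambda i: abs(difficulties[i] - theta))


def adaptive_test(difficulties, answer_fn, max_items=3, step=0.8):
    """Run a short adaptive test and return (ability estimate, items asked)."""
    theta = 0.0
    asked = []
    for _ in range(min(max_items, len(difficulties))):
        item = pick_next_item(theta, difficulties, asked)
        observed = 1.0 if answer_fn(item) else 0.0
        # Simple gradient-style update (an assumption, not a full MLE fit).
        theta += step * (observed - p_correct(theta, difficulties[item]))
        asked.append(item)
    return theta, asked


# Hypothetical item bank and a model that solves items up to difficulty 1.
difficulties = [-2.0, -1.0, 0.0, 1.0, 2.0]
theta, asked = adaptive_test(difficulties, lambda i: difficulties[i] <= 1.0)
print(asked, round(theta, 3))
```

In this toy run only three of the five items are asked, which is where the savings in tokens and API calls would come from.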

The framework supports straightforward integration, offering one-line SDK deployment with tools such as LangChain. Its REST APIs enable language-agnostic use, making it easy for teams working in Python, JavaScript, or other programming environments to build the framework into their workflows. In addition, observability platforms such as LangSmith, Galileo, and Langfuse provide detailed monitoring of OpenAI-based operations, including tracing, cost tracking, and latency analysis. The "LLM-as-a-judge" method has also gained traction among other evaluation tools, establishing a shared standard for automated quality scoring. For US teams, integrating observability SDKs early in development can help catch issues such as regressions or hallucinations before they affect production.

The Hugging Face Transformers library is a prominent resource among AI evaluation tools, thanks to its extensive ecosystem of open-weight models.

As a hub for open-weight models, the Hugging Face Transformers library offers far more architectural variety than single-provider platforms. It supports a wide range of models developed by leading labs worldwide, including Meta's Llama, Google's Gemma, Alibaba's Qwen, Mistral AI, and DeepSeek. This includes specialized models such as Qwen 2.5 Coder for coding tasks, Llama 3.2 Vision for image analysis, and Llama 4 Scout, which excels at long-context reasoning with a capacity of up to 10 million tokens. Unlike tools that depend on real-time web access, Hugging Face provides the actual model weights, enabling local deployment or custom integrations. This broad selection of models provides a solid foundation for rigorous performance evaluations.

Hugging Face promotes transparency and comparability through the Open LLM Leaderboard, which aggregates performance data from standardized benchmarks. Models are evaluated using task-specific metrics, such as:

Additional benchmarks, including WinoGrande and Humanity's Last Exam, test models on tasks ranging from mathematical problem solving to logical reasoning. These metrics provide a comprehensive view of each model's capabilities.

Open-weight models available through Hugging Face come with significant cost benefits. They offer competitive token pricing and fast processing speeds. For example, Gemma 3n E4B starts at just $0.03 per million tokens, while the Llama 3.2 1B and 3B models provide economical options for handling large-scale workloads.

The library's unified API simplifies switching between models, requiring only minimal code changes. It integrates with popular MLOps platforms such as Weights & Biases, MLflow, and Neptune.ai, making it easy to track experiments and compare models. For evaluation, tools such as Galileo AI and Evidently AI enable comprehensive testing and validation. In addition, developers can access datasets directly from the Hugging Face Hub for local testing, which keeps deployment flexible across private clouds, on-premises systems, or API endpoints. This interoperability makes Hugging Face a versatile, practical choice for a wide range of AI applications.

Building on the evaluation tools discussed so far, AI leaderboards offer a broader perspective by aggregating performance data from multiple benchmarks. These platforms provide a unified view of how different models perform, highlighting their strengths and weaknesses. Unlike single-purpose evaluation tools, leaderboards combine diverse data to deliver a comprehensive comparison that complements the more focused evaluations covered earlier.

AI leaderboards evaluate a mix of proprietary and open-weight models through standardized systems. For example, the Artificial Analysis Intelligence Index v3.0, introduced in September 2025, examines models across 10 dimensions. These include benchmarks such as MMLU-Pro for reasoning and knowledge, GPQA Diamond for scientific reasoning, and AIME 2025 for competitive mathematics. The Vellum LLM Leaderboard narrows its focus to cutting-edge models released after April 2024, drawing on data from providers, independent evaluations, and open-source contributions. In addition, platforms such as Artificial Analysis let users manually enter emerging or custom-built models, enabling comparisons against established benchmarks.

Leaderboards present detailed results across several dimensions, giving a broad view of model capabilities. Metrics such as reasoning ability, coding performance, processing speed, and reliability indices are used to evaluate and rank models. These comparative insights help teams identify the models that match their specific needs.

Pricing transparency is another key feature of AI leaderboards, which list token costs ranging from $0.03 per million tokens up to premium rates. This data lets teams evaluate models on both performance and budget. For example, intelligence-versus-price analysis shows that higher intelligence does not always come at a higher price. Models such as DeepSeek-V3 show strong reasoning capabilities at $0.27 per million input tokens and $1.10 per million output tokens. These insights make it easier to identify models that strike the right balance between cost and performance.
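Per-million-token prices translate into per-request and monthly costs with simple arithmetic. The sketch below uses the DeepSeek-V3 prices quoted above; the token counts per request and the monthly request volume are hypothetical values chosen only to show the calculation.

```python
def request_cost_usd(input_tokens, output_tokens, input_price_per_m, output_price_per_m):
    """Cost of one request given prices in USD per million tokens."""
    return (
        input_tokens * input_price_per_m + output_tokens * output_price_per_m
    ) / 1_000_000


# Prices from the text: $0.27 per million input tokens, $1.10 per million output tokens.
INPUT_PRICE = 0.27
OUTPUT_PRICE = 1.10

# Assumed workload: 1,500 input tokens and 500 output tokens per request,
# 100,000 requests per month.
cost = request_cost_usd(1_500, 500, INPUT_PRICE, OUTPUT_PRICE)
monthly = cost * 100_000

print(f"per request: ${cost:.6f}, per month: ${monthly:.2f}")
```

Swapping in another model's prices makes it easy to compare the monthly bill for the same assumed workload.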

To ensure fair comparisons, leaderboards use normalized scoring systems that work across both proprietary and open-weight models. Specific benchmarks, such as coding tasks, multilingual reasoning, and terminal performance, provide a deeper understanding of model capabilities. LMArena (Chatbot Arena) takes a different approach, using crowdsourced blind tests in which users compare model responses. These tests generate Elo ratings based on human preferences, offering a real-world perspective. Together, these features build on the insights gained from individual tools, giving a more complete picture for optimizing AI workflows.
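For readers unfamiliar with Elo ratings, the following sketch shows the classic Elo update applied to one pairwise vote. It is the textbook formula, not necessarily the exact procedure any particular arena uses; the starting ratings and the K-factor of 32 are assumptions.

```python
def expected_score(rating_a, rating_b):
    """Probability that A is preferred over B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def update_elo(rating_a, rating_b, score_a, k=32):
    """Return updated ratings after one comparison.

    score_a is 1.0 if A was preferred, 0.0 if B was preferred, 0.5 for a tie.
    """
    expected_a = expected_score(rating_a, rating_b)
    new_a = rating_a + k * (score_a - expected_a)
    new_b = rating_b + k * ((1.0 - score_a) - (1.0 - expected_a))
    return new_a, new_b


# Two models start level; a user prefers model A's response in a blind test.
new_a, new_b = update_elo(1000, 1000, 1.0)
print(new_a, new_b)
```

Because the winner's gain equals the loser's loss, the total rating in the pool stays constant, and upsets against higher-rated models move ratings more than expected wins.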

Optimizing AI workflows requires a clear understanding of the benefits and drawbacks of the different evaluation tools. This section highlights the distinct advantages and challenges of each tool, helping teams make informed decisions based on their specific needs.

Prompts.ai stands out for its access to more than 35 models, including GPT, Claude, Gemini, and Llama variants, all through a unified interface that removes the need for custom integrations. Side-by-side comparisons and cost-tracking features enable rapid prototyping and improve budget visibility. With claims of cutting AI costs by up to 98% while boosting workflow efficiency, it is a strong contender for enterprises. However, its reliance on TOKN credits rather than direct cloud billing may be a hurdle for some teams. In addition, organizations that require self-hosted infrastructure for compliance purposes may find its managed approach restrictive.

The OpenAI Eval Framework is designed for engineering teams, offering standardized, task-specific benchmarks and smooth integration into Python-based CI/CD pipelines. This makes it an excellent choice for automated quality checks when moving between model versions. On the downside, it is limited to the OpenAI ecosystem, which restricts its usefulness for cross-vendor comparisons without significant customization. Moreover, API usage costs can add up over time.

Hugging Face Transformers offers unmatched flexibility for teams that prioritize open-source tools. It supports hundreds of models through unified APIs compatible with PyTorch, TensorFlow, and JAX, and it is especially valuable for privacy-sensitive industries such as healthcare and finance because of its self-hosting capabilities. It also allows fine-tuning on private datasets. However, getting the most out of it requires advanced technical expertise, including Python proficiency and GPU/CPU optimization skills. Teams must also build their own monitoring dashboards, because the library does not include a built-in evaluation interface. While cost management is possible, users have to track spending against performance manually.

AI leaderboards and benchmarks aggregate standardized metrics, such as reasoning scores, coding capabilities, and estimated pricing, across many models, which makes them ideal for initial comparisons. However, they lack interactive testing features, meaning users cannot run custom prompts or validate results for domain-specific tasks. In addition, leaderboards may not always reflect the latest model updates or address specific US compliance requirements.

These insights highlight the trade-offs involved in evaluating and selecting models. The table below summarizes the key points discussed.

Each tool examined, from Prompts.ai to AI leaderboards, brings distinct strengths suited to different operational needs. The right language model evaluation tool for your team will ultimately depend on your priorities and level of technical expertise.

Prompts.ai stands out for its simplicity and accessibility, offering instant access to more than 35 models along with built-in cost tracking, all without requiring Python knowledge. For teams that value open-source flexibility and prefer self-hosting, the Hugging Face Transformers library provides broad support for diverse model deployments. Meanwhile, the OpenAI Eval Framework is well suited to Python-focused engineering teams running automated CI/CD pipelines. However, its single-vendor scope may require additional scripting to benchmark across platforms. Your decision should match your team's technical capabilities and workflow needs.

AI leaderboards are a useful resource for initial research, offering clear performance comparisons across multiple models. However, static metrics alone cannot replace hands-on testing tailored to your own prompts and use cases.

With the North American LLM market projected to grow to $105.5 billion by 2030, now is the time to establish streamlined, efficient evaluation processes.

Prompts.ai offers several important benefits, such as enterprise-grade security, easy integration with more than 35 leading AI models, and streamlined workflows that can reduce AI expenses by up to 98%. These strengths position it as a strong option for companies aiming to simplify and improve their AI operations.

However, the platform is geared primarily toward enterprise-level users, which may make it less suitable for individual developers or small teams. In addition, navigating and managing multiple models within a single platform can introduce a learning curve for those new to such systems. Even with these considerations, Prompts.ai stands out as a capable tool for organizations dealing with complex AI requirements.

The OpenAI Eval Framework simplifies performance assessments by automating the evaluation process, which significantly reduces the manual work usually involved. It supports batch testing, so multiple scenarios can be tested at once, saving time and resources.

By making evaluation more efficient, the framework reduces the need for labor-intensive tasks and ensures resources are used effectively, providing a practical way to measure and compare language models.

The Hugging Face Transformers library stands out as a top choice for technical teams, offering advanced tools for working with language models. It enables real-time integration with external data sources, helping results stay current and accurate. The library also includes features such as multi-model access, in-depth benchmarking, and performance analysis, making it a strong option for research, development, and model evaluation.

Designed with usability and functionality in mind, the library lets teams compare and fine-tune models efficiently, supporting their AI goals with precision and reliability.