Prompts are the key to unlocking the full potential of enterprise AI. They turn vague instructions into precise actions, enabling businesses to achieve better results, reduce costs, and streamline workflows. As AI adoption expands, mastering prompt design has become a critical advantage for organizations.

By focusing on precise prompt engineering and leveraging tools like Prompts.ai, companies can stay ahead in the competitive AI landscape while maintaining control, security, and measurable outcomes.

The success of enterprise AI systems largely depends on the quality of their prompts. Well-designed prompts can turn costly AI investments into reliable, impactful results. On the other hand, poorly crafted prompts lead to inconsistent outputs, wasted resources, and missed opportunities. In essence, prompts act as the bridge between potential and performance, unlocking the true value of AI.

Prompts also serve as the steering mechanism for enterprise AI, shaping not only what the AI generates but also how it operates within the boundaries of an organization. This precision is especially critical in industries where compliance and control are non-negotiable.

The quality of prompts directly influences both regulatory compliance and operational efficiency. In highly regulated fields, like healthcare and finance, prompt design plays a crucial role in managing risks and meeting strict standards. For example, healthcare organizations using AI for analyzing patient data must ensure their prompts align with HIPAA regulations to protect privacy. Similarly, financial services firms rely on prompts that incorporate compliance checks to meet audit requirements.

Research shows that formal prompt engineering programs can enhance output quality by 40–60%. These improvements reduce errors, speed up processes, and ensure adherence to compliance standards.

Prompts also enable real-time moderation and control of AI-generated content. Organizations can use them to filter outputs as they are created, ensuring the content aligns with company policies and preventing inappropriate or harmful material. This capability is particularly critical under regulatory frameworks like the EU AI Act.

Consider these examples: a healthcare system and a financial services firm both adopted standardized prompt frameworks. The healthcare system achieved a 68% reduction in development time, while the financial firm delivered 99.8% audit-ready outputs.

Prompts further streamline transparency and documentation. By supporting detailed logging of AI interactions and creating comprehensive audit trails, prompts simplify compliance reporting. Techniques like Chain-of-Thought prompting improve transparency by breaking down decision-making processes into understandable steps, meeting regulatory demands for oversight.

Effective prompts also address bias and fairness. They incorporate bias audits, diverse perspective checks, and neutrality verification, which can increase stakeholder trust by 62%. These measures help organizations tackle potential biases in AI outputs, ensuring fairness and compliance with regulatory requirements.

Finally, advanced prompt strategies support continuous oversight. They enable organizations to detect and mitigate risks quickly, ensuring their AI systems remain aligned with both business objectives and regulatory standards throughout their lifecycle. This ongoing monitoring is essential for maintaining trust and achieving long-term success with AI.

Creating effective prompts for enterprise AI requires a structured approach to ensure they drive clear and measurable outcomes. Organizations that excel in this area benefit from improved accuracy, streamlined operations, and reduced risks. The secret lies in designing prompts that perform reliably across diverse business scenarios while remaining adaptable to changing requirements.

The foundation of prompt engineering in enterprises rests on clarity and specificity. Ambiguous instructions often lead to inconsistent or unusable outputs, which can disrupt workflows. For instance, instead of a vague directive like "analyze customer feedback", a well-crafted prompt would specify the analysis method, desired output format, and key performance indicators.

Including clear boundaries in prompts is equally crucial, especially in industries with strict regulations. For example, a financial services prompt might instruct the AI to analyze market trends but explicitly avoid offering investment advice, ensuring compliance with legal standards.

Role-based prompts guide the AI to act in specific capacities, such as a "senior data analyst", "compliance reviewer", or "technical documentation specialist." Assigning a defined role aligns the AI's responses with professional expectations, producing outputs that are more relevant and actionable.

Specifying output formats ensures the AI's responses integrate smoothly into existing workflows. Whether the output needs to be in JSON for API use, structured reports for executives, or formatted datasets for further processing, clear formatting instructions make the results immediately usable.
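The principles above (a defined role, a specific task, explicit boundaries, and a stated output format) can be combined into one reusable template. The following is a minimal sketch; `PromptSpec` and its field names are hypothetical and not part of any particular platform's API.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the parts of an enterprise prompt."""
    role: str
    task: str
    boundaries: list[str] = field(default_factory=list)
    output_format: str = "plain text"

    def render(self) -> str:
        # Assemble the parts in a fixed order so every prompt reads the same way.
        lines = [f"You are a {self.role}.", f"Task: {self.task}"]
        if self.boundaries:
            lines.append("Constraints:")
            lines.extend(f"- {rule}" for rule in self.boundaries)
        lines.append(f"Output format: {self.output_format}")
        return "\n".join(lines)


spec = PromptSpec(
    role="senior data analyst",
    task="Analyze market trends in the attached quarterly data.",
    boundaries=["Do not offer investment advice."],
    output_format="JSON with keys 'trend' and 'evidence'",
)
prompt_text = spec.render()
print(prompt_text)
```

Keeping the parts as separate fields, rather than one free-form string, makes it easier to review the boundaries on their own and to reuse the same role and format across tasks.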

The concept of iterative refinement acknowledges that prompt engineering is a dynamic process. Initial prompts are just starting points that improve with testing, feedback, and adjustments. By refining prompts over time, organizations can consistently enhance AI performance.

These principles gain even more value when tailored to specific AI models.

Different AI models excel in distinct areas, so prompts should leverage each model's strengths. For example, GPT-4 thrives on detailed, conversational prompts that include rich context and examples. This model handles complex and descriptive instructions effectively, making it ideal for nuanced tasks.

On the other hand, Claude performs best with structured, step-by-step prompts that break tasks into manageable components. Using numbered steps and logical reasoning chains often yields better results with Claude, especially for tasks requiring clear sequences.

To standardize and optimize performance, organizations can utilize model-specific prompt libraries and conduct cross-model testing. These libraries provide pre-tested templates tailored to each model's capabilities, allowing teams to maintain consistency while selecting the best fit for their chosen platform.

Performance benchmarking further aids decision-making by comparing models based on accuracy, speed, and cost. Testing standardized prompts across different systems helps enterprises deploy the most suitable AI for specific tasks.
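One way to turn accuracy, speed, and cost into a single comparison is a weighted score. The sketch below assumes each model has already been run against the same standardized prompts. The `BenchmarkResult` type, the weights, and the sample numbers are all illustrative placeholders, not measurements of any real model.

```python
from dataclasses import dataclass


@dataclass
class BenchmarkResult:
    model: str
    accuracy: float   # fraction of test prompts judged correct, 0 to 1
    latency_s: float  # mean seconds per response (assumed > 0)
    cost_usd: float   # mean cost per request in US dollars (assumed > 0)


def rank_models(results, weights=(0.6, 0.2, 0.2)):
    """Return results sorted best-first by a weighted score.

    Latency and cost are normalized against the worst observed value,
    so the slowest or most expensive model scores 0 on that axis.
    """
    w_acc, w_speed, w_cost = weights
    max_latency = max(r.latency_s for r in results)
    max_cost = max(r.cost_usd for r in results)

    def score(r):
        speed = 1 - r.latency_s / max_latency
        cheapness = 1 - r.cost_usd / max_cost
        return w_acc * r.accuracy + w_speed * speed + w_cost * cheapness

    return sorted(results, key=score, reverse=True)


# Placeholder numbers for illustration only.
results = [
    BenchmarkResult("model-a", accuracy=0.90, latency_s=2.0, cost_usd=0.02),
    BenchmarkResult("model-b", accuracy=0.85, latency_s=1.0, cost_usd=0.01),
]
ranked = rank_models(results)
print([r.model for r in ranked])
```

The weights encode a business decision. A compliance-critical task might weight accuracy far more heavily, while a high-volume internal tool might favor cost.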

While tailoring prompts to models is essential, maintaining their effectiveness requires robust feedback and version control systems.

Improving prompts over time depends on collecting and analyzing data. User ratings, quality assessments, and performance metrics provide valuable insights into what works and what doesn't, guiding prompt iterations.

Implementing version control for prompts ensures that every change is tracked, and previous versions remain accessible. This allows teams to revert to earlier prompts if needed and assess the impact of updates systematically.
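As a rough illustration of what tracking every change can look like, the sketch below keeps an append-only history for a single prompt. `PromptHistory` is a hypothetical in-memory class. In practice, teams often store prompts in an existing system such as Git or a database.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptVersion:
    number: int
    text: str
    note: str  # why the change was made


class PromptHistory:
    """Append-only history for one prompt; old versions are never overwritten."""

    def __init__(self) -> None:
        self._versions: list[PromptVersion] = []

    def commit(self, text: str, note: str) -> PromptVersion:
        version = PromptVersion(len(self._versions) + 1, text, note)
        self._versions.append(version)
        return version

    def current(self) -> PromptVersion:
        return self._versions[-1]

    def revert_to(self, number: int) -> PromptVersion:
        if not 1 <= number <= len(self._versions):
            raise ValueError(f"no such version: {number}")
        # Reverting re-commits the old text so the rollback itself is tracked.
        old = self._versions[number - 1]
        return self.commit(old.text, f"revert to v{number}")


history = PromptHistory()
history.commit("Summarize the ticket.", "initial version")
history.commit("Summarize the ticket in three bullet points.", "add format")
history.revert_to(1)
print(history.current())
```

Recording a revert as a new version, instead of deleting later ones, preserves the full audit trail.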

A/B testing is another powerful tool, enabling teams to compare variations of a prompt to identify which version delivers better results. This data-driven approach removes guesswork from prompt optimization.
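A bare-bones version of this comparison might look like the following sketch. The function name and the sample ratings are hypothetical. It compares mean ratings only, so a real test would also need enough samples and a significance check before declaring a winner.

```python
from statistics import mean


def compare_variants(ratings):
    """Given {variant_name: [scores]}, return (winner, mean score per variant)."""
    means = {name: mean(scores) for name, scores in ratings.items()}
    winner = max(means, key=means.get)
    return winner, means


# Made-up user ratings on a 1 to 5 scale, for illustration only.
ratings = {
    "A": [3, 4, 3, 4],
    "B": [4, 5, 4, 5],
}
winner, means = compare_variants(ratings)
print(winner, means)
```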

Automated quality monitoring systems continuously track prompt performance, flagging any decline in effectiveness. By catching issues early, businesses can address problems before they disrupt operations.
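One simple way to flag a decline is to compare a rolling average of recent quality scores against a baseline. The sketch below assumes each response already receives a numeric quality score from some upstream process. The `QualityMonitor` class, window size, and tolerance are illustrative choices.

```python
from collections import deque


class QualityMonitor:
    """Flags a prompt when its rolling average score drops below baseline."""

    def __init__(self, baseline: float, window: int = 5, tolerance: float = 0.1):
        self.baseline = baseline
        self.tolerance = tolerance
        self.scores = deque(maxlen=window)  # keeps only the most recent scores

    def record(self, score: float) -> bool:
        """Add a score; return True if the rolling average has declined."""
        self.scores.append(score)
        if len(self.scores) < self.scores.maxlen:
            return False  # not enough data to judge yet
        average = sum(self.scores) / len(self.scores)
        return average < self.baseline - self.tolerance


monitor = QualityMonitor(baseline=0.9, window=3, tolerance=0.05)
flags = [monitor.record(s) for s in [0.9, 0.9, 0.9, 0.7, 0.7]]
print(flags)
```

The tolerance keeps normal fluctuation from triggering alerts, and the window controls how quickly a sustained drop is noticed.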

A collaborative approach to prompt development brings together subject matter experts, technical teams, and end users. This ensures prompts address real-world needs while meeting technical and compliance standards.

Maintaining clear documentation for prompt changes is equally important. Each modification should include an explanation of the update, expected results, and testing outcomes. This transparency fosters knowledge sharing and helps teams understand why certain strategies succeed.

Finally, staged rollout procedures minimize risks when deploying new prompts. Testing updates with smaller user groups before full implementation allows organizations to catch potential issues early, ensuring smoother transitions to production environments.
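A common way to implement a staged rollout is to assign each user to a stable bucket and widen the percentage over time. The sketch below is one such approach, with the function name and identifiers chosen for illustration.

```python
import hashlib


def in_rollout(user_id: str, prompt_name: str, percent: int) -> bool:
    """Return True if this user should receive the new prompt version.

    Hashing the prompt name together with the user ID gives each user a
    stable bucket from 0 to 99, so raising `percent` only adds users and
    never removes anyone who already has the new version.
    """
    digest = hashlib.sha256(f"{prompt_name}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    return bucket < percent


users = [f"user-{i}" for i in range(200)]
early_group = [u for u in users if in_rollout(u, "summary-v2", 10)]
print(len(early_group), "of", len(users), "users are in the 10% stage")
```

Because assignment is deterministic, the same user sees consistent behavior across sessions, which makes any issues reported during the early stage easier to reproduce.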

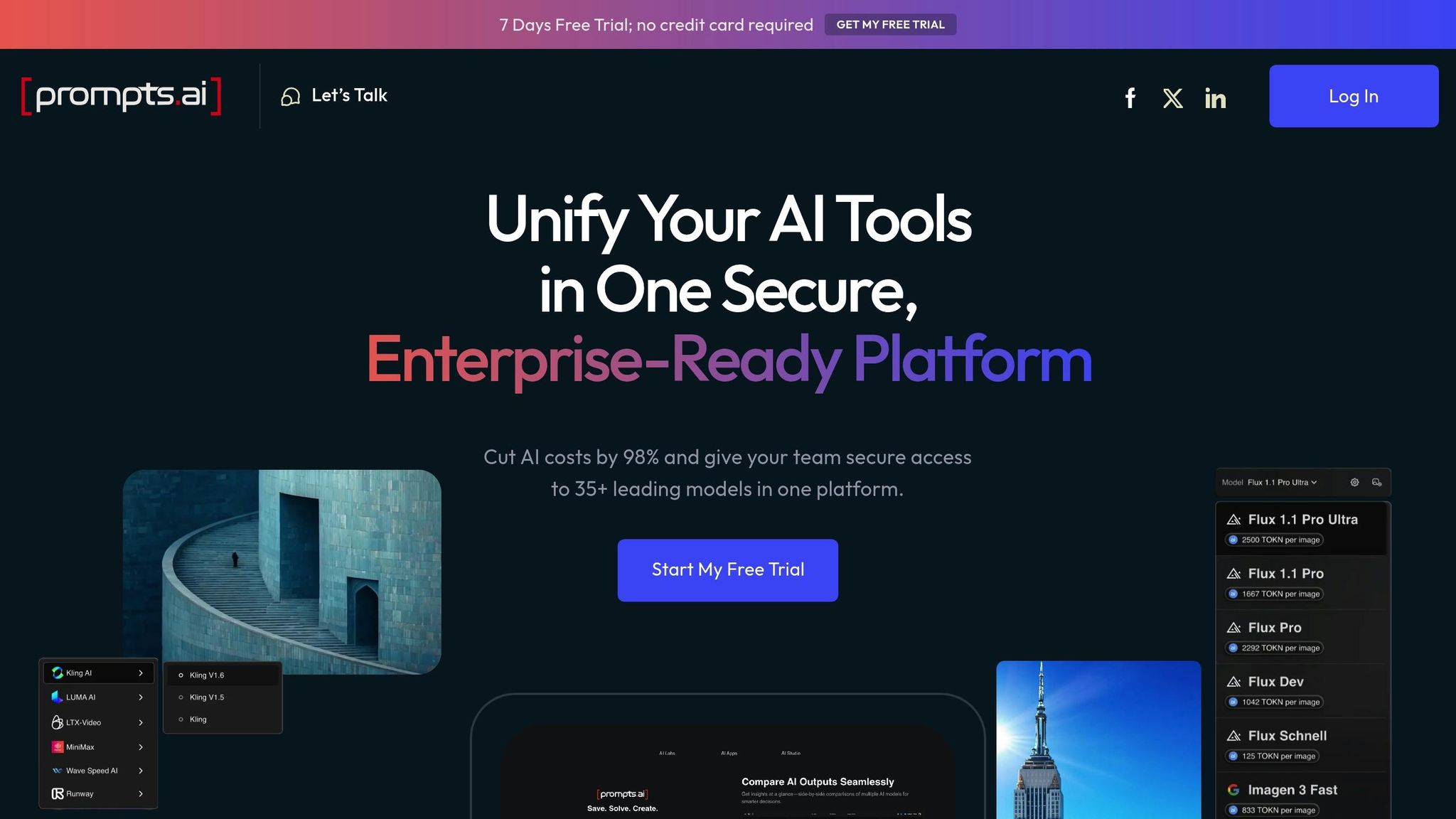

Managing AI at an enterprise level requires a centralized system that ensures control, transparency, and accountability. Many companies struggle with scattered tools, unclear costs, and governance issues that can hinder their AI strategies. Prompts.ai tackles these challenges head-on with a comprehensive platform designed to bring order and efficiency to enterprise AI workflows. Below, we explore how its features make this possible.

Prompts.ai is designed to resolve common pain points that businesses face when implementing AI.

In addition to its technical capabilities, Prompts.ai supports enterprise teams with tailored training and a vibrant community network.

Prompts.ai offers extensive resources to help organizations make the most of their AI investments.

As businesses increasingly embrace prompt-driven AI, the tangible benefits are hard to ignore. Thoughtful prompt engineering doesn't just enhance workflows - it directly influences the bottom line, driving measurable value across multiple areas.

Refined prompt engineering can deliver a 340% ROI, with cost savings ranging from 45% to 67% and productivity improvements of up to 340% in critical business functions.

For example, AI-powered search tools cut the average 1.9 hours employees spend daily searching for information by half, dramatically improving information access. Similarly, AI-driven knowledge portals reduce onboarding times for new hires by 40%, leading to faster integration and lower training costs.

These outcomes highlight the efficiency and financial benefits of adopting a centralized platform like Prompts.ai.

Prompts.ai offers tools to maximize returns through features like real-time FinOps controls, which allow finance teams to track costs and eliminate waste. By comparing AI models side by side, teams can choose the best fit for their needs, balancing speed, accuracy, and cost-effectiveness.

Collaboration is another key advantage. Teams can develop, refine, and share prompt workflows based on actual performance, ensuring consistent quality while avoiding redundant efforts. This collaborative approach not only saves time but also promotes the adoption of best practices across the organization.

With centralized management, transparent cost tracking, and streamlined collaboration, Prompts.ai helps businesses cut expenses, accelerate outcomes, and maintain strong governance for secure AI deployments. These operational improvements create a solid foundation for achieving long-term success.

As AI becomes an integral part of enterprise operations, maintaining strong governance and compliance is no longer optional - it’s a necessity. Businesses must protect sensitive data while ensuring every AI interaction is fully traceable. Achieving this balance between rapid technological progress and stringent oversight requires a governance framework that operates at the prompt level.

Prompt-level governance ensures that every interaction with AI is not only traceable but also auditable and controllable. This approach allows organizations to automatically redact sensitive data and maintain detailed logs of all interactions, seamlessly aligning their AI workflows with internal compliance protocols.
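To make the idea concrete, the sketch below redacts two kinds of sensitive data from a prompt and records the cleaned interaction in a log. It is an illustration only, not how any specific platform implements redaction. The patterns, function names, and log structure are assumptions, and real deployments need much broader detection than two regular expressions.

```python
import re
from datetime import datetime, timezone

# Illustrative patterns only; production systems need far wider coverage.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

audit_log = []  # hypothetical in-memory log; a real one would be durable storage


def redact(text: str):
    """Replace each match with a [LABEL] placeholder; return text and labels found."""
    found = []
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found


def log_interaction(user: str, prompt: str) -> str:
    """Redact the prompt, append an audit record, and return the safe text."""
    clean, found = redact(prompt)
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "prompt": clean,      # only the redacted text is stored
        "redacted": found,
    })
    return clean


clean = log_interaction("analyst-1", "Email jane.doe@example.com about SSN 123-45-6789.")
print(clean)
```

Redacting before logging means the audit trail itself never contains the sensitive values, while still recording which categories were removed.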

The security of AI workflows demands more than basic safeguards - it requires advanced defenses against modern threats like prompt injection attacks and jailbreak attempts. Prompts.ai addresses these challenges with real-time monitoring that tracks AI usage across browsers, desktop apps, and APIs. This system identifies both authorized and unauthorized GenAI applications instantly.

"The immediate visibility and control Prompt Security delivers to all employee use of GenAI applications in the work environment is unparalleled." – SentinelOne

A key component of this security infrastructure is the MCP Gateway. Positioned between AI applications and over 13,000 known MCP servers, it acts as a protective barrier. It intercepts every call, template, and response, assigning dynamic risk scores to enforce policies. This ensures safe interactions while redacting potentially risky content.

"Granular, policy-driven rules let teams redact or tokenize sensitive data on the fly, block high-risk prompts, and deliver inline coaching that helps users learn safe AI practices without losing productivity." – SentinelOne

Auditable logs provide complete context for every prompt and response, giving compliance teams what they need for efficient audits. The table below compares these governance capabilities across traditional IT security, basic AI tools, and an enterprise AI platform.

| Governance Capability | Traditional IT Security | Basic AI Tools | Enterprise AI Platform |
|---|---|---|---|
| Real-time Monitoring | Network-level only | Limited logging | Full interaction visibility |
| Policy Enforcement | Perimeter-based | Manual oversight | Automated, prompt-level |
| Audit Trail Depth | System logs only | Basic usage stats | Complete prompt/response context |
| Multi-model Coverage | N/A | Single vendor | All major LLM providers |
| Risk Assessment | Static rules | None | Dynamic scoring per interaction |
| Compliance Reporting | Manual compilation | Limited metrics | Automated compliance dashboards |

An enterprise AI platform provides model-agnostic security, applying consistent safeguards across all major large language models, including self-hosted or on-premises options. Policy-based data protection further enhances security by automatically redacting or tokenizing sensitive information in real time, ensuring that productivity isn’t compromised. Inline coaching complements these measures by offering immediate feedback and safer alternatives during potentially risky interactions, fostering a culture of responsible AI use.

For organizations prioritizing robust AI governance, centralized management of workflows is key to building compliant, scalable practices that can grow alongside business demands. These features collectively create a foundation for secure and compliant enterprise AI.

The pace of change in enterprise AI is relentless, and mastering prompt engineering is becoming a defining factor for organizations aiming to stay ahead. As businesses increasingly depend on AI to enhance productivity, cut costs, and maintain a competitive edge, the quality of their prompts directly influences the success of these initiatives.

Prompts are the cornerstone of effective enterprise AI. By focusing on well-crafted prompt design, organizations can dramatically reduce costs - by up to 98% - while increasing productivity tenfold. These gains pave the way for establishing strong governance frameworks and fostering continuous improvement.

In an era of strict regulatory oversight, robust governance is not optional. Real-time monitoring, policy enforcement, and maintaining auditability for every AI interaction are critical for sustainable AI integration. Without these measures, companies risk compliance violations and may face challenges in scaling their AI strategies effectively.

Transitioning from isolated AI tools to a centralized orchestration approach can revolutionize operations. By implementing strong security and governance protocols, businesses can confidently deploy top-tier AI models while ensuring compliance and safety. This centralized flexibility is crucial as new models emerge and business priorities evolve.

Beyond technology, investing in workforce training and community support ensures long-term success. Programs like Prompt Engineer Certification and initiatives that empower internal champions help organizations build lasting AI practices. Sharing expert-designed prompt workflows across teams accelerates positive outcomes, benefiting the entire enterprise - not just technical departments.

Prompts.ai equips enterprises to fully embrace the potential of prompt-driven AI. Offering access to over 35 leading models through a single secure interface, the platform eliminates tool sprawl and simplifies model selection.

Its pay-as-you-go TOKN credit system ensures businesses only pay for what they use, aligning costs directly with value delivered. Coupled with real-time FinOps controls, this model provides clear visibility into AI spending, enabling smarter, data-driven decisions.

At its core, Prompts.ai prioritizes enterprise-grade governance. Built-in audit trails for every workflow ensure secure, responsible AI usage at scale. Additionally, the platform’s thriving community of prompt engineers and comprehensive training programs provide organizations with the tools and expertise needed to maximize their AI investments.

As enterprises continue to navigate the dynamic AI landscape, those that understand the strategic importance of prompts will establish scalable, compliant, and impactful AI operations. The future belongs to businesses that effectively leverage expert-designed and well-managed prompt workflows.

Prompts are essential for ensuring compliance and governance, especially in tightly regulated sectors like healthcare and finance. By customizing AI outputs to align with specific regulations - such as HIPAA for healthcare or financial reporting standards - organizations can stay firmly within legal and ethical boundaries.

Well-crafted prompts also minimize risks, such as bias, inaccuracies, or non-compliance, by steering AI systems to produce responses that are clear, auditable, and aligned with regulations. This approach enhances accountability, fosters trust, and simplifies audits - key elements for thriving in regulated industries.

Designing prompts that work effectively hinges on three key elements: clarity, structure, and alignment with your business objectives. To get the most out of your AI tools, craft prompts that are both clear and specific, ensuring they steer the model toward delivering the results you need. Tailor your prompts to leverage the model’s strengths while providing enough context to eliminate uncertainty.

Depending on your business needs, you can take different approaches. Use broad prompts when brainstorming ideas, detailed prompts when you need precise outputs, and reusable templates to maintain consistency across tasks. Regularly test and refine your prompts to keep them relevant and impactful. By applying these strategies, you can seamlessly integrate AI into your workflows and unlock its full potential.

Prompts.ai empowers businesses to manage their AI budgets effectively with its pay-as-you-go TOKN credit system, offering potential savings of up to 98%. This system ensures companies only pay for the resources they actually use, enabling precise cost tracking and better budgeting.

To enhance operational efficiency, Prompts.ai provides real-time cost monitoring and streamlined workflows. These tools help organizations pinpoint unnecessary spending and focus resources on boosting productivity. On the security side, Prompts.ai delivers enterprise-grade governance with features like detailed audit trails, robust data handling protocols, and adherence to regulatory standards. These measures ensure companies maintain strong security practices and compliance, all while staying competitive in the rapidly evolving AI landscape.