Prompt engineering is the key to unlocking AI’s potential in 2025. Businesses are cutting costs, improving reliability, and scaling AI operations with well-crafted prompts. From reducing costs by up to 76% per call to supporting compliance in complex regulatory environments, prompt engineering tools are transforming enterprise AI workflows.

Here’s a quick look at the five solutions driving this transformation: Prompts.ai, LangChain, PromptLayer, OpenPrompt, and Agenta.

Each platform offers distinct strengths, from cost transparency to multi-model compatibility. Whether you’re a developer, researcher, or enterprise team, choosing the right tool helps your AI systems deliver measurable results.

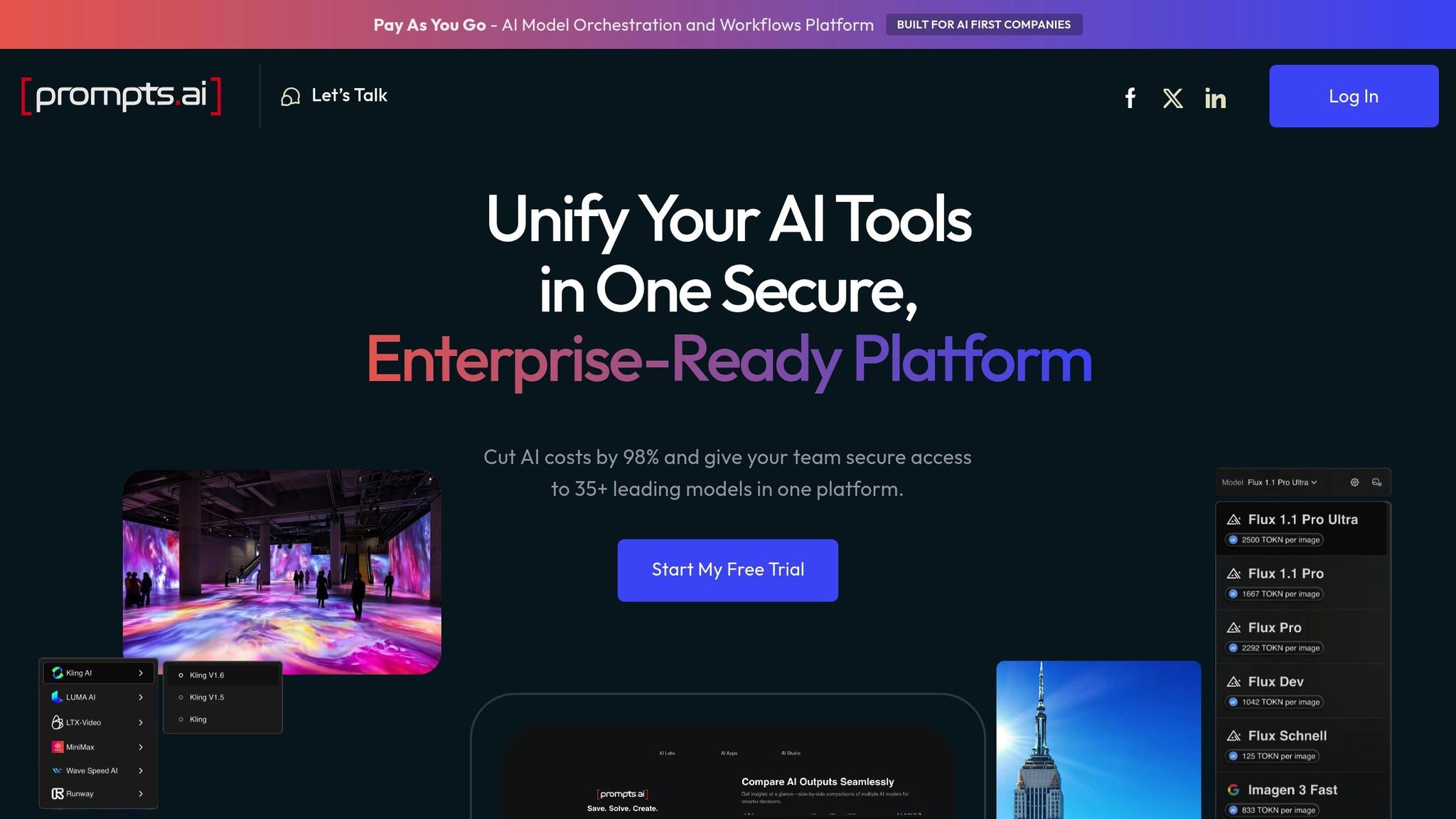

Prompts.ai is an AI orchestration platform designed to streamline and scale AI operations for U.S.-based organizations. Rather than requiring a separate tool for each provider, it provides access to over 35 top-tier large language models through a single secure interface. This approach prevents tool sprawl while maintaining governance and operational control.

With unified model access and a pay-as-you-go TOKN credit system that ties spending directly to usage, Prompts.ai says organizations can cut costs by up to 98%. This setup underpins the platform's key features.

Prompts.ai’s architecture is built to integrate effortlessly with both proprietary and open-source large language models using standardized APIs and connectors. The platform supports a wide range of models, offering the flexibility to switch between or combine them based on specific tasks. This multi-model strategy allows businesses to test new models alongside existing ones, comparing their real-time performance. By doing so, enterprises can fine-tune their workflows and select the most effective and cost-efficient models for their needs.
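
To make the multi-model idea concrete, here is a minimal, framework-agnostic sketch in Python. It is not Prompts.ai's API: the model functions, task names, and registry are hypothetical stand-ins showing how one standardized call signature lets you route each task to a different model, or run the same prompt through all of them for comparison.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

# Hypothetical stand-ins for two providers. In practice each would wrap a
# provider SDK or an OpenAI-compatible endpoint behind the same signature.
def fast_model(prompt: str) -> str:
    return f"[fast] {prompt[:20]}"

def strong_model(prompt: str) -> str:
    return f"[strong] {prompt}"

@dataclass
class ModelEntry:
    name: str
    complete: Callable[[str], str]  # uniform call signature for every model

# Task types mapped to models; names and assignments are illustrative only.
REGISTRY: Dict[str, ModelEntry] = {
    "summarize": ModelEntry("fast-model", fast_model),
    "analyze": ModelEntry("strong-model", strong_model),
}

def run_task(task: str, prompt: str) -> Tuple[str, str]:
    """Route a prompt to the model registered for this task type."""
    entry = REGISTRY[task]
    return entry.name, entry.complete(prompt)

def compare_models(prompt: str) -> Dict[str, str]:
    """Send one prompt to every registered model for side-by-side review."""
    return {entry.name: entry.complete(prompt) for entry in REGISTRY.values()}
```

Because every model shares one signature, reassigning a task to a newly tested model is a one-line registry change.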

Teams can quickly create, test, and deploy consistent prompt templates using "Time Saver" workflows, which are expert-designed templates for common use cases. The platform tracks performance and cost metrics automatically, offering actionable insights for refining prompts. This automation speeds deployment while keeping decisions data-driven.

For U.S. enterprises facing strict regulatory demands, Prompts.ai incorporates governance controls directly into its workflows. The platform generates detailed audit trails, documenting every model interaction, prompt adjustment, and user action. This ensures that compliance reporting and risk management are fully supported. Additionally, its robust data security framework keeps sensitive information securely under the organization’s control during AI processing. Role-based access controls further enhance compliance by restricting access to specific models or datasets, ensuring regulatory standards are consistently upheld.
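
The pairing of role-based access and audit trails can be sketched as follows. This is an illustrative pattern, not how Prompts.ai implements it; the roles, model names, and log fields are hypothetical.

```python
from datetime import datetime, timezone
from typing import Dict, List, Set

# Hypothetical role-to-model permissions; a real platform would manage these
# centrally rather than in code.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "analyst": {"general-model"},
    "legal": {"general-model", "restricted-model"},
}

AUDIT_LOG: List[dict] = []

def is_allowed(role: str, model: str) -> bool:
    return model in ROLE_PERMISSIONS.get(role, set())

def call_model(user: str, role: str, model: str, prompt: str) -> str:
    allowed = is_allowed(role, model)
    # Log every attempt, including denied ones. Only the prompt length is
    # stored so the audit trail itself holds no sensitive text.
    AUDIT_LOG.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "model": model,
        "prompt_chars": len(prompt),
        "allowed": allowed,
    })
    if not allowed:
        raise PermissionError(f"role {role!r} may not use model {model!r}")
    return f"response from {model}"  # placeholder for the actual model call
```

Logging before the permission check means denied attempts also show up in compliance reviews.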

Prompts.ai includes a built-in FinOps layer that provides real-time insights into AI spending. Costs are tracked down to individual tokens and linked directly to business outcomes, offering unmatched transparency. This enables finance teams to evaluate AI ROI effectively, while technical teams can optimize model usage for greater efficiency. The platform’s spending visibility ensures budgets remain on track without compromising operational flexibility, giving organizations the tools to manage costs with confidence.
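
Linking token costs to business outcomes usually comes down to dividing spend by successful results. The sketch below assumes a single blended token price and hypothetical usage records tagged by project. Real billing would use each model's own input and output rates.

```python
from collections import defaultdict
from typing import Dict, List, Optional

# Assumed blended price in USD per 1,000 tokens, for illustration only.
USD_PER_1K_TOKENS = 0.002

# Hypothetical usage records. "resolved" marks whether the call produced the
# business outcome being tracked (for example, a closed support ticket).
records: List[dict] = [
    {"project": "support", "tokens": 1200, "resolved": True},
    {"project": "support", "tokens": 800, "resolved": False},
    {"project": "marketing", "tokens": 3000, "resolved": True},
]

def cost_per_outcome(rows: List[dict], rate_per_1k: float) -> Dict[str, Optional[float]]:
    spend: Dict[str, float] = defaultdict(float)
    outcomes: Dict[str, int] = defaultdict(int)
    for row in rows:
        spend[row["project"]] += row["tokens"] / 1000 * rate_per_1k
        outcomes[row["project"]] += int(row["resolved"])
    # Total spend divided by successful outcomes; None if nothing succeeded.
    return {p: (spend[p] / outcomes[p] if outcomes[p] else None) for p in spend}
```

Unsuccessful calls still count toward spend, so a prompt that often fails shows up as a higher cost per outcome even when its per-call price is low.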

LangChain is an open-source framework designed to simplify the development of large language model (LLM) applications. Whether you're building a basic chatbot or a system capable of advanced reasoning, LangChain provides pre-built components that reduce the need for extensive custom coding.

This framework streamlines the creation of AI workflows by combining elements like prompts, memory modules, and external data integrations. It ensures applications can maintain context during interactions and access live information, speeding up the development of tailored solutions. LangChain also allows seamless integration of diverse models, making it a versatile choice for building reliable AI systems.

LangChain is built to work with a wide variety of language models from providers such as OpenAI, Anthropic, and Google, as well as open-source models (for example, through Hugging Face). Its standardized interface makes switching between models straightforward. The framework handles complexities like API connections, authentication, and request formatting, saving developers time and effort. It also supports hybrid deployments, combining cloud-based and local models to balance cost, speed, and accuracy.

One of LangChain's standout features is its "chains", which automate multi-step prompt workflows. These chains use conditional logic, prompt templates, and memory management to process tasks efficiently. For example, a research chain could generate search queries, retrieve and summarize documents, and compile a report, all in a single automated process.
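
LangChain provides its own chain and runnable abstractions for this. The sketch below does not use LangChain; it only illustrates the underlying pattern of steps passing shared state, including a conditional branch. The step functions are hypothetical stubs that call neither a model nor a search engine.

```python
from typing import Any, Callable, Dict, List

State = Dict[str, Any]
Step = Callable[[State], State]

def generate_queries(state: State) -> State:
    # A real chain would prompt an LLM here; this stub derives queries directly.
    topic = state["topic"]
    return {**state, "queries": [f"{topic} overview", f"{topic} risks"]}

def retrieve(state: State) -> State:
    # Hypothetical retrieval: one document per query.
    return {**state, "docs": [f"document for '{q}'" for q in state["queries"]]}

def summarize(state: State) -> State:
    # Conditional logic: skip summarization when retrieval returned nothing.
    if not state["docs"]:
        return {**state, "summaries": []}
    return {**state, "summaries": [f"summary of {d}" for d in state["docs"]]}

def compile_report(state: State) -> State:
    lines = [f"- {s}" for s in state["summaries"]] or ["- no sources found"]
    return {**state, "report": f"Report on {state['topic']}\n" + "\n".join(lines)}

def run_chain(steps: List[Step], state: State) -> State:
    # Each step receives the accumulated state and returns an updated copy.
    for step in steps:
        state = step(state)
    return state
```

Calling `run_chain([generate_queries, retrieve, summarize, compile_report], {"topic": "solar"})` returns a state whose `report` lists one summary per retrieved document.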

The framework supports variable substitution in prompt templates, ensuring consistency even as inputs change. Its memory management tools allow applications to store and reference conversation history, enabling richer and more context-aware interactions.
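
Variable substitution and conversation memory can be illustrated with the standard library alone. This is not LangChain's `PromptTemplate` or memory API; the template text, the `ConversationMemory` class, and the turn limit are assumptions chosen for the example.

```python
from collections import deque

# Template with named placeholders that are filled on every call.
TEMPLATE = (
    "You are a support assistant for {product}.\n"
    "Conversation so far:\n{history}\n"
    "User: {question}\nAssistant:"
)

class ConversationMemory:
    """Keeps only the most recent `max_turns` exchanges."""

    def __init__(self, max_turns: int = 3) -> None:
        self.turns = deque(maxlen=max_turns)  # oldest turns drop off automatically

    def add(self, user: str, assistant: str) -> None:
        self.turns.append((user, assistant))

    def render(self) -> str:
        lines = [f"User: {u}\nAssistant: {a}" for u, a in self.turns]
        return "\n".join(lines) or "(none)"

def build_prompt(memory: ConversationMemory, product: str, question: str) -> str:
    return TEMPLATE.format(product=product, history=memory.render(), question=question)
```

Capping memory at a fixed number of turns keeps prompt length, and therefore token cost, bounded as a conversation grows.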

LangChain includes a callback system for monitoring execution, which teams can use to produce logs that support audit requirements. Its flexible design also supports custom validators that screen outputs against safety and consistency standards. Together, these features help teams build efficient, secure AI systems that keep pace with evolving industry expectations.
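
In LangChain, callbacks are implemented by subclassing `BaseCallbackHandler`. The stdlib sketch below only mirrors the idea: hooks observe each call, and validators screen the output afterward. The `Callback` class and the email-blocking rule are hypothetical.

```python
import re
import time
from typing import Callable, List

class Callback:
    """Base hook interface; subclasses override only what they need."""

    def on_start(self, prompt: str) -> None:
        pass

    def on_end(self, prompt: str, output: str, seconds: float) -> None:
        pass

class AuditCallback(Callback):
    def __init__(self) -> None:
        self.events: List[dict] = []

    def on_end(self, prompt: str, output: str, seconds: float) -> None:
        self.events.append({"prompt": prompt, "output": output, "seconds": seconds})

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

def no_email_addresses(output: str) -> bool:
    # Hypothetical safety rule: reject outputs that contain an email address.
    return EMAIL_RE.search(output) is None

def run_with_hooks(llm: Callable[[str], str], prompt: str,
                   callbacks: List[Callback],
                   validators: List[Callable[[str], bool]]) -> str:
    for cb in callbacks:
        cb.on_start(prompt)
    start = time.perf_counter()
    output = llm(prompt)
    elapsed = time.perf_counter() - start
    for cb in callbacks:
        cb.on_end(prompt, output, elapsed)
    for check in validators:
        if not check(output):
            raise ValueError(f"output rejected by {check.__name__}")
    return output
```

Callbacks run before validation, so rejected outputs are still recorded for later review.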

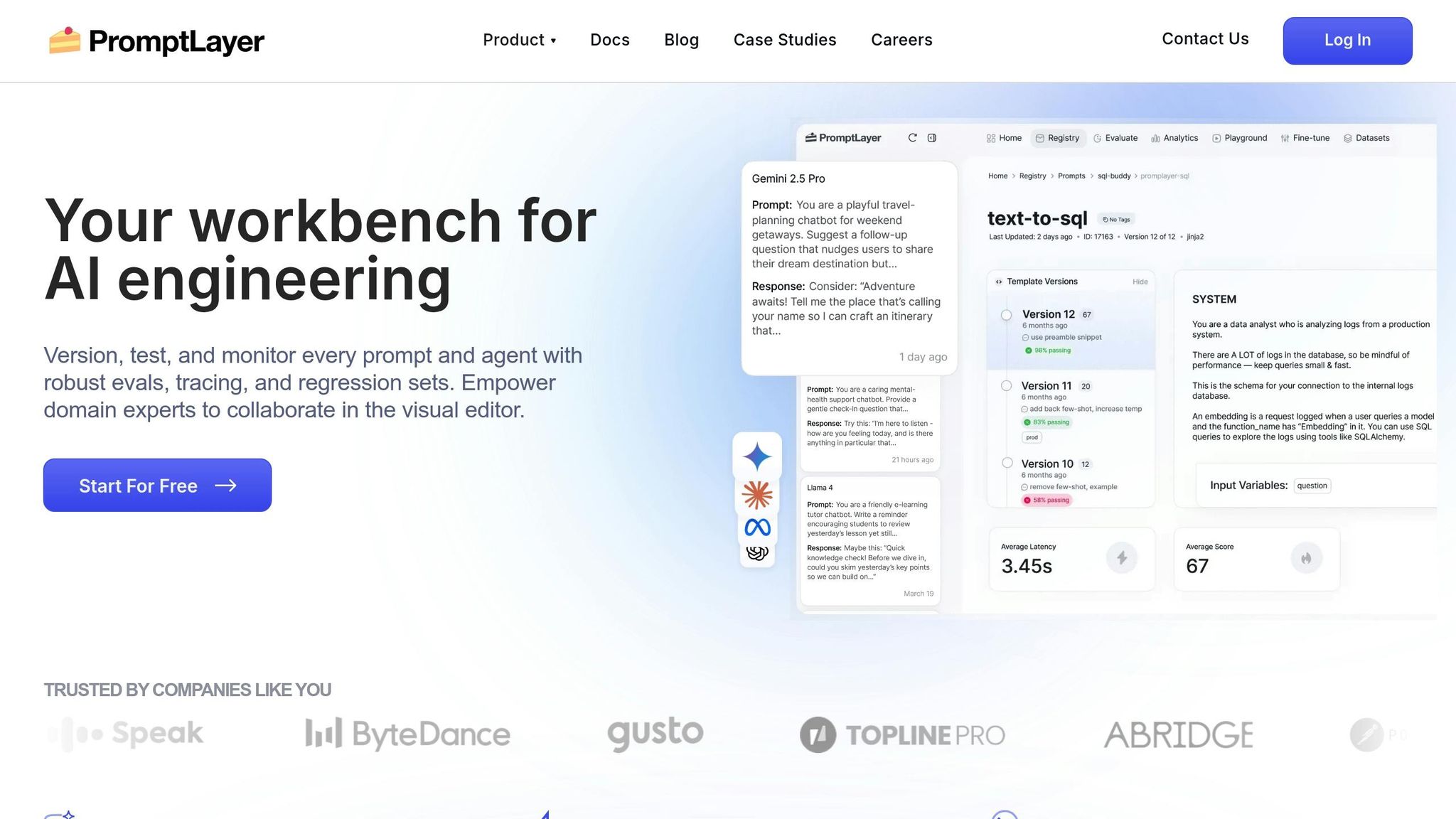

PromptLayer is designed as an observability platform tailored for prompt engineering workflows. Its primary goal is to give teams the tools they need to monitor, refine, and optimize their AI applications by offering features like logging, version control, and analytics.

Serving as a middleware layer, PromptLayer sits between your application and language models, capturing every prompt interaction without requiring extensive code changes. This setup enables teams to keep track of prompt performance, manage costs, and ensure accountability in their AI systems. By analyzing real usage data, PromptLayer provides actionable insights that help refine prompts for better outcomes. The platform not only simplifies integration but also enhances monitoring and cost visibility.

PromptLayer is compatible with major language model providers such as OpenAI, Anthropic, Cohere, and Azure OpenAI. It uses a drop-in replacement approach, allowing developers to integrate the platform into existing applications with minimal modifications. API calls are routed through PromptLayer’s logging layer, which then forwards them to the chosen provider.
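
PromptLayer provides its own SDK wrappers for this. The following is not PromptLayer's API; it is a generic Python decorator showing how a logging layer can sit in front of an existing provider call without changing call sites. The provider name, tags, and `complete` function are placeholders.

```python
import functools
import time
import uuid
from typing import Any, Callable, Dict, List, Optional

REQUEST_LOG: List[Dict[str, Any]] = []

def logged(provider: str, tags: Optional[List[str]] = None):
    """Record each call's inputs, output, and latency, then return the
    provider's response unchanged."""
    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        @functools.wraps(fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            response = fn(*args, **kwargs)
            REQUEST_LOG.append({
                "request_id": str(uuid.uuid4()),
                "provider": provider,
                "function": fn.__name__,
                "args": args,
                "kwargs": kwargs,
                "response": response,
                "tags": list(tags or []),
                "latency_s": time.perf_counter() - start,
            })
            return response
        return wrapper
    return decorator

@logged("example-provider", tags=["checkout-bot"])
def complete(prompt: str, model: str = "example-model") -> str:
    # Placeholder for the provider SDK call that the wrapper forwards to.
    return f"{model}: {prompt}"
```

Existing code keeps calling `complete(...)` exactly as before; only the decorator line is new.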

The platform is designed to work seamlessly with various model versions and adjusts automatically to API updates from providers, ensuring uninterrupted functionality. Additionally, PromptLayer supports custom model endpoints, enabling teams to include proprietary or fine-tuned models in their monitoring workflows. These integration features ensure consistent visibility and performance optimization across diverse AI systems, even in multi-model environments.

PromptLayer is built with enterprise compliance in mind, offering robust governance tools like audit trails and access controls. Every prompt interaction is logged with detailed metadata, including timestamps, user identifiers, and response information, creating a comprehensive record for compliance reviews.

The platform employs role-based access controls to protect sensitive data, allowing administrators to limit access to confidential information or system settings. It also includes automated data retention policies, which archive or delete logs in line with organizational requirements. Teams can set up alerts for unusual activity, such as security risks or compliance violations, and export data in standard formats for integration with other compliance systems.
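
A time-based retention rule can be expressed in a few lines. This sketch assumes each log entry carries a timezone-aware `timestamp`; the 90-day window in the usage example is arbitrary, and whether expired entries are archived or deleted is left to the caller.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Optional, Tuple

def apply_retention(logs: List[dict], days: int,
                    now: Optional[datetime] = None) -> Tuple[List[dict], List[dict]]:
    """Split logs into (kept, expired) using a fixed retention window.
    Expired entries would then be archived or deleted per policy."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=days)
    kept = [entry for entry in logs if entry["timestamp"] >= cutoff]
    expired = [entry for entry in logs if entry["timestamp"] < cutoff]
    return kept, expired
```

Mixing naive and timezone-aware datetimes raises a `TypeError` on comparison, which is why the sketch requires aware timestamps throughout.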

One of PromptLayer’s standout features is its ability to track and analyze costs in detail. By calculating expenses based on token usage and provider pricing, the platform helps teams identify high-cost prompts and optimize token efficiency.
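
Cost per request is each token count multiplied by its per-token price, summed over input and output. The prices below are hypothetical placeholders rather than any provider's actual rates, and the request records are invented for illustration.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical prices in USD per 1M tokens; real rates vary by provider and change often.
PRICING: Dict[str, Dict[str, float]] = {
    "premium-model": {"input": 10.00, "output": 30.00},
    "economy-model": {"input": 0.50, "output": 1.50},
}

def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    rates = PRICING[model]
    # Input and output tokens are usually priced separately.
    return (input_tokens * rates["input"] + output_tokens * rates["output"]) / 1_000_000

def top_prompts_by_cost(requests: List[dict], n: int = 3) -> List[Tuple[str, float]]:
    """Total cost per prompt ID, most expensive first."""
    totals: Dict[str, float] = defaultdict(float)
    for r in requests:
        totals[r["prompt_id"]] += request_cost(r["model"], r["input_tokens"], r["output_tokens"])
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)[:n]
```

For example, 1,000 input and 500 output tokens on `premium-model` cost (1,000 × 10 + 500 × 30) / 1,000,000 = $0.025 under these assumed rates.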

The dashboard provides real-time cost monitoring, complete with alerts when spending surpasses predefined limits. Teams can review cost trends, compare the efficiency of different prompt strategies, and discover opportunities to cut unnecessary expenses. The platform also includes budget allocation tools, enabling organizations to assign spending limits for specific teams, projects, or workflows.
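
Per-team budgets with an early-warning threshold can be modeled with a small class. This is a hypothetical sketch, not PromptLayer's implementation; the 80% alert level and the choice to block requests over the hard limit are assumptions.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class Budget:
    team: str
    limit_usd: float
    alert_at: float = 0.8  # fraction of the limit that triggers a warning
    spent_usd: float = 0.0
    alerts: List[str] = field(default_factory=list)

    def record(self, cost_usd: float) -> bool:
        """Add spend; return False (and alert) if it would exceed the limit."""
        if self.spent_usd + cost_usd > self.limit_usd:
            self.alerts.append(f"{self.team}: blocked, limit ${self.limit_usd:.2f} reached")
            return False
        before = self.spent_usd
        self.spent_usd += cost_usd
        threshold = self.alert_at * self.limit_usd
        # Warn only on the call that first crosses the threshold.
        if before < threshold <= self.spent_usd:
            self.alerts.append(f"{self.team}: {self.alert_at:.0%} of budget used")
        return True
```

With a $100 limit, spending $70 and then $15 produces a single "80% of budget used" alert, and a further $20 request is blocked.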

Beyond basic cost reporting, PromptLayer offers performance-per-dollar metrics, giving teams a clearer picture of how to balance costs with output quality. This helps organizations decide when to use premium models versus more economical options, depending on the task at hand. These insights support the goal of streamlining AI workflows and improving efficiency across enterprise operations.
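
One simple way to express performance per dollar is an evaluation score divided by cost per call, applied only to models that clear a minimum quality bar. The scores, costs, and model names below are hypothetical.

```python
from typing import List

def quality_per_dollar(quality: float, cost_usd: float) -> float:
    return quality / cost_usd

def pick_model(candidates: List[dict], min_quality: float) -> str:
    """Among models that clear the quality bar, return the one with the best
    quality per dollar."""
    eligible = [c for c in candidates if c["quality"] >= min_quality]
    if not eligible:
        raise ValueError("no candidate meets the quality bar")
    best = max(eligible, key=lambda c: quality_per_dollar(c["quality"], c["cost_usd"]))
    return best["name"]

# Hypothetical evaluation scores (0 to 1) and average cost per call.
CANDIDATES = [
    {"name": "premium-model", "quality": 0.92, "cost_usd": 0.025},
    {"name": "economy-model", "quality": 0.85, "cost_usd": 0.0013},
]
```

With a bar of 0.80 the economy model wins on quality per dollar; raising the bar to 0.90 leaves only the premium model, which mirrors the premium-versus-economical decision described above.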

OpenPrompt is an open-source framework aimed at research and development in prompt engineering, offering a flexible base for experimenting with and building custom prompting strategies. Unlike the platforms above, which prioritize operational tracing and governance, OpenPrompt emphasizes foundational research. For now, detailed information about features like integration methods, workflow automation, and template management remains limited, so consult the official documentation for the most accurate and up-to-date details.

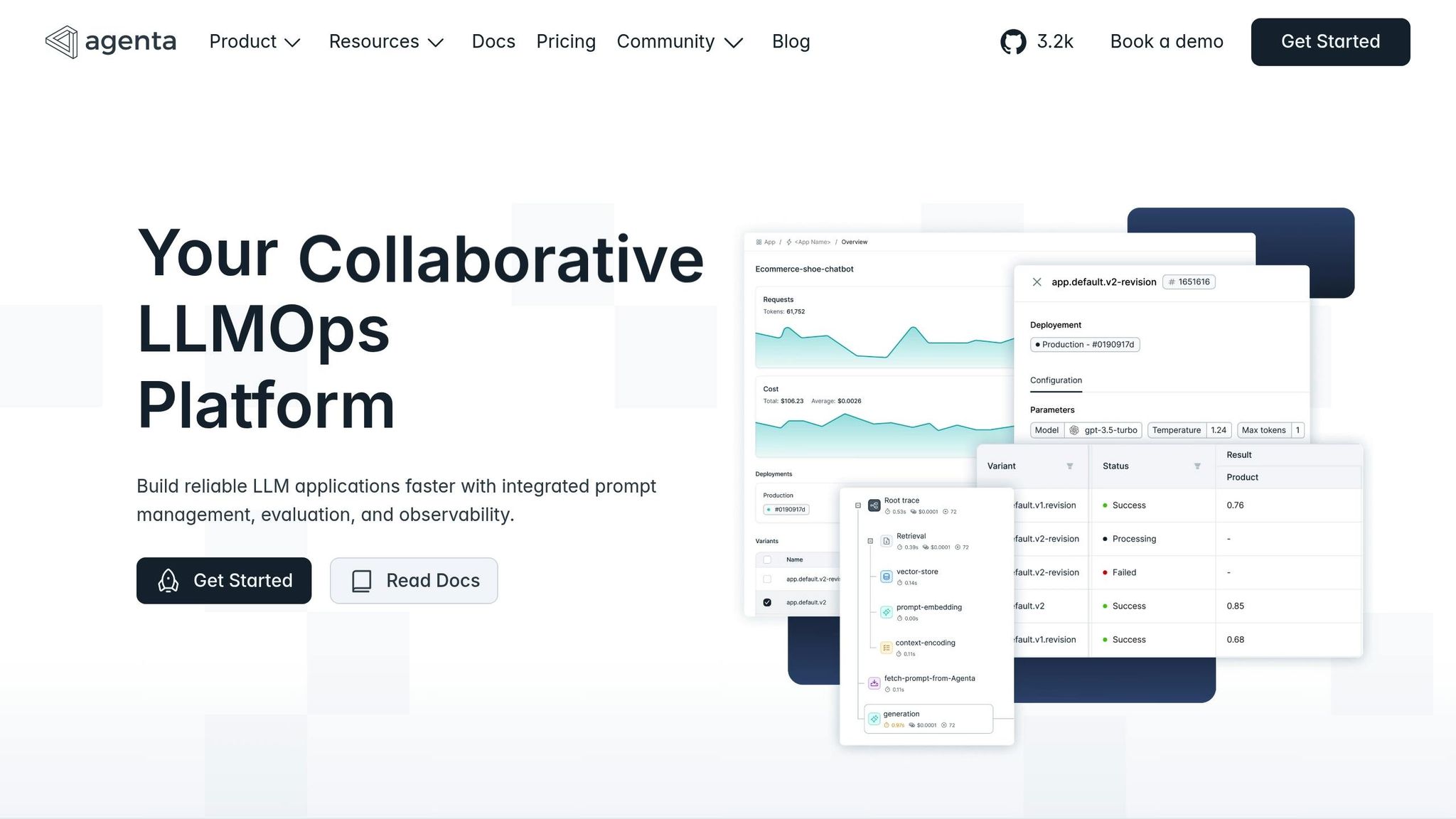

Agenta connects prompt experimentation with production deployment. It gives developers and AI teams scalable tools to build, test, and deploy AI applications while keeping visibility into performance. This unified framework underpins the capabilities outlined below.

Agenta's Model Hub simplifies the integration of various AI models, streamlining workflows that involve multiple providers. Introduced in April 2025, this feature addresses the increasing complexity of managing multi-model AI setups.

"Connect any model: Azure OpenAI, AWS Bedrock, self-hosted models, fine-tuned models – any model with an OpenAI-compatible API." - Mahmoud Mabrouk, Author, Agenta Blog

The Model Hub connects models from major providers like Azure OpenAI and AWS Bedrock, as well as self-hosted and fine-tuned solutions. By centralizing these integrations, it spares developers from juggling multiple connection points, saving time and reducing errors.

Agenta also extends its reach by supporting popular AI agent frameworks. In July 2025, it introduced observability integrations with OpenAI Agents SDK, PydanticAI, LangGraph, and LlamaIndex, with plans for additional integrations in the future.

Agenta's playground lets teams define tools through JSON schema, test prompt-based tool calls, and run image workflows for vision models. The same setup is shared across the playground, test sets, and evaluation tools, keeping the workflow consistent from one stage to the next.
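
For reference, a tool defined with JSON Schema in the widely used OpenAI-compatible `tools` format looks like the dictionary below. Whether Agenta's playground expects exactly this shape is an assumption to verify against its documentation. The `get_weather` tool and the lightweight argument check are hypothetical; a production setup would use a full JSON Schema validator instead.

```python
import json
from typing import List

# A tool described with JSON Schema in the OpenAI-compatible "tools" format.
# The tool name and its fields are hypothetical.
get_weather_tool = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string", "description": "City name"},
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["city"],
        },
    },
}

def check_tool_call(tool: dict, arguments_json: str) -> List[str]:
    """Minimal check of a model's tool-call arguments: required keys,
    unknown keys, and enum values only."""
    schema = tool["function"]["parameters"]
    args = json.loads(arguments_json)
    problems = [f"missing '{k}'" for k in schema.get("required", []) if k not in args]
    for key, value in args.items():
        spec = schema["properties"].get(key)
        if spec is None:
            problems.append(f"unexpected '{key}'")
        elif "enum" in spec and value not in spec["enum"]:
            problems.append(f"'{key}' must be one of {spec['enum']}")
    return problems
```

Checks like this are what a test set can run against recorded tool calls to catch a model that omits required arguments or invents values.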

Teams can experiment with various models in the playground, systematically evaluate their performance using test sets, and deploy configurations to production with minimal setup. This streamlined process ensures consistency and efficiency throughout the development lifecycle.

Agenta enhances governance by equipping teams with observability tools to monitor and manage AI deployments effectively. The centralized Model Hub serves as a control center for managing model access and configurations, enabling teams to enforce policies consistently. This reduces the risk of configuration drift or unauthorized access, ensuring that deployments remain secure and compliant.

Selecting the right prompt engineering platform hinges on your goals, budget, and technical needs. Each solution brings its own strengths tailored to specific use cases.

| Platform | Key Strengths | Model Support | Pricing Structure | Best Fit Use Case |
|---|---|---|---|---|
| Prompts.ai | Unified interface for 35+ models, FinOps cost tracking, enterprise governance, up to 98% cost savings | GPT-5, Claude, LLaMA, Gemini, Grok-4, Flux Pro, Kling, and others (35+ in total) | Pay-as-you-go TOKN credits; Personal: $0-$99/month; Business: $99-$129/member/month | Enterprise teams needing centralized AI orchestration with cost control |
| LangChain | Comprehensive framework for AI applications, extensive community support, modular architecture | OpenAI, Anthropic, Hugging Face, Azure OpenAI, AWS Bedrock, and 100+ providers | Open source (free); LangSmith starts at $39/month for teams | Developers building complex AI applications with custom workflows |
| PromptLayer | Advanced prompt versioning, A/B testing capabilities, detailed analytics and logging | OpenAI, Anthropic Claude, Google PaLM, Cohere, and other major LLM providers | Free tier available; Pro plans from $49/month; Enterprise custom pricing | Teams focused on prompt optimization and performance tracking |
| OpenPrompt | Research-oriented framework, academic backing, standardized prompt templates | BERT, GPT, T5, RoBERTa, and other transformer-based models | Open source (completely free) | Researchers and academics working on prompt-based learning |
| Agenta | Integrated experimentation-to-production pipeline, Model Hub for multi-provider management | Azure OpenAI, AWS Bedrock, self-hosted models, OpenAI-compatible APIs | Usage-based pricing with free development tiers and custom enterprise rates | Development teams requiring seamless testing-to-deployment workflows |

This comparison underscores key differences in cost, compatibility, and ease of deployment. For organizations with fluctuating AI usage, Prompts.ai's pay-as-you-go TOKN credits let spending track actual consumption. LangChain and OpenPrompt are free and open source, making them the most budget-friendly entry points for developers comfortable with open-source tools (LangChain's LangSmith tooling is a paid add-on), while PromptLayer and Agenta follow tiered SaaS pricing with features tailored to specific needs.

When it comes to model compatibility, Prompts.ai supports over 35 cutting-edge models, which suits teams with diverse use cases. LangChain, on the other hand, integrates with the broadest range of providers, with support for more than 100. Meanwhile, OpenPrompt focuses on transformer-based models, offering deep specialization but with a narrower scope.

Enterprise features also vary significantly. Prompts.ai leads with robust enterprise deployment capabilities, while Agenta excels in production-focused workflows, though it may require a more technical setup. LangChain and OpenPrompt cater primarily to developers and often necessitate additional infrastructure for scaling to enterprise levels.

The learning curve is another critical factor. Prompts.ai simplifies onboarding with guided training and certification programs, making it accessible to non-technical teams. PromptLayer offers user-friendly interfaces for prompt management, while LangChain and OpenPrompt demand programming knowledge, making them better suited for technically skilled teams.

In terms of speed, Prompts.ai and PromptLayer allow teams to begin optimizing prompts almost immediately, ensuring quick deployment. Agenta streamlines the transition from development to production with its unified playground, while LangChain and OpenPrompt may require more setup time but deliver greater customization for specialized needs. These distinctions highlight the unique strengths of each platform, helping users align their choice with their specific requirements.

Prompt engineering has become a cornerstone for organizations adopting AI technologies. The five solutions highlighted here show how structured approaches to prompt design, cost management, and workflow automation can significantly improve team performance.

Prompts.ai stands out by offering seamless access to multiple models and strong cost efficiency for enterprises aiming to streamline AI operations. LangChain is a favorite among developers for its extensive framework and active community support. PromptLayer focuses on prompt versioning and A/B testing, OpenPrompt serves academic research on prompt-based learning, and Agenta simplifies the move from experimentation to production deployment.

The importance of prompt engineering spans multiple disciplines, making it crucial for optimizing large language model (LLM) performance and ensuring outputs that are reliable, safe, and practical. Companies that prioritize building robust prompt engineering systems today will gain a lasting competitive edge in the future.

To refine your AI workflows, take a strategic approach: evaluate your organization's specific requirements in areas like model variety, cost management, and compliance. Assess your team's technical capabilities to determine whether you need user-friendly tools or can work with developer-focused platforms. Additionally, take advantage of free tiers and trial periods offered by these solutions. For instance, LangChain and OpenPrompt provide immediate access for testing, while Prompts.ai, PromptLayer, and Agenta offer trial periods to explore enterprise-level features. A targeted strategy will help your organization stay ahead in the evolving AI landscape.

The financial benefits alone make this a pressing priority. With AI software costs eating up a growing portion of tech budgets, platforms that optimize usage tracking and resource allocation can deliver substantial savings. By focusing on cost efficiency, interoperability, and governance, these tools offer a clear return on investment. Investing in prompt engineering today is a decisive step toward securing your organization's future success.

Prompt engineering allows businesses to significantly reduce AI operational costs by crafting prompts that require fewer tokens. This approach decreases processing time and computational demands, leading to lower expenses when running AI models.

Beyond cost savings, carefully designed prompts enhance efficiency by cutting down on irrelevant outputs and reducing energy consumption. This enables businesses to scale their AI systems in a cost-effective manner while maintaining high performance. With strategic prompt engineering, companies can streamline operations, saving money and boosting productivity simultaneously.

Prompts.ai offers robust governance and compliance tools tailored for enterprises that must meet stringent regulatory requirements. These features include secure API management, detailed audit trails, and flexible permission controls, all designed to safeguard data and align with internal policies.

By embedding governance directly into AI workflows, the platform enables real-time tracking, risk evaluation, and policy implementation. This approach promotes transparency, accountability, and ethical AI practices, making it a smart choice for organizations that prioritize compliance and operational trustworthiness.

LangChain provides an open-source framework designed to make building applications with large language models more straightforward. Its modular and flexible structure allows developers to integrate multiple LLMs and external data sources with minimal coding effort, speeding up the development and refinement of AI-driven tools.

The platform's user-friendly tools and APIs are ideal for creating applications such as chatbots, question-answering systems, and AI-powered agents. By simplifying workflows and cutting through complexity, LangChain enables developers to channel their energy into innovation and quickly roll out advanced AI solutions.