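Prompt routing can substantially reduce AI costs and simplify workflows when done right. In 2026, businesses are using smarter tools to optimize AI operations, cut expenses, and improve efficiency. This article covers five approaches: rules-based routing, config-driven routing through a unified API, semantic routing with retrieval-augmented generation (RAG), open-source and self-hosted routing, and prompt libraries with snippet management.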

These strategies help businesses save up to 70% on AI costs by combining smarter routing, reusable templates, and better resource allocation. Start by auditing your workflows, choosing the right tools, and focusing on cost-efficient models to scale your operations effectively.

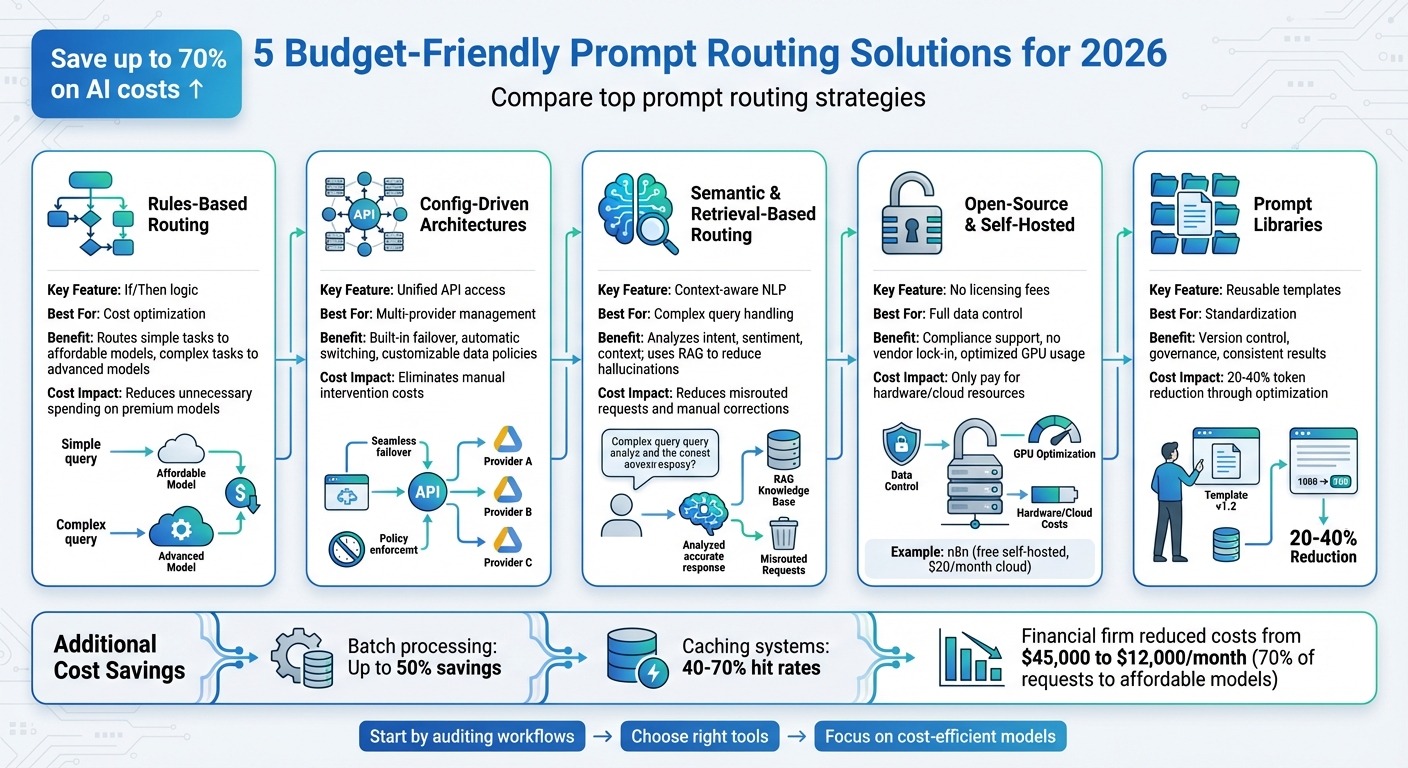

5 Budget-Friendly Prompt Routing Solutions Comparison Chart 2026

Rules-based routing tools rely on straightforward if/then logic to guide prompts, data, and tasks within AI workflows. This structured approach ensures transparency and predictability in decision-making, making it a reliable foundation for efficient AI operations.

One of the standout benefits is cost optimization. These tools assign simpler tasks to more affordable models or internal systems, reserving the more advanced (and expensive) AI models for handling complex, high-priority tasks. This targeted distribution helps manage resources effectively.
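As a rough illustration of this kind of if/then distribution, here is a minimal sketch. The model names, the `Task` fields, and the 50-word threshold are all assumptions made for the example, not values from any particular tool.

```python
from dataclasses import dataclass

# Hypothetical model tiers; these names are placeholders, not real products.
CHEAP_MODEL = "small-model"
PREMIUM_MODEL = "large-model"


@dataclass
class Task:
    text: str
    priority: str = "normal"  # assumed values: "normal" or "high"


def route(task: Task, max_cheap_words: int = 50) -> str:
    """Pick a model tier for a task using plain if/then rules."""
    # Rule 1: high-priority work always goes to the premium model.
    if task.priority == "high":
        return PREMIUM_MODEL
    # Rule 2: long inputs are treated as complex (a crude proxy for complexity).
    if len(task.text.split()) > max_cheap_words:
        return PREMIUM_MODEL
    # Default: everything else goes to the cheaper model.
    return CHEAP_MODEL
```

Because every decision is an explicit rule, it is easy to explain why a given request went to a given model, which is the transparency benefit described above.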

In addition to cost savings, rules-based systems are excellent for automating repetitive tasks, reducing errors, and allowing teams to focus on more strategic initiatives. They also play a key role in data validation, ensuring only high-quality inputs are sent to your AI models.

For even greater efficiency, consider combining traditional if/then rules with natural-language assessments. These advanced routers evaluate content and AI confidence levels to determine the best course of action. This hybrid approach integrates smoothly into existing workflows while keeping costs in check.
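One way to picture the hybrid approach is a function that applies hard rules first and only then consults a confidence score. In this sketch the `confidence` value is assumed to come from some upstream classifier or from the cheaper model itself, and the `"refund"` keyword rule, the route names, and the 0.8 threshold are hypothetical.

```python
def hybrid_route(text: str, confidence: float, threshold: float = 0.8) -> str:
    """Combine fixed rules with a confidence check to choose a route."""
    # Data validation rule: empty input never reaches a model.
    if not text.strip():
        return "reject"
    # Hypothetical business rule: refund requests always get human review.
    if "refund" in text.lower():
        return "human_review"
    # Confidence check: keep the request on the cheap model only when the
    # upstream confidence score is high enough.
    if confidence >= threshold:
        return "cheap_model"
    return "premium_model"
```

The rules stay predictable, while the confidence check handles the cases that fixed rules cannot anticipate.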

Config-driven architectures simplify AI integration by offering a unified API that connects to multiple AI models. Instead of juggling individual integrations for each language model provider, you can set routing rules once and let the system handle everything automatically.

This setup not only streamlines access but also ensures reliability with built-in failover mechanisms. If one provider experiences downtime, your workflows automatically switch to an alternative model, keeping operations smooth and uninterrupted. This hands-free continuity minimizes disruptions and avoids the costly delays associated with manual intervention.
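The failover behavior can be sketched as a loop over an ordered list of providers. Everything here is illustrative: `ProviderError`, the provider names, and the two stand-in functions are assumptions, and a real gateway would wrap actual client calls and likely add timeouts and retries.

```python
from typing import Callable, Sequence, Tuple


class ProviderError(Exception):
    """Raised by a provider call when the provider is unavailable (hypothetical)."""


def call_with_failover(
    prompt: str,
    providers: Sequence[Tuple[str, Callable[[str], str]]],
) -> Tuple[str, str]:
    """Try each provider in order; return (provider_name, response) from the first that works."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            # Record the failure and fall through to the next provider.
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))


# Stand-in provider functions used only to demonstrate the control flow.
def primary(prompt: str) -> str:
    raise ProviderError("service unavailable")


def backup(prompt: str) -> str:
    return f"echo: {prompt}"


used, answer = call_with_failover("hello", [("primary", primary), ("backup", backup)])
```

In this run the primary provider fails, so `used` ends up as `"backup"` without any manual intervention.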

Customizable data policies add another layer of control, allowing sensitive prompts to be routed exclusively to trusted models. This reduces the risks and expenses tied to potential data breaches while maintaining the flexibility to choose different providers for specific tasks.

From a budget perspective, treating routing logic as configuration rather than code delivers significant advantages. Teams can adjust settings like model preferences, fallback rules, and cost limits without rewriting applications. This speeds up deployment and reduces the engineering time required to fine-tune AI spending. Low-code visual tools take it a step further by enabling non-technical users to orchestrate workflows without relying on extensive development resources. By empowering both technical and business teams to manage routing decisions, organizations can boost efficiency without increasing staffing costs.
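To show what "routing logic as configuration" can look like, the sketch below keeps model preferences, a fallback rule, a cost limit, and a data policy in a plain dictionary. All keys, model names, and prices are hypothetical; in practice such settings would more likely live in a YAML or JSON file managed outside the application code.

```python
# Hypothetical routing configuration; every name and price is a placeholder.
ROUTING_CONFIG = {
    "default_model": "small-model",
    "fallback_model": "backup-model",
    "trusted_models": ["on-prem-model"],  # data policy for sensitive prompts
    "max_cost_per_request_usd": 0.01,
    "model_cost_usd": {
        "small-model": 0.002,
        "large-model": 0.03,
        "on-prem-model": 0.005,
        "backup-model": 0.004,
    },
}


def pick_model(config: dict, preferred: str = "", sensitive: bool = False) -> str:
    """Choose a model from configuration alone; no routing rules are hard-coded."""
    # Data policy: sensitive prompts only go to a trusted model.
    if sensitive:
        return config["trusted_models"][0]
    # No preference given: use the configured default.
    if not preferred:
        return config["default_model"]
    # Cost limit: unknown or over-budget models fall back to the configured fallback.
    cost = config["model_cost_usd"].get(preferred)
    if cost is None or cost > config["max_cost_per_request_usd"]:
        return config["fallback_model"]
    return preferred
```

Raising `max_cost_per_request_usd` in the configuration is enough to let requests through to `large-model`; the function itself does not change.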

Expanding on traditional rule-based and configuration-driven approaches, semantic routing uses advanced language understanding to refine how prompts are distributed. By incorporating machine learning and natural language processing, it moves beyond basic keyword matching. These systems analyze factors like intent, sentiment, and context to interpret complex queries and automatically route them to the most fitting workflow. This precision significantly reduces misrouted requests and limits the need for manual corrections.
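The core mechanic, scoring a query against a description of each route and picking the closest match, can be sketched with a toy similarity function. This example uses bag-of-words cosine similarity as a stand-in for learned embeddings, so it only captures word overlap, not real intent or sentiment. The route names, descriptions, and the 0.1 threshold are assumptions.

```python
import math
import re
from collections import Counter

# Hypothetical routes, each described by a few representative words.
ROUTES = {
    "billing": "invoice payment refund charge billing",
    "tech_support": "error crash bug login password install",
}


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. A real system would use an embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def semantic_route(query: str, min_score: float = 0.1) -> str:
    """Return the best-matching route, or 'fallback' if nothing is similar enough."""
    q = embed(query)
    scores = {name: cosine(q, embed(description)) for name, description in ROUTES.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] >= min_score else "fallback"
```

Swapping `embed` for a real embedding model would keep the same routing structure while matching on meaning rather than shared words.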

Taking this a step further, Retrieval-Augmented Generation (RAG) enhances the process by anchoring AI responses in relevant information from your existing knowledge base. Instead of relying solely on high-parameter models for every query, the system retrieves contextually appropriate documents first. This approach minimizes inaccuracies, often referred to as hallucinations, and improves response reliability.
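The retrieve-first step can be sketched as follows. The knowledge base entries are invented sample data, and retrieval here is simple word overlap; a production setup would typically use a vector store and embeddings instead.

```python
import re
from typing import List

# Invented sample documents standing in for an existing knowledge base.
KNOWLEDGE_BASE = [
    "Refunds are processed within 5 business days.",
    "Password resets require a verified email address.",
    "Invoices are issued on the first day of each month.",
]


def tokens(text: str) -> set:
    """Lowercased alphabetic words in the text."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query: str, docs: List[str], top_k: int = 1) -> List[str]:
    """Return up to top_k documents that share at least one word with the query."""
    q = tokens(query)
    ranked = sorted(docs, key=lambda d: len(q & tokens(d)), reverse=True)
    return [d for d in ranked[:top_k] if q & tokens(d)]


def build_prompt(query: str, docs: List[str]) -> str:
    """Ground the prompt in retrieved context before it is sent to any model."""
    context = "\n".join(retrieve(query, docs)) or "(no relevant documents found)"
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

Because the answer is anchored in retrieved text, a smaller model may be sufficient for queries the knowledge base already covers.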

Modern generative AI platforms now offer these advanced capabilities with minimal setup requirements. By intelligently routing queries based on context, these systems not only streamline workflows but also help cut operational costs.

Open-source and self-hosted routing solutions provide the freedom to manage your AI infrastructure without the burden of licensing fees. Instead of paying for software licenses, your costs are limited to hardware and cloud resources. This approach allows you to optimize GPU usage and reduce cold starts, which can significantly lower expenses. At the same time, these solutions grant unmatched control over your data and compliance processes.

In addition to cost advantages, these tools address essential compliance requirements. They support data residency, secure secret management, and role-based access control. By keeping your data within your own systems, you avoid vendor lock-in, giving you the flexibility to switch cloud providers or transition to on-premise setups as your needs change.

However, open-source platforms come with their own challenges. Unlike proprietary services that handle maintenance for you, open-source tools require your engineering team to manage upgrades and security. To bridge this gap, the "Managed Open Core" model has gained traction. It combines open-source frameworks like MLflow or BentoML with proprietary managed services, offering a balance of flexibility and reliability.

A great example of this approach is n8n, a platform used by technically skilled teams to build advanced workflows. In 2025, n8n was leveraged to create multi-agent workflows that generated social media posts from news stories and crafted replies using retrieval-augmented generation (RAG) with podcast transcripts stored as metadata. The platform offers a free self-hosted option, while its cloud plans start at $20 per month. With support for custom code in JavaScript and Python, along with source-available licensing, n8n provides the extensibility required for intricate integrations.

For teams equipped with the technical expertise to manage infrastructure, self-hosted solutions can deliver substantial long-term benefits. Just be sure to account for the engineering resources needed to maintain, secure, and scale these systems as your AI workflows expand.

Expanding on earlier strategies for routing, prompt libraries simplify development by creating a standardized approach to AI instructions. These libraries, combined with snippet management tools, allow you to develop prompts once and deploy them consistently across your team's workflows. Instead of drafting new instructions every time, you can store proven prompts in a centralized repository, making them accessible for team-wide use. This method ensures more uniform results in tasks like customer service, content creation, and data processing, all while reducing the need for constant supervision.

This approach also delivers cost savings by cutting out repetitive work. For instance, a successful email prompt used by one team can be repurposed for outreach tasks, saving time and reducing errors. Analysts highlight that future efficiency gains will depend heavily on effective prompt management practices, including version control, governance, reuse, and distribution. A well-organized prompt library further improves efficiency by categorizing prompts by use case, ownership, approval status, and performance metrics. This structure makes it easier to find the right prompt quickly and ensures safer reuse.

For even faster deployment, pair your centralized repository with a lightweight text expander. This setup simplifies inserting prompts into workflows while maintaining a single source of truth, reducing mistakes and troubleshooting time.

To make prompt management accessible to everyone on your team, consider no-code or low-code tools that let non-technical users create and edit prompts. Customizable templates for tasks like proposals, reports, or customer responses can streamline operations. Additionally, tracking prompt performance and retiring underperforming prompts keeps your library efficient and cost-effective. These practices complement the routing approaches described earlier and further support the automation of AI workflows.

Selecting the right prompt routing solution isn’t about finding a one-size-fits-all tool - it’s about aligning your workflow with a mix of cost-conscious strategies. As Eduardo Barrientos puts it:

"The most cost-effective AI strategy isn't a single model - it's the ability to adapt across models, providers, and workloads."

This adaptability is crucial, especially when hidden costs - like retry overhead, quality assurance, infrastructure, and personnel - can inflate base token expenses by 2–5x if not carefully managed.

Before committing to a solution, take a close look at your specific needs. Addressing hidden costs early allows you to tailor your routing strategy effectively. Think about factors such as where your data is stored (data gravity), your security requirements, the speed of iteration you need, and the scale of your operations. For instance, a financial services firm cut its monthly LLM costs from $45,000 to $12,000 in September 2025 by using intelligent routing. It directed 70% of its requests to more affordable models while maintaining the same quality. This kind of thoughtful evaluation lays the groundwork for integrating various routing methods smoothly.
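To put the financial services example in perspective, the short calculation below works out the size of the reduction from the two monthly figures quoted above. The annualized number is an extrapolation that assumes the monthly saving stays constant, which the source does not state.

```python
def savings_summary(before_usd: float, after_usd: float) -> dict:
    """Summarize the change between two monthly cost figures."""
    saved = before_usd - after_usd
    return {
        "monthly_saving_usd": saved,
        "reduction_pct": round(100 * saved / before_usd, 1),
        # Assumption: the monthly saving holds for a full year.
        "annualized_saving_usd": saved * 12,
    }


summary = savings_summary(45_000, 12_000)
# summary["monthly_saving_usd"] is 33000 and summary["reduction_pct"] is 73.3
```

The same function can be pointed at your own before and after figures when auditing a routing change.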

Once your requirements are clear, explore how different routing strategies can work together to drive down costs. Combining approaches often yields better results than relying on a single method. For example, pairing a structured prompt library with intelligent routing can reduce token usage by 20–40% through prompt optimization. Meanwhile, caching systems can achieve hit rates of 40–70%, significantly cutting costs for many applications.
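As a sketch of how a caching layer avoids repeat spending, the class below stores responses keyed by a normalized prompt and tracks its own hit rate. It is an exact-match cache under simplifying assumptions: `call_model` is a hypothetical stand-in for a real model call, and there is no expiry or size limit.

```python
import hashlib
from typing import Callable, Dict


class PromptCache:
    """Exact-match response cache keyed by a normalized prompt."""

    def __init__(self, call_model: Callable[[str], str]) -> None:
        self._call_model = call_model  # hypothetical function that calls a model
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str) -> str:
        # Normalize case and whitespace so trivially different prompts share a key.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def ask(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        # Cache miss: pay for one model call and remember the answer.
        self.misses += 1
        self._store[key] = self._call_model(prompt)
        return self._store[key]

    @property
    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0
```

Every hit is a request that never reaches a paid model, so the measured `hit_rate` gives a direct view of how much the cache is saving for a given workload.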

Take the time to audit your AI workflows to identify areas of overspending or inefficiency. Implement measures like batch processing, which can save up to 50%, and set clear routing rules based on task complexity. Also, keep an eye on pricing predictability - unexpected cost spikes can be just as damaging as high base costs. Prioritize models that offer stable pricing structures as your usage scales. This kind of auditing and planning ensures you’re choosing the right mix of tools and strategies for cost-effective operations.

The strategies discussed here offer a practical guide to building efficient AI workflows. Experiment with different combinations, monitor their impact on both performance and budget, and refine your approach as your needs change. By crafting the right routing strategy today, you can set the stage for scalable and efficient AI operations in the future.

Rules-based routing is a smart way to cut AI expenses by ensuring tasks are assigned to the most efficient and cost-effective models. It evaluates factors like task complexity and performance needs, reserving high-cost resources for situations where they’re truly required. This targeted approach helps avoid unnecessary spending.

In addition to saving money, this method enhances operational efficiency by simplifying workflows and making better use of available resources. It’s a practical solution for managing AI-driven processes effectively.

Open-source routing tools bring several standout advantages to managing AI workflows. First, they provide transparency, letting you clearly see how the system functions. This openness builds trust and ensures you're always in control.

These tools are also highly adaptable, allowing you to tailor them to fit your unique workflow needs. Unlike rigid, pre-packaged solutions, they give you the freedom to design systems that suit your specific goals.

One of the biggest perks? Cost efficiency. Most open-source tools carry no licensing fees, so your costs are limited to hardware, cloud resources, and the engineering time needed to run them. On top of that, they come with community-driven support, offering access to shared resources, expertise, and regular updates. This combination of flexibility, affordability, and collaboration makes open-source solutions a smart choice for those looking to streamline AI operations without breaking the bank.

Prompt libraries simplify AI workflows by storing proven prompts in a centralized repository, so teams reuse tested instructions instead of drafting new ones for each task. This minimizes the need for manual adjustments, produces more consistent results, and speeds up task execution.

Some prompt management tools also support smooth model switching, prompt chaining, and real-time analytics, making it easier to handle intricate AI operations while maintaining cost efficiency.