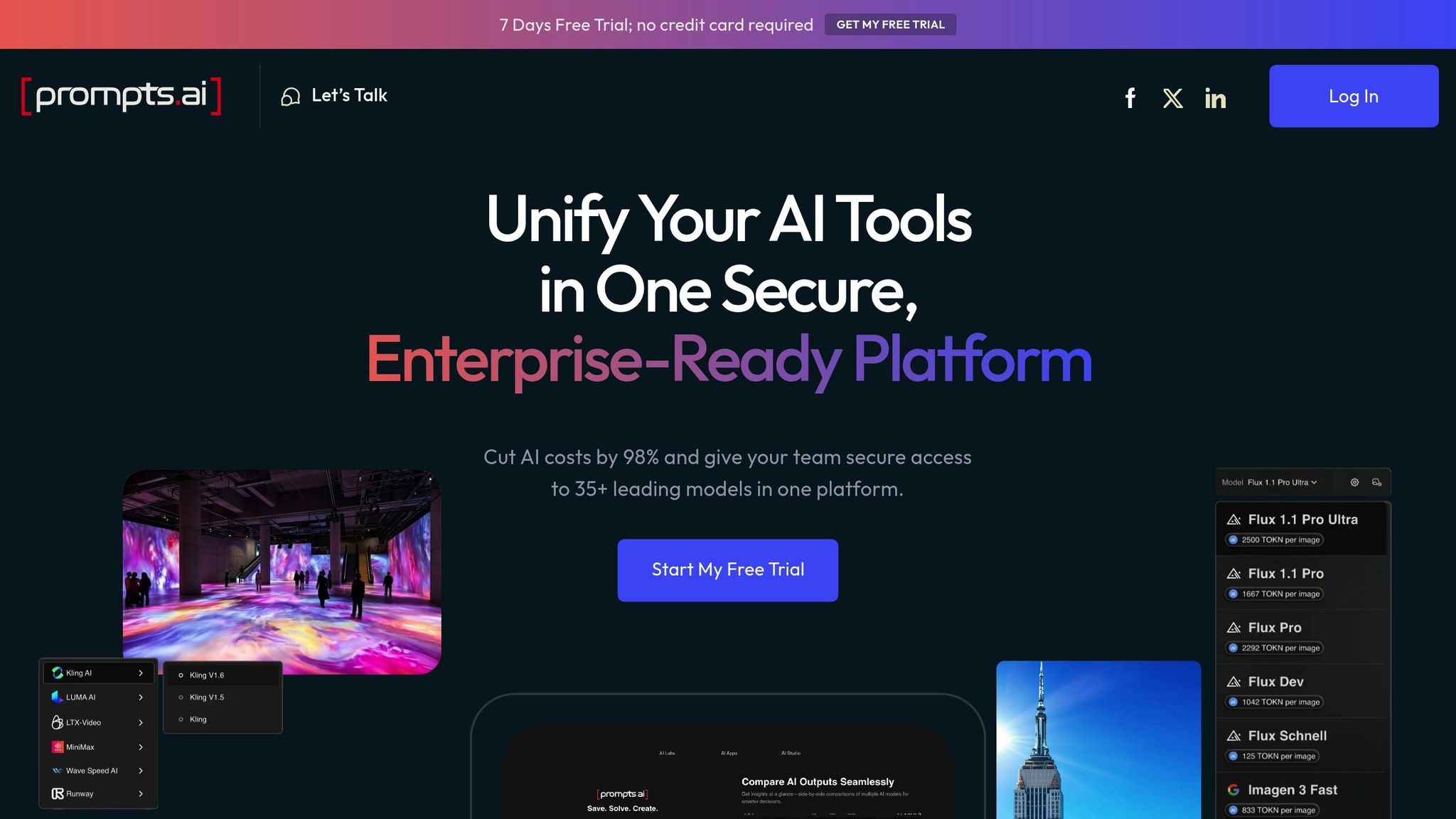

Prompts.ai is the leading choice for enterprises managing large-scale AI operations. It simplifies AI workflows by unifying 35+ top language models, offering real-time cost tracking, and ensuring compliance with enterprise-grade security. Designed for scalability, it handles complex workloads, optimizes expenses with token-level tracking, and fosters team collaboration through shared workspaces and role-based permissions. Prompts.ai’s pay-as-you-go TOKN credits align costs with usage, making it a cost-efficient and secure platform for AI orchestration.

Prompts.ai combines real-time governance, financial transparency, and collaborative tools to meet the demands of enterprise AI. It eliminates inefficiencies, reduces costs, and ensures compliance, all within a single platform.

For enterprises seeking to streamline AI operations, Prompts.ai is the clear choice.

To support thousands of users while ensuring security and managing costs, enterprise AI command centers need to deliver a powerful combination of features. These platforms must address three critical areas to grow alongside organizational needs: security, financial transparency, and collaboration.

A strong security framework is the backbone of any scalable AI command center. This starts with enterprise-grade authentication, which integrates with identity providers like Active Directory, Okta, or SAML-based systems. Features like single sign-on (SSO) not only streamline user access but also maintain strict security protocols across workflows.

Role-based access control (RBAC) is another essential feature, especially for large teams with diverse responsibilities. For example, finance teams may only require view-only access to cost analytics, while data scientists need deployment permissions without budgetary controls. By assigning granular permissions, organizations can ensure users access only the tools and data they need, minimizing risks.
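The finance and data science example above can be sketched as a deny-by-default permission lookup. This is a minimal illustration, not Prompts.ai's API: the role names, the `Permission` members, and `is_allowed` are all hypothetical.

```python
from enum import Enum, auto


class Permission(Enum):
    VIEW_COSTS = auto()
    SET_BUDGETS = auto()
    DEPLOY_MODELS = auto()


# Hypothetical roles mirroring the example in the text.
ROLE_PERMISSIONS = {
    "finance_viewer": {Permission.VIEW_COSTS},     # view-only cost analytics
    "data_scientist": {Permission.DEPLOY_MODELS},  # deploy, no budget control
    "admin": set(Permission),                      # every permission
}


def is_allowed(role: str, permission: Permission) -> bool:
    """Deny by default: an unknown role has no permissions."""
    return permission in ROLE_PERMISSIONS.get(role, set())
```

Keeping the mapping as data rather than scattered `if` statements makes it easier to review who can do what during an audit.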

To protect sensitive information, the platform must encrypt data both at rest and in transit. AI workflows often handle confidential customer data, proprietary algorithms, and critical business insights. Encrypting at both stages safeguards this data throughout its journey, from input to processing to storage.

Audit trails play a vital role in compliance, logging every action with timestamps, user IDs, and detailed changes. This creates an immutable record that supports audits against frameworks and regulations such as SOC 2, GDPR, and HIPAA, while also aiding in forensic analysis when needed.
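One common way to make such a log tamper-evident is to chain entries by hash, so that editing any earlier entry invalidates everything after it. The sketch below assumes an in-memory list and a hypothetical `AuditLog` class. It shows the idea only, and a production system would typically add write-once storage on top.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only log where each entry commits to the one before it."""

    def __init__(self):
        self._entries = []

    @staticmethod
    def _digest(entry):
        # Hash every field except the stored hash itself.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()

    def record(self, user_id, action, details):
        prev_hash = self._entries[-1]["hash"] if self._entries else GENESIS_HASH
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user_id": user_id,
            "action": action,
            "details": details,  # must be JSON-serializable
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self._entries.append(entry)
        return entry

    def verify(self):
        """Return True only if no entry was altered, removed, or reordered."""
        prev_hash = GENESIS_HASH
        for entry in self._entries:
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True
```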

Finally, API security measures - including rate limiting, token authentication, and request validation - are crucial. As AI operations grow, API endpoints can become targets for malicious activities. These safeguards protect against unauthorized access while ensuring legitimate workflows remain uninterrupted.
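Rate limiting is often implemented with a token bucket, which allows short bursts while capping the sustained request rate. The sketch below is a generic, single-process illustration. The class name, parameters, and injectable `clock` are assumptions for the example, not part of any specific platform.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `rate_per_sec`."""

    def __init__(self, rate_per_sec, capacity, clock=time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self._clock = clock  # injectable so the limiter can be tested
        self._tokens = float(capacity)
        self._last = clock()

    def allow(self, cost=1.0):
        now = self._clock()
        # Refill in proportion to elapsed time, never above capacity.
        elapsed = now - self._last
        self._tokens = min(self.capacity, self._tokens + elapsed * self.rate)
        self._last = now
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False
```

In practice one bucket would be kept per API key or per user, so a single noisy client cannot exhaust capacity for everyone else.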

When paired with financial oversight, these security measures provide a solid foundation for scalable operations.

Effective financial management is key to scaling AI operations. Token-level tracking offers granular visibility into AI spending, pinpointing which teams and workflows consume the most resources. This insight allows for better resource allocation and cost management.
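As a rough illustration of token-level tracking, the sketch below prices each usage record and rolls the totals up by team or workflow. The model names, prices, and the `UsageRecord` shape are invented for the example. Real per-token rates vary by vendor and model.

```python
from collections import defaultdict
from dataclasses import dataclass
from decimal import Decimal

# Hypothetical prices in USD per 1,000 tokens.
PRICE_PER_1K = {
    "model-a": {"input": Decimal("0.50"), "output": Decimal("1.50")},
    "model-b": {"input": Decimal("0.10"), "output": Decimal("0.40")},
}


@dataclass(frozen=True)
class UsageRecord:
    team: str
    workflow: str
    model: str
    input_tokens: int
    output_tokens: int


def record_cost(record):
    """Cost of one call: input and output tokens are priced separately."""
    price = PRICE_PER_1K[record.model]
    total = (price["input"] * record.input_tokens
             + price["output"] * record.output_tokens)
    return total / 1000


def spend_by(records, key):
    """Sum cost per value of `key`, e.g. "team" or "workflow"."""
    totals = defaultdict(Decimal)
    for record in records:
        totals[getattr(record, key)] += record_cost(record)
    return dict(totals)
```

`Decimal` is used instead of `float` so that many small per-call costs add up without binary rounding drift.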

Real-time budget controls help organizations avoid unexpected cost overruns. Automated alerts can notify teams when spending nears preset thresholds, while hard limits can pause non-essential workflows to prevent runaway expenses. Allocating department-level budgets ensures individual teams stay within their limits without disrupting overall operations.
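The alert-then-pause behavior described above can be sketched as a small decision function. The 80% alert threshold and all names here are assumptions chosen for illustration. An actual platform would expose its own settings.

```python
from dataclasses import dataclass
from enum import Enum


class BudgetAction(Enum):
    OK = "ok"
    ALERT = "alert"
    PAUSE_NON_ESSENTIAL = "pause_non_essential"


@dataclass
class Budget:
    limit_usd: float
    alert_ratio: float = 0.8  # assumed: alert at 80% of the limit


def evaluate(budget, spent_usd):
    """Map current spend to an action: hard limit first, then soft alert."""
    if spent_usd >= budget.limit_usd:
        return BudgetAction.PAUSE_NON_ESSENTIAL
    if spent_usd >= budget.alert_ratio * budget.limit_usd:
        return BudgetAction.ALERT
    return BudgetAction.OK


def may_run(budget, spent_usd, essential):
    """Essential workflows keep running even after the hard limit is hit."""
    if essential:
        return True
    return evaluate(budget, spent_usd) is not BudgetAction.PAUSE_NON_ESSENTIAL
```

Giving each department its own `Budget` instance keeps one team's overrun from pausing another team's workflows.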

By implementing cost-to-outcome mapping, organizations can directly link AI investments to measurable business results. For instance, the cost of deploying a model can be compared to the operational savings it generates. This approach reframes AI as a business driver rather than a cost center, providing clear justification for continued investment.
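The comparison of deployment cost against savings reduces to simple arithmetic. The helpers below are a hypothetical sketch, and the figures in the usage note are made up for illustration.

```python
def roi(cost_usd, savings_usd):
    """Return on investment as a fraction: 0.5 means a 50% return."""
    if cost_usd <= 0:
        raise ValueError("cost_usd must be positive")
    return (savings_usd - cost_usd) / cost_usd


def payback_months(cost_usd, monthly_savings_usd):
    """Months of savings needed to recover the deployment cost."""
    if monthly_savings_usd <= 0:
        raise ValueError("monthly_savings_usd must be positive")
    return cost_usd / monthly_savings_usd
```

For example, a deployment that costs $20,000 and saves $50,000 gives `roi(20000, 50000) == 1.5`, a 150% return. At $5,000 saved per month, it pays for itself in four months.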

The platform should also proactively identify cost-saving opportunities. By analyzing usage patterns, it can suggest more efficient model configurations, flag underused resources, and recommend scheduling adjustments to take advantage of lower-cost computing periods.

Lastly, chargeback capabilities enable precise cost allocation across departments and projects. This fosters accountability, as teams are held responsible for managing their allocated resources effectively.
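A chargeback calculation might split a shared invoice in proportion to each department's token usage. The sketch below works in whole cents and hands leftover cents to the largest remainders, so the shares always add up to the invoice total. The function name and inputs are hypothetical.

```python
def allocate_chargeback(total_cents, usage_by_dept):
    """Split `total_cents` across departments in proportion to usage."""
    total_usage = sum(usage_by_dept.values())
    if total_usage <= 0:
        raise ValueError("no usage to allocate against")

    shares = {}
    remainders = []
    for dept, used in usage_by_dept.items():
        # Integer division keeps every share in whole cents.
        whole, remainder = divmod(total_cents * used, total_usage)
        shares[dept] = whole
        remainders.append((remainder, dept))

    # Distribute cents lost to rounding, largest remainder first;
    # ties are broken by department name to stay deterministic.
    leftover = total_cents - sum(shares.values())
    remainders.sort(key=lambda item: (-item[0], item[1]))
    for _, dept in remainders[:leftover]:
        shares[dept] += 1
    return shares
```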

Clear financial insights ensure that AI operations remain efficient and aligned with organizational goals.

Collaboration tools are essential for breaking down silos and streamlining AI workflows. Shared workspaces provide a common environment where data scientists, engineers, and stakeholders can collaborate on models, test workflows, and share feedback - all without switching between platforms. Version control ensures everyone works with up-to-date configurations while retaining access to previous versions for rollbacks.

To maintain quality at scale, the platform must support role-based approval workflows. For instance, critical model deployments might require sign-offs from technical leads and compliance officers, while routine updates can proceed with automated approvals. These customizable workflows adapt to varying organizational structures and risk levels.
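The sign-off rule above can be expressed as a set comparison: a change is approved once every required role has signed off. The risk levels and role names below are assumptions taken from the example in the text.

```python
# Hypothetical policy: which roles must sign off at each risk level.
REQUIRED_APPROVERS = {
    "critical": {"technical_lead", "compliance_officer"},
    "routine": set(),  # nothing required, so approval is automatic
}


def missing_signoffs(risk_level, signoffs):
    """Roles that still need to approve, in alphabetical order."""
    required = REQUIRED_APPROVERS[risk_level]
    return sorted(required - set(signoffs))


def is_approved(risk_level, signoffs):
    return not missing_signoffs(risk_level, signoffs)
```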

Real-time collaboration tools improve communication around AI workflows. Features like instant notifications, built-in messaging, and annotation capabilities allow teams to address issues quickly and stay informed without relying on external tools.

Managing the lifecycle of AI projects is another critical requirement. Lifecycle management provides visibility into each workflow's status, performance metrics, and maintenance needs. Automated policies can trigger updates, reviews, or decommissioning based on set criteria, ensuring resources are allocated efficiently.
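An automated lifecycle policy can be as simple as a rule that maps a workflow's status to an action. The thresholds (90 idle days, 95% success rate) and field names in this sketch are illustrative assumptions, not recommended values.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class WorkflowStatus:
    name: str
    last_run: date
    success_rate: float  # fraction of recent runs that succeeded


def lifecycle_action(status, today, max_idle_days=90, min_success_rate=0.95):
    """Return "decommission", "review", or "keep" for one workflow."""
    idle_days = (today - status.last_run).days
    if idle_days > max_idle_days:
        return "decommission"  # unused workflows free up resources
    if status.success_rate < min_success_rate:
        return "review"  # still active but degrading
    return "keep"
```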

Lastly, integration is key. The platform should seamlessly connect with existing tools like GitHub, Slack, and Jira. Instead of replacing these tools, the command center should enhance workflows with AI-specific features while maintaining compatibility with established processes.

These collaboration and workflow management features enable enterprises to centralize AI operations and scale effectively, ensuring teams can work together efficiently and achieve their goals.

Prompts.ai stands out as the ultimate AI command center for enterprises seeking scalable deployment. While many platforms promise to tackle enterprise AI challenges, Prompts.ai delivers a complete solution by offering unified multi-model access, real-time governance, and integrated FinOps - all designed for large-scale operations.

Handling multiple AI models from various vendors often leads to complications, higher costs, and slower deployment. Prompts.ai eliminates these hurdles by bringing together over 35 leading AI models into one secure platform. This allows teams to compare model performance side-by-side using identical prompts and datasets, test different configurations, and quickly adapt to new models or pricing changes - all without disrupting their existing infrastructure.
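A side-by-side comparison amounts to sending the same prompts to each model and recording the results in one table. In the sketch below every model is represented by a plain Python callable that takes a prompt and returns text. This is a stand-in for real vendor clients, and `compare_models` is a hypothetical helper rather than a Prompts.ai function.

```python
import time
from typing import Callable, Dict, List


def compare_models(models: Dict[str, Callable[[str], str]],
                   prompts: List[str]) -> List[dict]:
    """Run every prompt through every model and collect output and latency."""
    rows = []
    for prompt in prompts:
        for name, call in models.items():
            start = time.perf_counter()
            output = call(prompt)
            elapsed = time.perf_counter() - start
            rows.append({
                "prompt": prompt,
                "model": name,
                "output": output,
                "seconds": elapsed,
            })
    return rows
```

Because each model sits behind the same callable signature, adding or swapping a vendor means changing one dictionary entry rather than the comparison logic.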

This integration reshapes how enterprises choose and deploy models. Teams can switch between models effortlessly to meet specific workload demands. For instance, a data scientist can test a customer service workflow using Claude for conversational tasks and then pivot to GPT-4 for analytical needs - all within the same environment.

The platform’s interoperability ensures workflows aren’t tied to a single vendor. If a new model becomes available or pricing shifts, teams can adapt without having to rebuild their systems. This flexibility, combined with real-time execution capabilities, strengthens operational efficiency and control.

Enterprise AI demands immediate oversight and control of all operations. Prompts.ai’s real-time execution engine provides instant feedback on model performance, costs, and compliance. Teams can monitor workflows live, identifying potential bottlenecks or issues before they escalate into larger problems.

The platform also integrates security features such as authentication protocols, audit trails, and API-level safeguards, so oversight covers who did what as well as cost and performance. With role-based access controls, users access only the tools they need, which supports both operational efficiency and regulatory compliance.

In addition to its model management and governance tools, Prompts.ai includes financial controls tailored for scalable AI deployments. Traditional AI operations often face unexpected expenses, but Prompts.ai’s FinOps features help optimize spending through token-level tracking, real-time budget controls, and pay-as-you-go TOKN credits that align costs with actual usage.

Token-level tracking offers detailed insights into AI spending, showing which teams, projects, or workflows consume the most resources. Real-time budget controls prevent overspending by sending alerts and pausing non-essential workflows when spending approaches set limits.

With cost-to-outcome mapping, organizations can directly link AI investments to tangible business results. For example, teams can evaluate the cost of deploying a customer service bot against the operational savings it delivers. Additionally, the platform analyzes usage patterns to uncover cost-saving opportunities, such as recommending more efficient model configurations.

When selecting an AI command center for enterprise use, it's crucial to evaluate platforms based on their ability to meet essential requirements. Below is an assessment of Prompts.ai's capabilities against those requirements, scored on a 5-point scale where 5 represents top-tier performance and 1 signifies basic functionality.

| Evaluation Criteria | Enterprise Requirement | Prompts.ai Score | Key Capabilities |
|---|---|---|---|
| Multi-Model Access | Support for over 35 leading AI models with a unified interface | 5/5 | Access to over 35 AI models in one platform |
| Security & Compliance | Enterprise-grade authentication, audit trails, and role-based access | 5/5 | Advanced authentication, detailed audit logs, and granular controls |
| Cost Management | Real-time spend tracking, budget controls, and usage optimization | 5/5 | Token-level tracking, budget alerts, and pay-as-you-go TOKN credits |
| Scalability | Handle enterprise workloads without performance issues | 5/5 | Real-time execution, instant model switching, and scalable user management |
| Workflow Management | Centralized prompt workflows, team collaboration, and automation | 4/5 | Workflow tools and team collaboration features |
| Performance Monitoring | Real-time analytics and model comparisons | 5/5 | Live insights and side-by-side model testing |
| Integration Capabilities | API access and compatibility with existing systems | 4/5 | Robust API suite and smooth integration |
| Governance Controls | Policy enforcement and comprehensive audit trails | 5/5 | Automated policies and detailed audit tracking |

This table highlights Prompts.ai's ability to meet the diverse needs of enterprise AI users while addressing challenges like scalability, cost control, and security.

Each score reflects Prompts.ai's effectiveness in tackling enterprise demands, supported by specific features and functionalities.

Multi-Model Access (5/5): By offering access to over 35 models through a single interface, Prompts.ai simplifies workflows and eliminates the need for juggling multiple vendors. This also allows for direct performance comparisons between models.

Security & Compliance (5/5): The platform provides enterprise-level security with features like role-based access, authentication protocols, and detailed audit logs, ensuring secure and accountable AI operations.

Cost Management (5/5): Real-time spend tracking, automated budget alerts, and the use of pay-as-you-go TOKN credits enable businesses to monitor and control expenses effectively.

Scalability (5/5): With its real-time execution engine and scalable user management, Prompts.ai handles large-scale workloads seamlessly, ensuring performance remains consistent as demands grow.

Workflow Management (4/5): The platform offers strong tools for managing workflows and team collaboration, though there is room for more customization in niche or highly specialized processes.

Performance Monitoring (5/5): The platform’s real-time analytics and ability to compare models side-by-side empower users to make informed, data-driven decisions immediately.

Integration Capabilities (4/5): Prompts.ai supports most enterprise systems through its API suite, though older legacy infrastructures may require additional setup or adjustments.

Governance Controls (5/5): Automated enforcement of policies and comprehensive audit trails ensure that every AI operation remains compliant with organizational and regulatory standards.

This analysis underscores how Prompts.ai stands out as a robust solution for enterprises seeking to streamline and optimize their AI operations. Its blend of advanced features and user-centric design makes it a strong contender in the AI command center market.

Deploying Prompts.ai across multiple environments calls for a careful balance between maintaining security and enabling flexibility. Start by setting up distinct environments - development, staging, and production - that align with your organization’s infrastructure. Use Prompts.ai’s built-in governance tools to ensure seamless management across these setups.

Organize user groups based on your organizational structure to assign environment-specific permissions. For instance, data scientists, prompt engineers, business analysts, and administrators should each have access tailored to their roles. Integrate Prompts.ai with your existing identity management system to enable enterprise-grade authentication and single sign-on (SSO). This ensures uniform security policies across your AI operations while maintaining detailed audit logs. Define clear policies, such as controlling which models teams can access and setting spending limits, to prevent misuse while still allowing room for innovation.

Each environment should reflect its specific purpose. For example, the development environment can allow broader access to models for testing and experimentation, while the production environment should have stricter controls to ensure stability. Prompts.ai’s workflow management tools allow you to create flexible templates that ensure teams stay within approved guidelines.
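The difference between a permissive development environment and a locked-down production environment can be captured in a small policy table. The environment names follow the text. The model names, the `requires_approval` flag, and `can_use_model` are hypothetical and do not reflect Prompts.ai's actual configuration format.

```python
# Hypothetical per-environment policy: broad access in development,
# progressively stricter toward production.
ENVIRONMENT_POLICY = {
    "development": {
        "allowed_models": {"model-a", "model-b", "model-c"},
        "requires_approval": False,
    },
    "staging": {
        "allowed_models": {"model-a", "model-b"},
        "requires_approval": False,
    },
    "production": {
        "allowed_models": {"model-a"},
        "requires_approval": True,
    },
}


def can_use_model(environment, model, approved=False):
    """Check a request against the policy; unknown environments are denied."""
    policy = ENVIRONMENT_POLICY.get(environment)
    if policy is None:
        return False
    if model not in policy["allowed_models"]:
        return False
    return approved or not policy["requires_approval"]
```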

Once environments are securely configured, the next step is to optimize financial management.

Prompts.ai’s pay-as-you-go TOKN credit system aligns costs directly with usage, eliminating the need for recurring subscription fees. Use real-time analytics to track token consumption across models, teams, and projects, providing detailed insights into resource usage and identifying opportunities to reduce costs.

To further manage expenses, tag workflows with project codes, department identifiers, or business objectives. This tagging not only helps demonstrate ROI but also supports more strategic planning for future AI investments. Implement approval workflows for spending to prevent budget overruns while maintaining operational efficiency.

With strong financial controls in place, you can focus on lifecycle management and tracking success metrics.

Building on governance and cost controls, lifecycle management ensures smooth operations from deployment through long-term performance. Use version control to document every prompt template, model configuration, and automation rule. This makes it easier to roll back changes if needed and ensures compliance with audit requirements.

Regularly evaluate model performance to ensure models remain effective and efficient. Focus on metrics such as response accuracy, processing speed, and resource utilization to gauge operational success. Additionally, monitor deployment timelines to assess efficiency and track user adoption rates to measure how well teams are adapting to the platform. Prompts.ai’s analytics tools provide insights into team engagement, helping you identify patterns and areas for improvement.

A unified platform like Prompts.ai can cut software costs by up to 98% while reducing IT overhead by consolidating AI operations.

Establish quality metrics that link AI performance to broader business outcomes. Automated reports and executive dashboards can summarize critical performance indicators - such as cost trends, adoption rates, and operational impact - ensuring transparency and enabling data-driven decision-making.

Prompts.ai emerges as the ultimate AI command center for enterprises aiming to scale their AI operations with confidence. The capabilities evaluated above address the main challenges enterprises face when deploying AI at scale. By unifying multi-model orchestration under one platform, Prompts.ai ensures top-tier security and compliance while delivering the cost efficiencies discussed earlier.

The platform's real-time execution engine combined with built-in governance tools allows organizations to expand their AI capabilities without losing control or oversight. This is especially crucial for AI-driven financial solutions, where compliance with regulatory standards and maintaining transparent audit trails are non-negotiable. Prompts.ai strikes the perfect balance - offering the flexibility teams need to innovate while ensuring executives have the governance structures they require.

With real-time analytics and token tracking, the platform provides unmatched visibility into costs, turning AI cost management into a precise, data-driven process. This transparency enables organizations to plan budgets strategically and measure ROI with accuracy, eliminating the guesswork.

Prompts.ai also transforms individual AI experiments into repeatable, scalable workflows through its collaborative workflow management tools. Additionally, its community and certification programs offer expert-curated workflows that speed up adoption and minimize the learning curve for enterprise AI deployment.

The best way to understand the advantages of Prompts.ai is to experience it firsthand. The platform offers hands-on onboarding and enterprise training, ensuring your team is equipped to leverage its full potential from the start. Beyond that, its active community of prompt engineers provides ongoing support and shared expertise, creating a partnership that goes beyond the typical vendor relationship.

Take the first step risk-free with Prompts.ai's $0/month Pay As You Go plan. This option lets you test the platform's features without any upfront investment. Thanks to its enterprise-grade infrastructure, you can start small with a pilot project and seamlessly scale to full organizational deployment as your AI initiatives grow.

Reach out to Prompts.ai today to schedule a personalized demo and see how their AI orchestration platform can redefine your approach to scalable AI deployment.

Prompts.ai places a strong emphasis on data security and meeting regulatory standards, deploying advanced safeguards to protect sensitive information. The platform complies with GDPR and HIPAA regulations by integrating automated tools for compliance monitoring and managing vulnerabilities, ensuring that personal data and protected health information (PHI) are kept secure.

Beyond healthcare, Prompts.ai applies industry-standard practices to uphold privacy and maintain regulatory alignment across various sectors. These measures not only secure AI workflows but also help teams navigate strict compliance demands with confidence and efficiency.

Token-level tracking allows for detailed insights into the costs of specific AI tasks by breaking down expenses to the token level. This approach supports real-time monitoring, helping teams quickly spot irregularities, manage spending wisely, and keep budgets aligned with their goals.

With accurate cost attribution, token-level tracking empowers teams to use resources more efficiently, enhancing the effectiveness of AI operations. This makes it simpler to expand projects while maintaining tight control over expenses.

Prompts.ai enables teams to work together efficiently, offering role-based controls, centralized oversight, and real-time cost tracking. These tools ensure data remains secure, operations stay transparent, and collaboration runs smoothly across various teams.

The platform’s centralized repository streamlines the creation, sharing, and management of AI prompts, encouraging consistency and teamwork across projects. Its intuitive tools and straightforward interface simplify managing workflows, allowing teams to launch agents, track performance, and design intricate workflows - all within a single, unified system.