Managing AI across teams is messy - too many tools, rising costs, and duplicated efforts. The solution? Platforms that unify top AI models like GPT-4, Claude, and PaLM 2, streamline workflows, and enforce governance.

Here’s what you need to know:

| Platform | Key Strengths | Collaboration Features | Deployment Options | Cost Management |
|---|---|---|---|---|
| Prompts.ai | 35+ models unified, real-time teamwork | Shared libraries, co-editing | Cloud-based | Real-time token tracking |
| Google Cloud | Vertex AI, PaLM 2, open-source support | Basic sharing | Cloud-first | Basic billing dashboards |
| AWS | Bedrock marketplace, multi-cloud setup | Team access controls | Cloud or hybrid | CloudWatch integration |
| Anthropic | Claude-centric, safety-focused | Multi-user prompt editing | API/Cloud | Usage metrics |
| Databricks | Unified data pipelines, MLflow tracking | Notebook collaboration | Hybrid cloud | Resource monitoring |
| SuperAGI | CRM automation, no-code workflows | Visual flow builder | Cloud | N/A |
| Langflow | Visual AI builder, flexible integrations | Real-time changes, notes tracking | Cloud or on-premises | N/A |
| Akka | Multitasking AI, actor-based model | Task segmentation, monitoring | Cloud, on-premises, or hybrid | Resource allocation tools |

These platforms help enterprises cut AI costs, drive team collaboration, and simplify governance. Whether you need real-time co-editing, multi-cloud setups, or unified model access, there’s a solution tailored to fit your team.

Let’s explore how they work.

Prompts.ai is built for teams, offering an AI workspace that puts collaboration first. Unlike single-user tools, it supports many simultaneous users, so teams can work together on AI projects, share ideas in real time, and build complex workflows without confusion.

Prompts.ai simplifies teamwork by bringing more than 35 leading AI models, including GPT-4, Claude, LLaMA, and Gemini, into one workspace. This removes the need to juggle multiple accounts or switch between interfaces. A key feature is side-by-side model comparison, which lets teams test and evaluate different models. For example, marketing teams can try different models for generating ad copy, while support teams can work out the best way to answer customer questions. With a single setup, they can compare performance, cost, and results in one place.
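To make the idea of side-by-side comparison concrete, here is a minimal Python sketch. It is not the Prompts.ai API: `ModelSpec`, `compare_models`, the whitespace token count, and the prices are all hypothetical stand-ins chosen for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ModelSpec:
    name: str
    generate: Callable[[str], str]  # hypothetical stand-in for a real model call
    usd_per_1k_tokens: float        # hypothetical price, not a published rate


@dataclass
class Comparison:
    model: str
    output: str
    tokens: int
    cost_usd: float


def count_tokens(text: str) -> int:
    # Rough approximation: real tokenizers split text differently.
    return len(text.split())


def compare_models(prompt: str, models: List[ModelSpec]) -> List[Comparison]:
    """Run one prompt through every model and rank results by estimated cost."""
    rows = []
    for spec in models:
        output = spec.generate(prompt)
        tokens = count_tokens(prompt) + count_tokens(output)
        cost = tokens / 1000 * spec.usd_per_1k_tokens
        rows.append(Comparison(spec.name, output, tokens, cost))
    return sorted(rows, key=lambda row: row.cost_usd)
```

In practice each `generate` callable would wrap a real model client, and a team would likely add quality scores or latency next to the cost column before choosing a model.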

The platform also connects to everyday tools like Slack, Gmail, and Trello through its AI integrations. Teams can set up workflows across these tools without building custom integrations or managing multiple API keys.

Collaboration is central to Prompts.ai. Teams can co-edit prompts using tools like Whiteboards and Docs, creating an experience similar to Google Docs. This lets marketers, writers, planners, and managers work together without silos.

All project discussion lives in one place, so decisions and updates stay visible and confusion is reduced.

Prompts.ai makes teams more efficient with transparent tracking of AI usage, including token counts, costs, and performance. This visibility helps technical leaders decide how to allocate resources and which models to choose. In addition, role-based access controls keep workflows secure and organized.
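A rough sketch of what per-team token and cost tracking involves is shown below. The `UsageTracker` class, the model names, and the prices are assumptions made for this example; they do not describe how Prompts.ai implements its tracking.

```python
from collections import defaultdict
from typing import Dict, List


class UsageTracker:
    """Accumulates token usage per (team, model) and derives cost from it."""

    def __init__(self, usd_per_1k_tokens: Dict[str, float]):
        self.prices = usd_per_1k_tokens      # hypothetical prices per model
        self.tokens = defaultdict(int)       # (team, model) -> token count

    def record(self, team: str, model: str, tokens: int) -> None:
        if model not in self.prices:
            raise KeyError(f"no price configured for model {model!r}")
        self.tokens[(team, model)] += tokens

    def cost_by_team(self) -> Dict[str, float]:
        totals = defaultdict(float)
        for (team, model), count in self.tokens.items():
            totals[team] += count / 1000 * self.prices[model]
        return dict(totals)

    def over_budget(self, budgets_usd: Dict[str, float]) -> List[str]:
        # Teams without a configured budget are never flagged.
        costs = self.cost_by_team()
        return sorted(
            team for team, cost in costs.items()
            if cost > budgets_usd.get(team, float("inf"))
        )
```

Calling `record` after every model request and checking `over_budget` on a schedule is one simple way to surface overspending before the invoice arrives.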

With enterprise-grade security and full audit trails, teams can use AI tools knowing that data protection and compliance are built in at every step. This comprehensive approach reflects the platform's goal of helping teams innovate with interoperable AI.

Google Cloud's Vertex AI brings a wide range of AI models and tools into one unified workspace. By combining Google's own AI tools with third-party options, the platform gives teams a place to build, test, and deploy AI solutions. Here is what sets Vertex AI apart.

Vertex AI supports many AI models, such as PaLM 2 and Codey, built for tasks like text generation, code completion, and image analysis. The platform also works with popular open-source frameworks like TensorFlow, PyTorch, and scikit-learn, so teams can keep their preferred tools and existing workflows.

Model Garden provides access to pre-built models from Google and trusted partners like Hugging Face. For instance, marketing teams can try language models to draft campaign copy, while support teams can evaluate chatbot models to improve customer conversations.

Vertex AI Workbench enables real-time collaboration through shared notebooks, where data scientists, engineers, and business stakeholders can all contribute, track changes, and leave comments.

To simplify complex workflows, Vertex AI Pipelines breaks projects into small, manageable tasks. Team members can work on individual parts of a project while staying connected, which suits work such as building recommendation systems or automating content generation.
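The idea of splitting a project into dependent tasks can be sketched with the Python standard library. This is not the Vertex AI Pipelines SDK; `run_pipeline` and the task names are hypothetical, and the sketch only shows how a dependency graph determines which steps can proceed independently.

```python
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Any, Callable, Dict, List, Set, Tuple


def run_pipeline(
    tasks: Dict[str, Callable[[Dict[str, Any]], Any]],
    deps: Dict[str, Set[str]],
) -> Tuple[List[List[str]], Dict[str, Any]]:
    """Run tasks in dependency order; each stage lists tasks with no unmet dependencies."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    results: Dict[str, Any] = {}
    stages: List[List[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # these could run in parallel
        stages.append(ready)
        for name in ready:
            inputs = {dep: results[dep] for dep in deps.get(name, ())}
            results[name] = tasks[name](inputs)
            sorter.done(name)
    return stages, results


# Hypothetical recommendation-style pipeline: task name -> upstream task names.
deps = {
    "load": set(),
    "features": {"load"},
    "train": {"features"},
    "report": {"features"},
    "evaluate": {"train"},
}
tasks = {
    "load": lambda inputs: [1, 2, 3],
    "features": lambda inputs: [x * 2 for x in inputs["load"]],
    "train": lambda inputs: sum(inputs["features"]) / len(inputs["features"]),
    "report": lambda inputs: len(inputs["features"]),
    "evaluate": lambda inputs: inputs["train"] > 3,
}
stages, results = run_pipeline(tasks, deps)
```

Here `train` and `report` land in the same stage because both depend only on `features`, which is the kind of independence that lets different team members own different steps.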

Google Cloud follows common standards by supporting ONNX for model exchange and Kubeflow for workflow orchestration. This means teams can move models between environments or work with external partners without being locked into proprietary systems.

The platform also supports REST APIs and gRPC, making it simple to integrate AI tools with applications like Salesforce, Slack, or custom-built apps.

Google Cloud offers several deployment options, from fully managed services to custom container setups. Teams can start with simple API calls and scale up to large, multi-model systems as needed. Because Google Cloud handles the infrastructure, teams can focus on building AI solutions.

The Vertex AI Feature Store combines data management with governance tools. Teams can set access controls for data and models, monitor usage across projects, and keep audit logs to support compliance. These features matter most in industries like finance or healthcare, where data regulations are strict but collaboration is still essential.

Anthropic's Claude AI stands out because it is built on constitutional, principle-based AI methods. It aims for safe and ethical use within the workflows teams already have.

Claude fits into existing technology stacks and business systems. Its API lets teams build AI steps directly into their workflows, connecting with a range of data sources and management tools. This emphasis on easy integration keeps teamwork smooth.

Claude supports multiple users working at the same time, making it easy for teams to refine prompts and collaborate on tasks like content creation or customer support. This helps teams get more done without disrupting the workflow.

Anthropic offers a choice of how to use Claude, from cloud API access to enterprise deployments. This suits organizations that need to keep data secure and meet compliance requirements. The platform includes safety filters, content moderation, access controls, and audit logging, helping ensure AI use is responsible and compliant.

Amazon Web Services (AWS) uses its extensive cloud infrastructure to support a wide range of AI applications. With its full suite of tools, AWS lets teams build and run AI workflows that combine different technologies and data sources.

AWS offers a broad set of AI and machine learning services, including Amazon Bedrock for foundation models, SageMaker for building custom models, and Comprehend for text analysis. These services work together, letting teams move data through every stage of an AI project. The ecosystem is designed to connect with other business systems, making it easy to pull data from many sources without extensive custom development.

For teams that want flexibility, AWS supports both cloud and on-premises setups through services like AWS Outposts. Teams can run AI workloads where they need to while still managing everything from the cloud. This hybrid approach helps teams collaborate and keeps projects moving.

AWS supports collaboration through tools like Amazon SageMaker Studio, which provides a single environment for AI development. Data scientists and engineers can work on models at the same time, share notebooks, and monitor experiments live. Shared spaces give easy access to models, datasets, and code, reducing duplicated work and promoting consistency.

In addition, tools like AWS CodeCommit and CodePipeline streamline workflows by automating testing, deployment, and version control. This keeps AI projects as well maintained as conventional software development.

AWS offers deployment options that fit both team needs and compliance requirements. Whether running workloads in the cloud or in a hybrid cloud and on-premises setup, teams can operate efficiently to meet their goals.

Security and governance start with AWS Identity and Access Management (IAM), which provides fine-grained control over user, group, and role permissions. Tools like AWS CloudTrail and CloudWatch provide real-time insight into system performance and usage, helping teams track costs and optimize operations. AWS also supports compliance with standards such as HIPAA, SOC 2, and GDPR, helping AI tools operate securely and privately.
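The principle behind role-based permissions can be illustrated with a small generic check. This sketch does not use the IAM policy language or any AWS SDK; the role names and the `resource:action` strings are hypothetical.

```python
from typing import Dict, Iterable, Set

# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "viewer": {"model:invoke"},
    "editor": {"model:invoke", "prompt:write"},
    "admin": {"model:invoke", "prompt:write", "billing:read"},
}


def is_allowed(user_roles: Iterable[str], action: str) -> bool:
    """Allow an action if any of the user's roles grants it; unknown roles grant nothing."""
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in user_roles)
```

Denying by default, as the empty set for unknown roles does here, mirrors the least-privilege approach that fine-grained access systems generally encourage.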

Databricks brings data science and AI together with its Lakehouse Platform, creating a single environment that combines many data types and tools. It simplifies collaboration while keeping data secure and organized.

Databricks connects a variety of data formats and AI tools. It integrates with Apache Spark, MLflow, and Delta Lake, covering everything from databases and cloud storage to streaming data. The platform supports many programming languages, including Python, R, Scala, and SQL.

It also integrates with major cloud providers like Microsoft Azure, AWS, and Google Cloud, letting teams keep their existing setups. Engineers can pull data from sources such as Snowflake, PostgreSQL, and MongoDB without large migrations.

For AI model development, Databricks supports frameworks like TensorFlow, PyTorch, and scikit-learn. Teams can build models with their preferred tools and deploy them directly on the platform. This removes the friction of switching between tools, keeping workflows smooth and helping teams work more effectively.

Databricks strengthens collaboration with robust team tools. The Databricks Workspace lets team members work on AI projects at the same time. Data scientists, engineers, and analysts can share notebooks, comment on code, and see changes live, keeping everyone on the same page.

MLflow supports teamwork by managing the full lifecycle of AI models. Teams can track experiments, compare model versions, and share findings, making it easy to refine and improve their work.

Databricks simplifies governance as well as teamwork. Unity Catalog centralizes control, letting teams set data access policies and keep information secure.

For organizations with strict compliance needs, Databricks provides tools to track data lineage and audit model behavior. Teams can trace where data came from and understand how AI models reach their outputs. This transparency helps meet regulatory requirements and resolve issues efficiently.

The platform also handles resource scaling. As demand grows, Databricks adjusts compute resources automatically. This lets teams focus on building and improving AI solutions without the added burden of managing infrastructure.

SuperAGI is a platform designed to transform CRM integration through AI automation. It goes beyond data and infrastructure management with its Agentic CRM platform, which brings together key market-facing functions. Using an agent-based architecture, SuperAGI breaks complex workflows into simple, automated tasks, making processes more efficient.

SuperAGI integrates with major business tools like Salesforce, HubSpot, and Airtable. This integration automates lead management and customer communication, with a reported 40% increase in sales productivity. Its agent-based design supports multi-step workflows that connect tools across a company's tech stack, keeping work smooth across teams.

A key feature is the platform's visual workflow builder, which lets teams create and modify multi-step, cross-channel workflows without writing code. This no-code approach makes it easy for users in many roles, such as marketing and customer support, to build and improve AI-driven workflows. Real-time updates also keep everyone on the team in sync.

SuperAGI's agent-based architecture breaks complex workflows into small, manageable tasks, making it easy to test, monitor, and change components without disrupting operations. Its unified CRM platform also centralizes control, enabling effective permission management and better oversight of automated processes.

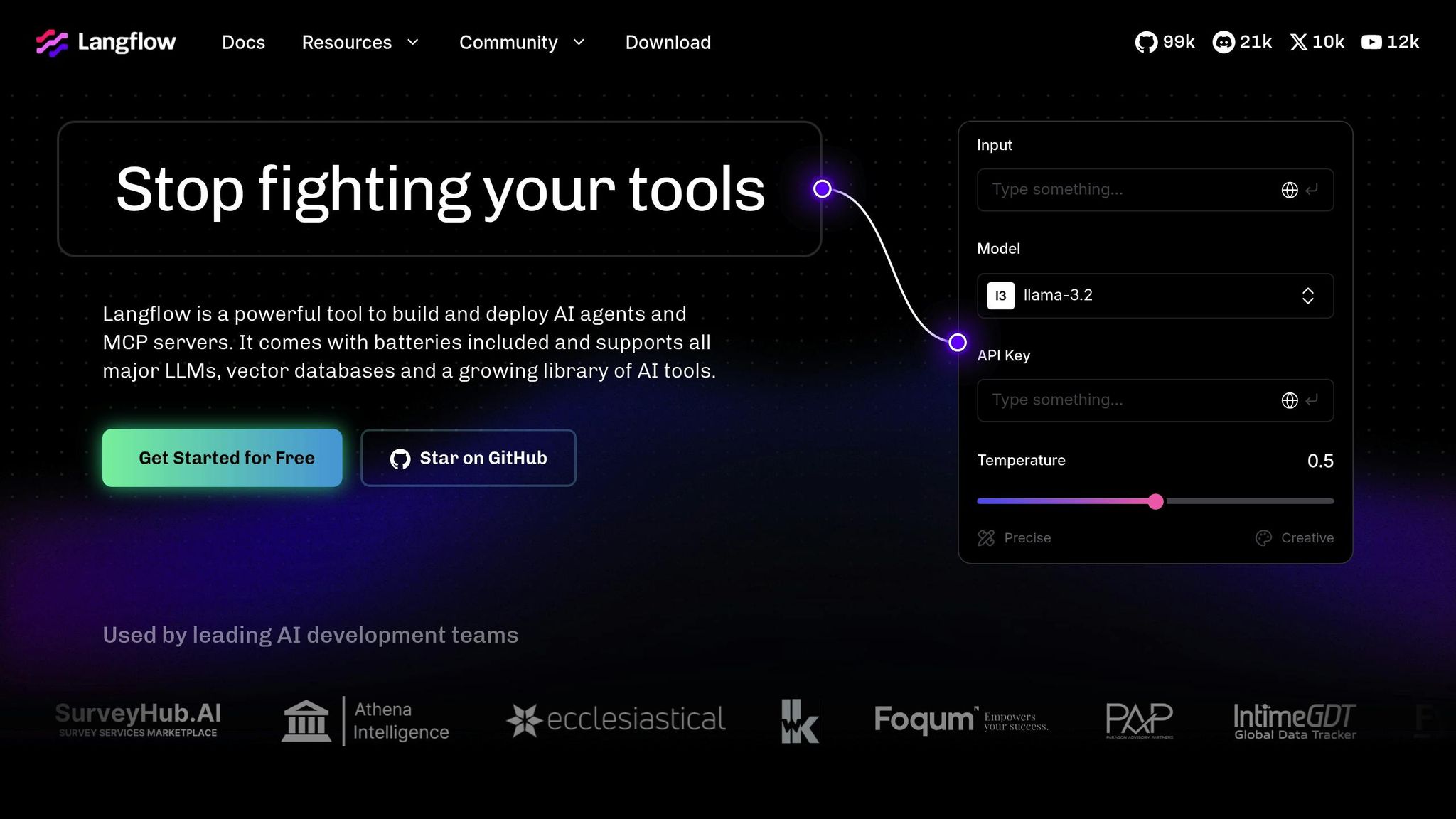

Langflow offers a visual way to build AI projects, with an interface that is easy to navigate. Teams can create, adjust, and deploy AI systems without much coding knowledge, which opens AI work to more people. Its design integrates with many other tools and frameworks.

At its core, Langflow is built for integration. It works with many language model frameworks and includes ready-made components for connecting to popular tools. Its modular design lets you build reusable components, saving time and increasing flexibility.

Its collaboration tools help teams work together more effectively. Multiple members can work on a project at the same time and see changes as they happen. Features like change tracking and built-in notes keep a record of edits and let people communicate directly in the tool. This makes the whole development process smoother and more cohesive.

Langflow supports common data formats and protocols, helping it work with other systems. It can be deployed in the cloud, on-premises, or both, depending on what an organization needs. Permissions within the tool are designed to keep things secure yet easy to work in, in line with its goal of secure, easily integrated AI setups.

Akka uses an actor model to handle many tasks concurrently, which makes it a good choice for AI workloads that need to do many things at once. Its ability to scale with demand means it can keep up with heavy workloads.

Akka integrates with many programming languages and systems. It works with Java, Scala, and .NET, letting teams use tools they already know. It also connects to data infrastructure like Apache Kafka, Apache Cassandra, and many cloud services. This ease of integration helps Akka fit into existing tech stacks with minimal changes.

The system lets the components of an AI application communicate efficiently. For instance, when one component finishes, it can quickly pass its data to the next stage. This suits multi-step AI workloads such as data preparation, model inference, and post-processing. By keeping data flowing, Akka helps build AI systems that perform well and are easy to manage.

Akka's actor model breaks large AI workloads into smaller units, letting teams work on components in parallel. Each actor handles its own piece, reducing conflicts and increasing throughput.
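The actor pattern itself is easy to sketch outside Akka. The Python example below uses `asyncio` queues as mailboxes; it is not the Akka API, and the `Actor` class, the `STOP` sentinel, and the three-stage pipeline are assumptions made for illustration.

```python
import asyncio
from typing import Any, Callable, List, Optional

STOP = object()  # sentinel telling an actor to shut down


class Actor:
    """Processes one message at a time from its own mailbox, then forwards the result."""

    def __init__(self, handler: Callable[[Any], Any], next_actor: Optional["Actor"] = None):
        self.mailbox: asyncio.Queue = asyncio.Queue()
        self.handler = handler
        self.next_actor = next_actor
        self.outputs: List[Any] = []  # only filled by the last actor in a chain

    async def tell(self, message: Any) -> None:
        await self.mailbox.put(message)

    async def run(self) -> None:
        while True:
            message = await self.mailbox.get()
            if message is STOP:
                if self.next_actor is not None:
                    await self.next_actor.tell(STOP)  # propagate shutdown downstream
                return
            result = self.handler(message)
            if self.next_actor is not None:
                await self.next_actor.tell(result)
            else:
                self.outputs.append(result)


async def run_pipeline(texts: List[str]) -> List[int]:
    # Hypothetical stages: collect <- "inference" (token count) <- preprocessing.
    collect = Actor(lambda value: value)
    infer = Actor(lambda tokens: len(tokens), collect)
    prepare = Actor(lambda text: text.lower().split(), infer)
    workers = [asyncio.create_task(a.run()) for a in (prepare, infer, collect)]
    for text in texts:
        await prepare.tell(text)
    await prepare.tell(STOP)
    await asyncio.gather(*workers)
    return collect.outputs
```

Because each actor touches only its own mailbox and state, the stages need no shared locks, which is the property that lets separate teams own separate actors.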

The system also includes monitoring and debugging tools, giving teams visibility into how their AI is performing. They can watch how messages flow and spot issues early. This visibility helps teams collaborate and keeps things running smoothly.

Akka supports several deployment options: on private servers, in the cloud, or across multiple environments. Its fault-tolerant design keeps the system up even if a component fails, which is essential for AI that must always be available.

Resource management is another strength of Akka. Teams can set how much memory and compute each component gets, preventing large tasks from monopolizing resources. Policies can also define how to respond when something fails, keeping the system stable under stress. This control keeps heavy AI workloads running reliably.
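A failure policy of the kind described above can be as simple as "restart a failed task a limited number of times, then escalate". The sketch below shows that idea in plain Python; `run_supervised` is a hypothetical helper, not Akka's supervision API.

```python
from typing import Callable, Tuple, TypeVar

T = TypeVar("T")


def run_supervised(task: Callable[[], T], max_restarts: int = 3) -> Tuple[T, int]:
    """Run a task, restarting it after failures up to max_restarts times.

    Returns the task's result and the number of restarts that were needed.
    Once the restart budget is used up, the last exception is re-raised.
    """
    restarts = 0
    while True:
        try:
            return task(), restarts
        except Exception:
            if restarts >= max_restarts:
                raise  # escalate: the failure is not transient
            restarts += 1
```

Capping restarts keeps a permanently broken component from looping forever, while transient failures such as a brief network error are absorbed without operator involvement.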

This comparison delves into how various platforms tackle enterprise AI challenges, showcasing their distinct strengths and approaches.

When it comes to interoperability, platforms vary significantly. Prompts.ai stands out by consolidating over 35 models into a single interface, simplifying access and management. In contrast, Google Cloud focuses on integrating Vertex AI with select third-party tools, while AWS offers its Bedrock marketplace for model selection. Anthropic, on the other hand, centers its ecosystem around Claude, its proprietary AI model.

Collaboration features further differentiate these platforms. Prompts.ai shines with real-time co-editing, shared asset libraries, and detailed permission controls, fostering smooth teamwork. Traditional cloud providers, like Google Cloud, often fall short here, offering only basic sharing functionalities.

The ability to align with open standards plays a crucial role in integrating with existing enterprise systems. While most platforms support REST APIs and standard authentication protocols, some go beyond. Databricks excels in data pipeline integration, Langflow focuses on visual workflow standards, and Akka brings robust interoperability with its actor model, supporting Java, Scala, and .NET environments.

| Platform | Interoperability Scope | Collaboration Features | Deployment Model | FinOps Visibility | Enterprise Readiness |
|---|---|---|---|---|---|
| Prompts.ai | 35+ models unified | Real-time co-editing, shared libraries | Cloud-based | Real-time token tracking, cost optimization | Enterprise-grade governance |
| Google Cloud | Vertex AI ecosystem | Basic sharing functionalities | Cloud-first | Basic billing dashboards | Enterprise-grade security |
| Anthropic | Claude-centric | Individual workspaces | API/Cloud | Usage metrics | AI safety frameworks |
| AWS | Bedrock model marketplace | Team access controls | Multi-cloud | Integration with CloudWatch | Comprehensive compliance |
| Databricks | ML and data pipelines focus | Notebook collaboration | Hybrid cloud | Resource monitoring | Data governance tools |

Deployment flexibility is another critical factor. Prompts.ai offers a cloud-based solution designed to integrate seamlessly with existing systems, whereas others, like Databricks, emphasize hybrid models, and AWS promotes multi-cloud compatibility.

With the rising costs of AI, FinOps visibility has become indispensable. Prompts.ai leads here with real-time token tracking and cost optimization, claiming to cut AI software expenses by up to 98%. Its pay-as-you-go TOKN credits align expenses with actual usage, eliminating recurring subscription fees. In contrast, traditional cloud providers often rely on basic billing tools, lacking the detailed cost controls enterprises need for AI-specific budgeting.
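The trade-off between usage-based and per-seat pricing comes down to simple arithmetic. The sketch below uses made-up prices purely for illustration; none of the numbers are actual TOKN credit rates or any vendor's published pricing.

```python
def pay_as_you_go_cost(tokens: int, usd_per_1k_tokens: float) -> float:
    """Cost when billing follows actual token usage."""
    return tokens / 1000 * usd_per_1k_tokens


def subscription_cost(seats: int, usd_per_seat: float) -> float:
    """Flat monthly cost, independent of usage."""
    return seats * usd_per_seat


def break_even_tokens(seats: int, usd_per_seat: float, usd_per_1k_tokens: float) -> float:
    """Monthly token volume at which both pricing models cost the same."""
    return subscription_cost(seats, usd_per_seat) / usd_per_1k_tokens * 1000


# Hypothetical team: 10 seats at $20 each versus $0.50 per 1,000 tokens.
threshold = break_even_tokens(seats=10, usd_per_seat=20.0, usd_per_1k_tokens=0.5)
light_month = pay_as_you_go_cost(100_000, 0.5)
```

Under these assumed prices, a team using fewer tokens per month than `threshold` pays less on usage-based billing, and a heavier user pays less on the flat subscription.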

Finally, U.S. enterprise readiness - covering security, compliance, and support - remains a top priority. Prompts.ai offers enterprise-grade governance and full audit trails, ensuring transparency and oversight. Similarly, AWS and Google Cloud are well-regarded for their extensive compliance certifications. The choice between platforms often boils down to organizational priorities: teams seeking rapid deployment and collaboration may lean toward specialized solutions like Prompts.ai, while those heavily invested in existing cloud infrastructures might prefer extending their platforms to include AI capabilities.

The world of interoperable AI is advancing at a rapid pace, as businesses work to address the growing challenges of AI tool sprawl and improve team collaboration. While major cloud providers like Google Cloud and AWS continue to expand their ecosystems, a new wave of specialized platforms is emerging. These platforms are designed specifically for enterprise AI orchestration, offering solutions that simplify integration and improve operational workflows.

The most effective platforms share a few standout features: they bring multiple AI models together under one interface, enable real-time team collaboration, and include tools for transparent cost management. This combination directly tackles the main obstacles U.S. enterprises face when scaling AI across different departments.

One of the most pressing needs is cost visibility. Platforms that incorporate detailed FinOps controls are changing the game by moving away from traditional software pricing models, making AI adoption more feasible for organizations of all sizes. Equally important is collaboration. Whether it’s marketing teams crafting LLM-driven campaigns, support teams fine-tuning AI assistants, or internal teams deploying shared workflows, modern platforms must support multi-user environments with proper permissions and shared resources. This collaborative approach is what sets these platforms apart from standalone APIs or single-purpose productivity tools.

Ultimately, businesses must decide between specialized platforms that allow for fast, collaborative deployment and broader cloud solutions that build on existing infrastructure. Regardless of the choice, the companies highlighted here illustrate a clear trend: the future of enterprise AI depends on unified, collaborative, and cost-aware platforms that empower teams to innovate without the headaches of juggling disconnected tools.

Prompts.ai streamlines teamwork on AI workflows by providing a centralized platform where users can collaborate effortlessly. Teams can co-edit prompts, oversee agents, and monitor token usage as it happens. With role-based permissions, everyone works securely while keeping a clear view of project activity.

Features such as real-time syncing, shared asset libraries, and governance controls break down barriers, ensuring smooth collaboration. It’s an excellent fit for marketing teams crafting AI-powered campaigns, support teams refining virtual assistants, and internal groups deploying shared workflows with ease.

Real-time cost tracking offers precise control over expenses, allowing teams to stick to their budgets and sidestep unforeseen overspending. By delivering up-to-the-minute insights into spending, it empowers teams to make informed decisions and adjust swiftly as project requirements shift.

This capability proves particularly useful for teams operating in dynamic, high-pressure settings. It ensures resources are distributed effectively and transparently, promoting seamless collaboration and a strong sense of accountability among all stakeholders.

AI interoperability enhances enterprise workflows by facilitating smooth interaction between various AI models and systems. This capability empowers teams to select the most suitable tools for specific tasks, improving precision, efficiency, and cost management.

By making AI orchestration simpler and minimizing IT hurdles, interoperability enables workflows that are both scalable and cohesive. The result? More efficient processes, quicker decisions, and heightened productivity across key areas like marketing, customer support, and internal operations.