Tokenization is the backbone of how Large Language Models (LLMs) process text, directly influencing performance, cost, and efficiency. This guide explores how to optimize tokenization strategies to improve model outputs, reduce expenses, and ensure compliance. Key takeaways include:

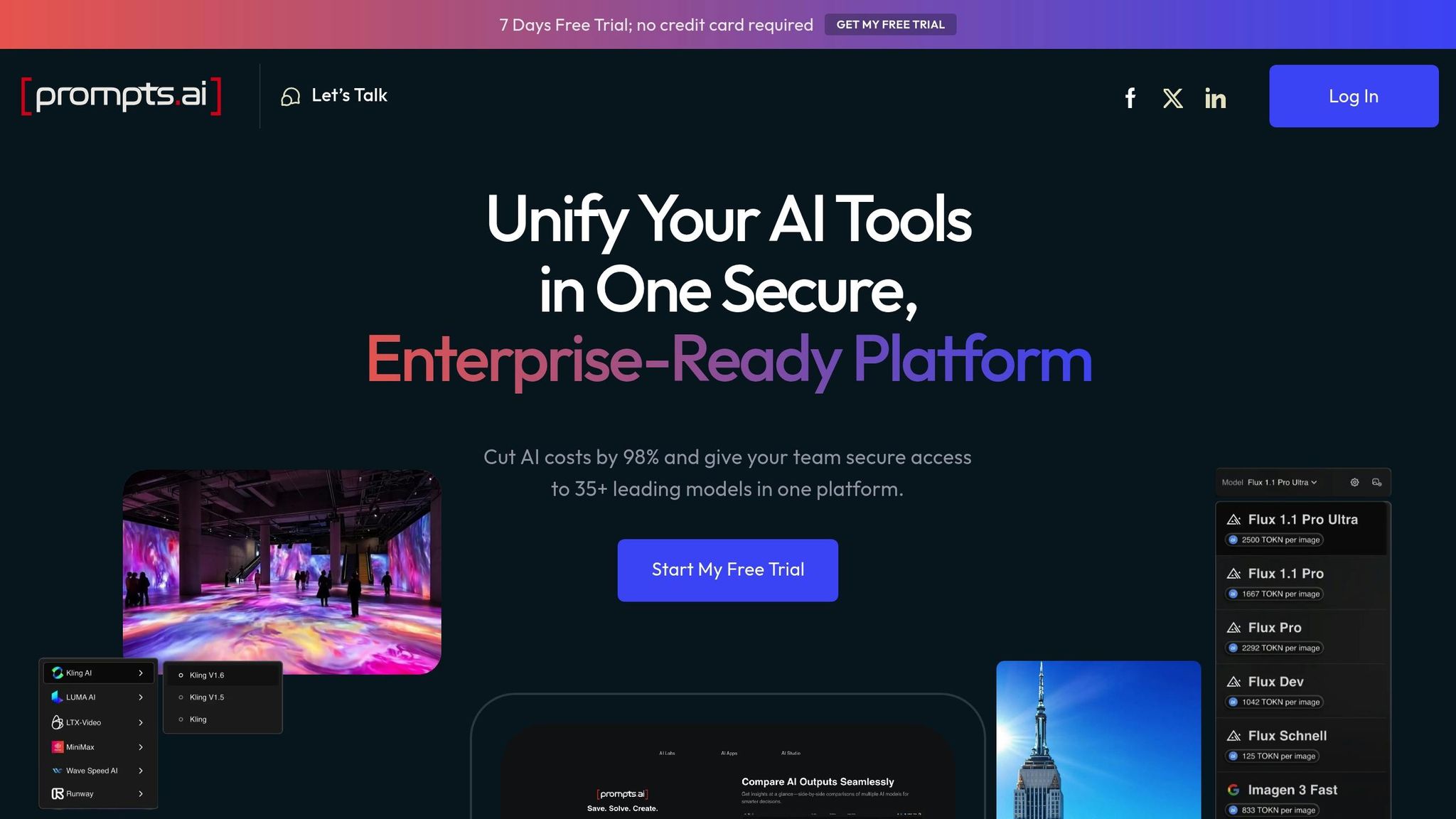

For enterprises, platforms like prompts.ai simplify tokenization management, offering real-time cost tracking, governance tools, and collaborative features to streamline operations. Whether you're fine-tuning prompts or scaling across systems, these practices ensure your tokenization strategy delivers efficiency and reliability.

When selecting a tokenizer, several factors should guide your decision, including language complexity, domain needs, vocabulary size, and the specific requirements of your application. Language characteristics are a critical starting point. Languages with rich morphology, such as German (long compound words) or Finnish (extensive agglutination), are better served by subword or character-level tokenization, which can represent long or rare word forms as combinations of smaller units. Languages with less inflection may perform adequately with word-level tokenization.

Domain specificity is another key consideration. Tailoring tokenizers to specialized training data improves compression rates and ensures better performance in specific contexts. This alignment between tokenizer and domain can significantly impact the quality of results.

When it comes to vocabulary size, you need to balance accuracy against computational efficiency. For English-only models, a vocabulary of roughly 33,000 tokens is often sufficient. Multilingual models, however, even ones covering only around five languages, may need vocabularies about three times larger to maintain consistent performance across languages. Because larger vocabularies increase computational demands, weigh those costs against the expected benefits.

Application complexity and model requirements also play an important role. Subword tokenization balances vocabulary size against language complexity, making it a good choice for applications that need strong semantic understanding. Popular transformer models rely on subword methods: BERT uses WordPiece, while GPT models use Byte Pair Encoding (BPE). Character-level tokenization, by contrast, has traditionally been used with recurrent neural networks (RNNs) and in text-to-speech tasks.

Once these factors are clear, the next step is to fine-tune tokenization parameters for optimal performance.

To maximize performance, focus on optimizing pre-tokenization and training parameters. Pre-tokenization splits raw text into coarse chunks (for example, words, numbers, and punctuation) before the subword algorithm runs, so learned tokens never cross those chunk boundaries. Regular expressions are a flexible way to define these splitting rules and tailor preprocessing to your data.
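As a minimal sketch of regex-based pre-tokenization, the pattern below approximates the GPT-2 style of splitting (contractions, words with a leading space, digit runs, punctuation, whitespace). It is a simplification under stated assumptions: the real GPT-2 pattern uses Unicode classes such as `\p{L}`, which Python's built-in `re` module does not support, so this version only recognizes ASCII letters.

```python
import re

# ASCII-only approximation of a GPT-2-style pre-tokenization pattern (assumption:
# the original uses Unicode classes like \p{L}, unavailable in Python's `re`).
PRETOKENIZE_PATTERN = re.compile(
    r"'(?:s|t|re|ve|m|ll|d)"   # common English contractions
    r"| ?[A-Za-z]+"            # words, keeping one leading space
    r"| ?[0-9]+"               # runs of digits
    r"| ?[^\sA-Za-z0-9]+"      # punctuation and other symbols
    r"|\s+(?!\S)"              # whitespace not followed by a word
    r"|\s+"                    # any remaining whitespace
)


def pre_tokenize(text: str) -> list[str]:
    """Split text into chunks; a subword algorithm then works within each chunk."""
    return PRETOKENIZE_PATTERN.findall(text)


print(pre_tokenize("It's 2024, don't panic!"))
# ['It', "'s", ' 2024', ',', ' don', "'t", ' panic', '!']
```

Because every character falls into some alternative, joining the chunks reproduces the original text exactly, which keeps this step lossless.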

Training data selection is equally important. Training tokenizers on data that closely resembles what they’ll encounter during inference yields the best results. For example, training on code improves compression for programming languages, while multilingual datasets enhance performance across multiple languages. A balanced mix of data types ensures consistent results across domains.
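To illustrate why the training corpus matters, here is a toy Byte Pair Encoding trainer (a simplified sketch, not any library's implementation) that repeatedly merges the most frequent adjacent symbol pair. The whitespace pre-tokenization and the tie-breaking rule (highest count, then lexicographically largest pair) are assumptions chosen to keep the output deterministic.

```python
from collections import Counter
from typing import Dict, List, Tuple

Word = Tuple[str, ...]
Pair = Tuple[str, str]


def count_pairs(words: Dict[Word, int]) -> Counter:
    """Count adjacent symbol pairs, weighted by word frequency."""
    pairs: Counter = Counter()
    for symbols, freq in words.items():
        for pair in zip(symbols, symbols[1:]):
            pairs[pair] += freq
    return pairs


def apply_merge(words: Dict[Word, int], pair: Pair) -> Dict[Word, int]:
    """Replace every occurrence of `pair` with its concatenation."""
    merged: Dict[Word, int] = {}
    for symbols, freq in words.items():
        out: List[str] = []
        i = 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged[tuple(out)] = merged.get(tuple(out), 0) + freq
    return merged


def train_bpe(corpus: List[str], num_merges: int) -> List[Pair]:
    """Learn up to `num_merges` merge rules from a whitespace-split corpus."""
    words: Dict[Word, int] = dict(Counter(tuple(w) for text in corpus for w in text.split()))
    merges: List[Pair] = []
    for _ in range(num_merges):
        pairs = count_pairs(words)
        if not pairs:
            break  # every word is already a single symbol
        # Most frequent pair; ties broken by the lexicographically largest pair.
        best = max(pairs, key=lambda p: (pairs[p], p))
        words = apply_merge(words, best)
        merges.append(best)
    return merges


print(train_bpe(["return x", "return y", "return z"], 5))
# [('u', 'r'), ('ur', 'n'), ('t', 'urn'), ('r', 'e'), ('re', 'turn')]
```

On this code-like corpus, five merges are enough for `return` to become a single token; a corpus of prose would likely spend the same merge budget on different pairs.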

Careful attention should also be given to vocabulary size and sequence length. A larger vocabulary shortens sequences, which reduces per-request memory for activations and attention, but it also enlarges the embedding and output layers and makes the output softmax at each decoding step more expensive. At the same time, excessive compression can shorten sequences so much that reasoning capabilities suffer. In resource-limited environments, strike a balance between compression and preserving enough context for effective processing.
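The vocabulary side of this trade-off is simple arithmetic. The sketch below counts the parameters in the input embedding and output projection matrices and estimates sequence length from an average characters-per-token ratio. The model width of 4,096 and the characters-per-token values are hypothetical placeholders; measure the ratio on your own data.

```python
import math


def vocab_parameter_cost(vocab_size: int, d_model: int, tied: bool = False) -> int:
    """Parameters in the embedding matrix plus the output (unembedding) matrix.

    With tied weights, both layers share a single vocab_size x d_model matrix.
    """
    per_matrix = vocab_size * d_model
    return per_matrix if tied else 2 * per_matrix


def estimated_tokens(num_chars: int, chars_per_token: float) -> int:
    """Rough sequence length for a text, given an average compression ratio."""
    return math.ceil(num_chars / chars_per_token)


D_MODEL = 4096  # hypothetical model width
for vocab, chars_per_token in [(32_000, 3.5), (128_000, 4.2)]:  # hypothetical ratios
    params = vocab_parameter_cost(vocab, D_MODEL)
    tokens = estimated_tokens(100_000, chars_per_token)
    print(f"vocab={vocab:,}  vocab params={params:,}  tokens per 100k chars={tokens:,}")
```

At this width, going from 32,000 to 128,000 entries grows the untied vocabulary layers from 262,144,000 to 1,048,576,000 parameters, which the shorter sequences have to pay for.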

With your strategy in place, it’s time to evaluate tokenization algorithms to find the best fit for your performance and scalability needs. Each algorithm comes with its own strengths and trade-offs.

| Algorithm | Compression Rate | Processing Speed | Memory Usage | Multilingual Support | Best Use Cases |
|---|---|---|---|---|---|
| BPE | Moderate | Fast | Low | Good | General text processing, balanced performance |
| WordPiece | High | Moderate | Moderate | Good | Transformer models, semantic understanding |
| Unigram | High | Slow | High | Excellent | Complex multilingual applications |
| SentencePiece | Very High | Fast | Low | Superior | Multilingual contexts, diverse text types |

Among these, SentencePiece stands out for multilingual work. Strictly speaking, it is a toolkit rather than a separate algorithm: it implements BPE and Unigram directly on raw text, treating whitespace as an ordinary symbol, so it needs no language-specific word splitting. That makes it a strong choice for global applications, including languages written without spaces between words. For tasks requiring fast processing, BPE is a reliable option with a good balance of speed and quality. WordPiece is effective for tasks that demand strong semantic understanding, which is why BERT and its derivatives use it. Unigram offers excellent multilingual support but demands more computational resources, making it a good fit when accuracy matters more than speed.
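WordPiece's encoding step makes a useful contrast to BPE. The sketch below follows the greedy longest-match-first strategy used by BERT's reference tokenizer: at each position it takes the longest vocabulary entry that matches, marks word-internal pieces with a `##` prefix, and falls back to an unknown token if nothing fits. The tiny vocabulary is hypothetical, and details such as BERT's maximum word length are omitted.

```python
from typing import List, Set


def wordpiece_encode(word: str, vocab: Set[str], unk: str = "[UNK]",
                     prefix: str = "##") -> List[str]:
    """Greedy longest-match-first segmentation of a single word."""
    pieces: List[str] = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        while start < end:  # shrink the candidate until it is in the vocabulary
            candidate = word[start:end]
            if start > 0:
                candidate = prefix + candidate  # word-internal pieces carry "##"
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            return [unk]  # the whole word becomes the unknown token
        pieces.append(match)
        start = end
    return pieces


vocab = {"un", "aff", "able", "##aff", "##able"}  # hypothetical toy vocabulary
print(wordpiece_encode("unaffable", vocab))  # ['un', '##aff', '##able']
```

Training a WordPiece vocabulary (choosing which pieces to add) is a separate step not shown here.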

Modern implementations such as the Hugging Face Tokenizers library are highly efficient, tokenizing around 1 GB of text on a server CPU in under 20 seconds. Because the library supports BPE, WordPiece, and Unigram models, large workloads remain manageable whichever algorithm you choose.

If you are fine-tuning an existing model, you can often swap or adjust its tokenizer with minimal impact on downstream performance, provided the fine-tuning data includes at least 50 billion tokens. This makes it possible to keep refining a tokenization strategy even after a model has been trained.

Balancing vocabulary size against sequence length plays a crucial role in the performance of large language models. Smaller vocabularies split text into more, shorter tokens, while larger vocabularies produce fewer, longer tokens. For example, GPT-4 uses a vocabulary of approximately 100k tokens, LLaMA 3 about 128k, and Mistral 7B about 32k, reflecting each model family's optimization goals and target applications.

A larger vocabulary, like GPT-4's, needs fewer tokens to represent the same text than earlier models such as GPT-2, whose vocabulary has about 50k tokens. For some inputs, this can roughly double the amount of text the model can fit within a given context window. Choosing the right vocabulary size minimizes token fragmentation while keeping the model efficient, and subword tokenization offers a practical balance between compression and handling previously unseen words.

Once vocabulary and sequence length are optimized, efficiency can be further improved through caching and parallel processing.

Caching improves inference efficiency by storing computations for reuse. Key-Value (KV) caching, for instance, keeps the attention key and value tensors computed for earlier tokens so that each new decoding step does not recompute them. Provider-level prompt caching builds on the same idea: Amazon Bedrock reports up to 85% faster response times for cached content, with cached tokens costing only about 10% of regular input tokens. Similarly, enabling KV caching in Hugging Face Transformers can speed up generation by approximately 5× for a 300-token output on a T4 GPU.
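The effect of KV caching can be seen in a toy, single-head attention loop. This is a simplified sketch, assuming identity key/value projections and a made-up deterministic embedding in place of learned weights: without a cache, every step recomputes keys and values for the whole prefix, while with a cache each step computes them only for the newest token and appends them.

```python
import math
from typing import List, Tuple

Vector = List[float]


def embed(token_id: int, dim: int = 4) -> Vector:
    """Toy deterministic embedding (hypothetical stand-in for a learned table)."""
    return [math.sin((token_id + 1) * (i + 1)) for i in range(dim)]


class ToyAttention:
    """Single-head attention with identity projections that counts K/V work."""

    def __init__(self) -> None:
        self.kv_computations = 0

    def key_value(self, token_id: int) -> Tuple[Vector, Vector]:
        self.kv_computations += 1
        x = embed(token_id)
        return x, x  # a real model would apply learned W_k and W_v here

    @staticmethod
    def attend(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
        scale = math.sqrt(len(query))
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]  # numerically stable softmax
        total = sum(weights)
        return [sum(w * v[i] for w, v in zip(weights, values)) / total
                for i in range(len(query))]


def decode_without_cache(attn: ToyAttention, tokens: List[int]) -> List[Vector]:
    outputs = []
    for t in range(1, len(tokens) + 1):
        # Recompute keys and values for the entire prefix at every step.
        kv = [attn.key_value(tok) for tok in tokens[:t]]
        keys = [k for k, _ in kv]
        values = [v for _, v in kv]
        outputs.append(attn.attend(embed(tokens[t - 1]), keys, values))
    return outputs


def decode_with_cache(attn: ToyAttention, tokens: List[int]) -> List[Vector]:
    keys: List[Vector] = []
    values: List[Vector] = []
    outputs = []
    for tok in tokens:
        # Compute keys and values only for the newest token; earlier ones are reused.
        k, v = attn.key_value(tok)
        keys.append(k)
        values.append(v)
        outputs.append(attn.attend(embed(tok), keys, values))
    return outputs


uncached, cached = ToyAttention(), ToyAttention()
same = decode_without_cache(uncached, list(range(10))) == decode_with_cache(cached, list(range(10)))
print(same, uncached.kv_computations, cached.kv_computations)  # True 55 10
```

For a 10-token sequence, the uncached loop performs 55 key/value computations (1 + 2 + ... + 10) versus 10 with the cache, with identical attention outputs. The uncached count grows quadratically with length, which is why caching matters most for long generations.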

To maximize caching benefits, structure prompts strategically. Because prompt caches match on an exact prefix, any change before the cache checkpoint invalidates the cached work. Place static content first, followed by a cache checkpoint, and then add dynamic content. In a document-based question-answering system, for instance, put the document text at the beginning, insert a cache checkpoint, and then append the user's question.
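Why prompt order matters can be shown with a hypothetical prefix cache. In the sketch below, `PrefixCache` is an illustrative stand-in rather than any provider's API: it hashes the exact token prefix up to the checkpoint, and on a hit only the tokens after the checkpoint need processing. Whitespace splitting stands in for a real tokenizer.

```python
import hashlib
from typing import List, Set


class PrefixCache:
    """Hypothetical cache keyed on the exact prompt prefix before a checkpoint."""

    def __init__(self) -> None:
        self._seen: Set[str] = set()

    def process(self, tokens: List[str], checkpoint: int) -> int:
        """Return how many tokens had to be processed from scratch."""
        key = hashlib.sha256("\x00".join(tokens[:checkpoint]).encode("utf-8")).hexdigest()
        if key in self._seen:
            return len(tokens) - checkpoint  # hit: only the suffix is new work
        self._seen.add(key)
        return len(tokens)  # miss: the whole prompt is processed


def total_processed(static_first: bool, document: List[str], questions: List[str]) -> int:
    cache = PrefixCache()
    total = 0
    for question in questions:
        q_tokens = question.split()  # whitespace split stands in for a tokenizer
        if static_first:
            prompt, checkpoint = document + q_tokens, len(document)
        else:
            prompt, checkpoint = q_tokens + document, len(q_tokens) + len(document)
        total += cache.process(prompt, checkpoint)
    return total


document = ["word"] * 1000  # placeholder for a 1,000-token document
questions = ["what is x", "who is y", "why is z"]
print(total_processed(True, document, questions))   # 1009
print(total_processed(False, document, questions))  # 3009
```

With the document first, only the first request misses (1,003 tokens) and the next two process just their 3-token questions. With the question first, the prefix changes on every request, so all three miss.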

Parallel processing also boosts performance by distributing tokenization work across multiple processor cores. This approach is particularly effective for batch tokenization, where a large dataset can be split into chunks that are encoded concurrently.
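Batch tokenization parallelizes naturally because each text is encoded independently. The sketch below splits a batch into chunks and maps them over a worker pool while preserving input order; `tokenize_in_parallel` and its parameters are illustrative, not a library API. One assumption to note: CPython threads only speed this up if `tokenize` runs in native code that releases the GIL. For a pure-Python tokenizer, pass a `concurrent.futures.ProcessPoolExecutor` instead, with a module-level `tokenize` function so it can be pickled.

```python
from concurrent.futures import Executor, ThreadPoolExecutor
from functools import partial
from typing import Callable, List, Optional

Tokenizer = Callable[[str], List[str]]


def _encode_chunk(tokenize: Tokenizer, chunk: List[str]) -> List[List[str]]:
    # Module-level so it can also be pickled by a ProcessPoolExecutor.
    return [tokenize(text) for text in chunk]


def tokenize_in_parallel(
    texts: List[str],
    tokenize: Tokenizer,
    chunk_size: int = 1_000,
    executor: Optional[Executor] = None,
    max_workers: int = 4,
) -> List[List[str]]:
    """Encode texts in chunks across a worker pool, preserving input order."""
    chunks = [texts[i:i + chunk_size] for i in range(0, len(texts), chunk_size)]
    work = partial(_encode_chunk, tokenize)
    own_pool = executor is None
    pool = ThreadPoolExecutor(max_workers=max_workers) if own_pool else executor
    try:
        results = list(pool.map(work, chunks))  # map() yields results in input order
    finally:
        if own_pool:
            pool.shutdown()
    return [encoded for chunk in results for encoded in chunk]
```

For example, `tokenize_in_parallel(texts, str.split, chunk_size=500)` returns the same result as `[t.split() for t in texts]`; larger chunks reduce scheduling overhead, smaller ones balance load better.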

After implementing these techniques, it’s crucial to measure their impact using performance metrics.

Monitoring performance metrics is essential to ensure your tokenization strategy is both efficient and cost-effective. Key metrics include Normalized Sequence Length (NSL), which compares a tokenizer's encoded length with that of a baseline tokenizer on the same text, and subword fertility, the average number of tokens produced per word. Lower values for both generally indicate less fragmentation and better efficiency.
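Both metrics are straightforward to compute once you can encode text. The sketch below assumes whitespace-delimited words as the unit for fertility and defines NSL as the mean, over texts, of the candidate tokenizer's encoded length divided by a baseline tokenizer's; published definitions may differ in detail, and the two toy tokenizers are placeholders for real ones.

```python
from typing import Callable, List

Tokenizer = Callable[[str], List[str]]


def subword_fertility(texts: List[str], tokenize: Tokenizer) -> float:
    """Average number of tokens per whitespace-delimited word."""
    total_tokens = sum(len(tokenize(text)) for text in texts)
    total_words = sum(len(text.split()) for text in texts)
    return total_tokens / total_words


def normalized_sequence_length(texts: List[str], tokenize: Tokenizer,
                               baseline: Tokenizer) -> float:
    """Mean ratio of encoded lengths: candidate tokenizer vs. baseline tokenizer."""
    ratios = [len(tokenize(text)) / len(baseline(text)) for text in texts]
    return sum(ratios) / len(ratios)


def char_level(text: str) -> List[str]:
    # Placeholder tokenizer: one token per non-space character.
    return [ch for ch in text if not ch.isspace()]


def word_level(text: str) -> List[str]:
    # Placeholder baseline: one token per whitespace-delimited word.
    return text.split()


texts = ["hello world", "tokenization matters"]
print(subword_fertility(texts, char_level))                       # 7.25
print(normalized_sequence_length(texts, char_level, word_level))  # 7.25
```

In practice you would swap in two real tokenizers and a representative sample of your production text, tracking both numbers per language.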

For instance, the SUTRA tokenizer has shown exceptional performance across 14 languages based on NSL metrics. Additionally, advancements like GPT-4o have demonstrated better handling of certain Indian languages compared to GPT-4. Beyond NSL and subword fertility, keep an eye on latency, throughput, and resource usage to fine-tune your tokenization approach for optimal speed and cost savings.

Regularly evaluating these metrics allows for data-driven adjustments, ensuring your tokenization strategy remains aligned with real-world demands while delivering measurable improvements in performance and efficiency.

When dealing with massive volumes of text spread across servers and data centers, traditional tokenization methods often hit performance bottlenecks. Distributed strategies help maintain efficiency, control costs, and ensure consistency at that scale.

Scaling tokenization effectively starts with distributing workloads intelligently. This involves using tools like load balancers, schedulers, and monitors alongside strategies such as Round-Robin, Least Connections, Weighted Load Balancing, and Dynamic Load Balancing. However, real-world scenarios introduce complexities like fluctuating workloads, varying resource capacities, network delays, and the need for fault tolerance. Addressing these factors is essential to ensure smooth operations across distributed environments.
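Two of the strategies named above are easy to sketch. The classes below are illustrative, and the node names are hypothetical: round-robin hands out workers in a fixed rotation, while least-connections sends each job to the worker with the fewest jobs in flight, which adapts better when tokenization jobs vary widely in size.

```python
import itertools
from typing import Dict, List


class RoundRobinBalancer:
    """Hand out workers in a fixed rotation, ignoring their current load."""

    def __init__(self, workers: List[str]) -> None:
        self._rotation = itertools.cycle(workers)

    def pick(self) -> str:
        return next(self._rotation)


class LeastConnectionsBalancer:
    """Send each job to the worker with the fewest jobs in flight."""

    def __init__(self, workers: List[str]) -> None:
        self.active: Dict[str, int] = {w: 0 for w in workers}

    def pick(self) -> str:
        # min() returns the first worker in list order when counts tie.
        worker = min(self.active, key=self.active.__getitem__)
        self.active[worker] += 1
        return worker

    def release(self, worker: str) -> None:
        """Call when a tokenization job on `worker` finishes."""
        self.active[worker] -= 1


rr = RoundRobinBalancer(["node-a", "node-b", "node-c"])
print([rr.pick() for _ in range(4)])  # ['node-a', 'node-b', 'node-c', 'node-a']
```

Weighted and dynamic variants extend the same idea by scaling each worker's share by its capacity or by live latency measurements.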

Monitoring tokenization costs in distributed setups is becoming increasingly important as AI investments grow. With AI spending projected to increase by 36% by 2025 and only 51% of organizations confident in assessing their AI ROI, cost transparency is more critical than ever. Tools like LangSmith and Langfuse simplify token cost tracking, while cloud tagging features, such as those offered by Amazon Bedrock, help allocate expenses with precision. By implementing data governance frameworks and automating data collection, organizations can improve data quality and reduce inefficiencies.

Platforms like prompts.ai take this a step further by integrating FinOps capabilities that monitor token usage in real time. With its pay-as-you-go TOKN credit system, prompts.ai provides clear insights into tokenization costs across multiple models and nodes. This enables organizations to fine-tune their tokenization strategies based on actual usage, ensuring cost-effective scalability.

As workloads are distributed, maintaining token consistency across nodes becomes a top priority. Centralized token management services or libraries can standardize token generation and ensure uniform mappings through a shared token vault. Techniques like consensus algorithms, ACID transactions, lock managers, data partitioning, and replication further enhance consistency. For geographically dispersed systems, geo-aware solutions help maintain compliance with local data regulations, while automating tokenization policies reduces the likelihood of human error as systems grow in complexity.
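A centralized vault is what keeps token mappings uniform across nodes. The sketch below is a hypothetical in-process stand-in (a production vault would be a separate, replicated service with persistent storage), but the core contract is the same: every node asks one shared authority for the token, and a lock ensures concurrent requests for the same value never mint two different tokens.

```python
import secrets
import threading
from typing import Dict


class TokenVault:
    """Hypothetical shared vault: exactly one token per sensitive value."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._value_to_token: Dict[str, str] = {}
        self._token_to_value: Dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # The lock makes check-then-create atomic, so concurrent callers
        # can never mint two different tokens for the same value.
        with self._lock:
            token = self._value_to_token.get(value)
            if token is None:
                token = "tok_" + secrets.token_hex(8)
                while token in self._token_to_value:  # regenerate on a (rare) collision
                    token = "tok_" + secrets.token_hex(8)
                self._value_to_token[value] = token
                self._token_to_value[token] = value
            return token

    def detokenize(self, token: str) -> str:
        with self._lock:
            return self._token_to_value[token]


class Node:
    """A worker node that stores no mappings itself and defers to the vault."""

    def __init__(self, name: str, vault: TokenVault) -> None:
        self.name = name
        self.vault = vault

    def protect(self, value: str) -> str:
        return self.vault.tokenize(value)


vault = TokenVault()
node_a, node_b = Node("node-a", vault), Node("node-b", vault)
card = "4111 1111 1111 1111"  # a standard test card number
print(node_a.protect(card) == node_b.protect(card))  # True
```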

As tokenization becomes a cornerstone of enterprise AI operations, it brings with it challenges that go beyond technical efficiency. Organizations must address potential security flaws, adhere to strict regulatory standards, and navigate ethical considerations. These factors are vital to ensuring responsible AI implementation across varied global markets.

Tokenization introduces vulnerabilities that can expose AI systems to threats like prompt injection, data reconstruction, and model theft. Attackers exploit weaknesses in token processing to manipulate systems or extract sensitive information. For example, data reconstruction attacks can reverse-engineer confidential details from token patterns, while model theft exploits tokenization gaps to extract proprietary algorithms.

The root of these issues often lies in how tokenization algorithms handle input. Errors in tokenization can lead to misinterpretation by large language models (LLMs), resulting in inaccurate outputs that attackers can exploit. Many of these flaws stem from the limitations of subword-level vocabularies, which struggle with complex linguistic structures.

Languages add another layer of complexity, as each introduces unique risks. Organizations operating in multilingual environments must account for these variations when designing security measures.

To mitigate these risks, companies can strengthen tokenization by diversifying segmentation methods and implementing strict access controls. Role-based access controls can limit unauthorized access to tokenization systems, while continuous monitoring can help detect unusual patterns that signal potential breaches. These robust defenses lay the groundwork for meeting compliance and governance standards.
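Role-based access control for a tokenization service can be sketched as a mapping from roles to permitted operations, with every decision logged so monitoring can flag unusual patterns such as repeated denied detokenization attempts. The role names, permissions, and in-memory audit log below are hypothetical.

```python
from collections import Counter
from enum import Enum
from typing import List, Tuple


class Permission(Enum):
    TOKENIZE = "tokenize"
    DETOKENIZE = "detokenize"
    READ_AUDIT_LOG = "read_audit_log"


# Hypothetical role assignments: most services may create tokens,
# very few may reverse them.
ROLE_PERMISSIONS = {
    "ingest-service": {Permission.TOKENIZE},
    "support-agent": {Permission.TOKENIZE},
    "fraud-analyst": {Permission.TOKENIZE, Permission.DETOKENIZE},
    "auditor": {Permission.READ_AUDIT_LOG},
}

audit_log: List[Tuple[str, str, bool]] = []


def authorize(role: str, permission: Permission) -> bool:
    """Allow only permissions granted to the role; unknown roles get nothing."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    audit_log.append((role, permission.value, allowed))  # record every decision
    return allowed


def denied_counts() -> Counter:
    """Denied attempts per role: a simple signal for anomaly monitoring."""
    return Counter(role for role, _, allowed in audit_log if not allowed)
```

An alert on a rising `denied_counts()` value for a single role is a crude but useful first line of continuous monitoring.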

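Beyond security, organizations must ensure their tokenization practices align with regulatory frameworks. In this context, tokenization means replacing sensitive values such as card numbers or patient identifiers with non-sensitive surrogate tokens, which is distinct from splitting text for an LLM. PCI Security Standards Council guidance recognizes tokenization as a way to reduce PCI DSS scope, and tokenization is widely used to help meet HIPAA, GDPR (as a form of pseudonymization), and FedRAMP requirements. Some regulations also require sensitive data to remain within specific geographic boundaries, even when tokens are used for cloud processing.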

For instance, Netflix successfully used tokenization to secure payment card data, enabling compliance with stringent regulations while maintaining smooth customer experiences.

Compliance also demands regular audits to validate tokenization integrity. Organizations must routinely assess both their internal systems and external vendors to ensure adherence to standards. When outsourcing tokenization, companies should confirm that service providers meet PCI DSS requirements and include compliance attestations in their audits.

As regulations evolve, organizations must update tokenization policies to remain aligned with new requirements. Clear retention policies are critical, defining how long tokenized data is stored and outlining secure disposal practices once it is no longer needed.
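In practice, a retention policy becomes a scheduled purge. The sketch below is hypothetical: it splits tokenized records into those still within the retention window and those past it, returning the expired tokens so the caller can securely delete their vault mappings and any copies. The 365-day window is an example value, not a regulatory requirement.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional, Tuple


@dataclass
class TokenRecord:
    token: str
    created_at: datetime  # timezone-aware creation timestamp


def purge_expired(
    records: List[TokenRecord],
    retention: timedelta,
    now: Optional[datetime] = None,
) -> Tuple[List[TokenRecord], List[str]]:
    """Split records into (kept records, expired tokens) by the retention window."""
    now = now or datetime.now(timezone.utc)
    kept: List[TokenRecord] = []
    expired: List[str] = []
    for record in records:
        if now - record.created_at > retention:
            expired.append(record.token)  # caller must also delete vault mappings and backups
        else:
            kept.append(record)
    return kept, expired


RETENTION = timedelta(days=365)  # example value only
```

Passing `now` explicitly keeps the purge deterministic and easy to audit; a scheduler would call it with the current time.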

Platforms like prompts.ai simplify these challenges by offering governance features that track tokenization use across distributed systems. With transparent cost tracking and audit trails, organizations can maintain compliance while optimizing operations across various AI models and regions.

Ethical decision-making is just as important as security and compliance when it comes to tokenization. The choices made in tokenization can have far-reaching consequences, particularly in terms of fairness and representation. One key concern is multilingual equity. Tokenization systems that inadequately represent non-English languages risk perpetuating systemic biases by creating poorly trained tokens. This can lead to subpar AI performance for speakers of those languages.

Tokenization can also amplify existing data biases. Underrepresented languages and demographic attributes often result in skewed model performance, raising ethical concerns in areas such as healthcare. Privacy is a related risk: research has shown that as few as 15 demographic attributes can be enough to re-identify nearly all individuals in supposedly anonymized datasets, a risk that carries over to any LLM pipeline processing such data. In healthcare applications, studies have found that GPT-4 sometimes resorts to stereotypes in diagnostic suggestions, disproportionately affecting certain races, ethnicities, and genders.

To address these challenges, organizations should implement clear accountability frameworks. Transparency measures can help track responsibility for AI decisions, while diverse AI teams can identify biases that might go unnoticed in homogeneous groups. Continuous evaluation systems are also essential for monitoring LLM outputs and addressing unintended consequences.

"We need guidelines on authorship, requirements for disclosure, educational use, and intellectual property, drawing on existing normative instruments and similar relevant debates, such as on human enhancement." – Julian Savulescu, Senior Author

Tokenization also raises ethical questions in content generation. While it enables large-scale content creation, it also opens the door to harmful outcomes, including misinformation and disinformation. Organizations must implement robust content moderation policies and prioritize user education to minimize these risks. Balancing innovation with responsibility is key to ensuring tokenization strategies benefit society.

In healthcare, the ethical stakes are particularly high. Tokenization must account for patient privacy, equity, safety, transparency, and clinical integration. Specialized approaches are necessary to protect sensitive health data while ensuring diagnostic tools remain effective across diverse populations.

Fine-tuning tokenization for large language models involves a thoughtful approach that prioritizes performance, cost management, and ethical responsibility. By following the strategies outlined here, enterprise teams can cut expenses while ensuring consistent, high-quality AI outputs across various systems. Below is a streamlined guide to putting these practices into action.

The following methods align with earlier discussions on improving performance, ensuring security, and addressing ethical concerns:

To roll out an effective tokenization strategy, break the process into three key phases:

Platforms designed for large-scale AI management, such as prompts.ai, can simplify and accelerate the process of optimizing tokenization across distributed systems. With its unified interface, prompts.ai supports multiple large language models, streamlining model management in a secure environment.

The platform's built-in FinOps layer provides real-time token tracking and cost optimization, helping organizations avoid overcharges in pay-per-token pricing models. Its governance features ensure compliance with transparent audit trails and cost accountability. Additionally, collaborative tools make it easier for teams to refine prompt engineering, reducing token usage while maintaining, or even improving, output quality. For enterprises scaling their tokenization strategies, prompts.ai eliminates the complexity of managing multi-vendor environments, enabling teams to focus on driving innovation and achieving their goals.

Choosing the right vocabulary size for your language model hinges on the nature of your dataset and the goals of your project. Begin by examining the token frequency distribution in your dataset to strike a balance between capturing a wide range of words and keeping the process efficient by avoiding unnecessary complexity.

For smaller datasets, opting for a smaller vocabulary size is often more practical. This approach minimizes computational demands while still delivering solid performance. On the flip side, larger datasets usually benefit from a more extensive vocabulary, as it allows for better token representation and improved accuracy. The best results often emerge through a process of trial, error, and fine-tuning.
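Examining the frequency distribution can be as simple as asking how many of the most frequent units are needed to cover a target share of all occurrences. The sketch below uses whitespace-separated words as a proxy for candidate tokens, which is an assumption: a subword tokenizer's vocabulary will differ, but the shape of the curve still shows where adding entries stops paying off.

```python
from collections import Counter
from typing import Dict, Iterable, List


def frequency_counts(corpus: Iterable[str]) -> Counter:
    return Counter(word for text in corpus for word in text.split())


def coverage_at(corpus: List[str], sizes: List[int]) -> Dict[int, float]:
    """Share of all occurrences covered by the `size` most frequent units."""
    counts = frequency_counts(corpus)
    total = sum(counts.values())
    ranked = [count for _, count in counts.most_common()]
    return {size: sum(ranked[:size]) / total for size in sizes}


def smallest_vocab_for(corpus: List[str], target: float) -> int:
    """Fewest top-ranked units whose combined frequency reaches `target`."""
    counts = frequency_counts(corpus)
    total = sum(counts.values())
    running = 0
    for rank, (_, count) in enumerate(counts.most_common(), start=1):
        running += count
        if running / total >= target:
            return rank
    return len(counts)


corpus = ["a a a a b b c d"]  # toy corpus: 'a' x4, 'b' x2, 'c' x1, 'd' x1
print(coverage_at(corpus, [1, 2, 4]))  # {1: 0.5, 2: 0.75, 4: 1.0}
print(smallest_vocab_for(corpus, 0.9))  # 4
```

On a real dataset, a long flat tail in `coverage_at` suggests that a larger vocabulary would mostly add rarely used entries.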

Using tools like prompts.ai can make this task simpler. With built-in features for tokenization tracking and optimization, you can save time and scale your efforts more effectively.

To protect tokenized data and maintain compliance in environments with multiple languages, it's crucial to implement tools that accommodate diverse languages and character sets. This minimizes risks such as data misinterpretation or unintended exposure. Employing strict access controls, conducting regular audits, and following standards like PCI DSS are key steps in safeguarding sensitive information.

Moreover, tokens should be designed to have relevance only within specific application contexts. Consistent use of encryption and de-identification policies further ensures that tokenized data stays secure and compliant, no matter the language or region where it's utilized.
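One way to give tokens meaning only within a specific application context is to derive them with a keyed hash over both the context name and the value. The sketch below uses HMAC-SHA-256 from Python's standard library; the context names and key are hypothetical, the resulting tokens are one-way (use a vault if you need detokenization), and in practice the key would live in a key-management service rather than in code. Encoding as UTF-8 keeps the scheme consistent across languages and character sets.

```python
import hashlib
import hmac


def context_token(secret_key: bytes, context: str, value: str) -> str:
    """Deterministic, one-way token that is only meaningful within `context`."""
    # A NUL separator keeps ("ab", "c") and ("a", "bc") from producing the same
    # input, assuming context names never contain NUL characters.
    message = context.encode("utf-8") + b"\x00" + value.encode("utf-8")
    digest = hmac.new(secret_key, message, hashlib.sha256).hexdigest()
    return f"{context}:{digest[:32]}"


KEY = b"example-key-from-a-kms"  # hypothetical; never hard-code real keys

billing = context_token(KEY, "billing", "Zoë Müller")
analytics = context_token(KEY, "analytics", "Zoë Müller")
print(billing == context_token(KEY, "billing", "Zoë Müller"))  # True
print(billing == analytics)  # False
```

The same person yields stable tokens inside one context, so joins still work there, while tokens from different contexts cannot be linked without the key.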

Caching, particularly key-value caching, plays a crucial role in inference efficiency. By reusing the attention key and value tensors already computed for earlier tokens, it eliminates repetitive calculations and speeds up inference in large language models (LLMs).

In addition, parallel processing enhances performance by allowing multiple operations to occur simultaneously. This approach helps populate caches more quickly and minimizes delays, including the critical time-to-first-token (TTFT). When combined, these strategies enhance scalability, increase throughput, and significantly reduce the operational costs associated with deploying LLMs.