Cut AI confusion and boost efficiency. Disconnected tools slow teams, leading to missed opportunities and wasted time. Prompts.ai provides a single platform to test, track, and manage AI prompts, delivering measurable results:

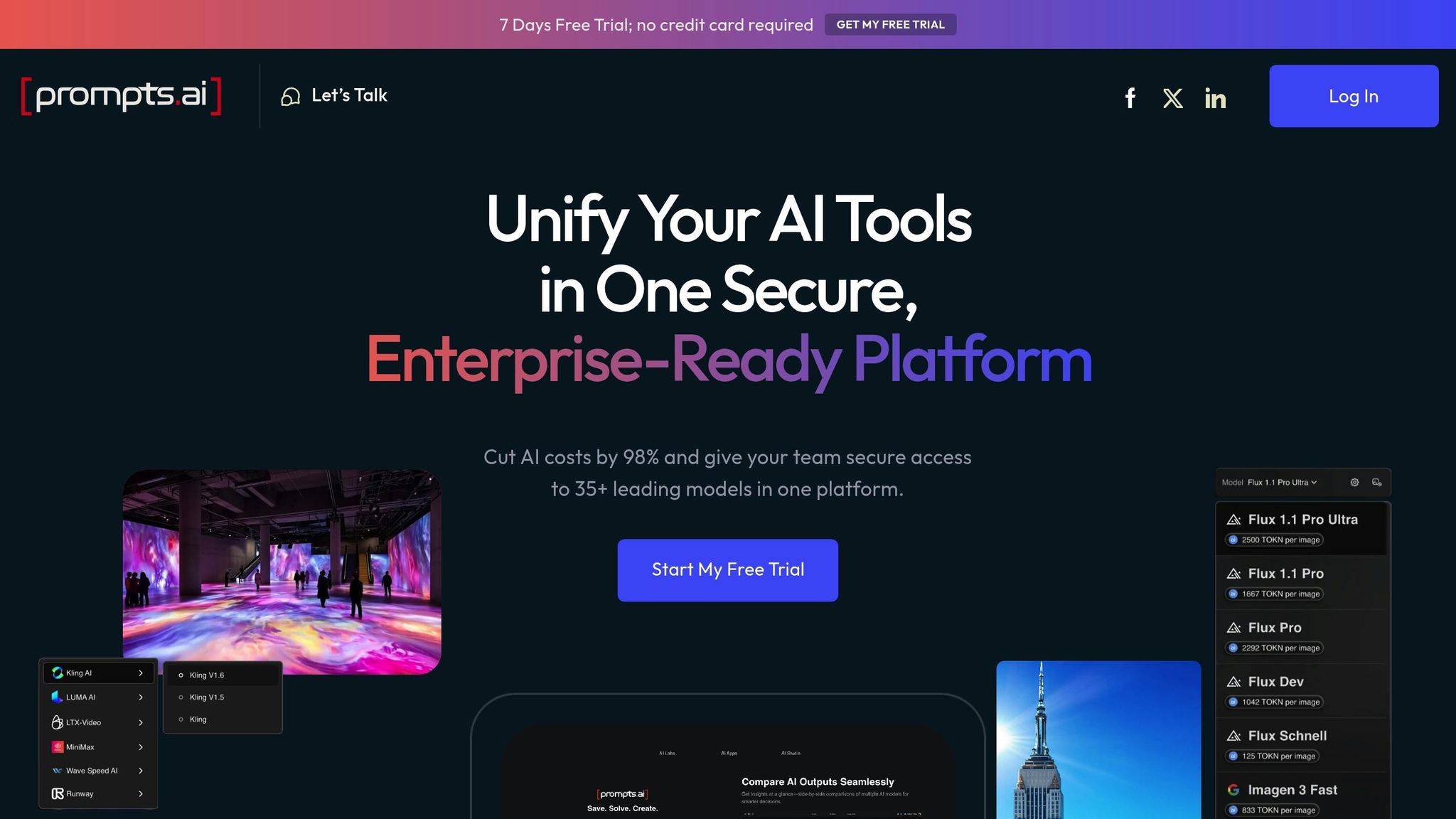

Access 35+ LLMs like GPT-4, Claude, and Gemini in one interface. Test prompts side by side, track costs with TOKN credits, and manage versions with enterprise-grade security.

Why it matters: Teams using unified workflows report an 85% success rate on the first attempt and cut refinement time by 71%. Prompts.ai simplifies collaboration, reduces errors, and ensures faster results.

You’re one prompt away from streamlined AI workflows.

An effective prompt management platform supports the entire lifecycle of AI development, from initial testing to production deployment. By integrating prompt testing, version control, and collaboration tools into a single environment, teams can overcome the fragmentation challenges discussed earlier. Let’s break down the key features that make this possible and how they work together to streamline AI prompt management.

Testing prompts across different AI models is critical for identifying the best-performing configurations. Since large language models (LLMs) are highly sensitive to even minor changes in prompts, a prompt that delivers excellent results on GPT-4 might perform less effectively on Claude or Gemini. This variability makes comprehensive testing indispensable.

A robust platform should enable simultaneous testing across multiple models, such as GPT-4, Claude, and Gemini, all within a single interface. This eliminates the inefficiency of switching between separate dashboards. Additionally, the platform should measure key metrics like execution time, cost per request, and accuracy, providing reliable data for comparison over multiple test runs. Given the nondeterministic nature of LLM outputs, running multiple tests ensures a more accurate evaluation. This approach helps identify the optimal response for specific use cases.

Managing prompts effectively requires the same level of care as managing application code. Jesse Sumrak from LaunchDarkly emphasizes this point:

"Prompts need to be treated with the same care normally applied to application code. You wouldn't push code straight to production without version control, testing, and proper deployment processes; your prompts deserve the same treatment."

A well-designed platform goes beyond basic change tracking, maintaining detailed records with AI-specific metadata. This includes system instructions, context, and performance data for every version. Such records make it easier to understand and refine system behavior.

Teams adopting version-controlled workflows report a 30% improvement in prompt quality and consistency. They also reduce debugging and management time by 40%-45%. Another valuable feature is the ability to update prompts at runtime without redeploying the entire application, which adds flexibility to the development process.

Collaboration tools are a vital complement to testing and version control, enabling cross-functional teams to contribute effectively to prompt development. A strong platform should include shared workspaces, granular permissions, and structured review processes to support this collaborative environment.

Centralized version control systems significantly enhance collaboration, improving efficiency by 41%. Teams also experience up to 60% fewer merge conflicts compared to traditional file-sharing methods. Collaborative review processes, especially for production-level prompts, align with software development best practices, helping to catch issues before they affect end users.

Shared prompt libraries further streamline workflows by allowing teams to create reusable components. This not only ensures consistency across projects but also reduces development time. Organizations that implement structured prompt management see productivity gains of up to 30%. With 78% of AI teams identifying version control as critical for maintaining model quality, these collaborative features are essential for staying competitive in the fast-evolving AI landscape.

Prompts.ai tackles the challenges of fragmented AI workflows by bringing prompt testing and versioning together in one streamlined platform. Instead of juggling multiple tools, teams can oversee the entire lifecycle of their prompts through a single, intuitive dashboard. This approach combines robust security with user-friendly design, simplifying AI development processes.

Switching between platforms to test prompts is a thing of the past. Prompts.ai provides seamless access to over 35 large language models, including GPT-4, Claude, LLaMA, and Gemini, all through a unified, secure interface. This eliminates the hassle of managing multiple accounts and dashboards.

The platform allows teams to test prompts across multiple models simultaneously, making it easy to compare results side by side. Since each model may interpret and respond to prompts differently, this feature is crucial for identifying the best model-prompt pairing for specific needs.

Managing costs in AI development can be a daunting task, but Prompts.ai simplifies this with its integrated FinOps layer. The platform tracks every token used, provides real-time spending insights, and links costs directly to business outcomes.

With the pay-as-you-go TOKN credits system, organizations gain full transparency over their expenses. This system enables real-time monitoring and optimization, helping teams cut unnecessary spending without compromising performance. In fact, businesses using Prompts.ai have reported reducing AI software costs by up to 98% compared to maintaining multiple subscriptions.

For enterprises, adopting AI requires a strong focus on security and compliance. Prompts.ai addresses these needs with advanced governance tools and detailed audit trails embedded into every workflow.

The platform enforces strict access controls and encrypts all versioning systems to protect sensitive data and prompts. Granular permission settings and action logs ensure only authorized users can modify prompts, while comprehensive audit trails document every change, test, and decision - complete with timestamps and user details. These features help organizations meet regulatory standards like GDPR, the AI Act, and CCPA, and structured versioning protocols have been shown to increase response accuracy by 20%.

To further enhance security, Prompts.ai separates user inputs from system instructions to prevent prompt injection attacks. It also uses format constraints to manage outputs and minimize interpretation errors. Environmental controls monitor interactions and limit modification rights to authorized personnel, ensuring a secure and controlled environment for prompt development.

These measures go beyond basic protection, offering teams a reliable framework to experiment, refine, and deploy prompts confidently. By combining agility with strict compliance, Prompts.ai enables rapid innovation without compromising enterprise security standards.

Building effective AI workflows involves more than crafting well-written prompts. It requires careful testing, systematic version management, and strong security measures. Prompts.ai simplifies these tasks by offering a platform that integrates testing, version control, and security into a single enterprise-grade solution.

Developing prompts efficiently means treating them as you would application code. Batch testing across multiple models saves time and highlights performance differences. With Prompts.ai, you can test prompts side by side on models like GPT-4, Claude, LLaMA, and Gemini, comparing their responses in real time.

A/B testing is seamless within Prompts.ai. You can deploy different prompt versions to specific user segments directly from the platform, allowing you to measure key performance metrics such as response accuracy, user satisfaction, and task completion rates. These insights are available alongside tools for managing costs and tracking versions.

Start by crafting prompts with clear instructions, examples, and constraints. Testing against edge cases and unexpected inputs helps identify weaknesses before deployment. Additionally, defining output formats ensures consistency and reduces the need for post-processing, making results more predictable across different models.

Once you're confident in a prompt's performance, version management becomes essential for maintaining reproducibility and driving continuous improvements.

Proper version control turns chaotic experimentation into organized progress. Using semantic versioning (X.Y.Z format) provides a clear structure for tracking changes:

Clear labeling and structured documentation are crucial as your prompt library grows. Tag each prompt with metadata linked to internal tracking systems and use release labels like "production", "staging", or "development" to manage deployment stages efficiently.

A centralized repository enhances team collaboration by offering visibility into version histories, rollback options, and detailed documentation of changes. This transparency reduces conflicts when multiple team members work on the same prompts and ensures everyone understands the reasons behind specific updates.

Rollback capabilities should be part of your workflow from the start. Feature flags and checkpoints allow you to quickly revert problematic updates without disrupting operations. Document changes thoroughly, including why they were made and how outcomes compared to expectations.

| Version Component | Purpose | Example Change |

|---|---|---|

| Major (X) | For significant structural changes | Overhauling the prompt framework |

| Minor (Y) | For adding new features or context | Adding additional context parameters |

| Patch (Z) | For minor fixes | Correcting grammar issues |

Strong security measures are essential to protect the integrity of your prompts. Security should be integrated into every stage of prompt development. A key practice is separating user inputs from system instructions to guard against prompt injection attacks.

Environmental controls can monitor prompt interactions for unusual behavior, while strict modification rights ensure only authorized users can make changes. Detailed logs track who made changes and when, providing granular access control that protects sensitive prompts while enabling collaboration.

Input validation and content filtering play a critical role in catching harmful requests before they reach the models. Testing prompts against adversarial inputs helps identify vulnerabilities early in the process.

Audit trails document every interaction, including timestamps and user details, ensuring compliance with regulations such as GDPR and CCPA. For critical prompts, go beyond basic version control by implementing approval workflows for those handling sensitive data or overseeing key business processes. Regularly monitor performance metrics and address security concerns or model drift as they arise.

Prompts.ai simplifies AI development by bringing prompt testing and versioning into a single, unified platform. With access to over 35 leading LLMs - including GPT-4, Claude, LLaMA, and Gemini - it eliminates the hassle of managing multiple tools while significantly cutting costs. Organizations using Prompts.ai have reported savings of up to 95% and a tenfold increase in productivity.

The platform also transforms team collaboration. By centralizing workflows, teams no longer need to juggle various platforms to test prompts, track versions, or document changes. Prompts.ai’s centralized governance ensures smooth onboarding of new models, users, and workflows, all while maintaining enterprise-grade security and complete audit trails. With over 200 teams across 80 countries and 4,500 participants already using the platform for global AI initiatives, Prompts.ai has proven its value across a wide range of industries and organizational sizes.

In addition to collaboration, Prompts.ai offers robust tools that deliver tangible business results. For organizations scaling their AI efforts, it combines cost efficiency, advanced functionality, and enterprise-level security. Instead of wrestling with fragmented tools and complex version control processes, teams can focus on crafting prompts that deliver real impact. Features like built-in FinOps capabilities, real-time cost tracking, and full visibility into AI interactions ensure that growth is both controlled and compliant. This integrated approach supports everyone, from individual creators to large enterprises.

Prompts.ai empowers users at every level to securely and efficiently develop, test, and deploy prompts, paving the way for smarter and more effective AI solutions.

Prompts.ai places a strong emphasis on security and compliance, ensuring your AI prompts are protected through advanced encryption methods. This safeguards your data while secure prompt engineering techniques minimize risks of misuse or unintended actions.

To strengthen defenses even further, Prompts.ai integrates AI-driven tools that identify and counteract threats such as prompt injection attacks. These robust measures create a safe, controlled environment, giving you peace of mind about the integrity and reliability of your AI initiatives.

Prompts.ai transforms the way you develop AI prompts by enabling simultaneous testing across multiple models. This not only saves time but also speeds up the process of refining and improving your prompts, delivering quicker and more precise outcomes.

The platform also includes built-in version control, making it simple to track changes, manage updates, and roll back to previous versions when needed. This feature ensures consistency, simplifies audits, and fosters smoother collaboration - whether you're working as part of a team or on your own. By integrating testing and versioning into a single platform, Prompts.ai streamlines the entire prompt development process, making it easier and more efficient to handle.

The TOKN credits system offers a straightforward way to handle costs, acting as a flexible, pay-as-you-go currency for accessing AI tools and capabilities. Instead of locking into fixed subscription plans, you only pay for the credits you actually use. This makes it easier to budget for both large, ongoing projects and smaller, variable ones.

By using this system, you gain better control over expenses, improve cost predictability, and avoid unnecessary spending. Whether you're part of a team or working solo, it allows you to focus on refining your AI workflows without worrying about overshooting your budget. It's an efficient way to manage development costs while keeping your options open.