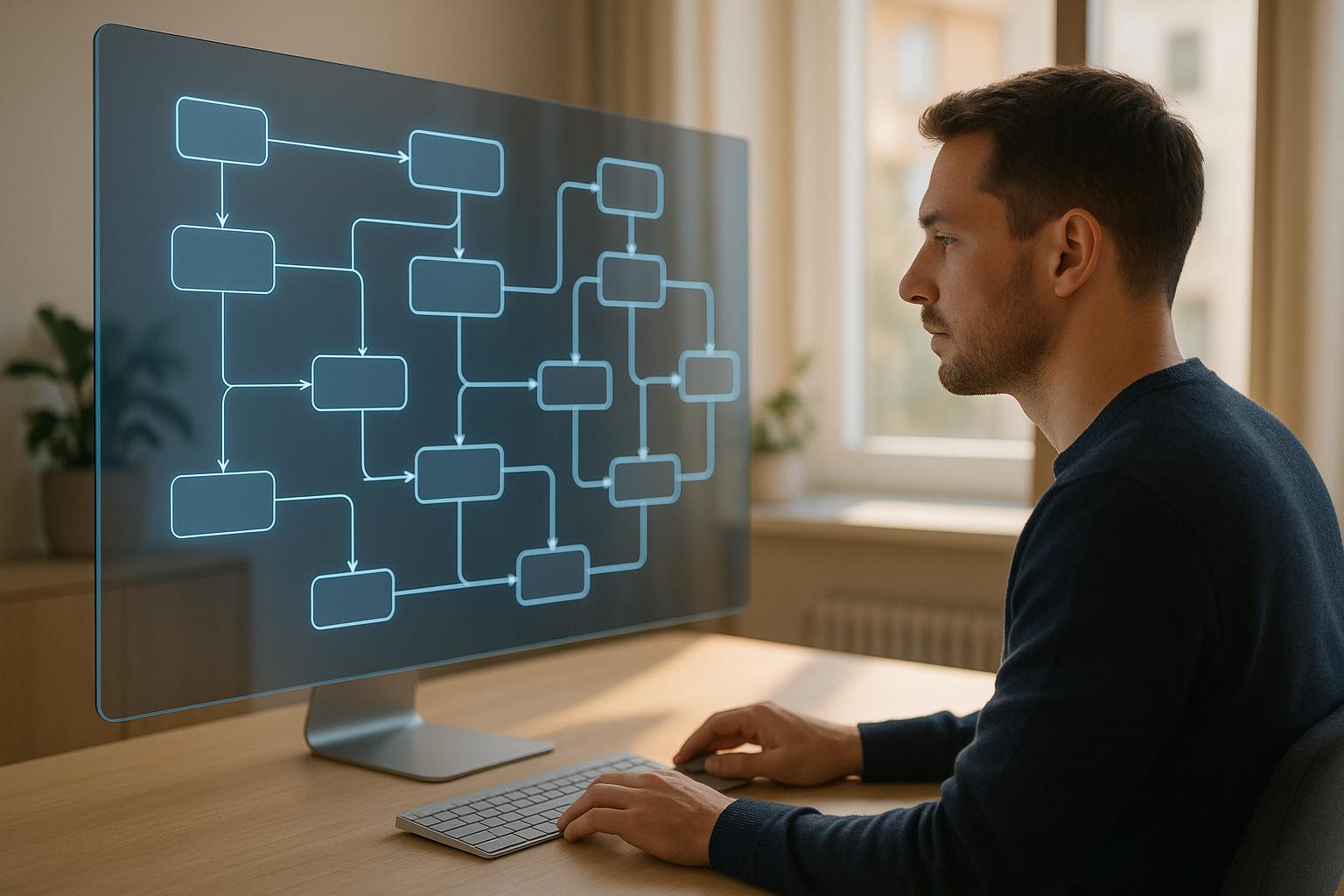

LLM decision pipelines are systems that use AI to turn raw data into decisions and actions, automating complex workflows.

LLM pipelines consist of three main stages: data ingestion, prompt management, and output validation. Platforms like prompts.ai simplify their deployment with tools for real-time monitoring, RAG pipelines, and compliance integration. These systems are transforming industries like finance, healthcare, and customer support by making decisions faster and more scalable.

Building effective LLM decision pipelines requires a seamless integration of three main stages, from gathering raw data to making informed decisions.

The first step in any LLM decision pipeline is data ingestion - the process of collecting raw information from various sources and converting it into a format that LLMs can process. This step is essential for ensuring the system has the right foundation to deliver meaningful results.

It begins by loading external documents like PDFs, DOCX files, plain text, or HTML and breaking them into manageable chunks. These chunks are designed to fit within the LLM’s processing limits while maintaining their original context.
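The chunking step described above can be sketched as follows. This is a minimal illustration that splits on character counts with a fixed overlap; the function name `chunk_text` and the sizes are assumptions chosen for the example, and production pipelines often split on sentences or tokens instead.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into overlapping character windows.

    The overlap repeats the tail of each chunk at the head of the next,
    so context that straddles a boundary is not lost.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")

    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once the current window already reaches the end of the text.
        if start + chunk_size >= len(text):
            break
    return chunks


# Illustrative input only.
document = "LLM pipelines ingest documents. " * 20
chunks = chunk_text(document, chunk_size=100, overlap=20)
```

A larger overlap preserves more context across boundaries at the cost of storing and embedding more duplicated text.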

Vector databases are a game-changer here. Unlike traditional databases that rely on exact matches, vector stores use similarity-based retrieval, making it easier to find relevant information even when the query doesn’t perfectly match the source material. When choosing between cloud-based and locally managed vector databases, organizations face a trade-off: cloud options are easier to scale but come with added costs, while local setups offer more control but require greater maintenance.
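To make similarity-based retrieval concrete, here is a small sketch that ranks stored vectors by cosine similarity to a query vector. The three-dimensional vectors and document names are made up for illustration; a real system would use embeddings from a model and a vector database rather than an in-memory dictionary.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query: list[float], store: dict[str, list[float]], k: int = 2):
    """Return the k most similar (doc_id, score) pairs, best first."""
    scored = [(cosine_similarity(query, vec), doc_id) for doc_id, vec in store.items()]
    scored.sort(reverse=True)
    return [(doc_id, score) for score, doc_id in scored[:k]]


# Hypothetical toy embeddings, not produced by any real model.
store = {
    "refund_policy": [0.9, 0.1, 0.0],
    "shipping_times": [0.1, 0.9, 0.1],
    "privacy_notice": [0.0, 0.2, 0.9],
}
query = [0.8, 0.2, 0.1]
results = top_k(query, store, k=2)
```

The query does not equal any stored vector, yet the closest document still ranks first, which is the property that exact-match lookups lack.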

For example, in September 2024, a RAG (Retrieval-Augmented Generation) system using LangChain demonstrated how diverse data sources could be loaded, converted into embeddings, and stored in a vector database. This setup allowed the LLM to pull relevant information from knowledge sources and generate responses enriched with context.

Proper data ingestion is the backbone of efficient searches, accurate recommendations, and insightful analysis. Once the data is ready, the next focus is on managing how the LLM interprets and responds to prompts.

With data in place, prompt management becomes the key to steering the LLM’s behavior. This stage determines how the system interprets user queries and generates responses that align with specific needs.

Well-crafted prompts strike a balance between being clear and providing enough context to guide the LLM effectively. For instance, in June 2024, Salus AI improved LLM accuracy for health screening compliance tasks from 80% to 95–100% by refining prompts. A vague prompt like "Does the call agent suggest the test is a necessity?" was revised to "Does the call agent tell the consumer the test is required?" - a change that boosted accuracy from 69% to 99%. Additionally, optimized prompts have been shown to improve performance by up to 68 percentage points, with single-question prompts adding another 15-point boost.

Best practices in prompt management include versioning and keeping prompts separate from code for better security and easier updates. Modular prompts, built with reusable components and interpolated variables, simplify maintenance. Iterative testing ensures continual refinement, while collaboration among technical teams, domain experts, and users enhances the overall design.
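One way to keep prompts versioned, separate from application logic, and built from interpolated variables is sketched below using Python's standard `string.Template`. The `PromptTemplate` class, the `REGISTRY` dictionary, and the prompt name are hypothetical; in practice the templates might live in files or a prompt management service.

```python
from dataclasses import dataclass
from string import Template


@dataclass(frozen=True)
class PromptTemplate:
    name: str
    version: str
    template: Template

    def render(self, **variables: str) -> str:
        # substitute() raises KeyError when a required variable is missing,
        # which surfaces broken prompts early instead of sending them to the model.
        return self.template.substitute(**variables)


# Hypothetical registry keyed by (name, version) so older versions stay available.
REGISTRY = {
    ("compliance_check", "v2"): PromptTemplate(
        name="compliance_check",
        version="v2",
        template=Template(
            "You are reviewing a call transcript.\n"
            "Question: $question\n"
            "Transcript: $transcript\n"
            "Answer yes or no."
        ),
    ),
}

prompt = REGISTRY[("compliance_check", "v2")].render(
    question="Does the call agent tell the consumer the test is required?",
    transcript="Agent: You must take this test before enrolling.",
)
```

Keying the registry by version makes it possible to roll back a prompt or compare two versions side by side without touching the calling code.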

Once prompts are optimized, the pipeline shifts to validating and refining the LLM’s outputs.

The final step in the pipeline is output processing, which ensures that the LLM’s responses meet quality standards before they’re used to make decisions. This step is critical for maintaining accuracy and reliability.

"Model output validation is a crucial step in ensuring the accuracy and reliability of machine learning models." – Nightfall AI

Two common methods for evaluating outputs are statistical scoring and model-based scoring. Statistical scorers offer consistency but may struggle with complex reasoning, while model-based scorers handle nuanced outputs more accurately but can give less consistent results from run to run. Many organizations combine these approaches for a more balanced evaluation.

Key metrics for output evaluation include relevancy, task completion, correctness, hallucination detection, tool accuracy, and contextual appropriateness. Experts recommend limiting evaluation pipelines to five metrics to maintain efficiency. For instance, in a hospital text summarization case, a DAG scorer ensured summaries followed the required structure, awarding perfect scores only when all formatting criteria were met.
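The all-or-nothing structural check mentioned in the summarization example can be approximated with a few lines of code. This is a simplified sketch, not the DAG scorer itself: the section names in `REQUIRED_SECTIONS` are hypothetical, and the check only verifies that each heading appears in the required order.

```python
# Hypothetical required headings for a clinical summary.
REQUIRED_SECTIONS = ["Intro", "Vitals", "Plan"]


def structure_score(summary: str, required: list[str] = REQUIRED_SECTIONS) -> float:
    """Return 1.0 only if every required heading appears, in order; else 0.0."""
    position = 0
    for heading in required:
        found = summary.find(f"{heading}:", position)
        if found == -1:
            return 0.0
        # Continue searching after this heading so order is enforced.
        position = found + len(heading) + 1
    return 1.0


good = "Intro: 54-year-old patient.\nVitals: BP 120/80.\nPlan: Follow up in 2 weeks."
out_of_order = "Vitals: BP 120/80.\nIntro: 54-year-old patient.\nPlan: Follow up."
missing = "Intro: 54-year-old patient.\nPlan: Follow up."
```

A deterministic check like this pairs well with a model-based scorer: the rule handles format, and the model judges content quality.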

"Ensuring the reliability of LLMs is paramount, especially when they are integrated into real-world applications where accuracy and coherence are essential." – Antematter.io

Ongoing monitoring is just as important. Telemetry systems track model performance, customer engagement, and satisfaction, helping to identify and address any performance issues. Combining automated metrics with human oversight provides a more nuanced understanding of how well the LLM is performing.

Now that we’ve covered the core components, let’s dive into how these pipelines operate in practice. The process unfolds in three phases, each building on the last to deliver reliable and automated decisions.

The journey begins with gathering raw data from a variety of sources and shaping it into a format the LLM can process. This phase ensures the data is clean, structured, and ready for real-time analysis.

Take Unstructured AI as an example. It transforms semi-structured documents like PDFs and DOCX files into structured outputs. This includes converting tables into CSV or Excel formats, extracting characters with semantic labels, logically organizing text, and storing numerical embeddings in a vector database for quick retrieval.

One key step here is tokenization, where the input text is broken into smaller, manageable pieces. On average, one token represents about four English characters.

This step becomes critical in live applications where the system must handle diverse inputs - like customer service tickets, financial reports, or sensor data - and convert them into a standardized format. This consistency ensures the LLM can process the data accurately, no matter its original form.

Once the data is formatted, the pipeline moves to the processing phase, where the LLM works its magic. Here, the model transforms input tokens into actionable decisions, leveraging its inferencing capabilities in two stages: prefill and decode.

"Inferencing allows an LLM to reason from context clues and background knowledge to draw conclusions. Without it, LLMs would merely store patterns, unable to apply their knowledge meaningfully."

During the prefill phase, the system converts the user’s input into tokens and then into numerical values the model can interpret, processing the whole prompt in one pass. The decode phase follows, where the model generates the response one token at a time, repeatedly predicting the next token from the prompt and everything generated so far.
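The two stages can be illustrated with a deliberately tiny stand-in for a model. Everything here is an assumption made for the sketch: `NEXT_TOKEN` is a hand-written lookup table rather than a neural network, tokens are whole words, and the toy `prefill` only tokenizes, whereas a real prefill also runs the model over the full prompt. The point is the shape of the loop, where generation proceeds one token at a time until an end marker or a length limit.

```python
# Hypothetical toy "model": maps the last token to the most likely next token.
NEXT_TOKEN = {"approve": "the", "the": "loan", "loan": "<eos>"}


def prefill(prompt: str) -> list[str]:
    """Turn the prompt into tokens (here, lowercase words) in a single pass."""
    return prompt.lower().split()


def decode(context: list[str], max_new_tokens: int = 10) -> list[str]:
    """Generate tokens one at a time, each conditioned on the latest token."""
    generated = []
    last = context[-1] if context else "<eos>"
    for _ in range(max_new_tokens):
        next_token = NEXT_TOKEN.get(last, "<eos>")
        if next_token == "<eos>":
            break
        generated.append(next_token)
        last = next_token
    return generated


tokens = prefill("Please approve")
output = decode(tokens)
```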

At its core, this process revolves around one fundamental task: predicting the next word. But decision-making goes beyond that. LLMs combine statistical reasoning, rule-based heuristics, and external tools to filter out key decision variables and propose optimized solutions [32, 34].

A real-world example highlights this process. In a sustainable infrastructure planning case study, LLMs provided tailored insights for different audiences. For domain experts, the model identified that Solution 404 increased renewable energy usage from 15% to 55%, cutting the Environmental Impact Score by over 54%. For mid-level staff, it showed that Solution 232 improved cost efficiency to 46 Units/$, enhancing the Environmental Impact Score from 1.004 to 0.709. For decision-makers, it explained how increasing durability from 25 to 35 years reduced environmental impacts while balancing higher costs with long-term benefits.

To handle high-volume requests, organizations often use techniques like model compression, quantization, and efficient memory management. These optimizations are essential for maintaining performance in real-time scenarios.

Once the LLM has processed the data and made decisions, the system prepares the results for immediate use.

The final phase focuses on delivering decisions in formats that are actionable, transparent, and compliant with user and system needs.

Output delivery must address various audiences simultaneously. For instance, a single decision might need to be presented as a detailed technical report for engineers, a summary dashboard for managers, and an automated action trigger for integrated systems. Modern pipelines achieve this through multi-format output generation, tailoring the information to fit specific use cases.

Automated reporting plays a critical role here, especially for industries like healthcare, finance, and legal services, where compliance is non-negotiable. The system logs decision rationales, confidence scores, and supporting data, creating an audit trail that satisfies regulatory requirements.
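An audit trail of this kind can be as simple as one structured record per decision. The sketch below is an assumption about what such a record might contain, based on the fields named above; the `DecisionRecord` class and its field names are illustrative, and real compliance requirements will dictate the actual schema.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    decision: str
    rationale: str
    confidence: float
    supporting_data: list[str]
    # Timestamp is recorded in UTC at creation time.
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def __post_init__(self) -> None:
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be between 0 and 1")


def to_audit_line(record: DecisionRecord) -> str:
    """Serialize one record as a single JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


# Hypothetical example values.
record = DecisionRecord(
    decision="approve",
    rationale="Income and credit history meet policy thresholds.",
    confidence=0.92,
    supporting_data=["doc-17", "doc-42"],
)
line = to_audit_line(record)
```

Writing one JSON object per line keeps the log easy to append to and easy to parse during a later review.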

In March 2025, Thoughtworks emphasized the importance of integrating evaluations into deployment pipelines to ensure consistent performance. These evaluations validate the model’s reliability before deployment and maintain quality throughout its lifecycle.

"Don’t treat evals as an afterthought - make them a cornerstone of your development process to build robust, user-focused AI applications."

Before decisions reach end users, real-time validation steps - like content moderation, accuracy checks, and compliance reviews - ensure the outputs meet quality standards. This layered approach minimizes the risk of errors making it to production.
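A layered validation step can be modeled as a list of small, independent checks that each either pass or return a reason for failure. The checks and the `BLOCKED_TERMS` list below are hypothetical placeholders; real moderation and compliance checks would be considerably more involved.

```python
from typing import Callable, Optional

# A check returns None when the output passes, or a short reason when it fails.
Check = Callable[[str], Optional[str]]

# Hypothetical policy list for illustration.
BLOCKED_TERMS = {"guaranteed returns", "medical diagnosis"}


def not_empty(output: str) -> Optional[str]:
    return None if output.strip() else "empty output"


def within_length(output: str, limit: int = 500) -> Optional[str]:
    return None if len(output) <= limit else f"longer than {limit} characters"


def no_blocked_terms(output: str) -> Optional[str]:
    lowered = output.lower()
    hits = sorted(term for term in BLOCKED_TERMS if term in lowered)
    return f"blocked terms: {', '.join(hits)}" if hits else None


def validate(output: str, checks: list[Check]) -> list[str]:
    """Run every check and collect failure reasons (empty list means pass)."""
    failures = []
    for check in checks:
        reason = check(output)
        if reason is not None:
            failures.append(reason)
    return failures


CHECKS = [not_empty, within_length, no_blocked_terms]
```

Collecting every failure, rather than stopping at the first, gives reviewers a complete picture of why an output was held back.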

Platforms like prompts.ai simplify this entire workflow. They offer tools for tokenization tracking, multi-modal processing, and automated reporting, all while maintaining a pay-as-you-go pricing model that scales with usage.

Still, many organizations face challenges in implementing these pipelines. A survey found that 55% of companies have yet to deploy an ML model, primarily due to the complexity of managing data workflows and deployment. However, those that successfully implement these three-phase pipelines often see major improvements in decision speed, consistency, and scalability.

Integrating LLM decision pipelines into your workflows takes careful planning, particularly when selecting orchestration tools and scaling strategies that align with your business growth.

Modern LLM orchestration frameworks offer modular solutions tailored to various needs. Among the most popular is LangChain, boasting 83,800 GitHub stars. It stands out for its modular design, prompt templates, and seamless integration with vector databases, making it ideal for complex AI workflows. LlamaIndex, with 31,200 stars, focuses on data integration and retrieval-augmented generation (RAG), offering connectors for over 160 data sources.

Choosing the right framework depends on your specific use case. LangChain is perfect for dynamic tool integration and agentic behavior, while LlamaIndex excels in workflows requiring efficient data retrieval from large document sets.

| Framework | GitHub Stars |
|---|---|
| LangChain | 83,800 |
| AutoGen | 38,700 |
| LlamaIndex | 31,200 |
| crewAI | 25,900 |
| Haystack | 19,000 |

Each framework has its strengths. LangChain supports modular workflows, AutoGen focuses on agent communication, LlamaIndex specializes in RAG applications, crewAI handles role-specific assignments, and Haystack provides semantic search and document retrieval.

However, experts warn against over-relying on these frameworks in production environments. Richard Li, an advisor on Agentic AI, notes:

"The value they have is it's an easier experience - you follow a tutorial and boom, you already have durable execution, and boom, you already have memory. But the question is, at what point are you going to be like, 'Now I'm running this in production, and it doesn't work very well?' That's the question."

To address this, platforms like prompts.ai take a different route. Instead of locking you into one framework, prompts.ai enables interoperable LLM workflows that integrate multiple models effortlessly. Its multi-modal capabilities handle everything from text processing to sketch-to-image prototyping, while vector database integration supports RAG applications without vendor lock-in.

Cost efficiency is another critical factor. Since tokenization directly affects costs - each token represents about four English characters - accurate token tracking ensures better budgeting and usage optimization.
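The four-characters-per-token rule of thumb is enough for a rough budget estimate. The sketch below applies it; the price used in the example is a made-up placeholder, not any provider's actual rate, and an exact count requires the tokenizer of the specific model.

```python
import math

CHARS_PER_TOKEN = 4  # rule of thumb for English text; real tokenizers vary


def estimate_tokens(text: str) -> int:
    """Approximate the token count from the character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def estimate_cost(prompt: str, expected_output_tokens: int,
                  price_per_1k_tokens: float) -> float:
    """Approximate the cost of one call, given an assumed flat per-token price."""
    total_tokens = estimate_tokens(prompt) + expected_output_tokens
    return total_tokens / 1000 * price_per_1k_tokens


# Hypothetical numbers: a 400-character prompt, 900 output tokens,
# and a placeholder price of 0.50 per 1,000 tokens.
cost = estimate_cost("a" * 400, expected_output_tokens=900, price_per_1k_tokens=0.50)
```

Many providers price input and output tokens differently, so a production estimate would track the two separately.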

For practical application, Vincent Schmalbach, a Web Developer and AI Engineer, advises simplicity:

"Most people overcomplicate LLM workflows. I treat each model like a basic tool - data goes in, something comes out. When I need multiple LLMs working together, I just pipe the output from one into the next."

A notable example from October 2024 involved integrating an AI code review action into a CI pipeline. This setup checked style conformance, security vulnerabilities, performance optimization, and documentation completeness, using an AI Code Review job configured on Ubuntu with an OpenAI key. This demonstrates how LLMs can enhance workflows without requiring a complete system overhaul.
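The "pipe the output from one into the next" advice quoted earlier maps directly onto function composition. In this sketch, `summarize` and `classify` are stub functions standing in for model calls; in a real pipeline each would wrap a request to an LLM.

```python
from functools import reduce
from typing import Callable

Step = Callable[[str], str]


def pipe(*steps: Step) -> Step:
    """Compose steps left to right: the output of one becomes the input of the next."""
    def run(text: str) -> str:
        return reduce(lambda acc, step: step(acc), steps, text)
    return run


# Stub standing in for a summarization model: keeps the first sentence.
def summarize(text: str) -> str:
    return text.split(".")[0].strip() + "."


# Stub standing in for a classification model: tags the summary with a label.
def classify(summary: str) -> str:
    label = "urgent" if "outage" in summary.lower() else "routine"
    return f"{label}: {summary}"


pipeline = pipe(summarize, classify)
result = pipeline("Outage reported in region A. Customers affected.")
```

Because every step shares the same string-in, string-out signature, steps can be reordered, replaced, or tested in isolation.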

A microservices architecture is often the best approach for integration. It isolates the LLM module, allowing it to scale independently. This ensures that updates or issues with the AI component won’t disrupt the entire system.

With orchestration frameworks in place, the next step involves scaling and maintaining these workflows effectively.

Scaling LLM decision pipelines requires thoughtful architecture and proactive maintenance. A good starting point is automated LLMOps workflows to manage tasks like data preprocessing and deployment.

Cloud platforms like AWS, Google Cloud, and Azure provide scalable infrastructure, but balancing cost and performance is key. Implementing CI/CD pipelines tailored for LLMs ensures that updates are tested and deployed efficiently while optimizing model performance.

Tools like Kubeflow, MLflow, and Airflow simplify the orchestration of LLM lifecycle components. They make troubleshooting easier, enhance scalability, and integrate seamlessly with existing systems.

Performance optimization is a must. Techniques such as model distillation, token budgeting, and reducing context length can improve efficiency. For high-stakes environments, incorporating human-in-the-loop feedback ensures validation and refinement of LLM outputs.

Monitoring and observability are essential. Key metrics like response times, token usage, error rates, and hallucination rates help identify issues early and guide continuous improvement.
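These metrics can be aggregated from per-call logs with very little code. The `CallLog` fields below are an assumed log schema for illustration; in particular, how a response gets flagged as a hallucination is left to an upstream evaluator.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class CallLog:
    latency_ms: float
    tokens: int
    error: bool
    hallucination_flagged: bool  # assumed to be set by an upstream evaluator


def summarize_calls(calls: list[CallLog]) -> dict[str, float]:
    """Aggregate per-call logs into the headline monitoring metrics."""
    if not calls:
        raise ValueError("no calls to summarize")
    n = len(calls)
    return {
        "avg_latency_ms": mean(c.latency_ms for c in calls),
        "total_tokens": sum(c.tokens for c in calls),
        "error_rate": sum(c.error for c in calls) / n,
        "hallucination_rate": sum(c.hallucination_flagged for c in calls) / n,
    }


# Hypothetical sample of four logged calls.
calls = [
    CallLog(100.0, 10, False, False),
    CallLog(200.0, 20, False, True),
    CallLog(300.0, 30, True, False),
    CallLog(400.0, 40, False, True),
]
report = summarize_calls(calls)
```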

Scaling also brings heightened security requirements. Best practices include input sanitization, API key protection, and encryption of LLM logs. Many industries also require compliance measures, such as filtering for PII or offensive content and labeling AI-generated responses.
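As a minimal illustration of PII filtering, the sketch below redacts email addresses and US-style phone numbers with regular expressions before text is logged or sent to a model. The two patterns are simplified assumptions and will miss many real-world formats; dedicated PII detection tools are more thorough.

```python
import re

# Simplified patterns for illustration; they do not cover every valid format.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact_pii(text: str) -> str:
    """Replace matched emails and phone numbers with placeholder tags."""
    text = EMAIL.sub("[EMAIL]", text)
    return US_PHONE.sub("[PHONE]", text)


redacted = redact_pii("Contact jane.doe@example.com or 555-123-4567.")
```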

Starting small and scaling gradually is often the most effective strategy. By focusing on a narrow use case, teams can deploy faster, learn from initial results, and expand based on performance. Human oversight and approval gates for critical changes ensure a controlled scaling process.

Continuous improvement is vital. A/B testing of prompts and diverse test inputs, along with feedback mechanisms, helps track accuracy and measure the impact on development speed. This ensures the system evolves positively over time.

Cost management becomes increasingly important as usage grows. Pay-as-you-go platforms like prompts.ai align costs with actual usage, avoiding unnecessary overhead. Coupled with token tracking, this approach provides transparency into cost drivers and highlights areas for optimization.

Finally, the decision to use open-source models like Mistral, Falcon, or LLaMA versus commercial APIs like OpenAI, Anthropic, or Cohere affects latency, compliance, customization, and costs. Each option has trade-offs that become more pronounced as systems scale.

LLM decision pipelines are reshaping industries by delivering practical solutions where speed, precision, and scalability are critical. Let’s dive into some of the key areas where these pipelines are making a real impact.

A staggering 94% of organizations consider business analytics essential for growth, with 57% actively leveraging data analytics to shape their strategies. LLM pipelines excel at turning raw data into actionable insights by processing unstructured inputs like emails and support tickets alongside structured data from databases. This creates a comprehensive view that helps businesses make smarter decisions.

Take Salesforce, for example. They use LLMs to predict customer churn by analyzing historical purchase patterns and customer support interactions. This enables them to pinpoint at-risk customers and take proactive steps to retain them. Their Einstein GPT integrates multiple LLMs to tackle CRM tasks such as forecasting and predictive analytics.

What sets LLMs apart in predictive analytics is their ability to detect patterns, correlations, and anomalies that traditional models might overlook. For instance, GPT-4 has demonstrated a 60% accuracy rate in financial forecasting, outperforming human analysts.

"In essence, an AI data pipeline is the way an AI model is fed, delivering the right data, at the right time, in the right format to power intelligent decision making." – David Lipowitz, Senior Director, Sales Engineering, Matillion

However, success depends on maintaining high-quality data through rigorous cleansing and validation processes. Businesses must also invest in robust infrastructure, such as cloud storage or distributed computing, to handle large-scale, real-time data processing. Regular audits are crucial to identify and address biases, ensuring that human oversight keeps the outcomes fair and relevant.

LLM pipelines don’t just enhance analytics - they also revolutionize customer support.

In customer support, LLM decision pipelines are delivering tangible cost savings and operational efficiencies. For example, retailers using chatbots have reported a 30% reduction in customer service costs. Delta Air Lines’ "Ask Delta" chatbot helps customers with tasks like flight check-ins and luggage tracking, which has led to a 20% drop in call center volume.

Implementing these systems requires careful planning. One broadcaster successfully launched a chatbot using AWS to assist with questions about government programs by pulling information from official documents. Multi-agent systems, where individual LLMs handle specific tasks, help reduce latency and improve performance. Techniques like Retrieval Augmented Generation (RAG) further enhance accuracy by incorporating external knowledge into responses.

To ensure reliability, businesses must continuously monitor these systems and establish feedback loops to address anomalies quickly. Techniques like canary deployments and shadow testing are also effective for mitigating risks during implementation.

Beyond customer support, LLM pipelines are driving advancements in content creation and workflow automation.

Platforms like prompts.ai are enabling businesses to streamline operations with multi-modal workflows that simplify integration and cost management. Content generation is one area where LLM pipelines are making waves. According to McKinsey, generative AI could add $240–$390 billion annually to the retail sector, with companies like The Washington Post already using LLMs to draft articles, suggest headlines, and surface relevant information.

Shopify uses an LLM-powered system to generate release notes from code changes, significantly speeding up deployment processes. Surveys show that developers using AI tools see a 55% boost in coding efficiency. Similarly, EY has deployed its private LLM, EYQ, to 400,000 employees, increasing productivity by 40%.

Other notable applications include Amazon’s use of LLMs for sentiment analysis to gauge customer satisfaction and JPMorgan Chase’s deployment of LLMs to classify documents like loan applications and financial statements.

"LLMs aren't just generating text - they're integrating core intelligence across enterprise systems." – Sciforce

Industry-specific LLMs like MedGPT for healthcare and LegalGPT for law are also emerging, delivering precise insights and reducing error rates. Looking ahead, future systems will seamlessly process text, images, video, and audio, enabling deeper analyses and even incorporating simulation tools.

Platforms like prompts.ai continue to support these advancements with flexible workflows that handle everything from text processing to sketch-to-image prototyping. Their pay-as-you-go pricing model and token tracking provide cost transparency, making these tools accessible as businesses scale.

These examples highlight how LLM pipelines are redefining how industries operate, paving the way for even greater innovation.

LLM decision pipelines are reshaping how businesses operate by offering fast, data-driven solutions. For instance, JPMorgan's AI system handles over 12,000 transactions per second, boosting fraud detection accuracy by nearly 50%. In healthcare, LLMs can process an astonishing 200 million pages of medical data in under three seconds. This kind of speed and scale is revolutionizing decision-making across industries.

However, integrating LLMs is no walk in the park. It’s a complex process that spans multiple disciplines. As Pritesh Patel explains:

"LLM integration is not a plug-and-play process - it's a multidisciplinary endeavor that touches on architecture, security, ethics, product design, and business strategy. Done right, LLMs can drastically improve user experience, reduce costs, and open new opportunities for innovation."

This complexity means businesses need a thoughtful and strategic approach. Starting small is key - focus on use cases that reduce support costs or organize unstructured data. Incorporating human-in-the-loop feedback and tracking metrics like response time, token usage, and user satisfaction can help refine these systems over time.

The financial impact of LLM pipelines is hard to ignore. Amazon’s recommendation system, for example, generates nearly 35% of its total sales. Predictive maintenance powered by LLMs can cut equipment downtime by up to 50% and extend machine life by 20–40%. Alexander Fish from 4Degrees highlights how LLMs save time and improve efficiency:

"LLMs can automate deal sourcing, initial screening of pitch decks, market and competitive research, document summarization, drafting investment memos, and due diligence tasks, saving analysts 5–10 hours a week and enabling faster, data-driven decisions."

Platforms like prompts.ai are making it easier for businesses to adopt LLM-powered pipelines. Their tools - such as multi-modal workflows, token tracking for cost transparency, and pay-as-you-go pricing - allow companies to experiment without hefty upfront investments.

LLM-powered decision pipelines bring a new level of speed and precision to data processing by analyzing massive datasets in real time. This capability allows industries like finance and healthcare to make faster, well-informed decisions while cutting down on human errors.

In the finance sector, these systems deliver detailed insights for tasks such as market forecasting and risk evaluation. Meanwhile, in healthcare, they assist with clinical decision-making by providing data-backed recommendations, leading to better patient care and more efficient resource management. By reducing biases and errors, LLM pipelines enable smarter, more dependable choices in these critical fields.

When organizations adopt LLM decision pipelines, they often face a range of challenges. These can include hefty implementation costs, ensuring output accuracy and reliability, managing data privacy concerns, and navigating technical issues like scalability and hardware requirements.

To address these obstacles, businesses can take several steps. They can work on improving model performance to reduce costs, establish rigorous validation and testing processes to boost accuracy, and implement strong data security measures to safeguard sensitive information. On top of that, investing in scalable infrastructure and keeping models updated ensures the pipeline stays efficient and aligned with evolving needs.

Prompt management plays a key role in maintaining consistency and clarity when structuring prompts within large language model (LLM) decision workflows. By carefully organizing and fine-tuning prompts, it minimizes variability in responses, ensuring outputs are more predictable and dependable.

On the other hand, output validation adds another layer of reliability by assessing the accuracy, safety, and relevance of the generated content. This step helps catch and address mistakes, misinformation, or inappropriate material before they impact decision-making processes.

When combined, these practices create a solid foundation of trust in LLM-powered systems, ensuring the generated outputs are both reliable and aligned with user needs.