Evaluating the outputs of generative AI models is critical for ensuring quality, reliability, and alignment with business objectives. Without a structured evaluation approach, inconsistencies, hallucinations, and biases can lead to poor performance, compliance risks, and loss of trust. Here’s what you need to know:

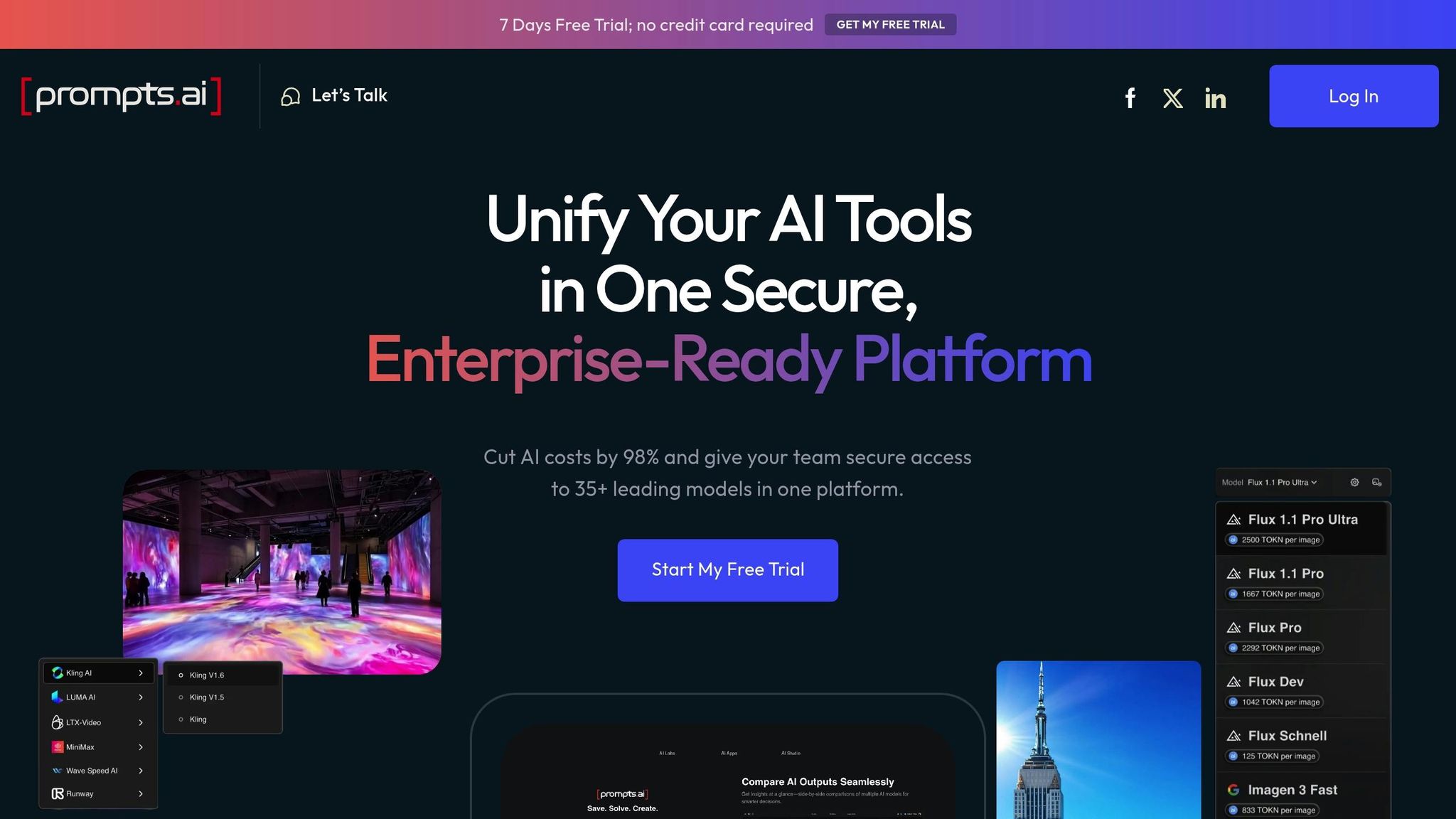

Platforms like Prompts.ai simplify this process by offering tailored workflows, side-by-side model comparisons, and structured evaluations across 35+ leading models. With these tools, organizations can confidently deploy AI solutions that meet high standards and deliver measurable results.

Five metrics offer a structured way to assess the performance of large language models (LLMs) across applications: factuality and correctness; bias, toxicity, and ethics; clarity, usefulness, and relevance; hallucination rate; and task completion and accuracy.

Factuality measures how well the output aligns with verified facts and established knowledge. This is particularly important when LLMs handle tasks like answering customer queries, generating reports, or providing information that influences decisions. Correctness, on the other hand, extends to logical reasoning, accurate calculations, and adherence to specified guidelines.

To evaluate factuality effectively, use ground truth datasets containing verified information tailored to your application. For example, in customer support, this might include product details, pricing, and company policies. In content creation, fact-checking against reliable sources or industry databases is crucial.

Assessment methods include comparing outputs to ground truth datasets, using test sets with definitive answers, and applying multi-step verification processes. These steps help uncover subtle inaccuracies that might otherwise go undetected.
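As a rough illustration of comparing outputs to a ground truth dataset, the sketch below checks whether each verified answer appears in the model's response. The function names, the sample questions, and the simple containment check are assumptions made for this example; a production check would likely need stricter matching and a multi-step verification pass.

```python
import re


def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace."""
    text = re.sub(r"[^\w\s]", "", text.lower())
    return " ".join(text.split())


def factuality_report(outputs: dict[str, str], ground_truth: dict[str, str]) -> dict:
    """Flag every question whose verified answer is missing from the output.

    Containment is a crude proxy: it catches wrong prices or dates, but it
    cannot tell a correct paraphrase from an incorrect one.
    """
    mismatches = []
    for question, expected in ground_truth.items():
        actual = outputs.get(question, "")
        if normalize(expected) not in normalize(actual):
            mismatches.append(question)
    total = len(ground_truth)
    accuracy = (total - len(mismatches)) / total if total else 0.0
    return {"accuracy": accuracy, "mismatches": mismatches}


# Hypothetical customer-support ground truth and model outputs.
ground_truth = {
    "What is the return window?": "30 days",
    "What does the Pro plan cost?": "$49 per month",
}
outputs = {
    "What is the return window?": "You can return items within 30 days.",
    "What does the Pro plan cost?": "The Pro plan costs $59 per month.",
}
print(factuality_report(outputs, ground_truth))
```

Here the second response states the wrong price, so it is listed as a mismatch and accuracy comes out at 0.5.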

Bias detection identifies instances of unfair treatment or representation, while toxicity assessment focuses on spotting offensive, harmful, or inappropriate content. These metrics are critical for protecting brand reputation and adhering to ethical AI standards.

Bias can appear as demographic stereotypes or insensitive representations. Testing outputs using diverse prompts across various scenarios helps reveal hidden biases.
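One way to build diverse prompts is to hold a template fixed and vary only the demographic details, then compare how the outputs are scored. The sketch below is a minimal example under that assumption; the template, the names, and the idea of comparing a per-group score (for instance, a sentiment score from whatever scorer you use) are illustrative choices, not a prescribed method.

```python
from itertools import product


def build_variants(template: str, slots: dict[str, list[str]]) -> list[dict]:
    """Fill the template with every combination of slot values."""
    keys = list(slots)
    variants = []
    for combo in product(*(slots[key] for key in keys)):
        fill = dict(zip(keys, combo))
        variants.append({"fill": fill, "prompt": template.format(**fill)})
    return variants


def max_score_gap(scores_by_group: dict[str, float]) -> float:
    """Largest difference between any two groups' average scores."""
    return max(scores_by_group.values()) - min(scores_by_group.values())


# Hypothetical template: only the name and role change between prompts.
variants = build_variants(
    "Write a short reference letter for {name}, a {role}.",
    {"name": ["Emily", "Jamal"], "role": ["nurse", "engineer"]},
)
for variant in variants:
    print(variant["prompt"])
```

A large gap between groups on otherwise identical prompts is a signal to look closer, not proof of bias on its own; the flagged outputs still need human review.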

For toxicity, outputs are screened for hate speech, harassment, explicit language, and other harmful content. Use automated tools alongside human reviews to detect nuanced issues. Regular testing with challenging prompts can uncover vulnerabilities before they affect users.

Ethical considerations also involve ensuring that outputs respect user privacy, avoid manipulation, and present balanced perspectives on sensitive topics. Outputs should include disclaimers or context when addressing controversial issues to maintain transparency and fairness.

Clarity evaluates whether the response is easy to understand and actionable. Usefulness measures how well the output helps users achieve their goals, and relevance determines how closely the response aligns with the given question or context.

Clarity can be assessed by examining structure, vocabulary, and flow, often using readability scores. For business applications, ensure technical terms are explained clearly and that instructions are actionable.
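The Flesch Reading Ease formula is one widely used readability score. The sketch below computes it with a simple vowel-group heuristic for counting syllables, which is an approximation chosen for this example; dedicated readability libraries use more careful syllable counting.

```python
import re


def count_syllables(word: str) -> int:
    """Approximate syllables as runs of vowels (a rough heuristic)."""
    groups = re.findall(r"[aeiouy]+", word.lower())
    return max(1, len(groups))


def flesch_reading_ease(text: str) -> float:
    """206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(word) for word in words)
    words_per_sentence = len(words) / len(sentences)
    syllables_per_word = syllables / len(words)
    return 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word


plain = "Reset your password. Then log in again."
dense = "Authentication credential reinitialization necessitates subsequent reauthorization."
print(round(flesch_reading_ease(plain), 1))
print(round(flesch_reading_ease(dense), 1))
```

Higher scores indicate easier text, so the plain instruction should score well above the dense one. Readability scores only capture sentence and word length; they say nothing about whether the instructions are correct or actionable.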

Usefulness depends on understanding user needs and tracking how well responses fulfill them. Metrics like follow-up questions, satisfaction scores, or task completion rates can highlight gaps in usefulness. If users frequently seek clarification, this indicates room for improvement.

Relevance focuses on how well the response matches the original query. Scoring systems can help measure the alignment of outputs with the context provided, ensuring responses are on-topic and concise. In conversational AI, maintaining contextual relevance is vital, as responses should build logically on previous interactions.

Hallucinations occur when LLMs generate plausible-sounding but false or fabricated information. This metric is especially critical in enterprise settings, where accuracy impacts decisions and trust.

To detect hallucinations, fact-check outputs against verified sources and track how often fabricated content appears. Patterns of hallucination might include fake citations, incorrect historical dates, or made-up statistics. Develop evaluation datasets specifically designed to test for these issues, including prompts that challenge the model's knowledge boundaries.

Measuring hallucination rates involves calculating the percentage of responses containing fabricated information within a representative sample. Since hallucination patterns can vary across domains, continuous monitoring is essential.
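The calculation itself is simple once a sample has been reviewed and labeled. The sketch below assumes each reviewed response carries a `domain` tag and a boolean `hallucinated` label assigned by a reviewer or fact-checking step; both field names are hypothetical.

```python
from collections import defaultdict


def hallucination_rates(samples: list[dict]) -> dict[str, float]:
    """Percentage of responses flagged as hallucinated, per domain and overall.

    Assumes no domain is itself named "overall".
    """
    counts = defaultdict(lambda: [0, 0])  # key -> [hallucinated, total]
    for sample in samples:
        for key in (sample["domain"], "overall"):
            counts[key][0] += int(sample["hallucinated"])
            counts[key][1] += 1
    return {key: 100.0 * flagged / total for key, (flagged, total) in counts.items()}


# Hypothetical labeled sample.
samples = [
    {"domain": "legal", "hallucinated": True},
    {"domain": "legal", "hallucinated": False},
    {"domain": "finance", "hallucinated": False},
    {"domain": "finance", "hallucinated": False},
]
print(hallucination_rates(samples))
```

Breaking the rate out by domain makes it easier to see where fabrication concentrates, which an overall figure alone would hide.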

Task completion measures whether the AI fulfills the specific request or objective outlined in the prompt. Accuracy assesses how closely the output matches the expected results or adheres to the given requirements.

To evaluate task completion and accuracy, compare outputs to expected results and calculate success rates and error frequencies. Clearly define success criteria for each use case. For instance, in customer service, a task might be considered complete when the user's query is fully addressed and any required follow-up actions are identified. In content generation, success might depend on meeting specific length, tone, or formatting requirements.

Accuracy scoring should reflect both complete and partial successes. For example, a response that addresses 80% of a multi-part question provides more value than one that misses entirely. Weighted scoring systems can capture this nuance, balancing credit for partial correctness with the need for high standards.
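A weighted scoring system of this kind might look like the sketch below. The weights, the 0.9 pass threshold, and the function names are assumptions for illustration; the right values depend on the use case.

```python
def weighted_score(parts: list[tuple[float, bool]]) -> float:
    """Fraction of total weight earned; each part is (weight, addressed)."""
    total = sum(weight for weight, _ in parts)
    if total <= 0:
        raise ValueError("total weight must be positive")
    earned = sum(weight for weight, addressed in parts if addressed)
    return earned / total


def passes(parts: list[tuple[float, bool]], threshold: float = 0.9) -> bool:
    """Partial credit is recorded, but only scores at or above the threshold pass."""
    return weighted_score(parts) >= threshold


# Five equally weighted sub-questions, four of them addressed.
equal = [(1.0, True)] * 4 + [(1.0, False)]
print(weighted_score(equal), passes(equal))

# Same idea, but the missed sub-question is weighted as the most important one.
critical_missed = [(1.0, True)] * 4 + [(4.0, False)]
print(weighted_score(critical_missed), passes(critical_missed))
```

Keeping the score and the pass decision separate lets a team track incremental improvement while still holding releases to a strict bar.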

These five metrics provide a well-rounded framework for evaluating LLM performance. The next section will explore practical ways to apply these metrics in real-world scenarios.

Structured evaluation methods ensure a consistent and reliable way to measure the performance of large language models (LLMs). These methods range from automated scoring systems to human oversight, ensuring quality control across various applications.

Reference-based evaluation involves comparing LLM outputs to predefined "golden" answers or datasets. This method works well for tasks with clear, objective answers, such as solving math problems, answering factual questions, or translating text. For instance, metrics like BLEU scores for translation or exact match percentages for factual queries provide measurable results. In customer service scenarios, generated responses might be compared against a database of approved answers to check for consistency and adherence to known information.
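For factual queries, exact match can be computed in a few lines. The sketch below also includes token-level F1, a common companion metric that is not mentioned above but gives partial credit when the answer is right and the wording differs. Both functions use a simple lowercase, whitespace-split normalization chosen for this example.

```python
from collections import Counter


def exact_match(prediction: str, reference: str) -> bool:
    """True when the two strings are identical after trimming and lowercasing."""
    return prediction.strip().lower() == reference.strip().lower()


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall against the golden answer."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        return float(pred_tokens == ref_tokens)
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


# Hypothetical golden answers paired with model outputs.
pairs = [("Paris", "paris"), ("paris is the capital", "paris"), ("Lyon", "paris")]
em_rate = 100.0 * sum(exact_match(p, r) for p, r in pairs) / len(pairs)
print(f"exact match: {em_rate:.1f}%")
print([round(token_f1(p, r), 2) for p, r in pairs])
```

Exact match is strict and easy to interpret, while token F1 rewards a verbose but correct answer; reporting both gives a fuller picture than either alone.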

On the other hand, reference-free evaluation assesses outputs without relying on predefined answers. This approach is more suitable for tasks like creative writing, brainstorming, or open-ended questions where multiple valid responses are possible. Instead of focusing on a single "correct" answer, evaluators consider factors such as coherence, relevance, and usefulness. This method often uses trained evaluator models or human judgment to assess the quality of outputs. For example, when testing creative writing tools, evaluators might judge the creativity and relevance of the generated content rather than its factual accuracy.

The choice between these methods depends on the specific use case. For instance, financial reporting or medical information systems demand reference-based evaluation for accuracy, while marketing content generation or creative writing tools benefit from reference-free evaluation to capture nuanced qualities like tone and style.

Many organizations adopt hybrid approaches, combining both methods. Reference-based evaluation might handle factual accuracy, while reference-free methods focus on aspects like creativity or tone. This combination ensures a well-rounded assessment of LLM performance, with human oversight often adding an extra layer of refinement.

While automated metrics provide consistency, human oversight addresses more complex, context-sensitive issues. Human-in-the-loop verification blends the efficiency of automated systems with the nuanced understanding that only humans can bring to the table.

This approach is particularly valuable in domain-specific applications like medical AI, legal document analysis, or financial advisory tools, where subject matter expertise is crucial. Human experts can identify industry-specific errors or subtleties that automated systems might miss.

To scale human involvement, organizations use sampling strategies such as random, stratified, or confidence-based sampling. For instance, outputs flagged with lower confidence by automated systems may be prioritized for human review. Additionally, expert panels are often employed for controversial topics or edge cases, helping to refine evaluation rubrics for new or complex applications.
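The three sampling strategies can be sketched with the standard library. The example assumes each output record carries a `confidence` score from an automated evaluator and a `topic` label to stratify on; both field names and the 0.6 threshold are hypothetical.

```python
import random
from collections import defaultdict


def random_sample(items: list[dict], k: int, seed: int = 0) -> list[dict]:
    """Uniform sample of up to k items."""
    return random.Random(seed).sample(items, min(k, len(items)))


def stratified_sample(items: list[dict], key: str, per_group: int, seed: int = 0) -> list[dict]:
    """Sample up to per_group items from every group, so rare groups are covered."""
    rng = random.Random(seed)
    groups = defaultdict(list)
    for item in items:
        groups[item[key]].append(item)
    picked = []
    for members in groups.values():
        picked.extend(rng.sample(members, min(per_group, len(members))))
    return picked


def confidence_based(items: list[dict], threshold: float) -> list[dict]:
    """Select every item the automated evaluator was unsure about."""
    return [item for item in items if item["confidence"] < threshold]


outputs = [
    {"id": 1, "topic": "billing", "confidence": 0.95},
    {"id": 2, "topic": "billing", "confidence": 0.40},
    {"id": 3, "topic": "legal", "confidence": 0.55},
    {"id": 4, "topic": "legal", "confidence": 0.90},
]
print([item["id"] for item in confidence_based(outputs, threshold=0.6)])
print(len(stratified_sample(outputs, key="topic", per_group=1)))
```

Fixing the random seed keeps the review queue reproducible, which helps when two reviewers need to audit the same sample.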

Human feedback also drives continuous improvement loops. By flagging recurring errors or patterns, human reviewers contribute to refining evaluation criteria and improving training data. This feedback ensures that LLMs adapt to new types of queries and evolving user needs.

To keep costs manageable, human review is typically reserved for high-impact decisions, controversial content, or cases where automated confidence scores fall below a set threshold. This targeted approach leverages human expertise effectively while maintaining scalability.

Standard evaluation methods often overlook how LLMs handle unusual or challenging scenarios. Testing edge cases helps uncover weaknesses and ensures models perform reliably under less predictable conditions.

Adversarial prompting is one way to test vulnerabilities, such as attempts to bypass safety features, generate biased content, or produce fabricated information. Regular adversarial testing helps identify and address these issues before they affect users.

Stress testing with volume and complexity pushes LLMs to their limits by using long prompts, rapid-fire questions, or tasks requiring the processing of conflicting information. This type of testing reveals where performance starts to degrade and helps establish operational boundaries.

Domain boundary testing examines how well LLMs respond to prompts outside their area of expertise. For example, a model designed for medical applications might be tested with prompts that gradually shift into unrelated fields. Understanding these boundaries helps set realistic expectations and implement safeguards.

Contextual stress testing evaluates how well LLMs maintain coherence and accuracy during extended conversations or multi-step tasks. This is especially useful for applications that require sustained context retention.

Platforms like Prompts.ai enable systematic edge case testing by allowing teams to design structured workflows that automatically generate challenging scenarios and apply consistent evaluation standards. This automation makes it easier to regularly conduct stress tests, catching potential issues before deployment.

Synthetic data generation also supports edge case testing by creating diverse, challenging scenarios at scale. LLMs can even generate their own test cases, offering a broader range of edge cases than human testers might consider. This approach ensures comprehensive coverage and helps teams identify vulnerabilities across different types of inputs.

The insights gained from these tests guide both model selection and prompt engineering. Teams can choose models that are better equipped for specific challenges and refine prompts to minimize errors, ensuring robust performance across various applications.

Prompts.ai streamlines the evaluation of large language models (LLMs) by merging access to over 35 leading models into a single, secure platform. This unified approach eliminates the need for juggling multiple tools, making it easier for teams - from Fortune 500 companies to research institutions - to conduct assessments while maintaining compliance and reducing complexity.

Prompts.ai offers flexible workflows that allow teams to design evaluation processes that align with their specific internal standards. This structured approach ensures consistent and repeatable assessments of LLM outputs. To help organizations stay on budget, the platform includes integrated cost tracking, providing real-time insights into evaluation expenses. These features create an environment where cross-model comparisons are both efficient and effective.

The platform's interface makes it simple to compare LLMs directly. Users can send the same prompt to multiple models and evaluate their responses based on predefined criteria. With built-in governance tools and transparent cost reporting, teams can monitor performance over time and make data-driven decisions that suit their unique operational goals.
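The general pattern of sending one prompt to several models and scoring the responses against predefined criteria can be sketched independently of any platform. The code below is not the Prompts.ai API; the model callables are stand-in stubs and the criteria are hypothetical scoring functions, all assumed for illustration.

```python
from typing import Callable


def compare_models(
    prompt: str,
    models: dict[str, Callable[[str], str]],
    criteria: dict[str, Callable[[str], float]],
) -> list[dict]:
    """Run the same prompt through every model and score each response."""
    rows = []
    for name, generate in models.items():
        response = generate(prompt)
        scores = {criterion: score(response) for criterion, score in criteria.items()}
        rows.append({"model": name, "response": response, "scores": scores})
    return rows


# Stub "models": in practice each callable would wrap a real model client.
models = {
    "model_a": lambda prompt: "Refunds are accepted within 30 days.",
    "model_b": lambda prompt: "Please contact support for details.",
}
# Hypothetical criteria returning a score between 0 and 1.
criteria = {
    "mentions_policy": lambda response: 1.0 if "30 days" in response else 0.0,
    "concise": lambda response: 1.0 if len(response.split()) <= 10 else 0.0,
}

for row in compare_models("What is the refund policy?", models, criteria):
    print(row["model"], row["scores"])
```

Because every model sees the same prompt and the same criteria, differences in the scores reflect the models rather than the test setup.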

Building on the core metrics and methods discussed earlier, selecting the right evaluation strategy depends on your specific use case, available resources, and quality expectations. It's essential to weigh different methodologies to strike a balance between accuracy and efficiency, ensuring evaluations remain reliable and straightforward.

Each evaluation method has its strengths and limitations, making them suitable for different scenarios. The table below outlines key aspects of common approaches:

| Method | Pros | Cons | Best For |
|---|---|---|---|
| Reference-Based | High accuracy, objective scoring, consistent benchmarks | Requires ground truth data, limited to known scenarios | Academic research, standardized testing, compliance checks |
| Reference-Free | Flexible, scalable, handles novel scenarios | More subjective, harder to validate, needs careful prompt design | Creative tasks, open-ended responses, exploratory testing |
| Human Scoring | Provides nuanced judgment, contextual understanding, catches subtle issues | Time-intensive, costly, potential reviewer inconsistency | High-stakes applications, complex reasoning tasks, final quality checks |
| Automated Scoring | Fast, consistent, cost-effective, handles large volumes | May miss subtle issues, lacks contextual understanding | Initial screening, continuous monitoring, large-scale testing |
| Binary Scales | Simple, fast decisions, clear pass/fail criteria | Lacks granularity, oversimplifies complex outputs | Safety checks, compliance screening, basic quality gates |
| Continuous Scales | Detailed feedback, tracks incremental improvements, provides rich data | More complex to implement, requires careful calibration | Performance optimization, model comparison, detailed analysis |

In practice, hybrid approaches often deliver the best results. For example, many organizations start with automated screening to eliminate obvious failures and then apply human review to borderline cases. This combination ensures efficiency without compromising quality.
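A hybrid pipeline of this kind reduces to a routing rule on the automated score. In the sketch below, the two thresholds (0.3 and 0.8) and the route names are assumptions for illustration and would need calibration against human judgments.

```python
from collections import Counter


def triage(score: float, reject_below: float = 0.3, accept_above: float = 0.8) -> str:
    """Route an output by its automated quality score (0 to 1)."""
    if score < reject_below:
        return "reject"  # obvious failure, filtered out automatically
    if score >= accept_above:
        return "accept"  # clearly good enough, no human time spent
    return "human_review"  # borderline case, sent to a reviewer


# Hypothetical automated scores for a batch of outputs.
scores = [0.10, 0.45, 0.92, 0.79, 0.85, 0.30]
routes = Counter(triage(score) for score in scores)
print(dict(routes))
```

Widening the band between the two thresholds sends more outputs to reviewers and raises cost; narrowing it trusts the automated score more. Tracking how often reviewers overturn the borderline cases is one way to decide where the thresholds belong.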

To manage increasing volume and complexity, it's crucial to design workflows that scale while maintaining high-quality standards.

Taking a structured approach to evaluating large language models (LLMs) ensures dependable AI workflows that consistently meet business objectives. Organizations adopting systematic evaluation processes gain measurable improvements in model performance, lower operational risks, and stronger alignment between AI outputs and their goals. This foundation supports the scalable and precise evaluation methods discussed earlier.

Moving from ad-hoc testing to structured evaluation frameworks replaces guesswork with evidence in AI deployment. Teams can make informed, data-backed decisions about model selection, prompt refinement, and quality benchmarks. This becomes increasingly important as AI expands across departments and use cases.

With these evaluation metrics in place, Prompts.ai offers a practical and efficient solution for scalable assessments. The platform simplifies evaluations by providing tools for custom scoring flows, edge case simulations, and performance tracking across multiple leading models - all within a unified system.

The benefits of precise evaluations extend well beyond immediate quality gains. Organizations with robust frameworks see a higher return on investment (ROI) by identifying the models and prompts that excel at specific tasks. Compliance becomes more straightforward as every AI interaction is tracked and measured against set criteria. Continuous performance optimization replaces reactive fixes, enabling teams to catch and address potential issues before they impact users.

Perhaps most importantly, structured evaluations make AI more accessible throughout an organization. When evaluation criteria are clear and consistently applied, teams don’t need deep technical expertise to assess the quality of outputs or make informed deployment decisions. This clarity encourages adoption while maintaining the high standards required for enterprise applications.

Evaluating the outputs of generative AI models is no small task. Challenges like factual inaccuracies, bias, hallucinations, and inconsistent responses can arise due to the unpredictable behavior of large language models (LLMs).

A structured approach is key to tackling these issues effectively. Combining various metrics - such as factual accuracy, clarity, and practical usefulness - with human judgment provides a more balanced and thorough evaluation. Additionally, testing models under edge cases and realistic scenarios using defined protocols can uncover weaknesses and improve the reliability of their responses. These strategies help make evaluations more precise and actionable, paving the way for better performance.

Prompts.ai makes evaluating LLM outputs straightforward with its structured scoring tools and customizable evaluation rubrics. These features, combined with capabilities like batch prompt execution and agent chaining, enable users to tackle complex tasks by breaking them into smaller, easier-to-handle steps. This approach ensures evaluations remain consistent, scalable, and accurate.

With support for over 35 LLMs, the platform provides a flexible solution for comparing and assessing outputs from various models. It’s particularly suited for research labs, AI trainers, and QA leads who need dependable methods to evaluate key aspects such as factual accuracy, clarity, and bias - while also working to reduce hallucination rates.

Balancing automated tools with human review is essential for thoroughly evaluating outputs from large language models (LLMs). Automated tools are unmatched in processing vast amounts of data quickly, spotting patterns, and flagging responses that fall short in quality. However, they can miss finer details, such as subtle biases, contextual nuances, or intricate inaccuracies.

This is where human judgment steps in. Humans bring critical thinking and a deeper grasp of context, ensuring that outputs are not just accurate but also fair and practical. By combining the efficiency of automation with the thoughtful analysis of human oversight, this approach ensures evaluations are both dependable and thorough. Together, they strike the right balance for assessing LLM performance effectively.