Large Language Models (LLMs) and knowledge graphs are transforming how we interact with data. By combining LLMs' natural language processing capabilities with the structured data of knowledge graphs, users can perform complex queries without technical expertise. Here's the key takeaway:

This integration makes data more accessible and actionable, but challenges like high resource demands, prompt quality, and maintaining alignment between LLMs and graph structures require careful planning.

LLMs simplify these interactions by translating everyday language into precise, structured queries. Because users no longer have to learn specialized query languages, knowledge graphs become accessible to a much broader audience.

One of the most transformative abilities of LLMs is converting natural language into formal query languages like SPARQL. As Sir Tim Berners-Lee aptly put it:

"Trying to use the Semantic Web without SPARQL is like trying to use a relational database without SQL. SPARQL makes it possible to query information from databases and other diverse sources in the wild, across the Web."

LLMs bridge the gap by taking user-friendly inputs, understanding intent, identifying relevant entities, and generating structured queries tailored to the graph's schema.

Combining template-based methods with Retrieval-Augmented Generation (RAG) improves query accuracy. Instead of generating a query from scratch, the system retrieves the query template that best matches the question and fills in its slots. For example, the jina-embeddings-v3 model reached 0.81 accuracy and a Matthews Correlation Coefficient (MCC) of 0.8 on template retrieval tasks. Similarly, SQL-based semantic layers help LLMs produce efficient and accurate SQL queries, which simplifies translating human language into complex query syntax.
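The following is a minimal sketch of the retrieval step, built on several assumptions. The two SPARQL templates, the `ex:` prefix, and the helper names are hypothetical. A simple bag-of-words cosine similarity stands in for a real embedding model such as jina-embeddings-v3. The entity (here, a book title) is assumed to have been extracted already.

```python
import math
import re
from collections import Counter

PREFIX = "PREFIX ex: <http://example.org/>\n"  # hypothetical namespace

# Hypothetical templates, each paired with an example question.
# A real system would embed these questions with an embedding model.
TEMPLATES = [
    {"question": "who wrote the book",
     "sparql": 'SELECT ?author WHERE {{ ?book ex:title "{title}" ; ex:author ?author . }}'},
    {"question": "when was the book published",
     "sparql": 'SELECT ?year WHERE {{ ?book ex:title "{title}" ; ex:published ?year . }}'},
]


def vectorize(text):
    """Lowercased token counts: a crude stand-in for an embedding vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve_template(question):
    """Return the template whose example question is most similar."""
    q = vectorize(question)
    return max(TEMPLATES, key=lambda t: cosine(q, vectorize(t["question"])))


def build_query(question, title):
    """Fill the retrieved template's slot with an already-extracted entity.
    Note: the title is not escaped here; production code should escape literals."""
    return PREFIX + retrieve_template(question)["sparql"].format(title=title)


print(build_query("Who is the author of Dune?", "Dune"))
```

The LLM or retriever only selects and fills a vetted template, so the resulting query is always syntactically valid. The trade-off is coverage: a question that matches no template needs a fallback, such as free-form generation.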

These advancements lay the groundwork for better entity mapping and semantic query refinement.

LLMs are particularly effective at mapping entities and relationships from natural language queries to knowledge graph elements. Althire AI has demonstrated that LLM-based extraction can reach roughly 90% accuracy in entity and relation mapping: 92% for entity extraction and 89% for relationship extraction with well-tuned LLMs.

LLMs also tackle entity disambiguation, resolving duplicate entries that appear in various forms across datasets. To boost performance, a clearly defined graph schema with allowed node and relationship types is essential. Incorporating contextual data from the knowledge graph during the extraction process further enhances the accuracy and consistency of these mappings.
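A defined schema can also be enforced in code. The sketch below uses a hypothetical schema of allowed node types and relationship types. It rejects extracted triples that use unknown types or that connect the wrong kinds of nodes, so those triples can be re-prompted or discarded before they reach the graph.

```python
from dataclasses import dataclass

# Hypothetical schema: allowed node types, and for each relationship type
# the (source type, target type) pair it may connect.
NODE_TYPES = {"Person", "Company", "City"}
RELATIONSHIP_TYPES = {
    "WORKS_AT": ("Person", "Company"),
    "LOCATED_IN": ("Company", "City"),
}


@dataclass(frozen=True)
class Triple:
    source: str
    source_type: str
    relation: str
    target: str
    target_type: str


def validate(triple):
    """Return a list of schema violations (empty if the triple is valid)."""
    errors = []
    for node_type in (triple.source_type, triple.target_type):
        if node_type not in NODE_TYPES:
            errors.append(f"unknown node type: {node_type}")
    expected = RELATIONSHIP_TYPES.get(triple.relation)
    if expected is None:
        errors.append(f"unknown relationship type: {triple.relation}")
    elif (triple.source_type, triple.target_type) != expected:
        errors.append(f"{triple.relation} must connect {expected[0]} -> {expected[1]}")
    return errors


print(validate(Triple("Acme", "Company", "WORKS_AT", "Ada", "Person")))
```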

LLMs take query handling a step further by optimizing the extracted data semantically. This involves refining queries to improve relevance and retrieval, moving beyond simple keyword matching to grasp the full meaning and context of user inputs.

A noteworthy example comes from the Australian National University (ANU), where researchers integrated LLMs with the ANU Scholarly Knowledge Graph (ASKG). Their system used automatic LLM-SPARQL fusion to retrieve both facts and textual nodes, delivering better accuracy and efficiency compared to traditional methods. As the researchers stated:

"By combining the ASKG with LLMs, our approach enhances knowledge utilization and natural language understanding capabilities."

LLMs also employ query relaxation techniques, such as adjusting parameters or replacing terms, to refine searches when initial queries yield insufficient results. This ensures that even ambiguous or incomplete queries can lead to meaningful outcomes. For instance, the KGQP (KG-enhanced Query Processing) framework uses structured knowledge graphs alongside LLMs to provide context during question-answer interactions.
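Query relaxation can be shown with a simple loop. In this sketch the product data, attribute names, and drop order are all hypothetical. Constraints are removed one at a time, least important first, until the query returns enough results. The function also returns the constraints it actually used, so the user can see what was relaxed.

```python
# Hypothetical in-memory data: product nodes with attributes.
PRODUCTS = [
    {"name": "Trail Runner X", "category": "shoes", "color": "red", "brand": "Acme"},
    {"name": "Road Racer", "category": "shoes", "color": "blue", "brand": "Acme"},
    {"name": "Summit Boot", "category": "shoes", "color": "red", "brand": "Peak"},
]


def run(constraints):
    """Return names of products matching every attribute constraint."""
    return [p["name"] for p in PRODUCTS
            if all(p.get(k) == v for k, v in constraints.items())]


def relaxed_search(constraints, drop_order, min_results=1):
    """Drop constraints in `drop_order` until at least `min_results` match.
    Returns the results and the constraints that produced them."""
    current = dict(constraints)
    results = run(current)
    for key in drop_order:
        if len(results) >= min_results:
            break
        current.pop(key, None)
        results = run(current)
    return results, current


# No green Acme shoes exist, so "color" is relaxed first.
print(relaxed_search({"category": "shoes", "color": "green", "brand": "Acme"}, ["color", "brand"]))
```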

Furthermore, LLMs can create feedback loops during query processing. If a query generates errors or unexpected results, the model analyzes the issue, refines the query, and retries until it produces accurate outcomes. This iterative process significantly improves success rates and reliability.

The Llama 3.1 70B model illustrates this capability. It achieved an Execution Success Rate (ESR) of 100% on queries for Observation tasks, meaning every generated query executed successfully. Execution success measures whether a query runs, not whether its answer is correct, so it is best read alongside accuracy metrics.

Semantic optimization is especially useful for handling vague or incomplete queries. LLMs can infer missing details, suggest related entities, or expand queries to better align with the user's intent. This adaptability transforms knowledge graphs into dynamic, intelligent tools for retrieving information, making them far more versatile than traditional rigid systems.

Building on the capabilities described above, this step-by-step workflow outlines how to create a robust query processing system. The goal? To transform raw data into insights you can act on. By following these steps, your knowledge graph can handle complex natural language queries while delivering accurate results.

The success of a knowledge graph starts with solid data preparation. This phase is crucial because it sets the stage for the quality and reliability of your entire system. First, gather datasets tailored to your needs. These can include structured data like tables, semi-structured formats like JSON or XML, and unstructured sources such as text documents, emails, or system logs.

Data cleaning is a must. Raw data often contains errors, inconsistencies, and missing values that can compromise your system. Standardize formats - for instance, use MM/DD/YYYY for dates and ensure temperature readings are consistently in Fahrenheit. Duplicate records, like multiple profiles for the same customer, should be merged or removed. For missing values, decide whether to impute, flag, or eliminate them based on their importance.
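These cleaning rules can be sketched as follows. The input date formats, field names, and the choice of email as the deduplication key are assumptions for illustration. Dates that match no known format are returned as `None`, which flags them for review rather than guessing a value.

```python
from datetime import datetime
from typing import Optional

# Date formats assumed to appear in the raw data (hypothetical).
DATE_FORMATS = ("%Y-%m-%d", "%d.%m.%Y", "%m/%d/%Y")


def normalize_date(raw: str) -> Optional[str]:
    """Convert a date in any known format to MM/DD/YYYY, or None if unparseable."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).strftime("%m/%d/%Y")
        except ValueError:
            continue
    return None  # flag for review instead of guessing


def to_fahrenheit(value: float, unit: str) -> float:
    """Express a temperature reading in Fahrenheit."""
    if unit == "F":
        return float(value)
    if unit == "C":
        return value * 9 / 5 + 32
    raise ValueError(f"unknown unit: {unit}")


def clean(records):
    """Standardize fields and merge duplicates that share a normalized email."""
    merged = {}
    for rec in records:
        key = rec["email"].strip().lower()
        row = {
            "email": key,
            "name": rec.get("name"),
            "signup": normalize_date(rec["signup"]) if rec.get("signup") else None,
            "temp_f": to_fahrenheit(*rec["temp"]) if rec.get("temp") else None,
        }
        if key in merged:
            # Keep existing values; fill only the gaps from the duplicate.
            for field, value in row.items():
                if merged[key].get(field) is None:
                    merged[key][field] = value
        else:
            merged[key] = row
    return list(merged.values())
```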

Error correction is another key step. Fix issues such as typos, invalid identification numbers, or logical inconsistencies. Use natural language processing to extract meaningful information from text, converting it into a uniform format while accounting for variations in language and style.

For multimedia data, tools like image recognition or video analysis can extract features and metadata that add depth to your knowledge graph. Use a unified schema to integrate structured and unstructured data seamlessly. Create identifiers or keys to link data points across different sources.

In e-commerce, for example, this process might involve gathering user purchase histories, demographic data, product catalogs, and category hierarchies. ETL (Extract, Transform, Load) tools can simplify this by converting various data formats into structures that work with your graph database.
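The identifier and ETL steps can be sketched together. The catalog rows, purchase events, and field names below are hypothetical. The code derives deterministic node IDs from natural keys (SKU, email, category name). As a result, the same product appearing in both sources maps to a single node. The output is a set of nodes and edges that could then be loaded into a graph database.

```python
def node_id(kind, natural_key):
    """Deterministic ID: the same entity from any source maps to one node."""
    return f"{kind}:{natural_key.strip().lower()}"


def to_graph(catalog, purchases):
    """Transform catalog rows and purchase events into nodes and edges."""
    nodes, edges = {}, set()
    for item in catalog:  # e.g. rows from a product CSV export
        pid = node_id("product", item["sku"])
        cid = node_id("category", item["category"])
        nodes[pid] = {"label": "Product", "name": item["name"]}
        nodes[cid] = {"label": "Category", "name": item["category"]}
        edges.add((pid, "IN_CATEGORY", cid))
    for event in purchases:  # e.g. JSON order events
        uid = node_id("user", event["user_email"])
        nodes.setdefault(uid, {"label": "User"})
        edges.add((uid, "PURCHASED", node_id("product", event["sku"])))
    return nodes, edges
```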

LLMs are incredibly effective at turning unstructured data into structured entities and relationships, which are the building blocks of knowledge graphs. They excel at understanding context and meaning, eliminating the need for costly retraining for every new dataset.

"Using LLMs to extract entities and relationships for knowledge graphs can improve data organization efficiency and accuracy." - TiDB Team

Start with entity identification, where LLMs pinpoint meaningful entities and attributes in text. These entities, such as people, places, or products, become the nodes in your knowledge graph.

Relationship extraction follows. LLMs determine how the identified entities are connected, whether through hierarchies, associations, or timelines. These connections become the edges that give the graph its structure. When done right, entity extraction can achieve accuracy rates of 92%, with relationship extraction close behind at 89%.
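In practice, the LLM is usually asked to return entities and relationships in a fixed JSON shape that code can parse. The sketch below assumes such a response. The `LLM_OUTPUT` string and its field names are hypothetical, not any particular model's format. The code turns the response into nodes and edges and drops relationships that reference entities the model never declared.

```python
import json

# Hypothetical LLM response in the JSON shape requested by the prompt.
LLM_OUTPUT = """
{
  "entities": [
    {"id": "e1", "name": "Marie Curie", "type": "Person"},
    {"id": "e2", "name": "University of Paris", "type": "Organization"}
  ],
  "relationships": [
    {"source": "e1", "type": "WORKED_AT", "target": "e2"},
    {"source": "e1", "type": "BORN_IN", "target": "e9"}
  ]
}
"""


def parse_extraction(raw):
    """Convert the model's JSON into node and edge structures."""
    data = json.loads(raw)
    nodes = {e["id"]: {"name": e["name"], "type": e["type"]} for e in data["entities"]}
    edges = [
        (r["source"], r["type"], r["target"])
        for r in data["relationships"]
        # Discard edges pointing at entities the model never declared.
        if r["source"] in nodes and r["target"] in nodes
    ]
    return nodes, edges
```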

In March 2025, Althire AI showcased this capability by integrating data from emails, calendars, chats, documents, and logs into a comprehensive knowledge graph. Their system automated entity extraction, inferred relationships, and added semantic layers, enabling advanced tools for task management, expertise discovery, and decision-making.

Entity disambiguation ensures that duplicate entities, such as different forms of the same name, are merged correctly. Caching resolved names speeds this process up by avoiding repeated work on forms that have already been seen.
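Disambiguation with caching can be sketched as follows. The alias table and the suffix-stripping rules are hypothetical. The `resolve` function stands in for an expensive step, such as an LLM or embedding lookup in a real system. Wrapping it in `functools.lru_cache` means each normalized surface form is resolved only once.

```python
import re
from functools import lru_cache

# Hypothetical alias table: normalized surface form -> canonical entity ID.
ALIASES = {
    "ibm": "org:ibm",
    "international business machines": "org:ibm",
}
calls = {"resolver": 0}  # counts how often the expensive step actually runs


def normalize(name):
    """Lowercase, strip punctuation and common corporate suffixes."""
    name = re.sub(r"[^\w\s]", " ", name.lower())
    name = re.sub(r"\b(corp|corporation|inc)\b", " ", name)
    return " ".join(name.split())


@lru_cache(maxsize=10_000)
def resolve(normalized):
    """Stand-in for an expensive resolution call; cached per normalized form."""
    calls["resolver"] += 1
    return ALIASES.get(normalized, f"new:{normalized}")


def canonical_id(name):
    return resolve(normalize(name))
```

Normalizing before the cache lookup matters. "IBM Corp." and "ibm" share one cache entry instead of triggering two resolution calls.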

"LLMs excel in inferring context and meaning of unseen data without the need for expensive training. This eases the implementation of LLM-enabled knowledge extraction tools, making them attractive for data management solutions." - Max Dreger, Kourosh Malek, Michael Eikerling

To optimize costs and efficiency, consider fine-tuning smaller, task-specific models instead of relying entirely on large, general-purpose ones. Caching previously processed data can further reduce computational demands and speed up response times.

Once you’ve mapped entities and relationships, the next step is to execute and refine queries for practical applications.

With your knowledge graph ready, the focus shifts to running and refining queries for peak performance. This involves translating natural language queries into structured database queries, executing them effectively, and improving results through iterative tweaks.

Query translation starts when a user submits a natural language query. The LLM interprets the request, identifies relevant entities, and generates structured queries (such as SPARQL or SQL) based on your knowledge graph's schema. This simplifies the process for users by eliminating the need to learn complex query languages.
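One common way to ground translation in the schema is to include the schema in the prompt and then check the generated query against it. In the sketch below, the schema, the `ex:` prefix, and the prompt wording are hypothetical. The actual LLM call is left out because it depends on the provider.

```python
import re

# Hypothetical schema summary; a real system would derive it from the ontology.
SCHEMA = {
    "classes": ["ex:Paper", "ex:Author", "ex:Venue"],
    "properties": {
        "ex:wrote": ("ex:Author", "ex:Paper"),
        "ex:publishedIn": ("ex:Paper", "ex:Venue"),
        "ex:name": ("ex:Author", "xsd:string"),
    },
}


def build_prompt(question, schema=SCHEMA):
    """Assemble a text-to-SPARQL prompt restricted to the schema's vocabulary."""
    props = "\n".join(f"- {p}: {d} -> {r}" for p, (d, r) in schema["properties"].items())
    return (
        "Translate the question into a single SPARQL SELECT query.\n"
        "Use only these classes and properties; do not invent new ones.\n"
        "PREFIX ex: <http://example.org/>\n"
        f"Classes: {', '.join(schema['classes'])}\n"
        f"Properties (domain -> range):\n{props}\n"
        f"Question: {question}\n"
        "SPARQL:"
    )


def unknown_terms(query, schema=SCHEMA):
    """List ex: terms in a generated query that the schema does not define."""
    allowed = set(schema["classes"]) | set(schema["properties"])
    return sorted(set(re.findall(r"ex:\w+", query)) - allowed)
```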

Error handling and correction introduce feedback loops. If the initial query fails or returns inaccurate results, the LLM refines the query structure and retries until it meets the user’s needs. This iterative process enhances both accuracy and reliability.
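The feedback loop can be sketched with a bounded number of retries. To keep the example runnable with only the Python standard library, it uses SQL on an in-memory SQLite database instead of SPARQL. `fake_llm` is a hypothetical stand-in for a model call: it first returns a query with a misspelled column and returns a corrected one once it receives the database error.

```python
import sqlite3


def fake_llm(question, error=None):
    """Hypothetical stand-in for an LLM that repairs its query given an error."""
    if error is None:
        return "SELECT nme FROM people WHERE city = 'Oslo'"
    return "SELECT name FROM people WHERE city = 'Oslo'"


def answer(conn, question, generate=fake_llm, max_attempts=3):
    """Generate, execute, and on failure feed the error back for another try."""
    error = None
    for attempt in range(1, max_attempts + 1):
        sql = generate(question, error)
        try:
            return conn.execute(sql).fetchall(), attempt
        except sqlite3.Error as exc:
            error = f"Query failed: {sql!r} -> {exc}"  # context for the next attempt
    raise RuntimeError(f"no valid query after {max_attempts} attempts: {error}")
```

Capping the attempts keeps a query that cannot be repaired from looping forever. The final error is surfaced instead.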

Dynamic optimization fine-tunes query parameters in real time. For example, if a query returns limited results, you can broaden the scope by relaxing search terms, replacing specific words with general alternatives, or including related entities and relationships.
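Replacing a specific term with a more general one can use the graph's own class hierarchy. In this sketch the hierarchy (child to parent, similar in spirit to `rdfs:subClassOf`) and the items are hypothetical. The search moves up one level at a time until it finds enough results or reaches the top of the hierarchy.

```python
# Hypothetical subclass hierarchy: child class -> parent class.
PARENT = {"espresso_machine": "coffee_maker", "coffee_maker": "kitchen_appliance"}
ITEMS = [
    ("Barista Pro", "espresso_machine"),
    ("Drip 9", "coffee_maker"),
    ("Toaster 2", "kitchen_appliance"),
]


def descendants_or_self(cls):
    """All classes at or below `cls` in the hierarchy."""
    found = {cls}
    changed = True
    while changed:
        changed = False
        for child, parent in PARENT.items():
            if parent in found and child not in found:
                found.add(child)
                changed = True
    return found


def search(cls):
    allowed = descendants_or_self(cls)
    return [name for name, c in ITEMS if c in allowed]


def broadened_search(cls, min_results=2):
    """Generalize the class one level at a time until enough items match."""
    while True:
        results = search(cls)
        if len(results) >= min_results or cls not in PARENT:
            return results, cls
        cls = PARENT[cls]
```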

Performance monitoring is critical to maintaining system efficiency. Track metrics like query execution time, relevance of results, and user satisfaction to identify areas for improvement.
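A lightweight way to start tracking these metrics is sketched below. `QueryMetrics` is a hypothetical helper, not part of any library. It times each query call, reports nearest-rank latency percentiles, and computes a simple satisfaction ratio from thumbs-up/thumbs-down feedback. A production system would more likely export these to a monitoring stack.

```python
import math
import time
from collections import defaultdict


class QueryMetrics:
    """Collects per-query latency and user feedback (hypothetical helper)."""

    def __init__(self):
        self.latencies_ms = []
        self.feedback = defaultdict(int)

    def timed(self, fn, *args):
        """Run fn(*args) and record its wall-clock latency in milliseconds."""
        start = time.perf_counter()
        try:
            return fn(*args)
        finally:
            self.latencies_ms.append((time.perf_counter() - start) * 1000)

    def record_feedback(self, helpful):
        self.feedback["helpful" if helpful else "not_helpful"] += 1

    def percentile(self, p):
        """Nearest-rank percentile of recorded latencies (None if empty)."""
        ordered = sorted(self.latencies_ms)
        if not ordered:
            return None
        rank = max(1, math.ceil(p / 100 * len(ordered)))
        return ordered[rank - 1]

    def satisfaction(self):
        """Share of feedback marked helpful (None if no feedback yet)."""
        total = sum(self.feedback.values())
        return self.feedback["helpful"] / total if total else None
```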

Contextual enhancements can make your knowledge graph smarter. When users submit vague or incomplete queries, the system can infer missing details, suggest related entities, or expand the query scope to better match the user’s intent.

Finally, result validation adds a layer of quality control. Cross-reference query results with known facts in your knowledge graph to catch inconsistencies or errors before presenting them to users. This step helps maintain trust in your system over time.
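Cross-referencing can be sketched as a check of claimed triples against facts already in the graph. In this sketch the known facts and the choice of `born_in` as a single-valued ("functional") predicate are assumptions for illustration. A claim that conflicts with a single-valued fact is marked as contradicted. A claim the graph cannot confirm is marked as unverified instead of being silently accepted.

```python
# Hypothetical known facts as (subject, predicate, object) triples.
KNOWN = {
    ("Marie Curie", "born_in", "Warsaw"),
    ("Marie Curie", "award", "Nobel Prize in Physics"),
    ("Marie Curie", "award", "Nobel Prize in Chemistry"),
}
FUNCTIONAL = {"born_in"}  # predicates allowing at most one value per subject


def validate_answer(claims):
    """Split claimed triples into confirmed, contradicted, and unverified."""
    report = {"confirmed": [], "contradicted": [], "unverified": []}
    for s, p, o in claims:
        if (s, p, o) in KNOWN:
            report["confirmed"].append((s, p, o))
        elif p in FUNCTIONAL and any(ks == s and kp == p for ks, kp, _ in KNOWN):
            report["contradicted"].append((s, p, o))
        else:
            report["unverified"].append((s, p, o))
    return report
```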

Building on the earlier discussion of workflows, let’s dive into the benefits and challenges of using large language models (LLMs) for querying knowledge graphs. Understanding these aspects is essential for organizations to make informed decisions about adopting this technology. While LLMs bring new levels of accessibility and efficiency, they also introduce unique challenges that require thoughtful planning.

One of the standout benefits is greater accessibility. With LLMs, users no longer need to master specialized query languages. This means that employees across an organization, regardless of technical expertise, can interact with data more freely.

Another major advantage is better contextual understanding. LLMs are skilled at interpreting user intent, allowing knowledge graphs to return results that go beyond simple keyword matches. Instead, they focus on capturing the meaning behind queries.

"The misconception that flooding LLMs with information will magically solve problems overlooks a key fact: human knowledge is about context, not just content. Similar to the brain, 'meaning' emerges from the interplay between information and the unique context of each individual. Businesses must shift from one-size fits all LLMs and focus on structuring data to enable LLMs to provide contextually relevant results for effective outcomes." - Mo Salinas, Data Scientist at Valkyrie Intelligence

Fewer hallucinations are another benefit when LLMs are grounded in structured knowledge graphs. By relying on factual relationships within the graph, LLMs can avoid generating inaccurate or misleading information, leading to more trustworthy outputs.

LLMs also offer scalability. As data volumes grow, knowledge graphs provide a structured foundation, while LLMs handle increasingly complex queries with ease. This combination is particularly effective for large-scale enterprise applications, where traditional methods often struggle to keep up.

Despite the advantages, there are hurdles to overcome. One issue is alignment and consistency. LLMs' flexibility doesn’t always mesh perfectly with the rigid structure of knowledge graphs, which can result in mismatched or inconsistent outputs.

Real-time querying can also strain resources. Translating natural language queries into structured formats and executing them can be computationally demanding. Organizations must invest in high-performance systems to deliver quick and reliable responses.

The quality of prompts plays a critical role in accuracy. Poorly worded inputs can lead to misinterpretations or incorrect query translations, which can undermine the reliability of results.

Closely related is cost. Running LLMs, especially for real-time applications, requires significant computational power, and for smaller organizations or high-traffic scenarios this can quickly become cost-prohibitive.

Ambiguous queries pose yet another obstacle. While LLMs are good at understanding context, vague or poorly phrased questions can still lead to irrelevant or incorrect results.

"The language model generates random facts that are not based on the data it was trained on and do not correspond to reality. This is because it was trained on unstructured data and delivers probabilistic outcomes." - Jörg Schad, CTO at ArangoDB

Finally, specialized expertise is needed to implement and maintain these systems. While end users benefit from simplified interfaces, building and managing LLM-driven knowledge graph solutions requires deep knowledge of both graph databases and language model architectures.

The table below outlines the key benefits and challenges of LLM-driven querying, summarizing the discussion:

| Aspect | Benefits | Challenges |
|---|---|---|
| Data Management | Structured organization with flexibility | Ensuring consistency between flexible LLMs and structured knowledge graphs |
| User Experience | Natural language querying removes the need for technical query skills | Relies heavily on prompt quality and struggles with ambiguous inputs |
| Contextual Understanding | Combines graph relationships with LLM processing for deeper insights | Aligning LLM outputs with the graph’s structure and rules |
| System Maintenance | Continuous updates improve both LLMs and knowledge graphs | Requires ongoing alignment and management as new data is added |
| Performance | Supports informed decision-making with reliable, structured knowledge | Real-time querying demands high computational resources |
| Scalability | Handles growing data complexity through structured foundations | Scaling infrastructure and maintaining updates become more challenging with larger systems |
| Accuracy | Reduces hallucinations by grounding LLMs in factual data | Requires constant validation to eliminate inaccuracies in both LLMs and knowledge graphs |

Organizations weighing the adoption of LLM-driven querying must carefully evaluate these trade-offs based on their specific needs, resources, and technical capabilities. Success hinges on thorough planning, robust infrastructure, and ongoing refinement of the system.

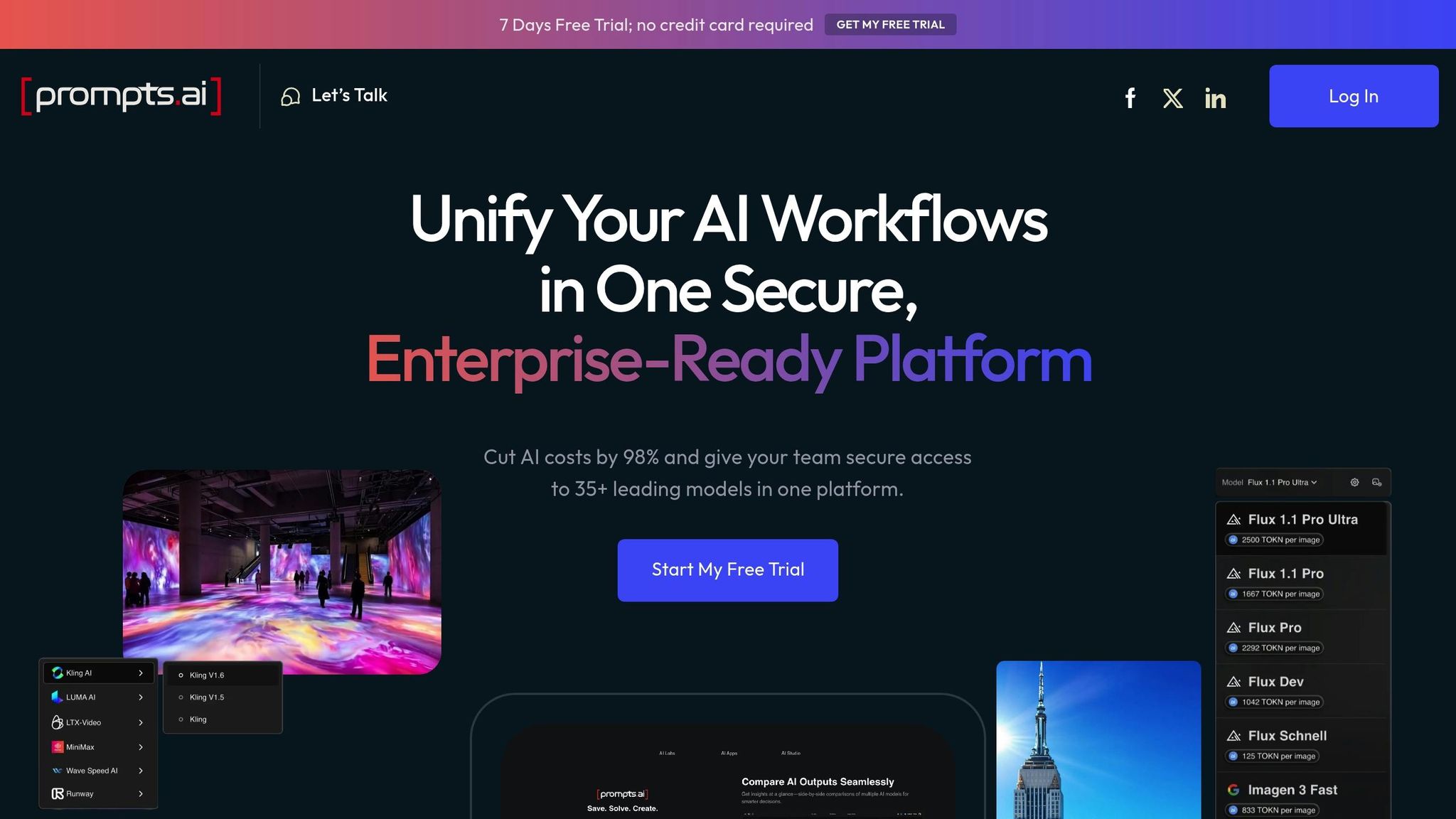

When it comes to integrating large language models (LLMs) with knowledge graphs, prompts.ai steps in to simplify the process while addressing common hurdles. By offering efficient orchestration and automated workflows, the platform ensures smoother and more secure integration.

prompts.ai takes the hassle out of integration with its automated workflow capabilities. By connecting users to leading AI models like GPT-4, Claude, LLaMA, and Gemini through a single interface, the platform eliminates repetitive tasks and streamlines operations. Its real-time collaboration tools make it easy for distributed teams to work together seamlessly. On top of that, prompts.ai integrates with popular tools like Slack, Gmail, and Trello, embedding knowledge graph querying right into your existing workflows.

Managing prompts effectively is crucial for successful integration, and prompts.ai delivers with a system designed for organization. Users can create, store, and version queries for knowledge graph tasks, ensuring everything is neat and accessible. The platform also includes a token tracking system, allowing organizations to monitor usage in real time and stick to their budgets. Pricing is transparent: the Creator plan costs $29/month (or $25/month annually) with 250,000 TOKN credits, while the Problem Solver plan is $99/month (or $89/month annually) with 500,000 TOKN credits.

One standout feature is the ability to compare top LLMs side by side, which prompts.ai says can increase productivity by up to 10×.

"Instead of wasting time configuring it, he uses Time Savers to automate sales, marketing, and operations, helping companies generate leads, boost productivity, and grow faster with AI-driven strategies." - Dan Frydman, AI Thought Leader

The platform’s Time Savers feature adds further convenience by supporting custom micro workflows. This allows users to create reusable prompt templates, standardizing query patterns and ensuring consistency across teams. These tools make scaling up easier and keep query performance steady.

For organizations handling sensitive data, security and interoperability are non-negotiable. prompts.ai addresses these concerns with robust encrypted data protection and advanced security features, offering full visibility and auditability for all AI interactions. The platform also supports multi-modal AI workflows and integrates a vector database for Retrieval-Augmented Generation (RAG) applications, ensuring LLM responses are grounded in accurate knowledge graph data.

Flexibility is another key strength. prompts.ai’s interoperable workflows allow organizations to switch between different AI models based on their needs without overhauling their entire query infrastructure. The platform also says it can consolidate more than 35 disconnected AI tools and cut costs by up to 95%. With an average user rating of 4.8/5, the platform has earned praise for its streamlined workflows and scalability. Its recognition by GenAI.Works as a leading AI platform for enterprise problem-solving and automation underscores its value in tackling complex integration challenges.

Blending large language models (LLMs) with knowledge graphs is reshaping how we approach data querying. This guide has walked through both the theoretical foundations and practical applications of this integration. We've seen how LLMs bridge the gap between natural language queries and structured data, making even complex information easier to access for users, regardless of their technical expertise.

The numbers speak for themselves: integrating knowledge graphs with LLMs has delivered accuracy improvements of over 3X. On questions over simpler schemas, SPARQL accuracy reached 71.1%, a 2.8X improvement over SQL. On questions over more complex schemas, SPARQL achieved 35.7% accuracy, while SQL accuracy dropped to 0%.

Here’s what stands out: LLM-powered knowledge graph querying doesn’t just improve accuracy - it adds crucial business context by capturing relationships, constraints, and domain-specific semantics. This added context enables organizations to break down multi-step questions into manageable sub-questions while keeping the reasoning process consistent and meaningful.

That said, success hinges on careful implementation. Organizations need to invest in high-quality, up-to-date knowledge graphs to achieve reliable accuracy levels. Maintaining these graphs, optimizing query performance, and fine-tuning LLMs with domain-specific data are all critical steps. The challenge isn’t just technical - it’s about integrating knowledge graphs as a core element of data management strategies.

Modern AI platforms are making this process more accessible. By automating workflows, managing prompts efficiently, and offering secure frameworks, these platforms help reduce the complexity of integration, as discussed earlier.

Combining LLMs with knowledge graphs creates AI systems that are both contextually aware and factually precise. This combination is key for organizations looking to democratize data access while maintaining the precision needed for high-stakes decisions. As the technology evolves and adapts to real-world schemas, LLM-driven knowledge graph querying is proving to be a practical solution for enterprise environments.

Ultimately, success lies in balancing technical sophistication with ease of use. Organizations that master this integration will unlock competitive advantages in data accessibility, query accuracy, and user experience. When implemented effectively, this approach leads to better decision-making and lowers the barriers to actionable insights.

Large language models (LLMs) improve the precision of knowledge graph (KG) queries by blending their ability to understand natural language with the structured data found in KGs. This combination helps LLMs interpret intricate relationships, carry out advanced reasoning, and deliver more accurate, fact-driven answers.

By anchoring their responses in the structured and verifiable data of a KG, LLMs minimize errors and increase dependability. This approach is especially useful for enterprise-level or highly complex datasets, where delivering accurate, context-sensitive results is essential.

Integrating large language models (LLMs) with knowledge graphs (KGs) brings two primary hurdles to overcome:

Here are some practical ways to tackle these challenges:

By applying these strategies, you can boost the accuracy and efficiency of your knowledge graph queries while unlocking new possibilities with LLMs.

Large language models (LLMs) make working with knowledge graphs much easier by allowing users to interact with them through natural language. Instead of requiring technical expertise to craft complicated queries, users can simply ask their questions in plain English. The LLMs then handle the heavy lifting, converting those questions into the correct query language.

On top of that, LLMs can create easy-to-read summaries of the data pulled from knowledge graphs. This means even non-technical users can grasp and draw insights from complex datasets. By breaking down these barriers, LLMs make the technology more approachable and practical for a broader range of people.