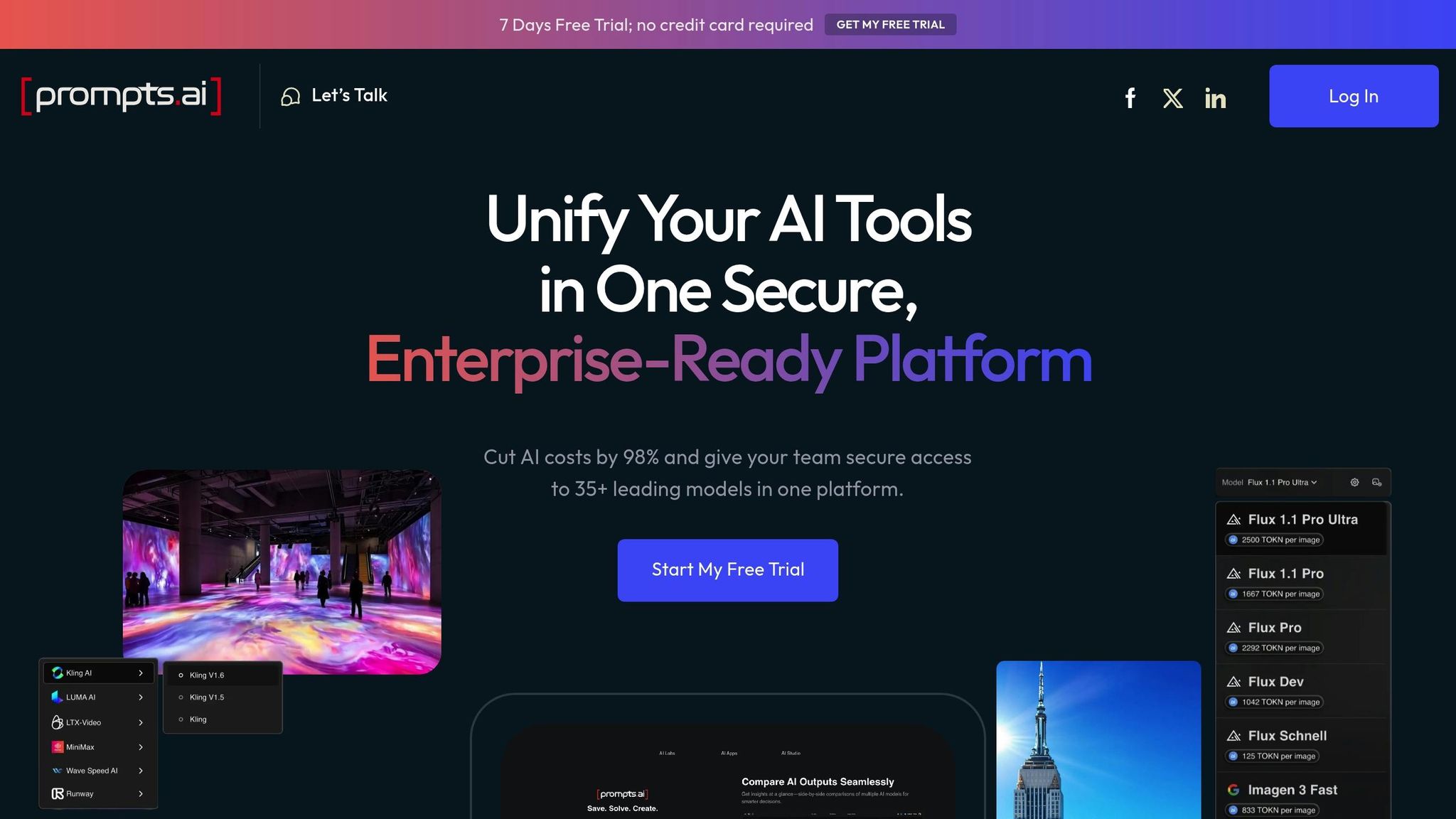

Managing AI workflows securely is no longer optional; it’s a requirement. For enterprises in sectors like healthcare, finance, and government contracting, balancing innovation with strict compliance is critical. Tools like Prompts.ai address this by combining access to over 35 leading AI models with security measures such as access control, encrypted storage, and sandboxed environments. The platform also simplifies cost management with its pay-as-you-go TOKN credit system, cutting AI expenses by up to 98% while maintaining governance and audit capabilities.

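Key Features of Prompts.ai:

- Unified access to over 35 leading large language models through one interface
- Identity- and context-based access control with model-permission gating
- Encryption of prompts, outputs, and datasets at rest and in transit
- Sandboxed environments that isolate AI operations
- Real-time audit logs for compliance and anomaly detection
- Pay-as-you-go TOKN credits with real-time FinOps cost tracking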

For organizations needing secure, streamlined AI workflows, Prompts.ai delivers a unified platform that prioritizes both security and efficiency.

Prompts.ai is an enterprise platform for AI workflow orchestration that brings over 35 top-tier large language models together in a single, secure interface. Designed for the security demands of U.S. enterprises, especially in regulated sectors, it helps organizations cut AI-related costs by up to 98% while building governance and audit capabilities into every workflow. Let’s explore the key security features that make Prompts.ai a trusted choice for enterprises.

At the core of Prompts.ai’s security is its access control system, a multilayered authorization framework that protects both AI applications and sensitive data. Using an identity- and context-based approach, the platform evaluates each request at runtime and enforces detailed, department-specific access policies. This keeps access aligned with job roles and privacy requirements, and the system integrates with leading identity providers for centralized management.
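Prompts.ai’s policy engine is not documented in this article, so the following is only a minimal, hypothetical Python sketch of what layered identity- and context-based authorization can look like. The role names, data classifications, `AccessRequest`, and `is_authorized` are illustrative assumptions, not the platform’s API.

```python
from dataclasses import dataclass

# Hypothetical roles and data classifications; not Prompts.ai's actual policy model.
ROLE_CLEARANCE = {
    "analyst": {"public", "internal"},
    "clinician": {"public", "internal", "phi"},
    "admin": {"public", "internal", "phi"},
}


@dataclass(frozen=True)
class AccessRequest:
    user: str
    role: str
    department: str            # department of the requesting user
    resource_department: str   # department that owns the data or workflow
    classification: str        # sensitivity label of the data, e.g. "phi"


def is_authorized(req: AccessRequest) -> bool:
    """Evaluate each policy layer in turn; any failing layer denies the request."""
    # Layer 1 (identity): the role must exist in the policy table.
    clearance = ROLE_CLEARANCE.get(req.role)
    if clearance is None:
        return False
    # Layer 2 (context): non-admins may only touch their own department's resources.
    if req.role != "admin" and req.department != req.resource_department:
        return False
    # Layer 3 (data sensitivity): the role must be cleared for this classification.
    return req.classification in clearance


print(is_authorized(AccessRequest("dana", "analyst", "finance", "finance", "phi")))  # False
```

Evaluating the layers in order and denying on the first failure keeps the default outcome "no access", which is the usual posture for regulated data.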

Prompts.ai protects sensitive information, such as prompts, model outputs, and training datasets, by encrypting data both at rest and in transit. Covering both states protects data throughout its lifecycle and reduces the risk that intercepted traffic or exposed storage reveals readable content.

The platform maintains real-time, detailed audit logs of every interaction. These logs are essential for compliance and enable rapid detection of anomalies, which is particularly crucial for organizations operating in regulated industries.
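The article does not describe how Prompts.ai stores or analyzes its logs. As a rough illustration only, here is a hypothetical Python sketch of an append-only audit log that also flags a simple anomaly: a user exceeding a set number of events within a sliding time window. `AuditEvent`, `AuditLog`, and the rate rule are assumptions chosen for clarity; real anomaly detection is usually far richer.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class AuditEvent:
    timestamp: float  # seconds since epoch; assumed non-decreasing per user
    user: str
    model: str
    action: str       # e.g. "prompt" or "response"


class AuditLog:
    """Append-only event log with a simple per-user rate check (hypothetical rule)."""

    def __init__(self, max_events: int, window_seconds: float):
        self.max_events = max_events
        self.window_seconds = window_seconds
        self._events = []   # full history, never modified after append
        self._recent = {}   # user -> deque of timestamps inside the window

    def record(self, event: AuditEvent) -> bool:
        """Store the event and return True if the user's recent volume looks anomalous."""
        self._events.append(event)
        window = self._recent.setdefault(event.user, deque())
        window.append(event.timestamp)
        # Slide the window: discard timestamps older than window_seconds.
        while event.timestamp - window[0] > self.window_seconds:
            window.popleft()
        return len(window) > self.max_events

    def events_for(self, user: str) -> list:
        """Return the user's events in the order they were recorded."""
        return [e for e in self._events if e.user == user]


log = AuditLog(max_events=3, window_seconds=60)
flags = [log.record(AuditEvent(t, "alice", "model-a", "prompt")) for t in (0, 10, 20, 30)]
print(flags)  # [False, False, False, True]
```

The full history is kept for compliance review, while the per-user window is bounded so the check stays cheap as the log grows.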

Prompts.ai employs sandboxed environments to isolate AI operations from the broader network infrastructure. Each sandbox operates independently, with its own resource allocation and security boundaries. This setup prevents cross-contamination between workflows and reduces the potential impact of breaches.

To maintain tight control over access, Prompts.ai features a model-permission gating system that inspects prompts and responses in real time. This system enforces precise access policies, ensuring that only authorized users can interact with specific models and functionalities. By doing so, it helps organizations safeguard sensitive information and stay compliant with regulatory requirements.
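The gating described above combines two checks: whether a role may use a given model, and whether the prompt or response content is acceptable. The following hypothetical Python sketch shows that flow. The permission table, the SSN-shaped pattern used as a content rule, `gated_call`, and `fake_model` are all illustrative assumptions rather than Prompts.ai functionality.

```python
import re
from typing import Callable

# Hypothetical role-to-model permissions; model names are placeholders.
MODEL_PERMISSIONS = {
    "analyst": {"model-a"},
    "engineer": {"model-a", "model-b"},
}

# Hypothetical content rule: block anything shaped like a U.S. Social Security number.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


class GateError(Exception):
    """Raised when a request or response violates a gating policy."""


def check_model_access(role: str, model: str) -> None:
    if model not in MODEL_PERMISSIONS.get(role, set()):
        raise GateError(f"role {role!r} is not permitted to use {model!r}")


def inspect_text(text: str) -> str:
    if SSN_PATTERN.search(text):
        raise GateError("text contains a value that looks like an SSN")
    return text


def gated_call(role: str, model: str, prompt: str,
               call_model: Callable[[str, str], str]) -> str:
    """Check permissions, inspect the prompt, call the model, then inspect the response."""
    check_model_access(role, model)
    inspect_text(prompt)
    response = call_model(model, prompt)
    return inspect_text(response)


def fake_model(model: str, prompt: str) -> str:
    # Stand-in for a real model client.
    return f"[{model}] summary of: {prompt}"


print(gated_call("engineer", "model-b", "Q3 revenue notes", fake_model))
```

Inspecting the response as well as the prompt matters because a permitted model can still echo or generate sensitive values.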

Prompts.ai stands out with its strong security foundation, cost-saving potential, and operational clarity, making it a trusted choice for managing AI workflows. By bringing together over 35 leading large language models (LLMs) under one secure platform, it eliminates the hassle of juggling multiple subscriptions while ensuring enterprise-level security and governance.

Key benefits of the platform include its ability to cut AI-related software costs by up to 98% through its flexible pay-as-you-go TOKN credit system, which ties costs directly to actual usage rather than recurring fees. Its real-time FinOps capabilities also provide detailed insight into AI spending, letting teams monitor every token and link expenses directly to business outcomes. To further support organizations, Prompts.ai offers a built-in community and a Prompt Engineer Certification program, helping teams adopt best practices and develop in-house expertise.
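Token-level cost tracking comes down to pricing each call by the tokens it consumes, then aggregating by team and comparing against a business metric. The following Python sketch illustrates that idea under stated assumptions: the per-1,000-token prices, model names, and the "tickets resolved" style outcome figures are hypothetical and do not reflect actual TOKN rates or Prompts.ai’s FinOps tooling.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical prices in credits per 1,000 tokens; not actual TOKN rates.
PRICES = {
    "model-a": {"input": 0.5, "output": 1.5},
    "model-b": {"input": 3.0, "output": 15.0},
}


@dataclass(frozen=True)
class UsageRecord:
    team: str
    model: str
    input_tokens: int
    output_tokens: int


def record_cost(r: UsageRecord) -> float:
    """Credits consumed by one call, priced separately for input and output tokens."""
    p = PRICES[r.model]
    return (r.input_tokens * p["input"] + r.output_tokens * p["output"]) / 1000


def spend_by_team(records) -> dict:
    """Sum per-call costs into a total per team."""
    totals = defaultdict(float)
    for r in records:
        totals[r.team] += record_cost(r)
    return dict(totals)


def cost_per_outcome(spend: dict, outcomes: dict) -> dict:
    """Divide each team's spend by a business metric it reports (e.g. tickets resolved)."""
    return {team: spend[team] / outcomes[team] for team in spend if outcomes.get(team)}


records = [
    UsageRecord("support", "model-a", 2000, 1000),
    UsageRecord("support", "model-b", 1000, 200),
    UsageRecord("legal", "model-b", 4000, 1000),
]
spend = spend_by_team(records)
print(spend)                                                 # {'support': 8.5, 'legal': 27.0}
print(cost_per_outcome(spend, {"support": 17, "legal": 9}))  # {'support': 0.5, 'legal': 3.0}
```

Pricing input and output tokens separately matters because many model providers charge more for output tokens than for input tokens.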

The table below highlights these advantages, showcasing how Prompts.ai not only matches but surpasses industry standards:

| Feature | Prompts.ai Capability | Typical Industry Approach |
|---|---|---|
| Multi-Model Access | Access to over 35 LLMs through one secure interface | Typically limited to 1–3 models per platform |
| Cost Transparency | Real-time token tracking with advanced FinOps controls | Opaque subscription pricing |
| Security Controls | Advanced sandbox isolation and dynamic model controls | Basic access controls |
| Audit Capabilities | Instant, continuously updated logs for compliance | Standard logging practices |
| Pricing Model | Pay-as-you-go TOKN credits, avoiding recurring fees | Fixed monthly/annual subscription fees |
| Community Support | Certification programs and expert-designed workflows | Limited to standard documentation |

Prompts.ai is particularly well-suited for organizations operating in highly regulated industries, offering governance features that meet stringent compliance requirements. For teams focused on security, cost efficiency, and scalability, this platform provides a unified, robust solution to streamline AI workflows.

For organizations, especially those in regulated sectors, the challenge lies in driving innovation while maintaining strict compliance and security measures. This is where having a unified platform becomes indispensable.

Prompts.ai brings everything together by managing top AI models through a single, secure interface. With a focus on transparency and cost control, its built-in FinOps tools and the flexible pay-as-you-go TOKN system make budgeting straightforward. By directly tying AI expenses to measurable results, it ensures smarter spending and clearer outcomes.

Prompts.ai places a strong emphasis on safeguarding sensitive data by aligning with rigorous compliance standards, including GDPR, HIPAA, and SOC 2. These frameworks give enterprises in regulated industries the confidence to manage their AI workflows securely and reliably.

The platform is built with security features like encrypted storage, token-based access controls, and sandboxed environments that help keep data protected throughout its lifecycle. With detailed audit trails and role-based permissions, Prompts.ai offers a secure, reliable framework for handling large language model (LLM) workflows, even in high-risk or tightly regulated environments.

The TOKN credit system offers a smarter way to manage costs with its flexible, pay-as-you-go pricing model. Unlike traditional fixed monthly or annual fees, this system lets you pay only for what you actually use, helping to eliminate wasteful spending.

This setup is particularly useful for teams dealing with changing workloads. It allows you to adjust your spending on the fly, aligning costs with your current needs. By steering clear of paying for unused resources, the TOKN system promotes better control over your budget and ensures resources are allocated efficiently.
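Whether usage-based billing actually saves money depends on the workload. The following Python sketch compares a pay-as-you-go model with a flat monthly fee over a spiky workload and computes the monthly break-even volume. Both prices are hypothetical placeholders, not Prompts.ai or competitor pricing.

```python
# Hypothetical figures for illustration; not actual Prompts.ai or vendor pricing.
PRICE_PER_1K_TOKENS = 0.02   # usage-based price
FLAT_MONTHLY_FEE = 10.0      # fixed subscription price per month


def usage_cost(monthly_tokens: list) -> float:
    """Total cost when each month is billed only for the tokens actually used."""
    return sum(tokens / 1000 * PRICE_PER_1K_TOKENS for tokens in monthly_tokens)


def subscription_cost(monthly_tokens: list) -> float:
    """Total cost when every month is billed the same flat fee, used or not."""
    return FLAT_MONTHLY_FEE * len(monthly_tokens)


def break_even_tokens() -> float:
    """Monthly token volume above which the flat fee becomes the cheaper option."""
    return FLAT_MONTHLY_FEE / PRICE_PER_1K_TOKENS * 1000


# A spiky workload: busy, quiet, idle, then a peak month.
months = [200_000, 50_000, 0, 400_000]
print(round(usage_cost(months), 2), subscription_cost(months))  # 13.0 40.0
print(break_even_tokens())
```

With these assumed prices, pay-as-you-go costs less in any month below the break-even volume (500,000 tokens here). A team that consistently runs above that volume would need to compare rates more carefully.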

Prompts.ai offers model-permission gating, a feature designed to enhance security and ensure compliance by giving organizations the ability to control access to AI models. With this system, access is restricted based on predefined roles and permissions, minimizing the chances of unauthorized interactions or modifications.

This robust access control helps teams align with industry regulations and protect sensitive information, making it especially well-suited for environments where security and regulatory adherence are critical.