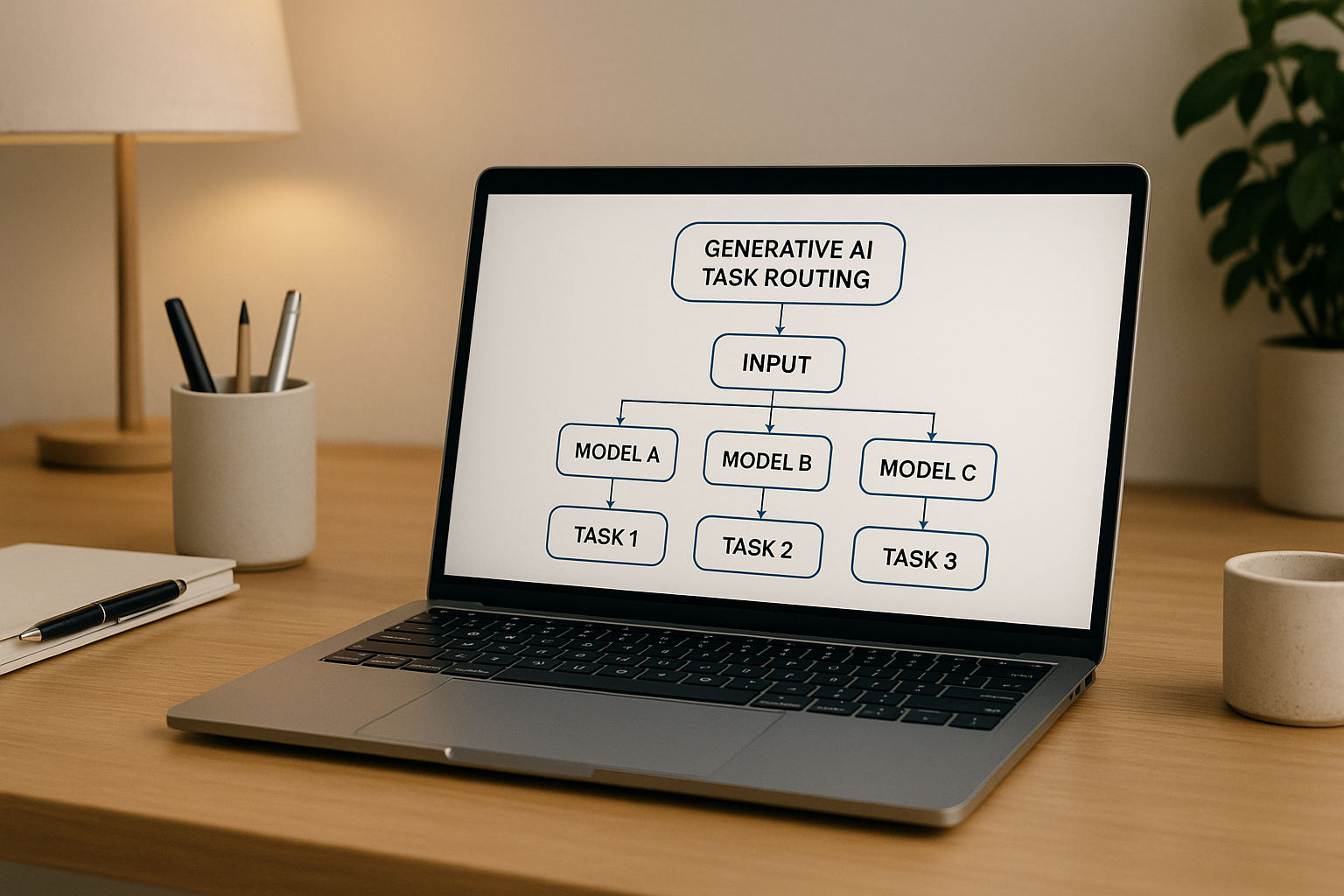

Task-specific generative AI model routing ensures tasks are matched with the best AI models for speed, precision, and cost efficiency. By integrating predefined rules and centralized orchestration, enterprises can simplify workflows, reduce costs, and improve results across multiple AI models. Here's how:

Key Benefits:

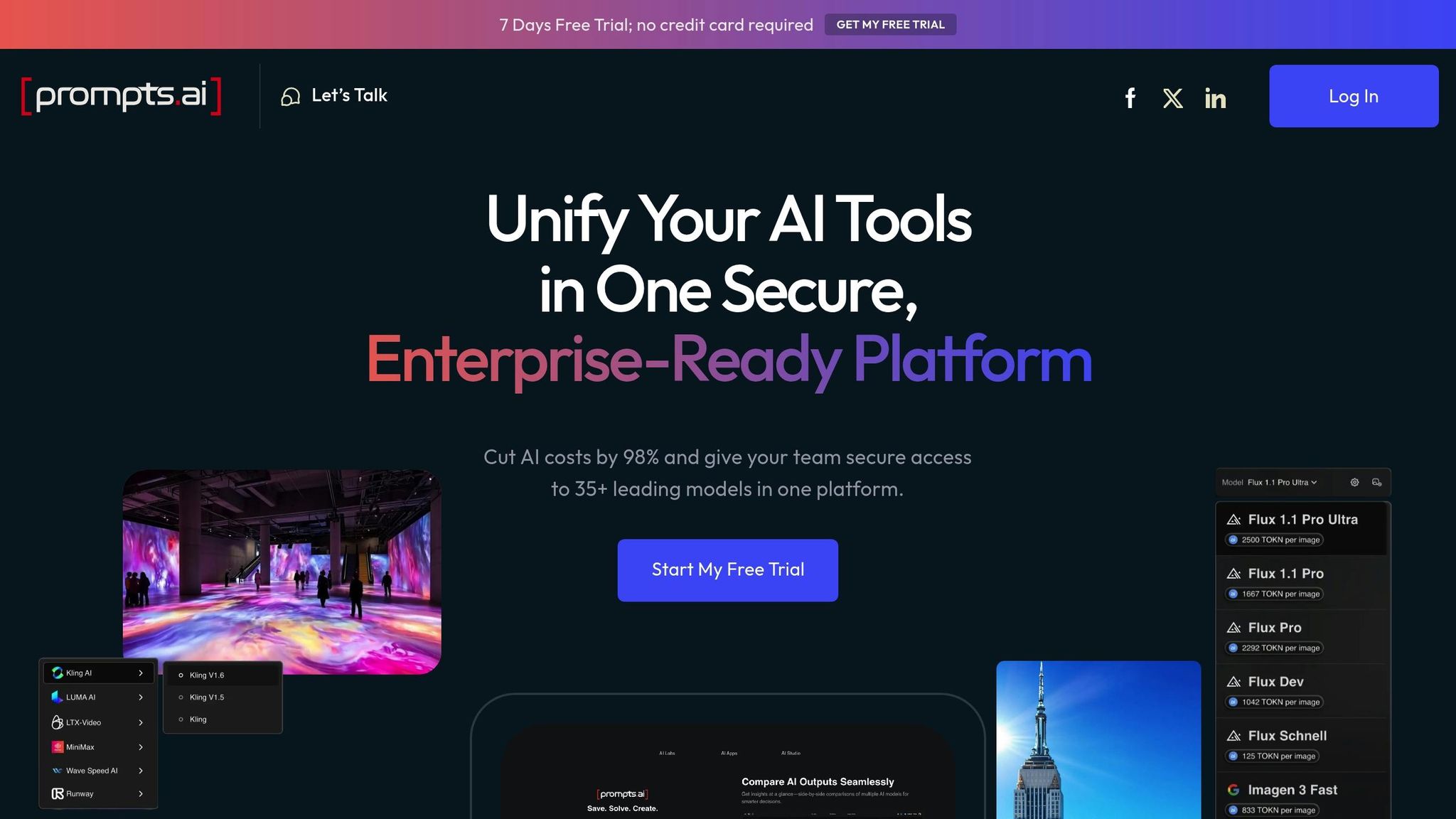

Platforms like Prompts.ai streamline this process by integrating 35+ leading models into a single interface, enabling smarter workflows and better decision-making. Start small, track results, and scale confidently with centralized orchestration.

Laying the groundwork for efficient task-specific routing starts with pinpointing and organizing tasks to ensure optimal model usage. The process begins by cataloging the tasks you aim to address.

Start by reviewing existing workflows to compile a comprehensive list of tasks. Examine areas like customer service, content creation, data analysis, or any other processes where AI could enhance efficiency or outcomes.

Dive deeper by analyzing user intent to differentiate tasks more effectively. For example, requests for summaries, translations, code generation, or creative content can be grouped based on their unique requirements. Each type of request highlights a task that may benefit from specialized routing.
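As a rough illustration of grouping requests by intent, the sketch below tags a request with a task type using keyword patterns. The pattern list, the task labels, and the `identify_task` name are all hypothetical; a production system would more likely use a trained classifier or a small LLM for this step.

```python
import re

# Hypothetical keyword patterns, checked in order; tune these for your own traffic.
INTENT_PATTERNS = {
    "summarization": re.compile(r"\b(summari[sz]e|summary)\b", re.IGNORECASE),
    "translation": re.compile(r"\b(translate|translation)\b", re.IGNORECASE),
    "code_generation": re.compile(r"\b(function|script|code|debug)\b", re.IGNORECASE),
    "creative_content": re.compile(r"\b(poem|story|slogan|tagline)\b", re.IGNORECASE),
}


def identify_task(request: str, default: str = "general") -> str:
    """Return the first task type whose pattern matches the request text."""
    for task, pattern in INTENT_PATTERNS.items():
        if pattern.search(request):
            return task
    return default
```

Requests that match nothing fall back to a `general` bucket, which is a useful place to look for task types you have not cataloged yet.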

Another approach is to map data flows within your system. By tracing how data enters, transforms, and exits, you can identify natural points where AI models could step in to handle specific tasks.

Consider incorporating feedback loops that allow users to specify task types when submitting requests. This input can help refine task identification, especially for less common or complex scenarios.

Once tasks are identified, organize them into categories that align with model strengths. Start with data type classification to create an initial structure. Text-based tasks include activities like content generation, summarization, translation, and sentiment analysis. Visual tasks might involve image analysis, chart interpretation, or document processing. Code-related tasks cover programming, debugging, and creating technical documentation.

As a second layer of organization, complexity levels further refine the classification. Simple tasks, such as keyword extraction or basic formatting, often work well with faster, cost-efficient models. Medium-complexity tasks, like multi-step reasoning or constrained creative writing, may require models with more nuanced capabilities. High-complexity tasks, such as advanced reasoning or multi-modal processing, are best suited to more capable models, including specialized ones with expertise in areas like finance, healthcare, or legal analysis.
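One way to record the two layers described so far (data type and complexity) is a small task profile. This is a minimal sketch: the tier names such as `fast-low-cost` are placeholders, not real model identifiers, and the mapping is an assumption about how you might connect complexity to a model tier.

```python
from dataclasses import dataclass
from enum import Enum


class DataType(Enum):
    TEXT = "text"
    VISUAL = "visual"
    CODE = "code"


class Complexity(Enum):
    SIMPLE = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class TaskProfile:
    name: str
    data_type: DataType
    complexity: Complexity


# Hypothetical tier labels; replace them with the model groups you actually use.
TIER_BY_COMPLEXITY = {
    Complexity.SIMPLE: "fast-low-cost",
    Complexity.MEDIUM: "general-purpose",
    Complexity.HIGH: "specialized",
}


def model_tier(task: TaskProfile) -> str:
    """Map a task's complexity level to a model tier."""
    return TIER_BY_COMPLEXITY[task.complexity]
```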

Processing requirements also play a role in classification. Tasks requiring real-time responses differ significantly from those that can tolerate slower processing for higher accuracy. Similarly, batch processing tasks have distinct needs compared to interactive, conversational workflows.

Finally, security and compliance requirements must be considered. Tasks involving sensitive data - like personally identifiable information, financial records, or regulated content - should be routed to models that meet stringent security and compliance standards.
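A simple gate in front of the router can enforce this. The sketch below assumes two hypothetical model pools, `compliant` and `standard`, and uses two deliberately narrow regular expressions; real detection of regulated data usually needs a dedicated tool and review by your compliance team.

```python
import re

# Illustrative patterns only; they will miss many kinds of sensitive data.
SENSITIVE_PATTERNS = [
    re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),  # email address
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),     # US SSN-style number
]


def contains_sensitive_data(text: str) -> bool:
    """Return True if any pattern matches the text."""
    return any(pattern.search(text) for pattern in SENSITIVE_PATTERNS)


def select_pool(text: str) -> str:
    """Send sensitive requests to the (hypothetical) compliant model pool."""
    return "compliant" if contains_sensitive_data(text) else "standard"
```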

By clearly categorizing tasks, you can align them with business priorities, ensuring resources are focused where they’ll have the greatest impact.

Aligning tasks with business objectives helps determine which ones to tackle first.

To prioritize effectively, create a task priority matrix that evaluates business impact against implementation complexity. Tasks with high impact and low complexity are ideal starting points, while high-impact, high-complexity tasks may need more advanced routing strategies and careful model selection.
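A priority matrix like this can be kept as a few lines of code. In the sketch below, the 1 to 5 scoring scale, the threshold, the quadrant labels, and the example tasks are all assumptions made for illustration.

```python
from typing import List, NamedTuple


class CandidateTask(NamedTuple):
    name: str
    impact: int      # 1 (low) to 5 (high), scored by the business owner
    complexity: int  # 1 (low) to 5 (high), scored by the implementation team


def quadrant(task: CandidateTask, threshold: int = 3) -> str:
    """Place a task in one of three buckets of the impact/complexity matrix."""
    high_impact = task.impact >= threshold
    high_complexity = task.complexity >= threshold
    if high_impact and not high_complexity:
        return "start here"
    if high_impact and high_complexity:
        return "advanced routing"
    return "later"


def rank(tasks: List[CandidateTask]) -> List[CandidateTask]:
    """Order tasks by highest impact first, then lowest complexity."""
    return sorted(tasks, key=lambda t: (-t.impact, t.complexity))


# Hypothetical example tasks.
EXAMPLE_TASKS = [
    CandidateTask("Contract analysis", impact=5, complexity=5),
    CandidateTask("Tag cleanup", impact=2, complexity=1),
    CandidateTask("FAQ replies", impact=5, complexity=2),
]
```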

This structured approach to task identification and classification lays a strong foundation for the next step: selecting the right models for each task. By ensuring tasks are matched with models that suit their requirements, you can streamline workflows and achieve efficient routing.

Once you've outlined and classified your tasks, the next step is to choose the right AI models for each workload. This decision is essential, as it directly affects both performance and costs. Instead of relying on assumptions or brand reputation, a systematic evaluation ensures you pick the best-fit models for your needs.

Choosing the right model involves assessing several factors that align with your business goals. Key considerations include accuracy, response time, cost efficiency, domain expertise, integration requirements, and compliance.

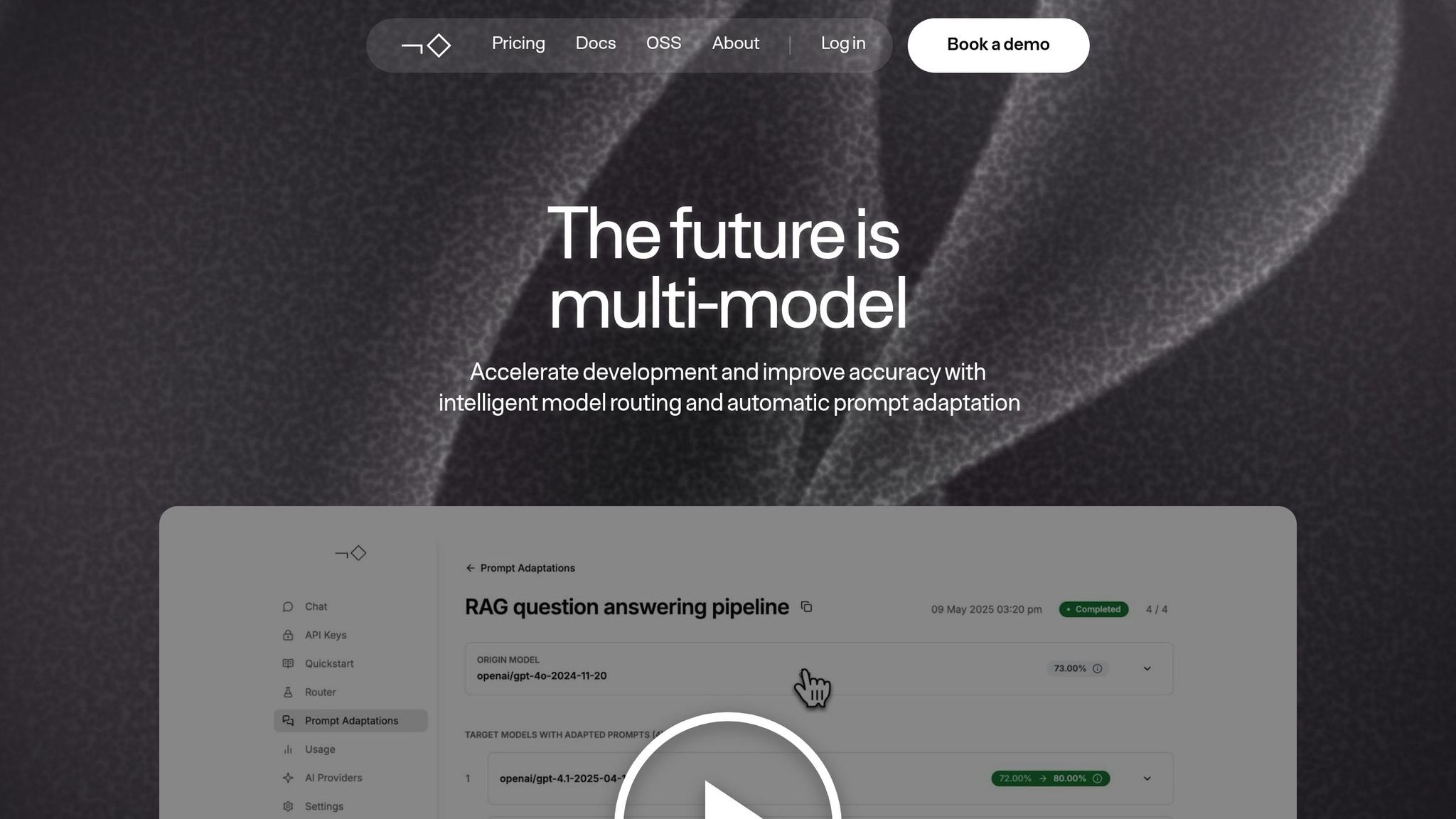

Prompts.ai simplifies this process by providing access to over 35 leading large language models through a single, secure interface. This eliminates the hassle of managing multiple vendor relationships and API integrations. The platform allows you to compare models side-by-side using identical prompts, delivering actionable data based on real-world performance instead of theoretical benchmarks.

The platform’s comparisons highlight strengths and weaknesses for different use cases. For instance, GPT-4 and Claude 3 both excel at deep reasoning and multi-step problem-solving, and Claude 3 also leads in generating creative content. For high-volume processing, GPT-3.5 and Gemini Pro offer a balance of capability and cost efficiency, making them well suited to handling thousands of daily requests.

Prompts.ai also offers real-time FinOps cost controls, giving you visibility into spending patterns and enabling ongoing optimization. Additionally, its multimodal comparison capabilities make it easy to evaluate models for tasks involving images, documents, or mixed media.

These tools provide a clear foundation for selecting and prioritizing the best models for your workflows.

When prioritizing models, it’s essential to balance technical capabilities with business constraints. A performance-cost matrix can help visualize which models provide the most value, allowing you to reserve premium models for complex tasks and use budget-friendly options for simpler ones.

The best model selection strategy balances performance, cost, and operational demands. Regularly revisiting and adjusting your priorities ensures your AI workflows stay aligned with evolving business needs, new model releases, and changing cost structures.

| Model | Reasoning | Creativity | Speed | Cost |
|---|---|---|---|---|
| GPT-4 | Excellent | Very Good | Moderate | High |
| Claude 3 | Excellent | Excellent | Fast | Moderate |
| Gemini Pro | Very Good | Good | Very Fast | Low |
| GPT-3.5 | Good | Good | Very Fast | Very Low |
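The ratings in the table above can be turned into a basic performance-cost lookup: pick the cheapest model that clears a minimum quality bar. This is only a sketch. The numeric ranks assigned to each label are an assumption, and the speed column is left out to keep the example short.

```python
from typing import Optional

# Assumed ordinal ranks for the table's qualitative labels.
QUALITY = {"Good": 1, "Very Good": 2, "Excellent": 3}
COST = {"Very Low": 1, "Low": 2, "Moderate": 3, "High": 4}

# Ratings copied from the comparison table.
MODELS = {
    "GPT-4": {"reasoning": "Excellent", "creativity": "Very Good", "cost": "High"},
    "Claude 3": {"reasoning": "Excellent", "creativity": "Excellent", "cost": "Moderate"},
    "Gemini Pro": {"reasoning": "Very Good", "creativity": "Good", "cost": "Low"},
    "GPT-3.5": {"reasoning": "Good", "creativity": "Good", "cost": "Very Low"},
}


def cheapest_model(dimension: str, minimum: str) -> Optional[str]:
    """Return the lowest-cost model rated at least `minimum` on `dimension`."""
    floor = QUALITY[minimum]
    candidates = [
        name for name, ratings in MODELS.items()
        if QUALITY[ratings[dimension]] >= floor
    ]
    if not candidates:
        return None
    return min(candidates, key=lambda name: COST[MODELS[name]["cost"]])
```

For example, `cheapest_model("reasoning", "Very Good")` selects Gemini Pro, since it is the least expensive model in the table that meets that bar.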

With your models selected and prioritized, the next step is integrating them into your workflow using tailored routing logic to maximize efficiency and performance.

Bringing the selected models together into one streamlined system is where routing pays off. By automating task routing, you can turn a manual, multi-model approach into an efficient, hands-off workflow.

Creating effective routing logic involves combining straightforward rules with more adaptive algorithms to handle tasks dynamically and in real time.
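The rule-based half of that logic can be as simple as an ordered list of predicates where the first match wins. The sketch below is one possible shape; the `Task` fields and model names such as `premium-model` are placeholders rather than real identifiers.

```python
from typing import Callable, NamedTuple


class Task(NamedTuple):
    kind: str        # e.g. "text", "code", "visual"
    complexity: str  # "simple", "medium", or "high"
    realtime: bool   # True when the caller needs a low-latency answer


class Rule(NamedTuple):
    matches: Callable[[Task], bool]
    model: str


# Ordered rules: earlier entries take precedence over later ones.
RULES = [
    Rule(lambda t: t.complexity == "high", "premium-model"),
    Rule(lambda t: t.realtime, "fast-model"),
    Rule(lambda t: t.kind == "code", "code-model"),
]
DEFAULT_MODEL = "general-model"


def route(task: Task) -> str:
    """Return the model named by the first matching rule, or the default."""
    for rule in RULES:
        if rule.matches(task):
            return rule.model
    return DEFAULT_MODEL
```

Keeping the rules in a plain list makes precedence explicit and easy to audit, which matters once administrators start changing them.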

To ensure uninterrupted workflows, your routing logic should include fallback mechanisms. If a primary model becomes unavailable, tasks can automatically shift to a secondary option without delays or disruptions.
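A fallback chain might look like the following sketch. The `ModelUnavailable` exception and the two stub functions are hypothetical stand-ins for real client calls, which would raise their own provider-specific errors.

```python
from typing import Callable, List, Sequence, Tuple


class ModelUnavailable(Exception):
    """Raised by a (hypothetical) model client when the model cannot respond."""


def call_with_fallback(
    prompt: str,
    models: Sequence[Tuple[str, Callable[[str], str]]],
) -> Tuple[str, str]:
    """Try each model in order and return (model_name, response) from the first success."""
    errors: List[str] = []
    for name, call in models:
        try:
            return name, call(prompt)
        except ModelUnavailable as exc:
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))


# Stub clients used only to demonstrate the behavior.
def flaky_primary(prompt: str) -> str:
    raise ModelUnavailable("timeout")


def stable_secondary(prompt: str) -> str:
    return "ok: " + prompt
```

Calling `call_with_fallback("hi", [("primary", flaky_primary), ("secondary", stable_secondary)])` returns the secondary model's answer along with its name, so the caller can log which model actually served the request.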

The key to successful integration is making the routing system invisible to users while giving administrators full control and oversight.

It’s also essential to incorporate real-time feedback loops. By capturing performance data and user satisfaction metrics, you can refine your routing logic based on actual outcomes, ensuring continuous improvement.
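One lightweight way to feed outcomes back into routing is an exponential moving average of ratings per task type and model. This is a sketch under stated assumptions: ratings are normalized to the range 0.0 to 1.0, and the `FeedbackRouter` class, its smoothing factor, and its starting score are illustrative choices.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


class FeedbackRouter:
    """Prefer the model with the best running rating for each task type."""

    def __init__(self, models: Iterable[str], alpha: float = 0.2, initial: float = 0.5):
        self.models = list(models)
        self.alpha = alpha  # weight given to the newest rating
        self.scores: Dict[Tuple[str, str], float] = defaultdict(lambda: initial)

    def record(self, task_type: str, model: str, rating: float) -> None:
        """Blend a new rating (0.0 to 1.0) into the running score."""
        key = (task_type, model)
        self.scores[key] = (1 - self.alpha) * self.scores[key] + self.alpha * rating

    def best_model(self, task_type: str) -> str:
        """Return the highest-scoring model; ties go to the earliest listed model."""
        return max(self.models, key=lambda m: self.scores[(task_type, m)])
```

Because this always picks the current leader, a real deployment would usually add some exploration so that newer or recently improved models still receive traffic.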

For enterprise environments, routing logic must be designed with strict security controls and compliance measures to safeguard sensitive data and meet regulatory requirements.

After deployment, it's essential to keep a close watch on your system to ensure it maintains peak performance. This phase focuses on monitoring, refining workflows, and scaling operations to meet growing demands while delivering measurable results.

Monitoring isn't just about making sure systems stay online; it’s about understanding how each model performs in real-world tasks and the impact on your bottom line. Key metrics like response times for customer-facing applications and accuracy rates for analytical tasks reveal whether the models are meeting your needs. Platforms such as Prompts.ai offer real-time dashboards that track these metrics across more than 35 models, giving you a clear view of performance.

Cost tracking is equally critical. By analyzing expenses at both the task and model levels, you can make smarter routing decisions. For example, identifying which tasks consume the most resources allows you to adjust workflows or budgets accordingly. Automated alerts can also help you stay ahead of potential issues. Notifications for cost overruns, slower response times, or rising error rates enable you to fix problems before they affect users.
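The sketch below shows one way to track spend at the task and model level and raise a simple budget alert. The `CostTracker` class, the 80% warning ratio, and the alert wording are assumptions; a managed platform's dashboards would replace most of this.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


class CostTracker:
    """Accumulate spend per (task type, model) and flag budget pressure."""

    def __init__(self, budget_usd: float):
        self.budget_usd = budget_usd
        self.spend: Dict[Tuple[str, str], float] = defaultdict(float)

    def record(self, task_type: str, model: str, cost_usd: float) -> None:
        self.spend[(task_type, model)] += cost_usd

    def total(self) -> float:
        return sum(self.spend.values())

    def by_task(self) -> Dict[str, float]:
        """Roll spend up to the task level to find the most expensive tasks."""
        totals: Dict[str, float] = defaultdict(float)
        for (task_type, _model), cost in self.spend.items():
            totals[task_type] += cost
        return dict(totals)

    def alerts(self, warn_ratio: float = 0.8) -> List[str]:
        """Return a warning near the budget and an overrun message past it."""
        total = self.total()
        if total > self.budget_usd:
            return ["budget exceeded"]
        if total >= warn_ratio * self.budget_usd:
            return ["approaching budget"]
        return []
```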

Once you’ve established robust monitoring, you can shift your focus to refining workflows based on actual data, rather than assumptions. This involves analyzing how tasks flow through the system and identifying areas for improvement. For instance, adding a review stage or merging steps might reduce delays and enhance output quality.

Optimization often hinges on the smooth handoff between models. Take a market research example: one model might quickly gather initial data, while another performs deeper analysis. Adjusting how these models share information - such as improving the format or content of handoffs - can boost overall efficiency and reduce resource use.

User feedback is another valuable tool for optimization. When users rate outputs or request revisions, integrating this data into your routing decisions helps the system adapt and better align with user expectations. A/B testing different routing strategies can further refine workflows, offering data-backed insights to guide your decisions.
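For A/B tests of routing strategies, each user should see the same variant on every request. A common approach, sketched here with hypothetical variant names, is to hash the user and experiment identifiers into a bucket.

```python
import hashlib
from typing import Sequence


def assign_variant(
    user_id: str,
    experiment: str,
    variants: Sequence[str] = ("control", "candidate"),
) -> str:
    """Deterministically map a user to one routing strategy for an experiment."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]
```

Including the experiment name in the hash keeps assignments independent across experiments, so a user in the control group of one test is not automatically in the control group of the next.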

With optimized workflows in place, scaling becomes the next priority. Expanding operations requires careful planning to maintain quality while meeting increased demand and addressing new challenges.

Start small by rolling out to teams with well-defined, repetitive tasks, such as customer service or content creation. Once these teams see tangible improvements, you can expand into areas with more complex requirements, such as compliance or security-sensitive tasks.

User onboarding plays a critical role during this phase. Teams need to understand not just how to use the system but also the logic behind its routing decisions. Structured training programs - like those available through Prompts.ai - can help users get up to speed quickly, ensuring a smooth adoption process.

As your system scales, governance frameworks need to evolve. Define clear policies for modifying routing rules, evaluating new models, and handling unexpected results. Implement access controls that limit users to only the tools and models relevant to their roles, following the principle of least privilege.
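Least privilege can be expressed as an allow-list per role with deny-by-default behavior. The roles and model names below are hypothetical; in practice this mapping would come from your identity provider or the platform's own access controls.

```python
# Hypothetical role-to-model allow-list.
ROLE_MODELS = {
    "support_agent": frozenset({"fast-model"}),
    "analyst": frozenset({"fast-model", "general-model"}),
    "admin": frozenset({"fast-model", "general-model", "premium-model"}),
}


def authorize(role: str, model: str) -> bool:
    """Allow a request only if the role is known and explicitly lists the model."""
    return model in ROLE_MODELS.get(role, frozenset())
```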

Technical scalability is equally important. Your infrastructure should handle increased workloads without compromising performance. This might involve using load balancers to distribute traffic or setting up regional deployments to minimize latency. Cost management also becomes more complex as usage grows. Different teams may prioritize speed, accuracy, or cost efficiency differently, so your system should be flexible enough to accommodate these variations while staying within budget.

Benchmarking performance is a final, crucial step. Establish baseline metrics for tasks and departments so you can monitor changes as the system scales. If performance dips, you can quickly address the issue by tweaking routing rules or improving preprocessing steps.
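A baseline check can be sketched as a comparison between stored baseline scores and recent samples. The metric (an accuracy-style score where higher is better), the 5% tolerance, and the `regressions` function name are assumptions for illustration.

```python
from statistics import mean
from typing import Dict, List, Tuple


def regressions(
    baseline: Dict[str, float],
    recent: Dict[str, List[float]],
    tolerance: float = 0.05,
) -> Dict[str, Tuple[float, float]]:
    """Return tasks whose recent average fell more than `tolerance` below baseline."""
    flagged: Dict[str, Tuple[float, float]] = {}
    for task, base in baseline.items():
        samples = recent.get(task)
        if not samples:
            continue  # no recent data for this task, so nothing to compare
        current = mean(samples)
        if current < base * (1 - tolerance):
            flagged[task] = (base, current)
    return flagged
```

Tasks returned by this check are the ones to investigate first, for example by reviewing recent routing rule changes or preprocessing steps.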

Scaling isn’t just about handling more tasks; it’s about building a system that gets smarter and more effective over time. Each new use case adds to the system’s capabilities, setting the stage for broader AI adoption across your organization.

Task-specific generative AI model routing simplifies the complexity of multi-step workflows, moving away from generic solutions toward finely tuned systems that deliver measurable outcomes.

This five-step process lays the groundwork for smarter AI operations. By starting with task identification and classification, companies gain a clear understanding of their actual needs instead of relying on assumptions. The selection and prioritization phase ensures resources are directed to models that perform best for specific tasks, while effective routing logic allows for smooth transitions between AI functions.

This method not only optimizes resource allocation but also significantly cuts costs. By matching tasks to appropriately scaled models instead of defaulting to high-cost options, organizations can achieve notable savings. Over time, the monitoring and scaling phase ensures these systems adapt to evolving business demands, compounding their benefits.

Additionally, task-specific routing addresses accuracy issues that often hinder AI systems. Instead of overburdening a single model with everything from basic data tasks to complex analyses, specialized routing improves output quality and builds greater confidence in AI results.

Centralized orchestration takes these principles further, streamlining operations and enhancing efficiency.

Managing multiple AI models for various tasks can quickly become chaotic without proper orchestration. Unified platforms bring order, ensuring streamlined operations and compliance with governance standards. This is especially critical for enterprises that must adhere to strict data governance and regulatory requirements.

Prompts.ai exemplifies this centralized approach by integrating over 35 leading models into one secure platform. Companies can cut AI costs by up to 98% while retaining access to a wide range of capabilities, including GPT-4, Claude, LLaMA, and Gemini. The platform’s real-time FinOps controls provide the transparency needed for sustainable scaling.

Centralized orchestration also simplifies governance. By channeling all AI interactions through a single system, enterprises can transform scattered experiments into structured, auditable processes that align with organizational standards.

With centralized systems in place, companies can confidently move forward with implementation and scaling.

Start small and expand strategically. Select a manageable use case, track measurable improvements, and use those results to build momentum for broader adoption.

Invest in comprehensive training to ensure teams understand both the technical features and the strategic rationale behind routing decisions. Platforms like Prompts.ai offer enterprise training programs and prompt engineer certification to fast-track adoption and develop internal expertise.

As you plan your implementation, think long-term. Your routing system should be flexible enough to integrate new models, adapt to changing business needs, and support a growing user base without requiring major overhauls. Prioritize solutions that balance adaptability with the governance and security standards your organization demands.

Task-specific AI model routing helps cut costs and boost accuracy by pairing each task in a workflow with the AI model best suited for the job. This method avoids relying on overly complex or resource-intensive models for simpler tasks, saving both time and money.

By fine-tuning model selection for each step, this approach reduces errors, simplifies processes, and improves precision. The result? Faster task completion, improved oversight, and meaningful cost reductions - freeing teams to focus on delivering top-notch results with greater efficiency.

When choosing AI models for specific tasks, several factors should guide your decision, including task complexity, data quality, and specific domain needs. For instance, tasks requiring advanced reasoning or multi-step solutions often benefit from more sophisticated models, while straightforward tasks can perform effectively with simpler ones.

It's also crucial to evaluate the quality and availability of your data, as this directly influences the model's performance and adaptability. Ensure the model aligns with your task's objectives, focusing on accuracy and efficiency to meet performance expectations. Selecting the right model helps streamline workflows and achieve better results.

To safeguard sensitive information and maintain compliance, businesses should draw on established resources such as the NIST AI Risk Management Framework or MITRE ATLAS, which provide structured guidance for deploying AI securely. Essential practices include encrypting data both at rest and in transit, anonymizing private information, and applying stringent access controls to restrict unauthorized access.
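As a small illustration of the anonymization step, the sketch below replaces two kinds of identifiers with placeholders before a prompt leaves your environment. The patterns and the `redact` function are illustrative assumptions; they cover only simple cases and are not a substitute for a vetted data-loss-prevention tool.

```python
import re

# Narrow, illustrative patterns; the email pattern can also swallow a trailing period.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def redact(text: str) -> str:
    """Replace email addresses and US SSN-style numbers with placeholders."""
    return SSN.sub("[SSN]", EMAIL.sub("[EMAIL]", text))
```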

Ongoing vigilance is equally important. Regularly monitoring for data drift, automating policy enforcement, and retraining models on a consistent basis help ensure that security protocols remain effective over time. Furthermore, establishing a well-prepared incident response plan allows organizations to respond swiftly to breaches or anomalies, minimizing risks and upholding compliance standards. These measures collectively help protect valuable data and maintain trust.