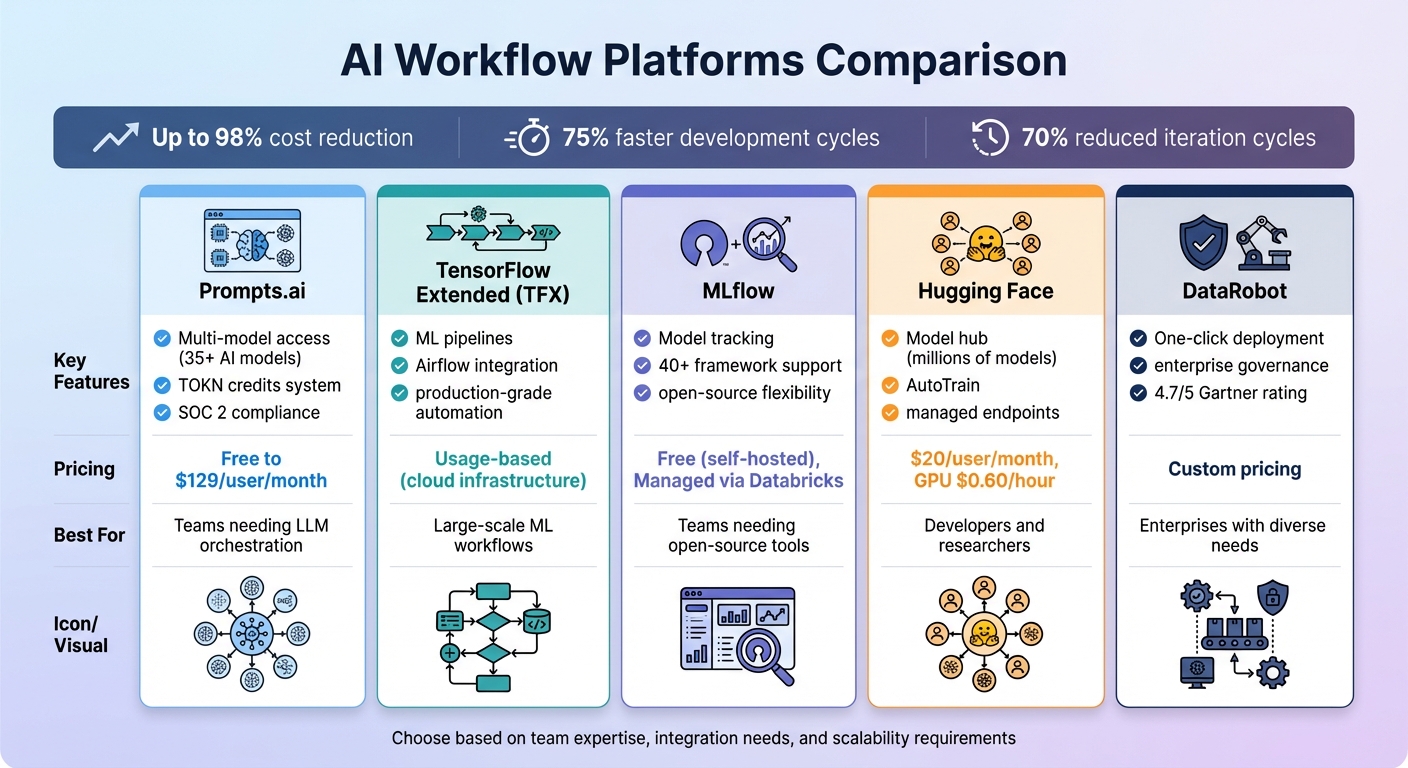

Businesses are overwhelmed by disconnected AI tools, rising costs, and security risks. AI workflow platforms address this by unifying tools, automating tasks, and optimizing processes. With reported cost reductions of up to 98% and development time cut by as much as 75%, these platforms streamline operations while maintaining security and flexibility. Below are five standout platforms for managing AI workflows:

Quick Comparison:

| Platform | Key Features | Cost Model | Best For |
|---|---|---|---|
| Prompts.ai | Multi-model access, TOKN credits, SOC 2 Type 2 audit in progress | Free to $129/user/month | Teams needing LLM orchestration |
| TFX | ML pipelines, integration with Airflow and Kubeflow | Open source; pay for underlying cloud infrastructure | Large-scale ML workflows |
| MLflow | Model tracking, open-source flexibility | Free (self-hosted); managed via Databricks | Teams needing open-source tools |
| Hugging Face | Model hub, AutoTrain, managed endpoints | Team plan $20/user/month; GPUs $0.60/hour | Developers and researchers |
| DataRobot | One-click deployment, enterprise tools | Custom pricing | Enterprises with diverse needs |

Each platform has different strengths, from cost savings to scalability. The best fit depends on your priority: LLM orchestration, production ML pipelines, open-source tooling, community models, or enterprise governance.

AI Workflow Platforms Comparison: Features, Pricing, and Best Use Cases

Prompts.ai brings together access to over 35 top AI models - including GPT, Claude, LLaMA, and Gemini - into one secure and streamlined interface. Instead of juggling multiple subscriptions and logins, teams can compare outputs from various large language models side by side, making it easier to identify the best fit for specific tasks. This all-in-one solution eliminates the fragmentation often caused by using too many tools across departments, paving the way for seamless automation, scalability, and collaboration.

Because every model sits behind one interface, users avoid managing separate accounts or API integrations. A single prompt can be sent to several models at once, so teams can compare quality, speed, and relevance in real time. Business plans add Interoperable Workflows, which let organizations build scalable, repeatable processes. For instance, one model can handle customer inquiries while another focuses on data analysis, all within the same ecosystem.
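Side-by-side comparison amounts to fanning one prompt out to several models concurrently and collecting each answer with its latency. The sketch below illustrates that pattern in plain Python and is not Prompts.ai's API. The model names and the `fake_model` coroutine are hypothetical stand-ins for real provider calls.

```python
import asyncio
import time
from dataclasses import dataclass


@dataclass
class ModelResult:
    model: str
    output: str
    latency_s: float


async def fake_model(name: str, prompt: str, delay: float) -> str:
    # Hypothetical stand-in for a provider API call; sleeps to simulate latency.
    await asyncio.sleep(delay)
    return f"[{name}] answer to: {prompt}"


async def timed_call(name: str, prompt: str, delay: float) -> ModelResult:
    start = time.perf_counter()
    output = await fake_model(name, prompt, delay)
    return ModelResult(name, output, time.perf_counter() - start)


async def compare(prompt: str, models: dict[str, float]) -> list[ModelResult]:
    # Send the same prompt to every model concurrently and wait for all replies.
    results = await asyncio.gather(
        *(timed_call(name, prompt, delay) for name, delay in models.items())
    )
    # Fastest first, so latency differences are easy to read.
    return sorted(results, key=lambda r: r.latency_s)


if __name__ == "__main__":
    # Hypothetical model names mapped to simulated latencies in seconds.
    models = {"model-a": 0.3, "model-b": 0.1, "model-c": 0.2}
    for r in asyncio.run(compare("Summarize our Q3 churn drivers.", models)):
        print(f"{r.model:8s} {r.latency_s:.2f}s  {r.output}")
```

Running the calls concurrently means the total wait is roughly the slowest model's latency, not the sum of all of them.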

Prompts.ai turns manual, one-off AI tasks into automated workflows that run around the clock and connect with tools such as Slack, Gmail, and Trello. For example, Steven Simmons cut 3D rendering and proposal writing that once took weeks down to a single day. Similarly, architect Ar. June Chow uses the platform’s side-by-side LLM comparison feature to experiment with creative design concepts and work through complex projects.

The platform’s Business plans include unlimited workspaces and collaboration options, making them well suited to large teams. Features like TOKN Pooling and Storage Pooling let teams share resources, while centralized governance provides visibility and accountability for all AI activity. As of June 19, 2025, Prompts.ai had begun its SOC 2 Type 2 audit process. It also incorporates HIPAA and GDPR compliance frameworks to address enterprise security and data protection needs. According to Prompts.ai, teams can deploy new models, add members, and launch workflows in under 10 minutes.

Prompts.ai’s TOKN credit system transforms fixed monthly software expenses into flexible, usage-based spending, helping users optimize costs. The platform claims to reduce AI-related expenses by up to 98% by consolidating over 35 separate tools into one. Pricing options range from a free Pay As You Go plan with limited credits to the Business Elite plan at $129 per member per month, which includes 1,000,000 TOKN credits and advanced creative tools. Frank Buscemi, CEO and CCO, highlights how the platform has streamlined content creation and automated strategy workflows, allowing his team to focus on high-level creative projects instead of repetitive tasks.
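To make pooled, usage-based spending concrete, here is a minimal sketch of a shared credit pool that members draw from as they run prompts. The `CreditPool` class and the per-token rates are illustrative assumptions, not Prompts.ai's actual TOKN accounting. The 1,000,000 starting balance simply mirrors the Business Elite allocation quoted above.

```python
from dataclasses import dataclass, field


@dataclass
class CreditPool:
    """Hypothetical shared credit pool that several team members draw from."""
    balance: int
    usage_by_member: dict[str, int] = field(default_factory=dict)

    def charge(self, member: str, tokens: int, credits_per_token: int = 1) -> None:
        cost = tokens * credits_per_token
        if cost > self.balance:
            # Reject the request instead of letting the pool go negative.
            raise ValueError(f"insufficient credits: need {cost}, have {self.balance}")
        self.balance -= cost
        # Per-member tally keeps spending visible even though credits are shared.
        self.usage_by_member[member] = self.usage_by_member.get(member, 0) + cost


pool = CreditPool(balance=1_000_000)  # illustrative pool size
pool.charge("alice", tokens=12_000)
pool.charge("bob", tokens=3_500, credits_per_token=2)  # e.g. a pricier model
print(pool.balance, pool.usage_by_member)  # 981000 {'alice': 12000, 'bob': 7000}
```

Pooling means one heavy user does not strand unused credits in other members' accounts. The per-member tally preserves accountability.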

TensorFlow Extended (TFX) is an end-to-end platform for deploying production-grade machine learning (ML) pipelines, covering everything from data validation to model serving. Although it is built around TensorFlow, TFX can run steps that use other frameworks, such as PyTorch, scikit-learn, and XGBoost, by packaging them in containers. This lets teams manage mixed-framework projects, especially in environments such as Vertex AI, and automate pipelines across diverse setups.

TFX simplifies the entire ML lifecycle with its adaptable architecture. It automates workflows using prebuilt components like ExampleGen, StatisticsGen, Transform, Trainer, Evaluator, and Pusher. These components integrate with orchestrators such as Apache Airflow, Kubeflow Pipelines, and Apache Beam, making it easy to embed TFX into enterprise environments. For instance, in October 2023, Spotify leveraged TFX and TF-Agents to simulate listening behaviors for reinforcement learning, enhancing their music recommendation systems based on user interactions. Similarly, Vodafone adopted TensorFlow Data Validation (TFDV) in March 2023 to oversee data governance across its global telecommunications operations.
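A TFX pipeline is a directed acyclic graph: each component consumes artifacts produced upstream, and the orchestrator runs components in dependency order. The sketch below shows only that ordering idea, using Python's standard `graphlib` module (Python 3.9+). It is not TFX's API. The dependency edges are a simplified assumption; a real pipeline also includes components such as SchemaGen.

```python
from graphlib import TopologicalSorter

# Simplified, assumed dependencies: each component maps to the set of
# components whose outputs it consumes.
pipeline = {
    "ExampleGen": set(),
    "StatisticsGen": {"ExampleGen"},
    "Transform": {"ExampleGen"},
    "Trainer": {"Transform"},
    "Evaluator": {"ExampleGen", "Trainer"},
    "Pusher": {"Trainer", "Evaluator"},
}


def execution_order(dag: dict[str, set[str]]) -> list[str]:
    # static_order() yields each node only after all of its predecessors
    # and raises graphlib.CycleError if the graph contains a cycle.
    return list(TopologicalSorter(dag).static_order())


order = execution_order(pipeline)
print(order)
```

Components with no dependency between them (here StatisticsGen and Transform) can run in parallel. Orchestrators such as Airflow or Kubeflow Pipelines exploit exactly this.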

"When workflows are defined as code, they become more maintainable, versionable, testable, and collaborative." - Google Developers

TFX is built to scale, utilizing Apache Beam for distributed data processing across platforms like Google Cloud Dataflow, Apache Flink, and Apache Spark. It also integrates with enterprise tools such as Vertex AI Pipelines and Vertex AI Training, enabling teams to process massive datasets and train models across multiple nodes with GPU acceleration. The Kubeflow ecosystem, which frequently powers TFX pipelines, has seen significant adoption, with over 258 million PyPI downloads and 33,100 GitHub stars. Additionally, ML Metadata (MLMD) tracks model lineage and pipeline execution histories, automatically logging artifacts and parameters to ensure transparency and traceability. This scalability makes TFX a powerful tool for unifying complex ML workflows into an efficient system.

TFX helps organizations manage costs by using caching to avoid re-executing redundant components, which saves compute resources during iterative training. For teams running on Google Cloud, billing data can be exported to BigQuery, allowing detailed cost analysis of individual pipeline runs. The platform's modular design also provides flexibility: teams can use standalone libraries like TFDV or TFT without deploying the entire TFX system, tailoring the platform to their specific needs.
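Caching works by recognizing that a component would run again with the same inputs and reusing the earlier output. In TFX itself, it is switched on through the pipeline's `enable_cache` option. The sketch below imitates the behaviour with a fingerprint of the component name and its inputs. The hashing scheme and the `run_component` helper are hypothetical, not how TFX or ML Metadata implement caching internally.

```python
import hashlib
import json
from typing import Any, Callable

_cache: dict[str, Any] = {}
executions: list[str] = []  # records which components actually ran


def fingerprint(name: str, inputs: dict[str, Any]) -> str:
    # Stable key: component name plus inputs serialized with sorted keys.
    payload = json.dumps({"component": name, "inputs": inputs}, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()


def run_component(name: str, fn: Callable[..., Any], **inputs: Any) -> Any:
    key = fingerprint(name, inputs)
    if key in _cache:
        return _cache[key]  # cache hit: skip re-execution
    executions.append(name)
    result = fn(**inputs)
    _cache[key] = result
    return result


def compute_stats(rows: list[int]) -> dict[str, float]:
    return {"count": len(rows), "mean": sum(rows) / len(rows)}


data = [3, 5, 7]
run_component("StatisticsGen", compute_stats, rows=data)
run_component("StatisticsGen", compute_stats, rows=data)        # cached, not re-run
run_component("StatisticsGen", compute_stats, rows=data + [9])  # new input, runs
print(executions)  # ['StatisticsGen', 'StatisticsGen']
```

This sketch requires JSON-serializable inputs. Real systems fingerprint artifact URIs and component code rather than raw data.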

MLflow is a versatile, open-source tool that integrates with more than 40 AI frameworks, including PyTorch, TensorFlow, scikit-learn, OpenAI, Hugging Face, and LangChain. As a Linux Foundation project, it lets teams run workflows locally, on-premises, or on any major cloud platform. With more than 20,000 GitHub stars and over 50 million monthly downloads, MLflow has become a widely adopted solution for managing AI workflows. Its broad integration support underpins its more advanced features.

MLflow 3 simplifies model tracking with its unified model URI (models:/<model_id>), supporting lifecycle management across various stages. Developers can use Python, REST, R, and Java APIs to log parameters, metrics, and artifacts from almost any environment. For Generative AI (GenAI) workflows, MLflow integrates with over 30 tools, including Anthropic, Gemini, Bedrock, LlamaIndex, and CrewAI. Additionally, its tracing capabilities align with OpenTelemetry, ensuring AI observability data fits smoothly into existing enterprise monitoring systems.

MLflow reduces manual bookkeeping through automation. `mlflow.autolog()` records parameters, metrics, and models from supported libraries without explicit logging calls. The Model Registry manages model versions as they move from staging to production; recent releases favor version aliases over the older fixed Staging and Production stages. For GenAI applications, MLflow traces the entire execution, covering prompts, retrievals, and tool calls, which makes workflow failures easier to pinpoint and debug.
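Promotion in a model registry boils down to moving a named pointer from one version to another. For example, a `champion` alias moves to a newly validated version. The in-memory sketch below illustrates that idea. The `ModelRegistry` class and the accuracy rule are hypothetical. In MLflow itself, aliases are managed through `MlflowClient`, for example with `set_registered_model_alias`.

```python
from dataclasses import dataclass, field


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry: numbered versions plus movable aliases."""
    versions: dict[int, dict[str, float]] = field(default_factory=dict)
    aliases: dict[str, int] = field(default_factory=dict)

    def register(self, metrics: dict[str, float]) -> int:
        version = len(self.versions) + 1
        self.versions[version] = metrics
        return version

    def promote_if_better(self, version: int, alias: str = "champion",
                          metric: str = "accuracy", min_gain: float = 0.0) -> bool:
        current = self.aliases.get(alias)
        new_score = self.versions[version][metric]
        if current is None or new_score > self.versions[current][metric] + min_gain:
            self.aliases[alias] = version  # repoint the alias; old versions stay intact
            return True
        return False


registry = ModelRegistry()
v1 = registry.register({"accuracy": 0.91})
registry.promote_if_better(v1)
v2 = registry.register({"accuracy": 0.89})
registry.promote_if_better(v2)  # worse: alias stays on v1
v3 = registry.register({"accuracy": 0.94})
registry.promote_if_better(v3)  # better: alias moves to v3
print(registry.aliases)  # {'champion': 3}
```

Because serving code loads whatever the alias points to, rollback is just repointing the alias to an earlier version.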

MLflow supports scalability by separating two stores. The Backend Store uses SQL databases like PostgreSQL or MySQL for metadata. The Artifact Store manages large files through services like Amazon S3, Azure Blob Storage, or Google Cloud Storage. For very large model files, multipart uploads split artifacts into 100 MB chunks that bypass the tracking server, which improves upload speed and efficiency. Teams can also run Tracking Server instances in artifacts-only mode. SQL-like filter queries such as `metrics.accuracy > 0.95` quickly locate high-performing models.
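The filter expression `metrics.accuracy > 0.95` selects runs whose logged metric passes a threshold. In MLflow, such a string is passed as `filter_string` to `mlflow.search_runs`. The stdlib sketch below only illustrates the same selection over hypothetical in-memory run records. It does not reproduce MLflow's query parser, and `filter_runs` is a made-up helper.

```python
import operator
from typing import Any, Callable

# Hypothetical run records, shaped loosely like tracked runs.
runs: list[dict[str, Any]] = [
    {"run_id": "a1", "params": {"lr": 0.01}, "metrics": {"accuracy": 0.93}},
    {"run_id": "b2", "params": {"lr": 0.001}, "metrics": {"accuracy": 0.97}},
    {"run_id": "c3", "params": {"lr": 0.1}, "metrics": {"loss": 0.40}},
    {"run_id": "d4", "params": {"lr": 0.005}, "metrics": {"accuracy": 0.96}},
]

OPS: dict[str, Callable[[float, float], bool]] = {
    ">": operator.gt, ">=": operator.ge, "<": operator.lt,
    "<=": operator.le, "=": operator.eq,
}


def filter_runs(records: list[dict[str, Any]], metric: str, op: str,
                threshold: float) -> list[dict[str, Any]]:
    compare = OPS[op]
    # Runs that never logged the metric are excluded rather than treated as 0.
    hits = [r for r in records
            if metric in r["metrics"] and compare(r["metrics"][metric], threshold)]
    # Highest metric value first.
    return sorted(hits, key=lambda r: r["metrics"][metric], reverse=True)


top = filter_runs(runs, "accuracy", ">", 0.95)
print([r["run_id"] for r in top])  # ['b2', 'd4']
```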

MLflow is free under the Apache-2.0 license for self-hosted deployments. Teams that prefer a managed service can start with a free version and move to enterprise-grade options through Databricks. For large models, enabling `MLFLOW_ENABLE_PROXY_MULTIPART_UPLOAD` lets clients upload directly to cloud storage, which reduces tracking-server load and compute costs. By combining unified model management with automation and scalable infrastructure, MLflow addresses the key challenges of modern AI workflows.
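The 100 MB chunking mentioned earlier is simple arithmetic. A file of S bytes is split into ⌈S / chunk⌉ parts, and the last part may be smaller. The helper below computes the byte range of each part. It is illustrative only, not MLflow code, and it assumes "100 MB" means 100 × 1024 × 1024 bytes.

```python
CHUNK_SIZE = 100 * 1024 * 1024  # assumed: 100 MB interpreted as MiB


def part_ranges(file_size: int, chunk_size: int = CHUNK_SIZE) -> list[tuple[int, int]]:
    """Return (start, end_exclusive) byte ranges for each upload part."""
    if file_size <= 0:
        return []
    n_parts = (file_size + chunk_size - 1) // chunk_size  # exact integer ceiling division
    return [(i * chunk_size, min((i + 1) * chunk_size, file_size))
            for i in range(n_parts)]


size = 2_500 * 1024 * 1024  # a hypothetical 2,500 MiB model file
parts = part_ranges(size)
print(len(parts), parts[-1])
```

Each range can be uploaded independently, and in parallel, which is why direct multipart uploads help with multi-gigabyte artifacts.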

Hugging Face serves as a central hub for AI development, offering millions of models, datasets, and demo applications (Spaces). With over 50,000 organizations onboard - including giants like Google, Microsoft, Amazon, and Meta - the platform emphasizes a community-driven approach to advancing AI. As stated in their documentation:

"No single company, including the Tech Titans, will be able to 'solve AI' by themselves – the only way we'll achieve this is by sharing knowledge and resources in a community-centric approach".

Hugging Face’s repository covers a wide range of AI models and the tooling to use them. Key libraries like Transformers and Diffusers provide state-of-the-art pretrained models, primarily in PyTorch, while Transformers.js runs models directly in web browsers. With a single Hugging Face API token, users gain access to over 45,000 models across more than 10 inference partners, including AWS, Azure, and Google Cloud, at the providers' standard rates. The platform also integrates with specialized libraries like Asteroid and ESPnet and with widely used LLM frameworks such as LangChain, LlamaIndex, and CrewAI. Optimum optimizes models for hardware like AWS Trainium and Google TPUs. PEFT (Parameter-Efficient Fine-Tuning) and Accelerate simplify training on diverse hardware setups.

Hugging Face streamlines model fine-tuning through its AutoTrain feature, which automates the process via APIs and a user-friendly interface, eliminating the need for extensive manual coding. Repository-level webhooks allow users to trigger external actions when models, datasets, or Spaces are updated. For AI agent development, the smolagents Python library helps orchestrate tools and manage complex tasks. Fully managed Inference Endpoints make deploying models into production straightforward, while the Hub Jobs framework automates and schedules machine learning tasks through either APIs or a visual interface. Together, these automation tools support scalable, enterprise-ready workflows.
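A repository webhook delivers an HTTP POST with a JSON payload whenever a watched repo changes, and a receiver decides what to do with it. The stdlib sketch below checks a shared secret and triggers a retraining job only for content updates to a dataset repo. Several details are assumptions taken from our reading of Hugging Face's webhook documentation and should be verified there: the `X-Webhook-Secret` header name and the payload fields `event.action`, `event.scope`, and `repo.type`. `trigger_retraining` is a hypothetical placeholder.

```python
import hmac
import json

WEBHOOK_SECRET = "change-me"  # hypothetical secret configured on the webhook


def trigger_retraining(dataset: str) -> str:
    # Hypothetical placeholder for kicking off a real job (CI pipeline, Hub Job, ...).
    return f"retraining queued for {dataset}"


def handle_webhook(headers: dict[str, str], body: bytes) -> tuple[int, str]:
    # Constant-time comparison avoids leaking the secret through timing.
    received = headers.get("X-Webhook-Secret", "")
    if not hmac.compare_digest(received.encode(), WEBHOOK_SECRET.encode()):
        return 401, "invalid secret"
    payload = json.loads(body)
    event = payload.get("event", {})
    repo = payload.get("repo", {})
    # Assumed payload fields: react only to content updates on dataset repos.
    if (repo.get("type") == "dataset" and event.get("action") == "update"
            and event.get("scope", "").startswith("repo.content")):
        return 200, trigger_retraining(repo.get("name", "unknown"))
    return 200, "ignored"


body = json.dumps({"event": {"action": "update", "scope": "repo.content"},
                   "repo": {"type": "dataset", "name": "acme/support-tickets"}}).encode()
print(handle_webhook({"X-Webhook-Secret": "change-me"}, body))
```

In production, this function would sit behind an HTTP endpoint. Web frameworks normalize header case, which a plain dict lookup does not.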

Hugging Face offers enterprise-grade features like Single Sign-On (SSO), Audit Logs, and Resource Groups, making it easy for large teams to collaborate while maintaining compliance. The platform uses Xet technology for efficient storage and versioning of large files within Git-based repositories, streamlining the management of extensive models and datasets. Teams can group accounts, assign granular roles for access control, and centralize billing for datasets, models, and Spaces. The platform also hosts datasets in over 8,000 languages.

The Team Plan starts at $20 per user per month, including features like SSO, audit logs, and resource groups. GPU usage is priced at $0.60 per hour, and Inference Providers charge users directly at their standard rates, with no added markups from Hugging Face. For demo applications, ZeroGPU Spaces dynamically allocate NVIDIA H200 GPUs in real time, eliminating the need for permanent, high-cost hardware. Custom pricing is available for enterprises that require advanced security, dedicated support, and enhanced access controls.
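A quick way to budget with these figures is to add seat costs to metered GPU time. The sketch below assumes the flat $0.60/hour GPU rate and the $20/user/month Team Plan price quoted above. It uses `Decimal` to avoid floating-point rounding in currency. Actual GPU prices vary by hardware, and Inference Provider charges are billed separately at each provider's rates, so they are left out.

```python
from decimal import Decimal

TEAM_SEAT_PER_MONTH = Decimal("20.00")  # Team Plan price quoted above
GPU_PER_HOUR = Decimal("0.60")          # GPU rate quoted above; varies by hardware


def monthly_estimate(users: int, gpu_hours_per_day: Decimal, days: int) -> Decimal:
    seats = TEAM_SEAT_PER_MONTH * users
    gpu = GPU_PER_HOUR * gpu_hours_per_day * days
    return seats + gpu


# Hypothetical team: 5 seats, one GPU running 8 hours a day for 22 working days.
print(monthly_estimate(5, Decimal(8), 22))  # 205.60
```

For comparison, the same GPU running around the clock for 30 days would cost 0.60 × 720 = $432. Paying only for the hours actually used, as ZeroGPU does for demos, is what avoids that fixed cost.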

DataRobot is a comprehensive AI platform covering everything from experimentation to production deployment. It holds a 4.7/5 rating on Gartner Peer Insights with a 90% user recommendation rate. It has also been named a Leader in the Gartner Magic Quadrant for Data Science and Machine Learning Platforms. The platform focuses on integration, automation, and scalability to make complex AI workflows easier to manage. Tom Thomas, Vice President of Data Strategy, Analytics & Business Intelligence at FordDirect, shared:

"What we find really valuable with DataRobot is the time to value. DataRobot helps us deploy AI solutions to market in half the time we used to do it before."

DataRobot's Model Agnostic Registry provides centralized management for model packages from any source. It supports both open-source and proprietary Large Language Models (LLMs) and Small Language Models (SLMs), regardless of the provider. Native integrations with platforms like Snowflake, AWS, Azure, and Google Cloud connect it to existing tech stacks. Its NextGen UI supports both development and governance. Users can switch between a graphical interface and programmatic tools like the REST API or Python client packages.

DataRobot simplifies the journey from development to production with one-click deployment, creating API endpoints and configuring monitoring automatically. Its dynamic compute orchestration eliminates the hassle of manual server management - users specify their compute needs, and the system takes care of provisioning and workload distribution. Ben DuBois, Director of Data Analytics at Norfolk Iron & Metal, emphasized its benefits:

"The main thing that DataRobot brings for my team is the ability to iterate quickly. We can try new things, put them into production fast. That flexibility is key - especially when you're working with legacy systems."

The platform also generates compliance documentation automatically, addressing model governance and regulatory standards. "Use Case" containers help keep projects organized and audit-ready, ensuring workflows remain structured across enterprise environments.

DataRobot makes it easy to manage anywhere from dozens to hundreds of models through a centralized system. It supports deployment across managed SaaS, VPC, or on-premises infrastructure. For example, a global energy company achieved a $200 million ROI across 600+ AI use cases. A top 5 global bank saw a $70 million ROI through 40+ AI applications across the organization. Thibaut Joncquez, Director of Data Science at Turo, highlighted the platform’s standardization capabilities:

"Nothing else out there is as integrated, easy-to-use, standardized, and all-in-one as DataRobot. DataRobot provided us with a structured framework to ensure everybody has the same standard."

The platform brings together diverse teams (data scientists, developers, IT, and InfoSec) by offering both visual tools and programmatic interfaces. Its pre-built "AI Accelerators" speed up the transition from experimentation to production. By unifying workflows, automating complex processes, and scaling across the organization, DataRobot helps enterprises build production-grade AI capabilities.

AI workflow platforms are reshaping how organizations move from isolated experiments to fully operational systems. By adopting the right platform, businesses can significantly speed up development cycles - some report cutting the time needed to create agentic workflows by 75% and reducing iteration cycles by 70% with dedicated AI platforms. These efficiencies lead to faster launches and improved returns on investment.

These advancements rest on three main advantages: interoperability, automation, and scalability. Platforms that integrate with many models and with existing tech stacks reduce the risk of vendor lock-in and unpredictable costs. Orchestration layers improve reliability by handling scheduling, retries, and recovery from failed steps, so teams can focus on their core objectives. For cross-departmental teams, shared workspaces and visual builders help bridge the gap between technical and non-technical users. Governance features such as audit trails and role-based access controls keep workflows secure and compliant.
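One concrete piece of the recovery behaviour orchestration layers provide is retrying a failed step with exponential backoff before surfacing the error. The sketch below is a generic illustration, not any particular platform's implementation. `flaky_enrichment_step` is a hypothetical task that fails twice before succeeding.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(step: Callable[[], T], max_attempts: int = 4,
                     base_delay: float = 0.1, max_delay: float = 2.0) -> T:
    """Run `step`, retrying failures with capped exponential backoff plus jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return step()
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: surface the error so the step is marked failed
            # Delay doubles each attempt (0.1s, 0.2s, 0.4s, ...) up to max_delay;
            # random jitter keeps many clients from retrying in lockstep.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            time.sleep(delay * random.uniform(0.5, 1.0))
    raise RuntimeError("unreachable")  # the loop always returns or raises


calls = {"n": 0}


def flaky_enrichment_step() -> str:
    # Hypothetical task: fails twice, then succeeds.
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("upstream API timed out")
    return "enriched"


print(run_with_retries(flaky_enrichment_step), "after", calls["n"], "attempts")
```

In practice, retry only errors known to be transient, such as timeouts. Validation errors will fail the same way on every attempt.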

Choosing the right platform is critical to unlocking these benefits. Opt for solutions that match your team's expertise: no-code interfaces for non-technical users and API-driven options for developers. Look for robust observability, such as node-level traces, cost metrics, and searchable logs, to quickly identify and resolve production issues. Organizations using external partnerships or specialized low-code AI tools have reportedly been about twice as successful at moving projects from pilot to production as those relying solely on internal resources.
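Node-level traces and cost metrics can be as simple as wrapping each workflow step so that its duration, status, and estimated cost are recorded in a structured, searchable form. This decorator is a minimal sketch. The per-call cost figure is a hypothetical placeholder. Production systems would export such records to a tracing backend, for example one compatible with OpenTelemetry, rather than keep them in a list.

```python
import functools
import time
from typing import Any, Callable

TRACE: list[dict[str, Any]] = []  # in-memory stand-in for a trace/log store


def traced(node: str, cost_per_call: float = 0.0) -> Callable:
    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            status = "ok"
            try:
                return fn(*args, **kwargs)
            except Exception:
                status = "error"
                raise
            finally:
                # Recorded on success and failure alike.
                TRACE.append({
                    "node": node,
                    "status": status,
                    "duration_ms": round((time.perf_counter() - start) * 1000, 2),
                    "cost_usd": cost_per_call,
                })
        return wrapper
    return decorator


@traced("classify", cost_per_call=0.002)  # hypothetical per-call model cost
def classify(text: str) -> str:
    return "billing" if "invoice" in text else "other"


@traced("route")
def route(label: str) -> str:
    if label == "other":
        raise ValueError("no queue for label")
    return f"queue:{label}"


route(classify("Where is my invoice?"))
try:
    route(classify("hello"))
except ValueError:
    pass

failures = [t for t in TRACE if t["status"] == "error"]
print(failures)
print("total cost:", sum(t["cost_usd"] for t in TRACE))
```

Because every record carries the node name and status, finding the failing step is a simple filter rather than a log search.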

The numbers speak for themselves: companies using AI automation report up to 35% higher productivity and 25–50% cost savings. As Andres Garcia, Chief Technology Officer, explains:

"To me as CTO, investing in proven automation frees teams to innovate. I don't want my team building connections, monitors, or logging when there's infrastructure already in place."

Start with high-volume, repetitive tasks like data enrichment to achieve quick wins. Ensure the platform integrates seamlessly with your existing SaaS and legacy systems, as 46% of product teams cite poor integration as the top obstacle to AI adoption. A platform that simplifies complexity rather than adding to it ensures your team can focus on driving innovation and delivering meaningful business results.

AI workflow platforms cut costs by bringing tools, models, and data pipelines together in one pay-as-you-go system. Instead of juggling separate licenses for different AI models, users of a platform such as Prompts.ai reach over 35 models through one account. They pay only for the compute they actually use, which reduces spending on idle capacity.

Real-time cost tracking and governance tools give users full visibility into their spending. Built-in automation reduces manual work and helps avoid unnecessary cloud costs. Prompts.ai claims these efficiencies can cut costs by up to 98% compared with managing fragmented, multi-vendor setups.

Prompts.ai is built to simplify how teams handle and coordinate multiple large language models (LLMs) within a single, secure environment. With access to more than 35 top-tier models, including GPT-5, Claude, and Grok-4, users can seamlessly switch between models or use them simultaneously - all without the hassle of managing separate accounts or APIs.

The platform includes real-time cost tracking and a flexible pay-as-you-go credit system, making it easier for teams to keep expenses under control while cutting down on AI-related costs. Enterprise-level security ensures that data remains protected, while integrated automation tools take the complexity out of designing, testing, and deploying LLM workflows. Prompts.ai offers a streamlined, efficient way for organizations to boost productivity and foster collaboration in their AI initiatives.

AI workflow platforms place a strong emphasis on security and compliance, incorporating features like role-based access control (RBAC), detailed audit logs, and data privacy safeguards. These capabilities allow organizations to track who interacts with models, when they do so, and what data is involved, ensuring accountability at every step.
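Role-based access control reduces to mapping roles to permitted actions, checking every request against that map, and recording each decision for the audit trail. The roles, actions, and log fields below are hypothetical; each platform defines its own role set.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "viewer": {"read"},
    "editor": {"read", "run_workflow"},
    "admin": {"read", "run_workflow", "deploy_model", "manage_users"},
}

AUDIT_LOG: list[dict[str, str]] = []


def authorize(user: str, role: str, action: str, resource: str) -> bool:
    allowed = action in ROLE_PERMISSIONS.get(role, set())  # unknown roles get nothing
    # Log every decision, including denials: who, what, on which resource, and when.
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user, "role": role, "action": action,
        "resource": resource, "decision": "allow" if allowed else "deny",
    })
    return allowed


print(authorize("dana", "editor", "run_workflow", "churn-model"))  # True
print(authorize("dana", "editor", "deploy_model", "churn-model"))  # False
```

Denying by default, so that unknown roles and actions are rejected, is the safer design choice.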

To safeguard sensitive information, these platforms often employ encryption - both for data at rest and during transit - along with sandboxed environments and automated data-cleansing measures. They also adhere to strict organizational policies to regulate connections with third-party providers, minimizing the risk of unauthorized data sharing. Policy-driven guardrails and tamper-evident logs further enhance regulatory compliance while promoting operational transparency.
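Tamper-evident logs are commonly built as a hash chain. Each entry stores the hash of the previous entry, so editing or deleting any earlier entry breaks every hash after it. The sketch below shows the technique using SHA-256 from Python's standard library. It illustrates the idea and is not a description of how any specific platform stores its logs.

```python
import hashlib
import json
from typing import Any

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, record: dict[str, Any]) -> str:
    data = prev_hash + json.dumps(record, sort_keys=True)
    return hashlib.sha256(data.encode()).hexdigest()


def append(log: list[dict[str, Any]], record: dict[str, Any]) -> None:
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev, "hash": entry_hash(prev, record)})


def verify(log: list[dict[str, Any]]) -> bool:
    prev = GENESIS
    for entry in log:
        # Each entry must point at its predecessor and hash to its stored value.
        if entry["prev"] != prev or entry["hash"] != entry_hash(prev, entry["record"]):
            return False
        prev = entry["hash"]
    return True


log: list[dict[str, Any]] = []
append(log, {"user": "dana", "action": "run_workflow", "model": "churn-model"})
append(log, {"user": "sam", "action": "deploy_model", "model": "churn-model"})
print(verify(log))  # True

log[0]["record"]["user"] = "mallory"  # simulate tampering with history
print(verify(log))  # False
```

A chain alone does not reveal that the newest entries were truncated. Systems typically also store the latest hash somewhere separate so the tail can be checked.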

Together, these measures create a secure and reliable framework, enabling organizations to scale their AI workflows confidently while upholding privacy and compliance standards.